Controlling for heterogeneity in the Illinois bonus experiment∗

By Marcel Voia†

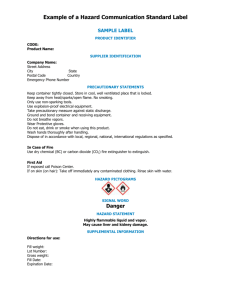

advertisement

Controlling for heterogeneity in the Illinois bonus

experiment∗

By Marcel Voia†

May 2003

Abstract

In this paper I consider a re-employment bonus experiment that was conducted in Illinois. I answer questions such as “How much money would be saved if this program were introduced generally?” or “How much would the average unemployment duration decrease if this program were introduced generally?”. To answer these questions, I estimate a structural hazard model that allows for heterogeneity and partial compliance, and then I simulate the distribution of unemployment durations if the program were introduced generally.

1 Introduction

In this paper we re-analyze a segment of the data from the Illinois bonus experiment in a way that has not been done before, even though these data have been analyzed with increasing sophistication by Woodbury and Spiegelman (1987) and Meyer (1996). Because the experiment provided exogenous differences in individual incentives, Meyer used them to test labor supply and search theories of unemployment. He also examined the effect of the fixed-amount bonus on different wage-level groups, and he examined predictions about the timing of exits from unemployment. His analysis did not allow for unobserved heterogeneity or selective compliance, and he concluded that the experimental evidence did not support the desirability of a permanent program. Fortunately, Meyer gives a clear description of the potential problems of his estimates if there were unobserved heterogeneity.

“While the Claimant Experiment group and control group are drawn from identical populations, over time the individuals who have not ended their UI receipt in the control group and Claimant Experiment will become less comparable. This change occurs because the effects of the Claimant Experiment may interact with unobservable differences between individuals.”

∗ I am grateful to Tiemen Woutersen, my supervisor, and I gratefully acknowledge stimulating suggestions from Geert Ridder and Todd Stinebrickner. All errors are mine.

† Correspondence address: University of Western Ontario, Department of Economics, Social Science Centre, London, Ontario, N6A 5C2, Canada. Email: mvoia@uwo.ca.

Meyer proves this statement in a theorem (Meyer, 1996, Appendix A). He also notes:

“Thus, estimates of the effect of the Claimant Experiment without heterogeneity controls should be biased in the negative direction (..). Unobserved heterogeneity would also tend to diminish an effect of the experiment on the hazard just before the offer expires.”

The Illinois bonus experiment is an interesting experiment on unemployment duration. A randomly selected group of newly unemployed people was offered the chance to participate in a program that paid a US $500 bonus to those who found a job within 11 weeks and kept it for at least four months. Some people declined to participate even though they received an offer; we call this group the non-compliance group, and we analyze its characteristics. Surprisingly, nobody has answered simple policy questions such as “How much money would be saved if this program were introduced generally?” or “How much would the average unemployment duration decrease if this program were introduced generally?”. These questions have not been asked (or answered) because one has to deal with partial compliance, which complicates the analysis of data from a randomized experiment. That is, some people were randomly offered participation but declined. Those in the treatment group who declined did not do so at random: they have characteristics that matter for the re-employment hazard. In this paper we estimate a structural hazard model that allows for heterogeneity, and then we simulate the distribution of unemployment durations if the program were introduced generally. Our findings confirm the presence of unobserved heterogeneity and agree fully with the passages from Meyer quoted above. The results also support more optimistic conclusions about monetary incentives than those of Meyer (1996).

Following two decades of econometric analysis of duration models (see Lancaster (1990) and Van den Berg (2000) for overviews), we argue that one needs to control for unobserved heterogeneity to assess causality in a duration model. Randomly assigned unemployed people are given the choice to participate. Using this intention-to-treat framework, we show that the integrated hazard estimator consistently estimates the treatment effect as a function of time. Because the experiment pays a $500 bonus to people who find a job within the first 11 weeks after becoming unemployed, it follows from Mortensen (1987) that one might observe a discrete jump in the hazard after 11 weeks. The integrated hazard estimator does not require smoothness and can therefore accommodate such a jump. In the biostatistics literature, Tsiatis (1990) and Robins and Tsiatis (1991) censor all their observations to deal with partial compliance. They thereby discard a large part of the data and cannot make statements about the hazard over the intervals that were censored away. The integrated hazard estimator avoids such censoring and bases inference on the whole dataset. Our estimator can thus be viewed as an application of the integrated hazard principle developed by Woutersen (2000). We take the duration of unemployment as the response variable because it has a direct implication for the hazard rate, and we analyze it with a semiparametric model, the mixed proportional hazard (MPH) model. In our analysis of causation we use the Potential Outcome Model, which

was introduced by Neyman (1923) and Fisher (1935), with major contributions by Cox (1958), Cochran (1965) and Rubin (1974, 1977). Heckman (2000) argues that the statistical Potential Outcome Model is a version of the econometric causal model and that counterfactuals are the basis of causal inference in statistics. He also argues that the joint probability distribution of counterfactuals helps to better explain the distributional impacts of public policies: counterfactuals make it possible to move beyond comparisons of aggregate overall distributions and to consider how people in different portions of an initial distribution are affected by a specific public policy. The Potential Outcome Model was initially linked to the counterfactual notion of causation by Glymour (1986). Galles and Pearl (1998) give an axiomatic characterization of causal counterfactuals by comparing the logical properties of counterfactuals in Structural Equation Models, and Kluve (2001) concentrates on the counterfactual-based nature of the Potential Outcome Model and points out which counterfactual causal questions can be asked and answered within a model.

Because in our case the potential outcome model is applied to the non-compliers, we construct the counterfactual duration of unemployment for the non-compliance group (the duration of unemployment of the non-treated had they been treated) using preliminary estimates of the baseline hazard obtained from the compliance group. We also extend the analysis to see how the duration of unemployment changes if the experiment is applied to the overall population.

This paper is organized as follows. Section 2 describes the data. Section 3 applies the integrated hazard principle to simple examples. Section 4 discusses counterfactuals in duration analysis and shows how to approximate counterfactual distributions. Section ?? gives estimation results. Section ?? concludes.

2 Description of the Data

In our analysis we use the same data from the Illinois experiment as Meyer (1996); the experiment was conducted by the Illinois Department of Employment Security. It randomly assigned members of the eligible population to one of three groups, which Meyer (1996) identifies as the control group, the Claimant Experiment, and the Employer Experiment. The goal of the experiment was to explore whether unemployment duration is reduced when a bonus is paid to Unemployment Insurance (UI) beneficiaries (treatment 1) or to their employers (treatment 2), relative to a randomly selected control group. We concentrate on the Claimant Experiment (treatment 1), which consists of a random sample of new UI claimants who would receive a $500 bonus if they found a job (of 30 hours or more per week) within 11 weeks of filing for UI and held that job for at least 4 months. The size of the bonus reflected a balance between the experiment's budget constraint (a maximum of $750,000 in bonus payments) and an arbitrary judgement about how small a bonus could be and still generate a response (5% of annual wage, or 4 weeks of UI payments). The 11-week period was chosen to be approximately 40% of the potential duration of benefits in Illinois, which is 26 weeks. The minimum of 4 months of employment was required to prevent fraudulent hires and to avoid paying bonuses to seasonal workers and employers. Eligibility criteria excluded younger and older claimants to reduce the number of complicating factors, such as special programs for young people and incentives for older workers to retire early, that could influence the job-finding behavior of those enrolled in the experiment. Three variables can be used to control the size of the sample: the number of sites (22), the length of the enrollment period, and the proportion of claimants selected at any given site. Using more sites permitted a shorter enrollment period, originally designed to be 13 weeks and ultimately 16. In the treatment 1 group, 4186 individuals were selected: 3527 (84%) agreed to participate in the experiment (the compliance group), while 659 (16%) did not (the non-compliance group). Individuals in the control group were excluded from participating in the experiment; they did not know that the experiment took place.

3 Estimation using the integrated hazard: examples

In this section, we derive an estimator using the identification results of Elbers and Ridder (1982) and the ‘integrated hazard principle’ of Woutersen (2000). The integrated hazard principle suggests using as estimates those parameter values (or functions) for which the integrated hazard is a unit exponential variable. In this section we illustrate with simple examples how this principle can be applied; in the next section we prove that the integrated hazard principle yields a consistent estimator for the re-employment bonus experiment. Let Z denote the integrated hazard, which has a unit exponential distribution:

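$$ Z = \int_0^T \theta(s, x)\,ds \sim \mathcal{E}(1). \qquad (1) $$

Proof: Since $\theta(s; x) = f(s; x)/(1 - F(s; x))$, we have $\int_0^T \theta(s; x)\,ds = -\ln(1 - F(T; x))$, where $F(T; x)$ is uniformly distributed between 0 and 1. Hence $1 - F(T; x)$ is also uniform on (0, 1), and minus the logarithm of a uniform variable has a unit exponential distribution.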
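Equation (1) is easy to check by simulation. The following minimal sketch assumes Weibull durations with hypothetical shape and scale values (they are not taken from the Illinois data): it evaluates each simulated duration's integrated hazard and compares the resulting draws with the moments of a unit exponential.

```python
import numpy as np

def weibull_integrated_hazard(t, shape, scale):
    """Integrated hazard of a Weibull(shape, scale) duration: (t / scale) ** shape."""
    return (t / scale) ** shape

rng = np.random.default_rng(0)
shape, scale = 1.5, 10.0           # hypothetical parameters, for illustration only
# Generator.weibull draws with unit scale, so multiply by the scale parameter.
T = scale * rng.weibull(shape, size=200_000)
Z = weibull_integrated_hazard(T, shape, scale)

# A unit exponential has mean 1, variance 1 and P(Z > 1) = exp(-1).
print(Z.mean(), Z.var(), (Z > 1).mean(), np.exp(-1))
```

Any continuous duration distribution with a known integrated hazard could be substituted; the Weibull is used only because its integrated hazard has a simple closed form.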

We can use equation (1) to estimate parameters of the hazard function. To illustrate how this is done, consider nearly the simplest possible problem.

Example 1. Let $T_1, \ldots, T_N$ be independent durations with hazard $\theta(t) = \lambda$, so $Z = \lambda T$. The integrated hazards are independent unit exponentials: $Z = \lambda T \sim \mathcal{E}(1)$. Equating the sample analogue of the integrated hazard to one gives

$$ \frac{1}{N}\sum_{i=1}^{N} \lambda t_i = 1. $$

This suggests an estimate for λ,

$$ \hat\lambda = \frac{N}{\sum_{i=1}^{N} t_i}, $$

which is the maximum likelihood estimator.
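As a sketch of Example 1, assuming simulated exponential durations with a hypothetical exit rate, the code below computes $\hat\lambda = N/\sum_i t_i$ and checks it against a brute-force maximization of the exponential log-likelihood on a grid. The function names are illustrative.

```python
import numpy as np

def lambda_hat(t):
    """Example 1: solve (1/N) * sum(lambda * t_i) = 1 for lambda."""
    t = np.asarray(t, dtype=float)
    return len(t) / t.sum()

def exponential_loglik(lam, t):
    """Log-likelihood of i.i.d. durations with constant hazard lam."""
    return len(t) * np.log(lam) - lam * np.sum(t)

rng = np.random.default_rng(1)
true_lambda = 0.2                  # hypothetical weekly exit rate
t = rng.exponential(scale=1 / true_lambda, size=5_000)

est = lambda_hat(t)
grid = np.linspace(0.05, 0.5, 4_501)            # grid spacing 1e-4
mle_on_grid = grid[np.argmax([exponential_loglik(g, t) for g in grid])]
print(est, mle_on_grid)            # equal up to the grid spacing
```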

Equation (1) can also be used for censored duration data. Suppose a duration is right censored if it lasts longer than c, where c is exogenous. Let $Z'$ denote the integrated hazard of this potentially censored observation, and let the indicator d be zero if the observation is censored and one otherwise. Then the expectation of $Z'$ equals the expectation of d, i.e.

$$ E Z' = E d, \qquad d \in \{0, 1\}. \qquad (2) $$

Proof: See Appendix 1.

Equation (2) suggests censoring the durations at c and using $g(\lambda) = \frac{1}{N}\sum_i d_i - \frac{1}{N}\sum_i z'_i$ as one of the moment equations.
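A simulation sketch of equation (2) and the moment equation $g(\lambda)$, assuming exponential durations with a hypothetical hazard and censoring point $c = 11$: with a constant hazard the censored integrated hazard is $\lambda \min(t, c)$, and setting $g(\lambda) = 0$ gives $\hat\lambda = \sum_i d_i / \sum_i \min(t_i, c)$.

```python
import numpy as np

rng = np.random.default_rng(2)
lam_true, c, n = 0.1, 11.0, 100_000   # hypothetical hazard, censoring point, sample size
t = rng.exponential(scale=1 / lam_true, size=n)

d = (t <= c).astype(float)            # d = 1 if the spell ends by c, 0 if censored
z_cens = lam_true * np.minimum(t, c)  # integrated hazard of the censored duration, Z'
print(z_cens.mean(), d.mean())        # equation (2): the two means agree

# Solving g(lam) = mean(d) - mean(lam * min(t, c)) = 0 for lam gives a closed form.
lam_hat = d.sum() / np.minimum(t, c).sum()
print(lam_hat)
```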

Example 2. Let $T_1, \ldots, T_N$ be independent durations with the following hazard

$$ \theta(t) = \begin{cases} \lambda_1 & \text{if } t \le c,\\ \lambda_2 & \text{if } t > c. \end{cases} $$

The indicator $d_i$ is one if $t_i \le c$ and zero otherwise. We can write the realization of the integrated hazard of individual i as

$$ z_i = d_i\lambda_1 t_i + (1 - d_i)\lambda_1 c + (1 - d_i)\lambda_2(t_i - c). $$

Using the moment equations $g_1(\lambda) = \frac{1}{N}\sum_i z_i - 1$ and $g_2(\lambda) = \frac{1}{N}\sum_i d_i - \frac{1}{N}\sum_i z'_i$ gives the following estimates

$$ \hat\lambda_1 = \frac{\sum_i d_i}{\sum_i \{d_i t_i + (1 - d_i)c\}}, \qquad \hat\lambda_2 = \frac{\sum_i (1 - d_i)}{\sum_i (1 - d_i)(t_i - c)}. \qquad (3) $$

These estimates are, again, the maximum likelihood estimates.
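Example 2 can be sketched the same way. The code below is a minimal illustration under hypothetical parameter values: it simulates durations by inverting the piecewise-linear integrated hazard at unit-exponential draws, as equation (1) permits, and then evaluates the closed-form estimates of equation (3). `simulate_piecewise` and `example2_estimates` are illustrative names.

```python
import numpy as np

def simulate_piecewise(lam1, lam2, c, n, rng):
    """Durations with hazard lam1 on [0, c] and lam2 after c, drawn by inverting
    the integrated hazard at unit-exponential draws (equation (1))."""
    z = rng.exponential(size=n)
    cut = lam1 * c                      # integrated hazard accumulated by time c
    return np.where(z <= cut, z / lam1, c + (z - cut) / lam2)

def example2_estimates(t, c):
    """Closed-form solution of g1 = g2 = 0, equation (3)."""
    d = (t <= c).astype(float)
    lam1_hat = d.sum() / np.sum(d * t + (1 - d) * c)
    lam2_hat = (1 - d).sum() / np.sum((1 - d) * (t - c))
    return lam1_hat, lam2_hat

rng = np.random.default_rng(3)
t = simulate_piecewise(lam1=0.08, lam2=0.15, c=11.0, n=50_000, rng=rng)  # hypothetical values
lam1_hat, lam2_hat = example2_estimates(t, c=11.0)
print(lam1_hat, lam2_hat)   # close to 0.08 and 0.15
```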

The maximum likelihood estimators of Examples 1 and 2 are consistent and efficient, and one can therefore argue that the integrated hazard principle ‘should’ yield the same estimating function. This equivalence result holds for any number of intervals over which the hazard is assumed to be constant.¹ Consider, however, the mixed proportional hazard model introduced by Lancaster (1979), with hazard $\theta = \eta\,\phi(x)\,\lambda(t)$, where η is the realization of a random variable (the unobserved heterogeneity), φ(x) is a function of a regressor x, and λ(t) denotes the baseline hazard. Joint estimation of φ(x), λ(t) and the distribution of η faces one of the following complications. If a parametric mixing distribution is chosen, then λ(t) can be estimated by a parametric or nonparametric technique; however, Heckman and Singer (1985) show that the estimates are very sensitive to the choice of the mixing distribution. The other option is to estimate the mixing distribution nonparametrically. As Horowitz (1999) shows, however, a deconvolution problem then cannot be avoided, and the rate of convergence is very slow for all parameters (and functions). Both choices are unattractive, and we therefore avoid this kind of joint estimation. We thus need moment functions whose expectation does not depend on the mixing distribution. As ‘building blocks’ for these moment functions we use the indicator d of equation (2) and what we call the semi-integrated hazard, i.e.

$$ s_i = \frac{1}{\eta_i}\int_0^{t_i} \theta_i\,du. $$

An extension of Example 1 shows how $s_i$ can be used for estimation.

¹ If the number of intervals increases with N, the integrated hazard estimator is equivalent to the nonparametric estimator of Kaplan and Meier (1958).

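Example 3. Suppose we observe N independent durations and that the treatment R is randomly assigned to half of the population. Let the hazard have the form

$$ \theta_i = \eta_i\,\gamma^{R_i}, \qquad R_i \in \{0, 1\}, $$

where $\eta_i$ is the realization of a mixing distribution. The random assignment ensures independence of η and R. Let the realizations be denoted by $t_i$, $i = 1, \ldots, N$. The semi-integrated hazard has the form

$$ s_i = \frac{1}{\eta_i}\int_0^{t_i} \theta_i\,du = \gamma^{R_i}\, t_i, \qquad R_i \in \{0, 1\}. $$

Let $N_1 = N_0 = N/2$ denote the numbers of treated and control individuals, and consider the moment function that compares the average semi-integrated hazards of the two groups:

$$ g(\gamma) = \frac{1}{N_1}\sum_{i:R_i=1} s_i - \frac{1}{N_0}\sum_{i:R_i=0} s_i = \frac{1}{N_1}\sum_{i:R_i=1} \gamma\, t_i - \frac{1}{N_0}\sum_{i:R_i=0} t_i. $$

This moment function is monotonic (indeed linear) in γ and has expectation zero at $\gamma = \gamma_0$, the true value of γ. Since $t_i = z_i/(\eta_i\gamma_0^{R_i})$, where the integrated hazard $z_i$ is unit exponential and independent of $\eta_i$,

$$ E g(\gamma) = \frac{\gamma}{\gamma_0}\,E\frac{1}{\eta} - E\frac{1}{\eta} = \Big(\frac{\gamma}{\gamma_0} - 1\Big)E\frac{1}{\eta}. $$

Therefore, provided $E(1/\eta)$ is finite, the parameter γ can be consistently estimated.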
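The robustness of Example 3 to the mixing distribution can be illustrated by simulation. The sketch below assumes two hypothetical heterogeneity distributions (a gamma law and a two-point law, both with finite $E(1/\eta)$) and an exactly balanced random assignment. Because $g(\gamma)$ is linear in γ, its root is the ratio of the control and treated mean durations; `gamma_hat` is an illustrative name.

```python
import numpy as np

def gamma_hat(t, R):
    """Root of g(gamma) = mean of gamma * t_i over treated - mean of t_i over controls.
    g is linear in gamma, so the root is mean(t | R=0) / mean(t | R=1)."""
    t, R = np.asarray(t, dtype=float), np.asarray(R, dtype=bool)
    return t[~R].mean() / t[R].mean()

rng = np.random.default_rng(4)
n, gamma0 = 200_000, 1.5                      # hypothetical sample size and true effect
R = rng.permutation(np.repeat([False, True], n // 2))   # exactly half treated, at random

mixing = {                                    # two hypothetical heterogeneity laws
    "gamma": rng.gamma(shape=3.0, scale=1 / 3.0, size=n),
    "two-point": rng.choice([0.5, 2.0], size=n),
}
estimates = {}
for name, eta in mixing.items():
    z = rng.exponential(size=n)               # unit-exponential integrated hazards
    t = z / (eta * np.where(R, gamma0, 1.0))  # constant hazard eta_i * gamma0**R_i
    estimates[name] = gamma_hat(t, R)
print(estimates)                              # both values close to 1.5
```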

Our next example writes the treatment effect γ as a function of the regressor x, φ(x), and assumes that the baseline hazard is piecewise constant. In Example 2 we censored the data at c to derive an estimator that was equivalent to the maximum likelihood estimator. In general, we can artificially censor durations to create more moments. Suppose that we censor the durations of the treatment group at c, and denote the semi-integrated hazard of the censored duration by $s'$. Then

$$ E s' = E\frac{z'}{\eta} = E\Big[\frac{1}{\eta}\big(1 - e^{-\eta s_{\max}}\big)\Big], \qquad \text{where } s_{\max} = \frac{1}{\eta}\int_0^c \theta_i\,du. $$

Similarly, the indicator d has expectation

$$ E d = E\big(1 - e^{-\eta s_{\max}}\big). $$

Suppose the regressor $x_i$ takes two different values, $x_0$ and $x_1$, and let $N_0$ and $N_1$ denote the number of individuals with $x = x_0$ and $x = x_1$, respectively. We can censor all the observations with $x = x_0$ at $c_0$ and those with $x = x_1$ at $c_1$. Consider choosing the censoring point $c_1$ such that

$$ \frac{1}{N_0}\sum_{x=x_0} d_i - \frac{1}{N_1}\sum_{x=x_1} d_i = 0. \qquad (4) $$

Since $E d = E(1 - e^{-\eta s_{\max}})$ and the distribution of η does not depend on x, the expectation of the left-hand side of this equation is zero if $s_{\max}$ is the same for $x = x_0$ and $x = x_1$, i.e.

$$ s_{\max}(c_0, x_0) = s_{\max}(c_1, x_1). \qquad (5) $$

Suppose we have an MPH model and that the assumptions of Elbers and Ridder (1982) (ER) hold, so that $\phi(x_0) \neq \phi(x_1)$ for time-invariant x. Then $c_0 \neq c_1$, and equation (5) yields restrictions on the parameters. In fact, artificially censoring the data many times gives as many restrictions. Now suppose that the regressor x is not relevant for the hazard rate, so that $\phi(x_0) = \phi(x_1)$ and the identification condition of ER fails. This touches upon what ER consider the ‘main contribution’ of their paper:

The main contribution of our paper is, that we prove that time dependence and unobserved heterogeneity can be distinguished (..). Surprisingly, this is due to the variation of individual probabilities with the explanatory variable x.

Equation (5) sheds some light on the need for “variation of individual probabilities with the explanatory variable x”. If $\phi(x_0) = \phi(x_1)$, then equation (5) holds for $c_0 = c_1$, so the function φ(x) is not identified through this restriction. This is in accordance with ER, since the MPH model is not identified in that case. When $\phi(x_0) \neq \phi(x_1)$, we can use equation (4) to derive a moment function. We write our first moment function as a function of $c_1$:²

$$ g_1(c_1(\theta)) = \frac{1}{N_0}\sum_{x=x_0} d_i - \frac{1}{N_1}\sum_{x=x_1} d_i. \qquad (6) $$

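To improve the efficiency of the estimator, we also use a second moment function that depends on the parameters and on $c_1$:

$$ g_2(\theta, c_1(\theta)) = \Big(\frac{1}{N_0}\sum_{x=x_0} d_i\Big)\Big(\frac{1}{N_1}\sum_{x=x_1} s_i\Big) - \Big(\frac{1}{N_1}\sum_{x=x_1} d_i\Big)\Big(\frac{1}{N_0}\sum_{x=x_0} s_i\Big). \qquad (7) $$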

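Note that $\frac{1}{N_0}\sum_{x=x_0} d_i$ is just the average of the indicators and not a function of the parameters. At the true value of the parameter of interest, $\theta_0$,

$$ E\Big[\frac{1}{N_0}\sum_{x=x_0} s_i\Big] = E\Big[\frac{1}{N_0}\sum_{x=x_0} \frac{1}{\eta_i}\big(1 - e^{-\eta_i s_{\max}}\big)\Big]. $$

The distribution of η does not depend on x, and therefore $E\big[\frac{1}{N_0}\sum_{x=x_0} s_i\big] = E\big[\frac{1}{N_1}\sum_{x=x_1} s_i\big]$. Since the two groups are independent, the expectation of each product in $g_2$ factorizes, which implies that the expectation of $g_2(\theta_0, c_1(\theta_0))$ is zero:

$$ E g_2(\theta_0, c_1(\theta_0)) = E\Big[\frac{1}{N_0}\sum_{x=x_0}\big(1 - e^{-\eta_i s_{\max}}\big)\Big]\, E\Big[\frac{1}{N_1}\sum_{x=x_1}\frac{1}{\eta_i}\big(1 - e^{-\eta_i s_{\max}}\big)\Big] - E\Big[\frac{1}{N_1}\sum_{x=x_1}\big(1 - e^{-\eta_i s_{\max}}\big)\Big]\, E\Big[\frac{1}{N_0}\sum_{x=x_0}\frac{1}{\eta_i}\big(1 - e^{-\eta_i s_{\max}}\big)\Big] = 0. $$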

Example 4 illustrates the use of the moment functions of equations (6) and (7).

Example 4. Assume the following hazard model

$$ \theta = \eta\,\phi(x)\,\lambda(t), $$

where x is a time-invariant regressor with $\phi(x_0) \neq \phi(x_1)$, and η is a realization of a mixing distribution. Let λ(t) be a piecewise constant function that allows for a different baseline hazard before and after c, normalized to one before c. For convenience, we refer to the individuals with $x = x_0$ as the treatment group and those with $x = x_1$ as the control group. We first censor the treatment and control groups at c and calculate

$$ \bar d_0(c) = \frac{1}{N_0}\sum_{x=x_0} d_i \quad\text{and}\quad \bar d_1(c) = \frac{1}{N_1}\sum_{x=x_1} d_i. $$

Assume that $\bar d_0(c) < \bar d_1(c)$; otherwise relabel the groups. Setting the moment function

$$ g_1(c_1(\theta)) = \frac{1}{N_0}\sum_{x=x_0} d_i - \frac{1}{N_1}\sum_{x=x_1} d_i, $$

or, equivalently, $g_1(c_1(\theta)) = \bar d_0(c) - \bar d_1(c_1)$, to zero yields a censoring point $c_1$, and $\bar d_0(c) < \bar d_1(c)$ implies $c_1 < c_0$, where $c_0 = c$. Note that

$$ s_{0,\max} = \frac{1}{\eta}\int_0^{c_0}\theta_i\,du = \phi(x_0)\, c_0, \qquad s_{1,\max} = \frac{1}{\eta}\int_0^{c_1}\theta_i\,du = \phi(x_1)\, c_1. $$

In large samples, the moment function $g_1(c_1(\theta))$ yields $s_{0,\max} = s_{1,\max}$. This suggests the following estimator for $\phi(x_1)/\phi(x_0)$:

$$ \frac{\phi(x_1)}{\phi(x_0)} = \frac{c}{c_1}. \qquad (8) $$

In a finite sample, we use the moment function of equation (7) to improve efficiency:

$$ g_2(\theta, c_1(\theta)) = \bar d_0(c)\,\bar s_1(c_1) - \bar d_1(c_1)\,\bar s_0(c), $$

where $\bar s_1(c_1) = \frac{1}{N_1}\sum_{x=x_1} s'_i$ and $\bar s_0(c) = \frac{1}{N_0}\sum_{x=x_0} s'_i$. Note that both $g_1(c_1(\theta))$ and $g_2(\theta, c_1(\theta))$ are functions only of $\phi(x_0)$ and $\phi(x_1)$, since the durations were censored at (or before) c. Therefore, misspecification of the baseline hazard for t > c has no effect on consistency.

² Since the moment function is an implicit function of the parameters, one could call it a minimum distance procedure.
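A minimal simulation sketch of Example 4, under assumed values $\phi(x_0) = 1$, $\phi(x_1) = 2$, gamma-distributed η and a unit baseline hazard before $c = 11$: setting $g_1$ to zero amounts to choosing $c_1$ as the empirical quantile of the group-1 durations at level $\bar d_0(c)$, and equation (8) then gives the ratio $c/c_1$. All function names and numbers are hypothetical.

```python
import numpy as np

def simulate_mph(phi, lam_after, c, eta, rng):
    """Durations with hazard eta * phi * lambda(t), where lambda(t) = 1 up to c and
    lam_after beyond c; drawn by inverting the integrated hazard at unit exponentials."""
    s = rng.exponential(size=eta.size) / (eta * phi)   # required value of Lambda(t)
    return np.where(s <= c, s, c + (s - c) / lam_after)

def ratio_by_matched_censoring(t0, t1, c):
    """Set g1 = d0_bar(c) - d1_bar(c1) to zero using the empirical quantile of the
    group-1 durations, then return c / c1 as in equation (8)."""
    d0_bar = np.mean(t0 <= c)
    c1 = np.quantile(t1, d0_bar)
    return c / c1, c1

rng = np.random.default_rng(5)
n, c = 100_000, 11.0
# Hypothetical design: phi(x0) = 1, phi(x1) = 2, gamma-distributed heterogeneity.
t0 = simulate_mph(1.0, 0.3, c, rng.gamma(2.0, 0.05, size=n), rng)
t1 = simulate_mph(2.0, 0.3, c, rng.gamma(2.0, 0.05, size=n), rng)
ratio, c1 = ratio_by_matched_censoring(t0, t1, c)
print(ratio, c1)   # ratio near 2; c1 below c, as the text requires
```

Changing the post-c baseline level (the `lam_after` argument) leaves the estimate unchanged, since neither $\bar d_0(c)$ nor $\bar d_1(c_1)$ with $c_1 < c$ uses information beyond c.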

Suppose that the baseline hazard is normalized to equal one before c and λ after c. Possible moment functions for estimating λ are

$$ g_3(c_1(\theta)) = \bar d_0(c_0) - \bar d_1(c) \quad\text{or}\quad g_4(\theta, c_1(\theta)) = \bar d_0(c_0)\,\bar s_1(c) - \bar d_1(c)\,\bar s_0(c_0), $$

$$ g_5(\theta) = \bar s_1(c) - \bar s_0(\infty). $$

Note that the pair $\{g_3, g_4\}$ resembles $\{g_1, g_2\}$. However, this time the ‘treatment’ group is censored at c. One can test whether $\phi(x_1)/\phi(x_0)$ is constant over time by allowing for a separate $\phi(x_1)/\phi(x_0)$ for t > c. Alternatively, one could estimate $\phi(x_1)/\phi(x_0)$ from all moment functions jointly (joint estimation of all parameters of interest) to increase the efficiency of the estimate.
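The moment $g_3$ can be sketched in the same simulated setting. Assuming a baseline hazard equal to one before c and λ after c, equal $s_{\max}$ in the two groups gives $\phi(x_0)(c + \lambda(c_0 - c)) = \phi(x_1)c$, so $\lambda = c(\phi(x_1)/\phi(x_0) - 1)/(c_0 - c)$, where $c_0 > c$ is chosen so that $\bar d_0(c_0) = \bar d_1(c)$. This closed-form rearrangement is our own illustration rather than a formula stated in the paper, and the parameter values are hypothetical.

```python
import numpy as np

def simulate_mph(phi, lam_after, c, eta, rng):
    """Durations with hazard eta * phi * lambda(t), lambda(t) = 1 up to c and lam_after
    beyond c, drawn by inverting the integrated hazard at unit-exponential draws."""
    s = rng.exponential(size=eta.size) / (eta * phi)
    return np.where(s <= c, s, c + (s - c) / lam_after)

rng = np.random.default_rng(6)
n, c, lam_true = 200_000, 11.0, 0.5            # hypothetical design
t0 = simulate_mph(1.0, lam_true, c, rng.gamma(2.0, 0.05, size=n), rng)  # phi(x0) = 1
t1 = simulate_mph(2.0, lam_true, c, rng.gamma(2.0, 0.05, size=n), rng)  # phi(x1) = 2

# g1 = 0: group 0 censored at c, group 1 at c1 < c, giving phi(x1) / phi(x0).
ratio = c / np.quantile(t1, np.mean(t0 <= c))
# g3 = 0: group 1 censored at c, group 0 at c0 > c with the same share of exits.
c0 = np.quantile(t0, np.mean(t1 <= c))
# Equal s_max: phi(x0) * (c + lam * (c0 - c)) = phi(x1) * c, solved for lam.
lam_hat = c * (ratio - 1.0) / (c0 - c)
print(ratio, c0, lam_hat)   # lam_hat close to lam_true
```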

The mixing distribution is unrestricted in Example 4, while the baseline hazard is piecewise constant.

The next section uses the integrated hazard principle to show how we can consistently estimate the counterfactual durations, that is, the durations of the non-treated as if they were treated.

4 Counterfactuals in duration analysis

Counterfactuals were introduced in econometric analysis to make inferences about the causal effects of treatments, by analyzing those aspects of a counterfactual theory of causation in terms of possible worlds that are of great importance for empirical social scientists. A counterfactual characterizes a possible world with minimum deviation from the actual world.

Three approaches are used in the literature to model causation:

a) the Structural Equation Model, represented by equations of the type $Y = X\beta + \varepsilon$, where β captures all causal connections between Y and X;

b) the Potential Outcome Model of causation, which is used to explain the effect on the outcomes of a particular treatment (treatment group) relative to another particular treatment (control group);

c) Directed Acyclic Graphs, a recent development that uses graphical approaches to causation.

Counterfactuals become very important when we look at the differences between factual and counterfactual questions. Moreover, the counterfactual has to be chosen close enough to the data that statistical methods can provide empirical answers and the research questions are well defined.

One of the most important conditions for a counterfactual causal question is the relativity condition: the effect of one treatment is always relative to the effect of another treatment, i.e. causal inference about a treatment in the actual world is based on the counterfactual relation to what would have happened under exposure to a treatment in the alternative possible world. Rubin (1980, 1986) considered that the basic assumption necessary to assess potential outcomes and infer meaningful causal statements is the stable unit treatment value assumption (SUTVA), which states that the outcome of a treatment for a given unit is the same regardless of the mechanism used to assign the treatment to that unit. He also argues that one of the most important causal parameters to be analyzed is the average causal effect, or average treatment effect (of a treatment in the actual world versus a treatment in the possible world), defined as the difference between the expected values of the unit-level outcomes in the actual world and in the possible world:

$$ ATE = E(Y_a) - E(Y_p). $$

To make the model applicable, the independence assumption must be satisfied; this can be achieved by a randomized experiment in which units are randomly assigned to different treatments. The initial population and the subpopulations in the treatments then do not differ from each other on average. The Illinois bonus experiment satisfies all the above conditions, so a counterfactual analysis of the non-compliers in the treatment group can yield conclusions about the applicability of the program to the overall population.

4.1 Counterfactuals for uncensored spells

To motivate our choice of an MPH model, we estimate the unobserved heterogeneity using the estimator

$$ \hat\eta_i = \frac{1}{s_i}. $$

Regressing $\hat\eta_i$ on the observables (age − average(age), (age − average(age)) squared, log(base period earnings) − average(log(BPE)), a dummy for race, and a dummy for sex), we can make inference about our choice of hazard model. The results in Table 1 show that there is correlation between $\hat\eta_i$ and the observables. This result suggests that the MPH model is the appropriate hazard model for estimating the unemployment duration.

4.1.1

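I. Consider the following model of the hazard rate

$$ \theta(t) = \eta_i\,\phi(t)\,\lambda(t), $$

where $\eta_i$ is the heterogeneity, λ(t) is the baseline hazard, and $\phi(t) = e^{-\beta x_t}$, with

$$ \phi(t_1) = 1, \qquad \lambda(t_1) = \begin{cases} \lambda_1 & \text{if } t_1 \le 11,\\ \lambda_2 & \text{otherwise,} \end{cases} $$

where $t_1$ is the duration of the non-treated group, and

$$ \phi(t_2) = \begin{cases} \phi & \text{if } t_2 \le 11,\\ 1 & \text{otherwise,} \end{cases} \qquad \lambda(t_2) = \begin{cases} \lambda_1 & \text{if } t_2 \le 11,\\ \lambda_2 & \text{otherwise,} \end{cases} $$

where $t_2$ is the counterfactual duration (the duration of the non-treated as if they were treated). Define the indicators (written $d_1$ and $d_2$ below)

$$ d_{i1} = \begin{cases} 1 & \text{if } t_1 \le 11,\\ 0 & \text{otherwise,} \end{cases} \qquad d_{i2} = \begin{cases} 1 & \text{if } t_2 \le 11,\\ 0 & \text{otherwise.} \end{cases} $$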

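We have the semi-integrated hazard (the integrated hazard divided by $\eta_i$) for the first spell equal to

$$ s_1 = \lambda_1 t_1 d_1 + 11(1 - d_1)\lambda_1 + (1 - d_1)\lambda_2(t_1 - 11), $$

and for the second spell

$$ s_2 = \phi\lambda_1 t_2 d_2 + 11\phi(1 - d_2)\lambda_1 + (1 - d_2)\lambda_2(t_2 - 11). $$

Equating $s_1 = s_2$ gives the counterfactual duration $t_2$. We have

$$ t_2\big(\phi\lambda_1 d_2 + (1 - d_2)\lambda_2\big) + 11(1 - d_2)(\phi\lambda_1 - \lambda_2) = 11(1 - d_1)(\lambda_1 - \lambda_2) + t_1\big(\lambda_1 d_1 + (1 - d_1)\lambda_2\big), $$

and

$$ t_2 = \frac{11(1 - d_1)(\lambda_1 - \lambda_2) - 11(1 - d_2)(\phi\lambda_1 - \lambda_2) + t_1\big(\lambda_1 d_1 + (1 - d_1)\lambda_2\big)}{\phi\lambda_1 d_2 + (1 - d_2)\lambda_2} = \frac{11\big[\lambda_1\big((1 - d_1) - \phi(1 - d_2)\big) + \lambda_2(d_1 - d_2)\big] + t_1\big(\lambda_1 d_1 + (1 - d_1)\lambda_2\big)}{\phi\lambda_1 d_2 + (1 - d_2)\lambda_2}. $$

Since $s_2$ is increasing in $t_2$, $d_2 = 1$ exactly when $s_1 \le 11\phi\lambda_1$, so the indicator on the right-hand side can be determined from $t_1$ before solving.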

Given that φ, λ1 and λ2 are unknown, we can obtain an estimate of the counterfactual duration $t_2$ by replacing φ, λ1, λ2 with their estimates, which are obtained by solving the moment conditions of Ridder and Woutersen (2001). Thus,

$$ \hat t_2 = \frac{11\big[\hat\lambda_1\big((1 - d_1) - \hat\phi(1 - d_2)\big) + \hat\lambda_2(d_1 - d_2)\big] + t_1\big(\hat\lambda_1 d_1 + (1 - d_1)\hat\lambda_2\big)}{\hat\phi\hat\lambda_1 d_2 + (1 - d_2)\hat\lambda_2}. $$
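The counterfactual duration can be computed either by inverting the treated spell's semi-integrated hazard directly or from the closed form. The sketch below does both, with hypothetical values of φ, λ1 and λ2 standing in for the Ridder and Woutersen (2001) estimates. The closed form expands the first expression for $t_2$ above and determines $d_2$ from $s_1$, since $t_2 \le 11$ exactly when $s_1 \le 11\phi\lambda_1$. The function names are illustrative.

```python
import numpy as np

def semi_integrated(t, level, lam2, cut=11.0):
    """Semi-integrated hazard with rate `level` up to week `cut` and lam2 afterwards."""
    return np.where(t <= cut, level * t, level * cut + lam2 * (t - cut))

def counterfactual_t2(t1, phi, lam1, lam2, cut=11.0):
    """Solve s2(t2) = s1(t1) by inverting the treated spell's semi-integrated hazard."""
    s1 = semi_integrated(t1, lam1, lam2, cut)
    first = phi * lam1 * cut          # s2 accumulated by week 11 under treatment
    return np.where(s1 <= first, s1 / (phi * lam1), cut + (s1 - first) / lam2)

def counterfactual_t2_closed_form(t1, phi, lam1, lam2, cut=11.0):
    """Closed form of Section 4.1.1; d2 = 1 exactly when s1 <= 11 * phi * lam1."""
    d1 = (t1 <= cut).astype(float)
    d2 = (semi_integrated(t1, lam1, lam2, cut) <= phi * lam1 * cut).astype(float)
    num = (cut * (lam1 * ((1 - d1) - phi * (1 - d2)) + lam2 * (d1 - d2))
           + t1 * (lam1 * d1 + (1 - d1) * lam2))
    return num / (phi * lam1 * d2 + (1 - d2) * lam2)

t1 = np.array([3.0, 9.0, 12.0, 15.0, 30.0])    # hypothetical observed durations (weeks)
params = dict(phi=1.3, lam1=0.05, lam2=0.08)   # hypothetical estimates
t2 = counterfactual_t2(t1, **params)
t2_closed = counterfactual_t2_closed_form(t1, **params)
print(t2)
print(t2_closed)   # same values
```

The duration of 12 weeks ($d_1 = 0$, $d_2 = 1$) exercises the case in which the treated spell ends before the bonus deadline although the observed one did not.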

To obtain the distribution of the counterfactual duration $t_2$ we use the bootstrap.

The bootstrap. Bootstrapping uses simulations generated from an estimated model (an estimated probability distribution). Because both theory and practice have validated the bootstrap for a wide range of applications, we use it to estimate features of the distribution, such as the standard errors and confidence intervals of our counterfactual estimates. Although the methodology is nonparametric, the bootstrap can be used to solve both parametric and nonparametric problems. Consider the model $X_1, X_2, \ldots, X_n \sim \text{iid } D$, where we wish to estimate some parameter θ. If θ parametrizes a family of distributions, we have a parametric problem; otherwise (D unknown) the problem is nonparametric.

In our situation we use the nonparametric bootstrap. Suppose that we have constructed an estimator of θ, called $\hat\theta$; it is a function of the random variables $X_1, X_2, \ldots, X_n$. Our objective is to construct confidence intervals for θ by estimating the distribution of $\hat\theta - \theta$.

We can accomplish this objective by simulating bootstrap samples (pseudo-data). A single bootstrap sample is obtained by sampling n observations randomly, with replacement, from the original sample. To do this, we use the empirical distribution $\hat D$ as an estimate of the unknown distribution D, by forming the empirical distribution function (edf) of the data, the sample counterpart of the cumulative distribution function (cdf) F. Using the realizations $x_1, x_2, \ldots, x_n$ of the data, we construct the edf as

$$ \hat F(x) := \frac{1}{n}\sum_{i=1}^{n} 1\{x_i \le x\}, $$

where F(x) is the cdf of the probability model, that is,

$$ F(x) = \Pr[X_1 \le x]. $$

Once $\hat F$ is constructed, we use it to generate new random variables from this distribution by inverting the edf and plugging uniform random variables into the resulting function. This is slightly problematic because the edf is a step function: it is monotonic but not strictly increasing, and therefore not invertible in the usual sense. In practice, a “generalized inverse” $\hat F^{-1}(u) = \inf\{x : \hat F(x) \ge u\}$ is used. We use this method to generate at least B = 1000 new data sets and compute the statistic on each pseudo-data set:

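$$ \begin{aligned} \hat\theta^{(1)} &= \hat\theta\big(X_1^{(1)}, X_2^{(1)}, \ldots, X_n^{(1)}\big),\\ \hat\theta^{(2)} &= \hat\theta\big(X_1^{(2)}, X_2^{(2)}, \ldots, X_n^{(2)}\big),\\ &\;\;\vdots\\ \hat\theta^{(B)} &= \hat\theta\big(X_1^{(B)}, X_2^{(B)}, \ldots, X_n^{(B)}\big), \end{aligned} $$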

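which gives us B new estimates of θ that are closely related to the original $\hat\theta$. We can now estimate the distribution of $\hat\theta - \theta$. To estimate the bias and the variance of the estimator we use

$$ \widehat{\mathrm{bias}}\big(\hat\theta^{(*)}\big) = \frac{1}{B}\sum_{b=1}^{B}\big(\hat\theta^{(b)} - \hat\theta\big), \qquad \widehat{\mathrm{Var}}\big(\hat\theta^{(*)}\big) = \frac{1}{B-1}\sum_{b=1}^{B}\big(\hat\theta^{(b)} - \bar\theta^{(*)}\big)^2, $$

where the asterisk (∗) indicates bootstrap quantities under the probability mechanism $\hat F$, and $\bar\theta^{(*)} = \frac{1}{B}\sum_{b=1}^{B}\hat\theta^{(b)}$.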

To determine the confidence interval, we form the edf of $\hat\theta^{(1)} - \hat\theta, \hat\theta^{(2)} - \hat\theta, \ldots, \hat\theta^{(B)} - \hat\theta$ and compute its quantiles $\hat x_\alpha$, which are estimates of the quantiles $x_\alpha$ of $\hat\theta - \theta$. The estimated $1 - \alpha$ confidence interval is

$$ \big[\hat\theta - \hat x_{1-\alpha/2} \le \theta \le \hat\theta - \hat x_{\alpha/2}\big]. $$
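The bootstrap steps above can be sketched as follows. This is a minimal illustration rather than the paper's implementation: the statistic (the Example 1 exit rate) and the simulated durations are hypothetical stand-ins for the counterfactual estimates, and resampling is done through the generalized inverse of the edf, which is equivalent to drawing observations with replacement.

```python
import numpy as np

def edf_generalized_inverse(sample, u):
    """F_hat^{-1}(u) = inf{x : F_hat(x) >= u}; for sorted data x_(1) <= ... <= x_(n)
    this is x_(ceil(n * u)), with u = 0 mapped to x_(1)."""
    xs = np.sort(sample)
    idx = np.ceil(len(xs) * np.asarray(u)).astype(int) - 1
    return xs[np.clip(idx, 0, len(xs) - 1)]

def bootstrap(sample, statistic, B=1000, alpha=0.05, rng=None):
    """Nonparametric bootstrap: bias, variance (divisor B - 1) and the interval
    [theta_hat - x_{1-alpha/2}, theta_hat - x_{alpha/2}], x = quantiles of theta* - theta_hat."""
    rng = np.random.default_rng() if rng is None else rng
    theta_hat = statistic(sample)
    theta_star = np.array([
        statistic(edf_generalized_inverse(sample, rng.uniform(size=len(sample))))
        for _ in range(B)
    ])
    bias = theta_star.mean() - theta_hat
    var = theta_star.var(ddof=1)
    x_lo, x_hi = np.quantile(theta_star - theta_hat, [alpha / 2, 1 - alpha / 2])
    return theta_hat, bias, var, (theta_hat - x_hi, theta_hat - x_lo)

def exit_rate(t):
    """Statistic to bootstrap: the Example 1 estimator N / sum(t)."""
    return len(t) / np.sum(t)

rng = np.random.default_rng(7)
t = rng.exponential(scale=5.0, size=500)        # hypothetical durations
theta_hat, bias, var, ci = bootstrap(t, exit_rate, B=1000, alpha=0.05, rng=rng)
print(theta_hat, bias, var, ci)
```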

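Alternatively, to compute $\operatorname{Var}(\hat t_2)$, we linearize $\hat t_2$:

$$ \hat t_2 \approx t_2 + \frac{\partial \hat t_2}{\partial \hat\lambda_1}\Big|_{\lambda_1,\lambda_2,\phi}\big(\hat\lambda_1 - \lambda_1\big) + \frac{\partial \hat t_2}{\partial \hat\lambda_2}\Big|_{\lambda_1,\lambda_2,\phi}\big(\hat\lambda_2 - \lambda_2\big) + \frac{\partial \hat t_2}{\partial \hat\phi}\Big|_{\lambda_1,\lambda_2,\phi}\big(\hat\phi - \phi\big), $$

where

$$ t_2 = \frac{11\big[\lambda_1\big((1 - d_1) - \phi(1 - d_2)\big) + \lambda_2(d_1 - d_2)\big] + t_1\big(\lambda_1 d_1 + (1 - d_1)\lambda_2\big)}{\phi\lambda_1 d_2 + (1 - d_2)\lambda_2}, $$

and, ignoring the covariances between the estimates,

$$ \operatorname{Var}(\hat t_2) = \Big(\frac{\partial \hat t_2}{\partial \hat\lambda_1}\Big|_{\lambda_1,\lambda_2,\phi}\Big)^2 \operatorname{Var}(\hat\lambda_1) + \Big(\frac{\partial \hat t_2}{\partial \hat\lambda_2}\Big|_{\lambda_1,\lambda_2,\phi}\Big)^2 \operatorname{Var}(\hat\lambda_2) + \Big(\frac{\partial \hat t_2}{\partial \hat\phi}\Big|_{\lambda_1,\lambda_2,\phi}\Big)^2 \operatorname{Var}(\hat\phi), $$

which requires distributional assumptions for $\hat\lambda_1$, $\hat\lambda_2$ and $\hat\phi$. This method is therefore not recommended here.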

Having the variance of the counterfactual duration we can compute confidence intervals

for t2 and we can get the counterfactual distribution.
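For comparison with the bootstrap, the linearization can be evaluated numerically. The sketch below, with hypothetical parameter values and variances, computes a central finite-difference gradient of $\hat t_2$ and the quadratic form $\nabla^\top \Sigma \nabla$; with a diagonal Σ this is exactly the sum of squared-derivative terms displayed above. It is not valid at the kink where $t_2$ crosses week 11, and it inherits the distributional assumptions the text warns about. The function names are illustrative.

```python
import numpy as np

def t2_hat(params, t1, cut=11.0):
    """Counterfactual duration of Section 4.1.1 for one t1, as a function of
    params = (lam1, lam2, phi)."""
    lam1, lam2, phi = params
    s1 = lam1 * t1 if t1 <= cut else lam1 * cut + lam2 * (t1 - cut)
    first = phi * lam1 * cut
    return s1 / (phi * lam1) if s1 <= first else cut + (s1 - first) / lam2

def delta_method_var(f, params, cov, eps=1e-6):
    """Var f(params_hat) ~= grad' Cov grad, with a central finite-difference gradient.
    A diagonal cov reproduces the sum of squared-derivative terms in the text."""
    params = np.asarray(params, dtype=float)
    grad = np.zeros_like(params)
    for k in range(params.size):
        step = np.zeros_like(params)
        step[k] = eps
        grad[k] = (f(params + step) - f(params - step)) / (2 * eps)
    return grad @ cov @ grad, grad

params = (0.05, 0.08, 1.3)                  # hypothetical (lam1, lam2, phi) estimates
cov = np.diag([1e-6, 4e-6, 1e-2])           # hypothetical sampling variances, no covariances
var, grad = delta_method_var(lambda p: t2_hat(p, t1=20.0), params, cov)
print(grad, var)
```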

4.1.2

II. Now consider the following MPH model:

$$ \eta e^{x_1\beta}\Lambda(t_1) = z \iff e^{x_1\beta}\Lambda(t_1) = s, $$

where s is the semi-integrated hazard for the observed spell of unemployment of the non-treated group. If $x_2$ is the counterfactual treatment, we can find the counterfactual duration $t_2$ from

$$ \eta e^{x_2\beta}\Lambda(t_2) = z \iff e^{x_2\beta}\Lambda(t_2) = s \;\Rightarrow\; t_2 = \Lambda^{-1}\Big(\frac{z}{\eta e^{x_2\beta}}\Big) = \Lambda^{-1}\Big(\frac{s}{e^{x_2\beta}}\Big) = \Lambda^{-1}\big(e^{(x_1 - x_2)\beta}\Lambda(t_1)\big). $$

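Assume three breaking points $c_1, c_2, c_3$. The hazard of the first spell is

$$ \theta(t_1) = \begin{cases} \eta_i e^{x_{i1}\beta}, & \text{if } t_1 \le c_1,\\ \eta_i e^{x_{i1}\beta}\lambda_1, & \text{if } c_1 < t_1 \le c_2,\\ \eta_i e^{x_{i1}\beta}\lambda_2, & \text{if } c_2 < t_1 \le c_3,\\ \eta_i e^{x_{i1}\beta}\lambda_3, & \text{if } c_3 < t_1, \end{cases} $$

and the hazard of the second spell is

$$ \theta(t_2) = \begin{cases} \eta_i e^{x_{i2}\beta}, & \text{if } t_2 \le c_1,\\ \eta_i e^{x_{i2}\beta}\lambda_1, & \text{if } c_1 < t_2 \le c_2,\\ \eta_i e^{x_{i2}\beta}\lambda_2, & \text{if } c_2 < t_2 \le c_3,\\ \eta_i e^{x_{i2}\beta}\lambda_3, & \text{if } c_3 < t_2. \end{cases} $$

Define the indicators $d_{lj}$, with $l = 1, 2$ (for the two spells) and $j = 1, 2, 3, 4$ (for the intervals between the breaking points), as

$$ d_{l1} = \begin{cases} 1 & \text{if } t_l \le c_1\\ 0 & \text{otherwise} \end{cases}, \quad d_{l2} = \begin{cases} 1 & \text{if } c_1 < t_l \le c_2\\ 0 & \text{otherwise} \end{cases}, \quad d_{l3} = \begin{cases} 1 & \text{if } c_2 < t_l \le c_3\\ 0 & \text{otherwise} \end{cases}, \quad d_{l4} = \begin{cases} 1 & \text{if } c_3 < t_l\\ 0 & \text{otherwise.} \end{cases} $$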

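Then the integrated hazard for the first spell is

$$ \begin{aligned} z_1 &= \eta e^{x_1\beta}\Big(t_1 d_{11} + \big(c_1 + \lambda_1(t_1 - c_1)\big)d_{12} + \big(c_1 + \lambda_1(c_2 - c_1) + \lambda_2(t_1 - c_2)\big)d_{13}\\ &\qquad + \big(c_1 + \lambda_1(c_2 - c_1) + \lambda_2(c_3 - c_2) + \lambda_3(t_1 - c_3)\big)d_{14}\Big)\\ &= \eta e^{x_1\beta}\Big(c_1(1 - \lambda_1)(d_{12} + d_{13} + d_{14}) + c_2(\lambda_1 - \lambda_2)(d_{13} + d_{14}) + c_3(\lambda_2 - \lambda_3)d_{14}\\ &\qquad + t_1(d_{11} + \lambda_1 d_{12} + \lambda_2 d_{13} + \lambda_3 d_{14})\Big), \end{aligned} $$

and for the counterfactual spell

$$ \begin{aligned} z_2 &= \eta e^{x_2\beta}\Big(t_2 d_{21} + \big(c_1 + \lambda_1(t_2 - c_1)\big)d_{22} + \big(c_1 + \lambda_1(c_2 - c_1) + \lambda_2(t_2 - c_2)\big)d_{23}\\ &\qquad + \big(c_1 + \lambda_1(c_2 - c_1) + \lambda_2(c_3 - c_2) + \lambda_3(t_2 - c_3)\big)d_{24}\Big)\\ &= \eta e^{x_2\beta}\Big(c_1(1 - \lambda_1)(d_{22} + d_{23} + d_{24}) + c_2(\lambda_1 - \lambda_2)(d_{23} + d_{24}) + c_3(\lambda_2 - \lambda_3)d_{24}\\ &\qquad + t_2(d_{21} + \lambda_1 d_{22} + \lambda_2 d_{23} + \lambda_3 d_{24})\Big). \end{aligned} $$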

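Given that $z_1 = z_2$, we can find the counterfactual duration $t_2$ by solving

$$t_2 = \Lambda^{-1}\left(e^{(x_1-x_2)\beta}\Lambda(t_1)\right),$$

which gives

$$\begin{aligned} t_2 &= e^{(x_1-x_2)\beta}\,\frac{d_{11} + \lambda_1 d_{12} + \lambda_2 d_{13} + \lambda_3 d_{14}}{d_{21} + \lambda_1 d_{22} + \lambda_2 d_{23} + \lambda_3 d_{24}}\,t_1 \\ &\quad + e^{(x_1-x_2)\beta}\,\frac{c_1(1-\lambda_1)(d_{12}+d_{13}+d_{14}) + c_2(\lambda_1-\lambda_2)(d_{13}+d_{14}) + c_3(\lambda_2-\lambda_3)d_{14}}{d_{21} + \lambda_1 d_{22} + \lambda_2 d_{23} + \lambda_3 d_{24}} \\ &\quad - \frac{c_1(1-\lambda_1)(d_{22}+d_{23}+d_{24}) + c_2(\lambda_1-\lambda_2)(d_{23}+d_{24}) + c_3(\lambda_2-\lambda_3)d_{24}}{d_{21} + \lambda_1 d_{22} + \lambda_2 d_{23} + \lambda_3 d_{24}}.\end{aligned}$$

Because the indicators $d_{2j}$ depend on $t_2$ itself, the expression is applied on the interval $j$ that actually contains the resulting $t_2$.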
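The inversion $t_2 = \Lambda^{-1}(e^{(x_1-x_2)\beta}\Lambda(t_1))$ can also be computed directly for a piecewise-constant baseline, which sidesteps choosing the indicators $d_{2j}$ by hand. The Python sketch below assumes, as above, a baseline equal to 1 before $c_1$ and equal to $\lambda_1, \lambda_2, \lambda_3$ afterwards; the breaking points, hazard levels, and the value of $(x_1 - x_2)\beta$ are hypothetical illustration values, not estimates from this paper, and the function names are ours.

```python
import math
from bisect import bisect_right


def make_baseline(breaks, levels):
    """Integrated baseline hazard Lambda and its inverse for a piecewise-constant hazard.

    breaks: increasing breaking points, e.g. [c1, c2, c3]
    levels: hazard on each interval, e.g. [1, lam1, lam2, lam3] (all > 0)
    """
    if len(levels) != len(breaks) + 1 or min(levels) <= 0:
        raise ValueError("need len(breaks) + 1 positive hazard levels")
    edges = [0.0] + list(breaks)
    # cum[k] = Lambda(edges[k]), the integrated hazard at the start of interval k
    cum = [0.0]
    for k in range(len(breaks)):
        cum.append(cum[-1] + levels[k] * (edges[k + 1] - edges[k]))

    def Lambda(t):
        k = bisect_right(edges, t) - 1  # interval that contains t
        return cum[k] + levels[k] * (t - edges[k])

    def Lambda_inv(s):
        k = bisect_right(cum, s) - 1  # interval in which Lambda reaches s
        return edges[k] + (s - cum[k]) / levels[k]

    return Lambda, Lambda_inv


def counterfactual_duration(t1, shift_xb, Lambda, Lambda_inv):
    """t2 = Lambda^{-1}(exp((x1 - x2) * beta) * Lambda(t1)), with shift_xb = (x1 - x2) * beta."""
    return Lambda_inv(math.exp(shift_xb) * Lambda(t1))


# Hypothetical values: c = (4, 8, 12), lambdas = (0.8, 0.6, 0.5), (x1 - x2) * beta = -0.3
Lam, Lam_inv = make_baseline([4.0, 8.0, 12.0], [1.0, 0.8, 0.6, 0.5])
t2 = counterfactual_duration(6.0, -0.3, Lam, Lam_inv)
print(round(t2, 4))  # 4.1857
```

Because $\Lambda$ is continuous and strictly increasing, the interval search in `Lambda_inv` automatically selects the $d_{2j}$ that is consistent with the resulting $t_2$.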

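Since $\Lambda$ is increasing, $t_2 < t_1$ if and only if $(x_{i1} - x_{i2})\beta < 0$. An estimate of the counterfactual duration can be obtained by replacing $\lambda_1$, $\lambda_2$, $\lambda_3$ with their estimates, which are again obtained by solving the moment conditions of Ridder and Woutersen (2001). Thus,

$$\begin{aligned} \hat t_2 &= e^{(x_1-x_2)\beta}\,\frac{d_{11} + \hat\lambda_1 d_{12} + \hat\lambda_2 d_{13} + \hat\lambda_3 d_{14}}{d_{21} + \hat\lambda_1 d_{22} + \hat\lambda_2 d_{23} + \hat\lambda_3 d_{24}}\,t_1 \\ &\quad + e^{(x_1-x_2)\beta}\,\frac{c_1(1-\hat\lambda_1)(d_{12}+d_{13}+d_{14}) + c_2(\hat\lambda_1-\hat\lambda_2)(d_{13}+d_{14}) + c_3(\hat\lambda_2-\hat\lambda_3)d_{14}}{d_{21} + \hat\lambda_1 d_{22} + \hat\lambda_2 d_{23} + \hat\lambda_3 d_{24}} \\ &\quad - \frac{c_1(1-\hat\lambda_1)(d_{22}+d_{23}+d_{24}) + c_2(\hat\lambda_1-\hat\lambda_2)(d_{23}+d_{24}) + c_3(\hat\lambda_2-\hat\lambda_3)d_{24}}{d_{21} + \hat\lambda_1 d_{22} + \hat\lambda_2 d_{23} + \hat\lambda_3 d_{24}},\end{aligned}$$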

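and the variance is obtained, again, either by the bootstrap method or from

$$\mathrm{Var}\left(\hat t_2\right) = \left(\frac{\partial \hat t_2}{\partial \hat\lambda_1}\Big|_{\lambda_1,\lambda_2,\lambda_3}\right)^2 \mathrm{Var}\left(\hat\lambda_1\right) + \left(\frac{\partial \hat t_2}{\partial \hat\lambda_2}\Big|_{\lambda_1,\lambda_2,\lambda_3}\right)^2 \mathrm{Var}\left(\hat\lambda_2\right) + \left(\frac{\partial \hat t_2}{\partial \hat\lambda_3}\Big|_{\lambda_1,\lambda_2,\lambda_3}\right)^2 \mathrm{Var}\left(\hat\lambda_3\right).$$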

Again, given the variance of the counterfactual duration, we can compute confidence intervals for $t_2$ and obtain the counterfactual distribution.
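As a hedged illustration of the analytical alternative to the bootstrap, the Python sketch below evaluates the variance formula above by approximating each partial derivative $\partial \hat t_2/\partial\hat\lambda_k$ with a central finite difference and, like that formula, ignores covariances between the $\hat\lambda_k$. The breaking points, the value $e^{(x_1-x_2)\beta} = e^{-0.3}$, the estimates, and their variances are all hypothetical; in practice they would come from the moment estimator.

```python
import math


def counterfactual_t2(t1, lams, breaks=(4.0, 8.0, 12.0), shift=math.exp(-0.3)):
    """t2 = Lambda^{-1}(shift * Lambda(t1)); baseline 1 on [0, c1] and lams[k] afterwards.

    breaks and shift = exp((x1 - x2) * beta) are hypothetical illustration values.
    """
    levels = [1.0] + list(lams)
    edges = [0.0] + list(breaks) + [math.inf]
    s = 0.0  # Lambda(t1)
    for k, lev in enumerate(levels):
        if t1 <= edges[k]:
            break
        s += lev * (min(t1, edges[k + 1]) - edges[k])
    target = shift * s
    acc = 0.0  # integrated hazard at the start of interval k
    for k, lev in enumerate(levels):
        step = lev * (edges[k + 1] - edges[k])
        if acc + step >= target:
            return edges[k] + (target - acc) / lev
        acc += step
    raise ValueError("target not reached")


def delta_method_variance(f, theta, variances, rel_step=1e-5):
    """Var(f(theta_hat)) ~ sum_k (df/dtheta_k)^2 Var(theta_hat_k), ignoring covariances.

    Partial derivatives are central finite differences around the estimates theta.
    """
    total = 0.0
    for k, v in enumerate(variances):
        step = rel_step * max(abs(theta[k]), 1.0)
        up, down = list(theta), list(theta)
        up[k] += step
        down[k] -= step
        deriv = (f(up) - f(down)) / (2.0 * step)
        total += deriv ** 2 * v
    return total


lam_hat = [0.8, 0.6, 0.5]     # hypothetical estimates of lambda_1, lambda_2, lambda_3
var_lam = [0.01, 0.02, 0.03]  # hypothetical variances of those estimates
var_t2 = delta_method_variance(lambda lams: counterfactual_t2(6.0, lams), lam_hat, var_lam)
print(round(var_t2, 5))
```

For an observed duration of 6 weeks only $\hat\lambda_1$ enters $\hat t_2$, so the other two derivatives are zero; for longer spells all three terms contribute.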

4.1.3

Problems in estimation

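If $x_1 = x_2$, then $\Delta x = x_1 - x_2 = 0$ and

$$t_2 = \Lambda^{-1}\left(e^{\Delta x\beta}\Lambda(t_1)\right) = \Lambda^{-1}\left(\Lambda(t_1)\right) = t_1,$$

so the counterfactual duration equals the observed one. When $x_1 = 0$, since $e^{x_2\beta}\Lambda(t_2) = e^{x_1\beta}\Lambda(t_1)$, we have

$$x_2\beta + \ln\Lambda(t_2) = \ln\Lambda(t_1) \;\Rightarrow\; x_2 = -\frac{1}{\beta}\ln\frac{\Lambda(t_2)}{\Lambda(t_1)}.$$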

4.2

MPH model examples

Suppose that at the beginning of week 25, 18 people are unemployed. Assume the baseline hazard is 1 for all periods ($\lambda_{L-1} = \lambda_L = 1$). In week 25, 6 find a job ($P_{Leave,L-1} = 6/18 = 1/3$). In week 26, 2 of the remaining 12 find a job ($P_{Leave,L} = 2/12 = 1/6$).

I. Consider $P_{Leave,L}$, the fraction of leavers during the last period, $P_{Leave,L} = 1 - e^{-\eta\lambda_L}$, and assume $\eta_i = \eta$. Then

$$\eta = -\frac{\ln(1 - P_{Leave,L})}{\lambda_L}.$$

Using only data on the last period, we find

$$\eta = -\frac{\ln(1 - P_{Leave,L})}{\lambda_L} = -\ln\left(1 - \frac{1}{6}\right) = -\ln\frac{5}{6} = \ln 6 - \ln 5 = 0.182.$$
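The arithmetic of case I can be reproduced in a few lines of Python; the function name is ours, and the input is the week-26 figure from the example (2 of the 12 remaining people leave, with $\lambda_L = 1$).

```python
import math


def eta_homogeneous(p_leave, lam=1.0):
    """Solve P_leave = 1 - exp(-eta * lam) for eta (case I: eta_i = eta for everyone)."""
    return -math.log(1.0 - p_leave) / lam


eta = eta_homogeneous(2 / 12)  # week 26: 2 of the 12 remaining leave, lambda_L = 1
print(round(eta, 3))  # 0.182
```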

II. Assume $\eta_i \in \{\eta_H, \eta_L\}$. Similarly, given $P_{Leave,L}$, $P_{Leave,L-1}$, $\lambda_{L-1}$, and $\lambda_L$, we can find $\eta_H$ and $p$, where with probability $p$, $\eta = \eta_H$ and with probability $1 - p$, $\eta = \eta_L = 0$. Thus,

$$P_{Leave,L-1} = \Pr(\text{Leave at } L-1 \mid \eta_H)\Pr(\eta_H) = \left(1 - e^{-\eta_H\lambda_{L-1}}\right)p, \tag{1}$$

$$P_{Leave,L} = \Pr(\text{Leave at } L \mid \eta_H, \text{survived } L-1)\Pr(\eta_H \mid \text{survived } L-1) = \left(1 - e^{-\eta_H\lambda_L}\right)\frac{p\,e^{-\eta_H\lambda_{L-1}}}{1 - P_{Leave,L-1}}, \tag{2}$$

where $1 - P_{Leave,L-1} = 2/3$ is the fraction still unemployed at the start of week 26. Solving this system of two equations in two unknowns gives $p$ and $\eta_H$. From the first equation,

$$p = \frac{P_{Leave,L-1}}{1 - e^{-\eta_H\lambda_{L-1}}} = \frac{1}{3\left(1 - e^{-\eta_H\lambda_{L-1}}\right)}.$$

Substituting $p$ into the second equation gives $e^{-\eta_H} = 1/3$. Thus $\eta_H = -\ln(1/3) = 1.0986$ and

$$p = \frac{1}{3\left(1 - e^{-\eta_H\lambda_{L-1}}\right)} = \frac{1}{3 - 3\cdot\frac{1}{3}} = 0.5.$$
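With $\lambda_{L-1} = \lambda_L = 1$, substituting (1) into (2) leaves $P_{Leave,L} = P_{Leave,L-1}\,e^{-\eta_H}/(1 - P_{Leave,L-1})$, so the system has a closed-form solution. The Python sketch below (function name ours) solves it under exactly the assumptions of the example, including $\eta_L = 0$, and rejects inputs with no admissible solution.

```python
import math


def two_type_mixture(p_prev, p_last):
    """Solve equations (1)-(2) for (eta_H, p), assuming lambda_{L-1} = lambda_L = 1 and eta_L = 0.

    With q = exp(-eta_H):
      (1) p_prev = p * (1 - q)
      (2) p_last = (1 - q) * p * q / (1 - p_prev) = p_prev * q / (1 - p_prev)
    so (2) pins down q, and (1) then gives p.
    """
    q = p_last * (1.0 - p_prev) / p_prev
    if not 0.0 < q < 1.0:
        raise ValueError("no solution with 0 < exp(-eta_H) < 1")
    p = p_prev / (1.0 - q)
    if p > 1.0:
        raise ValueError("implied mixing probability exceeds 1")
    return -math.log(q), p


eta_h, p = two_type_mixture(6 / 18, 2 / 12)
print(round(eta_h, 4), round(p, 4))  # 1.0986 0.5
```

As a check, 9 high-$\eta$ people start week 25, 6 of them leave, and 2 of the remaining 3 leave in week 26, which reproduces the observed counts.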

5

Estimation Results

Table 1. Regression results for unobserved heterogeneity on observables (for the claimant bonus experiment)

Valid cases: 8134; dependent variable: $\hat\eta_i$; R-squared: 0.025

| Variable | Estimate | Standard error | t-value | p-value | Correlation with $\hat\eta_i$ |
|---|---|---|---|---|---|
| Constant | 0.0798 | 0.0011 | 70.3992 | 0.000 | − |
| $\text{age} - \overline{\text{age}}$ | −0.00057 | 0.0001 | −6.9751 | 0.000 | −0.0728 |
| $(\text{age} - \overline{\text{age}})^2$ | 0.00003 | 0.00001 | 4.2421 | 0.000 | 0.0209 |
| $\log(\text{BPE}) - \overline{\log(\text{BPE})}$ | 0.0057 | 0.0016 | 3.4454 | 0.001 | −0.0244 |
| black | −0.0132 | 0.0013 | −9.6831 | 0.000 | −0.1102 |
| male | 0.0010 | 0.0012 | 0.8406 | 0.401 | 0.0133 |
| $\log(\text{wb\_d}) - \overline{\log(\text{wb\_d})}$ | −0.0136 | 0.0028 | −4.8235 | 0.000 | −0.0607 |

Table 2. Regression results for unobserved heterogeneity on observables (for treated observations)

Valid cases: 4183; dependent variable: $\hat\eta_i$; R-squared: 0.023

| Variable | Estimate | Standard error | t-value | p-value | Correlation with $\hat\eta_i$ |
|---|---|---|---|---|---|
| Constant | 0.0829 | 0.0016 | 51.4813 | 0.000 | − |
| $\text{age} - \overline{\text{age}}$ | −0.0006 | 0.0001 | −5.5307 | 0.000 | −0.0781 |
| $(\text{age} - \overline{\text{age}})^2$ | 0.00003 | 0.00001 | 3.1328 | 0.002 | 0.0127 |
| $\log(\text{BPE}) - \overline{\log(\text{BPE})}$ | 0.0053 | 0.0023 | 2.2966 | 0.022 | −0.0162 |
| black | −0.0134 | 0.0019 | −6.8638 | 0.000 | −0.1083 |
| male | −0.0004 | 0.0017 | −0.2389 | 0.811 | 0.0038 |
| $\log(\text{wb\_d}) - \overline{\log(\text{wb\_d})}$ | −0.0103 | 0.0039 | −2.5990 | 0.009 | −0.0472 |

The results in Tables 1 and 2 show that $\hat\eta_i$ is correlated with the observables (for example, its correlation with the indicator for black is −0.1102 in Table 1 and −0.1083 in Table 2). This result suggests that the MPH model is the appropriate hazard model for estimating the unemployment duration.

Table 3. Regression results for the treatment effect

Valid cases: 8134; dependent variable: duration of unemployment; total SS: 1293806.222; degrees of freedom: 8132; R-squared: 0.003

| Variable | Estimate | Standard error | t-value | p-value |
|---|---|---|---|---|
| Constant | 17.2816 | 0.1947 | 88.7369 | 0.000 |
| X = 1 (control group) | 1.3391 | 0.2794 | 4.7922 | 0.000 |

Table 4. Results for the average treatment effect for treated versus non-treated

| | Control group (X = 1) | Treated, all (X = 0) | Causal effect, $ATE = E(Y_{nt}) - E(Y_t)$ |
|---|---|---|---|
| Benefit weeks | 18.6207 | 17.28 | 1.3391 |
| Standard errors | 0.2028 | 0.2000 | 0.2739 |
| N (individuals) | 3951 | 4183 | − |

Comparing Tables 3 and 4, we obtain the same treatment effect (1.3391 weeks) and nearly identical standard errors (0.2794 and 0.2739) whether we regress the duration of unemployment on the treatment dummy or compute the average treatment effect directly and bootstrap its standard error.
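The equivalence holds because, with a single binary regressor, the OLS slope equals the difference in group means. The Python sketch below illustrates this on synthetic exponential durations (not the Illinois data) and bootstraps the standard error by resampling each group with replacement; the group sizes, means, seeds, and number of replications are arbitrary choices, and the dummy follows Table 3 in equalling 1 for the control group.

```python
import math
import random
import statistics


def ate(control, treated):
    """ATE = E(Y_nt) - E(Y_t): mean duration of the control group minus that of the treated."""
    return statistics.fmean(control) - statistics.fmean(treated)


def ols_slope_on_dummy(control, treated):
    """OLS slope of duration on a dummy equal to 1 for the control group."""
    y = list(control) + list(treated)
    d = [1.0] * len(control) + [0.0] * len(treated)
    d_bar, y_bar = statistics.fmean(d), statistics.fmean(y)
    sxy = sum((di - d_bar) * (yi - y_bar) for di, yi in zip(d, y))
    sxx = sum((di - d_bar) ** 2 for di in d)
    return sxy / sxx


def bootstrap_se(control, treated, reps=1000, seed=0):
    """Bootstrap standard error of the ATE, resampling each group with replacement."""
    rng = random.Random(seed)
    draws = [
        ate(rng.choices(control, k=len(control)), rng.choices(treated, k=len(treated)))
        for _ in range(reps)
    ]
    return statistics.stdev(draws)


# Synthetic data: exponential durations with means of roughly 18.6 and 17.3 weeks
gen = random.Random(1)
control = [gen.expovariate(1 / 18.6) for _ in range(800)]
treated = [gen.expovariate(1 / 17.3) for _ in range(800)]
effect = ate(control, treated)
slope = ols_slope_on_dummy(control, treated)
se = bootstrap_se(control, treated)
analytic_se = math.sqrt(statistics.variance(control) / len(control)
                        + statistics.variance(treated) / len(treated))
print(f"ATE {effect:.4f}, OLS slope {slope:.4f}, "
      f"bootstrap SE {se:.4f}, analytic SE {analytic_se:.4f}")
```

The bootstrap and the two-sample analytic standard error should be close, which mirrors the small gap between 0.2794 and 0.2739 in the tables.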

Table 5. IV regression results for the treatment effect on the treated

Valid cases: 8134; dependent variable: duration of unemployment; total SS: 1293806.222; degrees of freedom: 8132; R-squared: 0.004

| Variable | Estimate | Standard error | t-value | p-value |
|---|---|---|---|---|
| Constant | 17.0565 | 0.2120 | 80.4336 | 0.000 |
| $\hat X = R(R'R)^{-1}R'X$ | 1.8023 | 0.3286 | 5.4845 | 0.000 |

When we instrument the treatment dummy $X$ with $R$ (IV regression), we obtain the treatment effect on the treated. In this case the R-squared increases slightly, and the estimated effect rises from 1.3391 weeks to 1.8023 weeks. This result suggests that if the program were applied to the overall population, unemployment durations would be shorter and, thus, UI claims would be lower.
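With the assignment $R$ as the only excluded instrument and a constant in the instrument set, the first stage $\hat X = R(R'R)^{-1}R'X$ makes the 2SLS slope equal to $\operatorname{cov}(R, Y)/\operatorname{cov}(R, X)$, which for a binary $R$ is the Wald estimator. The Python sketch below checks this identity on synthetic data in which treatment requires $R = 1$ and low-$\eta$ individuals refuse it more often; the data-generating process, effect size, and seed are hypothetical.

```python
import random
import statistics


def iv_slope(y, x, r):
    """Just-identified IV slope with a constant: cov(r, y) / cov(r, x).

    This equals the 2SLS slope from regressing y on X_hat = R (R'R)^{-1} R'X when the
    instrument matrix R holds a constant and the assignment dummy r.
    """
    r_bar, x_bar, y_bar = statistics.fmean(r), statistics.fmean(x), statistics.fmean(y)
    cov_ry = sum((ri - r_bar) * (yi - y_bar) for ri, yi in zip(r, y))
    cov_rx = sum((ri - r_bar) * (xi - x_bar) for ri, xi in zip(r, x))
    return cov_ry / cov_rx


def wald(y, x, r):
    """Difference in mean outcomes by assignment over the difference in treatment take-up."""
    def mean_where(v, g):
        return statistics.fmean([vi for vi, ri in zip(v, r) if ri == g])
    return (mean_where(y, 1) - mean_where(y, 0)) / (mean_where(x, 1) - mean_where(x, 0))


# Hypothetical data: r is randomly assigned; take-up x needs r = 1 and is less likely
# for individuals with a low eta, so x is correlated with eta (selective compliance).
rng = random.Random(2)
r, x, y = [], [], []
for _ in range(5000):
    ri = rng.randint(0, 1)
    eta = rng.gauss(0.0, 1.0)
    xi = 1 if (ri == 1 and eta > -1.0) else 0
    yi = 18.0 - 1.8 * xi - 2.0 * eta + rng.gauss(0.0, 3.0)
    r.append(ri)
    x.append(xi)
    y.append(yi)

beta_iv = iv_slope(y, x, r)
print(round(beta_iv, 4), round(wald(y, x, r), 4))
```

In this simulated design the treatment effect is the same for everyone, so the IV slope should be close to the assumed −1.8; the sign convention differs from Table 5, where the instrumented regressor is defined differently.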

The following results refer to the counterfactual duration for non-compliers.

5.1

Assumption 1

We assume that people who have not found a job after 26 weeks will never find one. Thus, we censor observations that exceed 26 weeks at 26 weeks and compute counterfactuals for the non-compliance group, which contains 658 individuals. To derive the counterfactual durations we use the baseline hazard estimates obtained by Ridder and Woutersen (2001). The average duration of unemployment for non-compliers (Table 7) is slightly smaller than that of the control group (18.48 versus 18.6207 weeks; neither group is treated). Also, the distribution of the unemployment duration of non-compliers (Fig. 4) differs from that of the control group (Fig. 2): the non-compliers' durations lie below those of the control group throughout. This suggests that non-compliers have different incentives to find a job in the absence of treatment and that their unobserved heterogeneity differs from that of the control and compliance groups (see Figs. 2, 3, and 4). The individuals in the non-compliance group seem more willing to find a job quickly. Thus, we expect that, if treated, they would have a shorter duration of unemployment than the compliers in the treatment group (the average counterfactual duration for non-compliers would be smaller than the average duration of unemployment for compliers; see Table 7). The counterfactual durations and their standard errors, obtained using the bootstrap method, are presented in Table 6.

Table 6. Counterfactual durations and standard errors for the non-compliance group

| Weeks of unemployment | Counterfactual (26 breaking points) | Standard error |
|---|---|---|
| 1 | 0.15 | 0.0223 |
| 2 | 1.15 | 0.0003 |
| 3 | 2.05 | 0.00001 |
| 4 | 3 | 0.0002 |
| 5 | 3.95 | 0.0244 |
| 6 | 4.85 | 0.0243 |
| 7 | 5.8 | 0.1305 |
| 8 | 6.7 | 0.1227 |
| 9 | 7.65 | 0.0485 |
| 10 | 8.55 | 0.0486 |
| 11 | 9.5 | 0.0600 |
| 12 | 10.4 | 0.0723 |
| 13 | 11.35 | 0.0728 |
| 14 | 12.25 | 0.0837 |
| 15 | 13.2 | 0.1684 |
| 16 | 14.15 | 0.3385 |
| 17 | 15.05 | 0.1642 |
| 18 | 16 | 0.1202 |
| 19 | 16.9 | 0.1085 |
| 20 | 17.85 | 0.1082 |
| 21 | 18.75 | 0.1139 |
| 22 | 19.7 | 0.1326 |
| 23 | 20.6 | 0.1121 |
| 24 | 21.55 | 0.1106 |
| 25 | 22.5 | 0.1147 |
| 26 | 22.95 | 0.1437 |

For individuals in the non-compliance group who found a job within 26 weeks, the duration of unemployment would decrease if they received the treatment; the reduction grows with the observed duration, from 0.85 weeks for a one-week spell to 3.05 weeks at the 26-week censoring point. People with shorter durations of unemployment (less than 11 weeks) would, if treated, have shorter durations than treated people with longer durations of unemployment.

Table 7 presents the average unemployment duration of non-compliers without the treatment and their average counterfactual duration with the treatment, and compares them with the other groups.

Table 7. Average unemployment durations and standard errors obtained using the bootstrap method with 5000 replications

Number of individuals in the claimant experiment: 8134

| | Control group (X = 1) | Treated, all (X = 0) | Treated, compliance (R = 1) | Non-compliance (R = 0) | Counterfactual, non-compliance |
|---|---|---|---|---|---|
| Benefit weeks | 18.6207 | 17.28 | 17.056 | 18.48 | 16.41 |
| Standard errors | 0.2028 | 0.2000 | 0.2103 | 0.4789 | 0.3877 |
| N (individuals) | 3951 | 4183 | 3525 | 658 | 658 |

Table 8. Results for the average treatment effect (ATE) for the non-compliers

| | Non-compliance (R = 0) | Counterfactual, non-compliance | Causal effect, $ATE = E(Y_a) - E(Y_p)$ |
|---|---|---|---|
| Benefit weeks | 18.48 | 16.41 | 2.0719 |
| Standard errors | 0.4789 | 0.3877 | 0.6447 |
| N (individuals) | 658 | 658 | 658 |

If the non-compliance group had been treated, their average duration of unemployment would have been smaller than that of the compliance group (16.41 versus 17.056 weeks), albeit with a larger standard error. This is the result we expected. The causal effect (the average treatment effect for non-compliers) shows that their average unemployment duration would decrease by about 2 weeks (2.0719) if they were treated, a larger effect than the treatment effect on the treated estimated by the IV regression (1.8023 weeks). Thus, if the program were applied to the overall population, the treatment effect would be larger than the one estimated by Meyer. This result suggests that if the bonus were applied to the overall population, UI claims would be lower and the program would be more efficient than initially thought.

Figure 1: Distribution of unemployment duration for individuals from the claimant experiment (unemployment duration plotted against # of individuals).

Figure 2: Distribution of unemployment duration for individuals from the control group (unemployment duration plotted against # of individuals).

Figure 3: Distribution of unemployment duration for individuals from the compliance group (unemployment duration plotted against # of individuals).

Figure 4: Distribution of unemployment duration for individuals from the non-compliance group (unemployment duration plotted against # of individuals).

5.2

Assumption 2 (censored spells)

5.2.1

Estimating θ

First, we smooth the data by assuming that exits are uniformly distributed within the interval before leaving. We have

$$\theta(s;x) = \frac{f(s;x)}{1 - F(s;x)} \;\Rightarrow\; \int_0^t \theta(s;x)\,ds = -\ln\left(1 - F(t;x)\right),$$

where $F(\cdot\,;x)$, evaluated at the random duration, is uniformly distributed between 0 and 1. We consider the following hazard model:

$$\theta = \eta\,\phi(x)\,\lambda(t),$$

where $x$ is a time-invariant regressor with $\phi(x_0) \neq \phi(x_1)$, and $\eta$ is a realization of a mixing distribution. Let $\lambda(t)$ be a piecewise-constant function that allows for a different baseline hazard before and after $c$. For convenience, we refer to the individuals with $x = x_0$ as the treatment group and those with $x = x_1$ as the control group. We censor the treatment and control groups at $c_0$ and $c_1$, respectively, choosing the censoring points so that the survival probabilities are equal. Thus, we obtain sequences $c_{0j}$ and $c_{1j}$, $j = 1, 2, \ldots, 25$, by equating

$$\Pr(x = 1, j) = \Pr(x = 0, c_{0j}),\qquad \Pr(x = 0, j) = \Pr(x = 1, c_{1j}).$$

We have that

$$1 - \Pr(x = 0, c_{0j}) = e^{-c_{0j}\phi(x_0)},\qquad 1 - \Pr(x = 1, c_{1j}) = e^{-c_{1j}\phi(x_1)},$$

and that

$$s_{0,\max} = \frac{1}{\eta}\int_0^{c_0}\theta_i\,du = \phi(x_0)\,c_0,\qquad s_{1,\max} = \frac{1}{\eta}\int_0^{c_1}\theta_i\,du = \phi(x_1)\,c_1.$$

In large samples $s_{0,\max} = s_{1,\max}$. This suggests the following estimator for $\phi(x_1)/\phi(x_0)$:

$$\frac{\phi(x_1)}{\phi(x_0)} = \frac{c_0}{c_1}.$$

Using moments of the form

$$g_j = s_{0j}(c_{0j}, \lambda_{0j}) - s_{1j}(c_{1j}, \lambda_{1j}),\qquad j = 1, 2, \ldots, 25,$$

which are linear in the parameters $\lambda$, we estimate the hazard rates for the control and treatment groups.
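The matching of censoring points can be sketched as follows. Under the MPH model the survival function of group $x$ depends on $t$ only through $\phi(x)\Lambda(t)$, so with a baseline equal to 1 up to the censoring points, equal exit shares imply $\phi(x_0)c_0 = \phi(x_1)c_1$ and $c_0/c_1$ estimates $\phi(x_1)/\phi(x_0)$. The Python sketch below uses the empirical quantile of group 1 instead of the smoothing step described above, and simulates gamma heterogeneity with hypothetical values $\phi(x_0) = 0.05$ and $\phi(x_1) = 0.08$; the function names are ours.

```python
import math
import random


def match_censoring_point(dur0, dur1, c0):
    """Return c1 with share(dur1 <= c1) ~= share(dur0 <= c0), and the ratio c0 / c1.

    c0 / c1 estimates phi(x1) / phi(x0) when the baseline hazard equals 1 up to c0 and c1.
    """
    target = sum(t <= c0 for t in dur0) / len(dur0)
    if target == 0.0:
        raise ValueError("no exits before c0")
    s1 = sorted(dur1)
    k = max(1, math.ceil(target * len(s1)))  # smallest k with k / n1 >= target
    c1 = s1[k - 1]
    return c1, c0 / c1


def simulate_group(phi, n, rng, shape=2.0, scale=0.5):
    """MPH durations with baseline 1: eta ~ Gamma(shape, scale) (mean 1), t ~ Exp(eta * phi)."""
    return [rng.expovariate(rng.gammavariate(shape, scale) * phi) for _ in range(n)]


rng = random.Random(3)
dur0 = simulate_group(0.05, 20000, rng)  # hypothetical phi(x0)
dur1 = simulate_group(0.08, 20000, rng)  # hypothetical phi(x1)
c1, ratio = match_censoring_point(dur0, dur1, c0=10.0)
print(round(c1, 2), round(ratio, 3))  # ratio should be near 0.08 / 0.05 = 1.6
```

Because the mixing distribution is the same in both groups, the ratio is recovered without specifying it, which is the point of equating survival probabilities.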

Having estimates of the baseline hazard, we can compute the counterfactuals for the non-treated group. To estimate the heterogeneity we consider the following cases:

Results for the counterfactual durations and average durations for non-compliers will be provided.

6

Conclusion

In this paper we use a new estimator for the duration of unemployment that allows $\lambda(t)$ to be discontinuous. This estimator can deal with selective compliance, so we can use it to evaluate the Illinois re-employment bonus experiment. We find that allowing for selective compliance and unobserved heterogeneity leads to more optimistic conclusions about monetary incentives than those of Meyer (1996).

7

Appendices

Appendix 1

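We define $z_{is,\max} = \int_0^{c_{is}}\theta(s,x)\,ds$. Then

$$E d_{is} = \Pr\{t_{is} \le c_{is}\} = \Pr\left\{\int_0^{t_{is}}\theta(s,x)\,ds \le \int_0^{c_{is}}\theta(s,x)\,ds\right\} = \Pr\{z_{is} \le z_{is,\max}\} = 1 - e^{-z_{is,\max}},$$

since the integrated hazard $z_{is}$ is unit exponential. With $z_{is}$ censored at $z_{is,\max}$,

$$E z_{is} = \int_0^{z_{is,\max}} z_{is}\,e^{-z_{is}}\,dz_{is} + z_{is,\max}\,e^{-z_{is,\max}} = 1 - e^{-z_{is,\max}} = E d_{is}.$$

Q.E.D.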
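A quick Monte Carlo check of the Appendix 1 identity, assuming (as the derivation does) that the integrated hazard at the exit time is unit exponential: the mean of the censoring indicator and the mean of the censored integrated hazard should both be close to $1 - e^{-z_{\max}}$. The value $z_{\max} = 0.7$, the sample size, and the function name are arbitrary choices for illustration.

```python
import math
import random


def appendix1_check(z_max, n=200_000, seed=0):
    """Return (mean of d, mean of min(z, z_max), 1 - exp(-z_max)) for z ~ Exp(1)."""
    rng = random.Random(seed)
    zs = [rng.expovariate(1.0) for _ in range(n)]
    mean_d = sum(z <= z_max for z in zs) / n     # E d = Pr{z <= z_max}
    mean_z = sum(min(z, z_max) for z in zs) / n  # E of z censored at z_max
    return mean_d, mean_z, 1.0 - math.exp(-z_max)


mean_d, mean_z, theory = appendix1_check(0.7)
print(f"{mean_d:.4f} {mean_z:.4f} {theory:.4f}")
```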

Appendix 2

In this appendix we are concerned with the estimation of two hazard models. The first model is the exponential model with a gamma mixing distribution (shape $\alpha$, rate $\beta$):

$$\theta = \eta, \quad \eta \sim \text{Gamma}(\alpha, \beta). \tag{9}$$

This yields the following density function for $t$ given $\eta$:

$$f(t \mid \eta) = \eta e^{-\eta t}.$$

Let $M(\eta)$ denote the mixing distribution; the density of an observed realization has the following form:

$$g(t) = \int \eta e^{-\eta t}\,dM(\eta) = \int \frac{\beta^\alpha \eta^\alpha e^{-\eta(\beta + t)}}{\Gamma(\alpha)}\,d\eta = \frac{\alpha\beta^\alpha}{(\beta + t)^{\alpha+1}}. \tag{10}$$

However, the density of equation (10) could also be generated by the second model:

$$\theta = \frac{\alpha}{\beta + t}. \tag{11}$$
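The observational equivalence of models (9) and (11) can be checked numerically. The Python sketch below draws $\eta$ from a gamma distribution with shape $\alpha$ and rate $\beta$ (Python's `random.gammavariate` takes a scale, so it is passed $1/\beta$), draws exponential durations, and compares the empirical survivor function with $S(t) = (\beta/(\beta+t))^{\alpha}$ implied by (10), whose hazard $-\,d\ln S(t)/dt$ is exactly $\alpha/(\beta+t)$. The parameter values and function names are arbitrary.

```python
import math
import random


def simulate_gamma_mixture(alpha, beta, n, seed=0):
    """Durations from model (9): eta ~ Gamma(shape alpha, rate beta), t | eta ~ Exp(eta)."""
    rng = random.Random(seed)
    return [rng.expovariate(rng.gammavariate(alpha, 1.0 / beta)) for _ in range(n)]


def survivor(t, alpha, beta):
    """Marginal survivor function implied by density (10)."""
    return (beta / (beta + t)) ** alpha


def hazard_model_11(t, alpha, beta):
    """Hazard of model (11)."""
    return alpha / (beta + t)


alpha, beta = 2.0, 1.5
durations = simulate_gamma_mixture(alpha, beta, 100_000)
for t in (0.5, 1.0, 3.0):
    empirical = sum(d > t for d in durations) / len(durations)
    # hazard of the mixture, -d/dt log S(t), by central differences
    h = 1e-5
    num_hazard = -(math.log(survivor(t + h, alpha, beta))
                   - math.log(survivor(t - h, alpha, beta))) / (2 * h)
    print(t, round(empirical, 4), round(survivor(t, alpha, beta), 4),
          round(num_hazard, 6), round(hazard_model_11(t, alpha, beta), 6))
```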

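It follows from ER that we need a regressor to distinguish between the models of equations (9) and (11). Suppose this regressor takes two values, normalize $\phi(x_0)$ to one, and denote $\phi(x_1)$ by $\gamma$. We first estimate $\gamma$:

$$\hat\gamma = \frac{\frac{1}{N_0}\sum_{x=x_0} s_i(\varepsilon)}{\frac{1}{N_1}\sum_{x=x_1} s_i(\varepsilon)}.$$

In large samples we have the following for equation (9):

$$\hat\gamma \approx \frac{\frac{1}{N_0}\sum_{x=x_0} E s_i(\varepsilon)}{\frac{1}{N_1}\sum_{x=x_1} E s_i(\varepsilon)} = \frac{\gamma\varepsilon}{\varepsilon}.$$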

Appendix 3A

We first censor the treatment and control groups at $c$ and calculate

$$\bar d_0(c) = \frac{1}{N_0}\sum_{x=x_0} d_i \quad\text{and}\quad \bar d_1(c) = \frac{1}{N_1}\sum_{x=x_1} d_i.$$

Assume that $\bar d_0(c) < \bar d_1(c)$; otherwise relabel. Using the moment function

$$g_1(c_1(\theta)) = \frac{1}{N_0}\sum_{x=x_0} d_i - \frac{1}{N_1}\sum_{x=x_1} d_i,$$

or, equivalently, $g_1(c_1(\theta)) = \bar d_0(c) - \bar d_1(c_1)$, yields a censoring point $c_1$, and $\bar d_0(c) < \bar d_1(c)$ implies $c_1 < c_0$ (here $c_0 = c$). Note that

$$s_{0,\max} = \frac{1}{\eta}\int_0^{c_0}\theta_i\,du = \phi(x_0)\,c_0,\qquad s_{1,\max} = \frac{1}{\eta}\int_0^{c_1}\theta_i\,du = \phi(x_1)\,c_1.$$

In large samples, the moment function $g_1(c_1(\theta))$ yields $s_{0,\max} = s_{1,\max}$. This suggests the following estimator for $\phi(x_1)/\phi(x_0)$:

$$\frac{\phi(x_1)}{\phi(x_0)} = \frac{c}{c_1}. \tag{12}$$

Appendix 4

With full compliance and a time-invariant treatment effect, Theorem 2 would simply be a corollary of Theorem 1, and X would always have the same value as R. With partial compliance, X is a probabilistic function of R and η. For example, in our application, R = 1 is a necessary condition for treatment, X = 1, but people with low values of η refuse to participate in the re-employment bonus experiment more often. As a result, X is correlated with η and is not randomly assigned. In general, we do not need {X = 1 ⇒ R = 1}; it suffices for our estimator that R and X are correlated (for example, path-dependent preferences will do).

The artificial censoring point is a function of the regressor $X$, i.e., we censor at $c_0$ if $X = 0$ and at $c_1$ if $X = 1$. Consider the moment function $g_1(c_1 \mid c_0)$ and let $N_0$ and $N_1$ denote the number of individuals for whom $R = 0$ and $R = 1$, respectively:

$$\begin{aligned} g_1(c_1\mid c_0) &= \frac{1}{N_0}\sum_{R=0} d_i - \frac{1}{N_1}\sum_{R=1} d_i \\ &= \frac{1}{N_0}\sum_{R=0,X=0} d_i + \frac{1}{N_0}\sum_{R=0,X=1} d_i - \frac{1}{N_1}\sum_{R=1,X=0} d_i - \frac{1}{N_1}\sum_{R=1,X=1} d_i.\end{aligned}$$

Artificial censoring ensures that

$$E g_1(c_1\mid c_0) = E\left\{\frac{1}{N_0}\sum_{R=0,X=0} d_i + \frac{1}{N_0}\sum_{R=0,X=1} d_i - \frac{1}{N_1}\sum_{R=1,X=0} d_i - \frac{1}{N_1}\sum_{R=1,X=1} d_i\right\} = 0,$$

while the correlation between $R$ and $X$ ensures that $E g_1(c_1\mid c_0)$ is monotone in $c_1$. Choosing $c_0 = \varepsilon, 2\varepsilon, 3\varepsilon, \ldots$ and then determining $c_1$ gives enough moments to identify the baseline hazard in the setting of Theorem 1. We then add moments by choosing $c_1 = \varepsilon, 2\varepsilon, 3\varepsilon, \ldots$ to identify the treatment effect as a piecewise-constant function of time. For efficiency, we also use the moments of $g_2$.

Appendix 5

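Variances of moment functions

$$g_1 = \bar d_1 - \bar d_0,\qquad E g_1(\gamma_0, \lambda_0) = 0,$$

$$E g_1 g_1' = E(\bar d_1 - \bar d_0)^2 = \frac{1}{N^2}E\left\{\sum_i (d_{i1} - d_{i0})\right\}^2 = \frac{1}{N^2}\sum_i E\left\{(d_{i1} - d_{i0})^2\right\} = \frac{1}{N}E\left\{(d_{i1} - d_{i0})^2\right\}, \tag{13}$$

where the third equality uses independence across individuals and $E(d_{i1} - d_{i0}) = 0$. An estimator for the expression in (13) is

$$\widehat{E g_1 g_1'} = \frac{1}{N^2}\sum_i (d_{i1} - d_{i0})^2.$$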
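The estimator can be checked by simulation. In the Python sketch below, the pairs $(d_{i1}, d_{i0})$ are hypothetical independent Bernoulli draws with equal exit probability, so that $E g_1 = 0$ holds; the average of the estimator over replications is compared with the Monte Carlo variance of $g_1$ and with the exact value $2p(1-p)/N$ for this design. Sample size, exit probability, seed, and function names are illustrative choices.

```python
import random
import statistics


def g1(d1, d0):
    """Moment function g1 = mean(d1) - mean(d0)."""
    return statistics.fmean(d1) - statistics.fmean(d0)


def var_g1_estimate(d1, d0):
    """Estimator of E g1 g1': (1 / N^2) * sum_i (d_i1 - d_i0)^2."""
    n = len(d1)
    if n == 0 or n != len(d0):
        raise ValueError("d1 and d0 must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(d1, d0)) / n ** 2


rng = random.Random(4)
N, reps, p = 50, 4000, 0.4  # hypothetical sample size, replications, exit probability
g_values, estimates = [], []
for _ in range(reps):
    d1 = [1 if rng.random() < p else 0 for _ in range(N)]
    d0 = [1 if rng.random() < p else 0 for _ in range(N)]
    g_values.append(g1(d1, d0))
    estimates.append(var_g1_estimate(d1, d0))

exact = 2 * p * (1 - p) / N
print(round(statistics.pvariance(g_values), 5), round(statistics.fmean(estimates), 5),
      round(exact, 5))
```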

8

Literature

Chamberlain, G. (1985): “Heterogeneity, Omitted Variable Bias, and Duration Dependence,” in Longitudinal Analysis of Labor Market Data, ed. by J. J. Heckman and B. Singer. Cambridge: Cambridge University Press.

Cochran, W. G. (1965): “The Planning of Observational Studies of Human Populations” (with discussion), Journal of the Royal Statistical Society, Series A, 128, 234-266.

Cox, D. R. (1958): Planning of Experiments. New York: Wiley.

Dolton, P., and D. O’Neill (1996): “Unemployment Duration and the Restart Effect: Some Experimental Evidence,” The Economic Journal, 106, 387-400.

Elbers, C., and G. Ridder (1982): “True and Spurious Duration Dependence: The Identifiability of the Proportional Hazard Model,” Review of Economic Studies, 49, 402-409.

Fisher, R. A. (1935): The Design of Experiments. Edinburgh: Oliver & Boyd.

Ridder, G., and T. Woutersen (2001).

Galles, D., and J. Pearl (1998): “An Axiomatic Characterization of Causal Counterfactuals,” Foundations of Science, 3, 151-182.

Glymour, C. (1986): “Statistics and Metaphysics,” Comment on “Statistics and Causal Inference” by P. W. Holland, Journal of the American Statistical Association, 81, 964-966.

Bijwaard, G. E., and G. Ridder (2002): “Correcting for Selective Compliance in a Re-employment Bonus Experiment,” USC Center for Law, Economics & Organization, Research Paper No. C01-15.

Gørgens, T., and J. L. Horowitz (1996): “Semiparametric Estimation of a Censored Regression Model with Unknown Transformation of the Dependent Variable,” Journal of Econometrics, 90, 155-191.

Härdle, W. (1990): Applied Nonparametric Regression. Cambridge: Cambridge University Press.

Heckman, J. J. (2000): “Causal Parameters and Policy Analysis in Economics: A Twentieth Century Retrospective,” Quarterly Journal of Economics, 115, 45-97.

Heckman, J. J. (1991): “Identifying the Hand of the Past: Distinguishing State Dependence from Heterogeneity,” American Economic Review, 81, 75-79.

Heckman, J. J., and G. J. Borjas (1980): “Does Unemployment Cause Future Unemployment? Definitions, Questions and Answers for a Continuous Time Model of Heterogeneity and State Dependence,” Economica, 47, 247-283.

Heckman, J. J., and B. Singer (1982): “The Identification Problem in Econometric Models for Duration Data,” in Advances in Econometrics, ed. by W. Hildenbrand. New York: Cambridge University Press.

Heckman, J. J., and B. Singer (1984): “A Method for Minimizing the Impact of Distributional Assumptions in Econometric Models for Duration Data,” Econometrica, 52, 271-320.

Honoré, B. E. (1990): “Simple Estimation of a Duration Model with Unobserved Heterogeneity,” Econometrica, 58, 453-473.

Honoré, B. E. (1993): “Identification Results for Duration Models with Multiple Spells,” Review of Economic Studies, 60, 241-246.

Honoré, B. E. (1998): “A Note on the Rate of Convergence of Estimators of Mixtures of Weibulls,” Working paper, Princeton University.

Honoré, B. E., and J. L. Powell (1998): “Pairwise Difference Estimators for Non-Linear Models,” Working paper, Princeton University.

Horowitz, J. L. (1996): “Semiparametric Estimation of a Regression Model with an Unknown Transformation of the Dependent Variable,” Econometrica, 64, 103-107.

Horowitz, J. L. (1999): “Semiparametric Estimation of a Proportional Hazard Model with Unobserved Heterogeneity,” Econometrica, 67, 1001-1028.

Kaplan, E. L., and P. Meier (1958): “Nonparametric Estimation from Incomplete Observations,” Journal of the American Statistical Association, 53, 457-481.

Kluve, J. (2001): “On the Role of Counterfactuals in Inferring Causal Effects of Treatments,” IZA Discussion Paper No. 354 (www.iza.org).

Lancaster, T. (1976): “Redundancy, Unemployment and Manpower Policy: a Comment,” Economic Journal, 86, 335-338.

Meyer, B. D. (1996): “What Have We Learned from the Illinois Reemployment Bonus Experiment?,” Journal of Labor Economics, 14(1), 26-51.

Neyman, J. (1923 [1990]): “On the Application of Probability Theory to Agricultural Experiments. Essay on Principles. Section 9,” translated and edited by D. M. Dabrowska and T. P. Speed from the Polish original, which appeared in Roczniki Nauk Rolniczych, Tom X (1923), 1-51 (Annals of Agriculture), Statistical Science, 5, 465-472.

Rubin, D. B. (1974): “Estimating Causal Effects of Treatments in Randomized and Nonrandomized Studies,” Journal of Educational Psychology, 66, 688-701.

Rubin, D. B. (1977): “Assignment to Treatment Group on the Basis of a Covariate,” Journal of Educational Statistics, 2, 1-26.

Rubin, D. B. (1980): “Comment” on “Randomization Analysis of Experimental Data: The Fisher Randomization Test” by D. Basu, Journal of the American Statistical Association, 75, 591-593.

Rubin, D. B. (1986): “Which Ifs Have Causal Answers,” Comment on “Statistics and Causal Inference” by P. W. Holland, Journal of the American Statistical Association, 81, 961-962.

Vytlacil, E. (2001): “Semiparametric Identification of the Average Treatment Effect in Nonseparable Models,” mimeo, 2000.

Woodbury, S. A., and R. G. Spiegelman (1987): “Bonuses to Workers and Employers to Reduce Unemployment: Randomized Trials in Illinois,” American Economic Review, 77, 513-530.

Woutersen, T. (2000): “Consistent Estimators for Panel Duration Models with Endogenous Regressors and Endogenous Censoring,” Research Report.