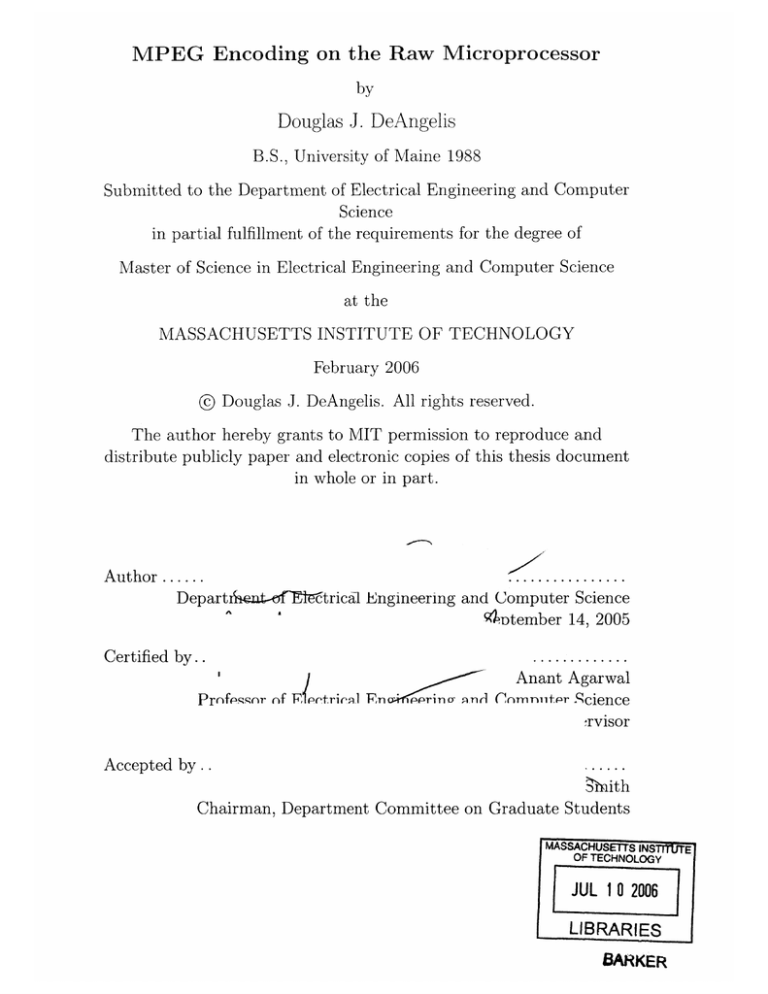

MPEG Encoding on the Raw Microprocessor

by

Douglas J. DeAngelis

B.S., University of Maine 1988

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

February 2006

© Douglas J. DeAngelis. All rights reserved.

The author hereby grants to MIT permission to reproduce and distribute publicly paper and electronic copies of this thesis document in whole or in part.

Author ......

Department of Electrical Engineering and Computer Science

September 14, 2005

Certified by ......

Anant Agarwal

Professor of Electrical Engineering and Computer Science

Thesis Supervisor

Accepted by ......

Smith

Chairman, Department Committee on Graduate Students

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

JUL 10 2006

LIBRARIES

BARKER

MPEG Encoding on the Raw Microprocessor

by

Douglas J. DeAngelis

Submitted to the Department of Electrical Engineering and Computer Science on September 14, 2005, in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering and Computer Science

Abstract

This work investigates the real-world application of MPEG-2 encoding on the Raw microprocessor. Much of the work involves partitioning the problem so that linear speedup can be achieved with respect to both the number of Raw tiles and the size of the video image being encoded. These speedups are confirmed through the use of real Raw hardware (up to 16 tiles) and the Raw simulator (up to 64 tiles). It will also be shown that processing power can be allocated at runtime, allowing a future Raw system to dynamically assign any number of tiles to the encoding task. In the process, both the advantages and the limitations of the Raw architecture will be discussed.

Thesis Supervisor: Anant Agarwal

Title: Professor of Electrical Engineering and Computer Science

Acknowledgments

The author would like to thank Hank Hoffmann for his oversight of this work and his gentle encouragement to press on in the darkest hours. Hank was responsible for the initial port of the MPEG encoder to Raw, which became the code base for this research, as well as the enhancements to motion estimation that greatly improved system performance.

Maria Sensale deserves mention for her ability to extract the most obscure papers in electronic form, thereby saving untold visits to the reading room. Forza Italia!

Thanks to everyone in the Raw group for bringing to life a very cool computer.

Special thanks to Anant Agarwal for encouraging the author to leave in 1992 and encouraging him to return in 2005.

Lastly, the author wishes to acknowledge his lovely wife Kim, who put up with the whole process while carrying our first child.

Contents

1 Introduction
  1.1 Contributions
  1.2 Organization
2 The MPEG Standard
  2.1 MPEG Encoding
  2.2 MPEG Syntax
3 Related Work
4 MPEG on the Raw Microprocessor
  4.1 The Raw Microprocessor
  4.2 Porting MPEG to Raw
5 Parallelizing MPEG on Raw
  5.1 A Parallel Encoder
  5.2 Conversion to Arbitrary Slice Size
6 Partitioning MPEG on Raw
7 Making Communication Efficient
  7.1 Improvements to memcpy
  7.2 Improvements to sendrecv
    7.2.1 Full Routing and Elemental Routes in Raw
    7.2.2 Border Routing
    7.2.3 Border Routing on Larger Raw Machines
  7.3 Alternate Macroblock Allocation
  7.4 Suggested Improvements to Raw
8 Experimental Results
  8.1 Phase Speedups
  8.2 Absolute Performance and Speedup
  8.3 Performance Across Sample Files
  8.4 Effect of Arbitrary Slice Size on Image Quality
  8.5 Comparing Hardware and Simulated Results
9 Conclusion
  9.1 Further Work

List of Figures

2-1 An MPEG encoder.
2-2 MPEG syntax.
4-1 The Raw Microprocessor.
4-2 352x240 pixel video frame mapped to a 15 tile Raw machine.
5-1 A parallel MPEG encoder.
5-2 Individual tile activity for a baseline encoding.
5-3 Slice map for 352x240 video on 16 tiles.
5-4 Individual tile activity after parallelizing putpic.
7-1 Bordering macroblocks required for motion estimation.
7-2 Example routes for Tile 0 sending data to all other tiles.
7-3 Possible routes for sending on Raw.
7-4 Possible routes for receiving from the East on Raw.
7-5 Determination of the receiver opcode when Border Routing.
7-6 Example routes for Tile 3 sending to Tiles 2, 4 and 5.
7-7 720x480 pixel video frame mapped to a 64 tile Raw machine.
7-8 352x240 pixel video frame mapped to a 16 tile Raw machine using a more square macroblock allocation scheme.
8-1 The video sequences chips360 and chips720.
8-2 The video sequence dhl.
8-3 Speedup of individual phases of the MPEG Algorithm for a 352x240 sequence on 16 tiles.
8-4 Speedup and absolute performance for a 352x240 sequence on 16 tiles using hardware.
8-5 Speedup and absolute performance for a 720x480 sequence on 16 tiles using hardware.
8-6 Speedup and absolute performance for a 352x240 sequence on 64 tiles using simulator.
8-7 Speedup and absolute performance for a 720x480 sequence on 64 tiles using simulator.
8-8 Comparison of speedups for the three sample sequences.
8-9 Comparison of a 720x480 encoding on the simulator and the hardware.

List of Tables

6.1 Macroblock partitioning for a variety of available tiles and problem sizes.
7.1 Number of macroblocks required on each tile in full frame memcpy compared to memcpy with border data.
7.2 Partial border routing map for 352x240 video on 16 tiles.
7.3 Comparison of number of the network hops required to full route and border route 352x240 video on 16 tiles.
7.4 Partial border routing map for 720x480 video on 64 tiles.
7.5 Partial border routing map for 720x480 video on 64 tiles with biased opcode.
7.6 Comparison of number of the network hops required to full route and border route 720x480 video on 64 tiles.
7.7 Comparison of number of the network hops required to route 352x240 video on 16 tiles when using a more square macroblock allocation scheme.
8.1 Characteristics of the video files used for testing the MPEG encoder.
8.2 Frame rates for encoding various sequence sizes on various numbers of tiles.
8.3 Comparison of SNR and file size for a 352x240 encoding using the baseline code and the parallel putpic code.
8.4 Calculating simulation correction factors for each phase of the MPEG algorithm.

Chapter 1

Introduction

As multimedia applications continue to demand greater performance on smaller platforms, the need for a general purpose multiprocessor that is also well suited to high-bandwidth real-time stream processing is increasing. The Raw microprocessor [23] is an example of just such a processor. The prototype Raw chip has 16 processors (called tiles) arranged in a 4x4 grid and connected to each other via four 32-bit full-duplex networks. With its ability to simultaneously move and operate on 128 bytes of data every clock cycle, Raw is ideally suited to a variety of multimedia tasks.

The goal of this research is to explore the adaptation of a particular real-world application to the Raw architecture. We chose MPEG-2 encoding for a number of reasons:

* There was an existing public domain code base [18] for the single processor case, allowing us to spend our time focusing on how best to adapt the problem to Raw.

* Unlike more "traditional" benchmarks such as SPECint and matmult, it is relatively easy to explain in layman's terms. By the end of this thesis we will, in fact, be able to give concrete numbers for how many tiles of a Raw chip would be required to encode DVD quality video in real time.

* As the foundation technology behind DVDs and videoconferencing, it offers a wide variety of avenues for further exploration.

1.1 Contributions

In the most general sense, this thesis is intended to demonstrate that a general purpose tiled-architecture microprocessor such as Raw can exhibit performance characteristics previously associated only with custom architectures. It will also demonstrate that this performance can be extracted using well understood programming toolsets (such as GNU C), rather than requiring the programmer to have an intimate knowledge of the underlying architecture.

More specifically, through the course of this work we hope to achieve the following goals:

* A parallel implementation of the MPEG-2 encoding algorithm that overcomes the major sequential dependency of MPEG while exploiting the Raw architecture to achieve the best baseline performance possible.

* A well partitioned implementation of the MPEG-2 encoding algorithm that allows an input data stream of arbitrary frame dimensions to be mapped to an arbitrary number of tiles at runtime.

* A scalable implementation of the MPEG-2 encoding algorithm that exhibits linear speedup with the addition of tiles as well as linear speedup with the reduction in video frame dimensions.

1.2 Organization

The rest of this thesis is organized as follows. Chapter 2 gives an overview of the MPEG algorithm and MPEG encoding. Chapter 3 discusses previous methodologies for parallelizing the MPEG algorithm. Chapter 4 is a brief introduction to the Raw microprocessor. Chapters 5, 6 and 7 outline the implementation of MPEG encoding on Raw. These chapters form the core of the thesis and address in turn the three fundamental goals: parallel, well partitioned and scalable. Chapter 8 goes on to demonstrate through experimentation that these goals were met.

Chapter 2

The MPEG Standard

The MPEG standard [9] describes a lossy compression algorithm for moving pictures. The MPEG-1 standard was intended to support applications with a continuous transfer bitrate of around 1.5 Mbps, matching the capability of early CD-ROMs. MPEG-2 uses the same basic compression methodology, but adds support for interlaced video (such as NTSC), much higher bitrates and a number of other features not particularly relevant to this research. MPEG-2 is backward compatible with MPEG-1 [7].

2.1 MPEG Encoding

MPEG video encoding is similar to JPEG still picture encoding in that it uses the Discrete Cosine Transform (DCT) to exploit spatial redundancy. A video encoder that stopped there would generally be termed an intraframe coder. MPEG enhances intraframe coding by first applying an interframe coding phase known as motion compensation, which exploits the temporal redundancy between frames. As a result, there are three types of picture in an MPEG data stream. The first type, known as the intra (I) picture, is fully coded, much like a JPEG still image. Its compression ratio is low, but it is independent of any other picture and can therefore act as an entry point into the data stream for random access. The second type is the predicted (P) picture, which is formed using motion compensation from a previous I or P picture. The final type is the bidirectional (B) picture, which is formed using motion compensation from previous and/or succeeding I or P pictures.

In a typical MPEG data stream, a P picture might achieve 3x the compression ratio of an I picture, and a B picture 10x. However, simply encoding more B pictures will not necessarily yield better compression, since temporal redundancy decreases as a B picture gets further from its reference I or P pictures. For this reason, we use N=15 (the number of frames between successive I pictures) and M=3 (the number of frames between successive I or P pictures) in this research.
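To make the meaning of N=15 and M=3 concrete, the following C sketch lists the picture types of one GOP in display order. It is a minimal illustration under a simplifying assumption: each GOP begins with its I picture and places an I or P picture every M frames. The function names are hypothetical and are not taken from the MSSG code.

```c
#include <assert.h>

/* Hypothetical helper: picture type of frame i (display order) in a GOP of
   n frames with an I or P picture every m frames. Assumes each GOP starts
   with its I picture; real encoders may use other GOP layouts. */
static char gop_picture_type(int i, int n, int m)
{
    assert(i >= 0 && n > 0 && m > 0);
    int k = i % n;          /* position within the current GOP */
    if (k == 0)
        return 'I';         /* one intra picture per GOP */
    if (k % m == 0)
        return 'P';         /* anchor picture every m frames */
    return 'B';             /* bidirectionally predicted in between */
}

/* Fill out[0..n-1] with the GOP pattern and NUL-terminate it;
   out must have room for n + 1 characters. */
static void gop_pattern(int n, int m, char *out)
{
    for (int i = 0; i < n; i++)
        out[i] = gop_picture_type(i, n, m);
    out[n] = '\0';
}
```

With n = 15 and m = 3, gop_pattern produces IBBPBBPBBPBBPBB: one I, four P and ten B pictures per GOP.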

A block diagram of an MPEG-2 encoder can be seen in Figure 2-1. The input video is preprocessed into a luminance-chrominance color space (we use YUV, where Y is the luminance component and U and V are the chrominance components). If the picture is not an I picture, it enters the Motion Estimation phase, where motion vectors are calculated to locate the areas of previous or succeeding pictures that best match each area of the image. The Motion Compensation phase then uses these vectors in combination with a frame store to create a predicted frame. For P and B pictures, it is the prediction error, not the picture itself, that enters the DCT phase. The output of the DCT stage is then quantized to a variable level based on the bit budget for the output stream and fed into a variable length coder (VLC), which acts as a final lossless compression stage. The quantized output is also inverted (inverse quantization followed by an inverse DCT) to create a reconstructed picture, exactly as the decoder will see it, for use in future motion compensation.
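The encoder reverses its own quantization because the reference it predicts from must match what the decoder will reconstruct, not the original input. The C sketch below shows this closed loop on a single row of samples. It is a simplification under stated assumptions: it omits the DCT and uses a plain uniform quantizer with a hypothetical step size, whereas MPEG-2 quantizes DCT coefficients using weighting matrices and a variable scale factor.

```c
#include <assert.h>
#include <stdlib.h>

/* Uniform scalar quantizer, rounding to nearest (a stand-in for the
   MPEG quantizer, which also applies weighting matrices). */
static int quantize(int value, int step)
{
    assert(step > 0);
    int mag = (abs(value) + step / 2) / step;
    return value < 0 ? -mag : mag;
}

static int dequantize(int level, int step)
{
    return level * step;
}

/* Encode one row of samples against a prediction and rebuild the samples
   exactly as a decoder would: pred + dequantized prediction error.
   levels[] is what would go on to the VLC; recon[] is what goes into the
   reconstructed frame buffer for future motion compensation. */
static void encode_and_reconstruct(const int *cur, const int *pred, int len,
                                   int step, int *levels, int *recon)
{
    for (int i = 0; i < len; i++) {
        int error = cur[i] - pred[i];           /* prediction error */
        levels[i] = quantize(error, step);      /* the lossy step */
        recon[i] = pred[i] + dequantize(levels[i], step);
    }
}
```

For example, with a step of 4, a sample of 100 predicted as 98 leaves an error of 2, which quantizes to level 1 and reconstructs as 102. The encoder keeps 102, not 100, as its reference, which is exactly what the decoder will have.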

Figure 2-1: An MPEG encoder.

2.2 MPEG Syntax

The MPEG bitstream is broken down into hierarchical layers, as shown in Figure 2-2 [11]. At the top is a sequence layer consisting of one or more groups of pictures. These groups of pictures (GOPs) are just that: collections of I, P and B pictures in display order. Each GOP must have at least one I picture and, as stated earlier, our reference GOP size is 15 pictures.

Each picture is composed of slices, which can be as small as a single macroblock but must begin and end on the same row [9]. Slices are primarily intended to allow smoother error recovery, since the decoder can drop a slice without dropping the whole picture.

Figure 2-2: MPEG syntax.

Slices are composed of macroblocks. Macroblocks are considered the minimum coded unit and in our case always consist of four 8x8 blocks of luminance (Y) and one 8x8 block each of the two chrominance components (U and V). The chroma values are subsampled in both the horizontal and vertical dimensions during preprocessing to create what is called a 4:2:0 macroblock. Since a macroblock covers a 16x16 pixel area of the image, the horizontal and vertical dimensions of the picture are padded to a multiple of 16. As one example, a picture which is 352x240 pixels would contain 22x15, or 330, macroblocks.
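The macroblock arithmetic above can be sketched in C as follows. The type and helper names are hypothetical; the sketch rounds each dimension up to a multiple of 16, as described in the text, and counts the samples in one 4:2:0 macroblock (four 8x8 Y blocks plus one 8x8 U block and one 8x8 V block).

```c
#include <assert.h>

#define MB_SIZE 16  /* a macroblock covers 16x16 luminance pixels */

/* 4:2:0 macroblock: four 8x8 Y blocks plus one 8x8 U and one 8x8 V block. */
enum { Y_BLOCKS = 4, U_BLOCKS = 1, V_BLOCKS = 1, BLOCK_SAMPLES = 64 };

typedef struct {
    int mb_width;   /* macroblocks per row, after padding */
    int mb_height;  /* macroblock rows, after padding */
    int mb_count;   /* total macroblocks per picture */
} mb_geometry;

/* Number of macroblocks needed to cover a dimension once it is padded
   up to a multiple of 16. */
static int mb_span(int pixels)
{
    assert(pixels > 0);
    return (pixels + MB_SIZE - 1) / MB_SIZE;
}

static mb_geometry frame_geometry(int width, int height)
{
    mb_geometry g;
    g.mb_width = mb_span(width);
    g.mb_height = mb_span(height);
    g.mb_count = g.mb_width * g.mb_height;
    return g;
}

/* Samples in one 4:2:0 macroblock (one byte each at 8 bits per sample). */
static int samples_per_macroblock(void)
{
    return (Y_BLOCKS + U_BLOCKS + V_BLOCKS) * BLOCK_SAMPLES;
}
```

For 352x240 input, frame_geometry returns 22 macroblocks per row and 15 rows (330 in total); for 720x480 it returns 45 x 30 = 1350. Each 4:2:0 macroblock holds 384 samples.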

The encoder implemented as part of this research was not intended to support all features of the MPEG standard, although nothing in the methodology would preclude a full implementation. It was simply more important to explore in depth the parallelism that could be extracted than to have a code base that met all the functionality of the standard. Specifically, the MPEG-2 encoder described in this research:

* only works with progressive (non-interlaced) video input sources;

* is only intended to create Main Profile (4:2:0) bitstreams (e.g., the DVD standard).

The encoder is not limited in the horizontal and vertical resolution of the input video sequence, however, as this is an important part of investigating the scalability of the encoder.

Chapter 3

Related Work

Much work has been done in the area of creating parallel algorithms for MPEG encoding on general purpose hardware. Until recently, the vast majority of this work [25, 17, 21, 10, 1, 4, 8, 14, 19] assumed that the entire video sequence is available in advance of the encoding. This is often termed off-line encoding, and it offers many avenues for exploiting parallelism that center on pre-processing of the sequence and allocation of data at a coarse (typically GOP) level. The goal of these methods is to reduce or remove the interdependencies inherent in the MPEG algorithm, and as a result reduce or remove the corresponding high-latency communication between processing elements. The bulk of these efforts targeted a network of workstations, although some [21, 1] used a MIMD architecture such as the Intel Paragon and another [14] specifically targeted shared memory multiprocessors.

A variant of off-line encoding is what could be called on-line with constant delay [22]. Encoding methods are similar to off-line, but there is a constant delay in the output sequence relative to the input sequence (on the order of 10 to 30 seconds in this study). If this delay is acceptable, then the encoding can appear to be happening in real time.

Our focus, however, is on true real-time on-line encoding, where individual frames arrive at the encoder at a constant rate (typically on the order of 30 frames/sec) and each one is presented at the output before the next frame arrives. In this environment, it is not possible to exploit any temporal parallelism [22]. Obvious applications are videoconferencing, live digital video broadcasting, or even narrowcasting (e.g., using a webcam or cell phone). An early effort at this problem [3, 15] used a data-parallel approach for MIMD and network of workstation architectures. This method achieved an impressive (for the day) 30 frames per second on a 330 processor Intel Paragon when using a simplified motion estimation algorithm on 352x288 data, but did not scale well. More recent work [5] has attempted to address the problem of on-line encoding by modeling the fine-grain parallelism in the MPEG-4 algorithm, but does not yet offer any real world performance data for the method.

Kodaka [16] and Iwata [11] both describe MPEG encoding algorithms that appear to have the potential to achieve real-time on-line encoding on general purpose single-chip multiprocessors. Unfortunately, neither of these solutions addresses the need to process the output bitstream sequentially, a problem which we will address in detail in Chapter 5, nor do they offer much in the way of experimental results on real hardware.

One recent project that takes a different approach is the Stanford Imagine stream processor [13]. This processor contains 48 FPUs and is intended to be as power efficient as a special purpose processor while retaining some level of programmability for streaming media applications. So while it is not specifically tuned to the MPEG algorithm, it is also not a general purpose processor. Early work predicted that 720x480 pixel frames would encode at 105 frames per second. Recent experimental work [2] found that the prototype hardware was capable of encoding 360x288 pixel frames at 138 frames per second, and made no mention of performance on 720x480 data. The reader should be warned, however, that comparing absolute performance between different studies can be misleading, as there are many parameters in any MPEG encoder, particularly the method and search window for motion estimation, that can dramatically improve or degrade performance.

Analog Devices recently described different methodologies for using their Blackfin dual-core processor for MPEG encoding [20]. The implementations are highly architecturally dependent, however, and not particularly scalable or generalized.

With Raw we have the advantage of very small latencies between general purpose processing elements. If we can successfully combine this with a methodology that minimizes shared data and breaks the dependencies in the MPEG algorithm, we can expose the spatial parallelism in the encoding of each individual MPEG frame without needing to exploit any temporal parallelism that would limit the application space. In this thesis we will demonstrate that real-time on-line encoding is possible on a single-chip general purpose multiprocessor such as Raw.

Chapter 4

MPEG on the Raw Microprocessor

This chapter gives an overview of the Raw microprocessor and describes the state of the code base when work began on this thesis.

4.1 The Raw Microprocessor

The Raw microprocessor is a single-chip tiled-architecture computational fabric with enormous on-chip and off-chip communications bandwidth [23]. The prototype Raw chip divides 122 million transistors into 16 identical tiles, each tile consisting of:

* an eight-stage single-issue RISC processor;

* a four-stage pipelined FPU;

* a static network router (routes specified at compile time);

* two dynamic network routers (routes specified at runtime);

* a 32KB data cache; and

* a 96KB instruction cache.

The architectural overview in Figure 4-1 shows how the tiles are connected by four 32-bit full-duplex networks [24]. The cleverness in Raw is that each tile is sized so that a signal can cross it within one clock cycle. Since each tile connects only to its (at most) four neighbors, the clock speed of the chip is set by the individual tile and does not depend on the number of tiles in a future version of the chip.
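Because each tile talks only to its nearest neighbors, a tile's position on the grid determines both which tiles it can reach in one step and how many hops a message needs. The sketch below is a hypothetical C helper that assumes tiles are numbered row-major on a square grid (tile 0 in one corner); that numbering is an assumption made here for illustration, and the sketch says nothing about how routes are actually programmed on Raw's networks.

```c
#include <assert.h>
#include <stdlib.h>

enum { NORTH, EAST, SOUTH, WEST };

/* Hypothetical helper assuming row-major tile numbering on a side x side
   grid (side = 4 on the prototype). Writes the neighbor in each direction
   into out[] (-1 at the chip edge) and returns how many neighbors exist. */
static int tile_neighbors(int tile, int side, int out[4])
{
    assert(side > 0 && tile >= 0 && tile < side * side);
    int x = tile % side, y = tile / side;
    out[NORTH] = (y > 0) ? tile - side : -1;
    out[EAST]  = (x < side - 1) ? tile + 1 : -1;
    out[SOUTH] = (y < side - 1) ? tile + side : -1;
    out[WEST]  = (x > 0) ? tile - 1 : -1;
    int n = 0;
    for (int d = NORTH; d <= WEST; d++)
        if (out[d] >= 0)
            n++;
    return n;
}

/* Minimum number of tile-to-tile hops between two tiles: since every link
   joins nearest neighbors, this is the Manhattan distance on the grid. */
static int tile_hops(int a, int b, int side)
{
    return abs(a % side - b % side) + abs(a / side - b / side);
}
```

On a 4x4 grid numbered this way, a corner tile such as tile 0 has two neighbors and an interior tile such as tile 5 has four, and a message between opposite corners needs at least six hops.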

Figure 4-1: The Raw Microprocessor.

4.2 Porting MPEG to Raw

When work on this thesis began, Hank Hoffmann had successfully ported the MPEG code to the Raw hardware. This initial code base, which will be referred to as the baseline code in this thesis, was able to take advantage of multiple tiles and created a valid MPEG-2 bitstream. It still had a number of scalability shortcomings, however, which would be addressed as part of this research.

First, while the baseline code was able to execute most of the algorithm in parallel (most importantly motion estimation), when it reached the stage of creating the output bitstream it was forced to move sequentially through the tiles to create the bitstream in a linear fashion. This was primarily because there are natural dependencies in the MPEG bitstream: tile 1 could not start creating the bitstream from its data until it had received some parameters from tile 0 regarding (for example) the current state of the feedback loop for rate control. The primary goal, finding and implementing a way to break this dependency, is described in Chapter 5.

Figure 4-2: 352x240 pixel video frame mapped to a 15 tile Raw machine.

The next barrier to scalability was that the baseline code was not able to partition the problem to make use of all available tiles. More than one tile could be used per row, but if a tile held more than one row, it had to hold whole rows. For example, if the input file was 352x240 pixels (15 rows of 22 macroblocks each), the problem would be partitioned so that one tile handled each row of macroblocks. This is a fairly good use of resources: on a 16-tile machine it leaves only one tile idle. But if the same example were run on 8 tiles, only 5 tiles would be used (3 rows on each tile), leaving close to half the resources idle. Chapter 6 describes a method for partitioning that applies as much of the available resources as possible to the problem.
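The cost of the whole-row restriction can be estimated with a small model. The C function below is a hypothetical reconstruction inferred only from the two examples above: it assumes every busy tile must hold the same whole number of macroblock rows, so the number of busy tiles must divide the number of rows. It covers only the case where each tile holds one or more rows, not the case where several tiles share a row, and the baseline code may differ in detail.

```c
#include <assert.h>

/* Hypothetical model of the baseline allocation: returns the number of
   tiles that receive work when mb_rows macroblock rows are divided evenly,
   in whole rows, among at most tiles_available tiles. */
static int baseline_tiles_used(int mb_rows, int tiles_available)
{
    assert(mb_rows > 0 && tiles_available > 0);
    int t = tiles_available < mb_rows ? tiles_available : mb_rows;
    for (; t > 1; t--)
        if (mb_rows % t == 0)   /* each busy tile gets mb_rows / t rows */
            return t;
    return 1;                   /* fall back to a single tile */
}
```

For the 15 macroblock rows of a 352x240 picture, this model gives 15 busy tiles out of 16 but only 5 out of 8 (three rows each), matching the examples above; a prime number of rows such as 7 on 4 tiles would leave all but one tile idle.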

Lastly, for each frame that was encoded, a data synchronization had to occur so that every tile saw the reconstructed frame data it needed. Because motion estimation searches a 16 pixel region around each macroblock, some of the reconstructed frame data a tile needs is resident on other tiles. A simple example is the mapping of a 352x240 pixel video frame onto 15 tiles shown in Figure 4-2. In this case, each row of 22 macroblocks is resident on a different tile, but when tile 4 performs its motion estimation, it needs data that is resident on tiles 3 and 5. This border data must therefore be synchronized every frame.
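Which tiles hold border data for a given tile can be computed directly from the macroblock-to-tile map. The C sketch below treats the 16 pixel search region as exactly one macroblock in every direction (an assumption; a wider search range would need more) and uses a hypothetical one-row-per-tile map like the one in Figure 4-2. The helper names and the MAX_TILES limit are illustrative only.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_TILES 64

/* One macroblock row per tile, as in Figure 4-2 (hypothetical helper). */
static void map_rows_to_tiles(int *owner, int mb_w, int mb_h)
{
    for (int r = 0; r < mb_h; r++)
        for (int c = 0; c < mb_w; c++)
            owner[r * mb_w + c] = r;
}

/* For every macroblock owned by `tile`, look one macroblock (16 pixels)
   in each direction and record which other tiles own those neighbors.
   Sets needs[t] for each such tile and returns how many there are. */
static int border_tiles(const int *owner, int mb_w, int mb_h, int tile,
                        bool needs[MAX_TILES])
{
    int count = 0;
    for (int t = 0; t < MAX_TILES; t++)
        needs[t] = false;
    for (int r = 0; r < mb_h; r++)
        for (int c = 0; c < mb_w; c++) {
            if (owner[r * mb_w + c] != tile)
                continue;
            for (int dr = -1; dr <= 1; dr++)
                for (int dc = -1; dc <= 1; dc++) {
                    int nr = r + dr, nc = c + dc;
                    if (nr < 0 || nr >= mb_h || nc < 0 || nc >= mb_w)
                        continue;           /* outside the picture */
                    int other = owner[nr * mb_w + nc];
                    assert(other >= 0 && other < MAX_TILES);
                    if (other != tile && !needs[other]) {
                        needs[other] = true;
                        count++;
                    }
                }
        }
    return count;
}
```

With the one-row-per-tile map for 352x240 video, tile 4 needs data from tiles 3 and 5, while tiles 0 and 14, at the top and bottom of the picture, each need only one neighbor.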

Using the static network of Raw to synchronize large chunks of data involves very careful choreography of the network routes between tiles. The simplest way to accomplish this was to have every tile read in, store and synchronize the entire video frame. This is quite wasteful, however, as the data map in Figure 4-2 shows: each tile needs only its own rows plus the neighboring rows. To make the implementation scale to large numbers of tiles, it would be necessary to come up with a methodology for routing just a subset of the data in each frame. This "border routing" is outlined in Chapter 7.

Chapter 5

Parallelizing MPEG on Raw

Extracting much of the spatial parallelism¹ from the MPEG algorithm is fairly straightforward, because the algorithm primarily operates on one macroblock of the image at a time. Since a single frame of video typically contains hundreds of macroblocks, the obvious method is to divide the macroblocks evenly among however many tiles are available. This simplistic approach to parallelism works for almost all phases of the algorithm. To see where it breaks down, we need to take a closer look at the inner workings of MPEG.
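The "obvious method" of dividing macroblocks evenly can be sketched as follows. This is one simple scheme, assumed here for illustration: each tile takes a contiguous run of macroblocks in raster order, and the remainder is spread over the first few tiles. The type and function names are hypothetical, and this is not necessarily the partitioning developed in Chapter 6.

```c
#include <assert.h>

/* A contiguous run of macroblocks in raster order (hypothetical type). */
typedef struct {
    int first;  /* index of the first macroblock */
    int count;  /* number of macroblocks */
} mb_range;

/* Divide total macroblocks as evenly as possible among ntiles tiles:
   the first total % ntiles tiles each take one extra macroblock. */
static mb_range tile_range(int total, int ntiles, int tile)
{
    assert(total >= 0 && ntiles > 0 && tile >= 0 && tile < ntiles);
    int base = total / ntiles;
    int extra = total % ntiles;
    mb_range r;
    r.count = base + (tile < extra ? 1 : 0);
    r.first = tile * base + (tile < extra ? tile : extra);
    return r;
}
```

For the 330 macroblocks of a 352x240 picture, 16 tiles get 21 or 20 macroblocks each and 8 tiles get 42 or 41, so every available tile has work.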

5.1 A Parallel Encoder

The primary differences between the parallel MPEG encoder shown in Figure 5-1 and the more traditional single processor version in Figure 2-1 are:

* The incoming image data is split up between the tiles, so that only a portion of each picture is actually fed into the input frame buffer on each tile.

* Each tile creates a serial bitstream which is only a portion of the output bitstream, and which must be concatenated with the others in a post-processing step.

* There is an additional Tile Synchronization stage required before the reconstructed picture can be placed into the Reconstructed Frame Buffer. This is necessary to properly perform motion compensation, since each tile needs to see not only its own data but also the data in a 16 pixel region around all of its data, and some of this region may be resident on other tiles.

¹ By spatial parallelism we are referring to parallelism that can be extracted in the compression of a single frame of video data. This is in contrast to temporal parallelism, which can only be extracted by allocating frames to different processing elements, and which cannot be used to perform truly on-line real-time encoding [22].
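Because each tile's portion of the output begins at a slice start code, which MPEG aligns to at least a byte boundary, the post-processing concatenation step can reduce to copying whole buffers in tile order. The C sketch below assumes hypothetical per-tile buffers that are already padded to whole bytes, and it omits the sequence, GOP and picture headers that a complete stream also needs.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* One tile's portion of the output, in whole bytes (hypothetical type). */
typedef struct {
    const unsigned char *data;
    size_t len;
} tile_bitstream;

/* Concatenate the per-tile buffers in tile order into out (capacity cap)
   and return the number of bytes written. No tile needs to know where its
   predecessor stopped while encoding; offsets are computed only here,
   after every tile has finished. */
static size_t concat_bitstreams(const tile_bitstream *parts, int ntiles,
                                unsigned char *out, size_t cap)
{
    size_t off = 0;
    for (int i = 0; i < ntiles; i++) {
        assert(off + parts[i].len <= cap);
        memcpy(out + off, parts[i].data, parts[i].len);
        off += parts[i].len;
    }
    return off;
}
```

The contrast with the baseline is that there each tile had to learn the exact bit position at which the previous tile stopped before it could write anything.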

Figure 5-1: A parallel MPEG encoder.

The phases of the algorithm, which roughly correspond to modules in the MSSG code, have been shaded and numbered in Figure 5-1. Throughout the rest of this thesis, we will often refer to the phases by their module names, so they are outlined here.

1. memcpy - Although a final implementation would accept data from a real-time data source such as a camera, we approximate this data movement phase using a standard memcpy of the image data to a data structure local to each tile.

2. motion - Motion estimation and generation of the motion vectors.

3. predict - Motion compensation and creation of the prediction.

4. transform - Includes the subtraction of the predicted frame (if any) and the forward DCT of the resulting data.

5. putpic - In this module, the transformed data is quantized and run through a variable length coder (VLC). The quantization factor is continuously varied based on the picture type (I, P or B), the bits that have been allocated for the GOP and the current buffer fullness.

6. iquant - This is simply the inverse of the quantization step.

7. itransform - Inverse DCT and addition of the predicted frame (if any).

8. sendrecv - Reference frame data synchronization between tiles.
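The per-frame order of these phases on one tile can be summarized in a short C sketch. The enumeration and function are hypothetical and are not the MSSG code; following Section 2.1, motion estimation is skipped for I pictures, and every other phase is assumed here to run for every picture type.

```c
#include <assert.h>

/* Hypothetical identifiers for the eight phases, in the order listed above. */
typedef enum {
    PH_MEMCPY, PH_MOTION, PH_PREDICT, PH_TRANSFORM,
    PH_PUTPIC, PH_IQUANT, PH_ITRANSFORM, PH_SENDRECV,
    PH_COUNT
} phase;

/* Module names matching the list above. */
static const char *const phase_name[PH_COUNT] = {
    "memcpy", "motion", "predict", "transform",
    "putpic", "iquant", "itransform", "sendrecv"
};

/* Fill order[] with the phases one tile runs for a picture of the given
   type ('I', 'P' or 'B') and return how many there are. */
static int frame_phases(char picture_type, phase order[PH_COUNT])
{
    int n = 0;
    for (int p = 0; p < PH_COUNT; p++) {
        if (p == PH_MOTION && picture_type == 'I')
            continue;               /* no motion vectors for intra pictures */
        order[n++] = (phase)p;
    }
    return n;
}
```

A P or B picture runs all eight phases, ending with sendrecv; an I picture runs seven, going straight from memcpy to predict.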

5.2

Conversion to Arbitrary Slice Size

As outlined earlier, in the baseline code the only options for partitioning the incoming data are either an integer number of rows per tile or an integer number of tiles per row. The MSSG code also appeared to follow the convention that a single row of macroblocks is equivalent to a slice. Together, these two factors meant that the rate control function for a single slice could span multiple tiles. This in turn required a number of parameters describing the state of the rate control function to be passed from one tile to the next in sequence, effectively serializing the putpic phase of the algorithm. In addition, since an MPEG stream is a bitstream, each successive tile needs to know the bit position that the previous tile last wrote before it can proceed. Both of these issues must be addressed in order to break this dependency.

The effects of this dependency on a real test run of the baseline code are shown in Figure 5-2. Here it can be seen that the first four phases of the encoding proceed in parallel, but that the sequential putpic phase accounts for a significant portion of the runtime. As we scale to more tiles, this will clearly become the single limiting factor in performance. A similar recognition of the sequential nature of this phase can be found in the literature [16, 11, 20].

[Figure 5-2 image: per-tile activity timelines for Tile 0 through Tile 14, from 0% to 100% of the runtime; legend: memcpy, motion estimation, predict, transform, putpic, iquant, itransform, wait, sendrecv.]

Figure 5-2: Individual tile activity for a baseline encoding.

The seminal realization in breaking this dependency was that a slice can contain an arbitrary number of macroblocks [7, 9]. This, in turn, ensured that a slice never had to span tiles, and eliminated the need to pass rate control parameters. Because slices are by definition byte aligned (each begins with a start code), it also meant that the output of each tile no longer had to be concerned with the bit-level alignment of any other tile.

It is interesting to note that the purpose of the slice in the MPEG standard is primarily error recovery [7]. A decoder can, for example, drop a slice without dropping an entire frame. For Raw, however, the slice becomes the ultimate parallelization construct. In effect it is an arbitrarily sized (at least to the granularity of a single macroblock) sub-frame that can be allocated its own bit budget for rate control purposes.²

One subtlety that was passed over in the previous discussion is that although slices can be of arbitrary size, they are not actually allowed to span rows of macroblocks.³ This is not a difficult exception to handle; it simply requires that any tile whose macroblocks span multiple rows terminate its slice at the end of each row and create the proper slice header at the beginning of the next row. Because each additional slice carries its own header, this will typically lead to a slightly larger output file.

We can now create a new mapping for a 352x240 pixel example running on 16 tiles. This is shown in Figure 5-3. Each block represents a macroblock of the frame, and the number is the tile responsible for that macroblock. Separate slices are denoted by heavy outlines.

Figure 5-4 shows the result of parallelizing the putpic phase. All phases now run in parallel, and Tile 9 has no idle time at all. For every other tile, the idle time is simply the difference between the duration of its motion estimation phase and that of Tile 9. Because the rest of the algorithm can still proceed in parallel after this phase, the idle time does not show up until just before sendrecv. All tiles must participate in the data communication, so sendrecv acts as a kind of barrier at the end of each frame.

²Although this usage of the slice is clearly allowable under the ISO standard [9], not all decoders will properly interpret the resulting bitstream. In particular, the MSSG decoder does not handle it correctly. Once we moved to this construct, we were forced to use a more robust commercial-grade decoder such as CyberLink PowerDVD for verification.

³This is actually a contradiction between the ISO standard [9] and the popular literature [7], the latter of which states that a slice can be "as big as the whole picture". Interestingly, the MSSG decoder does properly decode bitstreams that contain slices spanning multiple rows.

[Figure 5-3 image: the 22 x 15 grid of macroblocks in a 352x240 frame, each labelled with the number of the tile responsible for it; heavy outlines mark separate slices.]

Figure 5-3: Slice map for 352x240 video on 16 tiles.

[Figure 5-4 image: per-tile activity timelines for Tile 0 through Tile 14, from 0% to 100% of the runtime; legend: memcpy, motion estimation, predict, transform, iquant, itransform, wait, sendrecv, putpic.]

Figure 5-4: Individual tile activity after parallelizing putpic.

Chapter 6

Partitioning MPEG on Raw

Simply solving the parallelization problem is not sufficient for a truly efficient implementation of MPEG on Raw. By default, the MSSG code operates primarily at the macroblock level, which is to say that most of its loops proceed sequentially through all the macroblocks in a frame. In creating the baseline parallel implementation, however, these loops were changed to be row oriented. Now that we had a parallel methodology that allows an arbitrary number of macroblocks to be placed on each tile, it was necessary to go back through the code and reorganize it at the macroblock level.

The first step in doing this was to create a generic partitioning algorithm that would work for any image size and any number of tiles. This involved:

* Calculating the number of macroblocks that each tile would be responsible for (mb_most_tiles);

* Given the above, calculating the maximum number of tiles that could be applied (tiles_used);

* Given that, calculating the number of macroblocks (if any) that must be handled by the last, or "remainder", tile (mb_last_tile);

* Finally, creating an "ownership map" data structure that simply holds the identity of the owner of each macroblock.


Since each tile must own an integer number of macroblocks, it will not always be possible to partition the frame (and thus the task) perfectly. The "best" partitioning is achieved when most of the tiles own the smallest number of macroblocks that still lets the available tiles cover the whole frame, and any remaining macroblocks go to a last tile that owns fewer than the others. If mb_total is the total number of macroblocks in a frame and tiles is the total number of tiles available, the calculation proceeds as follows:

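1. $\mathit{mb\_most\_tiles} = \lceil \mathit{mb\_total} / \mathit{tiles} \rceil$

2. Find the largest $\mathit{tiles\_used}$ such that $\mathit{tiles\_used} \cdot \mathit{mb\_most\_tiles} \le \mathit{mb\_total}$

3. $\mathit{mb\_last\_tile} = \mathit{mb\_total} - \mathit{tiles\_used} \cdot \mathit{mb\_most\_tiles}$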

Table 6.1 shows how the MPEG encoding is partitioned for four example frame sizes across a wide range of available tiles. As the table shows, as long as the number of macroblocks per tile is larger than the number of available tiles, the algorithm always uses all available tiles. Once the number of macroblocks per tile becomes smaller than the number of available tiles, it becomes harder to use all of them (352x240 on 32 tiles, for example, uses only 30). Although the table only shows numbers of available tiles that are powers of two, the partitioning algorithm creates the optimal partitioning for any number of available tiles.

      |     352x240     |     640x480     |     720x480     |    1920x1080
Tiles | used   MBs last | used   MBs last | used   MBs last | used   MBs last
    1 |    1   330    0 |    1  1200    0 |    1  1350    0 |    1  8160    0
    2 |    2   165    0 |    2   600    0 |    2   675    0 |    2  4080    0
    4 |    4    83   81 |    4   300    0 |    4   338  336 |    4  2040    0
    8 |    8    42   36 |    8   150    0 |    8   169  167 |    8  1020    0
   16 |   16    21   15 |   16    75    0 |   16    85   75 |   16   510    0
   32 |   30    11    0 |   32    38   22 |   32    43   17 |   32   255    0
   64 |   55     6    0 |   64    19    3 |   62    22    8 |   64   128   96
  128 |  110     3    0 |  120    10    0 |  122    11    8 |  128    64   32
  256 |  165     2    0 |  240     5    0 |  225     6    0 |  255    32    0

Table 6.1: Macroblock partitioning for a variety of available tiles and problem sizes (used: tiles actually used; MBs: macroblocks on each full tile; last: macroblocks on the remainder tile, 0 if there is none).

The discussion in Chapter 5 does expose the one weakness of the partitioning methodology. Since most tiles have to wait for the slowest tile to finish before they can proceed to sendrecv, the time it takes to do motion estimation on the slowest tile becomes the "weakest link in the chain". For our examples the effects are not bad, but if one were to use a larger search space for motion estimation, the percentage of time spent in this phase would increase, and so would the difference between the slowest and fastest tiles. More serious could be a particular video signal that includes fast-moving objects in just one area of the screen. This might bog down motion estimation for a particular tile, as it continually needs to search large areas to find the best matching blocks.

One solution that might be effective would be to continually monitor the time each tile takes to do motion estimation and vary the number of macroblocks assigned to each tile slightly as a result. Tiles responsible for regions with more activity would naturally be assigned fewer macroblocks, while those in low-activity regions would get more. This dynamic "load balancing" is another advantage of the ability to have arbitrary slice sizes. The disadvantage of this methodology is that it changes the communication pattern from static to dynamic, which, as we will see in Chapter 7, would require new hardware support to accomplish efficiently.


Chapter 7

Making Communication Efficient

The bulk of the effort in this thesis revolved around improving the efficiency of the

communication between tiles. Although the greatest performance gains came from

parallelizing the putpic phase up to 16 tiles, communication becomes the limiting

factor as MPEG encoding on Raw is scaled to 64 or more tiles.

7.1 Improvements to memcpy

The most obvious inefficiency in the baseline code, and the easiest to address, was the fact that the memcpy phase loaded the entire frame into every tile. This waste is particularly acute as the number of tiles increases. The solution, however, is unfortunately not as simple as bringing in only the macroblocks that a particular tile "owns". In order to perform motion estimation, each tile also needs access to the data all around the border of its macroblocks.¹ Part of the initialization phase was therefore a border discovery step, in which each tile goes through its macroblocks and determines the identity of the macroblocks on its border. Figure 7-1 shows an example of the border (in gray) around Tile 3 in the 352x240 example on 16 tiles.

The size of the border for a given tile can be up to 2n + 6, where n is the number of macroblocks on that tile (n above, n below and three on each side when the tile's macroblocks lie in a single row). This can still represent a significant decrease in the amount of data that must be transferred. If we consider the 352x240 example on 16 tiles again, Table 7.1 compares the total number of macroblocks that need to be transferred with and without border discovery. With border discovery, over 7 times less data (720 rather than 5280 macroblocks) needs to be brought into the tiles each frame, and this translates directly into the performance of this phase.

¹Akramullah [15] describes this as "overlapped data distribution". However, because that study was contemplating processing elements with much higher communication latencies than Raw, this method was not sufficient to provide good scalability with a large number of processing elements.

[Figure 7-1 image: the 352x240 slice map on 16 tiles, with the border macroblocks around Tile 3 shaded gray.]

Figure 7-1: Bordering macroblocks required for motion estimation.

7.2 Improvements to sendrecv

The baseline code accomplished the sendrecv phase by having each tile send the data it owned to every other tile. We will call this full routing. The net result was that by the end of the sendrecv phase, every tile had a complete copy of the reference frame in its reconstructed frame buffer. The problem here is analogous to the problem with the original memcpy: the only data that actually needs to be in the reconstructed frame buffer of a given tile is the data corresponding to the macroblocks it owns, plus the border around those macroblocks. However, since these border macroblocks are distributed across the tiles, this border routing is significantly more difficult to achieve even for a single problem size, let alone for the generalized problem.

Tile   Full Frame   Border Only
   0          330            23
   1          330            44
   2          330            46
   3          330            46
   4          330            46
   5          330            46
   6          330            46
   7          330            46
   8          330            46
   9          330            46
  10          330            46
  11          330            46
  12          330            46
  13          330            46
  14          330            38
  15          330            17
Total        5280           720

Table 7.1: Number of macroblocks required on each tile in full frame memcpy compared to memcpy with border data.

7.2.1 Full Routing and Elemental Routes in Raw

It is useful to understand in more detail how full routing works on Raw, in order to better understand the gains that may or may not be achievable with border routing. First we must look again at the architecture of the Raw microprocessor. A 16-tile Raw microprocessor is arranged as a 4x4 grid, and each tile has both a compute processor and a switch processor for routing data. Each processor has its own instruction set and runs separate code, and the two must be carefully synchronized by the programmer. In the figures, we represent the compute processor as a small box in the upper left of the tile, with the switch processor in the lower right.

As Figure 7-2 shows, in order for Tile 0 to send its data to all the other tiles, each tile must not only be ready to receive the data but also ready to route it as required, depending on the identity of the sending tile.² After Tile 0 has sent all of its data, each tile loads a new route into its switch and Tile 1 begins sending. This process continues until every tile has been a sender.

[Figure 7-2 image: the 4x4 array of tiles, each annotated with the switch route it runs while Tile 0 sends.]

Figure 7-2: Example routes for Tile 0 sending data to all other tiles.

It can be seen from Figure 7-2 that sending data from Tile 0 to the other tiles requires a total of one sending route (labelled _tx_ES) and four receiving routes (labelled _rx_N_tx_ES_k, _rx_N_tx_E_k, _rx_W_tx_E_k and _rx_W).³ Raw has the ability to send to up to four destinations at once, or to route to up to three destinations while receiving. This leads to 15 elemental sending routes (the nonempty subsets of the four directions) as well as 15 elemental receiving routes from each of the four directions (the 8 subsets of the other three directions, each with or without delivery to the processor, minus the case that does neither), for a total of 75 possible routes. The 15 sending routes are shown in Figure 7-3, while the 15 receiving routes from the East are shown in Figure 7-4. The 15 receiving routes from the North, South and West are similar and can easily be inferred.

²If any tile is not ready, the other tiles will stall. If one or more tiles loads the wrong route into the switch, then the entire Raw processor will deadlock. This is a source of much debugging fun!

³Our labelling convention for routes is as follows:

* If the tile is a receiver, start with _rx and the direction from which data is being received;

* If the tile is a sender, add _tx and the direction(s) to which data will be sent, starting with N and going clockwise;

* If the tile is a router (a receiver and a sender) and the data being routed will also be delivered to the processor, add _k (for "keep").


[Figure 7-3 image: one diagram for each of the 15 sending routes, from _tx_N to _tx_NESW.]

Figure 7-3: Possible routes for sending on Raw.

[Figure 7-4 image: one diagram for each of the 15 routes that receive from the East, from _rx_E to _rx_E_tx_NSW_k.]

Figure 7-4: Possible routes for receiving from the East on Raw.

The first step in actually implementing either full or border routing was to create the switch code for all 75 of these cases. For our purposes, the number of bytes to be sent on a given route was not known in advance, so it was necessary to create switch code that could route a block of data of arbitrary size. The following code segment accomplishes this for the "send east" route:

    _tx_E:
        move   $0, $csto
        bnezd- $0, $0, .    route $csto->$cEo
        nop                 route $0->$csti
        j

Similarly, the following code segment causes the switch to receive data from the East, route it to the North, South and West, and deliver it to the processor:

    _rx_E_tx_NSW_k:
        move   $0, $csto
        bnezd- $0, $0, .    route $cEi->$csti, $cEi->$cNo, $cEi->$cSo, $cEi->$cWo
        nop                 route $0->$csti
        j

The previous code fragments must be called at the appropriate time by the main processor code. In order to determine which of the 75 routes a given tile should run, case statements are used to set a function pointer. For example, the case statement for the send function in the case of full routing is:

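    static void set_send_fn(void (**f)(void)) {
        switch (tile_id) {
        case 0:                 /* top-left corner */
            *f = _tx_ES;
            break;
        case 3:                 /* top-right corner */
            *f = _tx_SW;
            break;
        case 12:                /* bottom-left corner */
            *f = _tx_NE;
            break;
        case 15:                /* bottom-right corner */
            *f = _tx_NW;
            break;
        case 1:                 /* top edge */
        case 2:
            *f = _tx_ESW;
            break;
        case 4:                 /* left edge */
        case 8:
            *f = _tx_NES;
            break;
        case 7:                 /* right edge */
        case 11:
            *f = _tx_NSW;
            break;
        case 13:                /* bottom edge */
        case 14:
            *f = _tx_NEW;
            break;
        case 5:                 /* interior tiles */
        case 6:
        case 9:
        case 10:
            *f = _tx_NESW;
            break;
        default:                /* not a tile of the 4x4 array */
            raw_test_fail(0x000);
            break;
        }
    }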

The route is selected and the switch code is invoked as follows, where each lw instruction essentially loads one word of the macroblock into the switch:

    set_send_fn(&switch_fn);
    __asm__ volatile ("mtsr SWPC,%0" : : "r" (switch_fn));
    counter = mb_per_tile*64-1; /* number of Y words to send */
    __asm__ volatile ("move $csto,%0" : : "r" (counter));
    __asm__ volatile ("lw $csto,%0" : : "m" (p[0]));
    __asm__ volatile ("lw $csto,%0" : : "m" (p[4]));
    __asm__ volatile ("lw $csto,%0" : : "m" (p[8]));
    __asm__ volatile ("lw $csto,%0" : : "m" (p[12]));

The case statement for receiving is somewhat more involved, since the appropriate function depends not only on the identity of the receiving tile but also on that of the sending tile. The concept, however, is the same.

7.2.2 Border Routing

The first step in border routing is similar to the border discovery of the improved memcpy. For each macroblock we calculate a receiver opcode, which is a bitfield containing as many bits as there are potential receivers. As a result of the partitioning described earlier, we already have an ownership map for each macroblock, which can also be used to determine the sender of a given macroblock. With these two pieces of information, each macroblock has attached to it a route specifying where it is coming from and all the places it is going. This route can be specified as {sender, receiver_opcode}.

[Figure 7-5 image: the 352x240 slice map on 16 tiles, used to determine which tiles receive each macroblock.]

Figure 7-5: Determination of the receiver opcode for border routing.

Filling in the receiver opcode bitfield is the job of an initialization function that walks through each macroblock and sets bit n if Tile n is a receiver. In Figure 7-5, Tile 3 needs to send its first macroblock to Tiles 1, 2 and 4. This translates into a receiver opcode of 10110₂, or 16₁₆. Tile 14, on the other hand, has 6 macroblocks that only need to go to Tile 13, and these therefore have an opcode of 10000000000000₂, or 2000₁₆. Table 7.2 shows part of the route map for the example of 352x240 on 16 tiles. The full route map has 45 entries, which translates into 45 unique routes that must be programmed in order to border route the macroblocks. An example, the route {3, 34₁₆}, is shown in Figure 7-6.

On the surface, there would seem to be a lot to gain from border routing. After all, since the majority of tiles only need to send data to a few neighboring tiles, there should be a lot of wasted communication to the other tiles that can be eliminated. However, the architecture of the Raw network is such that delivering an operand to a processor costs nothing extra if that tile is already involved in routing. In the example route of Figure 7-6, Tiles 0 and 1 are not receivers, but if they were, the route would not run any slower. We should be able to quantify the advantage of border routing by comparing the theoretical number of cycles it takes to do a full routing of the 352x240 example with a border routing of the same data.

Tile   MBs        Receivers      Opcode (hex)
   0   0-18       1                         2
   0   19-20      1, 2                      6
   1   21-39      0, 2                      5
   1   40-41      0, 2, 3                   D
   2   42-43      0, 1, 3                   B
   2   44-60      1, 3                      A
   2   61-62      1, 3, 4                  1A
   3   63-64      1, 2, 4                  16
   3   65-81      2, 4                     14
   3   82-83      2, 4, 5                  34
 ...   ...        ...                     ...
  14   294-295    12, 13, 15             B000
  14   296-307    13, 15                 A000
  14   308-313    13                     2000
  14   314        13, 15                 A000
  15   315-316    13, 14                 6000
  15   317-329    14                     4000

Table 7.2: Partial border routing map for 352x240 video on 16 tiles.

[Figure 7-6 image: the 4x4 tile array showing the switch route each tile runs for the route {3, 34₁₆}; tiles not on the path are idle.]

Figure 7-6: Example routes for Tile 3 sending to Tiles 2, 4 and 5.

The Raw network requires three cycles to communicate an operand between neighboring tiles: one to get from the processor to the switch in the first tile, one to get from the first tile's switch to the neighboring tile's switch, and a third to get from the second switch to the second processor. However, once a pipeline is set up, operands can be delivered every cycle, so if the data block being transferred is large enough (as it is in this case), the startup time of the pipeline can safely be ignored. Since a macroblock is the same size regardless of the kind of routing being performed, and since all we are looking for is the relative speedup of border routing compared to full routing, we can also factor out the number of cycles required to emit a single macroblock from a tile. This leaves us to focus on the number of network hops between the sender and the furthest destination of each route, multiplied by the number of macroblocks that follow that route and summed over all routes.

Making this calculation for the full routing case is fairly simple, since there is only one route for each tile. The four corner tiles are 6 hops from their furthest destination, the four center tiles are 4 hops, and all other tiles are 5 hops. All the tiles have 21 macroblocks except the last tile (a corner tile), which has 15. So the total number of macroblock-hops is

$21(3 \cdot 6 + 4 \cdot 4 + 8 \cdot 5) + 15 \cdot 6 = 1644$.

The calculation for border routing is a bit more involved, since there are 45 routes. While full routing has no routes shorter than 4 hops, border routing has no routes longer than 4 hops and has a large number that are only one hop. Table 7.3⁴ compares full routing with border routing by showing the number of macroblocks that have to travel a particular distance in each case. The net result for border routing is a total of 752 macroblock-hops. This means that the most one could expect to improve the sendrecv phase using border routing for this example is 1644/752 ≈ 2.2, or about 2x once the additional route creation overhead inherent in border routing is taken into account. As we will see in Chapter 8, the experimental results bear this out, although they also expose a feature of border routing that was not anticipated. Unlike full routing, border routing does not require all tiles to have entered the sendrecv phase before routing can begin. As a result, some of the wait time before sendrecv is hidden. Unfortunately, because there is still an explicit barrier at frame boundaries, this does not yield a true performance benefit in the current implementation. If a future implementation were to dynamically allocate varying numbers of macroblocks across tiles as described at the end of Chapter 6, however, this feature could in fact be useful.

⁴It is interesting to note that although all the macroblocks in the frame (330) get routed in both cases for this example, as the number of macroblocks resident on a single tile gets larger (either because the image is larger or the number of tiles is smaller), a significant number of macroblocks may not need to be routed at all, since they do not lie on the border of any other tile.

Max hops   Border MBs   Border MB-hops   Full MBs   Full MB-hops
       1          172              172          -              -
       2           20               40          -              -
       3           12               36          -              -
       4          126              504         84            336
       5            -                -        168            840
       6            -                -         78            468
   Total          330              752        330           1644

Table 7.3: Comparison of the number of network hops required to full route and border route 352x240 video on 16 tiles.

7.2.3 Border Routing on Larger Raw Machines

One of the big problems with border routing is that it removes much of the generality that was possible with full routing. This is because the required routes are a function of the way a particular frame size maps to a particular number of tiles. Fortunately, the route set for 352x240 on 16 tiles is a superset of what is needed to border route the same frame size on fewer than 16 tiles. Still, the loss of generality with respect to frame size is probably not worth just a 2x improvement in this one phase of the algorithm.

The real advantages of border routing do not become apparent until we start using a larger number of tiles. Unfortunately, at this point the number of unique routes quickly becomes unmanageable, at least if these routes are coded manually. In the end, we decided that it was important to implement border routing on a 64-tile example in order to prove that Raw was capable of real-time encoding and near-linear scalability for DVD quality (720x480) video.

Tile   MBs       Receivers      Opcode (hex)
   0   0-19      2                         4
   0   20        2, 3                      C
   0   21        1, 2, 3                   E
   1   22        0, 3                      9
   1   23-41     3                         8
   1   42        3, 4                     18
   1   43        2, 3, 4                  1C
   2   44        1, 4                     12
   2   45-63     0, 4                     11
   2   64        0, 4, 5                  31
   2   65        0, 3, 4, 5               39
   3   66        0, 1, 2, 5               27
   3   67        0, 1, 5                  23
   3   68-85     1, 5                     22
   3   86        1, 5, 6                  62
   3   87        1, 4, 5, 6               72
   4   88        1, 2, 3, 6               4E
   4   89        1, 2, 6                  46
   4   90-107    2, 6                     44
   4   108       2, 6, 7                  C4
   4   109       2, 5, 6, 7               E4

Table 7.4: Partial border routing map for 720x480 video on 64 tiles.

A 720x480 pixel video frame has 45 macroblocks in each of 30 rows, for a total of 1350 macroblocks. The mapping of a 720x480 frame onto a 64-tile Raw microprocessor is shown in Figure 7-7.⁵ Recall from the partitioning in Table 6.1 that only 62 tiles are actually used; most tiles have 22 macroblocks while the last has 8. This tile mapping generates a routing map with 299 unique routes. The beginning of the route map, showing the routes for the first five sending tiles, is in Table 7.4. The routes for the higher-numbered tiles would have very large (up to 62-bit) opcodes, which would make it difficult to properly code the massive case statement needed to set up the routes.

[Figure 7-7 image: the 720x480 frame (45 x 30 macroblocks), with each macroblock labelled by its owning tile on a 64-tile Raw machine.]

Figure 7-7: 720x480 pixel video frame mapped to a 64 tile Raw machine.

⁵Because it is so dense, we have shaded the ownership blocks simply so that it is easier to pick out the borders visually.

Tile   MBs       Receivers (offset from sender)   Opcode (decimal)
   0   0-19      +2                                             32
   0   20        +2, +3                                         96
   0   21        +1, +2, +3                                    112
   1   22        -1, +2                                         36
   1   23-41     +2                                             32
   1   42        +2, +3                                         96
   1   43        +1, +2, +3                                    112
   2   44        -1, +2                                         36
   2   45-63     -2, +2                                         34
   2   64        -2, +2, +3                                     98
   2   65        -2, +1, +2, +3                                114
   3   66        -3, -2, -1, +2                                 39
   3   67        -3, -2, +2                                     35
   3   68-85     -2, +2                                         34
   3   86        -2, +2, +3                                     98
   3   87        -2, +1, +2, +3                                114
   4   88        -3, -2, -1, +2                                 39
   4   89        -3, -2, +2                                     35
   4   90-107    -2, +2                                         34
   4   108       -2, +2, +3                                     98
   4   109       -2, +1, +2, +3                                114

Table 7.5: Partial border routing map for 720x480 video on 64 tiles with biased opcode (opcode bits 0 to 6 correspond to receivers at offsets -3 to +3 from the sender).

The resulting route map has two important characteristics that suggest some optimizations, however. First, it turns out that a given tile never needs to communicate with a tile more than three behind it or three in front of it (in absolute tile numbering; this does not mean that the tile is never more than three hops away). This allows us to create a relative, or biased, opcode instead of the absolute opcodes that we used in the 16-tile case. This biased opcode only needs to be 7 bits long. When this shorter opcode is calculated for all the routes, it exposes the second important characteristic of the route map: expressed in this form, the opcode pattern repeats regularly.⁶ This fact would allow us to group many of the

tiles together in the case statements. The repeating pattern can be seen in tiles 2, 3