
“DATA SCIENCE” Workshop

November 12-13, 2015

Paris-Dauphine University

Place du Maréchal de Lattre de Tassigny, 75016 Paris

“DATA SCIENCE” Workshop will be held at Paris-Dauphine University on November 12th and 13th, 2015.

The « DATA SCIENCE » Workshop (cf. www.univ-orleans.fr/mapmo/colloques/sda2015/DS.php) is a satellite workshop of SDA2015 (cf. www.univ-orleans.fr/mapmo/colloques/sda2015).

Organizers: Edwin Diday, Patrice Bertrand (CEREMADE Paris-Dauphine University)

Tristan Cazenave, Suzanne Pinson (LAMSADE Paris-Dauphine University)

Registration is free but mandatory due to a limited number of participants.

To register, please send an email to E. Diday (diday@ceremade.dauphine.fr)

Interested students may have their travel expenses reimbursed; please send your request, with a CV, once your registration is confirmed.

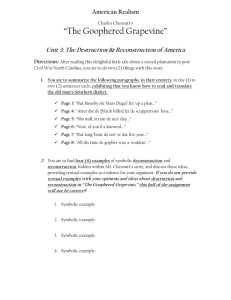

CONTEXT AND AIM OF THIS « DATA SCIENCE » SATELLITE WORKSHOP.

A “Data Scientist” is someone who is able to extract new knowledge from standard, big and complex data: unstructured data, unpaired samples, multi-source data (such as mixtures of numerical, textual, image and social network data). Such data can be fused into classes of raw statistical units, which are then considered as new statistical units. These classes can be described by vectors of intervals, probability distributions, weighted sequences, functions, and the like, in order to express the within-class variability.

One of the advantages of this approach is that unstructured data and unpaired samples at the level of raw units become structured and paired at the level of classes. The study of this new type of data, built to describe classes in an explanatory way, has led to a new domain called Symbolic Data Analysis (SDA). Recently, four international journals have published special issues on SDA, including ADAC (Advances in Data Analysis and Classification), which is now known as a leading international journal of classification.

In this satellite meeting of the upcoming SDA’2015 workshop (Orléans, November 17-19, http://www.univ-orleans.fr/mapmo/colloques/sda2015/), the talks will cover the state of the art and recent advances in SDA and, more generally, visualization in Data Science.

SCHEDULE

Nov. 12th

Welcome speech: 14:00 to 14:15

Lynne Billard: 14:15 to 15:15

“Data Science and Statistics” followed by

Maximum Likelihood Estimation for Interval-valued Data

Chun-houh Chen: 15:15 to 15:45

Matrix Visualization: New Generation of Exploratory Data Analysis

Coffee Break: 15:45 to 16:15

Oldemar Rodríguez: 16:15 to 16:45

Shrinkage linear regression for symbolic interval-valued variables

Edwin Diday, Richard Emilion: 16:45 to 17:30

Symbolic Bayesian Networks

Nov. 13th

Welcome Breakfast: 8:30

Edwin Diday: 9:15 to 10:00

Thinking by classes in Data Sciences: the Symbolic Data Analysis paradigm for Big

and Complex Data

Richard Emilion: 10:00 to 10:45

Probabilistic/statistical setting of SDA

Coffee Break: 10:45 to 11:00

Oldemar Rodríguez: 11:00 to 11:45

Latest developments of the RSDA: An R package for Symbolic Data Analysis

Lunch: 12:00 to 14:00

Manabu Ichino: 14:00 to 14:45

The Lookup Table Regression Model for Symbolic Data

Paula Brito: 14:45 to 15:30

Multivariate Parametric Analysis of Interval Data

Coffee Break: 15:30 to 16:00

Chun-houh Chen: 16:00 to 16:45

Some Extensions of Matrix Visualization: the GAP Approach for Standard and

Symbolic Data Analysis.

Cheng Wang: 16:45 to 17:30

Multiple Correspondence Analysis for Mixed Symbolic Data

ABSTRACTS

Nov. 12th

Lynne Billard (University of Georgia, USA)

Title: Maximum Likelihood Estimation for Interval-valued Data

Abstract: Bertrand and Goupil (2000) obtained empirical formulas for the mean and variance of

interval-valued observations. These are in effect moment estimators. We show how, under

certain probability assumptions, these are the same as the maximum likelihood estimators for the

corresponding population parameters.
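As an illustration only, we assume that each observed interval [a_u, b_u], u = 1, …, n, is uniformly distributed. Under that assumption, the Bertrand and Goupil (2000) empirical mean and variance are mean = (1/(2n)) Σ (a_u + b_u) and S² = (1/(3n)) Σ (a_u² + a_u b_u + b_u²) − mean². The Python sketch below evaluates these two formulas. The function names are hypothetical, and the sketch does not reproduce the likelihood derivation of the talk.

```python
from typing import Sequence, Tuple

# An interval observation [a_u, b_u] stored as (lower, upper).
Interval = Tuple[float, float]


def interval_mean(data: Sequence[Interval]) -> float:
    """Empirical mean of interval data, each interval assumed uniformly distributed."""
    n = len(data)
    return sum(a + b for a, b in data) / (2 * n)


def interval_variance(data: Sequence[Interval]) -> float:
    """Empirical variance of interval data under the same uniform assumption."""
    n = len(data)
    # E[X^2] for X uniform on [a, b] is (a^2 + ab + b^2) / 3; average it over the n intervals.
    second_moment = sum(a * a + a * b + b * b for a, b in data) / (3 * n)
    return second_moment - interval_mean(data) ** 2
```

For example, the intervals [0, 2] and [2, 4] give a mean of 2 and a variance of 4/3, which is the variance of a uniform distribution on [0, 4]. Degenerate intervals [x, x] reduce both formulas to the classical mean and (divisor n) variance.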

Chun-houh Chen (Institute of Stat. Science, Academia Sinica, Taiwan)

Title: Matrix Visualization: New Generation of Exploratory Data Analysis

Abstract: “It is important to understand what you CAN DO before you learn to measure how WELL you seem to have DONE it” (Exploratory Data Analysis: John Tukey, 1977). Data analysts and statistics practitioners nowadays face difficulties in understanding data of ever higher dimension and ever more complex nature, while conventional graphics/visualization tools do not answer these needs. It is the statisticians’ responsibility to come up with graphics/visualization environments that can help users really understand what one CAN DO with complex data generated by modern techniques and sophisticated experiments. Matrix visualization (MV) for continuous, binary, ordinal, and nominal data, with various types of extensions, provides users with more comprehensive information embedded in complex high-dimensional data than conventional EDA tools such as boxplots and scatterplots, or dimension reduction techniques such as principal component analysis and multiple correspondence analysis. In this talk I’ll summarize our work on creating an MV environment for conducting statistical analyses and on introducing statistical concepts into the MV environment for visualizing more versatile and complex data structures. Many real-world examples will be demonstrated to illustrate the strength of MV for visualizing all types of datasets collected from scientific experiments and social surveys.
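A common way to make a matrix visualization readable is to permute the variables so that correlated ones sit next to each other. The sketch below assumes NumPy and orders variables by the angle of their loadings on the two leading eigenvectors of the correlation matrix. This is the "angular order of eigenvectors" used in corrgrams. It is a simplified stand-in for the GAP seriation methods, not an implementation of them, and the function names are hypothetical.

```python
import numpy as np


def angular_order(R: np.ndarray) -> np.ndarray:
    """Permutation of variables sorted by the angle of their loadings on the
    two leading eigenvectors of the correlation matrix R."""
    eigvals, eigvecs = np.linalg.eigh(R)      # eigenvalues in ascending order
    e1, e2 = eigvecs[:, -1], eigvecs[:, -2]   # loadings on the two leading eigenvectors
    angles = np.arctan2(e2, e1)               # angle of each variable in that plane
    return np.argsort(angles)


def reordered_correlation(X: np.ndarray):
    """Correlation matrix of the columns of X, rows and columns permuted by angular order."""
    R = np.corrcoef(X, rowvar=False)
    order = angular_order(R)
    return order, R[np.ix_(order, order)]
```

The reordered matrix can then be drawn as a color-coded image, for instance with matplotlib's imshow. Because the angles are circular, a refinement would start the ordering at the largest angular gap; this sketch does not do that.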

Oldemar Rodríguez (University of Costa Rica, San José, Costa Rica)

Title: Shrinkage linear regression for symbolic interval-valued variables

Abstract: This paper proposes a new approach to fitting a linear regression for symbolic interval-valued variables. It improves both the Center Method suggested by Billard and Diday (2006) and the Center and Range Method suggested by Lima-Neto, E.A. and De Carvalho, F.A.T. (2008). As in the Center Method and the Center and Range Method, the proposed methods fit the linear regression model on the midpoints of the intervals assumed by the predictor variables in the training data set. They also fit it on the half-lengths of those intervals (ranges), taken as an additional variable. However, these regression models are fitted with the shrinkage methods Ridge Regression, Lasso, and Elastic Net (Tibshirani, R., Hastie, T., and Zou, H.). The lower and upper bounds of the interval response (dependent) variable are predicted from its midpoint and range. These are estimated by the shrinkage linear regression models fitted on the midpoints and the ranges of the interval-valued predictors. The methods presented in this document are applied to three real data sets: the “cardiologic interval data set”, the “Prostate interval data set” and the “US Murder interval data set”. Their performance and ease of interpretation are compared with those of the Center Method and the Center and Range Method. For this evaluation, the root-mean-squared error and the correlation coefficient are used. In addition, the reader may use all the methods presented herein and verify the results using the RSDA package written in R, which can be downloaded and installed directly from CRAN.
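The Center and Range idea with shrinkage can be sketched as follows, assuming NumPy. One ridge regression is fitted on the midpoints and one on the half-lengths (ranges), and the predicted bounds are center ∓ range. Ridge is used here only because it has a closed form; the Lasso and Elastic Net variants of the talk need an iterative solver and are not shown. This is not the RSDA (R) interface, and the function names and the penalty value lam are illustrative assumptions.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Ridge regression with an unpenalized intercept (closed form on centered data)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    p = X.shape[1]
    beta = np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)
    intercept = y_mean - x_mean @ beta
    return intercept, beta


def fit_center_range_ridge(X_low, X_up, y_low, y_up, lam=1.0):
    """Fit one ridge model on the midpoints and one on the half-ranges."""
    center_model = ridge_fit((X_low + X_up) / 2, (y_low + y_up) / 2, lam)
    range_model = ridge_fit((X_up - X_low) / 2, (y_up - y_low) / 2, lam)
    return center_model, range_model


def predict_interval(models, X_low, X_up):
    """Predict lower and upper bounds as center -/+ predicted half-range."""
    (b0c, bc), (b0r, br) = models
    center = b0c + ((X_low + X_up) / 2) @ bc
    # abs() is a pragmatic guard (an assumption, not part of the cited methods)
    # so that the predicted lower bound never exceeds the upper bound.
    half_range = np.abs(b0r + ((X_up - X_low) / 2) @ br)
    return center - half_range, center + half_range
```

With lam = 0 this reduces to the ordinary least-squares Center and Range fit; increasing lam shrinks both sets of coefficients toward zero.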

Edwin Diday (Ceremade, University Paris-Dauphine, France), Richard Emilion (University

of Orléans, France)

Title: Symbolic Bayesian Networks

Abstract: We first consider an n × p table of probability vectors. Each vector in column j is the probability distribution of a random variable taking values in a finite set Vj that depends only on j, with |Vj| = dj. Column j is considered as a sample of size n of a random distribution Pj on Vj. We consider the problem of building a Bayesian network from these samples in order to express the joint distribution of (P1, …, Pj, …, Pp).

This problem is very popular for estimating the joint distribution of p real-valued random variables; we extend it to the case of random distributions.

In the finite-set case, a first solution consists in discretizing the probability vectors. A second solution consists in using partial distance correlations (Székély-Rizzo, Ann. Stat. 2014) that evaluate the influence of Pj on Pj'. The general case will be discussed.
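The partial distance correlations of Székély and Rizzo (2014) are built from the sample distance correlation. As a first step only, the sketch below assumes NumPy and computes the distance correlation between two columns of the n × p table. Each row of a column is a probability vector on Vj, and Euclidean distance is used between vectors. The partial version and the network construction are not shown, and the function names are hypothetical.

```python
import numpy as np


def _double_centered_distances(Z):
    """Pairwise Euclidean distances between the rows of Z, double-centered."""
    D = np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=-1)
    return D - D.mean(axis=0, keepdims=True) - D.mean(axis=1, keepdims=True) + D.mean()


def distance_correlation(P, Q):
    """Sample distance correlation between two columns of probability vectors.

    P is n x dj (row i is the distribution of unit i on Vj); Q is n x dk.
    """
    A = _double_centered_distances(np.asarray(P, dtype=float))
    B = _double_centered_distances(np.asarray(Q, dtype=float))
    dcov2 = (A * B).mean()                      # squared distance covariance
    dvar2 = (A * A).mean() * (B * B).mean()     # product of squared distance variances
    if dvar2 <= 0:
        return 0.0
    # max() guards against tiny negative values from floating-point rounding.
    return float(np.sqrt(max(dcov2, 0.0) / np.sqrt(dvar2)))
```

The result lies in [0, 1] and equals 0 in the population only under independence, which is what makes it usable for deciding which edges a network needs.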

Nov. 13th

Edwin Diday (Ceremade, Paris-Dauphine University, France)

Title: Thinking by classes in Data Sciences: the Symbolic Data Analysis paradigm for Big and

Complex Data

Abstract: Data science is, in general terms, the extraction of knowledge from data, considered as a science in its own right. Symbolic Data Analysis (SDA) offers a new way of thinking in Data Science. It extends standard data to “symbolic data” in order to extract knowledge from aggregated classes of individual entities. SDA was born from the classification domain, by considering classes of a given population as units of a higher-level population to be studied. Such classes summarize the population and often represent the real units of interest. To take into account the variability between the members of each class, these classes are described by intervals, distributions, sets of categories or numbers (sometimes weighted), and the like. In this way, we obtain new kinds of data expressing variability, called "symbolic" because they cannot be reduced to numbers without losing much information. The aim of SDA is to study and extract new knowledge from these new kinds of data by, at least, extending Computer Statistics and Data Mining to symbolic data. We show that SDA is a new paradigm which opens up a vast domain of research and applications to standard, complex and big data.
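The aggregation step, which turns raw individuals into classes described by intervals, can be illustrated with a minimal Python sketch. It assumes each raw unit is a dict holding a class label and numerical variables, and it describes each class by the [min, max] interval of its members. The other descriptions mentioned above (distributions, weighted categories) are not covered, and the example data and names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Mapping, Sequence, Tuple


def symbolic_interval_table(
    rows: Sequence[Mapping], class_key: str, variables: List[str]
) -> Dict[Hashable, Dict[str, Tuple[float, float]]]:
    """Aggregate raw units into classes; each class is described, for every
    numerical variable, by the interval [min, max] over its members."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[class_key]].append(row)
    table = {}
    for cls, members in groups.items():
        table[cls] = {
            v: (min(m[v] for m in members), max(m[v] for m in members))
            for v in variables
        }
    return table


raw_units = [
    {"team": "A", "age": 21, "height": 180},
    {"team": "A", "age": 25, "height": 175},
    {"team": "B", "age": 30, "height": 190},
]
symbolic = symbolic_interval_table(raw_units, "team", ["age", "height"])
```

Here team A becomes one symbolic unit described by age [21, 25] and height [175, 180]. The min/max choice keeps the full range of variability; quantile-based intervals would be a more outlier-robust alternative.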

Richard Emilion (University of Orléans, France)

Title: Probabilistic/statistical setting of SDA

Abstract: Given some raw units described by some variables and a specific class variable, Symbolic Data Analysis (SDA) deals with objects described by probability distributions that describe classes of raw units. Our SDA formalism hinges on the notion of random distribution. In the case of paired samples, we show that this formalism depends on a regular conditional probability existence theorem. We also show the interest of SDA in the case of unpaired samples. We then discuss the extension of some classical methods such as PCA and probabilistic classification.

Oldemar Rodríguez (University of Costa Rica, San José, Costa Rica)

Title: Latest developments of the RSDA: An R package for Symbolic Data Analysis

Abstract: This package aims to execute some models of Symbolic Data Analysis. Symbolic Data Analysis was proposed by Professor E. Diday in 1987 in his paper “Introduction à l’approche symbolique en Analyse des Données” (Premières Journées Symbolique-Numérique, Université Paris IX Dauphine, December 1987). A very good reference on symbolic data analysis is “From the Statistics of Data to the Statistics of Knowledge: Symbolic Data Analysis” by L. Billard and E. Diday, Journal of the American Statistical Association, June 2003, Vol. 98. The main purpose of Symbolic Data Analysis is to replace a set of rows (cases) in a data table with a concept (second-order statistical unit). For example, all of the transactions performed by one person (or any object) can be replaced by a single “transaction” that summarizes all the original ones (a symbolic object). In this way, millions of transactions could be summarized in only one that keeps the usual behavior of the person. This is possible because the fields of the new transaction contain not only numbers (like current transactions) but also objects such as intervals, histograms, or rules. This representation of an object as a conjunction of properties fits within a data-analytic framework concerning symbolic data and symbolic objects, which has proven useful in dealing with big databases. RSDA version 1.2 implements the following methods:
- centers interval principal component analysis;
- histogram principal component analysis;
- multi-valued correspondence analysis;
- interval multidimensional scaling (INTERSCAL);
- symbolic hierarchical clustering;
- the CM, CRM, Lasso, Ridge and Elastic Net linear regression models for interval variables.

This new version also includes new features to manipulate symbolic data through a new data structure that implements Symbolic Data Frames, and methods for converting SODAS and XML SODAS files to RSDA files.
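To make the "centers interval principal component analysis" mentioned above concrete, here is a minimal NumPy sketch of the centers method. It runs a standardized PCA of the interval midpoints, then projects each object's hyper-rectangle onto every axis, which gives an interval of scores. It is not RSDA's R interface. The function name and the choice of standardizing by the midpoint standard deviation are assumptions.

```python
import numpy as np


def centers_pca(low, up, n_components=2):
    """Centers-method PCA for interval data: PCA on the standardized midpoints,
    then each interval row (a hyper-rectangle) is projected to an interval on each axis."""
    low, up = np.asarray(low, float), np.asarray(up, float)
    centers = (low + up) / 2
    mean, std = centers.mean(axis=0), centers.std(axis=0)
    C = (centers - mean) / std                 # standardized midpoints
    H = (up - low) / 2 / std                   # half-ranges on the same scale
    R = C.T @ C / C.shape[0]                   # correlation matrix of the midpoints
    eigvals, eigvecs = np.linalg.eigh(R)       # ascending order
    V = eigvecs[:, ::-1][:, :n_components]     # leading eigenvectors
    score = C @ V                              # projections of the centers
    # A box with center c and half-widths h projects onto v as c.v +/- sum_j |v_j| h_j.
    spread = H @ np.abs(V)
    return score - spread, score + spread, eigvals[::-1][:n_components]
```

Intervals of width zero give point scores identical to a classical PCA of the midpoints. Wider intervals widen the projected score intervals along every component.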

Manabu Ichino (Tokyo Denki University, Japan)

Title: The Lookup Table Regression Model for Symbolic Data

Abstract: This paper presents preliminary research on the lookup table regression model for symbolic data. We apply the quantile method to the given symbolic data table of size (N objects) × (d feature variables). We represent each object by (m+1) d-dimensional numerical vectors, called quantile vectors, for a preselected integer m. The integer m controls the granularity of the descriptions of the symbolic objects. In the new data table of size {N×(m+1)}×d, we sort the N×(m+1) rows by the values of the selected objective variable, from smallest to largest. For each of the remaining d−1 features, i.e., columns, we segment the feature values into blocks so that the generated blocks satisfy the monotone property. We discard columns that have only a single block. Then, we segment the objective variable according to the blocks of the remaining explanatory feature variables. Finally, we obtain a lookup table of size N’×d’, where N’ ≤ N×(m+1) and d’ ≤ d. Each element of the table is an interval value corresponding to the segmented block. We realize the interval-value estimation rule for the objective variable by searching for the “nearest element” in the lookup table. We present examples to illustrate the lookup table regression model.
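The first step above, the quantile representation, can be sketched for the simplest case of interval-valued features, assuming values are uniform within each interval. Under that assumption, the k-th quantile vector of an object has components a_j + (k/m)(b_j − a_j), k = 0, …, m. The NumPy sketch below builds the {N×(m+1)}×d table and sorts its rows by the objective variable. The monotone block segmentation and the lookup itself are not shown, and the function names are hypothetical.

```python
import numpy as np


def quantile_vectors(low, up, m):
    """Represent each of N interval-valued objects (d features) by m+1
    d-dimensional quantile vectors, assuming a uniform distribution in each interval.
    Returns an array of shape (N*(m+1), d)."""
    low, up = np.asarray(low, float), np.asarray(up, float)
    t = np.arange(m + 1) / m                                   # levels 0, 1/m, ..., 1
    Q = low[:, None, :] + t[None, :, None] * (up - low)[:, None, :]
    return Q.reshape(-1, low.shape[1])


def sort_by_objective(Q, objective):
    """Reorder the N*(m+1) rows from the smallest to the largest value of the objective column."""
    return Q[np.argsort(Q[:, objective], kind="stable")]
```

For instance, a single object with intervals [0, 4] and [10, 20] and m = 2 yields the three rows (0, 10), (2, 15) and (4, 20). Larger m gives a finer description at the cost of a taller table.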

Paula Brito (Porto University, Portugal)

Title: Multivariate Parametric Analysis of Interval Data

Abstract: In this work we focus on the study of interval data, i.e., data where the variables' values are intervals of ℝ.

Parametric probabilistic models for interval-valued variables have been proposed and studied in

(Brito & Duarte Silva, 2012). These models are based on the representation of each observed

interval by its MidPoint and LogRange, and Multivariate Normal and Skew-Normal distributions

are assumed for the whole set of 2p MidPoints and LogRanges of the original p interval-valued

variables. The intrinsic nature of the interval-valued variables leads to different structures of the

variance-covariance matrix, represented by different possible configurations. For all cases,

maximum likelihood estimators of the corresponding parameters have been derived. This

framework may be applied to different statistical multivariate methodologies, thereby allowing

for inference approaches for symbolic data.
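Under the Gaussian model, the maximum likelihood estimates are the sample mean and the covariance matrix with divisor n. The NumPy sketch below maps intervals to MidPoints and LogRanges and fits the unrestricted covariance. As an example of a restricted configuration, it also offers an option that sets the MidPoint/LogRange cross-covariance block to zero. For a Gaussian, the MLE under that restriction is the block-diagonal part of the sample covariance. It is not the MAINT.DATA API, and the function names are hypothetical.

```python
import numpy as np


def midpoint_logrange(low, up):
    """Map n x p interval data to the n x 2p matrix [MidPoints | LogRanges]."""
    low, up = np.asarray(low, float), np.asarray(up, float)
    if np.any(up <= low):
        raise ValueError("LogRange needs non-degenerate intervals (upper > lower)")
    return np.hstack([(low + up) / 2, np.log(up - low)])


def gaussian_mle(Z, mid_logrange_uncorrelated=False):
    """Maximum likelihood mean and covariance of a multivariate normal fitted to Z.
    Optionally forces zero covariance between the MidPoint and LogRange blocks."""
    mu = Z.mean(axis=0)
    centered = Z - mu
    sigma = centered.T @ centered / Z.shape[0]    # MLE divides by n, not n - 1
    if mid_logrange_uncorrelated:
        p = Z.shape[1] // 2
        sigma[:p, p:] = 0.0
        sigma[p:, :p] = 0.0
    return mu, sigma
```

The LogRange transform maps positive ranges to the whole real line, which is what makes a Normal model for the 2p-dimensional vector plausible in the first place.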

The proposed modelling was first applied to (M)ANOVA of interval data, using a likelihood-ratio approach. Linear and quadratic models for discriminant analysis of data described by interval-valued variables have been obtained, and their performance has been compared with alternative distance-based approaches. We have also addressed the problem of mixture distributions, developing model-based clustering using the proposed models.

For the Gaussian model, the problem of outlier identification is addressed using Mahalanobis distances based on robust estimates of the joint mean values and the covariance matrices.

This modelling, for the Gaussian case, has been implemented in the R package MAINT.DATA, available on CRAN. MAINT.DATA introduces a data class for representing interval data and includes functions for modelling and analysing these data. In particular, it provides maximum likelihood estimation and statistical tests for the different configurations considered. Methods for (M)ANOVA and Linear and Quadratic Discriminant Analysis of this data class are also currently provided.

Chun-houh Chen (Institute of Stat. Science, Academia Sinica, Taiwan)

Title: Some Extensions of Matrix Visualization: the GAP Approach

Abstract: Exploratory data analysis (EDA, Tukey, 1977) has been extensively used for nearly 40 years, yet boxplots and scatterplots are still the major EDA tools for visualizing continuous data in the 21st century. Many extended modules of matrix visualization via the Generalized Association Plots (GAP) approach have been developed or are under development. Some details of the following MV modules will be provided in this talk:

1. Matrix visualization for high-dimensional categorical data structure. For categorical data, MCA (multiple correspondence analysis) is the most popular method for visualizing the reduced joint space of samples and variables of categorical nature. But, like its continuous counterpart PCA (principal component analysis), MCA loses its efficiency when data dimensionality gets really high. In this study we extend the framework of matrix visualization from continuous data to categorical data. Categorical matrix visualization can effectively present complex information patterns for thousands of subjects on thousands of categorical variables in a single matrix visualization display.

2. Matrix Visualization for High-Dimensional Data with a Cartography Link. When a cartography link is attached to each subject of high-dimensional categorical data, it is necessary to use a geographical map to illustrate the pattern of subject (region) clusters with variable groups embedded in the high-dimensional space. This study presents an interactive cartography system with systematic color-coding by integrating homogeneity analysis into matrix visualization.

3. Matrix visualization for symbolic data analysis. Symbolic data analysis (SDA) has gained popularity over the past few years because of its potential for handling data of a dependent and hierarchical nature. Here we introduce matrix visualization (MV) for visualizing and clustering SDA data, using interval-valued symbolic data as an example; it is by far the most popular SDA data type in the literature and the one most commonly encountered in practice. Many MV techniques for visualizing and clustering conventional data are adapted to SDA data, and several techniques are newly developed for SDA data. Various examples of data with simple to complex structures are used to illustrate the proposed methods.

4. Covariate-adjusted matrix visualization via correlation decomposition. In this study, we extend the framework of matrix visualization (MV) by incorporating a covariate adjustment process through the estimation of conditional correlations. MV can explore the grouping and/or clustering structure of high-dimensional large-scale data sets effectively without dimension reduction. The benefit is the ability to explore conditional association structures among subjects or variables, which cannot be done with conventional MV.

Several biomedical examples will be used to illustrate the versatility of the GAP approach to matrix visualization (cf. http://gap.stat.sinica.edu.tw/Software/).
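One simple way to obtain covariate-adjusted (conditional) correlations is to remove the linear effect of the covariates from each variable and correlate the residuals, which yields partial correlations. The NumPy sketch below does this. The abstract does not state whether the GAP correlation decomposition proceeds exactly this way, so treat this as an assumption. The function name is hypothetical.

```python
import numpy as np


def covariate_adjusted_correlation(X, Z):
    """Correlation matrix of the columns of X after linearly removing the
    covariates Z (least-squares residuals), i.e. partial correlations given Z."""
    X, Z = np.asarray(X, float), np.asarray(Z, float)
    Z1 = np.column_stack([np.ones(len(Z)), Z])          # covariates plus an intercept column
    coef, *_ = np.linalg.lstsq(Z1, X, rcond=None)       # one regression per column of X
    residuals = X - Z1 @ coef
    return np.corrcoef(residuals, rowvar=False)
```

Two variables driven by a common covariate look strongly correlated in a plain MV display. After this adjustment their correlation drops toward zero, which exposes the association structure that remains once the covariate is accounted for.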

Cheng Wang (Beihang University), Edwin Diday, Richard Emilion, Huiwen Wang

Title: Multiple Correspondence Analysis for Mixed Symbolic Data

Abstract: As cross-platform data collection technology develops rapidly and the big data era arrives, a single table often contains a mixture of single-valued data, histogram data, compositional data and functional data, which can be called mixed feature-data. Different types of data may belong to different spaces, which makes crosstab analysis among several data types a rather complicated problem. In this paper, we propose a Multiple Correspondence Analysis (MCA) for mixed data to detect and represent the underlying structures involved. Before MCA, we first transform the different types of data into vector data, which are then converted into nominal data. Two ways of converting the vector data into nominal data are considered, namely hierarchical clustering and discretization. An empirical analysis is conducted to compare the performance of MCA for mixed data based on these two different ways.
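The discretization route can be sketched with NumPy in three steps:
- each numeric variable is cut into equal-frequency bins, which is one possible discretization;
- the codes are expanded into a complete disjunctive (indicator) table;
- MCA is computed as the correspondence analysis of that table, via an SVD of standardized residuals.

For simplicity each variable here is a single numeric column rather than a full vector, and the hierarchical clustering route is not shown. The function names are hypothetical.

```python
import numpy as np


def discretize(values, n_bins):
    """Equal-frequency discretization of a numeric vector into codes 0..n_bins-1."""
    edges = np.quantile(values, np.linspace(0, 1, n_bins + 1)[1:-1])  # inner cut points
    return np.searchsorted(edges, values, side="right")


def indicator_matrix(codes_per_variable):
    """Complete disjunctive (one-hot) table built from a list of integer code vectors."""
    blocks = []
    for codes in codes_per_variable:
        levels = np.unique(codes)
        blocks.append((codes[:, None] == levels[None, :]).astype(float))
    return np.hstack(blocks)


def mca(Z, n_components=2):
    """Multiple correspondence analysis as correspondence analysis of the indicator table Z."""
    P = Z / Z.sum()
    r, c = P.sum(axis=1), P.sum(axis=0)                 # row and column masses
    S = (P - np.outer(r, c)) / np.sqrt(np.outer(r, c))  # standardized residuals
    U, sv, Vt = np.linalg.svd(S, full_matrices=False)
    row_coords = (U[:, :n_components] * sv[:n_components]) / np.sqrt(r)[:, None]
    return row_coords, sv[:n_components] ** 2           # principal coordinates, inertias
```

A useful check: with Q variables and J categories in total, the inertias of the indicator-table analysis sum to J/Q − 1.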