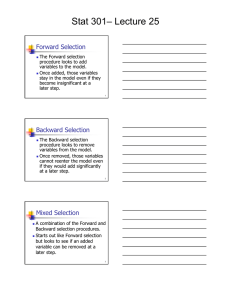

Stat 301– Lecture 27 Forward Selection

Forward Selection

The Forward selection procedure looks to add variables to the model. Once added, those variables stay in the model even if they become insignificant at a later step.

Backward Selection

The Backward selection procedure looks to remove variables from the model. Once removed, those variables cannot reenter the model even if they would add significantly at a later step.

Mixed Selection

The Mixed selection procedure is a combination of the Forward and Backward selection procedures. It starts out like Forward selection but checks at each later step whether a previously added variable can be removed.

Mixed – Set up

Stepwise Fit for MDBH

Stepwise Regression Control

| Setting | Value |
|---|---|
| Stopping Rule | P-value Threshold |
| Prob to Enter | 0.25 |
| Prob to Leave | 0.25 |
| Direction | Mixed |

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 10.3855 | 19 | 0.7393276 | 0.0000 | 0.0000 | 102.4885 | 1 | 48.35699 | 49.64257 |

Current Estimates

| Parameter | Entered | Estimate | nDF | SS | "F Ratio" | "Prob>F" |
|---|---|---|---|---|---|---|
| Intercept | yes | 6.265 | 1 | 0 | 0.000 | 1 |
| X1 | no | 0 | 1 | 6.207045 | 26.739 | 6.42e-5 |
| X2 | no | 0 | 1 | 0.616027 | 1.135 | 0.30079 |
| X3 | no | 0 | 1 | 7.335255 | 43.287 | 3.52e-6 |

Step History: no steps have been taken yet.

Stepwise Regression Control

Direction: Mixed.
Prob to Enter: controls what variables are added.
Prob to Leave: controls what variables are removed.
Set Prob to Enter equal to Prob to Leave (both are 0.25 here).

Current Estimates

The current estimates are exactly the same as with the Forward selection procedure. Clicking on Step will initiate the Mixed procedure, which starts like the Forward procedure.
Stepwise Fit for MDBH (after Step 1)

Stepwise Regression Control: Stopping Rule = P-value Threshold, Prob to Enter = 0.25, Prob to Leave = 0.25, Direction = Mixed.

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 3.0502449 | 18 | 0.4116528 | 0.7063 | 0.6900 | 19.387747 | 2 | 26.64733 | 28.13453 |

Current Estimates

| Parameter | Entered | Estimate | nDF | SS | "F Ratio" | "Prob>F" |
|---|---|---|---|---|---|---|
| Intercept | yes | 3.8956688 | 1 | 0 | 0.000 | 1 |
| X1 | no | 0 | 1 | 1.000159 | 8.294 | 0.0104 |
| X2 | no | 0 | 1 | 0.403296 | 2.590 | 0.12594 |
| X3 | yes | 32.9371533 | 1 | 7.335255 | 43.287 | 3.52e-6 |

Step History

| Step | Parameter | Action | "Sig Prob" | Seq SS | RSquare | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|---|
| 1 | X3 | Entered | 0.0000 | 7.335255 | 0.7063 | 19.388 | 2 | 26.6473 | 28.1345 |

Current Estimates – Step 1

X3 is added to the model.

Predicted MDBH = 3.896 + 32.937*X3

$R^2 = 0.7063$

$\text{RMSE} = \sqrt{\text{MSE}} = \sqrt{3.0502449 / 18} = 0.4117$

Clicking on Step first invokes the Backward part of the Mixed procedure. Because X3 is statistically significant and is the only variable in the model, the Backward part does not remove anything.

Of the remaining variables not in the model, X1 will add the largest sum of squares if added to the model: SS = 1.000, “F Ratio” = 8.294, “Prob>F” = 0.0104.

JMP Mixed – Step 2

Because X1 will add the largest sum of squares and that addition is statistically significant (“Prob>F” = 0.0104 is below Prob to Enter = 0.25), clicking on Step makes JMP add X1 to the model with X3.
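The “F Ratio” values for the candidate variables in the Step 1 output can be checked by hand. The sketch below assumes the usual partial F statistic for a one-degree-of-freedom addition (added sum of squares divided by the mean square error of the model after the variable enters); the function name `f_to_enter` is hypothetical, and the formula is an assumption that happens to reproduce the values shown, not a statement of how JMP computes them internally.

```python
def f_to_enter(sse_current, dfe_current, ss_added, ndf=1):
    """Partial F ratio for adding a term that would contribute `ss_added`."""
    sse_new = sse_current - ss_added   # error sum of squares after the term enters
    dfe_new = dfe_current - ndf        # error degrees of freedom after the term enters
    mse_new = sse_new / dfe_new
    return (ss_added / ndf) / mse_new

# Values read from the Step 1 output (model containing X3 only).
sse_step1, dfe_step1 = 3.0502449, 18
candidates = {"X1": 1.000159, "X2": 0.403296}  # SS each variable would add

f_ratios = {name: f_to_enter(sse_step1, dfe_step1, ss)
            for name, ss in candidates.items()}
best = max(candidates, key=candidates.get)     # largest added sum of squares

for name, f in f_ratios.items():
    print(f"{name}: F to enter = {f:.3f}")
print("Candidate adding the largest SS:", best)
```

For single-variable additions to the same current model, the largest added sum of squares also gives the largest F ratio, so either can be used to rank the candidates.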
Stepwise Fit for MDBH (after Step 2)

Stepwise Regression Control: Stopping Rule = P-value Threshold, Prob to Enter = 0.25, Prob to Leave = 0.25, Direction = Mixed.

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 2.0500859 | 17 | 0.3472654 | 0.8026 | 0.7794 | 9.7842935 | 3 | 21.8672 | 23.18346 |

Current Estimates

| Parameter | Entered | Estimate | nDF | SS | "F Ratio" | "Prob>F" |
|---|---|---|---|---|---|---|
| Intercept | yes | 3.14321066 | 1 | 0 | 0.000 | 1 |
| X1 | yes | 0.03136639 | 1 | 1.000159 | 8.294 | 0.0104 |
| X2 | no | 0 | 1 | 0.670967 | 7.784 | 0.01311 |
| X3 | yes | 22.953752 | 1 | 2.128369 | 17.649 | 0.0006 |

Step History

| Step | Parameter | Action | "Sig Prob" | Seq SS | RSquare | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|---|
| 1 | X3 | Entered | 0.0000 | 7.335255 | 0.7063 | 19.388 | 2 | 26.6473 | 28.1345 |
| 2 | X1 | Entered | 0.0104 | 1.000159 | 0.8026 | 9.7843 | 3 | 21.8672 | 23.1835 |

Current Estimates – Step 2

X1 is added to the model.

Predicted MDBH = 3.143 + 0.0314*X1 + 22.954*X3

$R^2 = 0.8026$

$\text{RMSE} = \sqrt{\text{MSE}} = \sqrt{2.0500859 / 17} = 0.3473$

Clicking on Step first invokes the Backward part of the Mixed procedure. Because X3 and X1 are both statistically significant, the Backward part does not remove anything.

Of the remaining variables not in the model, X2 will add the largest sum of squares if added to the model: SS = 0.671, “F Ratio” = 7.784, “Prob>F” = 0.0131.

JMP Mixed – Step 3

Because X2 will add the largest sum of squares and that addition is statistically significant (“Prob>F” = 0.0131 is below Prob to Enter = 0.25), clicking on Step makes JMP add X2 to the model with X3 and X1.
Stepwise Fit for MDBH (after Step 3)

Stepwise Regression Control: Stopping Rule = P-value Threshold, Prob to Enter = 0.25, Prob to Leave = 0.25, Direction = Mixed.

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 1.3791191 | 16 | 0.2935898 | 0.8672 | 0.8423 | 4 | 4 | 17.55751 | 18.25046 |

Current Estimates

| Parameter | Entered | Estimate | nDF | SS | "F Ratio" | "Prob>F" |
|---|---|---|---|---|---|---|
| Intercept | yes | 3.23573225 | 1 | 0 | 0.000 | 1 |
| X1 | yes | 0.09740562 | 1 | 1.26783 | 14.709 | 0.00146 |
| X2 | yes | -0.0001689 | 1 | 0.670967 | 7.784 | 0.01311 |
| X3 | yes | 3.46681347 | 1 | 0.014774 | 0.171 | 0.68437 |

Step History

| Step | Parameter | Action | "Sig Prob" | Seq SS | RSquare | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|---|
| 1 | X3 | Entered | 0.0000 | 7.335255 | 0.7063 | 19.388 | 2 | 26.6473 | 28.1345 |
| 2 | X1 | Entered | 0.0104 | 1.000159 | 0.8026 | 9.7843 | 3 | 21.8672 | 23.1835 |
| 3 | X2 | Entered | 0.0131 | 0.670967 | 0.8672 | 4 | 4 | 17.5575 | 18.2505 |

Current Estimates – Step 3

X2 is added to the model.

Predicted MDBH = 3.236 + 0.0974*X1 – 0.000169*X2 + 3.467*X3

$R^2 = 0.8672$

$\text{RMSE} = \sqrt{\text{MSE}} = \sqrt{1.3791191 / 16} = 0.2936$

Clicking on Step invokes the Backward part of the Mixed procedure. Note that variable X3 is no longer statistically significant (“Prob>F” = 0.68437 exceeds Prob to Leave = 0.25), so it will be removed from the model when you click on Step.
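As a check on the Step 3 output, the “F Ratio” for X3 can be reproduced as a partial F statistic, assuming the variable's sum of squares is divided by the mean square error of the current three-variable model. The symbols $SS_{X3}$ and $MSE$ are labels introduced here for illustration.

$$F_{X3} = \frac{SS_{X3}}{MSE} = \frac{0.014774}{1.3791191 / 16} = \frac{0.014774}{0.0861949} \approx 0.171$$

The matching “Prob>F” of 0.68437 is well above Prob to Leave = 0.25, which is why X3 is the variable flagged for removal.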
Stepwise Fit for MDBH (after Step 4)

Stepwise Regression Control: Stopping Rule = P-value Threshold, Prob to Enter = 0.25, Prob to Leave = 0.25, Direction = Mixed.

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 1.3938931 | 17 | 0.2863454 | 0.8658 | 0.8500 | 2.1714023 | 3 | 14.15158 | 15.46784 |

Current Estimates

| Parameter | Entered | Estimate | nDF | SS | "F Ratio" | "Prob>F" |
|---|---|---|---|---|---|---|
| Intercept | yes | 3.26051366 | 1 | 0 | 0.000 | 1 |
| X1 | yes | 0.10691347 | 1 | 8.37558 | 102.149 | 1.32e-8 |
| X2 | yes | -0.0001898 | 1 | 2.784561 | 33.961 | 0.00002 |
| X3 | no | 0 | 1 | 0.014774 | 0.171 | 0.68437 |

Step History

| Step | Parameter | Action | "Sig Prob" | Seq SS | RSquare | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|---|
| 1 | X3 | Entered | 0.0000 | 7.335255 | 0.7063 | 19.388 | 2 | 26.6473 | 28.1345 |
| 2 | X1 | Entered | 0.0104 | 1.000159 | 0.8026 | 9.7843 | 3 | 21.8672 | 23.1835 |
| 3 | X2 | Entered | 0.0131 | 0.670967 | 0.8672 | 4 | 4 | 17.5575 | 18.2505 |
| 4 | X3 | Removed | 0.6844 | 0.014774 | 0.8658 | 2.1714 | 3 | 14.1516 | 15.4678 |

Current Estimates – Step 4

X3 is removed from the model.

Predicted MDBH = 3.2605 + 0.1069*X1 – 0.0001898*X2

$R^2 = 0.8658$

$\text{RMSE} = \sqrt{\text{MSE}} = \sqrt{1.3938931 / 17} = 0.2863$

Because X1 and X2 add significantly to the model, they cannot be removed. Because X3 will not add significantly to the model, it cannot be added. The Mixed procedure stops.

Response MDBH

Summary of Fit

| Statistic | Value |
|---|---|
| RSquare | 0.865785 |
| RSquare Adj | 0.849995 |
| Root Mean Square Error | 0.286345 |
| Mean of Response | 6.265 |
| Observations (or Sum Wgts) | 20 |

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Ratio | Prob > F |
|---|---|---|---|---|---|
| Model | 2 | 8.991607 | 4.49580 | 54.8311 | <.0001* |
| Error | 17 | 1.393893 | 0.08199 | | |
| C. Total | 19 | 10.385500 | | | |

Parameter Estimates

| Term | Estimate | Std Error | t Ratio | Prob>\|t\| |
|---|---|---|---|---|
| Intercept | 3.2605137 | 0.333024 | 9.79 | <.0001* |
| X1 | 0.1069135 | 0.010578 | 10.11 | <.0001* |
| X2 | -0.00019 | 3.256e-5 | -5.83 | <.0001* |

Effect Tests

| Source | Nparm | DF | Sum of Squares | F Ratio | Prob > F |
|---|---|---|---|---|---|
| X1 | 1 | 1 | 8.3755800 | 102.1490 | <.0001* |
| X2 | 1 | 1 | 2.7845615 | 33.9607 | <.0001* |

Finding the “best” model

For this example, the Forward selection procedure did not find the “best” model. The Backward and Mixed selection procedures came up with the “best” model.
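The sequence of decisions in the Mixed procedure for the MDBH example can be replayed with a small sketch. It assumes the rule implied by the walkthrough: first try to remove the least significant variable in the model (Prob to Leave), and only if nothing is removed try to add the most significant candidate (Prob to Enter). The function name `next_action`, the strict inequalities, and ranking candidates by smallest “Prob>F” (equivalent to the largest added sum of squares for single-variable terms) are assumptions made for illustration, not a description of JMP's internals.

```python
def next_action(p_in_model, p_candidates, prob_to_enter=0.25, prob_to_leave=0.25):
    """Return the next Mixed-procedure action as an (action, variable) pair."""
    # Backward part: drop the least significant variable if it fails Prob to Leave.
    if p_in_model:
        worst = max(p_in_model, key=p_in_model.get)
        if p_in_model[worst] > prob_to_leave:
            return ("remove", worst)
    # Forward part: add the most significant candidate if it passes Prob to Enter.
    if p_candidates:
        best = min(p_candidates, key=p_candidates.get)
        if p_candidates[best] < prob_to_enter:
            return ("enter", best)
    return ("stop", None)

# "Prob>F" values read from the Current Estimates output at each stage:
# (variables in the model, variables not in the model).
stages = [
    ({}, {"X1": 6.42e-5, "X2": 0.30079, "X3": 3.52e-6}),
    ({"X3": 3.52e-6}, {"X1": 0.0104, "X2": 0.12594}),
    ({"X1": 0.0104, "X3": 0.0006}, {"X2": 0.01311}),
    ({"X1": 0.00146, "X2": 0.01311, "X3": 0.68437}, {}),
    ({"X1": 1.32e-8, "X2": 0.00002}, {"X3": 0.68437}),
]

actions = [next_action(p_in, p_out) for p_in, p_out in stages]
for action in actions:
    print(action)
```

With these inputs the sketch enters X3, X1, and X2 in turn, removes X3, and then stops, matching the Step History.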
Finding the “best” model

None of the automatic selection procedures is guaranteed to find the “best” model. The only way to be sure is to look at all possible models.

All Possible Models

For $k$ explanatory variables there are $2^k - 1$ possible models.
There are $k$ 1-variable models.
There are $\binom{k}{2}$ 2-variable models.
There are $\binom{k}{3}$ 3-variable models.

When confronted with all possible models, we often rely on summary statistics to describe features of the models.
$R^2$: larger is better.
adj $R^2$: larger is better.
RMSE: smaller is better.

Another summary statistic used to assess the fit of a model is Mallows $C_p$:

$$C_p = \frac{SSE_p}{MSE_{Full}} - (n - 2p), \qquad p = k + 1$$

Here $p$ is the number of parameters in the model being assessed: its $k$ explanatory variables plus the intercept.

MDBH Example

Model with X1 and X2 ($p = 3$): $SSE_p = 1.3938931$, $MSE_{Full} = 0.0861949$.

$$C_p = \frac{1.3938931}{0.0861949} - (20 - 6) = 16.1714 - 14 = 2.1714$$

The smaller $C_p$ is, the “better” the fit of the model. The full model will have $C_p = p$.

There are several criteria that incorporate the maximized value of the likelihood function, $L$, which gives the probability of getting the sample data given the best estimates of the parameters in the model.

Another summary statistic is the Akaike Information Criterion, or AIC.

$$AIC = 2p - 2\ln(L)$$

$$AICc = AIC + \frac{2p(p+1)}{n - p - 1}$$

The smaller the AICc, the “better” the fit of the model.

Another summary statistic is the Bayesian Information Criterion, or BIC.

$$BIC = -2\ln(L) + p\ln(n)$$

The smaller the BIC, the “better” the fit of the model.
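The three criteria can be computed directly from the error sum of squares. The sketch below is a check on the MDBH model with X1 and X2, under two labeled assumptions: the likelihood is the normal-error regression likelihood evaluated at its maximum, and the parameter count used in AICc and BIC includes the error variance (so it is one more than the $p$ used in $C_p$). That counting is an assumption chosen because it reproduces the AICc and BIC values in the JMP output for this example; the function names are hypothetical.

```python
import math

def mallows_cp(sse_p, mse_full, n, p):
    """Mallows Cp = SSE_p / MSE_Full - (n - 2p)."""
    return sse_p / mse_full - (n - 2 * p)

def neg2_log_likelihood(sse, n):
    """-2 ln(L) for a normal-error regression at the maximum likelihood estimates."""
    return n * math.log(2 * math.pi) + n * math.log(sse / n) + n

def aicc_bic(sse, n, n_params):
    """AICc and BIC, with n_params the number of estimated parameters."""
    m2ll = neg2_log_likelihood(sse, n)
    aic = 2 * n_params + m2ll
    aicc = aic + 2 * n_params * (n_params + 1) / (n - n_params - 1)
    bic = m2ll + n_params * math.log(n)
    return aicc, bic

n = 20
sse_p, p = 1.3938931, 3          # model with X1 and X2 (intercept + 2 slopes)
mse_full = 1.3791191 / 16        # MSE of the full model with X1, X2, X3

cp = mallows_cp(sse_p, mse_full, n, p)
# Assumption: the error variance is counted as an extra estimated parameter.
aicc, bic = aicc_bic(sse_p, n, n_params=p + 1)

print(f"Cp = {cp:.4f}, AICc = {aicc:.4f}, BIC = {bic:.4f}")
```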
JMP – Fit Model

Personality – Stepwise – Run.
Red triangle pull down next to Stepwise Fit.
All Possible Models.
Maximum number of terms: 3.
Number of best models: 3.

All Possible Models

Right click on table – Columns.
Check Cp.

Stepwise Fit for MDBH

| SSE | DFE | RMSE | RSquare | RSquare Adj | Cp | p | AICc | BIC |
|---|---|---|---|---|---|---|---|---|
| 10.3855 | 19 | 0.7393276 | 0.0000 | 0.0000 | 102.4885 | 1 | 48.35699 | 49.64257 |

All Possible Models

Ordered up to best 3 models up to 3 terms per model.

| Model | Number | RSquare | RMSE | AICc | BIC | Cp |
|---|---|---|---|---|---|---|
| X3 | 1 | 0.7063 | 0.4117 | 26.6473 | 28.1345 | 19.3877 |
| X1 | 1 | 0.5977 | 0.4818 | 32.9417 | 34.4289 | 32.4768 |
| X2 | 1 | 0.0593 | 0.7367 | 49.9281 | 51.4153 | 97.3416 |
| X1,X2 | 2 | 0.8658 | 0.2863 | 14.1516 | 15.4678 | 2.1714 |
| X1,X3 | 2 | 0.8026 | 0.3473 | 21.8672 | 23.1835 | 9.7843 |
| X2,X3 | 2 | 0.7451 | 0.3946 | 26.9777 | 28.2940 | 16.7089 |
| X1,X2,X3 | 3 | 0.8672 | 0.2936 | 17.5575 | 18.2505 | 4.0000 |

[Figure: All Possible Models plot against p = Number of Terms, with labeled points X3; X1,X2; and X1,X2,X3.]

All Possible Models

Lists all 7 models.
1-variable models: listed in order of the $R^2$ value.
2-variable models: listed in order of the $R^2$ value.
3-variable (full) model.

Model with X1, X2, X3: highest $R^2$ value.
Model with X1, X2: lowest RMSE, lowest AICc, lowest BIC, and lowest Cp.

Best Model

Which is “best”? According to our definition of “best,” we can't tell until we look at the significance of the individual variables in the model.
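The all-possible-models comparison can be mirrored in a few lines. The sketch below enumerates every non-empty subset of the three explanatory variables and picks the top model under each summary statistic, using the values from the All Possible Models table. The dictionary layout and variable names are illustrative choices, and no regression is refitted here.

```python
from itertools import combinations

variables = ["X1", "X2", "X3"]

# Every non-empty subset of the variables: 2**k - 1 models for k variables.
models = [combo
          for size in range(1, len(variables) + 1)
          for combo in combinations(variables, size)]

# Summary statistics copied from the All Possible Models table.
stats = {
    ("X3",):            dict(rsq=0.7063, rmse=0.4117, aicc=26.6473, bic=28.1345, cp=19.3877),
    ("X1",):            dict(rsq=0.5977, rmse=0.4818, aicc=32.9417, bic=34.4289, cp=32.4768),
    ("X2",):            dict(rsq=0.0593, rmse=0.7367, aicc=49.9281, bic=51.4153, cp=97.3416),
    ("X1", "X2"):       dict(rsq=0.8658, rmse=0.2863, aicc=14.1516, bic=15.4678, cp=2.1714),
    ("X1", "X3"):       dict(rsq=0.8026, rmse=0.3473, aicc=21.8672, bic=23.1835, cp=9.7843),
    ("X2", "X3"):       dict(rsq=0.7451, rmse=0.3946, aicc=26.9777, bic=28.2940, cp=16.7089),
    ("X1", "X2", "X3"): dict(rsq=0.8672, rmse=0.2936, aicc=17.5575, bic=18.2505, cp=4.0000),
}

# Larger is better for R-squared; smaller is better for the other statistics.
best = {"RSquare": max(models, key=lambda m: stats[m]["rsq"])}
for label, key in [("RMSE", "rmse"), ("AICc", "aicc"), ("BIC", "bic"), ("Cp", "cp")]:
    best[label] = min(models, key=lambda m: stats[m][key])

print(len(models), "models")
for label, model in best.items():
    print(f"{label}: {','.join(model)}")
```

Ranking by a single statistic only narrows the field; the significance of the individual variables in each leading model still has to be examined.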