Stat 643 Spring 2010 Assignment 04


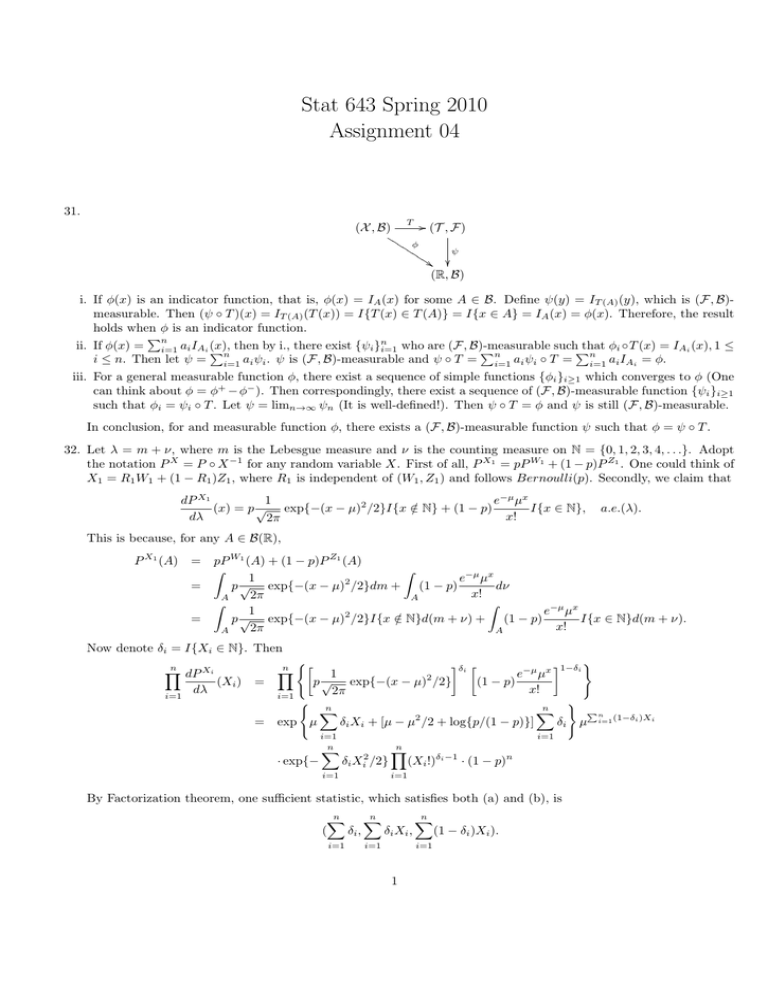

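31. Diagram of the maps involved: $T : (\mathcal{X}, \mathcal{B}) \to (\mathcal{T}, \mathcal{F})$, $\phi : (\mathcal{X}, \mathcal{B}) \to (\mathbb{R}, \mathcal{B})$ and $\psi : (\mathcal{T}, \mathcal{F}) \to (\mathbb{R}, \mathcal{B})$, with $\phi = \psi \circ T$.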

i. Suppose $\phi$ is an indicator function, that is, $\phi(x) = I_A(x)$ for some $A \in \mathcal{B}$. Define $\psi(y) = I_{T(A)}(y)$, which is $(\mathcal{F}, \mathcal{B})$-measurable. Then $(\psi \circ T)(x) = I_{T(A)}(T(x)) = I\{T(x) \in T(A)\} = I\{x \in A\} = I_A(x) = \phi(x)$. Therefore, the result holds when $\phi$ is an indicator function.

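ii. If $\phi(x) = \sum_{i=1}^n a_i I_{A_i}(x)$, then by i. there exist $\{\psi_i\}_{i=1}^n$ that are $(\mathcal{F}, \mathcal{B})$-measurable and such that $\psi_i \circ T(x) = I_{A_i}(x)$, $1 \le i \le n$. Then let $\psi = \sum_{i=1}^n a_i \psi_i$. This $\psi$ is $(\mathcal{F}, \mathcal{B})$-measurable and $\psi \circ T = \sum_{i=1}^n a_i \psi_i \circ T = \sum_{i=1}^n a_i I_{A_i} = \phi$.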

iii. For a general measurable function $\phi$, there exists a sequence of simple functions $\{\phi_i\}_{i \ge 1}$ which converges to $\phi$ (one can think about $\phi = \phi^+ - \phi^-$). Correspondingly, there exists a sequence of $(\mathcal{F}, \mathcal{B})$-measurable functions $\{\psi_i\}_{i \ge 1}$ such that $\phi_i = \psi_i \circ T$. Let $\psi = \lim_{n \to \infty} \psi_n$ (it is well-defined!). Then $\psi \circ T = \phi$ and $\psi$ is still $(\mathcal{F}, \mathcal{B})$-measurable.

In conclusion, for any measurable function $\phi$, there exists an $(\mathcal{F}, \mathcal{B})$-measurable function $\psi$ such that $\phi = \psi \circ T$.
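The construction can be illustrated on a finite toy case. The sketch below is not part of the assignment: the set `X`, the map `T` and the function `phi` are hypothetical choices, and `phi` is assumed to be constant on each level set of `T`, which is what makes `psi` well defined.

```python
# Hypothetical finite example: X = {0, ..., 5} and T(x) = x mod 3.
X = range(6)

def T(x):
    return x % 3

def phi(x):
    # Constant on each fibre {x : T(x) = t} by construction (an assumption).
    return (x % 3) ** 2 + 1

def build_psi(points, T, phi):
    """Define psi on the range of T by psi(t) = phi(x) for any x with T(x) = t."""
    psi = {}
    for x in points:
        t = T(x)
        value = phi(x)
        if t in psi and psi[t] != value:
            # phi takes two values on one fibre, so no psi with phi = psi o T exists.
            raise ValueError("phi is not constant on a fibre of T")
        psi[t] = value
    return psi

psi = build_psi(X, T, phi)

# Check the factorisation phi = psi o T pointwise.
factorises = all(psi[T(x)] == phi(x) for x in X)
```

If `phi` is replaced by a function that separates two points with the same value of `T`, `build_psi` raises an error, which mirrors the fact that such a function cannot be written as `psi ∘ T`.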

32. Let $\lambda = m + \nu$, where $m$ is the Lebesgue measure and $\nu$ is the counting measure on $\mathbb{N} = \{0, 1, 2, 3, 4, \dots\}$. Adopt the notation $P^X = P \circ X^{-1}$ for any random variable $X$. First of all, $P^{X_1} = p P^{W_1} + (1 - p) P^{Z_1}$. One could think of $X_1 = R_1 W_1 + (1 - R_1) Z_1$, where $R_1$ is independent of $(W_1, Z_1)$ and follows Bernoulli($p$). Secondly, we claim that

$$\frac{dP^{X_1}}{d\lambda}(x) = p \frac{1}{\sqrt{2\pi}} \exp\{-(x - \mu)^2/2\}\, I\{x \notin \mathbb{N}\} + (1 - p) \frac{e^{-\mu} \mu^x}{x!}\, I\{x \in \mathbb{N}\}, \quad \text{a.e.}(\lambda).$$

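This is because, for any $A \in \mathcal{B}(\mathbb{R})$,

$$\begin{aligned}
P^{X_1}(A) &= p P^{W_1}(A) + (1 - p) P^{Z_1}(A) \\
&= \int_A p \frac{1}{\sqrt{2\pi}} \exp\{-(x - \mu)^2/2\}\, dm + (1 - p) \int_A \frac{e^{-\mu} \mu^x}{x!}\, d\nu \\
&= \int_A p \frac{1}{\sqrt{2\pi}} \exp\{-(x - \mu)^2/2\}\, I\{x \notin \mathbb{N}\}\, d(m + \nu) + (1 - p) \int_A \frac{e^{-\mu} \mu^x}{x!}\, I\{x \in \mathbb{N}\}\, d(m + \nu).
\end{aligned}$$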

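Now denote $\delta_i = I\{X_i \notin \mathbb{N}\}$, so that $\delta_i = 1$ marks an observation from the normal component. Then

$$\begin{aligned}
\prod_{i=1}^n \frac{dP^{X_i}}{d\lambda}(X_i)
&= \prod_{i=1}^n \left\{ p \frac{1}{\sqrt{2\pi}} \exp\{-(X_i - \mu)^2/2\} \right\}^{\delta_i} \left\{ (1 - p) \frac{e^{-\mu} \mu^{X_i}}{X_i!} \right\}^{1 - \delta_i} \\
&= \exp\left\{ \mu \sum_{i=1}^n \delta_i X_i + \left[ \mu - \mu^2/2 + \log\{p/(1 - p)\} \right] \sum_{i=1}^n \delta_i \right\} \mu^{\sum_{i=1}^n (1 - \delta_i) X_i}\, (1 - p)^n e^{-n\mu} \\
&\quad \cdot \exp\left\{ -\sum_{i=1}^n \delta_i X_i^2/2 \right\} (2\pi)^{-\sum_{i=1}^n \delta_i/2} \prod_{i=1}^n (X_i!)^{\delta_i - 1}.
\end{aligned}$$

The first line of the last expression depends on the data only through $\sum_i \delta_i$, $\sum_i \delta_i X_i$ and $\sum_i (1 - \delta_i) X_i$; the second line does not involve the parameters.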

By the factorization theorem, one sufficient statistic, which satisfies both (a) and (b), is

$$\left( \sum_{i=1}^n \delta_i,\ \sum_{i=1}^n \delta_i X_i,\ \sum_{i=1}^n (1 - \delta_i) X_i \right).$$
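As a numerical sanity check of the factorization, the sketch below compares two hypothetical samples that share the same value of the statistic. It assumes, as in the density above, a mixture of N($\mu$, 1) with weight $p$ and Poisson($\mu$) with weight $1 - p$, and it takes the indicator in the statistic to be 1 for non-integer (normal) observations; the function names and sample values are illustrative only.

```python
import math

def in_N(x):
    """True when x is a nonnegative integer, i.e. x lies in N = {0, 1, 2, ...}."""
    return float(x).is_integer() and x >= 0

def log_density(x, p, mu):
    """Log of the density with respect to lambda = Lebesgue + counting measure on N."""
    if in_N(x):
        k = int(x)
        # Poisson(mu) part, weight 1 - p
        return math.log(1 - p) - mu + k * math.log(mu) - math.lgamma(k + 1)
    # N(mu, 1) part, weight p
    return math.log(p) - 0.5 * math.log(2 * math.pi) - 0.5 * (x - mu) ** 2

def log_likelihood(xs, p, mu):
    return sum(log_density(x, p, mu) for x in xs)

def sufficient_statistic(xs):
    """(sum d_i, sum d_i x_i, sum (1 - d_i) x_i) with d_i = 1 when x_i is not in N."""
    d = [0 if in_N(x) else 1 for x in xs]
    return (sum(d),
            sum(di * x for di, x in zip(d, xs)),
            sum((1 - di) * x for di, x in zip(d, xs)))

# Two hypothetical samples with the same value of the statistic.
sample_a = [0.5, 1.5, 1, 3]
sample_b = [0.25, 1.75, 2, 2]

# If the statistic is sufficient, the log-likelihood difference between the
# samples must not depend on the parameters (p, mu).
diff_1 = log_likelihood(sample_a, 0.3, 1.2) - log_likelihood(sample_b, 0.3, 1.2)
diff_2 = log_likelihood(sample_a, 0.7, 2.5) - log_likelihood(sample_b, 0.7, 2.5)
```

The two differences agree up to floating-point error, which is what the factorization predicts: the parameter-dependent factor is the same for both samples and cancels.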

33. The $P_\theta$ in this context really means $P_\theta^X$, that is, $P_\theta^X(\{x\}) = (1 - p) p^{x - \theta} I\{x - \theta \in \mathbb{N}\}$, where $\mathbb{N}$ is defined as in Problem 32.

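(a) Suppose $\{P_\theta\}$ can be dominated by some $\sigma$-finite measure $\mu$. Then it must also be dominated by a probability measure $\lambda$ (Lemma 52). As $P_\theta^X(\{\theta\}) = 1 - p > 0$ for all $\theta$, this means $\lambda(\{\theta\}) > 0$ for all $\theta$. This is not possible for a probability measure $\lambda$. Specifically, let $D_0 = \{x \in \mathbb{R} \mid \lambda(\{x\}) > 1\}$ and $D_n = \{x \in \mathbb{R} \mid 1/(n + 1) < \lambda(\{x\}) \le 1/n\}$, $n \ge 1$. Then $\mathbb{R} = \cup_{n \ge 0} D_n$. Since $\mathbb{R}$ is uncountable, there exists an uncountable set $D_{n_0}$. Every point of $D_{n_0}$ has mass greater than $1/(n_0 + 1)$, so $\lambda(D_{n_0}) = \infty$ as it contains infinitely many points, which contradicts $\lambda(\mathbb{R}) = 1$.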

(b) Consider $P(X_1 = x_1, \dots, X_n = x_n \mid X_{(1)} = t_1, S_n = t_2)$, where $X_{(1)}$ is the minimum order statistic of $X_1, \dots, X_n$ and $S_n = \sum_{i=1}^n X_i$. We assume that $t_1 \ge \theta$, since otherwise the conditioning set is empty. Secondly, this probability is 0 if $(x_1, \dots, x_n) \notin E(t_1, t_2)$, where

$$E(t_1, t_2) = \left\{ (k_1, \dots, k_n) \,\middle|\, \sum_{i=1}^n k_i = t_2,\ \min_{1 \le i \le n} k_i = t_1,\ k_i - \theta \in \mathbb{N} \right\}.$$

Thus

$$P(X_1 = x_1, \dots, X_n = x_n, X_{(1)} = t_1, S_n = t_2) = (1 - p)^n p^{t_2 - n\theta}\, I\{(x_1, \dots, x_n) \in E(t_1, t_2)\}.$$

Furthermore,

$$P(X_{(1)} = t_1, S_n = t_2) = \sum_{(k_1, \dots, k_n) \in E(t_1, t_2)} P(X_1 = k_1, \dots, X_n = k_n) = \sum_{(k_1, \dots, k_n) \in E(t_1, t_2)} (1 - p)^n p^{t_2 - n\theta}.$$

Consequently, for $(x_1, \dots, x_n) \in E(t_1, t_2)$,

$$P(X_1 = x_1, \dots, X_n = x_n \mid X_{(1)} = t_1, S_n = t_2) = 1/|E(t_1, t_2)|,$$

where $|E(t_1, t_2)|$ is the size of $E(t_1, t_2)$. Notice further that

$$\begin{aligned}
E(t_1, t_2) &= \left\{ (k_1, \dots, k_n) \,\middle|\, \sum_{i=1}^n (k_i - t_1) = t_2 - n t_1,\ k_i - t_1 \in \mathbb{N},\ \min_{1 \le i \le n} (k_i - t_1) = 0 \right\} \\
&= \left\{ (r_1 + t_1, \dots, r_n + t_1) \,\middle|\, \sum_{i=1}^n r_i = t_2 - n t_1,\ r_i \in \mathbb{N},\ \min_{1 \le i \le n} r_i = 0 \right\},
\end{aligned}$$

which implies that it is independent of $\theta$ and only depends on $t_1, t_2$.

Thus $(X_{(1)}, S_n)$ is sufficient for $\{P_\theta\}_{\theta \in \Theta}$.
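The argument in (b) can be checked by brute force for small cases. The sketch below is illustrative only: it assumes integer values of $\theta$ and small $n$ so that the support set can be enumerated, and the helper names `support_set`, `joint_pmf` and `conditional_pmf` are hypothetical.

```python
from itertools import product

def support_set(t1, t2, n, theta):
    """Enumerate E(t1, t2) directly from its definition in terms of theta."""
    total_shift = t2 - n * theta
    if total_shift < 0:
        return set()
    points = set()
    # Each k_i = theta + j_i with j_i in N; no j_i can exceed total_shift.
    for j in product(range(total_shift + 1), repeat=n):
        k = tuple(theta + ji for ji in j)
        if sum(k) == t2 and min(k) == t1:
            points.add(k)
    return points

def joint_pmf(k, theta, p):
    """P(X_1 = k_1, ..., X_n = k_n) for points k in the support."""
    n = len(k)
    return (1 - p) ** n * p ** (sum(k) - n * theta)

def conditional_pmf(x, t1, t2, theta, p):
    """P(X = x | X_(1) = t1, S_n = t2), computed from the joint pmf."""
    E = support_set(t1, t2, len(x), theta)
    if tuple(x) not in E:
        return 0.0
    return joint_pmf(x, theta, p) / sum(joint_pmf(k, theta, p) for k in E)

# n = 3, t1 = 2, t2 = 9: the set E and the conditional pmf should not change
# with theta (as long as theta <= t1) or with p.
E_by_theta = [support_set(2, 9, 3, theta) for theta in (0, 1, 2)]
cond_values = [conditional_pmf((2, 3, 4), 2, 9, theta, p)
               for theta in (0, 1, 2) for p in (0.2, 0.6)]
```

In this example the three enumerated sets coincide and every conditional probability equals $1/|E(t_1, t_2)|$, in line with the derivation.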

(c) When $\theta$ is known, $\Theta = (0, 1)$ and $P_\theta^X$ is dominated by the counting measure $\nu$, with R-N derivative

$$\frac{dP_\theta^X}{d\nu}(x) = (1 - p) p^{x - \theta} I\{x - \theta \in \mathbb{N}\}, \quad \text{a.e.}(\nu).$$

By the factorization theorem, $\sum_i X_i$ is a sufficient statistic.

34. First we claim that

$$\sigma(T) = \{C \in \mathcal{B}^k \mid \pi(C) = C,\ \forall \pi\}.$$

This is because permuting the coordinates of $x \in \mathbb{R}^k$ does not change its ordered values, that is, $T(\pi(x)) = T(x)$, $\forall \pi$. This suggests that the collection of $\sigma(T)$-measurable functions is

$$\{f : \mathbb{R}^k \to \mathbb{R} \mid f \text{ is a measurable function and } f \circ \pi = f,\ \forall \pi\}.$$

Now consider $Y(x) = \sum_\pi I_{\pi(B)}(x)/k!$, where $x = (x_1, \dots, x_k)'$. First of all, $Y(X)$ is $\sigma(T)$-measurable as $Y \circ \pi(x) = Y(x)$, $\forall \pi$. Secondly, for any $C \in \sigma(T)$,

$$\begin{aligned}
E(I_C Y(X)) &= \sum_\pi P(\pi(B) \cap C)/k! = \sum_\pi P(\pi(B) \cap \pi(C))/k! \\
&= \sum_\pi P(\pi(B \cap C))/k! = \sum_\pi P(B \cap C)/k! = P(B \cap C) = E(I_B I_C).
\end{aligned}$$

Therefore, $P(B \mid \sigma(T)) = Y(X)$, a.s.($P$). Note that $Y(x) = \sum_\pi I_B(\pi(x))/k!$ as well.
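The symmetrised function $Y$ is easy to evaluate for small $k$. The sketch below is only an illustration: the event `in_B` (here $B = \{x \in \mathbb{R}^3 : x_1 < x_2\}$) is a hypothetical choice, and the code uses the form $Y(x) = \sum_\pi I_B(\pi(x))/k!$.

```python
from itertools import permutations
from math import factorial

def symmetrize(indicator_B, x):
    """Y(x) = (1/k!) * sum over all coordinate permutations pi of I_B(pi(x))."""
    k = len(x)
    return sum(indicator_B(px) for px in permutations(x)) / factorial(k)

def in_B(x):
    # Hypothetical event B = {x in R^3 : x_1 < x_2}.
    return 1 if x[0] < x[1] else 0

x = (3.0, 1.0, 2.0)
y_value = symmetrize(in_B, x)

# Y is permutation invariant: evaluating it at any reordering of x gives
# the same number, so Y(X) depends on X only through the ordered values.
values_over_orbit = {symmetrize(in_B, px) for px in permutations(x)}
```

For a point with distinct coordinates, exactly half of the orderings put a smaller value first, so `y_value` is 0.5, and `values_over_orbit` contains a single element.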
