Keys


2011-01-27

STAT602X

Homework 1

Keys

1. Consider Section 1.4 of the typed outline (concerning the variance-bias trade-off in prediction).

Suppose that in a very simple problem with p = 1, the distribution P for the random pair (x, y) is

specified by

$x \sim \mathrm{U}(0, 1)$ and $y \mid x \sim \mathrm{N}(x^2, (1 + x))$

((1 + x) is the conditional variance of the output). Further, consider two possible sets of functions

S = {g} for use in creating predictors of y, namely

1. $S_1 = \{g \mid g(x) = a + bx \text{ for real numbers } a, b\}$, and

2. $S_2 = \left\{g \mid g(x) = \sum_{j=1}^{10} a_j I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right] \text{ for real numbers } a_j\right\}$.

Training data are N pairs (xi , yi ) iid P . Suppose that the fitting of elements of these sets is done

by

1. OLS (simple linear regression) in the case of S1 , and

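2. according to

$$\hat{a}_j = \begin{cases} \bar{y} & \text{if no } x_i \in \left(\frac{j-1}{10}, \frac{j}{10}\right] \\[4pt] \dfrac{1}{\#\left\{x_i \in \left(\frac{j-1}{10}, \frac{j}{10}\right]\right\}} \displaystyle\sum_{i \text{ with } x_i \in \left(\frac{j-1}{10}, \frac{j}{10}\right]} y_i & \text{otherwise} \end{cases}$$

in the case of $S_2$

to produce predictors $\hat{f}_1$ and $\hat{f}_2$.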

a) Find (analytically) the functions $g^*$ for the two cases. Use them to find the two expected squared model biases $E_x (E[y \mid x] - g^*(x))^2$. How do these compare for the two cases?

Note that $E[y \mid x] = x^2$, and I need to find $g^* = \arg\min_{g \in S} E_x (E[y \mid x] - g(x))^2$.

1. (2 pts) For $S_1$, I need to solve for $a$ and $b$ such that $E_x (x^2 - (a + bx))^2$ is minimized where $x \sim \mathrm{U}(0, 1)$. Let

$$h(a, b) \equiv E_x (x^2 - (a + bx))^2 = \int_0^1 (x^2 - (a + bx))^2 \, dx = a^2 + \tfrac{1}{3} b^2 + \tfrac{1}{5} - \tfrac{2}{3} a - \tfrac{1}{2} b + ab .$$

Set

$$\frac{\partial h}{\partial a} = 2a - \frac{2}{3} + b = 0 \quad \text{and} \quad \frac{\partial h}{\partial b} = \frac{2}{3} b - \frac{1}{2} + a = 0 .$$

I get $a = -\frac{1}{6}$ and $b = 1$, and the determinant of the matrix of second derivatives is $\frac{1}{3} > 0$. So, $g_1^*(x) = -\frac{1}{6} + x$ and

$$E_x (E[y \mid x] - g_1^*(x))^2 = \frac{1}{180} \approx 0.0056 .$$
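As a numerical cross-check of the $S_1$ solution, the following R sketch solves the two first-order conditions as a linear system and evaluates $h$ at the solution by quadrature. The names `H`, `rhs`, `sol`, and `h` are introduced here for illustration only and are not part of the original key.

```r
# First-order conditions:  2a + b = 2/3  and  a + (2/3) b = 1/2
H   <- matrix(c(2, 1, 1, 2/3), nrow = 2)   # matrix of second derivatives of h
rhs <- c(2/3, 1/2)
sol <- solve(H, rhs)                       # (a, b)

# h(a, b) = E_x (x^2 - (a + b x))^2 for x ~ U(0, 1), by numerical quadrature
h <- function(par) {
  integrate(function(x) (x^2 - (par[1] + par[2] * x))^2, 0, 1)$value
}

sol       # coefficients of g1*
det(H)    # positive (and H[1, 1] > 0), so the stationary point is a minimum
h(sol)    # expected squared model bias for S1
```

Because the integrand is a polynomial, the quadrature result should agree with the closed form to near machine precision.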

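2. (2 pts) For $S_2$, I need to solve for $a_j$ such that $E_x \left(x^2 - \sum_{j=1}^{10} a_j I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right]\right)^2$ is minimized where $x \sim \mathrm{U}(0, 1)$. This is equivalent to minimizing $\int_{(j-1)/10}^{j/10} (x^2 - a_j)^2 \, dx$ for each $j$. Let

$$h(a_j) \equiv \int_{(j-1)/10}^{j/10} (x^2 - a_j)^2 \, dx = a_j^2 \left(\tfrac{j}{10} - \tfrac{j-1}{10}\right) - \tfrac{2}{3} a_j \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) + \tfrac{1}{5} \left(\left(\tfrac{j}{10}\right)^5 - \left(\tfrac{j-1}{10}\right)^5\right) .$$

Set

$$\frac{dh}{da_j} = 2 a_j \left(\tfrac{j}{10} - \tfrac{j-1}{10}\right) - \tfrac{2}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) = 0 ,$$

I get $a_j = \frac{10}{3} \left(\left(\frac{j}{10}\right)^3 - \left(\frac{j-1}{10}\right)^3\right)$, and $\frac{d^2 h}{da_j^2} = \frac{1}{5} > 0$.

So, $g_2^*(x) = \sum_{j=1}^{10} \frac{10}{3} \left(\left(\frac{j}{10}\right)^3 - \left(\frac{j-1}{10}\right)^3\right) I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right]$ and

$$E_x (E[y \mid x] - g_2^*(x))^2 \approx 0.0011 .$$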

R Output

R> j <- 1:10
R> a.j <- 10 / 3 * ((j / 10)^3 - ((j - 1) / 10)^3)
R> h.a.j <- a.j^2 * (j / 10 - (j - 1) / 10) -
+   2 / 3 * a.j * ((j / 10)^3 - ((j - 1) / 10)^3) +
+   1/5 * ((j/10)^5 - ((j - 1)/10)^5)
R> sum(h.a.j)
[1] 0.001108889

(1 pt) The term $E_x (E[y \mid x] - g^*(x))^2$ is the average squared model bias; $g^*$ is the element of $S$ that comes closest to the conditional mean function $E[y \mid x]$ in this sense. From the above calculation, the $g^*$ of $S_2$ has a smaller average squared model bias than the $g^*$ of $S_1$ ($0.0011 < 0.0056$).

b) For the second case, find an analytical form for $E^T \hat{f}_2$ and then for the average squared estimation bias $E_x (E^T \hat{f}_2(x) - g_2^*(x))^2$. (Hints: What is the conditional distribution of the $y_i$ given that no $x_i \in \left(\frac{j-1}{10}, \frac{j}{10}\right]$? What is the conditional mean of $y$ given that $x \in \left(\frac{j-1}{10}, \frac{j}{10}\right]$?)

Note that the question gave the predictor $\hat{f}_2(x) = \sum_{j=1}^{10} \hat{a}_j I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right]$, where $x$ is a new observation, distinct from the training set that is implicit in the notation $\hat{f}_2$. Let $R_j = \left(\frac{j-1}{10}, \frac{j}{10}\right]$ for $j = 1, \ldots, 10$.


(i) For $E^T \hat{f}_2$, we define an indicator $I_j = I(\text{no } x_i \in R_j)$, which is a Bernoulli random variable: 1 with probability $\left(\frac{9}{10}\right)^N$, and 0 with probability $1 - \left(\frac{9}{10}\right)^N$. In the following, I use the notation $f(\cdots)$ for the pdf or pmf depending on the arguments.

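① (2 pts) For case $I_j = 1$, I have

$$\begin{aligned}
E^T(y_i \mid I_j = 1) &= \iint y_i f(y_i, x_i \mid I_j = 1) \, dy_i \, dx_i \\
&= \int_0^1 \int_{-\infty}^{\infty} y_i f(y_i \mid x_i, I_j = 1) f(x_i \mid I_j = 1) \, dy_i \, dx_i \\
&= \int_0^1 f(x_i \mid I_j = 1) \int_{-\infty}^{\infty} y_i f(y_i \mid x_i) \, dy_i \, dx_i \\
&= \int_{[0,1] \setminus R_j} \frac{10}{9} x_i^2 \, dx_i \\
&= \frac{10}{27} \left(1 - \left(\tfrac{j}{10}\right)^3 + \left(\tfrac{j-1}{10}\right)^3\right) .
\end{aligned}$$

Then, $E^T(\bar{y} \mid I_j = 1) = \frac{1}{N} \sum_{i=1}^{N} E^T(y_i \mid I_j = 1) = \frac{10}{27} \left(1 - \left(\frac{j}{10}\right)^3 + \left(\frac{j-1}{10}\right)^3\right)$.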

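② (2 pts) For case $I_j = 0$, I have

$$\begin{aligned}
E^T(y \mid I_j = 0) &= \iint y f(y, x \mid I_j = 0) \, dy \, dx \\
&= \int_0^1 \int_{-\infty}^{\infty} y f(y \mid x, I_j = 0) f(x \mid I_j = 0) \, dy \, dx \\
&= \int_0^1 f(x \mid I_j = 0) \int_{-\infty}^{\infty} y f(y \mid x) \, dy \, dx \\
&= \int_{R_j} 10 x^2 \, dx \\
&= \frac{10}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) .
\end{aligned}$$

Then,

$$\begin{aligned}
E^T\left(\frac{1}{\#\{x_i \in R_j\}} \sum_{i \text{ with } x_i \in R_j} y_i \,\Big|\, I_j = 0\right) &= \frac{1}{\#\{x_i \in R_j\}} \sum_{i \text{ with } x_i \in R_j} E^T(y_i \mid I_j = 0) \\
&= E^T(y \mid I_j = 0) = \frac{10}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) .
\end{aligned}$$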

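(1 pts) Combining all above, for all $j$,

$$\begin{aligned}
E^T(\hat{a}_j) &= P^T(I_j = 1) E^T(\hat{a}_j \mid I_j = 1) + P^T(I_j = 0) E^T(\hat{a}_j \mid I_j = 0) \\
&= \left(\tfrac{9}{10}\right)^N \frac{10}{27} \left(1 - \left(\tfrac{j}{10}\right)^3 + \left(\tfrac{j-1}{10}\right)^3\right) + \left(1 - \left(\tfrac{9}{10}\right)^N\right) \frac{10}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) .
\end{aligned}$$

Then,

$$E^T \hat{f}_2(x) = \sum_{j=1}^{10} E^T(\hat{a}_j) I\left[\tfrac{j-1}{10} < x \le \tfrac{j}{10}\right] . \qquad (1)$$

(ii) (2 pts) Let $I_j(x) = I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right] \sim \mathrm{Bernoulli}\left(\frac{1}{10}\right)$. From the answer of part a), we have $g_2^*(x) = \frac{10}{3} \sum_{j=1}^{10} \left(\left(\frac{j}{10}\right)^3 - \left(\frac{j-1}{10}\right)^3\right) I_j(x)$, so for $N = 50$,

$$\begin{aligned}
E_x (E^T \hat{f}_2(x) - g_2^*(x))^2 &= E_x \left[\sum_{j=1}^{10} E^T(\hat{a}_j) I_j(x) - \frac{10}{3} \sum_{j=1}^{10} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right) I_j(x)\right]^2 \\
&= E_x \left[\sum_{j=1}^{10} I_j(x) \left[E^T(\hat{a}_j) - \frac{10}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right)\right]\right]^2 \\
&= \sum_{j=1}^{10} \left(E^T(\hat{a}_j) - \frac{10}{3} \left(\left(\tfrac{j}{10}\right)^3 - \left(\tfrac{j-1}{10}\right)^3\right)\right)^2 E_x I_j(x)^2 \\
&= 2.878469 \times 10^{-6}
\end{aligned}$$

where $E_x I_j(x)^2 = \frac{1}{10}$ and $E_x I_j(x) I_k(x) = 0$ for all $j \ne k$.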

R Output

> j <- 1:10

> N <- 50

> p.N <- (9 / 10)^N

> d.rl <- (j / 10)^3 - ((j - 1) / 10)^3

> E.a.j <- p.N * 10 / 27 * (1 - d.rl) + (1 - p.N) * 10 / 3 * d.rl

> # E.a.j <- (p.N * (1 - d.rl) + (1 - p.N) * d.rl) / 3

> sum((E.a.j - 10 / 3 * d.rl)^2 / 10)

[1] 2.878469e-06
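The analytic form of $E^T(\hat{a}_j)$ can be sanity-checked by simulation. The sketch below is not part of the original key: it uses a hypothetical small training-set size (`N <- 10`) so that empty intervals occur often and both branches of the formula are exercised, and the helper `fit.bins` is an illustrative re-implementation of the $S_2$ fitting rule.

```r
set.seed(602)      # arbitrary seed
N <- 10            # hypothetical small N so that empty intervals are common
B <- 20000         # number of simulated training sets
j <- 1:10

# Fit S2: interval means, with the overall mean used for empty intervals
fit.bins <- function(x, y) {
  a.hat <- rep(mean(y), 10)
  bin <- ceiling(x * 10)                 # x in ((j-1)/10, j/10]  ->  bin j
  m <- tapply(y, bin, mean)              # means of the non-empty intervals
  a.hat[as.integer(names(m))] <- as.numeric(m)
  a.hat
}

# Each column of sims holds the 10 fitted a.hat_j for one training set
sims <- replicate(B, {
  x <- runif(N)
  y <- rnorm(N, mean = x^2, sd = sqrt(1 + x))
  fit.bins(x, y)
})
E.a.j.mc <- rowMeans(sims)

# Analytic E^T(a.hat_j) from part b), evaluated at the same N
p.N  <- (9 / 10)^N
d.rl <- (j / 10)^3 - ((j - 1) / 10)^3
E.a.j <- p.N * 10 / 27 * (1 - d.rl) + (1 - p.N) * 10 / 3 * d.rl

round(cbind(j, analytic = E.a.j, monte.carlo = E.a.j.mc), 3)
```

The two columns should agree up to Monte Carlo error, which shrinks as `B` grows.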

c) For the first case, simulate at least 1000 training data sets of size $N = 50$ and do OLS on each one to get corresponding $\hat{f}$'s. Average those to get an approximation for $E^T \hat{f}_1$. (If you can do this analytically, so much the better!) Use this approximation and analytical calculation to find the average squared estimation bias $E_x (E^T \hat{f}_1(x) - g_1^*(x))^2$ for this case.

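(2 pts) Let $E^T \hat{f}_1(x) \equiv \tilde{a} + \tilde{b} x$ where $\tilde{a} = \frac{1}{1000} \sum_{k=1}^{1000} a_k^{OLS} \approx -0.1661305$ and $\tilde{b} = \frac{1}{1000} \sum_{k=1}^{1000} b_k^{OLS} \approx 0.9989991$ are empirically estimated from simulations. From the answer of part a), we have the analytic solution $g_1^*(x) = -\frac{1}{6} + x$. Then,

$$\begin{aligned}
E_x (E^T \hat{f}_1(x) - g_1^*(x))^2 &= E_x \left((\tilde{a} + \tilde{b} x) - \left(-\tfrac{1}{6} + x\right)\right)^2 \\
&= \left(\tilde{a} + \tfrac{1}{6}\right)^2 + 2 \left(\tilde{a} + \tfrac{1}{6}\right)(\tilde{b} - 1) E_x x + (\tilde{b} - 1)^2 E_x x^2 \\
&= \left(\tilde{a} + \tfrac{1}{6}\right)^2 + \left(\tilde{a} + \tfrac{1}{6}\right)(\tilde{b} - 1) + (\tilde{b} - 1)^2 / 3 \qquad (\because E_x x = 1/2, \; E_x x^2 = 1/3) \\
&\approx 8.47644 \times 10^{-8}
\end{aligned}$$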

R Output

R> set.seed(60210113)
R> B <- 10000
R> N <- 50
R> ret <- matrix(0, nrow = B, ncol = 2)
R> for(b in 1:B){
+   data.simulated <- generate.pair.xy(N)
+   ret[b, ] <- lm(y ~ x, data = data.simulated)$coef
+ }
R> (ret <- colMeans(ret))
[1] -0.1661305 0.9989991
R> (ret[1] + 1/6)^2 + (ret[1] + 1/6) * (ret[2] - 1) + (ret[2] - 1)^2 / 3
[1] 8.47644e-08

d) How do your answers for b) and c) compare for a training set of size $N = 50$?

(1 pts) The quantity $E_x (E^T \hat{f}(x) - g^*(x))^2$ measures how far the fitted predictor is, on average over training sets, from the best approximating function $g^*(x)$ among the elements of $S$. Here $E_x (E^T \hat{f}_1(x) - g_1^*(x))^2 \approx 8.47644 \times 10^{-8}$ is smaller than $E_x (E^T \hat{f}_2(x) - g_2^*(x))^2 \approx 2.878469 \times 10^{-6}$, indicating that for sample size $N = 50$ the estimation method for $S_1$ (OLS) yields a smaller difference than the method for $S_2$ (empirical averages on 10 equal intervals). When the sample size is small, the simulated value of $E_x (E^T \hat{f}_1(x) - g_1^*(x))^2$ will vary considerably. The number of simulations also affects the result, so the conclusion may differ.
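To make the remark about the number of simulations concrete, one can look at the Monte Carlo standard errors of the averaged OLS coefficients. The sketch below is an illustration under assumed settings (`B <- 1000`, an arbitrary seed), not the key's own run: if the standard errors are much larger than $|\tilde{a} + \frac{1}{6}|$ and $|\tilde{b} - 1|$, the estimated squared estimation bias for $S_1$ is dominated by simulation noise.

```r
set.seed(602)   # arbitrary seed
B <- 1000       # number of simulated training sets
N <- 50         # training set size

# One row of OLS coefficients (intercept, slope) per simulated training set
coefs <- t(replicate(B, {
  x <- runif(N)
  y <- rnorm(N, mean = x^2, sd = sqrt(1 + x))
  coef(lm(y ~ x))
}))

mc.mean <- colMeans(coefs)                 # approximates (a.tilde, b.tilde)
mc.se   <- apply(coefs, 2, sd) / sqrt(B)   # Monte Carlo standard errors

rbind(mean = mc.mean, se = mc.se, target = c(-1/6, 1))
```

Increasing `B` shrinks the standard errors at the rate $1/\sqrt{B}$, so a comparison of two very small bias values needs a correspondingly large number of replications.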

e) (This may not be trivial, I haven't completely thought it through.) Use whatever combination of analytical calculation, numerical analysis, and simulation you need to use (at every turn preferring analytics to numerics to simulation) to find the expected prediction variances $E_x \mathrm{Var}^T(\hat{f}(x))$ for the two cases for training set size $N = 50$.

1. (2 pts) For $\hat{f}_1(x)$, I can generate $B = 1000$ training sets, each of size $N = 50$. I apply OLS to every training set to obtain 1000 estimates of $a$ and $b$, then estimate $\mathrm{Var}^T(\hat{a})$, $\mathrm{Var}^T(\hat{b})$ and $\mathrm{Cov}^T(\hat{a}, \hat{b})$ by Monte Carlo. Therefore,

$$\begin{aligned}
E_x \mathrm{Var}^T(\hat{f}_1(x)) &= E_x \left(\mathrm{Var}^T(\hat{a}) + \mathrm{Var}^T(\hat{b}) x^2 + 2 x \, \mathrm{Cov}^T(\hat{a}, \hat{b})\right) \\
&= \mathrm{Var}^T(\hat{a}) + \tfrac{1}{3} \mathrm{Var}^T(\hat{b}) + \mathrm{Cov}^T(\hat{a}, \hat{b})
\end{aligned}$$

where $E_x x = 1/2$ and $E_x x^2 = 1/3$. From the simulation output, I get $E_x \mathrm{Var}^T(\hat{f}_1(x)) \approx 0.06052453$.

2. (2 pts)

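For $\hat{f}_2(x)$, I can apply similar steps as for $\hat{f}_1$. Let $I_j(x) = I\left[\frac{j-1}{10} < x \le \frac{j}{10}\right]$, which is constant with respect to the measure of $T$, so

$$\begin{aligned}
E_x \mathrm{Var}^T(\hat{f}_2(x)) &= E_x \mathrm{Var}^T\left(\sum_{j=1}^{10} \hat{a}_j I_j(x)\right) \\
&= E_x \left(\sum_{j=1}^{10} I_j(x)^2 \mathrm{Var}^T(\hat{a}_j) + 2 \sum_{j<k} I_j(x) I_k(x) \mathrm{Cov}^T(\hat{a}_j, \hat{a}_k)\right) \\
&= \sum_{j=1}^{10} E_x (I_j(x)^2) \mathrm{Var}^T(\hat{a}_j) \qquad (\because E_x (I_j(x) I_k(x)) = 0, \; \forall j \ne k) \\
&= \frac{1}{10} \sum_{j=1}^{10} \mathrm{Var}^T(\hat{a}_j) \qquad (I_j(x) \sim \mathrm{Bernoulli}(1/10))
\end{aligned}$$

Therefore, I only need Monte Carlo to estimate $\mathrm{Var}^T(\hat{a}_j)$ for all $j$. From the simulation output, I get $E_x \mathrm{Var}^T(\hat{f}_2(x)) \approx 0.3777918$.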

R Output

R> generate.pair.xy <- function(N){
+   x <- runif(N)
+   y <- rnorm(N, mean = x^2, sd = sqrt(1 + x))
+   data.frame(x = x, y = y)
+ }
R> estimate.a.j <- function(da.org){
+   a.j <- rep(mean(da.org$y), 10)
+   for(j in 1:10){
+     id <- da.org$x > (j - 1) / 10 & da.org$x <= j / 10
+     if(any(id)){
+       a.j[j] <- mean(da.org$y[id])
+     }
+   }
+   a.j
+ }
R> set.seed(60210115)
R> B <- 1000
R> N <- 50
R> ret.f.1 <- matrix(0, nrow = B, ncol = 2)
R> ret.f.2 <- matrix(0, nrow = B, ncol = 10)
R> for(b in 1:B){
+   data.simulated <- generate.pair.xy(N)
+   ret.f.1[b, ] <- lm(y ~ x, data = data.simulated)$coef
+   ret.f.2[b, ] <- estimate.a.j(data.simulated)
+ }
R> var(ret.f.1[, 1]) + var(ret.f.1[, 2]) / 3 + cov(ret.f.1[, 1], ret.f.1[, 2])
[1] 0.06052453
R> mean(apply(ret.f.2, 2, var))
[1] 0.3777918

f) In sum, which of the two predictors here has the best value of Err for $N = 50$?

(1 pts) From the lecture note, I have

$$\begin{aligned}
\mathrm{Err} &\equiv E_x \mathrm{Err}(x) \\
&= E_x \mathrm{Var}^T \hat{f}(x) + E_x (E^T \hat{f}(x) - E[y \mid x])^2 + E_x \mathrm{Var}[y \mid x] \\
&= E_x \mathrm{Var}^T \hat{f}(x) + E_x (E^T \hat{f}(x) - g^*(x))^2 + E_x (E[y \mid x] - g^*(x))^2 + E_x \mathrm{Var}[y \mid x] .
\end{aligned}$$

The fourth term $E_x \mathrm{Var}[y \mid x]$ is the same for the two cases, so comparing the sum of the first three terms is sufficient. From the previous calculations, I have

$$\mathrm{Err}_1 \approx 0.06052453 + 8.47644 \times 10^{-8} + 0.0056 + c = 0.06612461 + c$$

and

$$\mathrm{Err}_2 \approx 0.3777918 + 2.878469 \times 10^{-6} + 0.001108889 + c = 0.3789036 + c$$

where $c = E_x (1 + x) = 1.5$ is a constant. The errors are dominated by the first term $E_x \mathrm{Var}^T \hat{f}(x)$, and $\mathrm{Err}_1 < \mathrm{Err}_2$ shows that $S_1$ with OLS estimation provides better prediction, with smaller error on average, than $S_2$ with empirical-average estimation on 10 equal intervals.
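The bookkeeping for the two Err values can be reproduced in a few lines of R. This is only a sketch that re-adds the numbers reported in parts a), b), c), and e); the variable names are illustrative, and the $S_1$ model bias is entered as the exact value $1/180$ rather than the rounded 0.0056, so the last digits differ slightly from a hand sum.

```r
# Terms copied from the results reported in parts a), b), c), and e)
var.term   <- c(S1 = 0.06052453, S2 = 0.3777918)    # E_x Var^T f.hat(x)
est.bias   <- c(S1 = 8.47644e-8, S2 = 2.878469e-6)  # squared estimation bias
model.bias <- c(S1 = 1 / 180,    S2 = 0.001108889)  # squared model bias

# Common noise term E_x Var[y | x] = E_x (1 + x) for x ~ U(0, 1)
noise <- integrate(function(x) 1 + x, 0, 1)$value

err <- var.term + est.bias + model.bias + noise
err
names(which.min(err))   # predictor with the smaller Err
```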

2. Two files provided at http://thirteen-01.stat.iastate.edu/snoweye/stat602x/ with respectively 50 and then 1000 pairs (xi , yi ) were generated according to P in Problem 1. Use 10-fold

cross validation to see which of the two predictors in Problem 1 looks most likely to be effective.

(The data sets are not sorted, so you may treat successively numbered groups of 1/10th of the

training cases as your K = 10 randomly created pieces of the training set.)

(10 pts) The two datasets were originally generated by

R Code

set.seed(6021012)

generate.pair.xy <- function(N){

x <- runif(N)

y <- rnorm(N, mean = x^2, sd = sqrt(1 + x))

data.frame(x = x, y = y)

}

data.hw1.Q2.set1 <- generate.pair.xy(50)

data.hw1.Q2.set2 <- generate.pair.xy(1000)

write.table(data.hw1.Q2.set1, file = "data.hw1.Q2.set1.txt",

quote = FALSE, sep = "\t", row.names = FALSE)

write.table(data.hw1.Q2.set2, file = "data.hw1.Q2.set2.txt",

quote = FALSE, sep = "\t", row.names = FALSE)

I use 10-fold cross-validation to compute ”corss-validation error”, given by the lecture

–7–

2011-01-27

STAT602X

Homework 1

Keys

note,

N

)2

1 ∑ ( ˆk(i)

ˆ

f (xi ) − yi

CV (f ) =

N i=1

where k(i) is the index of the piece T k training set. From the output given in the following,

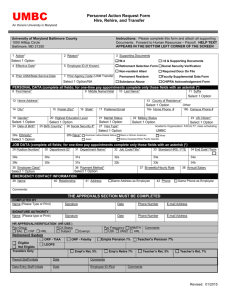

for N = 50, the

err1 = 1.911028 and err2 = 2.776087.

For N = 1000, the

err1 = 1.545203 and err2 = 1.562407.

The errors decrease as N increases and the case S1 is better choice. As the same size increases,

the OLS results S1 may be slightly better than the results of the empirical average calS2 after

N = 10000, and this conclusion may depend on the number of partitions on [0, 1].

[Figure: "10-fold cross validation", err versus N for OLS and the empirical average.]

R Output

R> estimate.a.j <- function(da.org){
+   a.j <- rep(mean(da.org$y), 10)
+   for(j in 1:10){
+     id <- da.org$x > (j - 1) / 10 & da.org$x <= j / 10
+     if(any(id)){
+       a.j[j] <- mean(da.org$y[id])
+     }
+   }
+   a.j
+ }
R> CV <- function(da.org, n.fold = 10){
+   N <- nrow(da.org)
+   N.test <- ceiling(N / n.fold)
+   err.1 <- rep(NA, N)
+   err.2 <- rep(NA, N)
+
+   for(f in 1:n.fold){
+     if(f < n.fold){
+       id.test <- (1:N.test) + (f - 1) * N.test
+     } else{
+       id.test <- (1 + (n.fold - 1) * N.test):N
+     }
+     da.test <- da.org[id.test,]
+     da.train <- da.org[-id.test,]
+
+     ret.lm <- lm(y ~ x, data = da.train)$coef
+     ret.f.1 <- cbind(1, da.test$x) %*% ret.lm
+     err.1[id.test] <- (ret.f.1 - da.test$y)^2
+
+     ret.a.j <- estimate.a.j(da.train)
+     ret.f.2 <- ret.a.j[ceiling(da.test$x * 10)]
+     err.2[id.test] <- (ret.f.2 - da.test$y)^2
+   }
+
+   list(err.1 = mean(err.1), err.2 = mean(err.2))
+ }
R> da1 <- read.table("data.hw1.Q2.set1.txt", header = TRUE, sep = "\t", quote = "")
R> da2 <- read.table("data.hw1.Q2.set2.txt", header = TRUE, sep = "\t", quote = "")
R> CV(da1)
$err.1
[1] 1.911028

$err.2
[1] 2.776087

R> CV(da2)
$err.1
[1] 1.545203

$err.2
[1] 1.562407

3. (This is another calculation intended to provide intuition in the direction that shows data in $\mathbb{R}^p$ are necessarily sparse.) Let $F_p(t)$ and $f_p(t)$ be respectively the $\chi^2_p$ cdf and pdf. Consider the $\mathrm{MVN}_p(0, I)$ distribution and $Z_1, Z_2, \ldots, Z_N$ iid with this distribution. With

$$M = \min\{\|Z_i\| \mid i = 1, 2, \ldots, N\}$$

write out a one-dimensional integral involving $F_p(t)$ and $f_p(t)$ giving $EM$. Evaluate this mean for $N = 100$ and $p = 1, 5, 10$, and $20$ either numerically or using simulation.

(10 pts) Given the question setting, $\|Z_i\|^2 \overset{iid}{\sim} \chi^2_p$ has cdf $F_p(t)$ and pdf $f_p(t)$, where $t > 0$ is an observed value of $\|Z_i\|^2$. From the order statistics, the pdf of $M$ is $f_M(m) = N f_p(m^2) (1 - F_p(m^2))^{N-1} |J|$ where $|J| = 2m$. Hence, the mean of $M$ is

$$EM = \int_0^{\infty} 2 m^2 N f_p(m^2) (1 - F_p(m^2))^{N-1} \, dm .$$

This can be done by Monte Carlo or numerical integration.
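For the numerical-integration route, a minimal sketch with base R's `integrate`, `dchisq`, and `pchisq` is given here; it is an addition for illustration, not the key's own code. The second computation uses the standard identity $EM = \int_0^\infty P(M > m) \, dm = \int_0^\infty (1 - F_p(m^2))^N \, dm$ for a nonnegative random variable as a cross-check; the names `em.density` and `em.survival` are hypothetical.

```r
N <- 100
p.values <- c(1, 5, 10, 20)

# E M from the density of M: 2 m^2 N f_p(m^2) (1 - F_p(m^2))^(N - 1)
em.density <- sapply(p.values, function(p) {
  integrand <- function(m) {
    2 * m^2 * N * dchisq(m^2, df = p) *
      pchisq(m^2, df = p, lower.tail = FALSE)^(N - 1)
  }
  integrate(integrand, lower = 0, upper = Inf)$value
})

# Cross-check: E M as the integral of P(M > m) = (1 - F_p(m^2))^N
em.survival <- sapply(p.values, function(p) {
  integrate(function(m) pchisq(m^2, df = p, lower.tail = FALSE)^N,
            lower = 0, upper = Inf)$value
})

data.frame(p = p.values, density.form = em.density, survival.form = em.survival)
```

The two columns should agree to within the quadrature tolerance and can be compared with simulated means for the same $N$ and $p$.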

R Output

R> set.seed(6021013)
R> B <- 1000
R> DF <- c(1, 5, 10, 20, 50)
R> ret.100 <- matrix(0, nrow = B, ncol = length(DF))
R> for(df in 1:length(DF)){
+   for(b in 1:B){
+     ret.100[b, df] <- sqrt(min(rchisq(100, DF[df])))
+   }
+ }
R> ret.5000 <- matrix(0, nrow = B, ncol = length(DF))
R> for(df in 1:length(DF)){
+   for(b in 1:B){
+     ret.5000[b, df] <- sqrt(min(rchisq(5000, DF[df])))
+   }
+ }
R> data.frame(p = DF, mean.100 = colMeans(ret.100), mean.5000 = colMeans(ret.5000))
   p   mean.100    mean.5000
1  1 0.01220739 0.0002436844
2  5 0.67931648 0.3014548833
3 10 1.52105314 0.9698842615
4 20 2.75772516 2.1245447477
5 50 5.32947136 4.6139640221

From the output, we can see that the mean of the minimum distance from $N = 100$ observations to the origin increases as $p$ increases. The mean for $p = 20$ is more than 200 times the mean for $p = 1$. This quantity $EM$ should go to 0 as $N \to \infty$, and from the output it probably needs more samples as $p$ increases. $\mathbb{R}^p$ is sparse.

Total: 40 pts (Q1: 20, Q2: 10, Q3: 10). Any reasonable solutions are acceptable. Some results may be different due to Monte Carlo simulation.