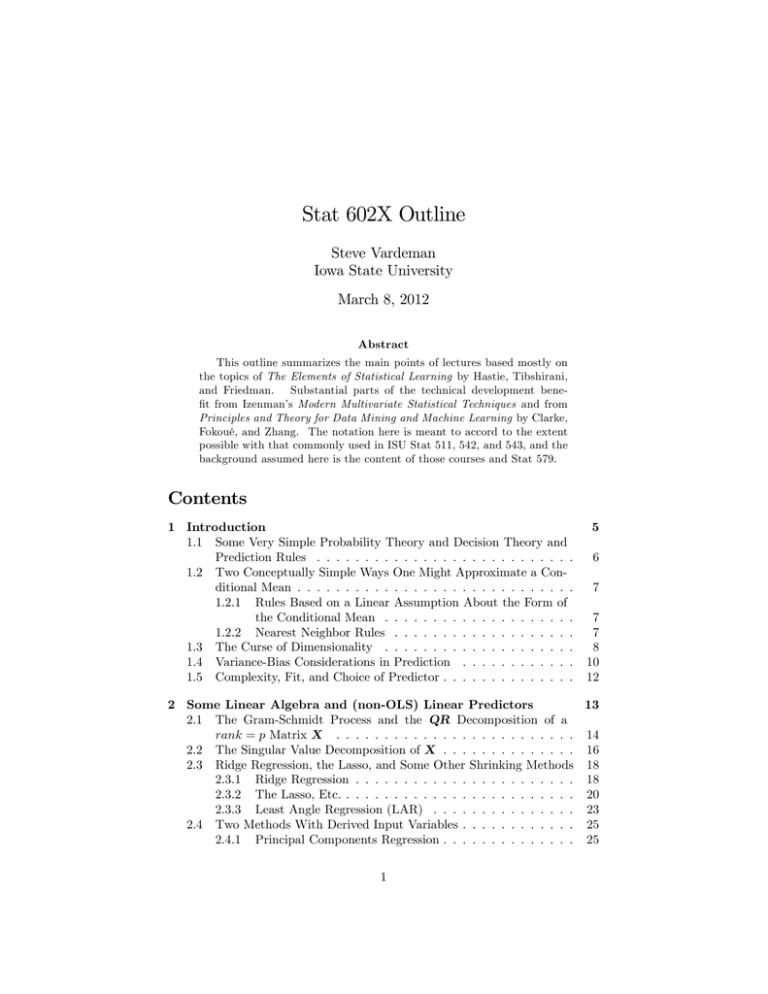

Stat 602X Outline Steve Vardeman Iowa State University March 8, 2012

Abstract

This outline summarizes the main points of lectures based mostly on the topics of The Elements of Statistical Learning by Hastie, Tibshirani, and Friedman. Substantial parts of the technical development benefit from Izenman’s Modern Multivariate Statistical Techniques and from Principles and Theory for Data Mining and Machine Learning by Clarke, Fokoué, and Zhang. The notation here is meant to accord to the extent possible with that commonly used in ISU Stat 511, 542, and 543, and the background assumed here is the content of those courses and Stat 579.

Contents

1 Introduction
  1.1 Some Very Simple Probability Theory and Decision Theory and Prediction Rules
  1.2 Two Conceptually Simple Ways One Might Approximate a Conditional Mean
    1.2.1 Rules Based on a Linear Assumption About the Form of the Conditional Mean
    1.2.2 Nearest Neighbor Rules
  1.3 The Curse of Dimensionality
  1.4 Variance-Bias Considerations in Prediction
  1.5 Complexity, Fit, and Choice of Predictor

2 Some Linear Algebra and (non-OLS) Linear Predictors
  2.1 The Gram-Schmidt Process and the QR Decomposition of a rank = p Matrix X
  2.2 The Singular Value Decomposition of X
  2.3 Ridge Regression, the Lasso, and Some Other Shrinking Methods
    2.3.1 Ridge Regression
    2.3.2 The Lasso, Etc.
    2.3.3 Least Angle Regression (LAR)
  2.4 Two Methods With Derived Input Variables
    2.4.1 Principal Components Regression
    2.4.2 Partial Least Squares Regression

3 Linear Prediction Using Basis Functions
  3.1 p = 1 Wavelet Bases
  3.2 p = 1 Piecewise Polynomials and Regression Splines
  3.3 Basis Functions and p-Dimensional Inputs
    3.3.1 Multi-Dimensional Regression Splines (Tensor Bases)
    3.3.2 MARS (Multivariate Adaptive Regression Splines)

4 Smoothing Splines, Regularization, RKHS’s, and Bayes Prediction
  4.1 p = 1 Smoothing Splines
  4.2 Multi-Dimensional Smoothing Splines
  4.3 Reproducing Kernel Hilbert Spaces and Penalized/Regularized Fitting
    4.3.1 RKHS’s and p = 1 Cubic Smoothing Splines
    4.3.2 What Seems to be Possible Beginning from Linear Functionals and Linear Differential Operators for p = 1
    4.3.3 What Seems Common Beginning From a Kernel
  4.4 Gaussian Process "Priors," Bayes Predictors, and RKHS’s

5 Kernel Smoothing Methods
  5.1 One-dimensional Kernel Smoothers
  5.2 Kernel Smoothing in p Dimensions

6 High-Dimensional Use of Low-Dimensional Smoothers
  6.1 Additive Models and Other Structured Regression Functions
  6.2 Projection Pursuit Regression

7 Highly Flexible Non-Linear Parametric Regression Methods
  7.1 Neural Network Regression
    7.1.1 The Back-Propagation Algorithm
    7.1.2 Formal Regularization of Fitting
  7.2 Radial Basis Function Networks

8 More on Understanding and Predicting Predictor Performance
  8.1 Optimism of the Training Error
  8.2 Cp, AIC and BIC
    8.2.1 Cp and AIC
    8.2.2 BIC
  8.3 Cross-Validation Estimation of Err
  8.4 Bootstrap Estimation of Err

9 Predictors Built on Bootstrap Samples, Model Averaging, and Stacking
  9.1 Predictors Built on Bootstrap Samples
    9.1.1 Bagging
    9.1.2 Bumping
  9.2 Model Averaging and Stacking
    9.2.1 Bayesian Model Averaging
    9.2.2 Stacking

10 Linear (and a Bit on Quadratic) Methods of Classification
  10.1 Linear (and a bit on Quadratic) Discriminant Analysis
  10.2 Logistic Regression
  10.3 Separating Hyperplanes

11 Support Vector Machines
  11.1 The Linearly Separable Case
  11.2 The Linearly Non-separable Case
  11.3 SVM’s and Kernels
    11.3.1 Heuristics
    11.3.2 An Optimality Argument
  11.4 Other Support Vector Stuff

12 Trees and Related Methods
  12.1 Regression and Classification Trees
  12.2 Random Forests
  12.3 PRIM (Patient Rule Induction Method)

13 Boosting
  13.1 The AdaBoost Algorithm for 2-Class Classification
  13.2 Why AdaBoost Might Be Expected to Work
    13.2.1 Expected "Exponential Loss" for a Function of x
    13.2.2 Sequential Search for a g(x) With Small Expected Exponential Loss and the AdaBoost Algorithm
  13.3 Other Forms of Boosting
    13.3.1 SEL
    13.3.2 AEL (and Binary Regression Trees)
    13.3.3 K-Class Classification
  13.4 Some Issues (More or Less) Related to Boosting and Use of Trees as Base Predictors

14 Prototype and Nearest Neighbor Methods of Classification

15 Some Methods of Unsupervised Learning
  15.1 Association Rules/Market Basket Analysis
    15.1.1 The "Apriori Algorithm" for the Case of Singleton Sets Sj
    15.1.2 Using 2-Class Classification Methods to Create Rectangles
  15.2 Clustering
    15.2.1 Partitioning Methods ("Centroid"-Based Methods)
    15.2.2 Hierarchical Methods
    15.2.3 (Mixture) Model-Based Methods
    15.2.4 Self-Organizing Maps
  15.3 Principal Components and Related Ideas
    15.3.1 "Ordinary" Principal Components
    15.3.2 Principal Curves and Surfaces
    15.3.3 Kernel Principal Components
    15.3.4 "Sparse" Principal Components
    15.3.5 Non-negative Matrix Factorization
    15.3.6 Archetypal Analysis
    15.3.7 Independent Component Analysis
    15.3.8 Spectral Clustering
  15.4 Multi-Dimensional Scaling
  15.5 Document Features and String Kernels for Text Processing
  15.6 Google PageRanks

16 Kernel Mechanics

1 Introduction

The course is (mostly) about "supervised" machine learning for statisticians. The fundamental issue here is prediction, usually with a fairly large number of predictors and a large number of cases upon which to build a predictor. For $\mathbf{x} \in \Re^p$ an input vector (a vector of predictors) with $j$th coordinate $x_j$ and $y$ some output value (usually univariate, and when it’s not, we’ll indicate so with bold face) the object is to use $N$ observed $(\mathbf{x}, y)$ pairs

\[ (\mathbf{x}_1, y_1), (\mathbf{x}_2, y_2), \ldots, (\mathbf{x}_N, y_N) \]

called training data to create an effective prediction rule

\[ \hat{y} = \hat{f}(\mathbf{x}) \]

"Supervised" learning means that the training data include values of the output variable. ("Unsupervised" learning means that there is no particular variable designated as the output variable, and one is looking for patterns in the training data $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_N$, like clusters or low-dimensional structure.)

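Here we will write

\[
\underset{N \times p}{\mathbf{X}} = \begin{pmatrix} \mathbf{x}_1' \\ \mathbf{x}_2' \\ \vdots \\ \mathbf{x}_N' \end{pmatrix}, \quad
\underset{N \times 1}{\mathbf{Y}} = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_N \end{pmatrix}, \quad \text{and} \quad \mathbf{T} = (\mathbf{X}, \mathbf{Y})
\]

for the training data, and when helpful (to remind of the size of the training set) add "$N$" subscripts.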

A primary difference between a "machine learning" point of view and that common in basic graduate statistics courses is an ambivalence toward making statements concerning parameters of any models used in the invention of prediction rules (including those that would describe the amount of variation in observables one can expect the model to generate). Standard statistics courses often conceive of those parameters as encoding scientific knowledge about some underlying data-generating mechanism or real-world phenomenon. Machine learners don’t seem to care much about these issues, but rather are concerned about "how good" a prediction rule is.

An output variable might take values in $\Re$ or in some finite set $G = \{1, 2, \ldots, K\}$ or $G = \{0, 1, \ldots, K-1\}$. In this latter case, it can be notationally convenient to replace a $G$-valued output $y$ with $K$ indicator variables

\[ y_j = I[y = j] \]

(of course) each taking real values. This replaces the original $y$ with the vector

\[
\mathbf{y} = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_K \end{pmatrix}
\quad \text{or} \quad
\mathbf{y} = \begin{pmatrix} y_0 \\ y_1 \\ \vdots \\ y_{K-1} \end{pmatrix}
\tag{1}
\]

taking values in $\{0, 1\}^K \subset \Re^K$.

1.1 Some Very Simple Probability Theory and Decision Theory and Prediction Rules

For purposes of appealing to general theory that can suggest sensible forms for prediction rules, suppose that the random pair $(\mathbf{x}', y)$ has ($(p+1)$-dimensional joint) distribution $P$. (Until further notice all expectations refer to distributions and conditional distributions derived from $P$. In general, if we need to remind ourselves what variables are being treated as random in probability, expectation, conditional probability, or conditional expectation computations, we will superscript E or P with their names.) Suppose further that $L(\hat{y}, y) \geq 0$ is some loss function quantifying how unhappy I am with prediction $\hat{y}$ when the output is $y$. Purely as a thought experiment (not yet as anything based on the training data) I might consider trying to choose a functional form $f$ (NOT yet $\hat{f}$) to minimize

\[ \mathrm{E} L(f(\mathbf{x}), y) \]

This is (in theory) easily done, since

\[ \mathrm{E} L(f(\mathbf{x}), y) = \mathrm{E}\, \mathrm{E}[L(f(\mathbf{x}), y) \mid \mathbf{x}] \]

and the minimization can be accomplished by minimizing the conditional mean of the loss separately for each possible value of $\mathbf{x}$. That is, an optimal choice of $f$ is defined by

\[ f(\mathbf{u}) = \arg\min_{a} \mathrm{E}[L(a, y) \mid \mathbf{x} = \mathbf{u}] \tag{2} \]

In the simple case where $L(\hat{y}, y) = (\hat{y} - y)^2$, the optimal $f$ in (2) is then well-known to be

\[ f(\mathbf{u}) = \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}] \]

In a classification context, where $y$ takes values in $G = \{1, 2, \ldots, K\}$ or $G = \{0, 1, \ldots, K-1\}$, I might use (0-1) loss function

\[ L(\hat{y}, y) = I[\hat{y} \neq y] \]

An optimal $f$ corresponding to (2) is then

\[
f(\mathbf{u}) = \arg\min_{a} \sum_{v \neq a} P[y = v \mid \mathbf{x} = \mathbf{u}]
= \arg\max_{a} P[y = a \mid \mathbf{x} = \mathbf{u}]
\]

Further, if I define $\mathbf{y}$ as in (1), this is

\[ f(\mathbf{u}) = \text{the index of the largest entry of } \mathrm{E}[\mathbf{y} \mid \mathbf{x} = \mathbf{u}] \]

So in both the squared error loss context and in the 0-1 loss classification context, ($P$) optimal choice of $f$ involves the conditional mean of an output variable (albeit the second is a vector of 0-1 indicator variables). These strongly suggest the importance of finding ways to approximate conditional means $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ in order to (either directly or indirectly) produce good prediction rules.
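As a small numerical check of these two optimality facts, the following sketch evaluates the conditional expected loss in (2) for a single input value. The three-point conditional distribution is a hypothetical one chosen only for illustration, and the grid search stands in for the exact minimization.

```python
import numpy as np

# Hypothetical conditional distribution of y given x = u, with y in G = {1, 2, 3}.
values = np.array([1, 2, 3])
probs = np.array([0.2, 0.5, 0.3])   # P[y = v | x = u] (assumed for illustration)

def expected_loss(a, loss):
    """Conditional expected loss E[L(a, y) | x = u] for a candidate prediction a."""
    return float(np.sum(probs * loss(a, values)))

def squared(a, y):
    return (a - y) ** 2

def zero_one(a, y):
    return (a != y).astype(float)

# Squared error loss: search a fine grid of real-valued predictions.
grid = np.linspace(1.0, 3.0, 2001)
sel_risk = np.array([expected_loss(a, squared) for a in grid])
best_sel = grid[np.argmin(sel_risk)]
cond_mean = float(np.sum(probs * values))      # E[y | x = u]

# 0-1 loss: search over the K possible class labels.
zo_risk = np.array([expected_loss(a, zero_one) for a in values])
best_class = values[np.argmin(zo_risk)]
most_probable = values[np.argmax(probs)]

# With y recoded as the indicator vector of display (1), E[y | x = u] is just
# `probs`, so the optimal class is the index of its largest entry.
print(best_sel, cond_mean, best_class, most_probable)
```

The grid minimizer agrees with the conditional mean up to the grid spacing, and the 0-1 minimizer is the most probable class.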

1.2 Two Conceptually Simple Ways One Might Approximate a Conditional Mean

It is standard to point to two conceptually appealing but in some sense polar-opposite ways of approximating $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ (for $(\mathbf{x}, y)$ with distribution $P$). The tacit assumption here is that the training data are realizations of $(\mathbf{x}_1, y_1), (\mathbf{x}_2, y_2), \ldots, (\mathbf{x}_N, y_N)$ iid $P$.

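1.2.1 Rules Based on a Linear Assumption About the Form of the Conditional Mean

If one is willing to adopt fairly strong assumptions about the form of $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ then Stat 511-type computations might be used to guide choice of a prediction rule. In particular, if one assumes that for some $\boldsymbol{\beta} \in \Re^p$

\[ \mathbf{u}'\boldsymbol{\beta} \approx \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}] \tag{3} \]

(if we wish, we are free to restrict $x_1 = u_1 \equiv 1$ so that this form has an intercept) then an OLS computation with the training data produces

\[ \hat{\boldsymbol{\beta}} = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\mathbf{Y} \]

and suggests the predictor

\[ \hat{f}(\mathbf{x}) = \mathbf{x}'\hat{\boldsymbol{\beta}} \]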
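The OLS computation just described can be sketched in a few lines. This is only an illustration under assumed conditions: the simulated data, the "true" coefficient vector, and the names `beta_hat` and `f_hat` are not from the outline.

```python
import numpy as np

rng = np.random.default_rng(0)
N, p = 200, 3

# Simulated training data (an assumption for illustration); the first column
# of X is identically 1 so that the linear form has an intercept.
X = np.column_stack([np.ones(N), rng.normal(size=(N, p - 1))])
beta_true = np.array([1.0, 2.0, -0.5])
Y = X @ beta_true + rng.normal(scale=0.1, size=N)

# beta_hat = (X'X)^{-1} X'Y, computed by solving the normal equations
# rather than forming the inverse explicitly.
beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)

def f_hat(x):
    """Linear prediction rule x' beta_hat for a single input or rows of inputs."""
    return x @ beta_hat

print(beta_hat)
print(f_hat(np.array([1.0, 0.0, 0.0])))   # prediction at the intercept-only input
```

Solving the normal equations directly is fine for a well-conditioned small example like this one; orthogonal decompositions of X are the usual choice when the columns are nearly collinear.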

Probably "the biggest problems" with this possibility in situations involving large $p$ are the potential inflexibility of the linear form (3) working directly with the training data, and the "non-automatic" flavor of traditional regression model-building that tries to both take account of/mitigate problems of multicollinearity and extend the flexibility of forms of conditional means by constructing new predictors from "given" ones (further exploding the size of "$p$" and the associated difficulties).

1.2.2 Nearest Neighbor Rules

One idea (that might automatically allow very flexible forms for $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$) is to operate completely non-parametrically and to think that if $N$ is "big enough," perhaps something like

\[ \frac{1}{\#\text{ of } \mathbf{x}_i = \mathbf{u}} \sum_{i \text{ with } \mathbf{x}_i = \mathbf{u}} y_i \tag{4} \]

might work as an approximation to $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$. But almost always (unless $N$ is huge and the distribution of $\mathbf{x}$ is discrete) the number of $\mathbf{x}_i = \mathbf{u}$ is 0 or at most 1 (and absolutely no extrapolation beyond the set of training inputs is possible). So some modification is surely required. Perhaps the condition

\[ \mathbf{x}_i = \mathbf{u} \]

might be replaced with

\[ \mathbf{x}_i \approx \mathbf{u} \]

in (4).

One form of this is to define for each $\mathbf{x}$ the $k$-neighborhood

\[ n_k(\mathbf{x}) = \text{the set of } k \text{ inputs } \mathbf{x}_i \text{ in the training set closest to } \mathbf{x} \text{ in } \Re^p \]

A $k$-nearest neighbor approximation to $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ is then

\[ m_k(\mathbf{u}) = \frac{1}{k} \sum_{i \text{ with } \mathbf{x}_i \in n_k(\mathbf{u})} y_i \]

suggesting the prediction rule

\[ \hat{f}(\mathbf{x}) = m_k(\mathbf{x}) \]

One might hope that upon allowing $k$ to increase with $N$, provided that $P$ is not so bizarre (one is counting, for example, on the continuity of $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ in $\mathbf{u}$) this could be an effective predictor. It is surely a highly flexible predictor and automatically so. But as a practical matter, it and things like it often fail to be effective ... basically because for $p$ anything but very small, $\Re^p$ is far bigger than we naively realize.
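A minimal sketch of the k-nearest neighbor rule follows, assuming Euclidean distance in the input space and a tiny made-up training set. The function name `knn_predict` is hypothetical, and ties in distance are broken arbitrarily by the sort.

```python
import numpy as np

def knn_predict(X_train, y_train, u, k):
    """m_k(u): average the outputs of the k training inputs closest to u."""
    dists = np.linalg.norm(X_train - u, axis=1)   # Euclidean distances to u
    neighbors = np.argsort(dists)[:k]             # indices of the k-neighborhood n_k(u)
    return float(np.mean(y_train[neighbors]))

# Tiny illustrative training set with p = 1 (values assumed for illustration).
X_train = np.array([[0.0], [1.0], [2.0], [3.0], [10.0]])
y_train = np.array([0.0, 1.0, 2.0, 3.0, 10.0])

# The three inputs closest to u = 1.1 are 1.0, 2.0, and 0.0.
m3 = knn_predict(X_train, y_train, np.array([1.1]), k=3)
m1 = knn_predict(X_train, y_train, np.array([1.1]), k=1)
print(m3, m1)
```

Sorting all N distances is the simplest way to find the neighborhood; for large N a partial sort or a spatial index would usually be preferred.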

1.3 The Curse of Dimensionality

Roughly speaking, one wants to allow the most flexible kinds of prediction rules possible. Naively, one might hope that with what seem like the "large" sample sizes common in machine learning contexts, even if $p$ is fairly large, prediction rules like $k$-nearest neighbor rules might be practically useful (as points "fill up" $\Re^p$ and allow one to figure out how $y$ behaves as a function of $\mathbf{x}$ operating quite locally). But our intuition about how sparse data sets in $\Re^p$ actually must be is pretty weak and needs some education. In popular jargon, there is the "curse of dimensionality" to be reckoned with.

There are many ways of framing this inescapable sparsity. Some simple ones involve facts about uniform distributions on the unit ball in $\Re^p$

\[ \{\mathbf{x} \in \Re^p \mid \|\mathbf{x}\| \leq 1\} \]

and on a unit cube centered at the origin

\[ [-.5, .5]^p \]

For one thing, "most" of these distributions are very near the surface of the solids. The cube $[-r, r]^p$ capturing (for example) half the volume of the cube (half the probability mass of the distribution) has

\[ r = .5\,(.5)^{1/p} \]

which converges to $.5$ as $p$ increases. Essentially the same story holds for the uniform distribution on the unit ball. The radius capturing half the probability mass has

\[ r = (.5)^{1/p} \]

which converges to 1 as $p$ increases. Points uniformly distributed in these regions are mostly near the surface or boundary of the spaces.
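To see how quickly these half-mass radii approach the boundary, the two formulas can simply be tabulated. The function names below are hypothetical and the choice of dimensions is arbitrary.

```python
def cube_half_width(p):
    """Half-width r of the cube [-r, r]^p holding half the mass of [-.5, .5]^p."""
    return 0.5 * 0.5 ** (1.0 / p)

def ball_half_radius(p):
    """Radius r of the ball holding half the mass of the unit ball in p dimensions."""
    return 0.5 ** (1.0 / p)

# Tabulate both radii for a few dimensions (arbitrary illustrative choices).
for p in (1, 2, 10, 100):
    print(p, round(cube_half_width(p), 4), round(ball_half_radius(p), 4))
```

For p = 1 the half-mass cube has half-width .25, while for p = 100 the half-mass ball already has radius above .99.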

Another interesting calculation concerns how large a sample must be in order for points generated from the uniform distribution on the ball or cube in an iid fashion to tend to "pile up" anywhere. Consider the problem of describing the distance from the origin to the closest of $N$ iid points uniform in the $p$-dimensional unit ball. With

\[ R = \text{the distance from the origin to a single random point} \]

$R$ has cdf

\[
F(r) = \begin{cases} 0 & r < 0 \\ r^p & 0 \leq r \leq 1 \\ 1 & r > 1 \end{cases}
\]

So if $R_1, R_2, \ldots, R_N$ are iid with this distribution, $M = \min\{R_1, R_2, \ldots, R_N\}$ has cdf

\[
F_M(m) = \begin{cases} 0 & m < 0 \\ 1 - (1 - m^p)^N & 0 \leq m \leq 1 \\ 1 & m > 1 \end{cases}
\]

This distribution has, for example, median

\[ F_M^{-1}(.5) = \left(1 - \left(\frac{1}{2}\right)^{1/N}\right)^{1/p} \]

For, say, $p = 100$ and $N = 10^6$, the median of the distribution of $M$ is $.87$.
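The quoted median can be reproduced directly from the formula for the inverse cdf. In this sketch the function names are hypothetical, and `math.expm1` is used only to avoid cancellation when computing 1 - (1/2)^(1/N) for large N.

```python
import math

def median_min_distance(p, N):
    """Median of M = min(R_1, ..., R_N) for N iid uniform points in the unit p-ball."""
    # 1 - (1/2)^(1/N), computed stably as -expm1(log(1/2) / N)
    one_minus_root = -math.expm1(math.log(0.5) / N)
    return one_minus_root ** (1.0 / p)

def cdf_min(m, p, N):
    """F_M(m) = 1 - (1 - m^p)^N on [0, 1]."""
    return 1.0 - (1.0 - m ** p) ** N

med = median_min_distance(100, 10 ** 6)
print(round(med, 2))                 # median distance to the closest of a million points
print(cdf_min(med, 100, 10 ** 6))    # should be close to 0.5
```

Even with a million points in 100 dimensions, the closest point to the origin is typically most of the way out to the surface of the ball.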

In retrospect, it’s not really all that hard to understand that even "large" numbers of points in $\Re^p$ must be sparse. After all, it’s perfectly possible for $p$-vectors $\mathbf{u}$ and $\mathbf{v}$ to agree perfectly on all but one coordinate and be far apart in $p$-space. There simply are a lot of ways for two $p$-vectors to differ!

In addition to these kinds of considerations of sparsity, there is the fact that the potential complexity of functions of $p$ variables explodes exponentially in $p$. CFZ identify this as a second manifestation of "the curse," and go on to expound a third that says "for large $p$, all data sets exhibit multicollinearity (or its generalization to non-parametric fitting)" and its accompanying problems of reliable fitting and extrapolation. In any case, the point is that even "large" $N$ doesn’t save us and make practical large-$p$ prediction a more or less trivial application of standard parametric or non-parametric regression methodology.

1.4 Variance-Bias Considerations in Prediction

Just as in model parameter estimation, the "variance-bias trade-off" notion is important in a prediction context. Here we consider decompositions of what HTF in their Section 2.9 and Chapter 7 call various forms of "test/generalization/prediction error" that can help us think about that trade-off, and what issues come into play as we try to approximate a conditional mean function.

In what follows, assume that $(\mathbf{x}_1, y_1), (\mathbf{x}_2, y_2), \ldots, (\mathbf{x}_N, y_N)$ are iid $P$ and independent of $(\mathbf{x}, y)$ that is also $P$ distributed. Write $\mathrm{E}^{\mathbf{T}}$ for expectation (and $\mathrm{Var}^{\mathbf{T}}$ for variance) computed with respect to the joint distribution of the training data and $\mathrm{E}[\,\cdot \mid \mathbf{x} = \mathbf{u}]$ for conditional expectation (and $\mathrm{Var}[\,\cdot \mid \mathbf{x} = \mathbf{u}]$ for conditional variance) based on the conditional distribution of $y \mid \mathbf{x} = \mathbf{u}$. A measure of the effectiveness of the predictor $\hat{f}(\mathbf{x})$ at $\mathbf{x} = \mathbf{u}$ (under squared error loss) is (the function of $\mathbf{u}$, an input vector at which one is predicting)

\[ \mathrm{Err}(\mathbf{u}) \equiv \mathrm{E}^{\mathbf{T}} \mathrm{E}\left[ \left(\hat{f}(\mathbf{x}) - y\right)^2 \,\middle|\, \mathbf{x} = \mathbf{u} \right] \]

For some purposes, other versions of this might be useful and appropriate. For example

\[ \mathrm{Err}_{\mathbf{T}} \equiv \mathrm{E}^{(\mathbf{x}, y)} \left(\hat{f}(\mathbf{x}) - y\right)^2 \]

is another kind of prediction or test error (that is a function of the training data). (What one would surely like, but almost surely cannot have is a guarantee that $\mathrm{Err}_{\mathbf{T}}$ is small uniformly in $\mathbf{T}$.) Note then that

\[ \mathrm{Err} \equiv \mathrm{E}^{\mathbf{x}} \mathrm{Err}(\mathbf{x}) = \mathrm{E}^{\mathbf{T}} \mathrm{Err}_{\mathbf{T}} \]

is a number, an expected prediction error.

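In any case, a useful decomposition of $\mathrm{Err}(\mathbf{u})$ is

\[
\begin{aligned}
\mathrm{Err}(\mathbf{u})
&= \mathrm{E}^{\mathbf{T}} \left(\hat{f}(\mathbf{u}) - \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]\right)^2 + \mathrm{E}\left[ \left(y - \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]\right)^2 \,\middle|\, \mathbf{x} = \mathbf{u} \right] \\
&= \mathrm{E}^{\mathbf{T}} \left(\hat{f}(\mathbf{u}) - \mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{u})\right)^2 + \left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{u}) - \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]\right)^2 + \mathrm{Var}[y \mid \mathbf{x} = \mathbf{u}] \\
&= \mathrm{Var}^{\mathbf{T}} \hat{f}(\mathbf{u}) + \left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{u}) - \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]\right)^2 + \mathrm{Var}[y \mid \mathbf{x} = \mathbf{u}]
\end{aligned}
\]

The first quantity in this decomposition, $\mathrm{Var}^{\mathbf{T}} \hat{f}(\mathbf{u})$, is the variance of the prediction at $\mathbf{u}$. The second term, $\left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{u}) - \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]\right)^2$, is a kind of squared bias of prediction at $\mathbf{u}$. And $\mathrm{Var}[y \mid \mathbf{x} = \mathbf{u}]$ is an unavoidable variance in outputs at $\mathbf{x} = \mathbf{u}$. Highly flexible prediction forms may give small prediction biases at the expense of large prediction variances. One may need to balance the two off against each other when looking for a good predictor.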
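The decomposition can be illustrated by simulation. The sketch below is built on assumptions that are not part of the outline: a conditional mean x^2, normal noise with known standard deviation, inputs uniform on (-1, 1), and a k-nearest neighbor predictor evaluated at a single point u. Expectations over training sets are replaced by averages over simulated training sets, so the three pieces are Monte Carlo estimates rather than exact values.

```python
import numpy as np

rng = np.random.default_rng(1)
sigma = 0.3                  # sd of y given x (assumed)
u, N, k, reps = 0.5, 100, 10, 2000

def mu(x):
    """Assumed conditional mean E[y | x]."""
    return x ** 2

def knn_at_u(x, y):
    """k-nearest neighbor prediction at the fixed input u."""
    idx = np.argsort(np.abs(x - u))[:k]
    return y[idx].mean()

# One prediction at u per simulated training set T.
preds = np.empty(reps)
for r in range(reps):
    x = rng.uniform(-1.0, 1.0, size=N)
    y = mu(x) + rng.normal(scale=sigma, size=N)
    preds[r] = knn_at_u(x, y)

variance = preds.var()                          # estimates Var^T f_hat(u)
sq_bias = (preds.mean() - mu(u)) ** 2           # estimates the squared prediction bias
noise = sigma ** 2                              # Var[y | x = u], known here by construction
err_u = np.mean((preds - mu(u)) ** 2) + noise   # estimates Err(u)

print(variance, sq_bias, noise, err_u)
```

Because the same simulated predictions are used on both sides, `err_u` equals `variance + sq_bias + noise` up to rounding. Rerunning with a larger k would typically lower the variance estimate and raise the squared bias estimate.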

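Some additional insight into this can perhaps be gained by considering

\[
\mathrm{Err} \equiv \mathrm{E}^{\mathbf{x}} \mathrm{Err}(\mathbf{x})
= \mathrm{E}^{\mathbf{x}} \mathrm{Var}^{\mathbf{T}} \hat{f}(\mathbf{x}) + \mathrm{E}^{\mathbf{x}} \left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{x}) - \mathrm{E}[y \mid \mathbf{x}]\right)^2 + \mathrm{E}^{\mathbf{x}} \mathrm{Var}[y \mid \mathbf{x}]
\]

The first term on the right here is the average (according to the marginal of $\mathbf{x}$) of the prediction variance at $\mathbf{x}$. The second is the average squared prediction bias. And the third is the average conditional variance of $y$ (and is not under the control of the analyst choosing $\hat{f}(\mathbf{x})$). Consider here a further decomposition of the second term.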

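Suppose that $\mathbf{T}$ is used to select a function (say $g_{\mathbf{T}}$) from some linear subspace, say $S = \{g\}$, of the space of functions $h$ with $\mathrm{E}^{\mathbf{x}} (h(\mathbf{x}))^2 < \infty$, and that ultimately one uses as a predictor

\[ \hat{f}(\mathbf{x}) = g_{\mathbf{T}}(\mathbf{x}) \]

Since linear subspaces are convex

\[ g^{*} \equiv \mathrm{E}^{\mathbf{T}} g_{\mathbf{T}} = \mathrm{E}^{\mathbf{T}} \hat{f} \in S \]

Further, suppose that

\[ g^{**} \equiv \arg\min_{g \in S} \mathrm{E}^{\mathbf{x}} \left(g(\mathbf{x}) - \mathrm{E}[y \mid \mathbf{x}]\right)^2 \]

the projection of (the function of $\mathbf{u}$) $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ onto the space $S$. Then write

\[ h^{*}(\mathbf{u}) = \mathrm{E}[y \mid \mathbf{x} = \mathbf{u}] - g^{**}(\mathbf{u}) \]

so that

\[ \mathrm{E}[y \mid \mathbf{x}] = g^{**}(\mathbf{x}) + h^{*}(\mathbf{x}) \]

Then, it’s a consequence of the facts that $\mathrm{E}^{\mathbf{x}} (h^{*}(\mathbf{x}) g(\mathbf{x})) = 0$ for all $g \in S$ and therefore that $\mathrm{E}^{\mathbf{x}} (h^{*}(\mathbf{x}) g^{*}(\mathbf{x})) = 0$ and $\mathrm{E}^{\mathbf{x}} (h^{*}(\mathbf{x}) g^{**}(\mathbf{x})) = 0$, that

\[
\begin{aligned}
\mathrm{E}^{\mathbf{x}} \left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{x}) - \mathrm{E}[y \mid \mathbf{x}]\right)^2
&= \mathrm{E}^{\mathbf{x}} \left(g^{*}(\mathbf{x}) - \left(g^{**}(\mathbf{x}) + h^{*}(\mathbf{x})\right)\right)^2 \\
&= \mathrm{E}^{\mathbf{x}} \left(g^{*}(\mathbf{x}) - g^{**}(\mathbf{x})\right)^2 + \mathrm{E}^{\mathbf{x}} \left(h^{*}(\mathbf{x})\right)^2 - 2\,\mathrm{E}^{\mathbf{x}} \left(\left(g^{*}(\mathbf{x}) - g^{**}(\mathbf{x})\right) h^{*}(\mathbf{x})\right) \\
&= \mathrm{E}^{\mathbf{x}} \left(\mathrm{E}^{\mathbf{T}} \hat{f}(\mathbf{x}) - g^{**}(\mathbf{x})\right)^2 + \mathrm{E}^{\mathbf{x}} \left(\mathrm{E}[y \mid \mathbf{x}] - g^{**}(\mathbf{x})\right)^2
\end{aligned}
\]

The first term on the right above is an average squared estimation bias, measuring how well the average (over $\mathbf{T}$) predictor function approximates the element of $S$ that best approximates the conditional mean function. This is a measure of how appropriately the training data are used to pick out elements of $S$. The second term on the right is an average squared model bias, measuring how well it is possible to approximate the conditional mean function $\mathrm{E}[y \mid \mathbf{x} = \mathbf{u}]$ by an element of $S$. This is controlled by the size of $S$, or effectively the flexibility allowed in the form of $\hat{f}$. Average squared prediction bias can thus be large because the form fit is not flexible enough, or because a poor fitting method is employed.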

1.5 Complexity, Fit, and Choice of Predictor

In rough terms, standard methods of constructing predictors all have associated "complexity parameters" (like numbers and types of predictors/basis functions or "ridge parameters" used in regression methods, and penalty weights/bandwidths/neighborhood sizes applied in smoothing methods) that are at the choice of a user. Depending upon the choice of complexity, one gets more or less flexibility in the form $\hat{f}$. If a choice of complexity doesn’t allow enough flexibility in the form of a predictor, under-fit occurs and there is large bias in prediction. On the other hand, if the choice allows too much flexibility, bias may be reduced, but the price paid is large variance of prediction and over-fit. It is a theme that runs through this material that complexity must be chosen in a way that balances variance and bias for the particular combination of $N$ and $p$ and general circumstance one faces. Specifics of methods for doing this are presented in some detail in Chapter 7 of HTF, but in broad terms, the story is as follows.

The most obvious/elementary means of selecting a "complexity parameter" is to (for all options under consideration) compute and compare values of the so-called "training error"

\[ \mathrm{err} = \frac{1}{N} \sum_{i=1}^{N} \left(\hat{f}(\mathbf{x}_i) - y_i\right)^2 \]

(or more generally, $\mathrm{err} = \frac{1}{N} \sum_{i=1}^{N} L\left(\hat{f}(\mathbf{x}_i), y_i\right)$) and look for predictors with small err. The problem is that err is no good estimator of Err (or any other sensible quantification of predictor performance). It typically decreases with increased complexity (without an increase for large complexity), and fails to reliably indicate performance outside the training sample.
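The behavior of the training error under increasing complexity can be seen in a small experiment. The sketch below assumes simulated data and uses polynomial degree as the complexity parameter; neither choice comes from the outline. Because the polynomial models are nested and fit by least squares, the training error cannot increase with degree, whatever the true conditional mean is.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 30
x = rng.uniform(-1.0, 1.0, size=N)
y = np.sin(3.0 * x) + rng.normal(scale=0.3, size=N)   # assumed data-generating model

def training_error(degree):
    """err for a least squares polynomial fit of the given degree."""
    B = np.vander(x, degree + 1, increasing=True)      # columns 1, x, ..., x^degree
    coef, *_ = np.linalg.lstsq(B, y, rcond=None)
    return float(np.mean((B @ coef - y) ** 2))

errs = [training_error(d) for d in range(0, 9)]
for d, e in enumerate(errs):
    print(d, round(e, 4))
```

The printed sequence is non-increasing in the degree, which is exactly why err alone cannot be used to pick a complexity.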

The existing practical options for evaluating likely performance of a predictor (and guiding choice of complexity) are then three.

1. One might employ other (besides err) training-data based indicators of likely predictor performance, like Mallows’ $C_p$, "AIC," and "BIC."

2. In genuinely large $N$ contexts, one might hold back some random sample of the training data to serve as a "test set," fit to produce $\hat{f}$ on the remainder, and use

\[ \frac{1}{\text{size of the test set}} \sum_{i \,\in\, \text{the test set}} \left(\hat{f}(\mathbf{x}_i) - y_i\right)^2 \]

to indicate likely predictor performance.

3. One might employ sample re-use methods to estimate Err and guide choice of complexity. Cross-validation and bootstrap ideas are used here.

We’ll say more about these possibilities later, but at the moment outline the cross-validation idea. $K$-fold cross-validation consists of

1. randomly breaking the training set into $K$ disjoint roughly equal-sized pieces, say $\mathbf{T}_1, \mathbf{T}_2, \ldots, \mathbf{T}_K$,

2. training on each of the reduced training sets $\mathbf{T} - \mathbf{T}_k$, to produce $K$ predictors $\hat{f}^k$,

3. letting $k(i)$ be the index of the piece $\mathbf{T}_k$ containing training case $i$, and computing the cross-validation error

\[ CV\left(\hat{f}\right) = \frac{1}{N} \sum_{i=1}^{N} \left(\hat{f}^{k(i)}(\mathbf{x}_i) - y_i\right)^2 \]

(more generally, $CV\left(\hat{f}\right) = \frac{1}{N} \sum_{i=1}^{N} L\left(\hat{f}^{k(i)}(\mathbf{x}_i), y_i\right)$).

This is roughly the same as fitting on each $\mathbf{T} - \mathbf{T}_k$ and correspondingly evaluating on $\mathbf{T}_k$, and then averaging. (When $N$ is a multiple of $K$, these are exactly the same.) One hopes that $CV\left(\hat{f}\right)$ approximates Err.

The choice of $K = N$ is called "leave one out cross-validation" and sometimes in this case there are slick computational ways of evaluating $CV\left(\hat{f}\right)$.
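The three steps of K-fold cross-validation can be sketched as follows under squared error loss. The helper names `kfold_cv` and `fit_ols`, the simulated data, and the use of an OLS fit as the predictor being assessed are all assumptions made for illustration.

```python
import numpy as np

def kfold_cv(X, y, K, fit, rng):
    """Cross-validation error of the fitting method `fit` under squared error loss."""
    N = len(y)
    fold = rng.permutation(N) % K            # k(i): random, roughly equal-sized pieces
    sq_err = np.empty(N)
    for k in range(K):
        held_out = fold == k                 # cases in the piece T_k
        predictor = fit(X[~held_out], y[~held_out])     # train on T - T_k
        sq_err[held_out] = (predictor(X[held_out]) - y[held_out]) ** 2
    return float(sq_err.mean())

def fit_ols(X, y):
    """Return an OLS prediction rule fitted to (X, y)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return lambda X_new: X_new @ beta

# Simulated training data (assumed): intercept plus one predictor, noise sd 0.5.
rng = np.random.default_rng(3)
N = 100
X = np.column_stack([np.ones(N), rng.normal(size=N)])
y = X @ np.array([1.0, 2.0]) + rng.normal(scale=0.5, size=N)

cv10 = kfold_cv(X, y, 10, fit_ols, rng)      # 10-fold cross-validation
cv_loo = kfold_cv(X, y, N, fit_ols, rng)     # K = N: leave one out
print(cv10, cv_loo)
```

Here both values should land near the noise variance of 0.25, since the linear form is correct for the simulated data. The leave-one-out call refits N times; the "slick" shortcuts mentioned above avoid that refitting for some fitting methods and are not used in this sketch.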

2 Some Linear Algebra and (non-OLS) Linear Predictors

There is more to say about the development of a linear predictor

\[ \hat{f}(\mathbf{x}) = \mathbf{x}'\hat{\boldsymbol{\beta}} \]

for an appropriate $\hat{\boldsymbol{\beta}} \in \Re^p$ than what is said in Stat 500 and Stat 511 (where least squares is used to fit the linear form to all $p$ input variables or to some subset of $M$ of them). Some of that involves more linear algebra background than is assumed or used in Stat 511. We begin with some linear theory that is useful both here and later, and then go on to consider other fitting methods besides ordinary least squares.

Consider again the matrix of training data inputs and vector of outputs

\[
\underset{N \times p}{\mathbf{X}} = \begin{pmatrix} \mathbf{x}_1' \\ \mathbf{x}_2' \\ \vdots \\ \mathbf{x}_N' \end{pmatrix}
\quad \text{and} \quad
\underset{N \times 1}{\mathbf{Y}} = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_N \end{pmatrix}
\]

It is standard Stat 511 fare that least squares projects $\mathbf{Y}$ onto $C(\mathbf{X})$, the column space of $\mathbf{X}$, in order to produce the vector of fitted values

\[
\underset{N \times 1}{\hat{\mathbf{Y}}} = \begin{pmatrix} \hat{y}_1 \\ \hat{y}_2 \\ \vdots \\ \hat{y}_N \end{pmatrix}
\]

2.1 The Gram-Schmidt Process and the QR Decomposition of a rank = p Matrix X

For many purposes it would be convenient if the columns of a full rank (rank $= p$) matrix $\mathbf{X}$ were orthogonal. In fact, it would be useful to replace the $N \times p$ matrix $\mathbf{X}$ with an $N \times p$ matrix $\mathbf{Z}$ with orthogonal columns and having the property that for each $l$ if $\mathbf{X}_l$ and $\mathbf{Z}_l$ are $N \times l$ consisting of the first $l$ columns of respectively $\mathbf{X}$ and $\mathbf{Z}$, then $C(\mathbf{Z}_l) = C(\mathbf{X}_l)$. Such a matrix can in fact be constructed using the so-called Gram-Schmidt process. This process generalizes beyond the present application to $\Re^N$ to general Hilbert spaces, and in recognition of that important fact, we’ll adopt standard Hilbert space notation for the inner product in $\Re^N$, namely

\[ \langle \mathbf{u}, \mathbf{v} \rangle \equiv \sum_{i=1}^{N} u_i v_i = \mathbf{u}'\mathbf{v} \]

and describe the process in these terms.

In what follows, vectors are $N$-vectors and in particular, $\mathbf{x}_j$ is the $j$th column of $\mathbf{X}$. Notice that this is in potential conflict with earlier notation that made $\mathbf{x}_i$ the $p$-vector of inputs for the $i$th case in the training data. We will simply have to read the following in context and keep in mind the local convention.

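With this notation, the Gram-Schmidt process proceeds as follows:

1. Set the first column of $\mathbf{Z}$ to be

\[ \mathbf{z}_1 = \mathbf{x}_1 \]

2. Having constructed $\mathbf{Z}_{l-1} = (\mathbf{z}_1, \mathbf{z}_2, \ldots, \mathbf{z}_{l-1})$, let

\[ \mathbf{z}_l = \mathbf{x}_l - \sum_{j=1}^{l-1} \frac{\langle \mathbf{x}_l, \mathbf{z}_j \rangle}{\langle \mathbf{z}_j, \mathbf{z}_j \rangle} \mathbf{z}_j \]

It is easy enough to see that $\langle \mathbf{z}_l, \mathbf{z}_j \rangle = 0$ for all $j < l$ (building up the orthogonality of $\mathbf{z}_1, \mathbf{z}_2, \ldots, \mathbf{z}_{l-1}$ by induction), since

\[ \langle \mathbf{z}_l, \mathbf{z}_j \rangle = \langle \mathbf{x}_l, \mathbf{z}_j \rangle - \langle \mathbf{x}_l, \mathbf{z}_j \rangle \]

as, after taking the inner product with $\mathbf{z}_j$, at most one term of the sum in step 2. above is non-zero. Further, assume that $C(\mathbf{Z}_{l-1}) = C(\mathbf{X}_{l-1})$. Then $\mathbf{z}_l \in C(\mathbf{X}_l)$ and so $C(\mathbf{Z}_l) \subset C(\mathbf{X}_l)$. And since any element of $C(\mathbf{X}_l)$ can be written as a linear combination of an element of $C(\mathbf{X}_{l-1}) = C(\mathbf{Z}_{l-1})$ and $\mathbf{x}_l$, we also have $C(\mathbf{X}_l) \subset C(\mathbf{Z}_l)$. Thus, as advertised, $C(\mathbf{Z}_l) = C(\mathbf{X}_l)$.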

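Since the $\mathbf{z}_j$ are perpendicular, for any $N$-vector $\mathbf{w}$,

\[ \sum_{j=1}^{l} \frac{\langle \mathbf{w}, \mathbf{z}_j \rangle}{\langle \mathbf{z}_j, \mathbf{z}_j \rangle} \mathbf{z}_j \]

is the projection of $\mathbf{w}$ onto $C(\mathbf{Z}_l) = C(\mathbf{X}_l)$. (To see this, consider minimization of the quantity $\left\langle \mathbf{w} - \sum_{j=1}^{l} c_j \mathbf{z}_j, \mathbf{w} - \sum_{j=1}^{l} c_j \mathbf{z}_j \right\rangle = \left\| \mathbf{w} - \sum_{j=1}^{l} c_j \mathbf{z}_j \right\|^2$ by choice of the constants $c_j$.) In particular, the sum on the right side of the equality in step 2. of the Gram-Schmidt process is the projection of $\mathbf{x}_l$ onto $C(\mathbf{X}_{l-1})$. And the projection of $\mathbf{Y}$ onto $C(\mathbf{X}_l)$ is

\[ \sum_{j=1}^{l} \frac{\langle \mathbf{Y}, \mathbf{z}_j \rangle}{\langle \mathbf{z}_j, \mathbf{z}_j \rangle} \mathbf{z}_j \]

This means that for a full $p$-variable regression,

\[ \frac{\langle \mathbf{Y}, \mathbf{z}_p \rangle}{\langle \mathbf{z}_p, \mathbf{z}_p \rangle} \]

is the regression coefficient for $\mathbf{z}_p$ and (since only $\mathbf{z}_p$ involves it) the last variable in $\mathbf{X}$, $\mathbf{x}_p$. So, in constructing a vector of fitted values, fitted regression coefficients in multiple regression can be interpreted as weights to be applied to that part of the input vector that remains after projecting the predictor onto the space spanned by all the others.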

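The construction of the orthogonal variables $\mathbf{z}_j$ can be represented in matrix form as

\[ \underset{N \times p}{\mathbf{X}} = \underset{N \times p}{\mathbf{Z}} \; \underset{p \times p}{\boldsymbol{\Gamma}} \]

where $\boldsymbol{\Gamma}$ is upper triangular with

\[
\gamma_{kj} = \text{the value in the } k\text{th row and } j\text{th column of } \boldsymbol{\Gamma}
= \begin{cases} 1 & \text{if } j = k \\[4pt] \dfrac{\langle \mathbf{z}_k, \mathbf{x}_j \rangle}{\langle \mathbf{z}_k, \mathbf{z}_k \rangle} & \text{if } j > k \end{cases}
\]

Defining

\[ \mathbf{D} = \mathrm{diag}\left(\langle \mathbf{z}_1, \mathbf{z}_1 \rangle^{1/2}, \ldots, \langle \mathbf{z}_p, \mathbf{z}_p \rangle^{1/2}\right) = \mathrm{diag}\left(\|\mathbf{z}_1\|, \ldots, \|\mathbf{z}_p\|\right) \]

and letting

\[ \mathbf{Q} = \mathbf{Z}\mathbf{D}^{-1} \quad \text{and} \quad \mathbf{R} = \mathbf{D}\boldsymbol{\Gamma} \]

one may write

\[ \mathbf{X} = \mathbf{Q}\mathbf{R} \tag{5} \]

that is the so-called QR decomposition of $\mathbf{X}$.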

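In (5), $\mathbf{Q}$ is $N \times p$ with

\[ \mathbf{Q}'\mathbf{Q} = \mathbf{D}^{-1} \mathbf{Z}'\mathbf{Z} \mathbf{D}^{-1} = \mathbf{D}^{-1} \, \mathrm{diag}\left(\langle \mathbf{z}_1, \mathbf{z}_1 \rangle, \ldots, \langle \mathbf{z}_p, \mathbf{z}_p \rangle\right) \mathbf{D}^{-1} = \mathbf{I} \]

That is, $\mathbf{Q}$ has for columns perpendicular unit vectors that form a basis for $C(\mathbf{X})$. $\mathbf{R}$ is upper triangular and that says that only the first $l$ of these unit vectors are needed to create $\mathbf{x}_l$.

The decomposition is computationally useful in that (for $\mathbf{q}_j$ the $j$th column of $\mathbf{Q}$, namely $\mathbf{q}_j = \langle \mathbf{z}_j, \mathbf{z}_j \rangle^{-1/2} \mathbf{z}_j$) the projection of $\mathbf{Y}$ onto $C(\mathbf{X})$ is (since the $\mathbf{q}_j$ comprise an orthonormal basis for $C(\mathbf{X})$)

\[ \hat{\mathbf{Y}} = \sum_{j=1}^{p} \langle \mathbf{Y}, \mathbf{q}_j \rangle \mathbf{q}_j = \mathbf{Q}\mathbf{Q}'\mathbf{Y} \tag{6} \]

and

\[ \hat{\boldsymbol{\beta}}^{\mathrm{OLS}} = \mathbf{R}^{-1} \mathbf{Q}'\mathbf{Y} \]

(The fact that $\mathbf{R}$ is upper triangular implies that there are efficient ways to compute its inverse.)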
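The Gram-Schmidt construction and the resulting QR decomposition can be checked numerically. The following is a sketch under assumptions: the function name `gram_schmidt` is hypothetical, the data are simulated, and the classical form of the process is coded directly from steps 1 and 2 for clarity (it is not the numerically preferred way to obtain a QR decomposition).

```python
import numpy as np

def gram_schmidt(X):
    """Classical Gram-Schmidt: return Z (orthogonal columns) and upper-triangular Gamma with X = Z Gamma."""
    N, p = X.shape
    Z = np.zeros((N, p))
    Gamma = np.eye(p)
    for l in range(p):
        Z[:, l] = X[:, l]
        for j in range(l):
            # gamma_{jl} = <z_j, x_l> / <z_j, z_j>
            Gamma[j, l] = (Z[:, j] @ X[:, l]) / (Z[:, j] @ Z[:, j])
            Z[:, l] -= Gamma[j, l] * Z[:, j]
    return Z, Gamma

rng = np.random.default_rng(4)
X = rng.normal(size=(20, 4))     # simulated full rank inputs (assumed)
Y = rng.normal(size=20)

Z, Gamma = gram_schmidt(X)
norms = np.linalg.norm(Z, axis=0)
D = np.diag(norms)
Q = Z / norms                    # Q = Z D^{-1}
R = D @ Gamma                    # R = D Gamma

Y_hat = Q @ (Q.T @ Y)                    # projection of Y onto C(X), as in (6)
beta_ols = np.linalg.solve(R, Q.T @ Y)   # R^{-1} Q'Y via a general linear solve
coef_last = (Y @ Z[:, -1]) / (Z[:, -1] @ Z[:, -1])   # <Y, z_p> / <z_p, z_p>

print(beta_ols)
print(coef_last)
```

Here `coef_last` agrees with the last entry of `beta_ols`, matching the interpretation of a multiple regression coefficient given above. `np.linalg.solve` is used for brevity and does not exploit the triangular structure of R; a back-substitution routine would.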

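2.2 The Singular Value Decomposition of X

If the matrix $\mathbf{X}$ has rank $r$ then it has a so-called singular value decomposition as

\[ \underset{N \times p}{\mathbf{X}} = \underset{N \times r}{\mathbf{U}} \; \underset{r \times r}{\mathbf{D}} \; \underset{r \times p}{\mathbf{V}'} \]

where $\mathbf{U}$ has orthonormal columns spanning $C(\mathbf{X})$, $\mathbf{V}$ has orthonormal columns spanning $C(\mathbf{X}')$ (the row space of $\mathbf{X}$), and $\mathbf{D} = \mathrm{diag}(d_1, d_2, \ldots, d_r)$ for

\[ d_1 \geq d_2 \geq \cdots \geq d_r > 0 \]

The $d_j$ are the "singular values" of $\mathbf{X}$.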

An interesting property of the singular value decomposition is this. If $U_l$ and $V_l$ are matrices consisting of the first $l \le r$ columns of $U$ and $V$ respectively, then

$$U_l\,\mathrm{diag}(d_1, d_2, \ldots, d_l)\,V_l'$$

is the best (in the sense of squared distance from $X$ in $\Re^{N\times p}$) rank $= l$ approximation to $X$.

Since the columns of $U$ are an orthonormal basis for $C(X)$, the projection of $Y$ onto $C(X)$ is

$$\hat{Y}^{\rm OLS} = \sum_{j=1}^{r} \langle Y, u_j\rangle u_j = UU'Y \tag{7}$$

In the full rank (rank $= p$) $X$ case, this is of course completely parallel to (6) and is a consequence of the fact that the columns of both $U$ and $Q$ (from the QR decomposition of $X$) form orthonormal bases for $C(X)$. In general, the two bases are not the same.

Now using the singular value decomposition of a full rank (rank $p$) $X$,

$$X'X = VD'U'UDV' = VD^2V'$$

which is the "eigen (or spectral) decomposition" of the symmetric and positive definite $X'X$. The vector

$$z_1 \equiv Xv_1$$

is the product $Xw$ with the largest squared length in $\Re^N$ subject to the constraint that $\|w\| = 1$. A second representation of $z_1$ is

$$z_1 = Xv_1 = UDV'v_1 = UD\begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} = d_1u_1$$

In general,

$$z_j = Xv_j = d_ju_j$$

is the vector of the form $Xw$ with the largest squared length in $\Re^N$ subject to the constraints that $\|w\| = 1$ and $\langle z_j, z_l\rangle = 0$ for all $l < j$.
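The relationships among the singular value decomposition, the eigen decomposition of $X'X$, and the principal components can be checked directly. The following is a rough sketch assuming NumPy and a simulated, column-centered $X$ (the data and the names `Z`, `evals`, and `X_l` are illustrative assumptions).

```python
import numpy as np

# Simulated, column-centered inputs (an assumption made only for illustration)
rng = np.random.default_rng(1)
N = 50
X = rng.normal(size=(N, 3)) @ rng.normal(size=(3, 3))
X = X - X.mean(axis=0)

# Thin SVD: X = U diag(d) V'
U, d, Vt = np.linalg.svd(X, full_matrices=False)
V = Vt.T

# Principal components z_j = X v_j, which should equal d_j u_j
Z = X @ V

# Eigenvalues of X'X (sorted to be descending) should be the d_j^2
evals = np.linalg.eigvalsh(X.T @ X)[::-1]

# Rank-l approximation built from the first l columns of U and V
l = 2
X_l = U[:, :l] @ np.diag(d[:l]) @ Vt[:l, :]
```

With a rank 3 matrix and $l = 2$, the squared distance from `X` to `X_l` is $d_3^2$, the square of the one singular value that was dropped.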

In the event that all the columns of $X$ have been centered (each $x_j'\mathbf{1} = 0$),

$$\frac{1}{N}X'X$$

is the ($N$-divisor) sample covariance matrix (see footnote 1) for the $p$ input variables $x_1, x_2, \ldots, x_p$. The eigenvectors $v_1, v_2, \ldots, v_r$ (the columns of $V$) are called the "principal components directions" of $X$ in $\Re^p$ and the vectors $z_j$ might be called principal components of $X$ (in $\Re^N$) or vectors of principal component scores for the $N$ cases. The squared lengths of the principal components $z_j$ divided by $N$ are the ($N$-divisor) sample variances of entries of the $z_j$, and their values are

$$\frac{1}{N}z_j'z_j = \frac{1}{N}d_ju_j'u_jd_j = \frac{d_j^2}{N}$$

(And the principal components directions prescribe linear combinations of columns of $X$ with maximum sample variances subject to the relevant constraints.)

Footnote 1: Notice that when $X$ has standardized columns (i.e. each column of $X$, $x_j$, has $\langle \mathbf{1}, x_j\rangle = 0$ and $\langle x_j, x_j\rangle = N$), the matrix $\frac{1}{N}X'X$ is the sample correlation matrix for the $p$ input variables $x_1, x_2, \ldots, x_p$.

For future reference, note also that

$$XX' = UDV'VDU' = UD^2U'$$

and it’s then clear that the columns of $U$ are eigenvectors of $XX'$ and the squares of the diagonal elements of $D$ are the corresponding eigenvalues. $UD$ then is computable from $XX'$ and produces directly the $N \times r$ matrix of principal components of the data.

2.3 Ridge Regression, the Lasso, and Some Other Shrinking Methods

An alternative to seeking to find a suitable level of complexity in a linear prediction rule through subset selection and least squares fitting of a linear form to the selected variables, is to employ a shrinkage method based on a penalized version of least squares to choose a vector $\hat{\beta} \in \Re^p$ to employ in a linear prediction rule. Here we consider several such methods. The implementation of these methods is not equivariant to the scaling used to express the input variables $x_j$. So that we can talk about properties of the methods that are associated with a well-defined scaling, we assume here that the output variable has been centered (i.e. that $\langle Y, \mathbf{1}\rangle = 0$) and that the columns of $X$ have been standardized (and if originally $X$ had a constant column, it has been removed).

2.3.1 Ridge Regression

For a $\lambda > 0$ the ridge regression coefficient vector $\hat{\beta}_\lambda^{\rm ridge} \in \Re^p$ is

$$\hat{\beta}_\lambda^{\rm ridge} = \arg\min_{\beta \in \Re^p} \left\{ (Y - X\beta)'(Y - X\beta) + \lambda\beta'\beta \right\} \tag{8}$$

Here $\lambda$ is a penalty/complexity parameter that controls how much $\hat{\beta}^{\rm OLS}$ is shrunken towards 0. The unconstrained minimization problem expressed in (8) has an equivalent constrained minimization description as

$$\hat{\beta}_t^{\rm ridge} = \arg\min_{\beta \text{ with } \|\beta\|^2 \le t} (Y - X\beta)'(Y - X\beta) \tag{9}$$

for an appropriate $t > 0$. (Corresponding to $\lambda$ used in (8), is $t = \|\hat{\beta}_\lambda^{\rm ridge}\|^2$ used in (9). Conversely, corresponding to $t$ used in (9), one may use a value of $\lambda$ in (8) producing the same error sum of squares.)

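The unconstrained form (8) calls upon one to minimize

$$(Y - X\beta)'(Y - X\beta) + \lambda\beta'\beta$$

and some vector calculus leads directly to

$$\hat{\beta}_\lambda^{\rm ridge} = \left(X'X + \lambda I\right)^{-1}X'Y$$

So then, using the singular value decomposition of $X$ (with rank $= r$),

$$\begin{aligned}
\hat{Y}_\lambda^{\rm ridge} &= X\hat{\beta}_\lambda^{\rm ridge} \\
&= UDV'\left(VDU'UDV' + \lambda I\right)^{-1}VDU'Y \\
&= UD\left(V'\left(VDU'UDV' + \lambda I\right)V\right)^{-1}DU'Y \\
&= UD\left(D^2 + \lambda I\right)^{-1}DU'Y \\
&= \sum_{j=1}^{r}\left(\frac{d_j^2}{d_j^2 + \lambda}\right)\langle Y, u_j\rangle u_j
\end{aligned} \tag{10}$$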

Comparing to (7) and recognizing that

$$0 < \frac{d_{j+1}^2}{d_{j+1}^2 + \lambda} \le \frac{d_j^2}{d_j^2 + \lambda} < 1$$

we see that the coefficients of the orthonormal basis vectors $u_j$ employed to get $\hat{Y}_\lambda^{\rm ridge}$ are shrunken versions of the coefficients applied to get $\hat{Y}^{\rm OLS}$. The most severe shrinking is enforced in the directions of the smallest principal components of $X$ (the $u_j$ least important in making up low rank approximations to $X$).

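The function

$$\begin{aligned}
df(\lambda) &= \mathrm{tr}\left(X\left(X'X + \lambda I\right)^{-1}X'\right) \\
&= \mathrm{tr}\left(UD\left(D^2 + \lambda I\right)^{-1}DU'\right) \\
&= \mathrm{tr}\left(\sum_{j=1}^{r}\left(\frac{d_j^2}{d_j^2 + \lambda}\right)u_ju_j'\right) \\
&= \mathrm{tr}\left(\sum_{j=1}^{r}\left(\frac{d_j^2}{d_j^2 + \lambda}\right)u_j'u_j\right) \\
&= \sum_{j=1}^{r}\left(\frac{d_j^2}{d_j^2 + \lambda}\right)
\end{aligned}$$

is called the "effective degrees of freedom" associated with the ridge regression. In regard to this choice of nomenclature, note that if $\lambda = 0$ ridge regression is ordinary least squares and this is $r$, the usual degrees of freedom associated with projection onto $C(X)$, i.e. trace of the projection matrix onto this column space.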
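Both the singular value form (10) of the ridge fit and the effective degrees of freedom formula can be verified numerically. This is only a sketch, assuming NumPy, simulated standardized inputs, and an arbitrary illustrative penalty `lam = 3.0`.

```python
import numpy as np

# Simulated standardized inputs and centered output (illustrative assumptions)
rng = np.random.default_rng(2)
N, p, lam = 40, 5, 3.0
X = rng.normal(size=(N, p))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # <1, x_j> = 0 and <x_j, x_j> = N
Y = rng.normal(size=N)
Y = Y - Y.mean()

# Direct ridge solution (X'X + lam I)^{-1} X'Y
beta_direct = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ Y)

# SVD form (10): shrink the coefficient of each u_j by d_j^2 / (d_j^2 + lam)
U, d, Vt = np.linalg.svd(X, full_matrices=False)
shrink = d**2 / (d**2 + lam)
Y_hat_svd = U @ (shrink * (U.T @ Y))

# Effective degrees of freedom two ways: sum of shrink factors and tr(M)
df = shrink.sum()
M = X @ np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)
```

Setting `lam = 0.0` in this sketch gives `df` equal to the rank of `X`, matching the ordinary least squares case.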

As $\lambda \to \infty$, the effective degrees of freedom goes to 0 and (the centered) $\hat{Y}_\lambda^{\rm ridge}$ goes to 0 (corresponding to a constant predictor). Notice also (for future reference) that since $\hat{Y}_\lambda^{\rm ridge} = X\hat{\beta}_\lambda^{\rm ridge} = X\left(X'X + \lambda I\right)^{-1}X'Y = MY$ for $M = X\left(X'X + \lambda I\right)^{-1}X'$, if one assumes that

$$\mathrm{Cov}\,Y = \sigma^2 I$$

(conditioned on the $x_i$ in the training data, the outputs are uncorrelated and have constant variance $\sigma^2$) then

$$\text{effective degrees of freedom} = \mathrm{tr}(M) = \frac{1}{\sigma^2}\sum_{i=1}^{N}\mathrm{Cov}(\hat{y}_i, y_i) \tag{11}$$

This follows since $\hat{Y} = MY$ and $\mathrm{Cov}\,Y = \sigma^2 I$ imply that

$$\mathrm{Cov}\begin{pmatrix} \hat{Y} \\ Y \end{pmatrix} = \sigma^2\begin{pmatrix} M \\ I \end{pmatrix} I \begin{pmatrix} M' \mid I \end{pmatrix} = \sigma^2\begin{pmatrix} MM' & M \\ M' & I \end{pmatrix}$$

and the terms $\mathrm{Cov}(\hat{y}_i, y_i)$ are the diagonal elements of the upper right block of this covariance matrix. This suggests that $\mathrm{tr}(M)$ is a plausible general definition for effective degrees of freedom for any linear fitting method $\hat{Y} = MY$, and that more generally, the last form in (11) might be used in situations where $\hat{Y}$ is other than a linear form in $Y$. Further (reasonably enough) the last form is a measure of how strongly the outputs in the training set can be expected to be related to their predictions.

2.3.2 The Lasso, Etc.

The "lasso" (least absolute selection and shrinkage operator) and some other relatives of ridge regression are the result of generalizing the optimization criteria (8) and (9) by replacing $\beta'\beta = \|\beta\|^2 = \sum_{j=1}^{p}\beta_j^2$ with $\sum_{j=1}^{p}|\beta_j|^q$ for a $q > 0$. That produces

$$\hat{\beta}_\lambda^{q} = \arg\min_{\beta \in \Re^p}\left\{ (Y - X\beta)'(Y - X\beta) + \lambda\sum_{j=1}^{p}|\beta_j|^q \right\} \tag{12}$$

generalizing (8) and

$$\hat{\beta}_t^{q} = \arg\min_{\beta \text{ with } \sum_{j=1}^{p}|\beta_j|^q \le t} (Y - X\beta)'(Y - X\beta) \tag{13}$$

generalizing (9). The so called "lasso" is the $q = 1$ case of (12) or (13). That is, for $t > 0$

$$\hat{\beta}_t^{\rm lasso} = \arg\min_{\beta \text{ with } \sum_{j=1}^{p}|\beta_j| \le t} (Y - X\beta)'(Y - X\beta) \tag{14}$$

Because of the shape of the constraint region

$$\left\{ \beta \in \Re^p \;\Big|\; \sum_{j=1}^{p}|\beta_j| \le t \right\}$$

(in particular its sharp corners at coordinate axes) some coordinates of $\hat{\beta}_t^{\rm lasso}$ are often 0, and the lasso automatically provides simultaneous shrinking of $\hat{\beta}^{\rm OLS}$ toward 0 and rational subset selection. (The same is true of cases of (13) with $q < 1$.)

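In Table 3.4, HTF provide explicit formulas for fitted coefficients for the case of $X$ with orthonormal columns.

$$\begin{array}{ll}
\text{Method of Fitting} & \text{Fitted Coefficient for } x_j \\ \hline
\text{OLS} & \hat{\beta}_j^{\rm OLS} \\[4pt]
\text{Best Subset (of Size } M) & \hat{\beta}_j^{\rm OLS}\, I\left[\mathrm{rank}\left|\hat{\beta}_j^{\rm OLS}\right| \le M\right] \\[4pt]
\text{Ridge Regression} & \hat{\beta}_j^{\rm OLS}\left(\dfrac{1}{1 + \lambda}\right) \\[8pt]
\text{Lasso} & \mathrm{sign}\left(\hat{\beta}_j^{\rm OLS}\right)\left(\left|\hat{\beta}_j^{\rm OLS}\right| - \dfrac{\lambda}{2}\right)_+
\end{array}$$

Best subset regression provides a kind of "hard thresholding" of the least squares coefficients (setting all but the $M$ largest to 0), ridge regression provides shrinking of all coefficients toward 0, and the lasso provides a kind of "soft thresholding" of the coefficients.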
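For orthonormal columns the three rules in the table act coordinate by coordinate on the least squares coefficients, which makes them easy to compare side by side. The sketch below assumes NumPy; the function names and the coefficient vector `b` are made up for illustration, and the lasso rule uses the $\lambda/2$ threshold that goes with the un-halved sum of squares in (12).

```python
import numpy as np

def best_subset(b, M):
    """Hard thresholding: keep the M coefficients largest in absolute value."""
    keep = np.argsort(-np.abs(b))[:M]
    out = np.zeros_like(b)
    out[keep] = b[keep]
    return out

def ridge(b, lam):
    """Proportional shrinkage of every coefficient toward 0."""
    return b / (1.0 + lam)

def lasso(b, lam):
    """Soft thresholding: move each coefficient toward 0 by lam/2, stopping at 0."""
    return np.sign(b) * np.maximum(np.abs(b) - lam / 2.0, 0.0)

# Hypothetical OLS coefficients for an X with orthonormal columns
b = np.array([3.0, -0.4, 1.5, -2.0])

hard = best_subset(b, 2)      # only the two largest in magnitude survive
shrunk = ridge(b, 1.0)        # every coefficient is halved
soft = lasso(b, 1.0)          # each moves 0.5 toward 0; the small one becomes 0
```

The soft-thresholding rule can be checked against the criterion itself: with orthonormal columns, (12) with $q = 1$ separates into one-dimensional problems of minimizing $(\hat{\beta}_j^{\rm OLS} - \beta_j)^2 + \lambda|\beta_j|$.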

There are a number of modifications of the ridge/lasso idea. One is the "elastic net" idea. This is a compromise between the ridge and lasso methods. For an $\alpha \in (0, 1)$ and some $t > 0$, this is defined by

$$\hat{\beta}_{\alpha,t}^{\rm elastic\ net} = \arg\min_{\beta \text{ with } \sum_{j=1}^{p}\left((1 - \alpha)|\beta_j| + \alpha\beta_j^2\right) \le t} (Y - X\beta)'(Y - X\beta)$$

(The constraint is a compromise between the ridge and lasso constraints.) The constraint regions have "corners" like the lasso regions but are otherwise more rounded than the lasso regions. The equivalent unconstrained optimization specification of elastic net fitted coefficient vectors is for $\lambda_1 > 0$ and $\lambda_2 > 0$

$$\hat{\beta}_{\lambda_1,\lambda_2}^{\rm elastic\ net} = \arg\min_{\beta \in \Re^p}\left\{ (Y - X\beta)'(Y - X\beta) + \lambda_1\sum_{j=1}^{p}|\beta_j| + \lambda_2\sum_{j=1}^{p}\beta_j^2 \right\}$$

Several sources I’ve come across suggest that a modification of the elastic net idea, namely

$$(1 + \lambda_2)\,\hat{\beta}_{\lambda_1,\lambda_2}^{\rm elastic\ net}$$

performs better than the original version. This seems a bit muddled, however, in that when $X$ has orthonormal columns it’s possible to argue that

$$\hat{\beta}_{\lambda_1,\lambda_2,j}^{\rm elastic\ net} = \frac{1}{1 + \lambda_2}\,\mathrm{sign}\left(\hat{\beta}_j^{\rm OLS}\right)\left(\left|\hat{\beta}_j^{\rm OLS}\right| - \frac{\lambda_1}{2}\right)_+$$

so that modification of the elastic net coefficient vector in this way simply reduces it to a corresponding lasso coefficient vector. It’s probably the case, however, that this reduction is not one that holds in general.

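Breiman proposed a different shrinkage methodology he called the nonnegative garotte that attempts to find "optimal" re-weightings of the elements of $\hat{\beta}^{\rm OLS}$. That is, for $\lambda > 0$ Breiman considered the vector optimization problem defined by

$$c_\lambda = \arg\min_{c \in \Re^p \text{ with } c_j \ge 0,\; j = 1, \ldots, p}\left\{ \left(Y - X\,\mathrm{diag}(c)\,\hat{\beta}^{\rm OLS}\right)'\left(Y - X\,\mathrm{diag}(c)\,\hat{\beta}^{\rm OLS}\right) + \lambda\sum_{j=1}^{p}c_j \right\}$$

and the corresponding fitted coefficient vector

$$\hat{\beta}_\lambda^{\rm nng} = \mathrm{diag}(c_\lambda)\,\hat{\beta}^{\rm OLS} = \begin{pmatrix} c_{\lambda 1}\hat{\beta}_1^{\rm OLS} \\ \vdots \\ c_{\lambda p}\hat{\beta}_p^{\rm OLS} \end{pmatrix}$$

Again looking to the case where $X$ has orthonormal columns to get an explicit formula to compare to others, it turns out that in this context

$$\hat{\beta}_{\lambda,j}^{\rm nng} = \hat{\beta}_j^{\rm OLS}\left(1 - \frac{\lambda}{2\left(\hat{\beta}_j^{\rm OLS}\right)^2}\right)_+$$

and the nonnegative garotte is again a combined shrinkage and variables selection method.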

2.3.3 Least Angle Regression (LAR)

Another class of shrinkage methods is defined algorithmically, rather than directly algebraically, or in terms of solutions to optimization problems. This includes LAR (least angle regression). A description of the whole set of LAR regression parameters $\hat{\beta}^{\rm LAR}$ (for the case of $X$ with each column centered and with norm 1 and centered $Y$) follows. (This is some kind of amalgam of the descriptions of Izenman, CFZ, and the presentation in the 2003 paper Least Angle Regression by Efron, Hastie, Johnstone, and Tibshirani.)

Note that for $\hat{Y}$ a vector of predictions, the vector

$$\hat{c} \equiv X'\left(Y - \hat{Y}\right)$$

has elements that are proportional to the correlations between the columns of $X$ (the $x_j$) and the residual vector $R = Y - \hat{Y}$. We’ll let

$$\hat{C} = \max_j|\hat{c}_j| \quad\text{and}\quad s_j = \mathrm{sign}(\hat{c}_j)$$

Notice also that if $X_l$ is some matrix made up of $l$ linearly independent columns of $X$, then if $\beta$ is an $l$-vector and $W = X_l\beta$, $\beta$ can be recovered from $W$ as $\left(X_l'X_l\right)^{-1}X_l'W$. (This latter means that if we define a path for $\hat{Y}$ vectors in $C(X)$ and know for each $\hat{Y}$ which linearly independent set of columns of $X$ is used in its creation, we can recover the corresponding path $\hat{\beta}$ takes through $\Re^p$.)

1. Begin with $\hat{Y}_0 = 0$, $\hat{\beta}_0 = 0$, and $R_0 = Y - \hat{Y}_0 = Y$ and find

$$j_1 = \arg\max_j|\langle x_j, Y\rangle|$$

(the index of the predictor $x_j$ most strongly correlated with $y$) and add $j_1$ to an (initially empty) "active set" of indices, $\mathcal{A}$.

2. Move $\hat{Y}$ from $\hat{Y}_0$ in the direction of the projection of $Y$ onto the space spanned by $x_{j_1}$ (namely $\langle x_{j_1}, Y\rangle x_{j_1}$) until there is another index $j_2 \ne j_1$ with

$$|\hat{c}_{j_2}| = \left|\left\langle x_{j_2}, Y - \hat{Y}\right\rangle\right| = \left|\left\langle x_{j_1}, Y - \hat{Y}\right\rangle\right| = |\hat{c}_{j_1}|$$

At that point, call the current vector of predictions $\hat{Y}_1$ and the corresponding current parameter vector $\hat{\beta}_1$ and add index $j_2$ to the active set $\mathcal{A}$. As it turns out, for

$$\gamma_1 = \min_{j \ne j_1}{}^{+}\left\{ \frac{\hat{C}_0 - \hat{c}_{0j}}{1 - \langle x_j, x_{j_1}\rangle},\; \frac{\hat{C}_0 + \hat{c}_{0j}}{1 + \langle x_j, x_{j_1}\rangle} \right\}$$

(where the "+" indicates that only positive values are included in the minimization) $\hat{Y}_1 = \hat{Y}_0 + s_{j_1}\gamma_1x_{j_1} = s_{j_1}\gamma_1x_{j_1}$ and $\hat{\beta}_1$ is a vector of all 0’s except for $s_{j_1}\gamma_1$ in the $j_1$ position. Let $R_1 = Y - \hat{Y}_1$.

3. At stage $l$ with $\mathcal{A}$ of size $l$, $\hat{\beta}_{l-1}$ (with only $l - 1$ non-zero entries), $\hat{Y}_{l-1}$, $R_{l-1} = Y - \hat{Y}_{l-1}$, and $\hat{c}_{l-1} = X'R_{l-1}$ in hand, move from $\hat{Y}_{l-1}$ toward the projection of $Y$ onto the sub-space of $\Re^N$ spanned by $\{x_{j_1}, \ldots, x_{j_l}\}$. This is (as it turns out) in the direction of a unit vector $u_l$ "making equal angles less than 90 degrees with all $x_j$ with $j \in \mathcal{A}$" until there is an index $j_{l+1} \notin \mathcal{A}$ with

$$|\hat{c}_{l-1,j_{l+1}}| = |\hat{c}_{l-1,j_1}| \;\left( = |\hat{c}_{l-1,j_2}| = \cdots = |\hat{c}_{l-1,j_l}| \right)$$

At that point, with $\hat{Y}_l$ the current vector of predictions, let $\hat{\beta}_l$ (with only $l$ non-zero entries) be the corresponding coefficient vector, take $R_l = Y - \hat{Y}_l$ and $\hat{c}_l = X'R_l$. It can be argued that with

$$\gamma_l = \min_{j \notin \mathcal{A}}{}^{+}\left\{ \frac{\hat{C}_{l-1} - \hat{c}_{l-1,j}}{1 - \langle x_j, u_l\rangle},\; \frac{\hat{C}_{l-1} + \hat{c}_{l-1,j}}{1 + \langle x_j, u_l\rangle} \right\}$$

$\hat{Y}_l = \hat{Y}_{l-1} + s_{j_{l+1}}\gamma_lu_l$. Add the index $j_{l+1}$ to the set of active indices, $\mathcal{A}$, and repeat.

This continues until there are $r = \mathrm{rank}(X)$ indices in $\mathcal{A}$, and at that point $\hat{Y}$ moves from $\hat{Y}_{r-1}$ to $\hat{Y}^{\rm OLS}$ and $\hat{\beta}$ moves from $\hat{\beta}_{r-1}$ to $\hat{\beta}^{\rm OLS}$ (the version of an OLS coefficient vector with non-zero elements only in positions with indices in $\mathcal{A}$). This defines a piecewise linear path for $\hat{Y}$ (and therefore $\hat{\beta}$) that could, for example, be parameterized by $\|\hat{Y}\|$ or $\|Y - \hat{Y}\|$.

There are several issues raised by the description above. For one, the standard exposition of this method seems to be that the direction vector $u_l$ is prescribed by letting $W_l = (s_{j_1}x_{j_1}, \ldots, s_{j_l}x_{j_l})$ and taking

$$u_l = \frac{1}{\left\|W_l\left(W_l'W_l\right)^{-1}\mathbf{1}\right\|}\,W_l\left(W_l'W_l\right)^{-1}\mathbf{1}$$

It’s clear that $W_l'u_l = \left\|W_l\left(W_l'W_l\right)^{-1}\mathbf{1}\right\|^{-1}\mathbf{1}$, so that each of $s_{j_1}x_{j_1}, \ldots, s_{j_l}x_{j_l}$ has the same inner product with $u_l$. What is not immediately clear (but is argued in Efron, Hastie, Johnstone, and Tibshirani) is why one knows that this prescription agrees with a prescription of a unit vector giving the direction from $\hat{Y}_{l-1}$ to the projection of $Y$ onto the sub-space of $\Re^N$ spanned by $\{x_{j_1}, \ldots, x_{j_l}\}$, namely (for $P_l$ the projection matrix onto that subspace)

$$\frac{1}{\left\|P_lY - \hat{Y}_{l-1}\right\|}\left(P_lY - \hat{Y}_{l-1}\right)$$

Further, the arguments that establish that $|\hat{c}_{l-1,j_1}| = |\hat{c}_{l-1,j_2}| = \cdots = |\hat{c}_{l-1,j_l}|$ are not so obvious, nor are those that show that $\gamma_l$ has the form in 3. above. And finally, HTF actually state their LAR algorithm directly in terms of a path for $\hat{\beta}$, saying at stage $l$ one moves from $\hat{\beta}_{l-1}$ in the direction of a vector with all 0’s except at those indices in $\mathcal{A}$ where there are the joint least squares coefficients based on the predictor columns $\{x_{j_1}, \ldots, x_{j_l}\}$. The correspondence between the two points of view is probably correct, but is again not absolutely obvious.
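The equal-inner-product property of the direction vector $u_l = W_l(W_l'W_l)^{-1}\mathbf{1}/\|W_l(W_l'W_l)^{-1}\mathbf{1}\|$ is easy to confirm numerically. The following is a small sketch assuming NumPy; the three "active" columns and the sign vector `s` are simulated stand-ins, not values produced by an actual LAR run.

```python
import numpy as np

# Simulated stand-ins for l = 3 active predictors (illustrative assumptions)
rng = np.random.default_rng(3)
N, l = 30, 3
Xa = rng.normal(size=(N, l))
Xa -= Xa.mean(axis=0)
Xa /= np.linalg.norm(Xa, axis=0)       # centered columns with norm 1
s = np.array([1.0, -1.0, 1.0])         # hypothetical signs s_j of the correlations

W = Xa * s                             # W_l = (s_j1 x_j1, ..., s_jl x_jl)
ones = np.ones(l)
v = W @ np.linalg.solve(W.T @ W, ones) # W_l (W_l' W_l)^{-1} 1
u = v / np.linalg.norm(v)              # unit direction vector u_l

inner = W.T @ u                        # should be a constant vector, 1/||v|| in each entry
```

Because every entry of `inner` is the same positive number and the columns of `W` are unit vectors, `u` makes the same acute angle with each signed active predictor.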

At any rate, the LAR algorithm traces out a path in $\Re^p$ from 0 to $\hat{\beta}^{\rm OLS}$. One might think of the point one has reached along that path (perhaps parameterized by $\|\hat{Y}\|$) as being a complexity parameter governing how flexible a fit this algorithm has allowed, and be in the business of choosing a point along the path (by cross-validation or some other method) in exactly the same way one might, for example, choose a ridge parameter.

What is not at all obvious but true, is that a very slight modification of this LAR algorithm produces the whole set of lasso coefficients (14) as its path. One simply needs to enforce the requirement that if a non-zero coefficient hits 0, its index is removed from the active set and a new direction of movement is set based on one less input variable. At any point along the modified LAR path, one can compute $t = \sum_{j=1}^{p}|\hat{\beta}_j|$, and think of the modified-LAR path as parameterized by $t$. (While it’s not completely obvious, this turns out to be monotone non-decreasing in "progress along the path," or $\|\hat{Y}\|$.)

A useful graphical representation of the lasso path is one in which all coefficients $\hat{\beta}_{tj}^{\rm lasso}$ are plotted against $t$ on the same set of axes. Something similar is often done for the LAR coefficients (where the plotting is against some measure of progress along the path defined by the algorithm).

2.4 Two Methods With Derived Input Variables

Another possible approach to the problem of finding an appropriate level of complexity in a fitted linear prediction rule is to consider regression on some number $M < p$ of predictors derived from the original inputs $x_j$. Two such methods discussed by HTF are the methods of Principal Components Regression and Partial Least Squares. Here we continue to assume that the columns of $X$ have been standardized and $Y$ has been centered.

2.4.1 Principal Components Regression

The idea here is to replace the $p$ columns of predictors in $X$ with the first few ($M$) principal components of $X$ (from the singular value decomposition of $X$)

$$z_j = Xv_j = d_ju_j$$

Correspondingly, the vector of fitted predictions for the training data is

$$\hat{Y}^{\rm PCR} = \sum_{j=1}^{M}\frac{\langle Y, z_j\rangle}{\langle z_j, z_j\rangle}z_j = \sum_{j=1}^{M}\langle Y, u_j\rangle u_j \tag{15}$$

Comparing this to (7) and (10) we see that ridge regression shrinks the coefficients of the principal components $u_j$ according to their importance in making up $X$, while principal components regression "zeros out" those least important in making up $X$.

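Notice too, that $\hat{Y}^{\rm PCR}$ can be written in terms of the original inputs as

$$\begin{aligned}
\hat{Y}^{\rm PCR} &= \sum_{j=1}^{M}\langle Y, u_j\rangle\frac{1}{d_j}Xv_j \\
&= X\left(\sum_{j=1}^{M}\langle Y, u_j\rangle\frac{1}{d_j}v_j\right) \\
&= X\left(\sum_{j=1}^{M}\frac{1}{d_j^2}\langle Y, Xv_j\rangle v_j\right)
\end{aligned}$$

so that

$$\hat{\beta}^{\rm PCR} = \sum_{j=1}^{M}\frac{1}{d_j^2}\langle Y, Xv_j\rangle v_j \tag{16}$$

where (obviously) the sum above has only $M$ terms. $\hat{\beta}^{\rm OLS}$ is the $M = \mathrm{rank}(X)$ version of (16). As the $v_j$ are orthonormal, it is therefore clear that

$$\left\|\hat{\beta}^{\rm PCR}\right\| \le \left\|\hat{\beta}^{\rm OLS}\right\|$$

So principal components regression produces a $\hat{Y}^{\rm PCR}$ that is $\hat{Y}^{\rm OLS}$ shrunken toward 0 in $\Re^N$ and a $\hat{\beta}^{\rm PCR}$ that is $\hat{\beta}^{\rm OLS}$ shrunken toward 0 in $\Re^p$.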
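Formulas (15) and (16) can be checked against each other numerically. This is only a sketch, assuming NumPy and simulated standardized data; the helper name `beta_pcr` and the choice $M = 2$ are illustrative.

```python
import numpy as np

# Simulated standardized inputs and centered output (illustrative assumptions)
rng = np.random.default_rng(4)
N, p, M = 60, 4, 2
X = rng.normal(size=(N, p)) @ rng.normal(size=(p, p))
X = (X - X.mean(axis=0)) / X.std(axis=0)
Y = X @ np.array([1.0, -2.0, 0.5, 0.0]) + rng.normal(size=N)
Y = Y - Y.mean()

U, d, Vt = np.linalg.svd(X, full_matrices=False)
V = Vt.T

def beta_pcr(M):
    """Coefficient vector (16): sum over the first M principal component directions."""
    return sum((Y @ (X @ V[:, j])) / d[j]**2 * V[:, j] for j in range(M))

b_pcr = beta_pcr(M)
b_ols = beta_pcr(p)                           # the M = rank(X) version of (16)
Y_hat_pcr = U[:, :M] @ (U[:, :M].T @ Y)       # fitted values from (15)
```

Increasing `M` adds mutually orthogonal terms to the sum in (16), so the norm of `beta_pcr(M)` can only grow with `M`, up to the norm of the least squares vector.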

2.4.2 Partial Least Squares Regression

The shrinking methods mentioned thus far have taken no account of $Y$ in determining directions or amounts of shrinkage. Partial least squares specifically employs $Y$. In what follows, we continue to suppose that the columns of $X$ have been standardized and that $Y$ has been centered.

The logic of partial least squares is this. Suppose that

$$z_1 = \sum_{j=1}^{p}\langle Y, x_j\rangle x_j = XX'Y$$

It is possible to argue that for $w_1 = X'Y/\|X'Y\|$, $Xw_1 = z_1/\|X'Y\|$ is the linear combination of the columns of $X$ maximizing

$$|\langle Y, Xw\rangle|$$

(which is essentially the sample correlation between the variables $y$ and $x'w$) subject to the constraint that $\|w\| = 1$. (Note that upon replacing $|\langle Y, Xw\rangle|$ with $|\langle Xw, Xw\rangle|$ one has the kind of optimization problem solved by the first principal component of $X$.) This follows because

$$\langle Y, Xw\rangle^2 = w'X'YY'Xw$$

and the maximizer of this quadratic form subject to the constraint is the eigenvector of $X'YY'X$ corresponding to its single non-zero eigenvalue. It’s then easy to verify that $w_1$ is an eigenvector corresponding to the non-zero eigenvalue $Y'XX'Y$.

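Then define $X^1$ by orthogonalizing the columns of $X$ with respect to $z_1$. That is, define the $j$th column of $X^1$ by

$$x_j^1 = x_j - \frac{\langle x_j, z_1\rangle}{\langle z_1, z_1\rangle}z_1$$

and take

$$z_2 = \sum_{j=1}^{p}\left\langle Y, x_j^1\right\rangle x_j^1 = X^1\left(X^1\right)'Y$$

For $w_2 = \left(X^1\right)'Y/\left\|\left(X^1\right)'Y\right\|$, $X^1w_2 = z_2/\left\|\left(X^1\right)'Y\right\|$ is the linear combination of the columns of $X^1$ maximizing

$$\left|\left\langle Y, X^1w\right\rangle\right|$$

subject to the constraint that $\|w\| = 1$.

Then for $l > 1$, define $X^l$ by orthogonalizing the columns of $X^{l-1}$ with respect to $z_l$. That is, define the $j$th column of $X^l$ by

$$x_j^l = x_j^{l-1} - \frac{\left\langle x_j^{l-1}, z_l\right\rangle}{\langle z_l, z_l\rangle}z_l$$

and let

$$z_{l+1} = \sum_{j=1}^{p}\left\langle Y, x_j^l\right\rangle x_j^l = X^l\left(X^l\right)'Y$$

Partial least squares regression uses the first $M$ of these variables $z_j$ as input variables.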

The PLS predictors $z_j$ are orthogonal by construction. Using the first $M$ of these as regressors, one has the vector of fitted output values

$$\hat{Y}^{\rm PLS} = \sum_{j=1}^{M}\frac{\langle Y, z_j\rangle}{\langle z_j, z_j\rangle}z_j$$

Since the PLS predictors are (albeit recursively-computed data-dependent) linear combinations of columns of $X$, it is possible to find a $p$-vector $\hat{\beta}_M^{\rm PLS}$ (namely $\left(X'X\right)^{-1}X'\hat{Y}^{\rm PLS}$) such that

$$\hat{Y}^{\rm PLS} = X\hat{\beta}_M^{\rm PLS}$$

and thus produce the corresponding linear prediction rule

$$\hat{f}(x) = x'\hat{\beta}_M^{\rm PLS} \tag{17}$$

It seems like in (17), the number of components, $M$, should function as a complexity parameter. But then again there is the following. When the $x_j$ are orthonormal, it’s fairly easy to see that $z_1$ is a multiple of $\hat{Y}^{\rm OLS}$, $\hat{\beta}_1^{\rm PLS} = \hat{\beta}_2^{\rm PLS} = \cdots = \hat{\beta}_p^{\rm PLS} = \hat{\beta}^{\rm OLS}$, and all steps of partial least squares after the first are simply providing a basis for the orthogonal complement of the 1-dimensional subspace of $C(X)$ generated by $\hat{Y}^{\rm OLS}$ (without improving fitting at all). That is, here changing $M$ doesn’t change flexibility of the fit at all. (Presumably, when the $x_j$ are nearly orthogonal, something similar happens.)
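The recursive construction of the PLS predictors can be written in a few lines. What follows is a minimal sketch assuming NumPy, a full-rank simulated $X$, and hypothetical helper names `pls_components` and `pls_fit`; it follows the recursion $z_{l+1} = X^l(X^l)'Y$ described above rather than any particular library implementation.

```python
import numpy as np

def pls_components(X, Y, M):
    """First M PLS predictors via z_{l+1} = X^l (X^l)' Y with deflation of X^l."""
    Xl = X.copy()
    Z = []
    for _ in range(M):
        z = Xl @ (Xl.T @ Y)
        Z.append(z)
        # Orthogonalize every column of the current X^l with respect to z
        Xl = Xl - np.outer(z, z @ Xl) / (z @ z)
    return np.column_stack(Z)

def pls_fit(X, Y, M):
    """Fitted values from regressing Y on the orthogonal z_j, and the matching p-vector."""
    Z = pls_components(X, Y, M)
    Y_hat = sum((Y @ Z[:, j]) / (Z[:, j] @ Z[:, j]) * Z[:, j] for j in range(M))
    beta = np.linalg.solve(X.T @ X, X.T @ Y_hat)   # (X'X)^{-1} X' Y_hat
    return Z, Y_hat, beta

# Simulated standardized inputs and centered output (illustrative assumptions)
rng = np.random.default_rng(6)
N, p = 60, 4
X = rng.normal(size=(N, p)) @ rng.normal(size=(p, p))
X = (X - X.mean(axis=0)) / X.std(axis=0)
Y = X @ np.array([1.0, 0.0, -1.0, 2.0]) + rng.normal(size=N)
Y = Y - Y.mean()

Z, Y_hat, beta = pls_fit(X, Y, 3)
```

With orthonormal input columns the first component alone already reproduces the least squares fit, which is the point made about $M$ above.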

3 Linear Prediction Using Basis Functions

A way of moving beyond prediction rules that are functions of a linear form in $x$, i.e. depend upon $x$ through $x'\hat{\beta}$, is to consider some set of (basis) functions $\{h_m\}$ and predictors of the form or depending upon the form

$$\hat{f}(x) = \sum_{m=1}^{p}\hat{\beta}_mh_m(x) = h(x)'\hat{\beta} \tag{18}$$

(for $h(x) = (h_1(x), \ldots, h_p(x))'$). We next consider some flexible methods employing this idea. Notice that fitting of form (18) can be done using any of the methods just discussed based on the $N \times p$ matrix of inputs

$$X = \left(h_j(x_i)\right) = \begin{pmatrix} h(x_1)' \\ h(x_2)' \\ \vdots \\ h(x_N)' \end{pmatrix}$$

($i$ indexing rows and $j$ indexing columns).

3.1 p = 1 Wavelet Bases

Consider first the case of a one-dimensional input variable $x$, and in fact here suppose that $x$ takes values in $[0, 1]$. One might consider a set of basis functions for use in the form (18) that is big enough and rich enough to approximate essentially any function on $[0, 1]$. In particular, various orthonormal bases for the square integrable functions on this interval (the space of functions $L_2[0, 1]$) come to mind. One might, for example, consider using some number of functions from the Fourier basis for $L_2[0, 1]$

$$\left\{\sqrt{2}\sin(j2\pi x)\right\}_{j=1}^{\infty} \cup \left\{\sqrt{2}\cos(j2\pi x)\right\}_{j=1}^{\infty} \cup \{1\}$$

For example, using $M \le N/2$ sin-cos pairs and the constant, one could consider fitting the forms

$$f(x) = \beta_0 + \sum_{m=1}^{M}\beta_{1m}\sin(m2\pi x) + \sum_{m=1}^{M}\beta_{2m}\cos(m2\pi x) \tag{19}$$

If one has training $x_i$ on an appropriate regular grid, the use of form (19) leads to orthogonality in the $N \times (2M + 1)$ matrix of values of the basis functions $X$ and simple/fast calculations.

Unless, however, one believes that $E[y \mid x = u]$ is periodic in $u$, form (19) has its serious limitations. In particular, unless $M$ is very very large, a trigonometric series like (19) will typically provide a poor approximation for a function that varies at different scales on different parts of $[0, 1]$, and in any case, the coefficients necessary to provide such localized variation at different scales have no obvious simple interpretations/connections to the irregular pattern of variation being described. So-called "wavelet bases" are much more useful in providing parsimonious and interpretable approximations to such functions.

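The simplest wavelet basis for $L_2[0, 1]$ is the Haar basis. It may be described as follows. Define

$$\varphi(x) = I[0 < x \le 1] \quad \text{(the so-called "father" wavelet)}$$

and

$$\psi(x) = \varphi(2x) - \varphi(2x - 1) = I\left[0 < x \le \tfrac{1}{2}\right] - I\left[\tfrac{1}{2} < x \le 1\right] \quad \text{(the so-called "mother" wavelet)}$$

Linear combinations of these functions provide all elements of $L_2[0, 1]$ that are constant on $\left(0, \tfrac{1}{2}\right]$ and on $\left(\tfrac{1}{2}, 1\right]$. Write

$$\Psi_0 = \{\varphi, \psi\}$$

Next, define

$$\psi_{1,0}(x) = \sqrt{2}\left(I\left[0 < x \le \tfrac{1}{4}\right] - I\left[\tfrac{1}{4} < x \le \tfrac{1}{2}\right]\right) \quad\text{and}$$

$$\psi_{1,1}(x) = \sqrt{2}\left(I\left[\tfrac{1}{2} < x \le \tfrac{3}{4}\right] - I\left[\tfrac{3}{4} < x \le 1\right]\right)$$

and let

$$\Psi_1 = \{\psi_{1,0}, \psi_{1,1}\}$$

Using the set of functions $\Psi_0 \cup \Psi_1$ one can build (as linear combinations) all elements of $L_2[0, 1]$ that are constant on $\left(0, \tfrac{1}{4}\right]$ and on $\left(\tfrac{1}{4}, \tfrac{1}{2}\right]$ and on $\left(\tfrac{1}{2}, \tfrac{3}{4}\right]$ and on $\left(\tfrac{3}{4}, 1\right]$.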

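The story then goes on as one should expect. One defines

$$\begin{aligned}
\psi_{2,0}(x) &= 2\left(I\left[0 < x \le \tfrac{1}{8}\right] - I\left[\tfrac{1}{8} < x \le \tfrac{1}{4}\right]\right) \quad\text{and} \\
\psi_{2,1}(x) &= 2\left(I\left[\tfrac{1}{4} < x \le \tfrac{3}{8}\right] - I\left[\tfrac{3}{8} < x \le \tfrac{1}{2}\right]\right) \quad\text{and} \\
\psi_{2,2}(x) &= 2\left(I\left[\tfrac{1}{2} < x \le \tfrac{5}{8}\right] - I\left[\tfrac{5}{8} < x \le \tfrac{3}{4}\right]\right) \quad\text{and} \\
\psi_{2,3}(x) &= 2\left(I\left[\tfrac{3}{4} < x \le \tfrac{7}{8}\right] - I\left[\tfrac{7}{8} < x \le 1\right]\right)
\end{aligned}$$

and lets

$$\Psi_2 = \{\psi_{2,0}, \psi_{2,1}, \psi_{2,2}, \psi_{2,3}\}$$

In general,

$$\psi_{m,j}(x) = \sqrt{2^m}\,\psi\left(2^m\left(x - \frac{j}{2^m}\right)\right) \quad\text{for } j = 0, 1, \ldots, 2^m - 1$$

and

$$\Psi_m = \{\psi_{m,0}, \psi_{m,1}, \ldots, \psi_{m,2^m-1}\}$$

The Haar basis of $L_2[0, 1]$ is then

$$\bigcup_{m=0}^{\infty}\Psi_m$$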

Then, one might entertain use of the Haar basis functions through order $M$ in constructing a form

$$f(x) = \beta_0 + \sum_{m=0}^{M}\sum_{j=0}^{2^m-1}\beta_{mj}\psi_{m,j}(x) \tag{20}$$

(with the understanding that $\psi_{0,0} = \psi$), a form that in general allows building of functions that are constant on consecutive intervals of length $1/2^{M+1}$. This form can be fit by any of the various regression methods (especially involving thresholding/selection, as a typically very large number, $2^{M+1}$, of basis functions is employed in (20)). (See HTF Section 5.9.2 for some discussion of using the lasso with wavelets.) Large absolute values of coefficients $\beta_{mj}$ encode, in the value of the index $m$, the scales at which important variation occurs and, in the value $j/2^m$, the location in $[0, 1]$ where that variation occurs. Where (perhaps after model selection/thresholding) only a relatively few fitted coefficients are important, the corresponding scales and locations provide an informative and compact summary of the fit. A nice visual summary of the results of the fit can be made by plotting for each $m$ (plots arranged vertically, from $M$ through 0, aligned and to the same scale) spikes of length $|\beta_{mj}|$ pointed in the direction of $\mathrm{sign}(\beta_{mj})$ along an "x" axis at positions (say) $(j/2^m) + 1/2^{m+1}$.

In special situations where $N = 2^K$ and

$$x_i = i\left(\frac{1}{2^K}\right) \quad\text{for } i = 1, 2, \ldots, 2^K$$

and one uses the Haar basis functions through order $K - 1$, the fitting of (20) is computationally clean, since the vectors

$$\begin{pmatrix} \psi_{m,j}(x_1) \\ \vdots \\ \psi_{m,j}(x_N) \end{pmatrix}$$

(together with the column vector of 1’s) are orthogonal. (So, upon proper normalization, i.e. division by $\sqrt{N} = 2^{K/2}$, they form an orthonormal basis for $\Re^N$.)

The Haar wavelet basis functions are easy to describe and understand. But they are discontinuous, and from some points of view that is unappealing. Other sets of wavelet basis functions have been developed that are smooth. The construction begins with a smooth "mother wavelet" in place of the step function used above. HTF make some discussion of the smooth "symmlet" wavelet basis at the end of their Chapter 5.
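The Haar construction, and the orthogonality claim for the regular grid $x_i = i/2^K$, can be checked with a few lines of code. This is a sketch assuming NumPy, with $K = 3$ chosen only to keep the matrix small; the function names `phi`, `psi`, and `psi_mj` are illustrative labels for the father wavelet, the mother wavelet, and the scaled and shifted versions.

```python
import numpy as np

def phi(x):
    """Father wavelet: indicator of 0 < x <= 1."""
    return ((0 < x) & (x <= 1)).astype(float)

def psi(x):
    """Mother wavelet: +1 on (0, 1/2], -1 on (1/2, 1]."""
    return phi(2 * x) - phi(2 * x - 1)

def psi_mj(x, m, j):
    """Scaled and shifted Haar function sqrt(2^m) psi(2^m (x - j / 2^m))."""
    return np.sqrt(2.0**m) * psi(2.0**m * (x - j / 2.0**m))

K = 3                                   # illustrative choice, so N = 8
N = 2**K
x = np.arange(1, N + 1) / N             # grid x_i = i / 2^K

# Column of 1's plus all Haar functions through order K - 1
cols = [np.ones(N)] + [psi_mj(x, m, j) for m in range(K) for j in range(2**m)]
H = np.column_stack(cols) / np.sqrt(N)  # normalize by sqrt(N) = 2^(K/2)
```

Since `H` is then an $N \times N$ orthogonal matrix, least squares coefficients for (20) on this grid are just inner products of $Y$ with the columns.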

3.2 p = 1 Piecewise Polynomials and Regression Splines

Continue consideration of the case of a one-dimensional input variable $x$, and now $K$ "knots"

$$\xi_1 < \xi_2 < \cdots < \xi_K$$

and forms for $f(x)$ that are

1. polynomials of order $M$ (or less) on all intervals $(\xi_{j-1}, \xi_j)$, and (potentially, at least)

2. have derivatives of some specified order at the knots, and (potentially, at least)

3. are linear outside $(\xi_1, \xi_K)$.

If we let $I_1(x) = I[x < \xi_1]$, for $j = 2, \ldots, K$ let $I_j(x) = I[\xi_{j-1} \le x < \xi_j]$, and define $I_{K+1}(x) = I[\xi_K \le x]$, one can have 1. in the list above using basis functions

$$\begin{array}{c}
I_1(x), I_2(x), \ldots, I_{K+1}(x) \\
xI_1(x), xI_2(x), \ldots, xI_{K+1}(x) \\
x^2I_1(x), x^2I_2(x), \ldots, x^2I_{K+1}(x) \\
\vdots \\
x^MI_1(x), x^MI_2(x), \ldots, x^MI_{K+1}(x)
\end{array}$$

Further, one can enforce continuity and differentiability (at the knots) conditions on a form $f(x) = \sum_{m=1}^{(M+1)(K+1)}\beta_mh_m(x)$ by enforcing some linear relations between appropriate ones of the $\beta_m$. While this is conceptually simple, it is messy. It is much cleaner to simply begin with a set of basis functions that are tailored to have the desired continuity/differentiability properties.

A set of $M + 1 + K$ basis functions for piecewise polynomials of degree $M$ with derivatives of order $M - 1$ at all knots is easily seen to be

$$1, x, x^2, \ldots, x^M, (x - \xi_1)_+^M, (x - \xi_2)_+^M, \ldots, (x - \xi_K)_+^M$$

(since the value of and first $M - 1$ derivatives of $(x - \xi_j)_+^M$ at $\xi_j$ are all 0). The choice of $M = 3$ is fairly standard.
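The truncated power basis translates directly into a design matrix. The following is a minimal sketch assuming NumPy; the function name `truncated_power_basis`, the three knots, and the evaluation grid are illustrative assumptions.

```python
import numpy as np

def truncated_power_basis(x, knots, M=3):
    """Columns 1, x, ..., x^M followed by (x - xi_k)_+^M for each knot xi_k."""
    x = np.asarray(x, dtype=float)
    poly = [x**k for k in range(M + 1)]
    trunc = [np.maximum(x - xi, 0.0)**M for xi in knots]
    return np.column_stack(poly + trunc)

# Hypothetical knots and evaluation points on [0, 1]
knots = [0.25, 0.5, 0.75]
x = np.linspace(0, 1, 200)
H = truncated_power_basis(x, knots)      # 200 x (M + 1 + K) = 200 x 7

# Any spline with these knots is H @ coef, so least squares recovers coef exactly
coef = np.array([1.0, -2.0, 0.5, 3.0, -4.0, 2.0, 1.0])
y = H @ coef
coef_hat = np.linalg.lstsq(H, y, rcond=None)[0]
```

This basis is convenient for exposition but can be poorly conditioned with many knots, which is one motivation for the B-spline alternative mentioned in HTF.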

Since extrapolation with polynomials typically gets worse with order, it is common to impose a restriction that outside $(\xi_1, \xi_K)$ a form $f(x)$ be linear. For the case of $M = 3$ this can be accomplished by beginning with basis functions $1, x, (x - \xi_1)_+^3, (x - \xi_2)_+^3, \ldots, (x - \xi_K)_+^3$ and imposing restrictions necessary to force 2nd and 3rd derivatives to the right of $\xi_K$ to be 0. Notice that (considering $x > \xi_K$)

$$\frac{d^2}{dx^2}\left(\beta_0 + \beta_1x + \sum_{j=1}^{K}\alpha_j(x - \xi_j)_+^3\right) = 6\sum_{j=1}^{K}\alpha_j(x - \xi_j) \tag{21}$$

and

$$\frac{d^3}{dx^3}\left(\beta_0 + \beta_1x + \sum_{j=1}^{K}\alpha_j(x - \xi_j)_+^3\right) = 6\sum_{j=1}^{K}\alpha_j \tag{22}$$

So, linearity for large $x$ requires (from (22)) that $\sum_{j=1}^{K}\alpha_j = 0$. Further, substituting this into (21) means that linearity also requires that $\sum_{j=1}^{K}\alpha_j\xi_j = 0$. Using the first of these to conclude that $\alpha_K = -\sum_{j=1}^{K-1}\alpha_j$ and substituting into the second yields

$$\alpha_{K-1} = -\sum_{j=1}^{K-2}\alpha_j\left(\frac{\xi_K - \xi_j}{\xi_K - \xi_{K-1}}\right)$$

and then

$$\alpha_K = \sum_{j=1}^{K-2}\alpha_j\left(\frac{\xi_K - \xi_j}{\xi_K - \xi_{K-1}}\right) - \sum_{j=1}^{K-2}\alpha_j$$

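These then suggest the set of basis functions consisting of $1$, $x$ and for $j = 1, 2, \ldots, K - 2$

$$\begin{aligned}
&(x - \xi_j)_+^3 - \left(\frac{\xi_K - \xi_j}{\xi_K - \xi_{K-1}}\right)(x - \xi_{K-1})_+^3 + \left(\frac{\xi_K - \xi_j}{\xi_K - \xi_{K-1}}\right)(x - \xi_K)_+^3 - (x - \xi_K)_+^3 \\
&\quad = (x - \xi_j)_+^3 - \left(\frac{\xi_K - \xi_j}{\xi_K - \xi_{K-1}}\right)(x - \xi_{K-1})_+^3 + \left(\frac{\xi_{K-1} - \xi_j}{\xi_K - \xi_{K-1}}\right)(x - \xi_K)_+^3
\end{aligned}$$

(These are essentially the basis functions that HTF call their $N_j$.) Their use produces so-called "natural" (linear outside $(\xi_1, \xi_K)$) cubic regression splines.

There are other (harder to motivate, but in the end more pleasing and computationally more attractive) sets of basis functions for natural polynomial splines. See the B-spline material at the end of HTF Chapter 5.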
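The linearity constraints can be checked numerically by building these $K$ basis functions and confirming that each has zero second differences beyond the last knot. This is a sketch under stated assumptions: NumPy, four made-up knots, and a hypothetical function name `natural_cubic_basis`.

```python
import numpy as np

def natural_cubic_basis(x, knots):
    """Columns 1, x, and for j = 1..K-2 the constrained truncated-cubic combinations."""
    x = np.asarray(x, dtype=float)
    xi = np.asarray(knots, dtype=float)
    K = len(xi)

    def cube(t):
        return np.maximum(x - t, 0.0)**3

    cols = [np.ones_like(x), x]
    for j in range(K - 2):
        # Ratio (xi_K - xi_j) / (xi_K - xi_{K-1}) from the constraint solution
        r = (xi[K - 1] - xi[j]) / (xi[K - 1] - xi[K - 2])
        cols.append(cube(xi[j]) - r * cube(xi[K - 2]) + (r - 1.0) * cube(xi[K - 1]))
    return np.column_stack(cols)

knots = [0.2, 0.4, 0.6, 0.8]                 # hypothetical knots
x_inside = np.linspace(0.0, 1.0, 101)
x_right = np.linspace(1.0, 2.0, 51)          # equally spaced points beyond the last knot
x_left = np.linspace(-1.0, 0.1, 51)          # equally spaced points before the first knot

H = natural_cubic_basis(x_inside, knots)     # 101 x K
right_second_diff = np.diff(natural_cubic_basis(x_right, knots), n=2, axis=0)
left_second_diff = np.diff(natural_cubic_basis(x_left, knots), n=2, axis=0)
```

On an equally spaced grid a function is linear exactly when its second differences vanish, so both difference arrays should be zero up to rounding.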

3.3 Basis Functions and p-Dimensional Inputs

3.3.1 Multi-Dimensional Regression Splines (Tensor Bases)

If $p = 2$ and the vector of inputs, $x$, takes values in $\Re^2$, one might proceed as follows. If $\{h_{11}, h_{12}, \ldots, h_{1M_1}\}$ is a set of spline basis functions based on $x_1$ and $\{h_{21}, h_{22}, \ldots, h_{2M_2}\}$ is a set of spline basis functions based on $x_2$ one might consider the set of $M_1M_2$ basis functions based on $x$ defined by

$$g_{jk}(x) = h_{1j}(x_1)\,h_{2k}(x_2)$$

and corresponding forms for regression splines

$$f(x) = \sum_{j,k}\beta_{jk}\,g_{jk}(x)$$

The biggest problem with this potential method is the explosion in the size of a tensor product basis as $p$ increases. For example, using $K$ knots for cubic regression splines in each of $p$ dimensions produces $(4 + K)^p$ basis functions for the $p$-dimensional problem. Some kind of forward selection algorithm or shrinking of coefficients will be needed to produce any kind of workable fits with such large numbers of basis functions.

3.3.2 MARS (Multivariate Adaptive Regression Splines)

This is a high-dimensional regression-like methodology based on the use of "hockey-stick functions" and their products as data-dependent "basis functions." That is, with input space $\Re^p$ we consider data-dependent basis functions built on the $Np$ pairs of functions

$$h_{ij1}(x) = (x_j - x_{ij})_+ \quad\text{and}\quad h_{ij2}(x) = (x_{ij} - x_j)_+ \tag{23}$$

($x_{ij}$ is the $j$th coordinate of the $i$th input training vector and both $h_{ij1}(x)$ and $h_{ij2}(x)$ depend on $x$ only through the $j$th coordinate of $x$). MARS builds predictors sequentially, making use of these "reflected pairs" roughly as follows.

1. Begin with

$$\hat{f}_0(x) = \hat{\beta}_0$$

2. Identify a pair (23) so that

$$\beta_0 + \beta_{11}h_{ij1}(x) + \beta_{12}h_{ij2}(x)$$

has the best SSE possible. Call the selected functions

$$g_{11} = h_{ij1} \quad\text{and}\quad g_{12} = h_{ij2}$$

and set

$$\hat{f}_1(x) = \hat{\beta}_0 + \hat{\beta}_{11}g_{11}(x) + \hat{\beta}_{12}g_{12}(x)$$

3. At stage $l$ of the predictor-building process, with predictor

$$\hat{f}_{l-1}(x) = \hat{\beta}_0 + \sum_{m=1}^{l-1}\left(\hat{\beta}_{m1}g_{m1}(x) + \hat{\beta}_{m2}g_{m2}(x)\right)$$

in hand, consider for addition to the model, pairs of basis functions that are either of the basic form (23) or of the form

$$h_{ij1}(x)\,g_{m1}(x) \quad\text{and}\quad h_{ij2}(x)\,g_{m1}(x)$$

or of the form

$$h_{ij1}(x)\,g_{m2}(x) \quad\text{and}\quad h_{ij2}(x)\,g_{m2}(x)$$

for some $m < l$, subject to the constraints that no $x_j$ appears in any candidate product more than once (maintaining the piece-wise linearity of sections of the predictor). Additionally, one may decide to put an upper limit on the order of the products considered for inclusion in the predictor. The best candidate pair in terms of reducing SSE gets called, say, $g_{l1}$ and $g_{l2}$ and one sets

$$\hat{f}_l(x) = \hat{\beta}_0 + \sum_{m=1}^{l}\left(\hat{\beta}_{m1}g_{m1}(x) + \hat{\beta}_{m2}g_{m2}(x)\right)$$

One might pick the tuning parameter $l$ by cross-validation, but the standard implementation of MARS apparently uses instead a kind of generalized cross validation error

$$GCV(l) = \frac{\sum_{i=1}^{N}\left(y_i - \hat{f}_l(x_i)\right)^2}{\left(1 - \frac{M(l)}{N}\right)^2}$$

where $M(l)$ is some kind of degrees of freedom figure. One must take account of both the fitting of the coefficients $\beta$ in this and the fact that knots (values $x_{ij}$) have been chosen. The HTF recommendation is to use

$$M(l) = 2l + (2 \text{ or } 3)\cdot(\text{the number of different knots chosen})$$

(where presumably the knot count refers to different $x_{ij}$ appearing in at least one $g_{m1}(x)$ or $g_{m2}(x)$).
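Step 2 of the MARS description and the GCV criterion can be illustrated with a brute-force search over all $Np$ reflected pairs. This is a rough sketch assuming NumPy and simulated data; the function names are hypothetical, only the first forward step is shown, and real MARS implementations use much faster updating than refitting least squares for every candidate.

```python
import numpy as np

def reflected_pair(X, i, j):
    """The pair (23) with knot x_ij: (x_j - x_ij)_+ and (x_ij - x_j)_+."""
    t = X[i, j]
    return np.maximum(X[:, j] - t, 0.0), np.maximum(t - X[:, j], 0.0)

def sse_of_fit(H, y):
    """Error sum of squares from least squares regression of y on the columns of H."""
    coef, *_ = np.linalg.lstsq(H, y, rcond=None)
    r = y - H @ coef
    return r @ r

def best_first_pair(X, y):
    """Exhaustive search for the reflected pair giving the smallest SSE (step 2)."""
    N, p = X.shape
    best = None
    for i in range(N):
        for j in range(p):
            h1, h2 = reflected_pair(X, i, j)
            sse = sse_of_fit(np.column_stack([np.ones(N), h1, h2]), y)
            if best is None or sse < best[0]:
                best = (sse, i, j)
    return best

def gcv(sse, M_l, N):
    """GCV(l) = SSE / (1 - M(l)/N)^2 for a supplied degrees of freedom figure M(l)."""
    return sse / (1.0 - M_l / N)**2

# Simulated data with a kink at x_1 = 0.5, placed at training case 0 (illustrative)
rng = np.random.default_rng(5)
X = rng.uniform(size=(30, 2))
X[0, 0] = 0.5
y = 2.0 * np.maximum(X[:, 0] - 0.5, 0.0) - np.maximum(0.5 - X[:, 0], 0.0)

sse, i, j = best_first_pair(X, y)
score = gcv(sse, M_l=2 * 1 + 3 * 1, N=len(y))   # l = 1, one knot, using the "3" option
```

Here the search should select the knot at training case 0 in the first coordinate, since that pair reproduces the simulated response exactly.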


4 Smoothing Splines, Regularization, RKHS’s, and Bayes Prediction

4.1 p = 1 Smoothing Splines

A way of avoiding the direct selection of knots for a regression spline is to instead, for a smoothing parameter $\lambda > 0$, consider the problem of finding (for $a \le \min\{x_i\}$ and $\max\{x_i\} \le b$)

$$\hat{f}_\lambda = \arg\min_{\text{functions } h \text{ with 2 derivatives}}\left(\sum_{i=1}^{N}\left(y_i - h(x_i)\right)^2 + \lambda\int_a^b\left(h''(x)\right)^2dx\right)$$

Amazingly enough, this optimization problem has a solution that can be fairly simply described. $\hat{f}_\lambda$ is a natural cubic spline with knots at the distinct values $x_i$ in the training set. That is, for a set of (now data-dependent, as the knots come from the training data) basis functions for such splines

$$h_1, h_2, \ldots, h_N$$

(here we’re tacitly assuming that the $N$ values of the input variable in the training set are all different)

$$\hat{f}_\lambda(x) = \sum_{j=1}^{N}\hat{\beta}_{\lambda j}h_j(x)$$

where the $\hat{\beta}_{\lambda j}$ are yet to be identified.

So consider the function

$$g(x) = \sum_{j=1}^{N}\beta_jh_j(x) \tag{24}$$