Confidence Set Estimation from Rounded/Digital Normal Data
Steve Vardeman, C-S (Johnson) Lee


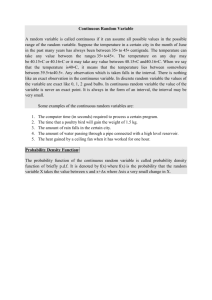

Vardeman and Lee (JQT 2001, Comm Stat 2002, (2003)); Iliana Vaca (M.S. work in progress)

Rounding/Digital Nature of Data
• Hardly a new problem … see e.g. Sheppard, W. (1898). "On the Calculation of the Most Probable Values of Frequency Constants for Data Arranged According to Equidistant Divisions of a Scale." Proceedings of the London Mathematical Society 29, pp. 353-380
• Metrologists recognize this as a source of error in physical measurement, but don’t have good ways of accounting for it
• Elementary statistical methods are implicitly based on the assumption that this “isn’t a problem”

But … Continuous Models
• Are for “real-number”/“infinitely-many-decimal-place” observations
• Even if they DO adequately describe an underlying physical phenomenon, they MAY OR MAY NOT adequately describe what can be observed

Observation “To the Nearest ∆”
• Observations y can be coded as integers via $y' = (y - y_0)/\Delta$, so that 1.2, 1.2, 1.2, 1.2, 1.3, 1.3, 1.3, 1.3, 1.3, 1.3 could become (with $\Delta = .1$ and $y_0 = 1.0$) 2, 2, 2, 2, 3, 3, 3, 3, 3, 3
• Suppose that a continuously distributed X produces a rounded/digital version Y … the discrete distribution of Y may or may not look anything like the continuous distribution of X

Two ∆ = 1 Normal Cases
• $\mu = 4.25$ and $\sigma = 1.0$ ($\mu_Y = 4.25$ and $\sigma_Y = 1.0809$)
• $\mu = 4.25$ and $\sigma = .25$ ($\mu_Y = 4.1573$ and $\sigma_Y = .3678$)

Key is the Size of σ/∆
• If $\sigma \ge .5\Delta$ then $|\mu - \mu_Y| < .005\Delta$
• If $\sigma \approx 0$ then $|\mu - \mu_Y|$ can be nearly $.5\Delta$
• Provided $\sigma > .15\Delta$, $\sigma_Y > \sigma$.
  For such σ, the relative excess $(\sigma_Y - \sigma)/\sigma$
  – decreases in σ
  – is less than .141 for $\sigma \ge .5\Delta$
• For small σ, $\sigma_Y$ can be many times σ or a small fraction of it

Naïve Use of Continuous Data Inference Formulas …
• $\bar y$ estimates $\mu_Y$, not µ, and in cases where $\mu_Y \ne \mu$ the interval $\bar y \pm t\, s_y/\sqrt{n}$ “zeros in” on $\mu_Y$ (and thus for large samples has actual confidence level near 0)
• $s_y$ estimates $\sigma_Y$, not σ, and unless σ is large it doesn’t have anything like a (root of a) $\chi^2$ distribution

Inference Engine: the “Right” Likelihood
• (Rounded data) one-sample normal likelihood
  $L(\mu,\sigma) = \prod_{i=1}^{n} P_{\mu,\sigma}(y_i - .5\Delta < X < y_i + .5\Delta) = \prod_{i=1}^{n}\left[\Phi\left(\frac{y_i + .5\Delta - \mu}{\sigma}\right) - \Phi\left(\frac{y_i - .5\Delta - \mu}{\sigma}\right)\right]$
• Log-likelihood $l(\mu,\sigma) = \log L(\mu,\sigma)$
• Profile log-likelihoods $l^{*}(\mu) = \sup_{\sigma>0} l(\mu,\sigma)$ and $l^{**}(\sigma) = \sup_{\mu} l(\mu,\sigma)$

Standard Simple Asymptotics
Let $M = \sup_{(\mu,\sigma)} l(\mu,\sigma)$ (the max (sup) log-likelihood). Then, as $n \to \infty$,
  $2(M - l(\mu,\sigma)) \xrightarrow{\mathcal{L}} \chi^2_2$, $2(M - l^{*}(\mu)) \xrightarrow{\mathcal{L}} \chi^2_1$, $2(M - l^{**}(\sigma)) \xrightarrow{\mathcal{L}} \chi^2_1$

Cartoon for Asymptotically OK Confidence Sets
• Region $\{(\mu,\sigma) : 2(M - l(\mu,\sigma)) < \chi^2_2(\gamma)\}$ for (µ, σ) shaded; interval $\{\mu : 2(M - l^{*}(\mu)) < \chi^2_1(\gamma)\}$ for µ (similar interval for σ)
• Corresponding cartoon for profile log-likelihoods and estimation of µ or σ

Practical Problems With the Asymptotically-OK Sets
• There is under-coverage
  – for µ when σ is large
  – for σ when σ is either large or (moderately) small
  – for (µ, σ) when σ is large
• Computation of the sets is not always absolutely obvious (the log-likelihood is not always so nice-looking)

Our Plan (in retrospect, anyway)
• Understand the small-sample nature of the log-likelihood and profile log-likelihoods
• Somehow “fix” the under-coverage problems by finding suitable small-sample replacements for $\chi^2_1(\gamma)$ and $\chi^2_2(\gamma)$ … together with …?????
  – Central idea for the replacements: for large σ, the distribution of $2(M - l(\mu,\sigma))$ is perhaps essentially that of the corresponding random variable based on the exact x values

Nature of the Likelihood
• This depends on the sample range R, and only when $R \ge 2\Delta$ is it “tame” (nice and mound-shaped)
  – An R = 0 case: n = 10 observations all 1.2, ∆ = .1; (base 10) log-likelihood
  – An R = ∆ case: original example with ∆ = .1; (base 10) version of $l(1.25 + (t + .25)\sigma, \sigma)$
  – An R = 2∆ case: ∆ = .1 with one data value 1.1, seven data values 1.2, and two 1.3; (base 10) log-likelihood

Estimation of µ
• Here the “exact data” version of $2(M - l^{*}(\mu))$ is
  $n \ln\left(1 + \frac{1}{n-1}\left(\frac{\bar x - \mu}{s_x/\sqrt{n}}\right)^2\right)$
  Since $(\bar x - \mu)/(s_x/\sqrt{n}) \sim t_{n-1}$ and this expression increases in its absolute value, this suggests replacing $\chi^2_1(\gamma)$ with
  $c_n(\gamma) = n \ln\left(1 + \frac{t^2_{n-1}\left(\frac{1+\gamma}{2}\right)}{n-1}\right)$
• Simulations show this works splendidly
  – The intervals are conservative (for small σ) to exact (for large σ)
  – Coverage probabilities are asymptotically correct, since $2(M - l^{*}(\mu)) \xrightarrow{\mathcal{L}} \chi^2_1$ and $c_n(\gamma) \to \chi^2_1(\gamma)$ as $n \to \infty$
  – For large R these limits are essentially $\bar y \pm t\, s_y/\sqrt{n}$
• For usual confidence levels and moderate sample sizes, R = 0 intervals are $(\bar y - \Delta/2,\ \bar y + \Delta/2)$
• Profile log-likelihoods for R = 0 and R = ∆ (cartoons; panels “R = 0” and “R = ∆”)

Estimation of σ
• Trying first to cure the large-σ under-coverage problems: the exact data version of $2(M - l^{**}(\sigma))$ is
  $n \ln\left(\frac{n\sigma^2}{(n-1)s_x^2}\right) + \frac{(n-1)s_x^2}{\sigma^2} - n$
  which (with $W = (n-1)s_x^2/\sigma^2$) has the distribution of
  $U_n = n \ln\left(\frac{n}{W}\right) + W - n$ for $W \sim \chi^2_{n-1}$
  and suggests replacing $\chi^2_1(\gamma)$ with $d_n(\gamma)$ = the γ-quantile of the distribution of $U_n$
• This cures the large-σ problem (makes the method exact for large σ), but does not completely cure the small-σ under-coverage
• Think: small σ will often produce R = 0 or R = ∆
• We find that the R = 0 and R = ∆ log-likelihoods are such that, using $\chi^2_1(\gamma)$ or $d_n(\gamma)$, the (naturally one-sided) intervals have (upper) endpoints
  $\sigma_0 < \sigma_{\Delta,1} < \sigma_{\Delta,2} < \cdots < \sigma_{\Delta,\lfloor n/2 \rfloor}$
  where $\sigma_0$ = the “R = 0” endpoint and $\sigma_{\Delta,j}$ = the “R = ∆ and smaller count = j” endpoint
• ????Replace these values with (minimally) larger ones????
• An obvious necessary condition for correct-to-conservative coverage probabilities is that
  (*) $P^{0}_{\mu,\sigma} + P^{\Delta}_{\mu,\sigma} \le 1 - \gamma$ for all µ, σ
  where $P^{\eta}_{\mu,\sigma} = P_{\mu,\sigma}(R = \eta \text{ and the interval fails to cover } \sigma)$
• Do brute-force computations for the ∆ = 1 case of replacements for $\sigma_0$ and $\sigma_{1,j}$ that will guarantee (*)
• Find (for ∆ = 1) $\sigma_0^{*}$ = the minimum σ with $\max_{\mu} P_{\mu,\sigma}(R = 0) \le 1 - \gamma$
• Find (for ∆ = 1) $\sigma_{1,j}^{*}$ = the minimum σ with $\max_{\mu}\left[P_{\mu,\sigma}(R = 0) + \sum_{l=1}^{j} P_{\mu,\sigma}(R = 1 \text{ and smaller count} = l)\right] \le 1 - \gamma$
• Replace $\sigma_0$ with $\Delta\sigma_0^{*}$ and $\sigma_{\Delta,j}$ with $\Delta\sigma_{1,j}^{*}$
• Simulations indicate that with $d_n(\gamma)$ and the R = 0 and R = ∆ “corrections”
  – The intervals are rarely liberal, and when they are, they are only slightly so
  – For large σ (where naïve use of the “usual” formulas makes sense) these intervals are somewhat shorter on average than the equal-tail intervals (no real surprise … equal-tail intervals aren’t optimized for average length)

Example
• For the example data set (with 4 values 1.2 and 6 values 1.3), 95% intervals are
  – (1.226, 1.294) for µ (not unlike naïve use of a t interval in this particular case)
  – (0, .0851) for σ (not unlike naïve use of a one-sided $\chi^2$ interval in this case; $s_y = .0518$)

(Joint) Estimation of (µ, σ) (in progress)
• Could, for example, be used to create simultaneous confidence limits for all values of the cdf
• The “exact data” version of $2(M - l(\mu,\sigma))$ is
  $n \ln\left(\frac{n\sigma^2}{(n-1)s_x^2}\right) + \frac{(n-1)s_x^2}{\sigma^2} + \left(\frac{\bar x - \mu}{\sigma/\sqrt{n}}\right)^2 - n$
  and the exact data distribution of this is that of
  $Q_n = n \ln\left(\frac{n}{W}\right) + W - n + V$ for independent $W \sim \chi^2_{n-1}$ and $V \sim \chi^2_1$
• Numerical computation of the cdf, and thus quantiles, of such a $Q_n$ is easy enough
• We expect that with $q_n(\gamma)$ = the γ-quantile of the distribution of $Q_n$, the prescription
  $\left\{(\mu,\sigma) \mid M - l(\mu,\sigma) < \tfrac{1}{2} q_n(\gamma)\right\}$
  will give reliable confidence sets
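The moments quoted for the “Two ∆ = 1 Normal Cases” come from summing over the rounding cells of Y. A minimal Python sketch, assuming Y is X rounded to the nearest multiple of ∆ and ignoring cells more than 12 standard deviations from µ (our truncation choice); the function names are hypothetical, not the authors' code:

```python
import math


def norm_cdf(z):
    """Standard normal cdf Phi(z), computed with math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def rounded_normal_moments(mu, sigma, delta=1.0, width=12.0):
    """Mean and standard deviation of Y = X rounded to the nearest multiple of delta,
    for X ~ N(mu, sigma^2).

    Y = k*delta with probability
    Phi((k*delta + delta/2 - mu)/sigma) - Phi((k*delta - delta/2 - mu)/sigma);
    cells more than `width` standard deviations from mu are ignored.
    """
    k_lo = math.floor((mu - width * sigma) / delta) - 1
    k_hi = math.ceil((mu + width * sigma) / delta) + 1
    mean = 0.0
    second = 0.0  # accumulates E[Y^2]
    for k in range(k_lo, k_hi + 1):
        y = k * delta
        p = (norm_cdf((y + 0.5 * delta - mu) / sigma)
             - norm_cdf((y - 0.5 * delta - mu) / sigma))
        mean += p * y
        second += p * y * y
    return mean, math.sqrt(second - mean * mean)


if __name__ == "__main__":
    m, s = rounded_normal_moments(4.25, 0.25)
    print(f"mu_Y = {m:.4f}, sigma_Y = {s:.4f}")
```

For µ = 4.25, σ = .25 this prints µY = 4.1573 and σY = 0.3678. As σ/∆ grows, the computed variance approaches Sheppard's σ² + ∆²/12.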
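The rounded-data likelihood, the profile $l^{*}(\mu)$ and the $c_n(\gamma)$ cutoff from “Estimation of µ” can be combined in a brute-force grid computation. This is a sketch under stated assumptions, not the authors' computation: the σ grid (1e-4∆ to 100∆), the µ search window around $\bar y$, the floor on vanishing cell probabilities and all helper names are ours, and $t_9(.975) \approx 2.262$ is hard-coded to avoid a SciPy dependency.

```python
import math
from collections import Counter


def norm_cdf(z):
    """Standard normal cdf Phi(z) via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def rounded_loglik(mu, sigma, counts, delta):
    """Rounded-data normal log-likelihood l(mu, sigma).

    `counts` maps each recorded value y to its frequency; each y contributes
    log[Phi((y + delta/2 - mu)/sigma) - Phi((y - delta/2 - mu)/sigma)].
    """
    total = 0.0
    for y, count in counts.items():
        p = (norm_cdf((y + 0.5 * delta - mu) / sigma)
             - norm_cdf((y - 0.5 * delta - mu) / sigma))
        total += count * math.log(max(p, 1e-300))  # floor avoids log(0) in far tails
    return total


def c_n(n, t_quantile):
    """c_n(gamma) = n ln(1 + t^2/(n - 1)), given t = t_{n-1}((1 + gamma)/2)."""
    return n * math.log(1.0 + t_quantile ** 2 / (n - 1))


def mu_interval(data, delta, cutoff, n_mu=401):
    """Grid approximation to {mu : 2 (M - l*(mu)) < cutoff}.

    l*(mu) is the max over a log-spaced sigma grid; M is the largest profile
    value found on the mu grid.
    """
    counts = Counter(data)
    n = len(data)
    ybar = sum(data) / n
    sd = math.sqrt(sum((y - ybar) ** 2 for y in data) / (n - 1))
    sigmas = [delta * 10 ** (k / 40.0) for k in range(-160, 81)]
    half = delta + 3.0 * sd  # assumed search window around ybar
    mus = [ybar - half + 2.0 * half * i / (n_mu - 1) for i in range(n_mu)]
    profile = [max(rounded_loglik(m, s, counts, delta) for s in sigmas) for m in mus]
    M = max(profile)
    inside = [m for m, l_star in zip(mus, profile) if 2.0 * (M - l_star) < cutoff]
    return min(inside), max(inside)


if __name__ == "__main__":
    data = [1.2] * 4 + [1.3] * 6        # the example data set, delta = .1
    cut = c_n(len(data), 2.262157)      # t_9(.975), hard-coded
    lo, hi = mu_interval(data, 0.1, cut)
    print(f"c_10(.95) = {cut:.3f}; approximate 95% interval for mu: ({lo:.3f}, {hi:.3f})")
```

For R = ∆ data the supremum M is approached only as σ → 0, which is why the σ grid reaches far below ∆. With all ten values equal to 1.2 (R = 0), the grid interval comes out close to (1.15, 1.25), i.e. $(\bar y - \Delta/2, \bar y + \Delta/2)$.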
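Because $U_n$ and $Q_n$ are simple functions of independent χ² variables, $d_n(\gamma)$ and $q_n(\gamma)$ can be approximated by simulation. The slides mention numerical cdf computation; this sketch swaps in plain Monte Carlo using only Python's standard library, drawing $\chi^2_k$ as Gamma(shape k/2, scale 2) with `random.gammavariate`. The replication count, seed and function name are arbitrary choices of ours.

```python
import math
import random


def simulate_quantiles(n, gamma, reps=100_000, seed=1):
    """Monte Carlo estimates of d_n(gamma) and q_n(gamma).

    U_n = n ln(n / W) + W - n with W ~ chi^2_{n-1}, and Q_n = U_n + V with
    V ~ chi^2_1 independent of W.  A chi^2_k draw is Gamma(shape=k/2, scale=2).
    """
    rng = random.Random(seed)
    u_draws, q_draws = [], []
    for _ in range(reps):
        w = rng.gammavariate((n - 1) / 2.0, 2.0)
        v = rng.gammavariate(0.5, 2.0)
        u = n * math.log(n / w) + w - n
        u_draws.append(u)
        q_draws.append(u + v)
    u_draws.sort()
    q_draws.sort()
    idx = min(math.ceil(gamma * reps) - 1, reps - 1)  # empirical gamma-quantile
    return u_draws[idx], q_draws[idx]


if __name__ == "__main__":
    d, q = simulate_quantiles(10, 0.95)
    print(f"n = 10: d_n(.95) ~ {d:.3f}, q_n(.95) ~ {q:.3f}")
```

For large n the two estimates should settle near $\chi^2_1(\gamma)$ and $\chi^2_2(\gamma)$ (about 3.84 and 5.99 at γ = .95), in line with the “Standard Simple Asymptotics” slide.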
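The first brute-force computation for σ, $\sigma_0^{*}$ with ∆ = 1, needs only $P_{\mu,\sigma}(R = 0) = \sum_k \left[\Phi\left(\frac{k + .5 - \mu}{\sigma}\right) - \Phi\left(\frac{k - .5 - \mu}{\sigma}\right)\right]^n$. A sketch, not the authors' code: it assumes $\max_\mu P_{\mu,\sigma}(R = 0)$ decreases in σ so that bisection applies, and it searches µ over a grid on [0, .5] (periodicity and symmetry). $\sigma_{1,j}^{*}$ would need the analogous R = 1 probabilities, which are not shown.

```python
import math


def norm_cdf(z):
    """Standard normal cdf Phi(z), computed with math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_range_zero(mu, sigma, n, width=12.0):
    """P_{mu,sigma}(R = 0) when Delta = 1: the chance that all n rounded values
    fall in the same integer cell, summed over the cells k."""
    k_lo = math.floor(mu - width * sigma) - 1
    k_hi = math.ceil(mu + width * sigma) + 1
    total = 0.0
    for k in range(k_lo, k_hi + 1):
        p = norm_cdf((k + 0.5 - mu) / sigma) - norm_cdf((k - 0.5 - mu) / sigma)
        total += p ** n
    return total


def max_prob_range_zero(sigma, n, n_grid=51):
    """max over mu of P(R = 0); periodicity and symmetry let mu run over [0, .5]."""
    return max(prob_range_zero(0.5 * i / (n_grid - 1), sigma, n) for i in range(n_grid))


def sigma0_star(n, gamma, lo=1e-3, hi=5.0, iters=60):
    """Bisection for the smallest sigma with max_mu P(R = 0) <= 1 - gamma.

    Assumes (without proof here) that max_mu P(R = 0) decreases in sigma.
    """
    target = 1.0 - gamma
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if max_prob_range_zero(mid, n) <= target:
            hi = mid  # mid already satisfies the condition
        else:
            lo = mid
    return hi


if __name__ == "__main__":
    print(f"sigma_0* (n = 10, gamma = .95) ~ {sigma0_star(10, 0.95):.4f}")
```

For n = 10 and γ = .95 the maximum sits at a cell center, so $\sigma_0^{*}$ essentially solves $(2\Phi(.5/\sigma) - 1)^{10} = .05$, which gives about .443.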