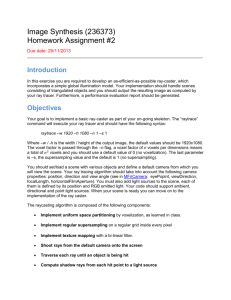

Interactive Ray Tracing of VRML Scenes in... by C. Submitted to the Department of Electrical Engineering ...
