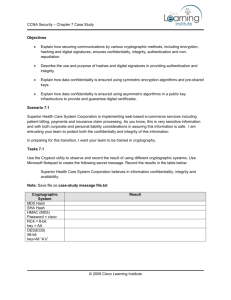

Group Sharing and Random Access ... Cryptographic Storage File Systems E.
