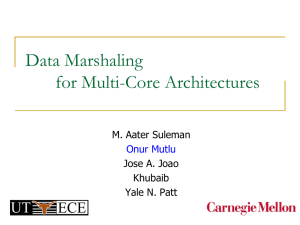

18-742 Spring 2011 Parallel Computer Architecture Lecture 10: Asymmetric Multi-Core III

advertisement

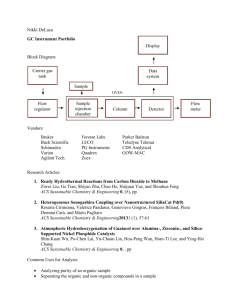

Prof. Onur Mutlu
Carnegie Mellon University

Project Proposals
- We’ve read your proposals
- Get feedback from us on your progress

Reviews
- Due Today (Feb 9) before class
- Due Friday (Feb 11) midnight
  - Rajwar and Goodman, “Speculative Lock Elision: Enabling Highly Concurrent Multithreaded Execution,” MICRO 2001.
  - Herlihy and Moss, “Transactional Memory: Architectural Support for Lock-Free Data Structures,” ISCA 1993.
- Due Tuesday (Feb 15) midnight
  - Patel, “Processor-Memory Interconnections for Multiprocessors,” ISCA 1979.
  - Dally, “Route packets, not wires: on-chip interconnection networks,” DAC 2001.
  - Das et al., “Aergia: Exploiting Packet Latency Slack in On-Chip Networks,” ISCA 2010.

Last Lecture
- Discussion on hardware support for debugging parallel programs
- Asymmetric multi-core for energy efficiency
- Accelerated critical sections (ACS)

Today
- Speculative Lock Elision
- Data Marshaling
- Dynamic Core Combining (Core Fusion)

Alternatives to ACS
- Transactional memory (Herlihy+)
  - ACS does not require code modification
- Transactional Lock Removal (Rajwar+), Speculative Synchronization (Martinez+), Speculative Lock Elision (Rajwar)
  - Hide critical section latency by increasing concurrency (ACS instead reduces the latency of each critical section)
  - Overlap execution of critical sections with no data conflicts (ACS accelerates ALL critical sections)
  - Do not improve locality of shared data (ACS improves locality of shared data)
- ACS outperforms TLR (Rajwar+) by 18% (details in ASPLOS 2009 paper)

Speculative Lock Elision
- Many programs use locks for synchronization
- Many locks are not necessary
  - Stores occur infrequently during execution
  - Threads update different parts of the data structure
- Idea: Speculatively assume the lock is not necessary and execute the critical section without acquiring the lock
  - Check for conflicts within the critical section
  - Roll back if the assumption is incorrect
- Rajwar and Goodman, “Speculative Lock Elision: Enabling Highly Concurrent Multithreaded Execution,” MICRO 2001.

Dynamically Unnecessary Synchronization

Speculative Lock Elision: Issues
- Either the entire critical section is committed or none of it is
- How to detect the lock
- How to keep track of dependencies and conflicts in a critical section
  - How to buffer speculative state
  - How to check whether “atomicity” is violated
    - Read set and write set
    - Dependence violations with another thread
- How to support commit and rollback

Maintaining Atomicity
- If atomicity is maintained, all locks can be removed
- Conditions for atomicity:
  - Data read is not modified by another thread until the critical section is complete
  - Data written is not accessed by another thread until the critical section is complete
- If we know the beginning and end of a critical section, we can monitor the memory addresses read or written by the critical section and check for conflicts
  - Using the underlying coherence mechanism

SLE Implementation
- Checkpoint register state before entering SLE mode
- In SLE mode:
  - Store: Buffer the update in the write buffer (do not make it visible to other processors); request exclusive access
  - Store/Load: Set the “access” bit for the block in the cache
  - Trigger misspeculation on some coherence actions
    - If an external invalidation arrives for a block with the “access” bit set
    - If an exclusive access request arrives for a block with the “access” bit set
  - If there is not enough buffering space, trigger misspeculation
- If the end of the critical section is reached without misspeculation, commit all writes (the commit needs to appear instantaneous)

ACS vs. SLE
- ACS advantages over SLE
  + Speeds up each individual critical section
  + Keeps shared data and locks in a single cache (improves shared data and lock locality)
  + Does not incur re-execution overhead since it does not speculatively execute critical sections in parallel
- ACS disadvantages over SLE
  - Needs transfer of private data and control to a large core (reduces private data locality and incurs overhead)
  - Executes non-conflicting critical sections serially
  - Large core can reduce parallel throughput (assuming no SMT)

ACS Summary
- Critical sections reduce performance and limit scalability
- Accelerate critical sections by executing them on a powerful core
- ACS reduces average execution time by:
  - 34% compared to an equal-area SCMP
  - 23% compared to an equal-area ACMP
- ACS improves scalability of 7 of the 12 workloads
- Generalizing the idea: Accelerate “critical paths” or “critical stages” by executing them on a powerful core

Staged Execution Model (I)
- Goal: speed up a program by dividing it up into pieces
- Idea
  - Split program code into segments
  - Run each segment on the core best suited to run it
  - Each core is assigned a work queue, storing segments to be run
- Benefits
  - Accelerates segments/critical paths using specialized/heterogeneous cores
  - Exploits inter-segment parallelism
  - Improves locality of within-segment data
- Examples
  - Accelerated critical sections [Suleman et al., ASPLOS 2009]
  - Producer-consumer pipeline parallelism
  - Task parallelism (Cilk, Intel TBB, Apple Grand Central Dispatch)
  - Special-purpose cores and functional units

Staged Execution Model (II)
- Example program: LOAD X, STORE Y, STORE Y, LOAD Y, …, STORE Z, LOAD Z, …

Staged Execution Model (III)
- Split code into segments
  - Segment S0: LOAD X, STORE Y, STORE Y
  - Segment S1: LOAD Y, …, STORE Z
  - Segment S2: LOAD Z, …

Staged Execution Model (IV)
- Figure: Core 0, Core 1, and Core 2 each have a work queue holding instances of S0, S1, and S2, respectively

Staged Execution Model: Segment Spawning
- Figure: S0 (LOAD X, STORE Y, STORE Y) runs on Core 0, S1 (LOAD Y, …, STORE Z) on Core 1, and S2 (LOAD Z, …) on Core 2; each segment spawns the next one on the next core

Staged Execution Model: Two Examples
- Accelerated Critical Sections [Suleman et al., ASPLOS 2009]
  - Idea: Ship critical sections to a large core in an asymmetric CMP
    - Segment 0: Non-critical section
    - Segment 1: Critical section
  - Benefit: Faster execution of critical section, reduced serialization, improved lock and shared data locality
- Producer-Consumer Pipeline Parallelism
  - Idea: Split a loop iteration into multiple “pipeline stages” where each stage consumes data produced by the previous stage; each stage runs on a different core
    - Segment N: Stage N
  - Benefit: Stage-level parallelism, better locality → faster execution

Problem: Locality of Inter-segment Data
- Figure: S0 on Core 0 (LOAD X, STORE Y, STORE Y) produces Y, which must be transferred to Core 1, so S1’s LOAD Y is a cache miss; S1 (…, STORE Z) produces Z, which must be transferred to Core 2, so S2’s LOAD Z is a cache miss

Problem: Locality of Inter-segment Data
- Accelerated Critical Sections [Suleman et al., ASPLOS 2009]
  - Idea: Ship critical sections to a large core in an ACMP
  - Problem: A critical section incurs a cache miss when it touches data produced in the non-critical section (i.e., thread-private data)
- Producer-Consumer Pipeline Parallelism
  - Idea: Split a loop iteration into multiple “pipeline stages”; each stage runs on a different core
  - Problem: A stage incurs a cache miss when it touches data produced by the previous stage
- Performance of Staged Execution is limited by inter-segment cache misses

Terminology
- Inter-segment data: A cache block written by one segment and consumed by the next segment
- Generator instruction: The last instruction in a segment to write to an inter-segment cache block
- Figure: the same S0/S1/S2 example; Y is transferred from Core 0 to Core 1, and Z from Core 1 to Core 2
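The SLE mechanism from the “SLE Implementation” and “Maintaining Atomicity” slides can be sketched in software. This is a toy, single-threaded Python model under stated assumptions, not the hardware design from the paper: memory is a dict of block addresses, the per-block “access” bits are modeled as read and write sets, the 4-entry write buffer is a hypothetical size, and other threads' coherence requests are passed in as a list. The names `SpeculativeCriticalSection`, `Misspeculation`, and `run_elided` are illustrative.

```python
class Misspeculation(Exception):
    """Raised when the elided critical section must be rolled back."""


class SpeculativeCriticalSection:
    """Toy SLE state for one thread: register checkpoint, write buffer,
    and read/write sets standing in for per-block "access" bits."""

    def __init__(self, memory, registers, write_buffer_capacity=4):
        self.memory = memory                   # committed, globally visible state
        self.registers = registers
        self.checkpoint = dict(registers)      # register checkpoint taken on entry
        self.write_buffer = {}                 # speculative stores, hidden from others
        self.read_set = set()
        self.write_set = set()
        self.capacity = write_buffer_capacity  # hypothetical buffer size

    def load(self, addr):
        self.read_set.add(addr)                # set the "access" bit
        return self.write_buffer.get(addr, self.memory.get(addr, 0))

    def store(self, addr, value):
        if addr not in self.write_buffer and len(self.write_buffer) >= self.capacity:
            raise Misspeculation("not enough buffering space")
        self.write_set.add(addr)               # set the "access" bit
        self.write_buffer[addr] = value

    def observe_remote(self, addr, is_write):
        """Check another thread's request against the atomicity conditions:
        data read must not be modified, and data written must not be
        accessed, until the critical section completes."""
        if is_write and addr in (self.read_set | self.write_set):
            raise Misspeculation(f"remote write to block {addr:#x}")
        if not is_write and addr in self.write_set:
            raise Misspeculation(f"remote read of block {addr:#x}")

    def commit(self):
        self.memory.update(self.write_buffer)  # all buffered writes become visible together
        self.write_buffer.clear()

    def rollback(self):
        self.registers.clear()
        self.registers.update(self.checkpoint)  # restore the register checkpoint
        self.write_buffer.clear()               # discard speculative stores
        self.read_set.clear()
        self.write_set.clear()


def run_elided(memory, registers, body, remote_requests=()):
    """Run `body` without taking the lock. `remote_requests` lists
    (addr, is_write) requests from other threads that arrive before the
    critical section ends. Returns True on commit, False on rollback."""
    cs = SpeculativeCriticalSection(memory, registers)
    try:
        body(cs)
        for addr, is_write in remote_requests:
            cs.observe_remote(addr, is_write)
        cs.commit()
        return True
    except Misspeculation:
        cs.rollback()
        return False  # a real system would retry, or acquire the lock this time
```

With no conflicting requests the buffered stores commit together. A remote write to a block the critical section has read (for example, a request `(0x10, True)` for a body that loads block 0x10) discards the buffered stores and restores the register checkpoint instead.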
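The Staged Execution model above (one work queue per core, each segment spawning the next one on the next core) can be modeled with Python threads standing in for cores, using producer-consumer pipeline parallelism as the example. This is a minimal sketch under assumptions: `s0`, `s1`, and `s2` are hypothetical segment bodies, each core runs exactly one segment type, and Python threads only illustrate the structure; they are not meant to show a real speedup.

```python
import queue
import threading

STOP = object()  # sentinel that shuts a core down


def core_loop(work_queue, segment, next_queue, results):
    """One 'core': repeatedly takes a segment instance from its own work
    queue, runs it, and spawns the next segment on the next core's queue."""
    while True:
        item = work_queue.get()
        if item is STOP:
            if next_queue is not None:
                next_queue.put(STOP)  # propagate shutdown downstream
            return
        out = segment(item)
        if next_queue is not None:
            next_queue.put(out)       # spawn the next segment
        else:
            results.append(out)       # last segment: record the result


# Hypothetical segment bodies mirroring S0 -> S1 -> S2.
def s0(x):
    return x * 2   # "LOAD X ... STORE Y": produces Y


def s1(y):
    return y + 1   # "LOAD Y ... STORE Z": consumes Y, produces Z


def s2(z):
    return z * z   # "LOAD Z ...": consumes Z


def run_staged(inputs, segments=(s0, s1, s2)):
    queues = [queue.Queue() for _ in segments]  # one work queue per core
    results = []
    threads = []
    for i, segment in enumerate(segments):
        next_q = queues[i + 1] if i + 1 < len(queues) else None
        t = threading.Thread(target=core_loop, args=(queues[i], segment, next_q, results))
        t.start()
        threads.append(t)
    for x in inputs:
        queues[0].put(x)
    queues[0].put(STOP)
    for t in threads:
        t.join()
    return results
```

Because each work queue is FIFO and has a single consumer, `run_staged([1, 2, 3])` returns `[9, 25, 49]`: every input passes through S0, S1, and S2 in order, and the value each stage consumes is exactly the inter-segment data the previous stage produced.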
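The definitions of inter-segment data and generator instruction can be turned into a small profiling pass over an execution trace. The sketch below assumes a hypothetical trace format (a list of segments in execution order, each a list of `(pc, op, block)` tuples) and, following the definition, only considers data consumed by the immediately following segment. `find_generators` is an illustrative name, not the profiler from the Data Marshaling paper.

```python
def find_generators(segment_traces):
    """Profile a trace of consecutive segment executions.

    segment_traces: list of segments in execution order; each segment is a
    list of (pc, op, block) tuples with op "LOAD" or "STORE".
    Returns (generator_pcs, inter_segment_blocks).
    """
    generator_pcs = set()
    inter_segment_blocks = set()
    prev_last_writer = {}  # block -> pc of its last store in the previous segment
    for segment in segment_traces:
        last_writer = {}
        for pc, op, block in segment:
            if op == "STORE":
                last_writer[block] = pc  # later stores overwrite earlier ones
            elif op == "LOAD" and block not in last_writer and block in prev_last_writer:
                # Written by the previous segment and consumed here:
                # inter-segment data, whose last writer is a generator.
                inter_segment_blocks.add(block)
                generator_pcs.add(prev_last_writer[block])
        prev_last_writer = last_writer
    return generator_pcs, inter_segment_blocks


trace = [
    [(0x0, "LOAD", "X"), (0x1, "STORE", "Y"), (0x2, "STORE", "Y")],  # S0
    [(0x3, "LOAD", "Y"), (0x4, "STORE", "Z")],                       # S1
    [(0x5, "LOAD", "Z")],                                            # S2
]
gens, blocks = find_generators(trace)
```

On this trace, which mirrors the S0/S1/S2 example, the generators are the second `STORE Y` (pc 0x2) and `STORE Z` (pc 0x4), and the inter-segment blocks are Y and Z. The first `STORE Y` is not a generator because a later store in the same segment overwrites it.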
Data Marshaling: Key Observation and Idea
- Observation: The set of generator instructions is stable over execution time and across input sets
- Idea:
  - Identify the generator instructions
  - Record cache blocks produced by generator instructions
  - Proactively send such cache blocks to the next segment’s core before initiating the next segment
- Suleman et al., “Data Marshaling for Multi-Core Architectures,” ISCA 2010.

Data Marshaling
- Compiler/Profiler:
  1. Identify generator instructions
  2. Insert marshal instructions
- Output: Binary containing generator prefixes & marshal instructions
- Hardware:
  1. Record generator-produced addresses
  2. Marshal recorded blocks to next core

Profiling Algorithm
- Figure: in the trace LOAD X, STORE Y, STORE Y, LOAD Y, …, STORE Z, LOAD Z, …, the profiler identifies the inter-segment data and marks the instruction that last writes it in the producing segment as a generator instruction

Marshal Instructions
- LOAD X, STORE Y, G: STORE Y, MARSHAL C1
- LOAD Y, …, G: STORE Z, MARSHAL C2
- 0x5: LOAD Z, …
- The G: prefix marks a generator instruction; MARSHAL C1 specifies when to send (at the MARSHAL instruction) and where to send (core C1)

Hardware Support and DM Example
- Figure: S0 (LOAD X, STORE Y, G: STORE Y, MARSHAL C1) runs on Core 0, S1 (LOAD Y, …, G: STORE Z, MARSHAL C2) on Core 1, and S2 (0x5: LOAD Z, …) follows. The generator store records address Y in Core 0’s marshal buffer; MARSHAL C1 sends block Y from Core 0’s L2 cache to Core 1’s L2 cache, so S1’s LOAD Y is a cache hit

DM: Advantages, Disadvantages
- Advantages
  - Timely data transfer: Push data to core before needed
  - Can marshal any arbitrary sequence of lines: Identifies generators, not patterns
  - Low hardware cost: Profiler marks generators, no need for hardware to find them
- Disadvantages
  - Requires profiler and ISA support
  - Not always accurate (generator set is conservative): Pollution at remote core, wasted bandwidth on interconnect
    - Not a large problem as the number of inter-segment blocks is small

Accelerated Critical Sections
- Figure: on a small core, LOAD X, STORE Y, G: STORE Y, then CSCALL ships the critical section (LOAD Y, …, G: STORE Z, CSRET) to the large core; block Y, recorded in the small core’s marshal buffer, is sent to the large core’s L2 cache, so the critical section’s LOAD Y is a cache hit

Accelerated Critical Sections: Methodology
- Workloads: 12 critical-section-intensive applications
  - Data mining kernels, sorting, database, web, networking
  - Different training and simulation input sets
- Multi-core x86 simulator
  - 1 large and 28 small cores
  - Aggressive stream prefetcher employed at each core
- Details:
  - Large core: 2GHz, out-of-order, 128-entry ROB, 4-wide, 12-stage
  - Small core: 2GHz, in-order, 2-wide, 5-stage
  - Private 32 KB L1, private 256 KB L2, 8 MB shared L3
  - On-chip interconnect: Bi-directional ring, 5-cycle hop latency

DM on Accelerated Critical Sections: Results
- Chart: speedup over ACS for DM and Ideal on is, pagemine, puzzle, qsort, tsp, maze, nqueen, sqlite, iplookup, mysql-1, mysql-2, webcache, and their harmonic mean (hmean); DM’s hmean improvement is 8.7%; two off-scale bars are labeled 168 and 170

Pipeline Parallelism
- Figure: the same marshaling example as in “Hardware Support and DM Example,” with S0, S1, and S2 as pipeline stages

Pipeline Parallelism: Methodology
- Workloads: 9 applications with pipeline parallelism
  - Financial, compression, multimedia, encoding/decoding
  - Different training and simulation input sets
- Multi-core x86 simulator
  - 32-core CMP: 2GHz, in-order, 2-wide, 5-stage
  - Aggressive stream prefetcher employed at each core
  - Private 32 KB L1, private 256 KB L2, 8 MB shared L3
  - On-chip interconnect: Bi-directional ring, 5-cycle hop latency

DM on Pipeline Parallelism: Results
- Chart: speedup over baseline for DM and Ideal on black, compress, dedupD, dedupE, ferret, image, mtwist, rank, sign, and hmean; DM’s hmean improvement is 16%

DM Coverage, Accuracy, Timeliness
- Chart: coverage, accuracy, and timeliness (percentage) for ACS and Pipeline
- High coverage of inter-segment misses in a timely manner
- Medium accuracy does not impact performance
  - Only 5.0 and 6.8 cache blocks are marshaled for the average segment

DM Scaling Results
- DM performance improvement increases with
  - More cores
  - Higher interconnect latency
  - Larger private L2 caches
- Why? Inter-segment data misses become a larger bottleneck
  - More cores → more communication
  - Higher latency → longer stalls due to communication
  - Larger L2 cache → communication misses remain

Other Applications of Data Marshaling
- Can be applied to other Staged Execution models
  - Task parallelism models: Cilk, Intel TBB, Apple Grand Central Dispatch
  - Special-purpose remote functional units
  - Computation spreading [Chakraborty et al., ASPLOS’06]
  - Thread motion/migration [e.g., Rangan et al., ISCA’09]
- Can be an enabler for more aggressive SE models
  - Lowers the cost of data migration, an important overhead in remote execution of code segments
  - Remote execution of finer-grained tasks can become more feasible → finer-grained parallelization in multi-cores

How to Build a Dynamic ACMP
- Frequency boosting (DVFS)
- Core combining: Core Fusion
  - Ipek et al., “Core Fusion: Accommodating Software Diversity in Chip Multiprocessors,” ISCA 2007.
  - Idea: Dynamically fuse multiple small cores to form a single large core

Core Fusion: Motivation
- Programs are incrementally parallelized in stages
- Each parallelization stage is best executed on a different “type” of multi-core

Core Fusion Idea
- Combine multiple simple cores dynamically to form a larger, more powerful core

Core Fusion Microarchitecture
- Concept: Add enveloping hardware to make cores combinable
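The hardware side of Data Marshaling (record generator-produced addresses in a marshal buffer, then push those blocks to the next core on MARSHAL) can be illustrated with a toy cache model. This sketch rests on simplifying assumptions: caches are unbounded dicts, a miss is served by copying the block from whichever core holds it (no invalidations or coherence states), the 16-entry marshal buffer is a hypothetical size, and `Core`, `MemorySystem`, and `run_s0_then_s1` are illustrative names.

```python
class MemorySystem:
    """Toy backing store: finds a block in any core's L2, else in DRAM."""

    def __init__(self, cores, dram=None):
        self.cores = cores
        self.dram = dram if dram is not None else {}

    def fetch(self, block):
        for core in self.cores:
            if block in core.l2:
                return core.l2[block]  # stands in for a coherence transfer
        return self.dram.get(block, 0)


class Core:
    def __init__(self, name, marshal_buffer_entries=16):
        self.name = name
        self.l2 = {}                             # block -> data (unbounded toy L2)
        self.marshal_buffer = []                 # addresses recorded by generator stores
        self.capacity = marshal_buffer_entries   # hypothetical buffer size
        self.hits = 0
        self.misses = 0

    def store(self, block, value, generator=False):
        self.l2[block] = value
        # Only stores carrying the generator prefix record their address.
        if generator and block not in self.marshal_buffer and len(self.marshal_buffer) < self.capacity:
            self.marshal_buffer.append(block)

    def load(self, block, mem):
        if block in self.l2:
            self.hits += 1
        else:
            self.misses += 1
            self.l2[block] = mem.fetch(block)
        return self.l2[block]

    def marshal(self, target):
        """MARSHAL <target>: push every recorded block to the target core."""
        for block in self.marshal_buffer:
            target.l2[block] = self.l2[block]  # source keeps its copy in this toy model
        self.marshal_buffer.clear()


def run_s0_then_s1(use_dm):
    core0, core1 = Core("C0"), Core("C1")
    mem = MemorySystem([core0, core1], dram={"X": 7})
    # Segment S0 on core 0: LOAD X, STORE Y, G: STORE Y, MARSHAL C1
    x = core0.load("X", mem)
    core0.store("Y", x)
    core0.store("Y", x + 1, generator=use_dm)
    if use_dm:
        core0.marshal(core1)
    # Segment S1 on core 1: LOAD Y
    y = core1.load("Y", mem)
    return y, core1.hits, core1.misses
```

With marshaling, S1's `LOAD Y` on core 1 hits, so `run_s0_then_s1(True)` returns `(8, 1, 0)`; without it, the same load misses and must fetch Y from core 0, giving `(8, 0, 1)`. This is the inter-segment miss that DM is designed to remove.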