Coding Techniques for Multicasting Ashish Khisti

Coding Techniques for Multicasting

by

Ashish Khisti

B.A.Sc., Engineering Science

University of Toronto, 2002

Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of

Master of Science at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

May 2004 c Massachusetts Institute of Technology 2004. All rights reserved.

Author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Department of Electrical Engineering and Computer Science

May 21, 2004

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Gregory Wornell

Professor

Thesis Supervisor

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Uri Erez

Post Doctoral Scholar

Thesis Supervisor

Accepted by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Arthur C. Smith

Chairman, Department Committee on Graduate Students

Coding Techniques for Multicasting

by

Ashish Khisti

B.A.Sc., Engineering Science

University of Toronto, 2002

Submitted to the Department of Electrical Engineering and Computer Science on May 21, 2004, in partial fulfillment of the requirements for the degree of

Master of Science

Abstract

We study some fundamental limits of multicasting in wireless systems and propose practical architectures that perform close to these limits. In Chapter 2, we study the scenario in which one transmitter with multiple antennas distributes a common message to a large number of users. For a system with a fixed number ( L ) of transmit antennas, we show that, as the number of users ( K ) becomes large, the rate of the worst user decreases as O ( K

− 1

L

).

Thus having multiple antennas provides significant gains in the performance of multicasting system with slow fading. We propose a robust architecture for multicasting over block fading channels, using rateless erasure codes at the application layer. This architecture provides new insights into the cross layer interaction between the physical layer and the application layer. For systems with rich time diversity, we observe that it is better to exploit the time diversity using erasure codes at the application layer rather than be conservative and aim for high reliability at the physical layer. It is known that the spatial diversity gains are not significantly high in systems with rich time diversity. We take a step further and show that to realize these marginal gains one has to operate very close to the optimal operating point.

Next, we study the problem of multicasting to multiple groups with a multiple antenna transmitter. The solution to this problem motivates us to study a multiuser generalization of the dirty paper coding problem. This generalization is interesting in its own right and is studied in detail in Chapter 3. The scenario we study is that of one sender and many receivers, all interested in a common message. There is additive interference on the channel of each receiver, which is known only to the sender. The sender has to encode the message in such the way that it is simultaneously ‘good’ to all the receivers. This scenario is a non-trivial generalization of the dirty paper coding result, since it requires that the sender deal with multiple interferences simultaneously. We prove a capacity theorem for the special case of two user binary channel and derive achievable rates for many other channel modes including the Gaussian channel and the memory with defects model. Our results are rather pessimistic since the value of side information diminishes as the number of users increase.

Thesis Supervisor: Gregory Wornell

Title: Professor

Thesis Supervisor: Uri Erez

Title: Post Doctoral Scholar

2

Acknowledgments

First and foremost I would like to thank my two wonderful advisors, Greg Wornell and Uri

Erez. I am truly privileged to have the opportunity to work with the two of you. Greg took me under his wings when I joined MIT and has ever since been a tremendous source of wisdom and inspiration. My interactions with Uri have been simply remarkable. Any time

I had the slightest idea on my research problem, I could drop by his office and develop a much clearer picture on what I was thinking about. I will always remember the summer of

2003 when we spent several hours together each day trying to crack the binary dirty paper coding problem. I have learned so many things from the two of you in such a short while that it is difficult to imagine myself two years earlier.

Special thanks to my lab mates, Albert Chan, Vijay Divi, Everest Huang, Hiroyuki Ishii,

Emin Martinian, Charles Swannack and Elif Uysal. I should particularly mention special thanks to Emin Martinian for several interesting research discussions. It was great to have you Vijay as my office mate, with whom I could freely talk almost anything that came to mind. Special thanks to Giovanni Aliberti, our systems administrator for providing us with delicious homemade sandwiches during the Thursday lunch meetings and introducing me to the wonderful world of Mac OSX. My deepest regards to our admin, Tricia Mulcahy for making all the complicated things at MIT look so simple. Also thanks to Shashi Borade and Shan-Yuan Ho for being wonderful travel companions in my trip to Europe last year.

Thank you Watcharapan Suwansantisuk for inviting me to the Thai festival.

I would like to thank the wonderful researchers at HP Labs, Palo Alto for inviting me to visit their labs several times during the last two years. I would especially like to acknowledge some fruitful interactions with Mitch Trott, John Apostolopoulos and Susie Wee at the HP labs and hope that they continue in the future.

Finally, I would like to thanks my grandmother, mother and my sister for being extremely supportive during my course of studies. It is nice to have three generation of ladies to support my uprising! I could have never gone this far without your support.

3

Contents

1 Introduction 9

2 Multicasting from a Multi-Antenna Transmitter 12

2.1

Channel Model for Multicasting . . . . . . . . . . . . . . . . . . . . . . . . .

13

2.2

Serving all Users — No Transmitter CSI . . . . . . . . . . . . . . . . . . . .

15

2.2.1

Single User Outage . . . . . . . . . . . . . . . . . . . . . . . . . . . .

15

2.2.2

Outage in Multicasting . . . . . . . . . . . . . . . . . . . . . . . . .

16

2.3

Serving all Users - Perfect Transmitter CSI . . . . . . . . . . . . . . . . . .

18

2.3.1

Naive Time-Sharing Alternatives . . . . . . . . . . . . . . . . . . . .

20

2.4

Serving a fraction of Users . . . . . . . . . . . . . . . . . . . . . . . . . . . .

22

2.5

Multicasting Using Erasure Codes . . . . . . . . . . . . . . . . . . . . . . .

23

2.5.1

Architecture Using Erasure Codes . . . . . . . . . . . . . . . . . . .

26

2.5.2

Analysis of the Achievable Rate . . . . . . . . . . . . . . . . . . . .

28

2.5.3

Layered Approach and Erasure Codes . . . . . . . . . . . . . . . . .

33

2.6

Multicasting to Multiple Groups . . . . . . . . . . . . . . . . . . . . . . . .

34

2.6.1

Transmitting Independent Messages to each user . . . . . . . . . . .

35

2.6.2

Multiple Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

36

3 Multicasting with Known Interference as Side Information 38

3.1

Point to Point channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

40

3.2

Multicasting channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

42

3.3

Binary Channels — 2 User noiseless case . . . . . . . . . . . . . . . . . . . .

43

3.3.1

Coding Theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

45

3.3.2

Converse . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

48

3.3.3

Discussion of the Coding Scheme . . . . . . . . . . . . . . . . . . . .

48

4

3.3.4

Random Linear Codes are Sufficient . . . . . . . . . . . . . . . . . .

49

3.3.5

Alternative Proof of the Coding Theorem . . . . . . . . . . . . . . .

49

3.3.6

Practical Capacity Approaching Schemes . . . . . . . . . . . . . . .

50

3.4

Binary Channels — More than two receivers . . . . . . . . . . . . . . . . . .

51

3.4.1

Improved Inner Bound for K > 2 users . . . . . . . . . . . . . . . . .

52

3.4.2

Binary Channel with Noise . . . . . . . . . . . . . . . . . . . . . . .

58

3.5

Gaussian Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

59

3.6

Writing on many memories with defects . . . . . . . . . . . . . . . . . . . .

61

3.6.1

Two Memories with Defects . . . . . . . . . . . . . . . . . . . . . . .

62

4 Conclusion and Future Work

A Coding over multiple blocks in SISO Block Fading Channels

B Proof of Proposition 2

65

68

71

5

List of Figures

2-1 Outage Probability for a Single User in MISO system . . . . . . . . . . . .

17

2-2 K · E [ R ] vs.

K for L=1,2 . . . 6 antennas. We need to take the expectation over R , since we are considering a finite number of users. . . . . . . . . . . .

20

2-3 The pdf of Γ with L=2,8. The mean of both distribution is the same, but the pdf for L=8 has much shorter tails. This helps when we aim for low values of Γ, but hurts when we aim for very high values of Γ . . . . . . . . . . . .

24

2-4 A time division protocol for serving 4 groups of users. Each group has multiple users that want the same content. The channel coherence time is large enough so that the channel gain is constant in a given block but small enough that successive blocks to a given group have independent gains. . . . . . . .

24

2-5 Low SNR analysis of erasure codes based Multicasting. (a) The optimal value of

C

C erg

(normalized throughput) as a function of the number of antennas and the corresponding optimizing value of

R

C erg

(normalized target rate) (b) The optimal outage probability as a function of the number of antennas (c)

C

C erg as a function of

R

C erg for L = 1 , 4 , 10 transmit antennas.

. . . . . . . . . . .

30

2-6 Analysis of of the erasure code based multicasting at SNR=50 dB. (a) Optimal value of ˜ as a function of the number of antennas and the corresponding optimizing value of R (b) The optimal outage as a function of the number of antennas (c) ˜ as a function of R − C erg for L = 1 , 4 , 10 transmit antennas.

32

3-1 Point to Point channel with state parameters known to the encoder . . . . .

40

3-2 Dirty Paper Coding Channel . . . . . . . . . . . . . . . . . . . . . . . . . .

41

3-3 Two User Multicasting Channel with Additive Interference . . . . . . . . .

44

3-4 Achievable rates for the two user multicasting channel when S

1 and S

2 are i.i.d. The x

− axis is Pr( S i

) = 1 . . . . . . . . . . . . . . . . . . . . . . . . .

45

6

3-5 Coding for two user multicasting channel . . . . . . . . . . . . . . . . . . .

46

3-6 Architecture for 2 user Binary Multicasting Channel . . . . . . . . . . . . .

50

3-7 K user multicasting channel . . . . . . . . . . . . . . . . . . . . . . . . . . .

51

3-8 Upper bound for K user multicasting channel . . . . . . . . . . . . . . . . .

53

3-9 Improved Architecture for the 3 user channel . . . . . . . . . . . . . . . . .

54

3-10 Numerical Calculation of the optimal p and the corresponding I ( U ; S

1

, S

2

, S

3

) vs q . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

57

3-11 Improved Inner Bound and Outer Bound as a function of q .

. . . . . . . .

57

3-12 Two User Multicasting Gaussian Dirty Paper Channel . . . . . . . . . . . .

59

3-13 Achievable Rates and Outer Bound for writing on two memories with defects 64

7

List of Tables

3.1

The probability distribution p ( U | S

1

, S

2

, S

3

) of cleanup scheduling. Here p is a parameter to be optimized. . . . . . . . . . . . . . . . . . . . . . . . . . .

56

8

Chapter 1

Introduction

The problem of distributing common content to several receivers is known as multicasting. It arises in many popular applications such as multimedia streaming and software distribution.

It is pertinent to both wireless and wireline networks. Some early work [25] in multicasting has focussed on developing efficient scalable protocols for multi-hop networks such as the

Internet and mobile ad hoc networks (MANET). These protocols provide on demand service so that the entire network does not get flooded with data packets. More recent work

[18] on wireline multicasting has shown that the fundamental rate can be increased if the intermediate nodes perform coding. Polynomial time algorithms for efficient network coding have also been suggested [27, 15]. Yet another direction [21, 29] has been to model the endto-end links as erasure channels and develop codes that can be efficiently decoded by several users experiencing different channel qualities. These codes are suitable for multicasting over the Internet where packet loss is the main source of error.

In this thesis, we establish some fundamental limits and propose robust architectural solutions for multicasting in wireless networks. A signal transmitted from one node in a wireless network is received by all other nodes surrounding it. While this effect causes interference if different users want different messages, it is beneficial in the multicasting scenario. Despite this inherent advantage, the problem has not been widely explored in the wireless setting. We are only aware of the work in [20] that studies some array processing algorithms and fundamental limits on multicasting in wireless systems.

Multicasting is not a new application in wireless. The radio and TV broadcasting systems are one of the earliest examples of analog wireless multicasting. However, the

9

engineering principles that govern the design of these systems are very different from the modern view. These networks have a powerful transmitter designed to maximize the range of signal transmission. The transmitter is mounted on tall towers so that many receivers are in direct line-of-sight and receive good quality signal. Unfortunately, the data rate available in such systems is limited and modern of digital television systems use wireline solutions such as cable TV for high bandwidth transmission. The enormous growth of wireless telephony over the last decade has been made possible in part through the new digital wireless networks.

These networks, also known as cellular networks, divide the geographical area into smaller cells and reuse the available spectrum in different cells so that they can support a large number of users. Furthermore these systems do not rely on line of sight communication, but rather exploit the structure of wireless fading channel to develop new algorithms for reliable communication. These networks have been primarily designed for providing individualized content to each user. However, the next generation cellular networks are expected to support wireless data and streaming media applications where several users are interested in the same content. As these applications are deployed, it is important to develop new efficient architectural solutions. Clearly, if all the receivers want the same content, a system that creates a separate copy for each receiver is far from efficient. Consider for example an indoor sports stadium where the audience have personalized screens on which they can watch the game more closely and listen to the commentary. A central access point provides content to all the receivers over wireless links. Unlike the analog TV/radio multicasting systems, these digital systems cannot rely on line of sight communications and have to combat fading. At the same time they are not efficient if they do not exploit the fact that all the receivers want a common message.

In Chapter 2 of this thesis we will study some architectural issues for digital multicasting over wireless systems. The performance of these systems is often limited by the worst user in the network. We observe that multiple transmit antennas provide substantial gains in these systems as they greatly improve the quality of the worst channel. We also propose a robust architecture that uses rateless erasure codes. This study provides new insights into the cross layer design of the application and physical layers. One conclusion we draw is that in wireless systems with rich time diversity, it is not necessarily a good idea to be conservative in the design of the physical layer and aim for small outage probability if there is an ARQ type protocol at the application layer.

10

Another scenario we study is multicasting to multiple groups of users. It is quite natural to conceive examples where instead of a single group, there are many groups of users and different groups of users want a different message. This scenario is a generalization of the conventional unicasting systems and the single group multicasting system. In Chapter 2 of this thesis, we study some coding schemes for the scenario where the base station has multiple antennas and wishes to send different messages to different groups of users. This particular problem leads us to study a multiuser generalization of the dirty paper coding scheme. The classical dirty paper coding scenario has one sender, one receiver and an additive interference known only to the sender. It has been used to solve the problem of unicasting from a multi-antenna transmitter [1, 36]. We are interested in the scenario where a transmitter wishes to send a common message to many receivers and each of the channel experiences an additive interference that is known only to the sender but not to the receivers. The transmitter has to use the knowledge of the interference sequences so that the resulting code is good for all the receivers simultaneously. A solution to this particular abstraction provides an efficient solution to the problem of multicasting to multiple groups with multiple antennas. We devote Chapter 3 of this thesis to consider this problem in detail. We view this problem as a multiuser generalization of the single user link studied in

[13] where the transmitter has to deal with only one interfering sequence. We refer to this generalization as “Writing on many pieces of Dirty Paper at once”. We obtain achievable rates for a variety of channel models and prove capacity theorems for some special cases.

This scenario is rich with many open problems and we describe some of them in Chapter 4.

11

Chapter 2

Multicasting from a Multi-Antenna

Transmitter

In this chapter we focus on the problem of sending a common stream of information from a base station to a large number of users in a wireless network. The scenario where one transmitter communicates to several receivers is known as the broadcast channel problem.

A coding technique known as superposition coding is known to be optimal for the Gaussian broadcast channel problem when the transmitter and all the receivers have a single antenna

[5]. Recently, this result has been generalized to the case where the transmitter and receivers have multiple antennas and each receiver wants an independent message. The solution uses a technique known as dirty paper coding [4]. This technique was first used for the MIMO broadcast channel in [1]. Subsequent work in [34, 35, 39] shows that this technique achieves the sum capacity point and very recently Weingarten et al. [36] show that this scheme is in fact optimal. However little work has been done when there is common information to be sent in the network. We are only aware of the work in Lopez et al. [23, 20] which studies several schemes for multicasting common information in wireless networks.

Before designing a system for disseminating common content in a wireless network, we must estimate the gains we expect from such architectures over existing systems that encode several copies of the same message for different users. It is also important to understand how these gains are affected by our design decisions. For example how do these gains change if we decide to serve only a fraction of the best users, instead of all the users? How does the fading environment and the number of users affect such systems? In this chapter we

12

seek answers to some of these questions.

Another consideration is whether the fading coefficients are known to the sender and/or the receiver. Pilot assisted training sequences are often used so that the receivers can learn the fading coefficients. If the receivers have perfect knowledge of fading coefficients then the system is called a coherent communication system while those systems where neither the transmitter nor the receiver have this knowledge are called non-coherent communication systems. In this chapter we only focus on the coherent systems. Providing the knowledge of fading coefficients to the transmitter typically requires explicit feedback from the receivers in FDD (frequency division duplex) systems or uplink measurements in TDD (time division duplex) systems. This knowledge of fading coefficients is known as channel state information (CSI) at the transmitter. It is necessary to assess the value of providing CSI to the transmitter. In this chapter we study the systems with and without transmitter CSI.

2.1

Channel Model for Multicasting

We consider a scenario in which one base station wishes to send the same message to K different users. The base station is equipped with L antennas while each user has a single antenna. Thus, each receiver experiences a superposition of L different paths from the base station. We focus on an environment that has rich enough scattering and no line-ofsight between the base-station and the receivers. Furthermore, we consider narrow-band communication systems so that the time interval between two symbols is much larger than the delay spread due to multipath. Under these conditions, the channel gains on each of the L paths can be modeled as i.i.d. complex Gaussian CN (0 , 1) [33]. Furthermore, it is noted in [33] that the typical coherence time for such environments is on the order of a few hundred symbols. The channel gains stay constant for a relatively large number of symbols.

The relative magnitude of coherence time compared to the communication delay tolerated by the application has important implications on the fundamental data rates achieved by the system as well as the coding schemes that have to be used to achieve these rates.

We present three different scenarios of interest. In our model we consider transmission of codewords consisting of N symbols. We denote symbol i ∈ { 1 , 2 . . . n } in codeword m by x [ i ; m ]. We use subscript k to denote the channel model of the k th user.

(i) Slow Fading: In some applications, the delay requirements are stringent. Several

13

different codewords have to be transmitted within one coherence time interval. In such situations, we assume that the channel gains are fixed once they are drawn randomly.

The overall channel model is given by y k

[ i ; m ] = h

† k x [ i ; m ] + w k

[ i ; m ] (2.1)

The channel gains are each drawn i.i.d.

CN (0 , 1), but they are fixed for subsequent transmissions.

(ii) Block Fading: If the delay requirements are not too stringent a block fading model is suitable. Here the channel remains constant over a codeword transmission, but changes independently when the next codeword is transmitted. Accordingly, the channel model is given by y k

[ i ; m ] = h

† k

[ m ] x [ i ; m ] + w k

[ i ; m ] (2.2)

The channel gains are drawn i.i.d. before the transmission of each codeword and assumed to remain fixed during the transmission of the entire codeword. A slight generalization of the block fading channel model is a model where one codeword spans over several blocks (say T blocks). Alternately, in this model, the channel is constant over n/T consecutive symbols and then changes independently for the next block of symbols.

(iii) Fast Fading: If the delay requirements are extremely relaxed, the transmitter can perform interleaving of different codeword symbols. Accordingly, each symbol experiences a different channel gain and the channel model is given by y k

[ i ; m ] = h

† k

[ i ; m ] x [ i ; m ] + w k

[ i ; m ] (2.3)

In this model, the channel gains are assumed to be drawn i.i.d. before the transmission of each symbol in each codeword.

In (2.1)-(2.3), w k

[ i ; m ] is additive Gaussian noise CN (0 , σ 2 ) at receiver k when symbol i of codeword m is received. These equations explicitly reveal how the channel gain changes from symbol to symbol and codeword to codeword.

14

The choice of a good performance criterion for the multicasting system is intimately related to the fading channel model. In the slow fading scenario we can order the users based on their channel gains. However such an ordering cannot be done in the fast fading model since the channel changes independently with each transmitted symbol. All users have statistically identical channels. In such a scenario, it is most appropriate to focus on the ergodic capacity of the channel. Since each user has the same ergodic capacity, it is clear that this rate is achievable for multicasting. The ergodic capacity of such a channel is given by [32]

C = E [log(1 + ρ h

† h )] (2.4)

In the slow fading scenario, one obvious choice is to serve all the users. In this case the system is limited by the worst user in the network. Such a choice may be necessary if all the users have subscribed to a service. On the other hand in some applications it might be worthwhile not to serve some of the weakest users. This may be necessary to do in applications such as streaming that require that the stream be transmitted at a fixed rate. In the block fading scenario, it is not advisable to serve the weakest user in each block, since the ordering of users changes from block to block. Instead it is attractive to exploit the time diversity available in these systems to improve the overall throughput. In the following sections we discuss achievable rates based on these choices.

2.2

Serving all Users — No Transmitter CSI

As discussed before, a key factor that affects system design in slow fading is the availability of channel state information (CSI) at the transmitter. In this section we are concerned with the scenario where the transmitter has no knowledge of the channel. This happens if there is no reverse path from the receivers to the transmitter or if the delay constraints prohibit the transmitter to wait for the measurements from the receivers. Since the transmitter does not know the channel gains, we typically design a code for some fixed rate R . A common figure of merit is the so called outage probability, which we discuss next.

2.2.1

Single User Outage

We begin by defining the ǫ -outage probability for a single user link as in [26].

15

Definition 1 An ǫ -outage probability for a fixed rate R is given by:

ε = min p ( x ): E [ x † x ] ≤ P

Pr ( I ( x ; y, h ) ≤ R ) (2.5)

In the above definition P is the power constraint and I ( · ; · ) is the standard mutual information between two random variables. Note that h is explicitly included along with y , to stress that the receiver but not the transmitter has knowledge of the channel vector.

For Gaussian channels, we have max p ( x ): E [ x † x ] ≤ P

I ( x ; y, h ) = max

Λ x

:tr(Λ x

) ≤ P log

µ

1 + h

†

Λ x h

¶

N 0

It is conjectured in [32] that for low outage, the optimal Λ x

=

P

L

I and accordingly (2.5) reduces to

ε =

½

Pr

µ log

µ

1 +

ρ h

† h

¶

L

< R

¶¾

Here ρ = P/σ

2 is the input SNR of the system. Since the distribution of h

† h ∼ 1

2

χ

2

2 L

, under the assumption of small values of ǫ and high SNR it can be shown that the outage probability is given by [38, 24] ǫ ≈

µ

2

R − 1

¶ L

ρ

L

L

L !

(2.6)

2.2.2

Outage in Multicasting

In multicasting, we declare an outage if any user fails to decode. This is clearly an extremely stringent requirement. Nevertheless, even under this case, significant gains can be achieved by using a modest number of transmit antennas. If the transmitter fixes a data rate R , the resulting outage probability is given by: ǫ m

= Pr(min { I h 1

, I h 2

. . . I hK

} < R )

Here I hj

= I ( x ; y j

, h j

) is the mutual information between the transmitter and user j .

Since we assume i.i.d. channel gains and ǫ ≪ 1, we have ǫ m

= 1 − (1 − ǫ )

K

≈ Kǫ (2.7)

16

10

0

10

−1

10

−2

10

−3

10

−4

10

−5

Outage Proababilites as a function of SNR for R=0.1b/sym and R = 0.5 b/sym

R=0.1,L=2

R=0.1,L=4

R=0.5,L=4

R=0.5,L=2

−6

10

−10 −5 0 5

Input SNR(dB)

10

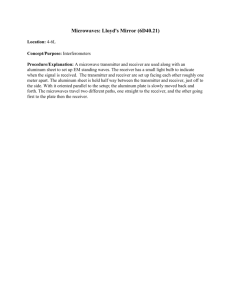

Figure 2-1: Outage Probability for a Single User in MISO system

15

Combining equations (2.6) and (2.7) we get ǫ m

≈ K

µ

2

R

ρ

− 1

¶ L

L

L

L !

(2.8)

Note that if we hold R as constant, K/ρ L = constant. Thus doubling the SNR, increases the number of users the system can support at same ǫ m by a factor of 2

L

. This leads us to the following conclusion

Claim 1 For a system with L transmitter antennas, operating at a fixed rate R bits/symbol in the high SNR regime, and small outage, every 3 dB increase in SNR increases the number of multicasting users that can be supported by a factor of 2

L

.

This result essentially says that if we are designing a system that operates with a low outage probability, then we can serve many more users simultaneously by a modest increase in the SNR. It is a consequence of the well known fact that the diversity of a multi-inputsingle-output (MISO) system is proportional to SNR

− L in the high SNR regime. From (2.7), it is clear that if we want to achieve a certain ǫ m for the system, the outage probability for

17

each user must satisfy ǫ = ǫ m

/K . Accordingly the additional SNR should drive the outage for each user from ǫ m to ǫ m

/K . This reduction in outage can be achieved by a modest increase in SNR, thanks to the steepness in the waterfall curve. See Figure 2-1.

2.3

Serving all Users - Perfect Transmitter CSI

In this section, we examine the other extreme when the transmitter has perfect knowledge of each channel. There are two possible uses of this channel knowledge:

(i) Smart array processing to maximize the throughput.

(ii) Selecting a rate which all the users can decode.

The use of channel knowledge in array processing for multicasting is studied in [20]. It is shown that beamforming is optimal for the special case when there are either one or two receivers. However beamforming is not optimal in general and a technique called spacetime-multicast-coding is proposed and shown to be optimal for any number of users. The capacity of the multicast network with transmitter knowledge derived in [20] is,

C = max

Λ x

:tr(Λ x

) ≤ P min i ∈{ 1 , 2 ...K

} log

Ã

1 + h i

†

Λ x h i

!

N 0

(2.9)

The numerical optimization of Λ x can be performed efficiently, since the problem is a convex optimization problem. However, the answer depends on the specific realization of the channel vectors and in general is not amenable to analysis. As K → ∞ , it is natural to expect that Λ x

→ P

L

I

L

, where I

L is a L × L identity matrix. The intuition is that as the number of users becomes large, there is no preferred direction and hence it is favorable to use an isotropic code. We primarily consider the scenario of a large number of users and use Λ x

=

P

L

I

L

. In this limit, the channel knowledge is not helpful in performing array processing. We have,

R = min i ∈{ 1 , 2 ...K

} log

³

1 +

ρ

L h i

† h i

´

= log

µ

1 +

ρ

L min i ∈{ 1 , 2 ...K

} h

† i h i

¶

(2.10)

We set g = min i ∈{ 1 , 2 ...K

} h

† i h i

. Its distribution can be easily calculated from the distribution of h and is stated in the following proposition.

18

Proposition 1 The probability distribution function of g = min i ∈{ 1 , 2 ...K

} h

† i h i is given by f g

( x

) = Kf h

† h

( x

)(1 − F h

† h

( x

))

K − 1 where f h

† h

( · ) and F h

† h

( · ) are the probability density and cumulative distribution functions of h † h respectively.

Proof:

Pr( g ≥ G ) = Pr( k h

1 k 2

= Pr( k h i k

2

≥ G, k

≥ G ) K h

2 k 2

= (1 − F h † h

( G ))

K

≥ G . . .

k h

K k 2 ≥ G )

(Since all the channels are i.i.d.)

Differentiating both sides, we get the desired result.

Using results from order statistics, we show in Appendix B that as K → ∞ , g = min i h i

† h i decays as O ( K

− 1

L

), where L is the number of antennas. This leads to the following proposition,

Proposition 2 For fixed ρ , as the number of users K → ∞ , the rate common rate R which all the receivers can decode decays as O ( K

− (

1

L

+ δ )

) where L is the number of antennas and

δ > 0 can be made arbitrarily small.

Proof:

From (2.10), we have

R =

≈ min i ∈{ 1 , 2 ...K

} log

³

1 + min i ∈{ 1 , 2 ...K

}

ρ

L h

† i h i

= O ( K

− (

1

L

+ δ )

)

ρ

L h

† i h i

´

(For large K , we use the linear approximation)

(See Appendix B)

In Figure 2-2, we numerically calculate the quantity E [ R ] using Proposition 1 and plot the product K · E [ R ] as a function of K . Note that Proposition 2 suggests that as the number of users K becomes large, the common rate R approaches a non-random quantity.

However for any finite K , R is still a random variable and hence we use the average value of R to observe of its decay rate. We see qualitatively from the graph that for L = 1, the average value of R decays as 1 /K (since K · E [ R ] approaches a constant as K increases), but for L ≥ 2 the decay rate of R is much slower(since K · E [ R ] increases with K ). Hence,

19

150

100

M=1

M=2

M=3

M=4

M=5

M=6

50

KE[R] for M=1,2,3,4 antennas for k=5−200 users

10

10 50

Number of Users (K)

100 150 200

Figure 2-2: K · E [ R ] vs.

K for L=1,2 . . . 6 antennas. We need to take the expectation over

R , since we are considering a finite number of users.

multiple antennas provide substantial gains in the achievable rate, analogous to the gains we observed in the outage probability in the previous section.

2.3.1

Naive Time-Sharing Alternatives

We now compare the achievable rate of the previous section to naive schemes that do not exploit the fact that all the users want a common message. The achievable rates from these schemes in comparison to Proposition 2, will indicate the value of exploiting common information in the system. The scheme described in Proposition 2, requires that all users simultaneously listen to the transmission and is limited by the worst user. Suppose we

“pretend” that each user wants a different message and then perform resource allocation to ensure that all users get the same amount of data. We consider two such schemes and show that both these schemes incur a significant loss in the achievable rate compared to

Proposition 2

1

.

1

Note that time-sharing is in genera not optimal for broadcast channels with independent messages.

Ideally we should do dirty paper coding. However we limit ourselves to time-sharing type strategies, since they are easy to analyze and it is known that TDMA is not too bad compared to Dirty Paper Coding [16]

20

(i) Allocation based on time-slots: Users are divided up into time-slots, so that only one user is active in each time-slot. The rate of the active user in a particular timeslot is given by R i

= log(1 + ρ h i

† h i

), where h i is the received channel vector of the active user. Since all the users need to get the same message eventually, we need to allocate the time-slots inversely proportional to their rates. This can be expressed mathematically as:

α

1

R

1

= α

2

R

2

= . . .

= α

K

R

K such that

P K i =1

α i

= 1. This gives

R ts, 1

=

1

R

1

+

1

R

2

1

· · · +

R

1

K

=

1

K

H ( R

1

, · · · , R

K

) <

1

K 2

K

X

R i i =1

(2.11)

In (2.11), H denotes the harmonic mean of R

1

. . . R

K

. Using the fact that as K → ∞ ,

1

K

P K i =1

R i

→ E [log(1 + ρ h

† h )] it follows that R ts, 1

≤

1

K

E [log(1 + ρ h

† h )]. Note that the term inside the logarithm increases with the number of antennas L , but this is only a secondary effect. The rate decreases as 1 /K for any number of transmit antennas in contrast to Proposition 2 where the rate decreases as (1 /K )

1

L

.

(ii) Allocation based on power-levels: Users are each assigned an equal number of time-slots but the power level assigned to each user is inversely proportional to the channel strength. Accordingly, we have that

α

1 k h

1 k

2

= α

2 k h

2 k

2

= . . .

= α

K k h

K k

2 where the average power constraint requires

1

K

P K i =1

α i

= ρ . This gives

R ts, 2

=

1

K log

1 + h

†

1

1 h

1

Kρ

+ · · · + h

†

K

1 h

K

Since log(1 + 1 /x ) is a convex function in x , it follows that

R ts, 2

≤

1

K 2

K

X log

³

1 + ρ h i

† h i

´ i =1

21

As K → ∞ , it is clear that R ts, 2

≤

1

K

E [log(1 + ρ h

† h )]. Again the rate achieved through this scheme decreases as 1 /K for any number of transmit antennas.

Note that in these schemes, we do not have to transmit at the rate of the worst user all the time. Despite this seeming advantage, these schemes perform poorly. The intuition behind the poor performance of the time-sharing based schemes is that we have to allocate more resources to the worst user (in terms of time-slots or power levels). It hurts if the users do not listen in all time-slots. Thus significant gains can be achieved by designing appropriate protocols for multicasting.

2.4

Serving a fraction of Users

In this section, we relax the requirement that all the user have to be served and find the achievable rate when a faction α of the weakest users is not served. We observed in the previous section that the rate decreases as O ( K

− 1

L

) if all the users have to be served. How does the rate improve if we decide not to serve a certain fraction of the weakest uses?

In order to perform analysis and develop some insights, we again consider the limit of a large number of users. Let Γ eff be the effective target SNR with the corresponding rate

R = log(1 + ρ Γ eff

). An outage event occurs with probability

α = Pr

µ k h i k 2

L

≤ Γ eff

¶

(2.12)

The usual notion of outage on a single user link is the fraction of time the channel is not strong enough to support a given rate. If the channel is in outage, the receiver cannot decode at all and hence we would like this probability to be as small as possible. In the multicasting setting, outage has an alternative interpretation. It is the fraction of the weakest users who are not served. Thus in multicast, there is no reason to restrict attention to small values of outage. It allows us to study the tradeoff between the fraction of weakest users that are not served and the rate that can be given to the other users.

Since k h k

2

∼

1

2

χ

2

2 L

, the relation between the effective channel gain, Γ eff probability, α is given by the following expression: and outage

1 − α = 1 − F k h k 2

( L Γ eff

) = e

− L Γ eff

Ã

1 + L Γ eff

+

L Γ eff

2

. . .

( L Γ eff

)

L − 1

!

( L − 1)!

(2.13)

22

In general, the above equation does not have an explicit solution for Γ eff

, however we can consider the extreme cases

(i) α → 0: In this case, we expect Γ eff to be small since we are serving almost all users.

Using Γ eff

≪ 1 we have from (2.13),

Γ eff

≈ α

1

L

L !

1

L

L

(ii) α → 1: In this case, we expect Γ eff to be large, since we are serving only the best users. Accordingly, we have from (2.13), 1 − α ≈ e − ( L Γ eff

− L log Γ eff

) and that 2

Γ eff

≈

− log(1 − α )

L

(2.14)

Equation (2.13) suggests that a large number of antennas actually hurts the performance when we aim to serve only the strongest users ( α → 1). The intuition here is that when we are serving the strongest users the more favorable distribution of the channel gains must have long tails in the right extreme. By having many antennas we average the path gains to each receiver and hence the extreme tails decay faster in both directions(see Figure 2-3).

This feature helps if we decide to serve most of the users but is not desirable if we decide to serve only the best users. Note however that this observation comes with a caveat. We assume that the transmitter uses a spatially isotropic code for serving (1 − α ) K users in the network. For values of α close to 1, in practice we are serving only a finite number of users.

In this case a spatially isotropic code (i.e. Λ x

=

P

L

I

L

) should not be used. It is worthwhile that the transmitter learns the channel gains of the best users and does beamforming or space time multicast coding proposed by Lopez [20]. In other words, if we want to serve a fixed number of the best users then multiple antennas can again be useful albeit for different reasons.

2.5

Multicasting Using Erasure Codes

Our treatment so far, has not addressed the important question of how large an outage the system must tolerate. An obvious tendency is to keep the outage probability small. Such

2

Here the approximation a

≈ f ( b ) is in the sense that lim b →∞ a f ( b )

= 1

23

0.4

0.3

0.2

0.1

0.7

0.6

0.5

t=2 t=8

0

0 0.5

1 1.5

2

Γ

2.5

3 3.5

4

Figure 2-3: The pdf of Γ with L=2,8. The mean of both distribution is the same, but the pdf for L=8 has much shorter tails. This helps when we aim for low values of Γ, but hurts when we aim for very high values of Γ

Group 1

K

1 users

Group 2

K

2 users

Group 3

K

3 users

Group 4

K

4 users

Group 1

K

1 users

Group 2

K

2 users

Group 3

K

3 users

Channel Coherence Time

Figure 2-4: A time division protocol for serving 4 groups of users. Each group has multiple users that want the same content. The channel coherence time is large enough so that the channel gain is constant in a given block but small enough that successive blocks to a given group have independent gains.

a design is however conservative as the corresponding target rate can be very small. When the channel is good, we can decode much more information than the conservative rate. If a system designer has the choice to pick the outage probability is it better to pick 1% or 10%?

Such a choice is important as different outages imply different achievable rates. In order to get some insights into this particular problem, we need to pose the question in a broader context and consider the overall system architecture. A bigger picture that involves crosslayer design is necessary as it lends some important insights on the interaction between the physical layer and higher layer protocols.

The application we consider is distributing common content to a group of users from a wireless access point. The access point serves many such groups in a round robin fashion as shown in Figure 2-4. Due to the delay between successive periods in which a particular group is served, the block fading scenario (2.2) is a suitable model. Each user experiences

24

an i.i.d. Rayleigh fading channel which stays constant within a given block and changes independently in the next block. It is well known in the information theory literature [33] that the optimal encoding technique over a block fading channel is to jointly code over a very large number of blocks. The receiver knows the fading coefficients and incorporates this knowledge in maximum likelihood decoding and this scheme achieves the ergodic capacity

(2.4). Even though this scheme is information theoretically optimal, there are many issues that limit the use of this scheme in practice. The physical layer implementation requires use of practical error correction codes that can be decoded at variable SNR. Unlike the AWGN case, practical capacity approaching codes over these channels suffer from complexity constraints. Perhaps more serious is the fact that the code we use is a fixed block length code and there is a finite error exponent associated with the code even if we code over a very large number of blocks. In order to deal with an error event at the physical layer, a higher layer protocol has to be implemented. This can be one of the following forms:

• Automatic Repeat Request(ARQ): If there is only a single receiver a particularly simple feedback based scheme can be used to deal with the physical layer outage. If the receiver is not able to decode at the end of the transmission, it sends a retransmission request to the sender. While such a feedback cannot increase the information theoretic rate, it is known to improve the error exponent [8]

3

.

• Forward Error Correction(FEC): When there are many receivers, the ARQ protocol cannot be used because different users lose different symbols. One approach is to use an erasure code as an outer code. The original source file is first converted into erasure symbols each of which is then encoded at the physical layer using a suitable channel code. The receivers can recover the original source file if they are able to receive a sufficient number of the erasure code symbols.

While a significant amount of literature has been devoted to ARQ based schemes on block fading channels (see [22] and references therein), relatively little work has been done to our knowledge on FEC based schemes. The main problem is that traditional erasure codes are block codes and need to be designed for a specific rate which has to be chosen aprori

[8]. Hence the problem of outage is not completely solved since there is still a chance that some users with weak channel cannot decode even after the FEC code is used. Fortunately

3 This particular analysis assumes perfect feedback with no errors.

25

an elegant solution to this problem is to use an incremental redundancy rateless erasure code. These codes, also known as fountain codes were suggested in [21],[29] for multicasting over the internet. Erasure symbols are generated from the source file in real time as long as any of the receivers are still listening. Unlike the traditional block erasure codes, we do not need the knowledge of erasure probability to generate these symbols. A receiver can recover the original file after it has collected a sufficient number of symbols. Thus, rateless codes allow variable rate decoding. A receiver is “online” until it collects a specific number of erasure symbols and then recovers the original file. Using the rateless erasure code as an outer code preserves the advantages of the ARQ based system without requiring feedback of which specific symbols were lost.

2.5.1

Architecture Using Erasure Codes

We now describe an architecture that uses a rateless erasure code as an outer code. Each erasure symbol is sent over the channel using a standard channel code. In order to allow successful implementation of the channel code, the erasure symbol must be over a large alphabet so that it provides large number of information bits. An important consideration is how many channel blocks ( K ) should be used for transmitting each symbol. The case of large K is analogous to the ergodic capacity achieving scheme we discussed earlier. It is however of practical interest to consider the case of K = 1. In this case we can use existing good codes for AWGN channel for channel coding. The idea is to fix a data rate R which depends on the alphabet size of the erasure code. This would in turn result in an outage probability ε . The average throughput achieved in this scheme is (1 − ε ) R . Optimization of the average throughput yields the best possible tradeoff between using a large data rate per erasure symbol and producing a small outage probability at the physical layer. The optimal value of ε that maximizes this average throughput would answer the question we posed in the first paragraph of this section as to what is a reasonable value for the outage probability in designing systems. We now describe this particular architecture in detail and calculate the optimal value of ε . Our focus is initially on the case when K = 1. In each block we send one erasure symbol encoded using a standard AWGN code. The case of large

K will be dealt subsequently.

(i) The transmitter converts the file into a very large number of erasure symbols each

26

consisting of nR information bits ( n is the size of a block), using a rateless erasure code. The erasure code symbols

4 can also be generated in real time. The encoding and decoding complexity of these codes in near linear.

(ii) In each block, the transmitter attempts to transmit one erasure symbol. It encodes this symbol using a suitable AWGN channel code at rate R .

(iii) Each receiver then tries to decode the packet received in each block. If its instantaneous mutual information in the block is higher than nR , it succeeds and the packet is decoded and stored. Otherwise an error is declared and the packet is discarded.

(iv) When a receiver obtains enough packets, it is able to decode the original file using the decoding algorithm for the rateless erasure code. If the original source file has nT bits then [ T /R ] + δ symbols are sufficient to generate the original file, where δ is a small constant depending on the choice of a particular code.

Note that this particular architecture assumes a feedback from the receiver. The transmitter has to know of whether any receiver is listening to its packets. In practice there is always a handshaking protocol and session establishment between the receiver and the transmitter. So this requirement is naturally available in most systems. There are many practical observations that favor such an architecture.

• Robustness to Channel Modeling: The architecture we presented is adaptive to varying channel conditions. If the channel is weak, the user has to wait longer until it receives enough erasure symbols. Conversely if the channel is strong the waiting time is short. By overcoming the problem of outage experienced in fixed block codes, this scheme is far less sensitive to channel modeling. The choice of channel model does affect the optimal outage probability we aim in each block, but this mismatch is not detrimental to the performance of the system.

• Computational Complexity: There is a useful separation between generating the erasure code symbols and AWGN codewords. The time as well as complexity requirements for encoding and decoding of erasure symbols are near linear. Moreover efficient

4

Note that the erasure code symbols has to be over a very large alphabet since each such symbol must provide nR information bits to the inner AWGN code. From now on, we will refer to these as erasure symbols with the understanding that they are over large alphabets.

27

iterative algorithms for decoding the inner AWGN code are now widely available as well. On the other hand if one were to directly encode the source file for a block fading channel code the practical implementations are not as efficient, as discussed earlier.

• Better Error Exponent: In a fixed block length erasure code was used there are two sources error (i) error event in decoding the inner AWGN code (ii) error event in the outer erasure code when sufficient erasure symbols are not received. By using a rateless code, and assuming that the receiver has a perfect feedback channel to indicate when it is done, we eliminate the second event and improve the error exponent 5 . This type of improvement is analogous to the improvement in the ARQ based schemes [9],

[6](pg. 201) for single user links.

2.5.2

Analysis of the Achievable Rate

We now present some analysis of the achievable rates using the architecture we just described. We model the channel of each user as an independent block fading Rayleigh channel. The transmitter has L antennas while each receiver has one antenna. Suppose we decide to send an erasure symbol with nR information bits in each block. The probability of erasure depends on the choice of R . Let ε denote the probability that a packet gets lost for any given user. The average throughput for each user is then given by ˜ = (1 − ε ) R .

We choose the optimal R that maximizes ˜ . The probability of outage is given by:

ε = Pr

= Pr

³

(log(1 + k h k

2

µ k h k

2

< L e

R

ρ

ρ

) < R

´

L

− 1

¶

= 1 − e

− G eff

µ

1 + G eff

+

G eff

2

2

G eff

L − 1

. . .

( L − 1)!

¶ where

6

G eff

= L e

R

ρ

− 1

(2.15)

Here G eff refers to the effective channel gain that we aim for based on our choice of R . If we aim for the ergodic capacity for example, then G eff

= 1. The overall throughput is given

5 Note that in practice it is not true that the backward channel is perfect. There is always going to be some error. In both slow fading and fast fading, we can show that the error exponent still improves with feedback.

6

In this section we take logarithms to the base e for simplicity of calculations.

28

by

˜

( G eff

) = e

− G eff

µ

1 + G eff

+

G eff

2

2

G eff

L − 1

. . .

( L − 1)!

¶ log

µ

1 +

ρG eff

¶

L

(2.16)

To develop insight into the effect of multiple antennas on the throughput ˜ , we consider the case of low SNR and high SNR systems.

Low SNR As the SNR = ρ → 0, we can make the following simplifications

(i) C erg

≈ ρ

(ii) G eff

= L e R − 1

ρ

≈

LR

C erg

(iii) log(1 +

ρG

L eff ) ≈

ρG eff

L

Accordingly (2.16) simplifies to

˜

( G eff

)

C erg

= e

− G eff

G eff

L

µ

1 + G eff

+

G eff

2

2

. . .

G eff

L − 1

( L − 1)!

¶

Figure 2-5(a) shows the optimal value of the normalized throughput ˜ erg as a function of the number of antennas while Figure 2-5(b) shows the corresponding outage probability.

For L = 1, we see that the optimal G eff

= 1, ˜ erg

= 1 /e and the corresponding outage probability is ε = 1 − 1 /e . Thus the optimal system aims for a large outage probability.

As the number of transmit antennas is increased Figure 2-5(a) shows that the optimal value of R decreases initially, reaches a minimum around L = 6 and then slowly increases.

The intuition behind this behavior is that the tails of the distribution function of k h k become sharper (cf. Figure 2-3). Consequently, by decreasing R we can decrease the outage substantially and the overall effect is a net increase in the throughput as seen in 2-5(a).

Even though the optimal throughput increases with the number of antennas, one has to be careful in interpreting these results. We plot the function ˜ as a function of

R

C erg in

2-5(c) for L = 1 , 4 , 10 antennas. We note that 2-5(a) only plots the peak values. These plots reveal that even though overall higher gains are achievable with more antennas, the function ˜ ( · ) becomes more peaky as L increases. One has to operate very close to the optimal R/C erg as L increases. Since we are operating in the low SNR regime, this requires us to design and use strong AWGN codes for specific low rates. This may not be possible in practice and Figure 2-5(c) shows that there is a large penalty in the throughput of multiple

29

(a) Optimized Throughput and Corresponding R

1

0.8

0.6

0.4

0.2

0

Throughput

Target Rate

0.6

0.5

0.4

(b) Optimal Outage

0.3

0.2

0.1

5 10

Number of Antennas

15

0

5 10

Number of Antennas

(c) Achievable Throughput With L=1,4,10 Transmit Antennas

15

0.5

0.4

0.3

0.2

0.1

0

0

L=10

L=4

L=1

0.5

1 1.5

2 2.5

R/C erg

3 3.5

4 4.5

5

Figure 2-5: Low SNR analysis of erasure codes based Multicasting. (a) The optimal value of

C

C erg

(normalized throughput) as a function of the number of antennas and the corresponding optimizing value of

R

C erg

(normalized target rate) (b) The optimal outage probability as a function of the number of antennas (c)

C

C erg antennas.

as a function of

R

C erg for L = 1 , 4 , 10 transmit

30

transmitter antenna array if one operates at rates not close to the optimal. Given a code that operates at a certain rate in the low SNR regime one has to select the matching number of antennas to optimize the throughput. From Figure 2-5(c) we see that for R > 1 .

5 C erg

, it is better to have a single transmit antenna rather than 4 or more antennas. Thus one has to be careful in interpreting the performance gains from using multiple antennas.

High SNR In the high SNR regime, we can make the following approximations:

(i) G eff

= L e R − 1

ρ

≈ L e R

ρ

≪ 1

(ii) ε = 1 − e

− G eff

³

1 + G eff

+

G eff

2

2

. . .

G eff

L − 1

( L − 1)!

´

≈

G eff

L !

L

(iii) ˜ ( G eff

) ≈

³

1 −

G eff

L !

L

´ log

³

1 +

ρG eff

L

´

Using the above approximations, we obtain the following expressions for the optimal parameters:

ε opt

≈

1

L log ρ

R opt

≈ log ρ −

1

L

1

˜ opt

≈ log ρ −

L

1 log log ρ + O (

L

1 log log ρ − O (

L

)

)

(2.17)

(2.18)

(2.19)

In Figure 2-6, we numerically plot the optimal achievable rates at SNR = 50 dB. Figure

2-6(a) shows the optimal throughput and the corresponding target rate. Unlike the low

SNR case, we observe here that the target rate increases with the number of antennas. The intuition behind this fact is that in the high SNR case the distribution of log( k h k ) is what matters and this distribution has short tails on the on the right side of the mean even for

L = 1. Accordingly, as seen in Figure 2-6(a),(c) the optimal value of R is chosen to the left of the ergodic capacity. In this regime, the the outage is small. As the number of antennas increases, the target rate and the average throughput both appear to approach the ergodic capacity according to

1

L log log ρ as predicted by (2.18)and (2.19). Figure 2-6(b) shows the corresponding outage probability, which decreases according to 1 /L as predicted by (2.17). Figure 2-6(c) plots the achievable throughput as a function of R − C erg for

L = 1 , 4 , 10 antennas. Analogous to the low SNR regime, we observe that the gains from having multiple antennas are prominent only if we operate close to the optimal R .

31

8

6

4

2

0

(a) Optimal Throughput & Target Rate

(b) Optimal Outage

Throughput (C)

Target Rate

0.12

0.1

0.08

0.06

0.04

0.02

0

5 10

Number of Antennas

15 5 10

Number of Antennas

(c) Achievable Throughput with L=1,4,10 Transmit Antennas

10

8

6

4

L=1

L=4

L=10

15

2

0

−5 −4 −3 −2

R − C erg

−1 0 1 2

Figure 2-6: Analysis of of the erasure code based multicasting at SNR=50 dB. (a) Optimal value of ˜ as a function of the number of antennas and the corresponding optimizing value of R (b) The optimal outage as a function of the number of antennas (c) ˜ as a function of

R − C erg for L = 1 , 4 , 10 transmit antennas.

32

Coding over Multiple Blocks: So far we have considered using one erasure symbol per block and an AWGN channel code. As discussed earlier, instead of using an AWGN code we could have the inner channel code span over multiple blocks and exploit the time diversity.

The transmitter could use an erasure symbol over a larger alphabet with nKR information bits. If the total mutual information over the K blocks is less than ( nKR ) bits then the entire packet is discarded. The ergodic capacity approaching channel code requires K → ∞ .

However, as discussed in the earlier part of this section practical schemes should not aim for a very large K . As we increase the number of blocks, we expect to approach the ergodic capacity. We analyze the performance improvements for a single antenna transmitter in

Appendix A. We argue that for large K and high SNR, the achievable rate by coding over

K blocks is ˆ opt

= C erg

− O

¡

1

K 1 − δ log log ρ

¢

(where δ > 0 can be made arbitrarily small).

This shows that even with a single antenna, we can perform reasonably well by coding over a small number of blocks.

2.5.3

Layered Approach and Erasure Codes

We now discuss another generalization of the communication technique using erasure codes which uses the layered approach suggested in [31]. In the schemes that we have considered so far we send at most one erasure symbol per block. If an outage occurs in a given block, then the receiver misses the corresponding symbol. The main issue with such a construction is that if the receiver is in outage, it misses the entire symbol. Ideally the receiver should be able to decode as much information as possible depending to its channel strength. The layered approach is a scheme where the users can recover a certain fraction of the information depending on their channel strength. However we argue in this section that the gains from the layered approach are not substantial.

The layered approach over slow fading channels for MISO links is suggested by Steiner and Shamai [31]. The authors compute the average throughput for their scheme but do not explicitly consider the use of erasure codes to achieve it. The main idea in [31] is to send many different codewords in each block. For simplicity let us consider sending two different packets at rates R

1 and R

2 over each block. Note that the channel with multiple transmit antennas is a non-degraded broadcast channel and hence successive cancelation cannot be performed at the decoder. If the channel is very strong so that we can decode both the packets we get a total rate of R

1

+ R

2

. This is accomplished by performing joint

33

decoding between the two codewords. If the channel is not strong enough to perform the joint decoding, an attempt is made to decode each codeword, treating the other as noise. If this succeeds then at least one packet can be recovered. If the channel is extremely weak, none of the codewords can be decoded. Thus a receiver can receive either 0,1 or 2 packets in each block depending on the strength of the channel. By collecting more packets, we reduce the average time necessary to collect sufficient number of packets to decode the source. An optimization is performed over the possible rates R

1 and R

2 in [31] to maximize the average throughput. This is precisely the rate we achieve using an outer erasure code. As we add more and more layers, we expect to achieve higher gains at the cost of higher complexity since more layers enable the receiver to get on average more information in each block.

However, it is shown in [31] that the gains are diminish quickly for more than two layers over a wide range of SNR . Two layers provide some noticeable gains over a single layer, for moderately large SNR > 20 dB. (See Figure 1, [31]). Adding multiple antennas does not provide a dramatic gain in capacity as observed in [31]. Largest gains are achieved when going from one to two antennas and the gains quickly diminish, similar to our observation from the single layer scheme.

One open problem in implementing the multilayered broadcast approach the design of compatible rateless erasure codes. One has to generate erasure symbols with different alphabet sizes for the two layers. The decoder has to be able to use both types of symbols to efficiently reconstruct the original file. The current implementations of rateless codes all generate symbols over a single alphabet. In absence of such codes, one could use current rateless codes over very small alphabet sizes. Erasure code packets on each layer can be generated by using a collection of these small symbols. The number of symbols in each packet is proportional to the rate assigned to that layer. However, having erasure symbols over a small alphabet is not efficient from implementation point of view since it incurs large overheads. Another problem with the broadcast approach is that the the receiver has to perform joint decoding of the codewords which is prohibitively complex.

2.6

Multicasting to Multiple Groups

So far, we have focussed on the case in which all the users want a common message. In this section, we consider a generalization to the case when different groups of users want different

34

messages. More specifically, there is one sender and many receivers. Each receiver belongs to a specific group and all users within a given group want the same message. If there is only one group, then the scenario degenerates to the case we have studied in the previous sections. On the other hand, if the size of each group is one then the problem reduces to that of sending independent messages to different users. In this section we discuss the general problem when there is more than one group each with more than one user. The problem is still open. We discuss some problems that need to be solved for this generalization and provide some motivation for the next chapter of this thesis.

We begin by reviewing the known schemes for transmitting independent messages to different users and then consider the more general case of multiple groups.

2.6.1

Transmitting Independent Messages to each user

In this section, we review the scheme for sending a separate message to each user. This scheme was suggested by Caire and Shamai [1]. For simplicity, we consider the case of a

2 × 2 system where the transmitter has 2 antennas and each of the two receivers has one antenna. The channel model is given by y = H x + w (2.20)

We assume a slow fading model. Each entry of H is independent and CN (0 , 1). The transmitter knows the realization of H . Also we have w ∼ CN (0 , I ) and E [ x

† x ] ≤ P . The main idea is to perform a LU decomposition of H . So that H = LU , where L is a lower triangular matrix and U is an orthogonal matrix. Such a scheme is optimal at high SNR.

For general SNR an MMSE generalization of this factorization has to be performed. If m is the message to be transmitted, we set x = U † m . Accordingly, we have:

y

1

=

y

2

| {z } y

| l l

11

21

{z

L l

0

22

}

m m

1

2

| {z } m

+

| w w

{z w

1

2

}

(2.21)

The transformation x = U † m involves rotation by an orthogonal matrix, hence the power constraint and the noise variance are preserved. The result of this transformation is that user 1 receives an interference free AWGN channel. On the other hand user 2

35

experiences interference from receiver 1. The channel y

2

= l

21 m

1

+ l

22 m

2

+ w

2 has l

21 m

1 as additive interference. Since the transmitter knows the message of user 2, this interference is known at the transmitter and a well known scheme called dirty paper coding [4] is used to code for this user. The capacity of the channel is same as if the interference did not exist.

This scheme can be easily generalized to the K user channel and was shown to be optimal recently [36].

2.6.2

Multiple Groups

In this section we consider the case when there are multiple groups of users and each groups wants a different message. To simplify the exposition, we consider the case of a 4 × 4 system with two groups and two users in each group. The input x is a 4 × 1 vector but there are only two messages. How should we map the input message symbols to x ? Inspired from the independent message case in the previous section, we consider a linear transformation x = V m where

7

V is a 4 × 2 matrix. In order to satisfy the power constraint, we require tr ( V † V ) ≤ 1. Also we select V such that L = HV is block triangular as shown in the following equation:

y

1 y y

2

3

y

4

| {z } y

=

| l l l l

11

21

31

41

{z

L l l

0

0

32

42

}

m m

1

2

| {z } m

+

| w w w w

{z w

1

2

3

4

}

(2.22)

By performing the above transformation, receiver 1 and 2 have interference free channel, so message 1 can be decoded perfectly. On the other hand receiver 3 and 4 have known interference on their channels. How can the transmitter cope with this known interference?

The transmitter has to simultaneously deal with two interference sequences now. If we had the special case that l

31

= l

41 and l

32

= l

42 then, both the channels would be equivalent and one can perform dirty paper coding. However, this choice of V is restrictive. Nonetheless, we argue that even this choice of V is attractive for some special cases. We make use of the following rule of thumb:

7 We do not use the same notation U as before because the matrix V it is not orthonormal. We explicitly need to ensure that the power is preserved by imposing the trace constraint.

36

Rule of Thumb: If an N antenna transmitter is used to null out in the direction of t < N users (assuming i.i.d. Rayleigh fading), then effectively we have N − t degrees of freedom.

The above rule is motivated from the QR factorization of the i.i.d. Gaussian matrix H .

The diagonal entries of R have χ

2

2( n − i ) degrees of freedom. We loose i degrees of freedom by nulling out i random directions. Now consider the 4 × 4 system with two groups that we considered previously. For group 2 we pose a constraint that l

31

= l

41

. This is equivalent to nulling out one direction for group 1. Hence group 1 has three degrees of freedom. For group 2 we only have two degrees of freedom since l

12

= l

22

= 0. The constraint l

31

= l

41 ensures that single user dirty paper coding can be done to remove the interference of group

2. With this scheme group 1 enjoys an extra degree of freedom. If there are N groups each with 2 users and 2 N transmit antennas, then the i th , i ∈ { 1 , 2 . . . N } group has ( N − i + 2) degrees of freedom. On the other hand if we performed zero forcing to all the other users in each group, we only have 2 degrees of freedom for each group. Thus a simple scheme that employs dirty paper coding and takes into account that users in each group want a common message can do better.

The choice of V used in the above argument is somewhat arbitrary. It allows us to apply the single user dirty paper coding technique to the second group of users. We are not restricted to this particular value of V if there is a technique that allows us to deal with more than one interference simultaneously. More specifically, the channel for user 3 and 4 are given by y

3

= l

31 m

1

+ l

31 m

2

+ w

3 and y

4

= l

41 m

1

+ l

42 m

2

+ w

4 respectively. We would like to encode the message m

1 in such a way that both the users have as little interference m

2 as possible

8

. This problem is a multiuser generalization of the single user dirty paper coding result which deals with only one user. In the next chapter we study this problem in detail. We consider a broader class of channels: channels with state parameters known to the transmitter and develop some multiuser generalizations to these channels. By analogy to [4], we view this generalization as “Writing on many pieces of Dirty Paper at once”.

We obtain some achievable rates for the special case of Gaussian channels with Gaussian interference, which we are interested in for the present application. However the capacity of this channel remains an open problem.

8 For the 4

×

4 system we only need to deal with the case when the two interfering sequences for users 3 and

4 are scalar multiples of each other. But for larger systems, we need to consider more arbitrary interferences

37

Chapter 3

Multicasting with Known

Interference as Side Information

In this chapter, we study some coding techniques for multicasting channels which experience an additive interference known to the transmitter. Channels with interference known to the transmitter model many different applications. In digital watermarking for example, we wish to embed a watermark onto a host signal. The host signal is treated as known interference in encoding the watermark [2]. In the last chapter (see also [1],[36]) we observed that when the transmitter has different messages to send to different users, it can encode one message treating the other as known interference. In this chapter we consider the problem of coding for channels with known interference in detail. This particular scenario is a special case of a broader class of channels, known as channels with state parameters known to the transmitter. We examine this class of channels initially and then specialize to the known interference case.

Communication channels controlled by state parameters known to the transmitter were first introduced by Shannon [28]. Shannon studies a point to point channel, whose transition probability depends on a state variable which is known to the transmitter but not to the receiver. In Shannon’s model the transmitter becomes aware of the state parameters during the course of transition (i.e. the transmitter has causal knowledge of the state sequence).

Gel’fand and Pinsker [13] study a similar channel model where the transmitter has knowledge of the entire state sequence before the transmission begins (i.e. the transmitter has non-causal knowledge of the state sequence). The knowledge of state variables is commonly

38

referred to as side information. See Figure 3-1 for the model of such a channel.