INFORMATION TO BE INCLUDED IN STUDENT OUTCOMES ASSESSMENT PLANS

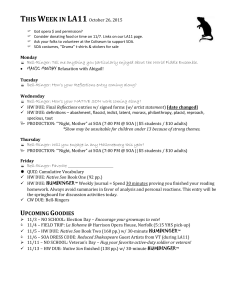

advertisement

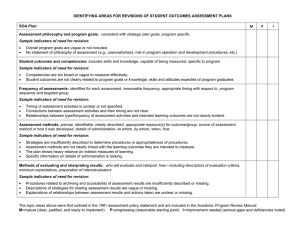

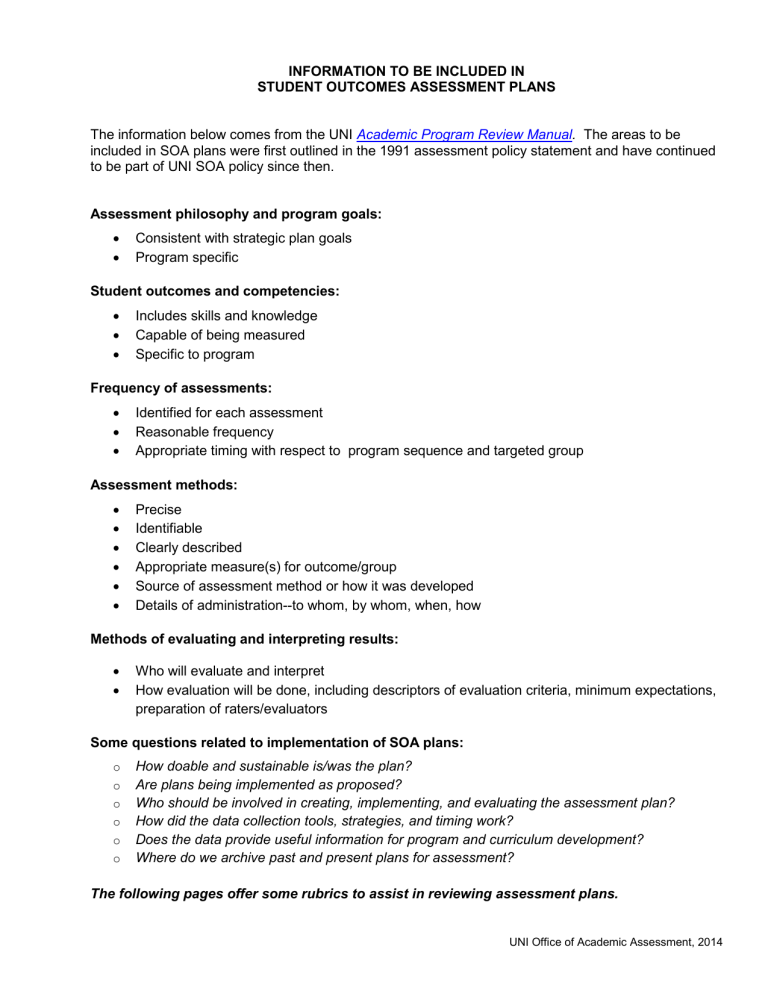

The information below comes from the UNI Academic Program Review Manual. The areas to be included in SOA plans were first outlined in the 1991 assessment policy statement and have continued to be part of UNI SOA policy since then.

Assessment philosophy and program goals:
• Consistent with strategic plan goals
• Program specific

Student outcomes and competencies:
• Includes skills and knowledge
• Capable of being measured
• Specific to program

Frequency of assessments:
• Identified for each assessment
• Reasonable frequency
• Appropriate timing with respect to program sequence and targeted group

Assessment methods:
• Precise
• Identifiable
• Clearly described
• Appropriate measure(s) for outcome/group
• Source of assessment method or how it was developed
• Details of administration--to whom, by whom, when, how

Methods of evaluating and interpreting results:
• Who will evaluate and interpret
• How evaluation will be done, including descriptors of evaluation criteria, minimum expectations, preparation of raters/evaluators

Some questions related to implementation of SOA plans:
o How doable and sustainable is/was the plan?
o Are plans being implemented as proposed?
o Who should be involved in creating, implementing, and evaluating the assessment plan?
o How did the data collection tools, strategies, and timing work?
o Does the data provide useful information for program and curriculum development?
o Where do we archive past and present plans for assessment?

The following pages offer some rubrics to assist in reviewing assessment plans.

UNI Office of Academic Assessment, 2014

CRITERIA FOR STUDENT OUTCOMES ASSESSMENT PLANS FROM THE ACADEMIC PROGRAM REVIEW MANUAL

The information below comes from the UNI Academic Program Review Manual. The topic areas below were first outlined in the 1991 assessment policy statement and included in previous manuals.

SOA Plan (each area rated Ready for use, Details needed, or Not included):
• Assessment philosophy and program goals: consistent with strategic plan goals, program specific
• Student outcomes and competencies: includes skills and knowledge, capable of being measured, specific to program
• Frequency of assessments: identified for each assessment, reasonable frequency, appropriate timing with respect to program sequence and targeted group
• Assessment methods: precise, identifiable, clearly described, appropriate measure(s) for outcome/group, source of assessment method or how it was developed, details of administration--to whom, by whom, when, how
• Methods of evaluating and interpreting results: who will evaluate and interpret, and how, including descriptors of evaluation criteria, minimum expectations, preparation of raters/evaluators

Self-Study Report on Student Outcomes Assessment (Organizational Format for a Self-Study Report, Section V)

This section of the APR Manual suggests further areas for SOA evaluation.

• Routine procedures for measuring student outcomes, as defined in the program’s SOA Plan
  o Are plans being implemented as proposed?
• Summary of important findings from assessing student outcomes. Describe how these findings are shared with program faculty, students, and other interested parties. Describe specific changes made in the program as a result of information derived from student outcomes assessment findings.
  o How are findings reported and shared?
  o How are findings archived?
  o How are findings connected to program changes?
  o How are changes reported and archived?
• Recommendations for improvement in SOA processes.
  o How is the assessment plan evaluated?
  o How are changes to the assessment plan reported and archived?

IDENTIFYING AREAS FOR REVISIONS OF STUDENT OUTCOMES ASSESSMENT PLANS

SOA Plan (each area rated M, P, or I):

Assessment philosophy and program goals: consistent with strategic plan goals, program specific
Sample indicators of need for revision:
• Overall program goals are vague or not included.
• No statement of philosophy of assessment (e.g., use/usefulness, role in program operation and development procedures).

Student outcomes and competencies: includes skills and knowledge, capable of being measured, specific to program
Sample indicators of need for revision:
• Competencies are too broad or vague to measure effectively.
• Student outcomes are not clearly related to program goals or to the knowledge, skills, and attitudes expected of program graduates.

Frequency of assessments: identified for each assessment, reasonable frequency, appropriate timing with respect to program sequence and targeted group
Sample indicators of need for revision:
• Timing of assessment activities is unclear or not specified.
• Connections between assessment activities and their timing are not clear.
• Relationships between type/frequency of assessment activities and intended learning outcomes are not clearly evident.

Assessment methods: precise, identifiable, clearly described, appropriate measure(s) for outcome/group, source of assessment method or how it was developed, details of administration--to whom, by whom, when, how
Sample indicators of need for revision:
• Strategies are insufficiently described to determine procedures or appropriateness of procedures.
• Assessment methods are not clearly linked with the learning outcomes they are intended to measure.
• The plan shows heavy reliance on indirect measures of learning.
• Specific information on details of administration is lacking.

Methods of evaluating and interpreting results: who will evaluate and interpret, and how, including descriptors of evaluation criteria, minimum expectations, preparation of raters/evaluators
Sample indicators of need for revision:
• Procedures related to archiving and accessibility of assessment results are insufficiently described or missing.
• Descriptions of strategies for sharing assessment results are vague or missing.
• Explanations of relationships between assessment results and actions taken are unclear or missing.

The topic areas above were first outlined in the 1991 assessment policy statement and are included in the Academic Program Review Manual.

M = mature (clear, justified, and ready to implement)
P = progressing (reasonable starting point)
I = improvement needed (serious gaps and deficiencies noted)