Technical Report: The North Carolina Mathematics Tests

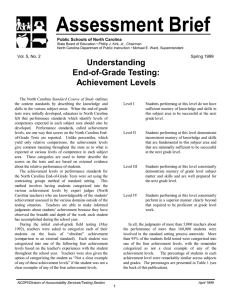
