DEFENSE SCIENCE AND TECHNOLOGY: Further DOD and DOE Actions Needed

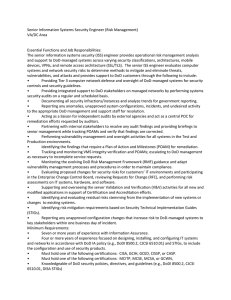
