

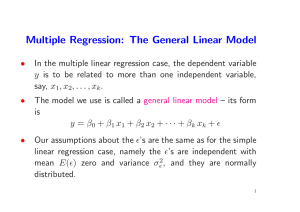

Multiple Regression: The General Linear Model

• In the multiple linear regression case, the dependent variable $y$ is to be related to more than one independent variable, say $x_1, x_2, \ldots, x_k$.

• The model we use is called a general linear model. Its form is

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k + \epsilon$$

• Our assumptions about the $\epsilon$'s are the same as for the simple linear regression case: the $\epsilon$'s are independent with mean $E(\epsilon)$ zero and variance $\sigma^2$, and they are normally distributed.

• Data consist of $n$ cases of $k + 1$ values denoted by

$$(y_i, x_{i1}, x_{i2}, \ldots, x_{ik}), \quad i = 1, 2, \ldots, n$$

• Using this full notation we can write the model as

$$y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_k x_{ik} + \epsilon_i$$

• The form of the general linear model, as given, is understood to permit polynomial terms like $x_2^3$, cross-product terms like $x_2 x_3$, etc.

• Thus the polynomial model in one variable

$$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \beta_3 x^3 + \epsilon$$

fits the form of the general linear model

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \epsilon$$

with $x_1 \equiv x$, $x_2 \equiv x^2$, $x_3 \equiv x^3$.

• The multiple regression model

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1^2 + \beta_4 x_2^2 + \beta_5 x_1^3 + \beta_6 x_2^3 + \beta_7 x_1 x_2 + \epsilon$$

can also be written as

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_4 + \beta_5 x_5 + \beta_6 x_6 + \beta_7 x_7 + \epsilon$$

with $x_3 = x_1^2$, $x_4 = x_2^2$, $x_5 = x_1^3$, $x_6 = x_2^3$, $x_7 = x_1 x_2$.

• Even terms like $\log(x_3)$, $e^{x_2}$, $\cos(x_4)$, etc., are permitted.

• The model is called a linear model because its expression is linear in the parameters, i.e., the $\beta$'s. The fact that it may involve nonlinear functions of the $x$'s is irrelevant.

• Confidence intervals and tests of hypotheses concerning the parameters will be discussed, as well as inferences about $E(y)$ and a future $y$ observation.

• Here let us just state that the presence (or absence) of interaction between two independent variables, say $x_1$ and $x_2$, can be tested by including product terms such as $x_1 x_2$ in the model.
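The point that a model with powers and cross products is still linear in the $\beta$'s can be sketched in a few lines. The function names and the coefficient values below are hypothetical, chosen only for illustration; the derived regressors follow the relabeling $x_3 = x_1^2, \ldots, x_7 = x_1 x_2$ given above.

```python
def derived_terms(x1, x2):
    """Return the regressors x1, x2, x1^2, x2^2, x1^3, x2^3, x1*x2 (x_1..x_7)."""
    return [x1, x2, x1**2, x2**2, x1**3, x2**3, x1 * x2]


def mean_response(betas, regressors):
    """E(y) = beta_0 + sum_j beta_j * x_j, a linear function of the betas."""
    return betas[0] + sum(b * x for b, x in zip(betas[1:], regressors))


# Hypothetical coefficients beta_0..beta_7 (not taken from any data set).
betas = [1.0, 0.5, -0.25, 0.1, 0.0, 0.01, 0.0, 2.0]

terms = derived_terms(2.0, 3.0)
ey = mean_response(betas, terms)

# Linearity in the parameters: doubling every beta doubles E(y),
# even though the regressors are nonlinear functions of x1 and x2.
ey_doubled = mean_response([2.0 * b for b in betas], terms)
```

Once the derived columns are computed, the fitting problem is the same as for any other set of $k = 7$ regressors.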
• The model

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1 x_2 + \epsilon$$

allows us to test whether interaction between $x_1$ and $x_2$ exists.

• The concept of interaction, and the interpretation of this model, will be discussed later.

The Multiple Regression Model

• When a general linear model relates $y$ to a set of quantitative independent variables ($x$'s) that may include squared terms, product terms, etc., we have a multiple regression model.

• When the independent variables are dummy variables coded 0 or 1, representing values of qualitative independent variables or levels of treatment factors, the resulting models are called analysis of variance models.

• Just as we did in Chapter 11 for the simple linear regression model $y = \beta_0 + \beta_1 x + \epsilon$, we will consider least squares estimation of the parameters $\beta_0, \beta_1, \beta_2, \ldots, \beta_k$ in the general linear model.

• These least squares estimates of the parameters will be denoted by $\hat\beta_0, \hat\beta_1, \ldots, \hat\beta_k$.

• Computing quantities of interest like the $\hat\beta_i$, SSE, SSReg, MSE, MSReg, etc., must be done using a computer because the amount of arithmetic required is very large.

• In order to demonstrate exactly what computations are required (so you will understand what the computer is doing) it is easiest to use matrix notation.

Multiple Regression Computations Using Matrix Notation

• Using matrix notation, we can define the vectors $\mathbf{y}$, $\boldsymbol\beta$, and $\boldsymbol\epsilon$ and the matrix $X$, with the objective being to give a compact form of definition of quantities like least squares point and interval estimates, test statistics, etc. These are defined as follows:

$$
\underset{n \times 1}{\mathbf{y}} =
\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix},
\qquad
\underset{n \times (k+1)}{X} =
\begin{pmatrix}
1 & x_{11} & x_{12} & \cdots & x_{1k} \\
1 & x_{21} & x_{22} & \cdots & x_{2k} \\
1 & x_{31} & x_{32} & \cdots & x_{3k} \\
\vdots & \vdots & \vdots & & \vdots \\
1 & x_{n1} & x_{n2} & \cdots & x_{nk}
\end{pmatrix}
$$

• $X'X$ is the matrix of sums of cross products of the various columns of $X$.
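$$
\underset{(k+1) \times 1}{\boldsymbol\beta} =
\begin{pmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{pmatrix},
\qquad
\underset{n \times 1}{\boldsymbol\epsilon} =
\begin{pmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{pmatrix}
$$

• Now the model can be written in terms of matrices as

$$\mathbf{y} = X\boldsymbol\beta + \boldsymbol\epsilon$$

• The following quantities, defined in terms of the above, are useful in the multiple regression computations that follow.

• The element in the $i$th row and the $j$th column of $X'X$ is

$$\sum_{t=1}^{n} x_{ti}\, x_{tj}$$

where rows and columns are indexed from 0 and $x_{t0} = 1$ for the column of ones.

• Denote the elements of the inverse of this matrix by $v_{ij}$ where $i = 0, 1, \ldots, k$, $j = 0, 1, \ldots, k$. In this notation

$$
(X'X)^{-1} =
\begin{bmatrix}
v_{00} & v_{01} & v_{02} & \cdots & v_{0k} \\
v_{10} & v_{11} & v_{12} & \cdots & v_{1k} \\
v_{20} & v_{21} & v_{22} & \cdots & v_{2k} \\
\vdots & \vdots & \vdots & & \vdots \\
v_{k0} & v_{k1} & v_{k2} & \cdots & v_{kk}
\end{bmatrix}
$$

where $X'X$ and $(X'X)^{-1}$ are $(k+1) \times (k+1)$ matrices.

• Note also that $v_{ij} = v_{ji}$ for all $(i, j)$, i.e., the $(X'X)^{-1}$ matrix is symmetric.

• The least squares estimates $\hat\beta_i$ of the parameters $\beta_i$ in the model are elements of the vector $\hat{\boldsymbol\beta} = (\hat\beta_0, \hat\beta_1, \ldots, \hat\beta_k)'$, where $\hat{\boldsymbol\beta}$ is the solution to the normal equations

$$X'X \hat{\boldsymbol\beta} = X'\mathbf{y}$$

and is given by

$$\hat{\boldsymbol\beta} = (X'X)^{-1} X'\mathbf{y}$$

• Note that without using matrix notation the normal equations are the following:

$$
\begin{aligned}
n\hat\beta_0 + \textstyle\sum x_1\, \hat\beta_1 + \cdots + \sum x_k\, \hat\beta_k &= \textstyle\sum y \\
\textstyle\sum x_1\, \hat\beta_0 + \sum x_1^2\, \hat\beta_1 + \cdots + \sum x_1 x_k\, \hat\beta_k &= \textstyle\sum x_1 y \\
&\;\;\vdots \\
\textstyle\sum x_k\, \hat\beta_0 + \sum x_k x_1\, \hat\beta_1 + \cdots + \sum x_k^2\, \hat\beta_k &= \textstyle\sum x_k y
\end{aligned}
$$

Thus $\hat\beta_0, \hat\beta_1, \ldots, \hat\beta_k$ are the solutions to this set of simultaneous equations.

• The standard deviations of the $\hat\beta_j$ are

$$\sigma_{\hat\beta_j} = \sigma \sqrt{v_{jj}}, \quad j = 0, 1, \ldots, k$$

• The sum of squares of residuals (or SSE) is

$$SSE = \mathbf{y}'\mathbf{y} - \hat{\boldsymbol\beta}' X' \mathbf{y}$$

• The mean square error, i.e., the $s^2$ which we use to estimate $\sigma^2$, is

$$s^2 = MSE = \frac{SSE}{n - (k+1)}$$

• Thus the standard errors of the $\hat\beta_j$ are

$$s_{\hat\beta_j} = s \sqrt{v_{jj}}, \quad j = 0, 1, \ldots, k$$

• A $100(1-\alpha)\%$ confidence interval for $E(y_{n+1})$, the population mean of $Y_{n+1}$ at $(x_{n+1,1}, x_{n+1,2}, \ldots, x_{n+1,k})$, is

$$\hat y_{n+1} \pm t_{\alpha/2} \cdot s \sqrt{\boldsymbol\ell_{n+1}' (X'X)^{-1} \boldsymbol\ell_{n+1}}$$

where $\boldsymbol\ell_{n+1} = (1, x_{n+1,1}, x_{n+1,2}, \ldots, x_{n+1,k})'$.

• A $100(1-\alpha)\%$ prediction interval for a new $y_{n+1}$ simply adds one under the square root above:

$$\hat y_{n+1} \pm t_{\alpha/2} \cdot s \sqrt{1 + \boldsymbol\ell_{n+1}' (X'X)^{-1} \boldsymbol\ell_{n+1}}$$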
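The matrix computations above can be sketched in plain Python to show what the computer is doing. This is a minimal illustration, not a production routine: the helper names (`matmul`, `inverse`, `fit`, `half_widths`), the small data set, and the Gauss-Jordan inverse are all assumptions made for the example, and the critical value $t_{.025,2} = 4.303$ is supplied by hand from a $t$ table rather than computed.

```python
import math


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(m):
    """Gauss-Jordan inverse with partial pivoting; adequate for a small X'X."""
    n = len(m)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(m)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("X'X is singular or nearly so")
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def fit(x_rows, y):
    """Least squares fit via the normal equations X'X b = X'y."""
    X = [[1.0] + [float(v) for v in row] for row in x_rows]  # column of ones first
    Xt = [list(col) for col in zip(*X)]
    XtX = matmul(Xt, X)                                      # sums of cross products
    V = inverse(XtX)                                         # elements v_ij
    Xty = [sum(a * b for a, b in zip(col, y)) for col in Xt]
    beta = [sum(v * t for v, t in zip(row, Xty)) for row in V]
    sse = sum(v * v for v in y) - sum(b * t for b, t in zip(beta, Xty))  # y'y - b'X'y
    s = math.sqrt(sse / (len(y) - len(beta)))                # s^2 = SSE / (n - (k+1))
    se = [s * math.sqrt(V[j][j]) for j in range(len(beta))]  # s * sqrt(v_jj)
    return {"beta": beta, "V": V, "XtX": XtX, "Xty": Xty, "sse": sse, "s": s, "se": se}


def half_widths(result, x_new, t_crit):
    """Half-widths of the interval for E(y) and of the prediction interval at x_new."""
    l = [1.0] + [float(v) for v in x_new]
    V = result["V"]
    q = sum(l[i] * V[i][j] * l[j] for i in range(len(l)) for j in range(len(l)))
    return (t_crit * result["s"] * math.sqrt(q),
            t_crit * result["s"] * math.sqrt(1.0 + q))


# Hypothetical data: n = 5 cases, k = 2 predictors.
x_rows = [(0, 1), (1, 0), (2, 2), (3, 1), (4, 3)]
y = [4.1, 2.9, 11.2, 9.8, 18.0]
res = fit(x_rows, y)
ci_half, pi_half = half_widths(res, (2, 1), t_crit=4.303)  # df = 5 - 3 = 2
```

Forming $(X'X)^{-1}$ explicitly mirrors the formulas in these notes; numerical software usually solves the least squares problem by other factorizations, so treat this as a teaching sketch.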
• In the general linear model the $\beta$'s are sometimes called partial slopes. $\beta_j$ is the expected change in $y$ for unit change in $x_j$ when all other $x$'s are held constant, i.e., when the other $x$ variables are fixed at a set of values.

Inference On Multiple Regression Model

• Confidence intervals for the $\beta_j$ therefore have the form

$$\hat\beta_j \pm t_{\alpha/2} \cdot s \sqrt{v_{jj}}, \quad j = 0, 1, 2, \ldots, k$$

where $t_{\alpha/2}$ is the upper $\alpha/2$ percentage point (the $100(1 - \alpha/2)$th percentile) of the $t$-distribution with $df = n - (k+1)$.

• To test $H_0: \beta_j = 0$ vs. $H_a: \beta_j \neq 0$ use the $t$-statistic

$$t_j = \frac{\hat\beta_j}{s \sqrt{v_{jj}}}$$

with $df = n - (k+1)$, for each $j = 1, \ldots, k$.

• Analysis of variance table for multiple regression:

| Source | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Regression | $k$ | SSReg | MSReg = SSReg/$k$ | F = MSReg/MSE |
| Error | $n-(k+1)$ | SSE | MSE = SSE/$(n-(k+1))$ | |
| Total | $n-1$ | SSTot | | |

• The computed F-statistic is used to test the hypothesis

$$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0 \quad \text{vs.} \quad H_a: \text{at least one } \beta_j \neq 0.$$

• The null hypothesis is rejected if the computed F-value exceeds the $F_{\alpha,\,k,\,n-(k+1)}$ percentile of the F-distribution for the selected $\alpha$ level.

• The coefficient of determination $R^2_{y \cdot x_1, x_2, \ldots, x_k}$ is computed as

$$R^2_{y \cdot x_1, x_2, \ldots, x_k} = \frac{S_{yy} - SSE}{S_{yy}}$$

• Generally, $R^2_{y \cdot x_1, \ldots, x_k}$ is the proportion of the variability in $y$ that is accounted for by the independent variables $x_1, x_2, \ldots, x_k$ in the model.

• Estimates computed for a full model and for a reduced model (one that uses only some of the $x$ variables) will usually all be different. If the $x_1$ variable is contained in both models, for example, the estimate of the $\beta_1$ coefficient ($\hat\beta_1$) will not be the same for the two least squares fits. Example 12.18 (page 658) illustrates this.

• Quantities like $s_{\hat\beta_j}$, and a confidence interval (band) for $E(y)$ or a prediction interval (band) for a future $y$, cannot reasonably be computed by hand.

• To obtain intervals at $x$ values for which $y$ was not observed, include an $(n+1)$th row of $x$ values in the data set with a missing value (i.e., a period) for the value of $y$.
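The arithmetic behind the ANOVA table, the overall F-statistic, $R^2$, and the $t$-statistic can be sketched as follows. The function names are hypothetical. The inputs in the usage lines are the full-model values these notes report for Example 12.18 (SSReg = 24.0624, SSE = 2.27564, $n = 20$, $k = 4$), and the sketch uses the identity $S_{yy} = SSTot = SSReg + SSE$.

```python
def t_statistic(beta_hat_j, s, v_jj):
    """t_j = beta_hat_j / (s * sqrt(v_jj)), with df = n - (k+1)."""
    return beta_hat_j / (s * v_jj ** 0.5)


def anova_summary(ss_reg, sse, n, k):
    """Fill in the ANOVA table entries from SSReg, SSE, n and k."""
    df_error = n - (k + 1)
    ms_reg = ss_reg / k
    mse = sse / df_error
    ss_tot = ss_reg + sse                  # S_yy
    return {
        "df_regression": k,
        "df_error": df_error,
        "df_total": n - 1,
        "MSReg": ms_reg,
        "MSE": mse,
        "F": ms_reg / mse,                 # compare with F_{alpha, k, n-(k+1)}
        "R2": (ss_tot - sse) / ss_tot,     # (S_yy - SSE) / S_yy
    }


# Full-model sums of squares quoted in Example 12.18 of these notes.
summary = anova_summary(24.0624, 2.27564, n=20, k=4)
```

The critical value $F_{\alpha,k,n-(k+1)}$ is still read from a table (or obtained from statistical software); it is not computed here.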
• The line of data for a case whose $y$ is to be predicted is in the form

$$(\,\cdot\,, x_1, x_2, \ldots, x_k)$$

• Using the period in the $y$-value position tells JMP that the $y$ observation is to be predicted. JMP will ignore this row of $x$ values when computing sums of squares, least squares estimates, etc., but will then compute the predicted value $\hat y$, the confidence interval for $E(y)$, and the prediction interval for a future $y$ observation at the given set of $x$ values.

• Note that when we say "band" for $E(y)$ and future $y$ we are talking about a multivariate band, i.e., a "band" in more than two dimensions.

More Inference On Multiple Regression Model

• Other than a full model (i.e., one involving all the $x$ variables being considered at the time) one often looks at reduced models (ones involving some but not all of the full set of independent variables).

• The term multicollinearity is used to describe the situation wherein some of the independent variables have strong correlations amongst themselves.

• If we are using both the high school GPA ($x_1$) and the SAT score ($x_2$) in a multiple regression model to predict college GPA ($y$), it is very likely that the two variables $x_1$ and $x_2$ are correlated.

• If correlation is very high for a pair of $x$ variables, the effect is that the estimated coefficients ($\hat\beta$'s) of one or both of these variables will have very high standard errors, resulting in the $t$-tests for these variables, $H_0: \beta_j = 0$ vs. $H_a: \beta_j \neq 0$, failing to reject the respective null hypotheses.

Statistics useful for explaining multicollinearity

• The estimated standard errors of the $\hat\beta_j$'s can be expressed as functions of $R^2$ values obtained by regressing $x_j$ on the rest of the $x$ variables:

$$s_{\hat\beta_j} = s \sqrt{\frac{1}{S_{x_j x_j}\left(1 - R^2_{x_j \cdot x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}\right)}}$$

where

$$S_{x_j x_j} = \sum x_j^2 - \frac{\left(\sum x_j\right)^2}{n}$$

and $R^2_{x_j \cdot x_1, x_2, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}$ is the coefficient of determination for regressing $x_j$ on $x_1, x_2, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k$.
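The standard error formula above can be evaluated directly to see how a large $R^2$ among the predictors inflates $s_{\hat\beta_j}$. The function names and all numerical inputs below ($s = 2$, the four $x_j$ values, and the two $R^2$ values) are hypothetical and serve only to illustrate the formula.

```python
import math


def s_xx(values):
    """S_xjxj = sum(x_j^2) - (sum x_j)^2 / n."""
    n = len(values)
    return sum(v * v for v in values) - sum(values) ** 2 / n


def se_beta_j(s, sxx_j, r2_j):
    """s_beta_j = s * sqrt(1 / (S_xjxj * (1 - R^2_j)))."""
    return s * math.sqrt(1.0 / (sxx_j * (1.0 - r2_j)))


sxx = s_xx([1.0, 2.0, 3.0, 4.0])             # 30 - 100/4 = 5
se_uncorrelated = se_beta_j(2.0, sxx, 0.0)   # x_j unrelated to the other x's
se_collinear = se_beta_j(2.0, sxx, 0.99)     # x_j nearly determined by the others
```

With the same $s$ and the same spread in $x_j$, moving $R^2$ from 0 to 0.99 multiplies the standard error by $\sqrt{1/0.01} = 10$.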
• That is, $R^2_{x_j \cdot x_1, x_2, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}$ is the coefficient of determination computed using the variable $x_j$ as the dependent variable and all of the other $x$'s in the original model as the independent variables.

• This definition is useful for illustrating the effect of multicollinearity on the estimation of each coefficient $\beta_j$.

• If the $x_j$ variable is highly collinear with one or more of the other $x$ variables, then $R^2_{x_j \cdot x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}$ will be large.

• Note that the denominator of the formula above for $s_{\hat\beta_j}$ contains the term $\left(1 - R^2_{x_j \cdot x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}\right)$.

• Thus the formula for $s_{\hat\beta_j}$ shows directly the effect of severe multicollinearity on the estimation.

• A large standard error for $\hat\beta_j$ shows that the coefficient $\beta_j$ will be estimated inaccurately.

• One noticeable effect of a high standard error of $\hat\beta_j$ is that it may result in an incorrect sign for $\hat\beta_j$. For example, one would expect the sign of $\hat\beta_1$ to be positive if subject matter knowledge suggests that the mean $E(Y)$ must increase when the value of $x_1$ increases (when the other $x$ variable values are unchanged). However, if the parameter is estimated with high variability, the point estimate of the parameter may show up as a negative value.

• The investigator may make a decision on which of the collinear variables will be retained in the model. Such a decision is usually based on subject matter knowledge about the predictive value of these variables to the model.

• Variables may be retained in the model even in the presence of some multicollinearity if they contribute types of information, needed to explain the variability in $y$, that other variables in the model may not be able to provide.

• A statistic called the vif may be calculated to determine the variables that are involved in multicollinearity. The variance inflation factor, or vif, for a coefficient $\beta_j$ is defined as

$$vif_j = \frac{1}{1 - R^2_{x_j \cdot x_1, x_2, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}}$$
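As a small illustration of the vif definition, the sketch below handles the special case of only two predictors, where regressing $x_1$ on $x_2$ is a simple linear regression and its $R^2$ equals the squared sample correlation. The function names and the data are hypothetical; with more than two predictors, $R^2_{x_j \cdot \ldots}$ would come from a full multiple regression of $x_j$ on the other $x$'s.

```python
def r_squared_simple(xj, x_other):
    """R^2 from regressing xj on one other predictor (squared correlation)."""
    n = len(xj)
    mean_j = sum(xj) / n
    mean_o = sum(x_other) / n
    s_jj = sum((a - mean_j) ** 2 for a in xj)
    s_oo = sum((b - mean_o) ** 2 for b in x_other)
    s_jo = sum((a - mean_j) * (b - mean_o) for a, b in zip(xj, x_other))
    return s_jo ** 2 / (s_jj * s_oo)


def vif(r2_j):
    """vif_j = 1 / (1 - R^2_j)."""
    return 1.0 / (1.0 - r2_j)


# Hypothetical predictor values, moderately correlated (r = 0.6).
x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [2.0, 1.0, 4.0, 3.0]

r2 = r_squared_simple(x1, x2)
vif_1 = vif(r2)
```

In the two-predictor case both variables share the same vif, because the squared correlation is symmetric in $x_1$ and $x_2$.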
• The vif measures the factor by which the variance (square of the standard error) of $\hat\beta_j$ increases because of multicollinearity: the denominator of the formula for $s_{\hat\beta_j}$ will be small if $R^2_{x_j \cdot x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_k}$ is large, and this will possibly result in a very large standard error for $\hat\beta_j$.

• If the vif is 1 for a particular variable, there is no multicollinearity problem at all with that variable.

• As a rule of thumb, a vif greater than 10 indicates severe collinearity of $x_j$ with the other $x$'s, and the standard error of the corresponding estimated coefficient will be inflated.

Inferences Concerning a Set of β's

• We know that we can test a hypothesis about any one of the $\beta$'s in the model using a $t$-test. That is, a hypothesis like $H_0: \beta_2 = 0$ employs the test statistic $t = \hat\beta_2 / s_{\hat\beta_2}$.

• Sometimes we wish to test the hypothesis that two or more of the $\beta$'s in the model are zero against the alternative hypothesis that at least one of these $\beta$'s is not zero.

• Here we shall assume that $k$ is the total number of predictors ($x$'s) and that $g$ is the number of coefficients considered to be nonzero where, obviously, $g < k$. Thus $k - g$ represents the number of variables with coefficients hypothesized to be zero.

• To test this hypothesis we use an F test. To formulate it we first fit the two models:

Full Model: The model involving all $x$'s.

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k + \epsilon$$

Reduced Model: The model involving only those $x$'s from the full model whose $\beta$ coefficients are not hypothesized to be zero.

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_g x_g + \epsilon$$

• Now the F-test statistic for testing $H_0$ is

$$F = \frac{[SSReg(Full) - SSReg(Reduced)]/(k - g)}{MSE(Full)}$$

• The numerator and denominator degrees of freedom here for the F statistic are $k - g$ and $n - (k+1)$, respectively.

• When this computed F exceeds the F-value obtained from the F-tables for a chosen $\alpha$, $H_0$ is rejected.
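The F statistic for comparing the full and reduced models is simple enough to write as a small function. The function name and the numbers in the usage lines are hypothetical, chosen so the arithmetic is easy to follow by hand.

```python
def partial_f(ssreg_full, ssreg_reduced, mse_full, k, g):
    """F = [(SSReg(Full) - SSReg(Reduced)) / (k - g)] / MSE(Full).

    Returns the statistic with its numerator df (k - g); the denominator
    df is n - (k + 1), taken from the full-model fit.
    """
    if not 0 <= g < k:
        raise ValueError("need 0 <= g < k")
    numerator_df = k - g
    f_value = ((ssreg_full - ssreg_reduced) / numerator_df) / mse_full
    return f_value, numerator_df


# Hypothetical values: k = 5 predictors in the full model, g = 3 retained.
f_value, num_df = partial_f(ssreg_full=50.0, ssreg_reduced=30.0,
                            mse_full=2.5, k=5, g=3)
# (50 - 30) / 2 = 10, and 10 / 2.5 = 4.0
```

The computed value is then compared with the tabled $F_{\alpha,\,k-g,\,n-(k+1)}$.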
• Notice that the $k - g$ variables corresponding to the coefficients to be tested equal to zero are assumed to be the last $k - g$ variables in the full model. This can be done without any loss of generality since the order in which the variables appear in the full model can always be re-arranged. The reason for doing this is that it is easier to state the hypotheses to be tested if the two models are formulated as above. In this situation, the hypotheses to be tested are

$$H_0: \beta_{g+1} = \beta_{g+2} = \cdots = \beta_k = 0 \quad \text{vs.} \quad H_a: \text{at least one of these is not zero.}$$

• Example 12.18 illustrates this kind of a test. The two models considered are:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_4 + \epsilon$$

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \epsilon$$

• The null hypothesis $H_0: \beta_3 = \beta_4 = 0$ tests whether $x_3$ and $x_4$ taken together contribute significant predictive value to be included in the Full Model.

• Fitting the two models, using the same 20 observations in each case, yields the following:

Full Model:

Prediction Equation: $\hat y = -2.784 + 0.02679 x_1 + 0.50351 x_2 + 0.74293 x_3 + 0.05113 x_4$

ANOVA Table:

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 4 | 24.0624 | 6.0156 | 39.65 |
| Error | 15 | 2.27564 | 0.1517 | |
| Total | 19 | 26.338 | | |

Reduced Model:

Prediction Equation: $\hat y = -0.8709 + 0.0394 x_1 + 0.828 x_2$

ANOVA Table:

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 2 | 2.9125 | 1.456 | 1.06 |
| Error | 17 | 23.425 | 1.378 | |
| Total | 19 | 26.338 | | |

• Thus the test for $H_0: \beta_3 = \beta_4 = 0$ is:

$$\text{T.S.} \quad F = \frac{[SSReg(Full) - SSReg(Reduced)]/(k - g)}{MSE(Full)} = \frac{(24.0624 - 2.9125)/(4 - 2)}{0.1517} = 69.7$$

• For $\alpha = 0.01$, the percentile from the F table is $F_{.01,2,15} = 6.36$. Thus we reject $H_0$ at $\alpha = 0.01$.

• In this case we have evidence that the Access and Structure variables do actually contribute predictive value to the model.

Including Interaction Terms in the Model

• The concept of interaction between two independent variables, say $x_1$ and $x_2$, is discussed in Section 12.1.

• By definition, $x_1$ and $x_2$ do not interact if the expected change in $y$ for unit change in $x_1$ does not depend on $x_2$.

• To illustrate, consider the first-order model in only $x_1$ and $x_2$,

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \epsilon$$

That is, consider

$$E(y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2$$

• When the value of $x_2$ is kept fixed, $E(y)$ is a straight line function of $x_1$.

[Figure: $E(y)$ plotted against $x_1$ for $x_2 = k_1$, $x_2 = k_2$, $x_2 = k_3$; the three lines are parallel.]

• Different values of $x_2$ give parallel lines. Thus the expected change in $y$ for unit change in $x_1$ will remain $\beta_1$ regardless of what the value of $x_2$ is.

• Now suppose we have the model

$$E(y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1 x_2 = \beta_0 + (\beta_1 + \beta_3 x_2)\, x_1 + \beta_2 x_2$$

• When the value of $x_2$ is kept fixed, $E(y)$ is still a straight line function of $x_1$.

[Figure: $E(y)$ plotted against $x_1$ for $x_2 = k_1$, $x_2 = k_2$, $x_2 = k_3$; the three lines are not parallel.]

• However, the slope is $\beta_1 + \beta_3 x_2$, which obviously is a function of $x_2$. Therefore the slope now depends on the value of $x_2$. So, as you see in the picture, the lines are not parallel because the slopes are different for different values of $x_2$.

• This model can be used to represent interaction between $x_1$ and $x_2$, if it exists.

• To detect interaction in regression data, we may construct a scatterplot of the data with the numbers identifying values of the variable $x_2$:

[Figure: scatterplot of $y$ against $x_1$, with each point plotted as the digit 1, 2, or 3 identifying its $x_2$ value; the traces are roughly parallel.]

• Here the scatterplot suggests parallel traces, hence no interaction is indicated.

• But if the plot looks like the one below, interaction is indicated.

[Figure: scatterplot of $y$ against $x_1$, with each point plotted as the digit 1, 2, or 3 identifying its $x_2$ value; the traces are not parallel.]

• However, this type of plot may not always be useful. Subject matter knowledge of the experimenter may suggest that certain interaction terms are needed in the model.

• A term in the model like $\beta x_2 x_3$ is used to account for interaction (a 2-factor interaction term, we say) between $x_2$ and $x_3$.
• Higher order interactions (3-factor, 4-factor, etc.) are conceivable, like $\beta x_1 x_3 x_4$ or $\beta x_1 x_2 x_3 x_4$, but we seldom consider other than possibly 2-factor interactions, mainly because such models become difficult to interpret and are therefore not useful.
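Returning to the two-variable interaction model $E(y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1 x_2$, the dependence of the $x_1$ slope on $x_2$ can be checked numerically. The function names and coefficient values below are hypothetical and serve only to illustrate the algebra.

```python
def mean_response(b0, b1, b2, b3, x1, x2):
    """E(y) = b0 + b1*x1 + b2*x2 + b3*x1*x2."""
    return b0 + b1 * x1 + b2 * x2 + b3 * x1 * x2


def slope_in_x1(b1, b3, x2):
    """Expected change in y per unit change in x1 at a fixed x2: b1 + b3*x2."""
    return b1 + b3 * x2


# Hypothetical coefficients.
b0, b1, b2 = 1.0, 2.0, 3.0

# No interaction (b3 = 0): the slope is b1 for every x2, so the lines are parallel.
slopes_no_interaction = [slope_in_x1(b1, 0.0, x2) for x2 in (1.0, 2.0, 4.0)]

# Interaction (b3 = 0.5): the slope changes with x2, so the lines are not parallel.
slopes_interaction = [slope_in_x1(b1, 0.5, x2) for x2 in (1.0, 2.0, 4.0)]

# A unit change in x1 at x2 = 4 changes E(y) by exactly the slope b1 + b3*x2.
change = (mean_response(b0, b1, b2, 0.5, 2.0, 4.0)
          - mean_response(b0, b1, b2, 0.5, 1.0, 4.0))
```

Testing $H_0: \beta_3 = 0$ with the $t$-statistic for $\hat\beta_3$ is then a test of whether these slopes differ.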