MA22S6 Numerical and data analysis techniques Slides by Mike Peardon (2011)

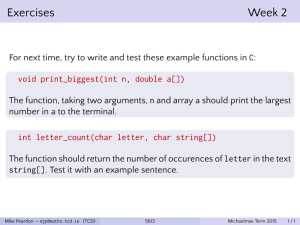


MA22S6

Numerical and data analysis techniques

Slides by Mike Peardon (2011)

minor modifications by Stefan Sint

School of Mathematics

Trinity College Dublin

Hilary Term 2016

Slides by Mike Peardon (2011), minor modifications by Stefan Sint (TCD). MA22S6 - Data analysis. Hilary Term 2016.

Probability


Sample space

Consider performing an experiment where the outcome is

purely randomly determined and where the experiment

has a set of possible outcomes.

Sample Space

A sample space S associated with an experiment is a set such that:

1. each element of S denotes a possible outcome O of the experiment, and

2. performing the experiment leads to a result corresponding to one element of S in a unique way.

Example: flipping a coin - choose the sample space

S = {H, T} corresponding to coin landing heads or tails.

Not unique: choose the sample space S = {L}

corresponding to coin just landing. Not very useful!


Events

Events

An event, E can be defined for a sample space S if a question

can be put that has an unambiguous answer for all outcomes

in S. E is the subset of S for which the question is true.

Example 1: Two coin flips, with S = {HH, HT, TH, TT}. Define the event E_{1T} = {HT, TH}, which corresponds to one and only one tail landing.

Example 2: Two coin flips, with S = {HH, HT, TH, TT}. Define the event E_{≥1T} = {HT, TH, TT}, which corresponds to at least one tail landing.


Probability measure

We can now define a probability model, which consists of a sample space S, a collection of events (which are all subsets of S) and a probability measure.

Probability measure

The probability measure assigns to each event E a probability P(E), with the following properties:

1. P(E) is a non-negative real number with 0 ≤ P(E) ≤ 1.

2. P(∅) = 0 (∅ is the empty set event).

3. P(S) = 1, and

4. P is additive, meaning that if E1, E2, . . . is a sequence of disjoint events then P(E1 ∪ E2 ∪ . . . ) = P(E1) + P(E2) + . . .

Two events are disjoint if they have no common outcomes.


Probability measure (2)

Venn diagrams give a very useful way of visualising

probability models.

Example: E^c ⊂ S is the complement to event E, and is the set of all outcomes NOT in E (i.e. E^c = {x : x ∉ E}).

The probability of an event is visualised as the area of the region in the Venn diagram.

[Figure: Venn diagram of the sample space S showing the event E and its complement E^c.]

The intersection A ∩ B and union A ∪ B of two events can

be depicted ...


Probability measure (3)

The intersection of two subsets A ⊂ S and B ⊂ S:

A ∩ B = {x : x ∈ A and x ∈ B}

The union of two subsets A ⊂ S and B ⊂ S:

A ∪ B = {x : x ∈ A or x ∈ B}

[Figure: two Venn diagrams of A and B inside S, one with A ∩ B shaded and one with A ∪ B shaded.]


Probability measure (4)

The Venn diagram approach makes it easy to remember:

P(E^c) = 1 − P(E)

P(A ∪ B) = P(A) + P(B) − P(A ∩ B)

Also define the conditional probability P(A|B), which is the probability event A occurs, given event B has occurred.

Since event B occurs with probability P(B) and both events A and B occur with probability P(A ∩ B), the conditional probability P(A|B) can be computed from

Conditional probability

$$P(A|B) = \frac{P(A \cap B)}{P(B)}$$


Conditional probability (1)

Conditional probability describes situations when partial

information about outcomes is given

Example: coin tossing

Three fair coins are flipped. What is the probability that the

first coin landed heads given exactly two coins landed heads?

S = {HHH, HHT, HTH, HTT, THH, THT, TTH, TTT}

A = {HHH, HHT, HTH, HTT} and B = {HHT, HTH, THH}

A ∩ B = {HHT, HTH}

Answer:

$$P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{2/8}{3/8} = \frac{2}{3}$$
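The three-coin calculation can be checked by brute-force enumeration. The following is a minimal sketch, assuming all eight outcomes are equally likely (fair coins); the helper name `prob` is introduced here purely for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space for three coin flips: 8 equally likely outcomes.
S = ["".join(flips) for flips in product("HT", repeat=3)]

# A: the first coin landed heads; B: exactly two coins landed heads.
A = {o for o in S if o[0] == "H"}
B = {o for o in S if o.count("H") == 2}

def prob(event):
    """Probability of an event when every outcome in S is equally likely."""
    return Fraction(len(event), len(S))

# Conditional probability P(A|B) = P(A and B) / P(B).
p_A_given_B = prob(A & B) / prob(B)
print(p_A_given_B)  # 2/3
```

Exact fractions are used instead of floats so the result can be compared directly with the hand calculation.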


Conditional probability (2)

Bayes’ theorem

For two events A and B with P(A) > 0 and P(B) > 0 we have

$$P(A|B) = \frac{P(A)}{P(B)}\, P(B|A)$$

Since P(A|B) = P(A ∩ B)/P(B) from the conditional probability result, we see P(A ∩ B) = P(B)P(A|B).

Switching A and B also gives P(B ∩ A) = P(A)P(B|A)

. . . A ∩ B is the same as B ∩ A . . .

so we get P(A)P(B|A) = P(B)P(A|B) and Bayes’ theorem follows.

[Portrait: Thomas Bayes (1702-1761)]


Partitions of state spaces

Suppose we can completely partition S into n disjoint

events, A1 , A2 , . . . An , so S = A1 ∪ A2 ∪ · · · ∪ An .

Now for any event E, we find

P(E) = P(E|A1)P(A1) + P(E|A2)P(A2) + . . . + P(E|An)P(An)

This result is seen by using the conditional probability

theorem and additivity property of the probability

measure. It can be remembered with the Venn diagram:

[Figure: Venn diagram showing the sample space S partitioned into A1, A2, A3, A4 and A5, together with the event E.]

A sobering example

With the framework built up so far, we can make powerful

(and sometimes surprising) predictions...

Diagnostic accuracy

A new clinical test for swine flu has been devised that has a

95% chance of finding the virus in an infected patient.

Unfortunately, it has a 1% chance of indicating the disease in

a healthy patient (false positive). One person per 1,000 in the

population is infected with swine flu. What is the probability

that an individual patient diagnosed with swine flu by this

method actually has the disease?

Answer: about 8.7%
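The 8.7% figure follows from partitioning the population into infected and healthy patients and then applying Bayes' theorem. A short sketch of that arithmetic is given here; the variable names are illustrative and the numbers are those quoted in the example.

```python
# Numbers quoted in the diagnostic example.
p_pos_given_flu = 0.95      # test finds the virus in an infected patient
p_pos_given_healthy = 0.01  # false positive in a healthy patient
p_flu = 0.001               # one person per 1,000 is infected

# Partition {infected, healthy}: total probability of a positive test.
p_pos = p_pos_given_flu * p_flu + p_pos_given_healthy * (1 - p_flu)

# Bayes' theorem: P(flu | positive) = P(positive | flu) P(flu) / P(positive).
p_flu_given_pos = p_pos_given_flu * p_flu / p_pos
print(f"{p_flu_given_pos:.3f}")  # 0.087
```

The result is small because healthy patients vastly outnumber infected ones, so most positive results are false positives.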


The Monty Hall problem

When it comes to probability, intuition is

often not very helpful...

The Monty Hall problem

In a gameshow, a contestant is shown three doors and asked

to select one. Hidden behind one door is a prize and the

contestant wins the prize if it is behind their chosen door at

the end of the game. The contestant picks one of the three

doors to start. The host then opens at random one of the

remaining two doors that does not contain the prize. Now the

contestant is asked if they want to change their mind and

switch to the other, unopened door. Should they? Does it

make any difference?

P(Win)=2/3 when switching, P(Win) = 1/3 otherwise
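For readers who distrust the 2/3 result, a simulation is a quick sanity check. This is a sketch only: the function names `play` and `win_rate`, the seed and the number of games are all choices made here for illustration, and the host is assumed to behave exactly as described in the problem statement.

```python
import random

def play(switch, rng):
    """Play one game; return True if the contestant ends on the prize door."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # Host opens, at random, a door that is neither the pick nor the prize.
    opened = rng.choice([d for d in doors if d != pick and d != prize])
    if switch:
        # Move to the one door that is neither the first pick nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == prize

def win_rate(switch, n_games=100_000, seed=1):
    """Fraction of games won with a fixed strategy (switch or stay)."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(n_games)) / n_games

p_switch = win_rate(switch=True)
p_stay = win_rate(switch=False)
print(p_switch, p_stay)
```

With this many games the two estimates should sit close to 2/3 and 1/3 respectively, up to sampling noise.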


The Monty Hall problem (2)

This misunderstanding about conditional probability can

lead to incorrect conclusions from experiments...

Observing rationalised decision making?

An experiment is performed where a monkey picks between

two coloured sweets. Suppose he picks black in preference to

white. The monkey is then offered white and red sweets and

the experimenters notice more often than not, the monkey

continues to reject the white sweets and chooses red. The

experimental team concludes the monkey has consciously

rationalised his decision to reject white sweets and reinforced

his behaviour. Are they right in coming to this conclusion?

Not necessarily. Based on the first observation, there

are three possible compatible rankings

(B>W>R,B>R>W,R>B>W). In 2 of 3, red is preferred to

white, so a priori that outcome is more likely anyhow.


Independent events

Independent events

Events A and B are said to be independent if

P(B ∩ A) = P(A) × P(B)

If P(A) > 0 and P(B) > 0, then independence implies both:

P(B|A) = P(B) and

P(A|B) = P(A).

These results can be seen using the conditional

probability result.

Example: Two coins are flipped where the probability the first lands on heads is 1/2 and similarly for the second. If these events are independent we can now show that all outcomes in S = {HH, HT, TH, TT} have probability 1/4.


Summary

Defining a probability model means choosing a good

sample space S, collection of events (which all correspond

to subsets of S) and a probability measure defined on all

the events.

Events are called disjoint if they have no common

outcomes.

Understanding and remembering probability calculations

or results is often made easier by visualising with Venn

diagrams.

The conditional probability P(A|B) is the probability event A occurs given event B also occurred.

Bayes’ theorem relates P(A|B) to P(B|A).

Calculations are often made easier by partitioning state spaces, i.e. finding disjoint A1, A2, . . . An such that S = A1 ∪ A2 ∪ . . . ∪ An.

Events are called independent if P(A ∩ B) = P(A) × P(B).


Binomial experiments

A binomial experiment

Binomial experiments are defined by a sequence of probabilistic trials where:

1. Each trial returns a true/false result

2. Different trials in the sequence are independent

3. The number of trials is fixed

4. The probability of a true/false result is constant

Usual question to ask - what is the probability the trial

result is true x times out of n, given the probability of

each trial being true is p?


Examples of binomial experiments

Examples and counter-examples

These examples are binomial experiments:

1. Flip a coin 10 times, does the coin land heads?

2. Ask the next ten people you meet if they like pizza

3. Screen 1000 patients for a virus

... and these are not:

Flip a coin until it lands heads (not fixed number of trials)

Ask the next ten people you meet their age (not true/false)

Is it raining on the first Monday of each month? (not a constant probability)


Number of experiments with k true outcomes

Number of selections

There are

$$N_{k,n} \equiv {}^{n}C_{k} = \frac{n!}{k!(n-k)!}$$

ways of having k out of n selections.

Coin flip outcomes

Example: how many outcomes of five coin flips result in

the coin landing heads three times?

Answer: $N_{3,5} = \frac{5!}{3!(5-3)!} = 10$

They are: {HHHTT, HHTHT, HHTTH, HTHHT, HTHTH,

HTTHH, THHHT, THHTH, THTHH, TTHHH}
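The count of ten can be confirmed by listing every sequence of five flips and keeping those with three heads. This is a small sketch using only the Python standard library; `math.comb` evaluates n!/(k!(n − k)!).

```python
from itertools import product
from math import comb

n, k = 5, 3

# Enumerate all 2**n flip sequences and keep those with exactly k heads.
outcomes = ["".join(s) for s in product("HT", repeat=n) if s.count("H") == k]

print(len(outcomes), comb(n, k))  # 10 10
print(outcomes)
```

Enumeration grows as 2^n, so it is only practical for small n; the closed formula is what is used in practice.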


Probability of k out of n true trials

If the probability of each trial being true is p (and so the

probability of it being false is q = 1 − p) ...

and the selection trials are independent then...

Probability of k out of n true outcomes

$$P_{k,n} = {}^{n}C_{k}\, p^k q^{n-k} \equiv {}^{n}C_{k}\, p^k (1-p)^{n-k}$$

We can compute this probability since we can count the

number of cases where there are k true trials and each

case has the same probability
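As a rough illustration, the binomial probability can be written as a three-line function. The name `binomial_pmf` is chosen here for illustration and is not from the slides.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k true results in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Three heads in five flips of a fair coin: 10 cases, each with probability 1/32.
print(binomial_pmf(3, 5, 0.5))  # 0.3125

# The probabilities over k = 0..n should add up to one.
total = sum(binomial_pmf(k, 10, 0.3) for k in range(11))
print(total)
```

The second check is a useful habit: any probability formula summed over all possible k must return 1 up to rounding.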


Infinite state spaces

The set of outcomes of a probabilistic experiment may be

an uncountably infinite set

Here, the distinction between outcomes and events is

more important: events can be assigned probabilities,

outcomes can’t

Outcomes described by a continuous variable

1. If I throw a coin and measure how far away it lands, the state space is described by the set of real numbers ≥ 0, Ω = [0, ∞)

2. I could also simultaneously see if it lands heads or tails. This set of outcomes is still “uncountably infinite”. The state space is now Ω = {H, T} × [0, ∞)

It is impossible to assign a useful (non-zero) probability to the coin landing exactly 1m away.

Events can be defined - for example, an event might be “the coin lands heads more than 1m away.”

Random variables or random numbers

To be mathematically correct, random variables (or

random numbers) are neither variables nor numbers!

They are functions taking an outcome and returning a

number.

Depending on the nature of the state-space, they can be

discrete or continuous.

Random numbers

A random number X is a function that converts outcomes on a

sample space Ω = {O1 , O2 , O3 . . . } to a number in

{x1 , x2 , x3 , . . . } so X(Oi ) = xi

Example - heads you win ...

If I flip a coin and pay you €1 if it lands heads and you pay me €2 if it lands tails, then the money you get after playing this game is a random number: Ω = {H, T}, X(H) = 1, X(T) = −2


Random variables or random numbers

A discrete random variable only takes a countable set of

non-zero values x1 , x2 , . . ..

A discrete random variable defines a decomposition of the

sample space Ω in mutually exclusive events

Ω = E1 ∪ E2 ∪ . . . ,

Ei = {O ∈ Ω : X(O) = xi } ≡ {X = xi }

Notation for probabilities of these events:

P({O ∈ Ω : X(O) = xi }) ≡ P(X = xi ) ≡ P(xi ).

Completeness then entails

$$1 = P(\Omega) = \sum_i P(E_i) \equiv \sum_i P(X = x_i) \equiv \sum_i P(x_i)$$

Expected value of a random variable

Imagine we sample a random variable X lots of times and

we know the probability different values will occur. We

can guess what the average of all these samples will be:

P(X = x1 )x1 + P(X = x2 )x2 + P(X = x3 )x3 + . . .

Expected value

The expected value of a discrete random variable which can

take any of N possible values is defined as

$$E[X] = \sum_{i=1}^{N} X(O_i)\, P(X = X(O_i)) = \sum_{i=1}^{N} x_i\, P(X = x_i)$$

It gives the average of n samples of the random number

as n gets large


Expected value (2)

Back to our example:

Heads you win ...

Before, we had X(H) = 1 and X(T) = −2. If both are equally

likely (fair coin) then the expected value,

$$E[X] = P(X = 1) \times X(H) + P(X = -2) \times X(T) = \frac{1}{2} \times 1 + \frac{1}{2} \times (-2) = -\frac{1}{2}$$

So playing n times you should expect to lose €n/2. Not a good idea to play this game!


Expected value (3)

The expected value of a function f : R → R applied to our

random number can be defined easily too.

Expected value of a function

$$E[f(X)] = \sum_{i=1}^{N} f(x_i)\, P(X = x_i)$$

Taking expected values is linear, i.e. for two different random numbers X and Y and constant numbers α, β we see E[αX + βY] = αE[X] + βE[Y]


Variance and standard deviation

Variance

The variance of X is defined as

$$\sigma_X^2 = E[(X - \mu_X)^2] \equiv E[X^2] - E[X]^2$$

Standard deviation

The standard deviation of X, σX is the square root of the

variance. If X has units, σX has the same units.

The variance and standard deviation are non-negative:

σX ≥ 0

They measure the amount a random variable fluctuates

from sample-to-sample.


Variance (2)

Returning again to our game:

Heads you win ...

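The variance of X can be computed. Recall that μX = −1/2. The variance is then

$$\sigma_X^2 = \frac{1}{2} \times \left(1 + \frac{1}{2}\right)^2 + \frac{1}{2} \times \left(-2 + \frac{1}{2}\right)^2 = \frac{1}{2} \times \frac{9}{4} + \frac{1}{2} \times \frac{9}{4} = \frac{9}{4}$$

and the standard deviation of X is 3/2.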
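The expected value and variance of the coin game can be reproduced with exact fractions. This is a sketch, assuming a fair coin; the dictionary `pmf` (value of X mapped to its probability) is a representation chosen here for illustration.

```python
from fractions import Fraction

# Values of X and their probabilities for the fair-coin game:
# win 1 on heads, lose 2 on tails.
pmf = {1: Fraction(1, 2), -2: Fraction(1, 2)}

# E[X] = sum of x * P(X = x).
mean = sum(x * p for x, p in pmf.items())

# Variance from the definition E[(X - mu)^2] ...
variance = sum((x - mean) ** 2 * p for x, p in pmf.items())
# ... and from the equivalent form E[X^2] - E[X]^2.
variance_alt = sum(x * x * p for x, p in pmf.items()) - mean**2

print(mean, variance, variance_alt)  # -1/2 9/4 9/4
```

Both forms of the variance agree, and the square root of 9/4 gives the standard deviation 3/2.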


The expected number of successful trials

Consider the binomial experiment where n trials are

performed with probability of success p

Recall $P(k) = {}^{n}C_{k}\, p^k q^{n-k} \equiv \frac{n!}{k!(n-k)!}\, p^k q^{n-k}$ with q = 1 − p

So the expected value of k is

$$\mu_X = \sum_{k=0}^{n} k\,P(k) = \sum_{k=0}^{n} k\,\frac{n!}{k!(n-k)!}\, p^k q^{n-k} = np$$

A bit more work gives

$$\sigma_X^2 = npq$$
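The step from the sum to np is not spelled out on the slide. One way to fill it in, sketched here using only the binomial theorem and p + q = 1 (the index j = k − 1 is introduced for this derivation), is:

```latex
\begin{align*}
\mu_X &= \sum_{k=0}^{n} k\,\frac{n!}{k!(n-k)!}\, p^k q^{n-k}
       = \sum_{k=1}^{n} \frac{n!}{(k-1)!(n-k)!}\, p^k q^{n-k} \\
      &= np \sum_{k=1}^{n} \frac{(n-1)!}{(k-1)!(n-k)!}\, p^{k-1} q^{n-k}
       = np \sum_{j=0}^{n-1} \frac{(n-1)!}{j!(n-1-j)!}\, p^{j} q^{(n-1)-j} \\
      &= np\,(p+q)^{n-1} = np .
\end{align*}
```

The k = 0 term vanishes, which is why the sum can start at k = 1 before the factor k is cancelled against k!.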


Poisson distribution

A limiting case for the binomial experiment can be

considered by taking n → ∞, while keeping μ = n × p fixed.

This models the number of times a random occurrence happens in an interval (radioactive decay, for example).

The number of times k the event occurs is then described by

The Poisson distribution

For integer k ≥ 0,

$$P(k) = \frac{\mu^k e^{-\mu}}{k!}$$

Check that $\sum_{k=0}^{\infty} P(k) = 1$, i.e. the probability is properly normalised.

Also find the expected value of X is just μ.


Poisson distribution (2)

Example: chirping crickets

A field full of crickets are chirping at random, with on average 0.6 chirps per second. Assuming the chirps obey the Poisson distribution, what is the probability we hear at most 2 chirps in one second?

Answer: P(0) + P(1) + P(2).

$$P(0) = \frac{0.6^0 e^{-0.6}}{0!} = e^{-0.6}$$

(NB remember 0! = 1)

$$P(1) = \frac{0.6^1 e^{-0.6}}{1!} = 0.6\,e^{-0.6} \quad\text{and}\quad P(2) = \frac{0.6^2 e^{-0.6}}{2!} = 0.18\,e^{-0.6}$$

P(0) + P(1) + P(2) = 1.78 e^{−0.6} = 0.9768 . . .
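The cricket calculation is easy to reproduce numerically. This is a minimal sketch; `poisson_pmf` is a name chosen here, and the only input is the mean of 0.6 chirps per second from the example.

```python
from math import exp, factorial

def poisson_pmf(k, mu):
    """Probability of exactly k occurrences when mu are expected."""
    return mu**k * exp(-mu) / factorial(k)

mu = 0.6  # average chirps per second, from the example

# At most 2 chirps: add the probabilities for k = 0, 1, 2.
p_at_most_2 = sum(poisson_pmf(k, mu) for k in range(3))
print(f"{p_at_most_2:.4f}")  # 0.9769 (0.97688... rounded to four places)
```

The complementary probability, about 2.3%, is the chance of hearing three or more chirps in one second.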


Continuous random numbers (1)

For continuous random number X (one that can take any

value in some range [a, b]), the sample space is

(uncountably) infinite.

Consider the event E which occurs when the random

number X < x.

NB: Big X ≡ random number, little x ≡ reference point for E

Cumulative distribution function

The cumulative distribution function (cdf), FX (x) of a

continuous random number X is the probability of the event

E : X < x;

FX (x) = P(X < x)

Since it is a probability, 0 ≤ FX (x) ≤ 1

If X is in the range [a, b] then FX (a) = 0 and FX (b) = 1.

FX is monotonically increasing (non-decreasing), which means that if q > p then FX (q) ≥ FX (p).


Continuous random numbers (2)

Since E occurs when X < x, then E^c occurs when X ≥ x and

so P(X < x) + P(X ≥ x) = 1 and

P(X ≥ x) = 1 − FX (x)

Take two events, A which occurs when X < q and B when

X ≥ p and assume q > p.

[Figure: number line marking the points p and q and the ranges covered by events A and B.]

The event A ∪ B always occurs (so P(A ∪ B) = 1) and A ∩ B

occurs when p ≤ X < q

Since P(A ∪ B) = P(A) + P(B) − P(A ∩ B) we have

P(p ≤ X < q) = FX (q) − FX (p)


Continuous random numbers (3)

Example: exponential distribution

$$F_X(x) = \begin{cases} 1 - e^{-2x} & \text{when } x \ge 0 \\ 0 & \text{when } x < 0 \end{cases}$$

Describes random number X in range [0, ∞)

What is probability X < 1?

$$P(X < 1) = F_X(1) = 1 - e^{-2} = 0.864664\ldots$$

What is probability X ∈ [1/2, 1]?

$$P(\tfrac{1}{2} < X < 1) = F_X(1) - F_X(\tfrac{1}{2}) = 1 - e^{-2} - 1 + e^{-1} = 0.2325442\ldots$$

[Figure: plot of FX(x) against x for x from −0.5 to 2.]
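Both probabilities come straight from differences of the cdf, which makes them simple to evaluate in code. In this sketch the function name `exponential_cdf` and its default rate of 2 (matching the exponent −2x in the example) are choices made here for illustration.

```python
from math import exp

def exponential_cdf(x, lam=2.0):
    """F_X(x) = 1 - exp(-lam * x) for x >= 0, and 0 for x < 0."""
    return 1.0 - exp(-lam * x) if x >= 0 else 0.0

# P(X < 1) is the cdf evaluated at 1.
p_below_1 = exponential_cdf(1.0)

# P(1/2 <= X < 1) is a difference of two cdf values.
p_half_to_1 = exponential_cdf(1.0) - exponential_cdf(0.5)

print(f"{p_below_1:.6f} {p_half_to_1:.6f}")  # 0.864665 0.232544
```

For a continuous random number, including or excluding the end points of the interval does not change the probability.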

Probability density function

If p and q are brought closer together so q = p + dp then

$$P(p \le X < p + dp) = F_X(p + dp) - F_X(p) \approx F_X(p) + dp\,\frac{dF_X}{dp} - F_X(p) \approx dp\,\frac{dF_X}{dp}$$

Probability density function

The probability density function gives the probability a random number falls in an infinitesimally small interval, scaled by the size of the interval.

$$f_X(x) = \lim_{dx \to 0} \frac{P(x \le X < x + dx)}{dx}$$

For a random number X in the range [a, b],

$$F_X(x) = \int_a^x f_X(z)\,dz$$


Probability density function (2)

fX (the pdf) is not a probability. FX (the cdf) is.

While fX is still non-negative, it can be bigger than one.

For X in the range [a, b], FX (b) = 1 so

$$f_X \ge 0 \quad\text{and}\quad \int_a^b f_X(z)\,dz = 1$$


The uniform distribution

A random number U that is in the range [a, b] is uniformly

distributed if all values in that range are equally likely.

This implies the pdf is a constant, fU (u) = α. Normalising this means ensuring $\int_a^b f_U(u)\,du = 1$.

$$f_U(u) = \frac{1}{b-a} \quad\text{and}\quad F_U(u) = \frac{u-a}{b-a}$$

[Figure: two panels plotted against x, "pdf of uniform U[1/4, 3/2]" and "cdf of uniform U[1/4, 3/2]".]

The exponential distribution

For a positive parameter, λ > 0, a random number W that is in the range [0, ∞) is called exponentially distributed if the density function falls exponentially.

The pdf is proportional to e^{−λw}. Normalising again means ensuring $\int_0^\infty f_W(w)\,dw = 1$. So

$$f_W(w) = \begin{cases} \lambda e^{-\lambda w}, & w \ge 0 \\ 0, & w < 0 \end{cases} \quad\text{and}\quad F_W(w) = \begin{cases} 1 - e^{-\lambda w}, & w \ge 0 \\ 0, & w < 0 \end{cases}$$

[Figure: two panels plotted against x, "pdf of exponential(2)" and "cdf of exponential(2)".]

The normal distribution

The normal distribution N(μ, σ^2) is parameterised by two numbers, μ and σ.

pdf is the “bell curve”

The cdf doesn’t have a nice expression (but it is sufficiently important that the closely related error function gets its own name, erf(x)).

$$f_X(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

[Figure: two panels plotted against x, "pdf of N(0.75,0.4)" and "cdf of N(0.75,0.4)".]
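Although the normal cdf has no elementary closed form, it can be evaluated through the error function using the standard identity F(x) = ½[1 + erf((x − μ)/(σ√2))]. The sketch below uses Python's `math.erf`; the function names are chosen here, and treating 0.4 as the standard deviation σ (rather than the variance) is an assumption made only for this illustration.

```python
from math import erf, exp, pi, sqrt

def normal_pdf(x, mu, sigma):
    """Bell-curve density of N(mu, sigma^2)."""
    return exp(-((x - mu) ** 2) / (2 * sigma**2)) / (sigma * sqrt(2 * pi))

def normal_cdf(x, mu, sigma):
    """P(X < x) for X ~ N(mu, sigma^2), written with the error function."""
    return 0.5 * (1.0 + erf((x - mu) / (sigma * sqrt(2))))

mu, sigma = 0.75, 0.4  # illustrative values; sigma = 0.4 is an assumption

print(normal_cdf(mu, mu, sigma))          # 0.5: half the probability lies below the mean
print(normal_cdf(mu + sigma, mu, sigma))  # about 0.8413, one sigma above the mean
print(normal_pdf(mu, mu, sigma))          # peak height 1 / (sigma * sqrt(2 pi))
```

Note that the peak height here is just under 1, but for smaller σ the pdf exceeds 1, which is allowed because a density is not a probability.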

Continuous random numbers (4)

An expected value of a continuous random number can be

defined, in analogy to that of the discrete random number

Expected value

For a random number X taking a value in [a, b], the expected value is defined as

$$E[X] = \int_a^b z\, f_X(z)\,dz$$

As with discrete random numbers, the easiest way to

think of this is the running average of n samples of X as n

gets very large.

Can show E[αX + βY] = αE[X] + βE[Y]


Continuous random numbers (5)

An expected value of a continuous random number can be

defined, in analogy to that of the discrete random number

Variance

The variance of a continuous random number X has the

same definition:

$$\sigma_X^2 = E[X^2] - E[X]^2$$

Again, like discrete random numbers, the standard

deviation is the square root of the variance. Both the

variance and standard deviation are non-negative.


Example: E[U] and σ_U^2 of uniform U[a, b]

For U a uniform variate on [a, b], what is

1. E[U]?

2. σ_U^2?

Using definitions,

$$E[U] = \frac{1}{b-a}\int_a^b z\,dz = \frac{b+a}{2}$$

The mean is (as might be guessed) the mid-point of [a, b]

Similarly, substituting to find E[U^2] gives

$$\sigma_U^2 = \frac{(b-a)^2}{12}$$

which depends only on b − a, the width of the range


Example (2): E[W] and σ_W^2 of exponential(λ)

For W an exponentially distributed number with parameter λ, what is

1. E[W]?

2. σ_W^2?

Again using definitions,

$$E[W] = \int_0^\infty w \cdot \lambda e^{-\lambda w}\,dw = \frac{1}{\lambda}$$

From the definition of E[W^2], we get

$$\sigma_W^2 = \frac{1}{\lambda^2}$$

so the expected value and standard deviation of exponentially distributed random numbers are both given by λ^{−1}


Visualising a probability density function

Given some sample data, useful to plot a pdf. This can be

done by binning data and plotting a histogram.

Divide range [a, b] for possible values of X into N bins.

Count m_i, the number of times X lies in $r_i = \left[a + \frac{i(b-a)}{N},\; a + \frac{(i+1)(b-a)}{N}\right)$. Plot m_i vs x

Care must be taken choosing bin-size; too big, structure

will be lost, too small, fluctuations will add features.
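The binning recipe can be sketched in a few lines without any plotting library. Everything named here (`histogram_counts`, the seed, the rate 1.0 of the exponential samples) is an assumption made for illustration; only the procedure of splitting [a, b] into N equal bins and counting m_i comes from the text.

```python
import random

def histogram_counts(samples, a, b, n_bins):
    """Count m_i, the number of samples falling in bin i of [a, b)."""
    width = (b - a) / n_bins
    counts = [0] * n_bins
    for x in samples:
        if a <= x < b:
            # min() guards against floating-point rounding at the top edge.
            i = min(int((x - a) / width), n_bins - 1)
            counts[i] += 1
    return counts

rng = random.Random(0)  # fixed seed so the sketch is reproducible
samples = [rng.expovariate(1.0) for _ in range(10_000)]  # rate 1.0 is an assumption

n_bins = 10
counts = histogram_counts(samples, 0.0, 10.0, n_bins)
width = 10.0 / n_bins

# Dividing by (number of samples * bin width) turns counts into a pdf estimate.
density = [m / (len(samples) * width) for m in counts]
print(counts[:3], round(density[0], 3))
```

Re-running with `n_bins` set to 100 or 1000 shows the trade-off described above: more bins resolve more structure, but each bin holds fewer samples and so fluctuates more.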

Visualising the exponential distribution - 10,000 samples

[Figure: three histograms of pX(x) against x, using 10 bins, 100 bins and 1000 bins in range 0 to 10.]

Joint probability distributions

Sometimes in an experiment, we measure two or more

(random) numbers.

Now the sample space is more complicated, but it is still

possible to define events usefully.

The cumulative distribution function is defined as a

probability:

FX,Y (x, y) = P(X ≤ x and Y ≤ y)

Probability that (X, Y) lies inside lower-left quadrant defined by X ≤ x and Y ≤ y

In this example, it would be approximated by the fraction of red dots to the total number of red and green dots.

[Figure: scatter plot of red and green dots in the (x, y) plane.]


Joint probability distributions (2)

Can write expressions for P(x0 ≤ X < x1 and y0 ≤ Y < y1 ) in

terms of FX,Y : Get

P(x0 ≤ X < x1 and y0 ≤ Y < y1 ) =

FX,Y (x1 , y1 ) − FX,Y (x0 , y1 ) − FX,Y (x1 , y0 ) + FX,Y (x0 , y0 )

A joint probability density can be defined too: it is the

ratio of the probability a point (X, Y) lands inside an

infinitesimally small area dxdy located at (x, y) to the area

dxdy:

$$f_{X,Y}(x, y) = \lim_{dx \to 0,\, dy \to 0} \frac{P(X \in [x, x + dx] \text{ and } Y \in [y, y + dy])}{dx\,dy}$$

Joint probability distributions (3)

Independent random numbers

Two random numbers, X and Y can be said to be

independent if for all x and y,

FX,Y (x, y) = FX (x) × FY (y)

this is equivalent to

fX,Y (x, y) = fX (x) × fY (y)

As with independent events, if two random numbers are

independent, knowing something about one doesn’t allow

us to infer anything about the other


Summary (1)

Mathematically, a random number is a function taking an

outcome and returning a number

They can be discrete or continuous

Their expected value is the sum of all possible values

assigned to outcomes, weighted by the probability of

each outcome.

The variance (and standard deviation) of a random

number quantifies how much they fluctuate.

In a binomial experiment, the random number X that counts the number of successes out of n trials has probability $P(X = x) = {}^{n}C_{x}\, p^x (1-p)^{n-x}$, where p is the probability a single trial is successful.

Random occurrences can be modelled by the Poisson distribution. The probability there will be x occurrences if μ are expected is $P(X = x) = \frac{\mu^x e^{-\mu}}{x!}$

Summary (2)

Continuous random numbers can be described by a

cumulative distribution function (cdf). It gives the

probability X will be smaller than some reference value x.

The probability density function (pdf) is the ratio of

the probability a random number will fall in an

infinitesimally small range to the size of that range.

Given the pdf, the expected value and variance of a

continuous random number can be computed by

integration.

If a random number is sampled many times, an

approximation to its pdf can be visualised by binning and

plotting a histogram.

If more than one random number is measured,

probabilities are described by joint distributions.

Two random numbers are independent if their joint

distribution is separable.

Sampling

Sample mean

Sample mean

For a sequence of n random numbers, $\{X_1, X_2, X_3, \dots X_n\}$, the sample mean is

$$\bar{X}^{(n)} = \frac{1}{n} \sum_{i=1}^{n} X_i$$

$\bar{X}^{(n)}$ is also a random number.

If all entries have the same mean, $\mu_X$, then

$$E[\bar{X}^{(n)}] = \frac{1}{n} \sum_{i=1}^{n} E[X_i] = \mu_X$$

If all entries are independent and identically distributed then

$$\sigma^2_{\bar{X}^{(n)}} = \frac{1}{n} \sigma_X^2$$

The law of large numbers

Jakob Bernoulli: “Even the stupidest man, by some instinct of nature per se and by no previous instruction (this is truly amazing), knows for sure that the more observations that are taken, the less the danger will be of straying from the mark” (Ars Conjectandi, 1713).

But the strong law of large numbers was only proved in

the 20th century (Kolmogorov, Chebyshev, Markov, Borel,

Cantelli, . . . ).

The strong law of large numbers

If $\bar{X}^{(n)}$ is the sample mean of n independent, identically distributed random numbers with well-defined expected value $\mu_X$ and variance, then $\bar{X}^{(n)}$ converges almost surely to $\mu_X$:

$$P\left(\lim_{n \to \infty} \bar{X}^{(n)} = \mu_X\right) = 1$$

Example: exponential random numbers

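Each entry of $\bar{X}^{(m)}$ is the mean of the block of m values of X ending on that row.

| X | $\bar{X}^{(2)}$ | $\bar{X}^{(4)}$ | $\bar{X}^{(8)}$ | $\bar{X}^{(16)}$ |
|---|---|---|---|---|
| 0.299921 | | | | |
| 1.539283 | 0.919602 | | | |
| 1.084130 | | | | |
| 1.129681 | 1.106906 | 1.013254 | | |
| 0.001301 | | | | |
| 1.238275 | 0.619788 | | | |
| 4.597920 | | | | |
| 0.679552 | 2.638736 | 1.629262 | 1.321258 | |
| 0.528081 | | | | |
| 1.275064 | 0.901572 | | | |
| 0.873661 | | | | |
| 1.018920 | 0.946290 | 0.923931 | | |
| 0.980259 | | | | |
| 1.115647 | 1.047953 | | | |
| 1.664513 | | | | |
| 0.340858 | 1.002685 | 1.025319 | 0.974625 | 1.147942 |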
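The block means in this example can be recomputed directly from the sixteen listed values of X. The sketch below is one way to do it; `block_means` is a name chosen here for illustration.

```python
# The sixteen exponential random numbers listed in the example.
data = [0.299921, 1.539283, 1.084130, 1.129681,
        0.001301, 1.238275, 4.597920, 0.679552,
        0.528081, 1.275064, 0.873661, 1.018920,
        0.980259, 1.115647, 1.664513, 0.340858]


def block_means(values, size):
    """Means of consecutive, non-overlapping blocks of `size` values."""
    return [sum(values[i:i + size]) / size
            for i in range(0, len(values), size)]


for size in (2, 4, 8, 16):
    print(size, [round(m, 6) for m in block_means(data, size)])
```

The spread of the block means shrinks as the block size grows, which is the behaviour the law of large numbers describes.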

The central limit theorem

As the sample size n grows, the sample mean looks more and more like a normally distributed random number with mean $\mu_X$ and standard deviation $\sigma_X / \sqrt{n}$.

The central limit theorem (de Moivre, Laplace, Lyapunov, ...)

The sample mean of n independent, identically distributed random numbers, each drawn from a distribution with expected value $\mu_X$ and standard deviation $\sigma_X$, obeys

$$\lim_{n \to \infty} P\left(\frac{-a\sigma_X}{\sqrt{n}} < \bar{X}^{(n)} - \mu_X < \frac{+a\sigma_X}{\sqrt{n}}\right) = \frac{1}{\sqrt{2\pi}} \int_{-a}^{a} e^{-x^2/2}\, dx$$

The central limit theorem (2)

The law of large numbers tells us we can find the

expected value of a random number by repeated

sampling

The central limit theorem tells us how to estimate the uncertainty in our determination when we use a finite (but large) sample.

The uncertainty falls with increasing sample size like $1/\sqrt{n}$.

The central limit theorem

An example: distributions of sample means of a random number X for sample sizes n = 1, 2, 5, 50.

[Figure: four panels labelled n = 1, n = 2, n = 5 and n = 50, each with horizontal axis from 0 to 1 and vertical axis from 0 to 14.]
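The statement of the central limit theorem can be checked numerically. The sketch below assumes X is uniform on [0, 1] (a guess prompted by the 0 to 1 axis range, not something the slides state) and compares the fraction of sample means lying within $a\sigma_X/\sqrt{n}$ of $\mu_X$ with the normal integral. The function names, seed and repeat count are illustrative choices.

```python
import math
import random


def fraction_within(a, n, repeats=20000, seed=0):
    """Fraction of sample means of n uniforms within a*sigma/sqrt(n) of mu."""
    rng = random.Random(seed)
    mu, sigma = 0.5, math.sqrt(1.0 / 12.0)  # mean, std dev of uniform [0, 1]
    half_width = a * sigma / math.sqrt(n)
    hits = 0
    for _ in range(repeats):
        mean = sum(rng.random() for _ in range(n)) / n
        if abs(mean - mu) < half_width:
            hits += 1
    return hits / repeats


def normal_probability(a):
    """(1/sqrt(2 pi)) times the integral of exp(-x^2/2) from -a to a."""
    return math.erf(a / math.sqrt(2.0))


for n in (1, 2, 5, 50):
    print(n, fraction_within(1.0, n), normal_probability(1.0))
```

For n = 1 the two numbers differ noticeably, since a single uniform random number is not normally distributed; the agreement improves as n grows.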

Confidence intervals

The central limit theorem tells us that for sufficiently large sample sizes, all sample means are approximately normally distributed.

We can use this to estimate probabilities that the true expected value of a random number lies in a range.

One sigma

What is the probability a sample mean $\bar{X}$ is within one standard deviation $\sigma_{\bar{X}} = \sigma_X / \sqrt{n}$ of the expected value $\mu_X$? If n is large, we have

$$P(-\sigma_{\bar{X}} < \bar{X} - \mu_X < \sigma_{\bar{X}}) = \frac{1}{\sqrt{2\pi}} \int_{-1}^{1} e^{-x^2/2}\, dx = 68.3\%$$

These ranges define confidence intervals.

Most commonly seen are the 95% and 99% intervals.

Confidence intervals (2)

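Most commonly seen are the 95% (2σ) and 99% (3σ) intervals.

| Range | Probability |
|---|---|
| $P(-\sigma_{\bar{X}} < \bar{X} - \mu_X < \sigma_{\bar{X}})$ | 68.3% |
| $P(-2\sigma_{\bar{X}} < \bar{X} - \mu_X < 2\sigma_{\bar{X}})$ | 95.4% |
| $P(-3\sigma_{\bar{X}} < \bar{X} - \mu_X < 3\sigma_{\bar{X}})$ | 99.7% |
| $P(-4\sigma_{\bar{X}} < \bar{X} - \mu_X < 4\sigma_{\bar{X}})$ | 99.994% |
| $P(-5\sigma_{\bar{X}} < \bar{X} - \mu_X < 5\sigma_{\bar{X}})$ | 99.99994% |
| $P(-10\sigma_{\bar{X}} < \bar{X} - \mu_X < 10\sigma_{\bar{X}})$ | 99.9999999999999999999985% |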

The standard deviation is usually measured from the

sample variance.

Beware - the “variance of the variance” is usually large.

Five-sigma events have been known ...
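These normal-distribution probabilities can be reproduced with the error function, since the integral of the standard normal density from −k to k equals erf(k/√2). The sketch below uses only Python's standard `math` module; the function names are illustrative.

```python
import math


def coverage(k):
    """Probability a normal random number lies within k standard deviations."""
    return math.erf(k / math.sqrt(2.0))


def tail(k):
    """Probability of lying outside k standard deviations (either side)."""
    # erfc keeps precision when the tail is far smaller than machine epsilon.
    return math.erfc(k / math.sqrt(2.0))


for k in (1, 2, 3, 4, 5):
    print(f"{k} sigma: {100 * coverage(k):.5f}%")
print(f"10 sigma: tail probability {tail(10):.3e}")
```

For the 10σ row the coverage is indistinguishable from 1 in double precision, which is why the sketch reports the complementary tail probability instead.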

Sample variance

With data alone, we need a way to estimate the variance

of a distribution. This can be estimated by measuring the

sample variance:

Sample variance

For n > 1 independent, identically distributed samples of a random number X, with sample mean $\bar{X}$, the sample variance is

$$\bar{\sigma}_X^2 = \frac{1}{n - 1} \sum_{i=1}^{n} (X_i - \bar{X})^2$$

Now we quantify fluctuations without reference to (or

without knowing) the expected value, μX .

Note the n − 1 factor. One “degree of freedom” is

absorbed into “guessing” the expected value of X
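A minimal sketch of the sample variance with the n − 1 factor follows. The data values are made up for illustration, and the result is compared with `statistics.variance` from the Python standard library, which uses the same n − 1 convention.

```python
import statistics


def sample_variance(values):
    """Sample variance with the n - 1 normalisation."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / (n - 1)


data = [2.0, 4.0, 4.0, 5.0, 7.0]  # hypothetical measurements
print(sample_variance(data), statistics.variance(data))
```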

Student’s t-distribution

In 1908, William Gosset, while working for Guinness at St. James's Gate, published under the pseudonym “Student”.

The t-distribution gives the scaling needed to define a confidence interval when the variance and mean of the underlying distribution are unknown and have been estimated.

Student’s t-distribution

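$$f_T(t) = \frac{\Gamma\left(\frac{n}{2}\right)}{\sqrt{\pi (n - 1)}\, \Gamma\left(\frac{n-1}{2}\right)} \left(1 + \frac{t^2}{n - 1}\right)^{-n/2}$$

Used to find the scaling factor c(γ, n) to compute the γ confidence interval for the sample mean

$$P(-c\bar{\sigma} < \bar{X} - \mu_X < c\bar{\sigma}) = \gamma$$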

For n > 10, the t-distribution looks very similar to the

normal distribution

Student’s t-distribution (2)

[Figure: $f_X(x)$ plotted for x from −3 to 3. Blue: normal distribution; red: Student t with n = 2.]

Student’s t-distribution (3)

For example, with just 2 samples, the sample mean and

variance can be computed but now the confidence levels

are:

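| Range | Probability |
|---|---|
| $P(-\bar{\sigma}_X < \bar{X} - \mu_X < \bar{\sigma}_X)$ | 50% |
| $P(-2\bar{\sigma}_X < \bar{X} - \mu_X < 2\bar{\sigma}_X)$ | 70.5% |
| $P(-3\bar{\sigma}_X < \bar{X} - \mu_X < 3\bar{\sigma}_X)$ | 79.5% |
| $P(-4\bar{\sigma}_X < \bar{X} - \mu_X < 4\bar{\sigma}_X)$ | 84.4% |
| $P(-5\bar{\sigma}_X < \bar{X} - \mu_X < 5\bar{\sigma}_X)$ | 87.4% |
| $P(-10\bar{\sigma}_X < \bar{X} - \mu_X < 10\bar{\sigma}_X)$ | 93.7% |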

“Confidences” are much lower because variance is very

poorly determined with only two samples.
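For n = 2 the t-distribution density reduces to $f_T(t) = 1/(\pi(1 + t^2))$, whose integral from −c to c is $(2/\pi)\arctan c$. The sketch below assumes the confidence levels quoted for two samples were obtained this way; the function name is illustrative.

```python
import math


def two_sample_coverage(c):
    """Integral of 1/(pi (1 + t^2)) from -c to c, the n = 2 t-distribution."""
    return 2.0 / math.pi * math.atan(c)


for c in (1, 2, 3, 4, 5, 10):
    print(f"{c} sigma: {100 * two_sample_coverage(c):.1f}%")
```

Under that assumption the printed values agree with the quoted confidence levels to the precision given.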

Modelling statistical (Monte Carlo) data

Often, we carry out experiments to test a hypothesis.

Since the result is a stochastic variable, the hypothesis

can never be proved or disproved.

Need a way to assign a probability that the hypothesis is

false. One place to begin: the χ2 statistic.

Suppose we have n measurements, Ȳi , i = 1..n each with

standard deviation σi . Also, we have a model which

predicts each measurement, giving yi .

The χ2 statistic

$$\chi^2 = \sum_{i=1}^{n} \frac{(\bar{Y}_i - y_i)^2}{\sigma_i^2}$$
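The χ² statistic is a short computation once the measurements, model predictions and standard deviations are available. In the sketch below the three data points are invented for illustration, and `chi_squared` is a name chosen here.

```python
def chi_squared(measured, predicted, sigmas):
    """Sum over measurements of (measured - predicted)^2 / sigma^2."""
    return sum(((m - y) / s) ** 2
               for m, y, s in zip(measured, predicted, sigmas))


# Hypothetical measurements, each one standard deviation from the model.
measured = [1.1, 1.9, 3.2]
predicted = [1.0, 2.0, 3.0]
sigmas = [0.1, 0.1, 0.2]
print(chi_squared(measured, predicted, sigmas))
```

Each term counts how many standard deviations a measurement sits from the model, squared.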

Goodness of fit

χ² ≥ 0, and χ² = 0 implies $\bar{Y}_i = y_i$ for all i = 1..n (i.e. the model and the data agree perfectly).

Bigger values of χ² imply the model is less likely to be true.

Note χ² is itself a stochastic variable.

Rule-of-thumb

χ² ≈ n for a good model

Models with unknown parameters - fitting

The model may depend on parameters $\alpha_p$, p = 1 … m.

Now, χ² is a function of these parameters: $\chi^2(\alpha)$.

If the parameters are not known a priori, the “best fit” model is described by the set of parameters $\alpha^*$ that minimise $\chi^2(\alpha)$, so

$$\left.\frac{\partial \chi^2(\alpha)}{\partial \alpha_p}\right|_{\alpha^*} = 0$$

For linear models, $y_i = \sum_{p=1}^{m} \alpha_p q_i^{p}$, finding $\alpha^*$ is equivalent to solving a linear system.

For more general models, finding minima of χ2 can be a

challenge. . .

Example - one parameter fit

Fit a straight line through the origin

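Consider the following measured data $Y_i \pm \sigma_i$, i = 1..5 for inputs $x_i$

| i | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $x_i$ | 0.1 | 0.5 | 0.7 | 0.9 | 1.0 |
| $Y_i$ | 0.25 | 0.90 | 1.20 | 1.70 | 2.20 |
| $\sigma_i$ | 0.05 | 0.10 | 0.05 | 0.10 | 0.20 |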

Fit this to a straight line through the origin, so our model is

y(x) = αx

with α an unknown parameter we want to determine

Result: α = 1.8097 and χ2 = 8.0.
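The quoted result can be reproduced from the data. For the model y(x) = αx, setting the derivative of χ²(α) with respect to α to zero gives α* = b/A with $A = \sum_i x_i^2/\sigma_i^2$ and $b = \sum_i x_i Y_i/\sigma_i^2$. The sketch below evaluates these sums; the variable names are illustrative.

```python
x = [0.1, 0.5, 0.7, 0.9, 1.0]
Y = [0.25, 0.90, 1.20, 1.70, 2.20]
sigma = [0.05, 0.10, 0.05, 0.10, 0.20]

# Weighted sums that appear when d(chi^2)/d(alpha) is set to zero.
A = sum(xi ** 2 / si ** 2 for xi, si in zip(x, sigma))
b = sum(xi * Yi / si ** 2 for xi, Yi, si in zip(x, Y, sigma))

alpha = b / A
chi2 = sum(((Yi - alpha * xi) / si) ** 2 for xi, Yi, si in zip(x, Y, sigma))
print(f"alpha = {alpha:.4f}, chi2 = {chi2:.1f}")
```

With five data points and one fitted parameter, a χ² of about 8 is somewhat above the rule-of-thumb value.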

Example - one parameter fit (2)

[Figure: plot of y (0 to 3) against x (0 to 1).]

Models with unknown parameters - fitting (2)

Example: fitting data to a straight line

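Suppose for a set of inputs, $x_i$, i = 1..n we measure output $\bar{Y}_i \pm \sigma_i$.

If Y is modelled by a simple straight-line function, $y_i = \alpha_1 + \alpha_2 x_i$, what values of $\{\alpha_1, \alpha_2\}$ minimise χ²?

$\chi^2(\alpha_1, \alpha_2)$ is given by

$$\chi^2(\alpha_1, \alpha_2) = \sum_{i=1}^{n} \frac{(\bar{Y}_i - \alpha_1 - \alpha_2 x_i)^2}{\sigma_i^2}$$

The minimum is at

$$\alpha_1^* = \frac{A_{22} b_1 - A_{12} b_2}{A_{11} A_{22} - A_{12}^2}, \qquad \alpha_2^* = \frac{A_{11} b_2 - A_{12} b_1}{A_{11} A_{22} - A_{12}^2}$$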

Models with unknown parameters - fitting (3)

Example: fitting data to a straight line

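$$A_{11} = \sum_{i=1}^{n} \frac{1}{\sigma_i^2}, \qquad A_{12} = \sum_{i=1}^{n} \frac{x_i}{\sigma_i^2}, \qquad A_{22} = \sum_{i=1}^{n} \frac{x_i^2}{\sigma_i^2}$$

$$b_1 = \sum_{i=1}^{n} \frac{\bar{Y}_i}{\sigma_i^2}, \qquad b_2 = \sum_{i=1}^{n} \frac{x_i \bar{Y}_i}{\sigma_i^2}$$

The best-fit parameters, $\alpha_{1,2}^*$, are themselves stochastic variables, and so have a probabilistic distribution.

A range of likely values must be given; the width is approximated by

$$\sigma_{\alpha_1} = \sqrt{\frac{A_{22}}{A_{11} A_{22} - A_{12}^2}}, \qquad \sigma_{\alpha_2} = \sqrt{\frac{A_{11}}{A_{11} A_{22} - A_{12}^2}}$$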
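The straight-line formulas can be evaluated directly. The sketch below applies them to the five data points of the one-parameter example, on the assumption that this is the data set the two-parameter fit refers to; variable names are illustrative.

```python
import math

x = [0.1, 0.5, 0.7, 0.9, 1.0]
Y = [0.25, 0.90, 1.20, 1.70, 2.20]
sigma = [0.05, 0.10, 0.05, 0.10, 0.20]

w = [1.0 / s ** 2 for s in sigma]  # weights 1 / sigma_i^2
A11 = sum(w)
A12 = sum(wi * xi for wi, xi in zip(w, x))
A22 = sum(wi * xi ** 2 for wi, xi in zip(w, x))
b1 = sum(wi * Yi for wi, Yi in zip(w, Y))
b2 = sum(wi * xi * Yi for wi, xi, Yi in zip(w, x, Y))

det = A11 * A22 - A12 ** 2
alpha1 = (A22 * b1 - A12 * b2) / det  # intercept
alpha2 = (A11 * b2 - A12 * b1) / det  # slope
sigma_alpha1 = math.sqrt(A22 / det)
sigma_alpha2 = math.sqrt(A11 / det)

chi2 = sum(wi * (Yi - alpha1 - alpha2 * xi) ** 2
           for wi, xi, Yi in zip(w, x, Y))
print(f"alpha1 = {alpha1:.3f} +/- {sigma_alpha1:.3f}")
print(f"alpha2 = {alpha2:.3f} +/- {sigma_alpha2:.3f}")
print(f"chi2 = {chi2:.1f}")
```

The fitted intercept comes out comparable in size to its own uncertainty, so on this data the extra parameter buys only a small reduction in χ².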

Example - two parameter fit (2)

[Figure: plot of y (0 to 3) against x (0 to 1).]

Now χ² goes down from 8.0 → 7.1.

Example - try both fits again ...

[Figure: plot of y (0 to 3.5) against x (0 to 1).]

Now χ2 is 357 for the y = αx model but still 7.1 for the

y = α1 + α2 x model. The first model should be ruled out.

Uncertainty propagates

The best fit parameter(s) α ∗ have been determined from

statistical data - so we must quote an uncertainty. How

precisely have they been determined?

$\alpha^*$ is a function of the statistical data, $\bar{Y}$. A statistical fluctuation in $\bar{Y}$ of $d\bar{Y}$ would result in a fluctuation in $\alpha^*$ of $\frac{d\alpha^*}{d\bar{Y}}\, d\bar{Y}$.

All the measured Y values fluctuate but if they are independent, the fluctuations only add in quadrature so:

Error in the best fit parameters:

$$\sigma_{\alpha^*}^2 = \sum_{i=1}^{n} \left(\frac{d\alpha^*}{dY_i}\right)^2 \sigma_i^2$$

Uncertainty propagates (2)

Back to our example:

One-parameter fit

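We found $\alpha^* = b/A$ with

$$A = \sum_{i=1}^{n} \frac{x_i^2}{\sigma_i^2} \quad \text{and} \quad b = \sum_{i=1}^{n} \frac{x_i y_i}{\sigma_i^2}$$

So

$$\frac{d\alpha^*}{dy_i} = \frac{1}{A} \frac{db}{dy_i}$$

since A is fixed. We get

$$\sigma_{\alpha^*}^2 = \frac{1}{A^2} \sum_{i=1}^{n} \left(\frac{x_i}{\sigma_i^2}\right)^2 \sigma_i^2 = \frac{1}{A}$$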

Back to our first example: We quote α ∗ = 1.81 ± 0.05.
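The quadrature rule can also be applied numerically, without doing the derivative by hand. The sketch below nudges each measurement in turn, estimates $d\alpha^*/dY_i$ by a finite difference, and sums the contributions in quadrature for the one-parameter example. The step size `h` and the function names are choices made for this illustration.

```python
import math

x = [0.1, 0.5, 0.7, 0.9, 1.0]
Y = [0.25, 0.90, 1.20, 1.70, 2.20]
sigma = [0.05, 0.10, 0.05, 0.10, 0.20]


def best_fit_alpha(values):
    """alpha* = b / A for the straight line through the origin."""
    A = sum(xi ** 2 / si ** 2 for xi, si in zip(x, sigma))
    b = sum(xi * yi / si ** 2 for xi, yi, si in zip(x, values, sigma))
    return b / A


def propagated_error(values, h=1e-6):
    """Add (d alpha*/dY_i)^2 sigma_i^2 over all measurements, then take the root."""
    base = best_fit_alpha(values)
    total = 0.0
    for i in range(len(values)):
        shifted = list(values)
        shifted[i] += h
        derivative = (best_fit_alpha(shifted) - base) / h
        total += derivative ** 2 * sigma[i] ** 2
    return math.sqrt(total)


A = sum(xi ** 2 / si ** 2 for xi, si in zip(x, sigma))
print(propagated_error(Y), 1.0 / math.sqrt(A))
```

Because α* is linear in the measurements here, the finite difference agrees with the analytic result $1/\sqrt{A}$ up to rounding error.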

The median

In describing statistical data, often use the median.

The median is a “typical” sample.

It is defined as the middle value in the sample (so the pdf

must be non-zero at that value, unlike the sample mean).

Median of n data

After ordering the data into a sequence $S = \{X_1, X_2, X_3, \dots X_n\}$ where $X_1 \le X_2 \le X_3 \le \dots \le X_n$, consider the two cases where n is

1. Odd: $M_X = X_m$ where $m = \frac{n+1}{2}$
2. Even: $M_X = \frac{X_m + X_{m+1}}{2}$ where $m = \frac{n}{2}$

The median (2)

The median of 9 data-points

Consider the data

{3, 7, 2, 9, 6, 5, 1, 9, 8}

After ordering this data, we find the sequence

S = {1, 2, 3, 5, 6, 7, 8, 9, 9}

and so the median is MX = 6.

The median of 10 data-points

Consider the data

{23, 28, 12, 84, 92, 45, 32, 81, 11, 52}

After ordering this data, we find the sequence

S = {11, 12, 23, 28, 32, 45, 52, 81, 84, 92}

and so the median is $M_X = \frac{32 + 45}{2} = 38\tfrac{1}{2}$
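The odd and even cases of the median definition translate directly into code. The sketch below sorts with Python's built-in `sorted` and shifts the 1-based index m of the definition to Python's 0-based indexing; the function name is illustrative.

```python
def median(data):
    """Median by sorting, following the odd/even definition."""
    s = sorted(data)
    n = len(s)
    if n == 0:
        raise ValueError("median of empty data")
    if n % 2 == 1:
        m = (n + 1) // 2           # 1-based position of the middle value
        return s[m - 1]
    m = n // 2                     # 1-based position of the lower middle value
    return (s[m - 1] + s[m]) / 2


print(median([3, 7, 2, 9, 6, 5, 1, 9, 8]))
print(median([23, 28, 12, 84, 92, 45, 32, 81, 11, 52]))
```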

Sorting algorithms

An algorithm is a practical method for solving some

problem.

To find the median of m data, where m is large, we would

use a computer. Finding the median is then equivalent to

solving the problem of sorting the data-set.

As we shall see, this is almost true - there is a short-cut...

There are many different approaches to solving this

problem. Are they all equally useful?

Assuming they all work, we would like to find the

algorithm that finds the correct solution in the shortest

amount of time.

Bubblesort

NB: this is an example of a bad algorithm!

The bubblesort algorithm

To sort n data:

1. For i = 1, 2, 3, … n − 1
2. Test if $X_i > X_{i+1}$ and if true, swap $X_i \leftrightarrow X_{i+1}$
3. Repeat steps 1, 2 until all pairs are in the right order

How many tests and swap operations on average will we need to perform?

Suppose the smallest number starts at position k. We need k − 1 iterations of the loop to get it to the top of the list and each iteration requires n − 1 “>-tests”, so the method will converge in at least (k − 1) × (n − 1) ≈ kn tests.

For jumbled data, k ∝ n, so the cost of the method grows like n².

Bubblesort is called an O(n²) algorithm.
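The three steps above can be sketched as follows. The function returns the number of “>-tests” alongside the sorted list so the cost can be inspected; the counter and the function name are additions for illustration.

```python
def bubblesort(data):
    """Sort a copy of data by repeated passes of adjacent tests and swaps.

    Returns the sorted list and the number of '>' tests performed.
    """
    values = list(data)
    comparisons = 0
    swapped = True
    while swapped:                      # step 3: repeat until a pass has no swaps
        swapped = False
        for i in range(len(values) - 1):        # step 1
            comparisons += 1
            if values[i] > values[i + 1]:       # step 2
                values[i], values[i + 1] = values[i + 1], values[i]
                swapped = True
    return values, comparisons


sorted_values, comparisons = bubblesort([7, 2, 9, 4, 1])
print(sorted_values, comparisons)
```

The final pass performs no swaps; it is needed only to confirm that all pairs are in the right order.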

Bubblesort - an example

Use bubblesort to sort 5 numbers

Start with X = [7, 2, 9, 4, 1]. Each row is one pass through the list, showing the list at the start of the pass and after each of the four tests:

| Pass | Start | After test 1 | After test 2 | After test 3 | After test 4 |
|---|---|---|---|---|---|
| 1 | 7 2 9 4 1 | 2 7 9 4 1 | 2 7 9 4 1 | 2 7 4 9 1 | 2 7 4 1 9 |
| 2 | 2 7 4 1 9 | 2 7 4 1 9 | 2 4 7 1 9 | 2 4 1 7 9 | 2 4 1 7 9 |
| 3 | 2 4 1 7 9 | 2 4 1 7 9 | 2 1 4 7 9 | 2 1 4 7 9 | 2 1 4 7 9 |
| 4 | 2 1 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 |
| 5 | 1 2 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 | 1 2 4 7 9 |

Quicksort

Donald Knuth (The Art of Computer Programming, Vol 3):

“The bubble sort seems to have nothing to recommend it,

except a catchy name”

Are there algorithms better than O(n²)? Yes

One example: quicksort. Popular as it is efficient on

computers.

Algorithm is an example of a recursive method.

The quicksort algorithm

Quicksort

To sort a list of n numbers $\Omega = [X_1, X_2, \dots X_n]$:

1. Choose one element $X_p$ (usually at random) called the pivot. Define two sets, $\Omega_{lo}$ and $\Omega_{hi}$
2. For i = 1, 2, 3, … p − 1, p + 1, … n: test if $X_p > X_i$. If true, put $X_i$ in $\Omega_{lo}$, otherwise put $X_i$ in $\Omega_{hi}$
3. Now apply this algorithm to sort both $\Omega_{lo}$ and $\Omega_{hi}$ (recursion)

After the first pass, the pivot is in its correct final location

If the purpose is to find the median, only one recursion

needs to be followed.
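A minimal sketch of the quicksort steps, together with the median short-cut that follows only one branch of the recursion, is given below. It builds new lists rather than sorting in place, which keeps the code close to the description but is not how an optimised library sort would be written. The names `partition`, `select` and `quick_median` are introduced here for illustration.

```python
import random


def partition(values):
    """Pick a random pivot and split the other elements into lo and hi."""
    p = random.randrange(len(values))
    pivot = values[p]
    lo = [x for i, x in enumerate(values) if i != p and pivot > x]
    hi = [x for i, x in enumerate(values) if i != p and not pivot > x]
    return lo, pivot, hi


def quicksort(values):
    """Recursively sort lo and hi, then join them around the pivot."""
    if len(values) <= 1:
        return list(values)
    lo, pivot, hi = partition(values)
    return quicksort(lo) + [pivot] + quicksort(hi)


def select(values, k):
    """k-th smallest element (0-based), following only one recursion."""
    lo, pivot, hi = partition(values)
    if k < len(lo):
        return select(lo, k)
    if k == len(lo):
        return pivot
    return select(hi, k - len(lo) - 1)


def quick_median(values):
    """Median via selection instead of a full sort."""
    n = len(values)
    if n % 2 == 1:
        return select(values, n // 2)
    return (select(values, n // 2 - 1) + select(values, n // 2)) / 2


print(quicksort([12, 3, 8, 15, 4, 9, 1, 14, 7, 5]))
print(quick_median([3, 7, 2, 9, 6, 5, 1, 9, 8]))
```

After `partition`, the pivot's final position is `len(lo)`, which is why `select` can discard one of the two sets at every level.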

The quicksort algorithm

How many test/swap operations are needed?

The first pass, comparing all elements in Ω to the pivot, takes about n operations.

There are two sorts to do at the next level of recursion, each (on average) of length n/2. These two sorts need 2 × n/2 = n comparisons.

The number of recursions (on average) is d where $2^d = n$, so $d = \log_2 n$.

Total number of comparisons is then $O(n \log_2 n)$.

Quicksort

Example: quicksorting 10 numbers

Task: sort the list Ω = [12, 3, 8, 15, 4, 9, 1, 14, 7, 5]. Successive states of the list (the slide marks the chosen pivots in red):

| Step | List |
|---|---|
| 0 | 12 3 8 15 4 9 1 14 7 5 |
| 1 | 3 4 1 7 5 8 12 15 9 14 |
| 2 | 3 1 4 7 5 8 12 15 9 14 |
| 3 | 1 3 4 7 5 8 12 15 9 14 |
| 4 | 1 3 4 5 7 8 12 15 9 14 |
| 5 | 1 3 4 5 7 8 9 12 15 14 |
| 6 | 1 3 4 5 7 8 9 12 14 15 |
| 7 | 1 3 4 5 7 8 9 12 14 15 |

Comparing bubblesort with quicksort

[Figure: log-log plot of time to sort (secs), from 10^-6 to 10^1, against size of sort array, from 10^1 to 10^7, with curves for quicksort and bubblesort.]

Except for tiny arrays, quicksort is much faster

Visualising the cdf

[Figure: i/n (0 to 1) plotted against a[i] (−4 to 4).]

Plotting the sorted data (with its index in the array) gives

a visualisation of the cdf
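The points of such a plot can be generated as below. The sketch pairs each sorted value with i/n, counting i from 1 so that the largest value is paired with 1; that indexing convention and the function name are assumptions of this example.

```python
def empirical_cdf(samples):
    """Pairs (a[i], i/n) for the sorted samples, with i counted from 1."""
    a = sorted(samples)
    n = len(a)
    return [(value, (i + 1) / n) for i, value in enumerate(a)]


for value, fraction in empirical_cdf([0.3, -1.2, 2.1, 0.0]):
    print(value, fraction)
```

Each pair gives the fraction of the sample that is less than or equal to that value, which approximates the cdf at that point.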

Expectation of the median for a large sample

For a large set of independent samples, the median

should be the “middle” of the cdf

Expected value of the median $\bar{M}_X$ in this case would obey

$$F_X(\bar{M}_X) = \frac{1}{2}$$

For a random value, the probability it is above (or below) the median is $\frac{1}{2}$.

Not true for the expected value of the mean.

Expectation of the median for a large sample

Example: Expected values of the mean and median

Consider a random number X in the range [0, 1] with pdf

$$f_X(x) = 2x$$

What is the expected value of the mean of X?

$$E[X] = \int_0^1 x \cdot 2x\, dx = \left[\frac{2x^3}{3}\right]_0^1 = \frac{2}{3}$$

What is the expected value of the median of X?

$$F_X(x) = \int_0^x 2\tilde{x}\, d\tilde{x} = x^2$$

so

$$F_X(\bar{M}_X) = \frac{1}{2} \rightarrow \bar{M}_X = \sqrt{\frac{1}{2}} = 0.707106\ldots$$
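Both values can be checked by sampling. Since $F_X(x) = x^2$ on [0, 1], taking the square root of a uniform random number produces samples with this distribution (inverse-transform sampling, a technique not covered in these slides). The sample size and seed below are arbitrary choices.

```python
import math
import random
import statistics

rng = random.Random(0)
# If U is uniform on [0, 1], then sqrt(U) has cdf x^2 and pdf 2x.
samples = [math.sqrt(rng.random()) for _ in range(100001)]

sample_mean = sum(samples) / len(samples)
sample_median = statistics.median(samples)
print(sample_mean, 2 / 3)
print(sample_median, math.sqrt(0.5))
```

For this skewed distribution the median sits above the mean, so the probability of falling below the mean is less than one half.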

Introduction to

Markov processes

Markov chains

In 1906, Markov was interested in demonstrating that

independence was not necessary to prove the (weak) law

of large numbers.

He analysed the alternating patterns of vowels and

consonants in Pushkin’s novel “Eugene Onegin”.

In a Markov process, a system makes stochastic transitions such that the probability of a transition occurring depends only on the start and end states. The system retains no memory of how it came to be in the current state. The resulting sequence of states of the system is called a Markov chain.

Markov chains

A Markov chain

A system can be in any one of n distinct states, denoted $\{\chi_1, \chi_2, \dots \chi_n\}$.

If ψ(0), ψ(1), ψ(2), … is a sequence of these states observed at time t = 0, 1, 2, … and generated by the system making random jumps between states so that the conditional probability obeys

$$P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j, \psi(t-2) = \chi_k, \dots \psi(0) = \chi_z) = P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j)$$

then the sequence {ψ} is called a Markov chain.

If $P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j)$ does not depend on t, then the sequence is called a homogeneous Markov chain - we will consider only homogeneous Markov chains.

Markov matrix

The conditional probabilities describe the probability of

the system jumping from state χj to state χi . There are

n × n possible jumps and these probabilities can be

packed into a matrix, with elements Mij being the

probability of jumping from j to i.

The Markov matrix

A matrix containing the n × n probabilities of the system

making a random jump from j → i is called a Markov matrix

Mij = P(ψ(t + 1) = χi |ψ(t) = χj )

Since the entries are probabilities, and the system is always in

a well-defined state, a couple of properties follow. . .

$$0 \le M_{ij} \le 1$$

$$\sum_{i=1}^{n} M_{ij} = 1$$

Markov Processes (4)

Dublin’s weather

An example: On a rainy day in Dublin, the probability

tomorrow is rainy is 80%. Similarly, on a sunny day the

probability tomorrow is sunny is 40%.

This suggests Dublin’s weather can be described by a (homogeneous) Markov process. Can we compute the probability any given day is sunny or rainy?

For this system, the Markov matrix is (columns: today's weather; rows: tomorrow's weather)

| | Sunny | Rainy |
|---|---|---|
| Sunny | 0.4 | 0.2 |
| Rainy | 0.6 | 0.8 |

Dublin’s weather (2)

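If today is sunny we can write the state as $\psi(0) = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$, and the state tomorrow is then $\psi(1) = \begin{pmatrix} 0.4 \\ 0.6 \end{pmatrix}$, and $\psi(2) = \begin{pmatrix} 0.28 \\ 0.72 \end{pmatrix}$, $\psi(3) = \begin{pmatrix} 0.256 \\ 0.744 \end{pmatrix}$, ...

If today is rainy we can write the state as $\psi(0) = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$, and the state tomorrow is then $\psi(1) = \begin{pmatrix} 0.2 \\ 0.8 \end{pmatrix}$, and $\psi(2) = \begin{pmatrix} 0.24 \\ 0.76 \end{pmatrix}$, $\psi(3) = \begin{pmatrix} 0.248 \\ 0.752 \end{pmatrix}$, ...

The vector ψ quickly collapses to a fixed-point, which must be π, the eigenvector of M with eigenvalue 1, normalised such that $\sum_{i=1}^{2} \pi_i = 1$.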
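The sequence of state vectors is obtained by multiplying repeatedly by the Markov matrix. The sketch below uses plain Python lists for the matrix and vectors; `step` and `evolve` are names chosen for this illustration.

```python
# Dublin weather: columns are today's state, rows are tomorrow's state.
M = [[0.4, 0.2],
     [0.6, 0.8]]


def step(matrix, psi):
    """One day of evolution: psi(t + 1) = M psi(t)."""
    return [sum(matrix[i][j] * psi[j] for j in range(len(psi)))
            for i in range(len(matrix))]


def evolve(matrix, psi, steps):
    """Apply the Markov matrix `steps` times."""
    for _ in range(steps):
        psi = step(matrix, psi)
    return psi


for start in ([1.0, 0.0], [0.0, 1.0]):
    print(start, [evolve(M, start, t) for t in (1, 2, 3)], evolve(M, start, 50))
```

Both starting states approach the same vector, since each step shrinks the difference from the fixed point by the same constant factor.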

Dublin’s weather (3)

Finding the probability of sun or rain a long time in the

future is equivalent to solving

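$$\begin{pmatrix} 0.4 & 0.2 \\ 0.6 & 0.8 \end{pmatrix} \begin{pmatrix} \pi_1 \\ \pi_2 \end{pmatrix} = \begin{pmatrix} \pi_1 \\ \pi_2 \end{pmatrix}$$

with the normalising condition for the probabilities, $\pi_1 + \pi_2 = 1$.

We find $\pi = \begin{pmatrix} 0.25 \\ 0.75 \end{pmatrix}$. This is the invariant probability distribution of the process; with no prior information these are the probabilities any given day is sunny (25%) or rainy (75%).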

Population migrations

In a year, the fraction of the population of three provinces A, B

and C who move between provinces is given by

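(columns: province moved from; rows: province moved to)

| To \ From | A | B | C |
|---|---|---|---|
| A | | 3% | 7% |
| B | 1% | | 7% |
| C | 1% | 2% | |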

Show the stable populations of the three provinces are in the

proportions 8 : 3 : 1
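One way to check the stated proportions numerically is sketched below. It assumes the migration table is read with the province of origin along the columns and the destination down the rows (from A: 1% to B and 1% to C; from B: 3% to A and 2% to C; from C: 7% to A and 7% to B), and that the diagonal entries are the fractions who stay, chosen so each column sums to 1. Both are assumptions of this example rather than something the exercise states outright.

```python
# Column j holds the fractions of province j's population going to each row i.
M = [[0.98, 0.03, 0.07],
     [0.01, 0.95, 0.07],
     [0.01, 0.02, 0.86]]


def step(matrix, pops):
    """Populations after one year of migration."""
    return [sum(matrix[i][j] * pops[j] for j in range(len(pops)))
            for i in range(len(matrix))]


# Direct check: populations in proportion 8 : 3 : 1 should be unchanged.
stable = [8.0, 3.0, 1.0]
after_one_year = step(M, stable)
print(after_one_year)

# Independent check: start from equal populations and iterate for a long time.
pops = [1.0, 1.0, 1.0]
for _ in range(2000):
    pops = step(M, pops)
ratios = [v / pops[2] for v in pops]
print(ratios)
```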

Winning a Tennis game at Deuce

Two tennis players, Alice and Bob have reached Deuce. The

probability Alice wins a point is p while the probability Bob

wins is q = 1 − p. Write a Markov matrix describing transitions

this system can make.

Answer:

$$M = \begin{pmatrix} 1 & p & 0 & 0 & 0 \\ 0 & 0 & p & 0 & 0 \\ 0 & q & 0 & p & 0 \\ 0 & 0 & q & 0 & 0 \\ 0 & 0 & 0 & q & 1 \end{pmatrix}$$

with states given by χ = {A wins, Adv A, Deuce, Adv B, B wins}

Winning a Tennis game at Deuce (2)

Remember: entry $M_{ij} = P(\psi(t+1) = \chi_i \mid \psi(t) = \chi_j)$

Some transitions are forbidden (like Adv A → Adv B)

Some states are “absorbing” - once in that state, the system never moves away.

With a bit of work, it is possible to see the long-time average after starting in state χ₃ ≡ Deuce is

$$\pi_3 = \begin{pmatrix} \frac{p^2}{1-2pq} \\ 0 \\ 0 \\ 0 \\ \frac{q^2}{1-2pq} \end{pmatrix}$$

1. The tennis game ends with probability 1
2. Alice wins with probability $\frac{p^2}{1-2pq}$
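The long-time result can be checked by applying the tennis Markov matrix many times to a state vector that starts at Deuce. The value p = 0.6 below is an arbitrary choice for illustration, and the function names are introduced here.

```python
def tennis_matrix(p):
    """Markov matrix for states (A wins, Adv A, Deuce, Adv B, B wins)."""
    q = 1.0 - p
    return [[1.0, p,   0.0, 0.0, 0.0],
            [0.0, 0.0, p,   0.0, 0.0],
            [0.0, q,   0.0, p,   0.0],
            [0.0, 0.0, q,   0.0, 0.0],
            [0.0, 0.0, 0.0, q,   1.0]]


def step(matrix, psi):
    """psi(t + 1) = M psi(t)."""
    return [sum(matrix[i][j] * psi[j] for j in range(len(psi)))
            for i in range(len(matrix))]


p = 0.6  # hypothetical probability that Alice wins a point
q = 1.0 - p
M = tennis_matrix(p)

psi = [0.0, 0.0, 1.0, 0.0, 0.0]  # start at Deuce
for _ in range(500):
    psi = step(M, psi)

print(psi)
print(p ** 2 / (1 - 2 * p * q), q ** 2 / (1 - 2 * p * q))
```

Since $p^2 + q^2 = 1 - 2pq$, the two absorbing probabilities add up to 1, which is the statement that the game ends with probability 1.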
