Optimal Design Theory for Linear Models, Part II

advertisement

Optimal Design Theory for Linear Models, Part II

Design as a Probability Measure

As discussed above, the construction of optimal designs is generally viewed as a scalarvalued optimization problem to be solved either analytically or numerically. But finding

a design U that maximizes or minimizes φ(M(U )) can be very difficult, especially when

N × r (the usual number of arguments over which the optimization must be performed)

is large. This is made even more difficult in some cases by the inherent discrete nature of

the optimization (i.e. where replacing one design point with another is not a “continuous”

change in the design), restricting the applicability of many standard analytical optimization

techniques.

In response to this, continuous design theory has been developed as an alternative framework for optimal design study and construction. Formally, this is done as follows:

• Require that X be a compact subset of Rk .

• Define H to be the class of probability distributions on Borel sets of X .

• Regard any η ∈ H as a design measure.

This changes the problem from one of finding an optimal set of N discrete points in U (and

therefore also in X ) to one of finding a “weight function” that integrates to one over X . As

a result, we now generalize our definition of a the design moment matrix to be:

M(η) = Eη (xx0 )

Note that this is actually consistent with our previous formulation, where each discrete value

of x in the design was given an equal probabiliy, or “weight”, of

1

.

N

The point of this generalization is, essentially, to “remove N from the problem”. As

a result, the optimal “designs” found often cannot really be implemented in practice, but

must be “rounded” to “exact” designs that weight each of a discrete set of points with

probabilities that are multiples of

1

.

N

For this reason, the generalization is sometimes called

approximate design theory. Despite this disadvantage, we will see that it allows us to bring

some powerful mathematical results to bear on the design problem that greatly improve our

ability to construct optimal or near-optimal designs.

A practical constraint that goes with adopting the design measure approach is the requirement that for any design criterion φ considered,

φ(c1 M) = c2 φ(M) for any psd M, for positive constants c1 and c2

1

This requirement essentially says that for any collection of design measures, the ranking

implied by φ would be the same as that for the same set of design measures redefined

so that they integrate to a constant other than one. Fortunately, all of the optimality

criterion functions discussed above (as well as most others that make any sense) satisfy this

requirement.

It is also useful at this point to simplify things by agreeing to define criterion functions φ

so that “large is good”. Hence for a given problem, we seek the design measure or measures

η∗ that maximize φ(M(η)) over H. The above implies that if:

• η∗ assigns positive probability to only a finite set of points, and

• the probability assigned to the ith of these points can be written as ri /N for finite

integers ri and N

then the “exact” N -point design with ri replications at the corresponding unique point must

be φ-optimal among all N -point “exact” designs. If η∗ does not have this character, then

“rounding” to a near-optimal “exact” design – which may also be optimal among exact

designs – is necessary.

Example: SLR again

Let H be the class of all probability distributions over U = [−1, +1]. Then for any design

measure η ∈ H,

1

Eη (u)

M(η) =

|M(η)| = Eη (u2 ) − Eη (u)2 = V arη (u)

2

Eη (u) Eη (u )

Eη (u2 ) can be no greater than 1, and Eη (u)2 can be no smaller than 0, so

η∗ : u =

+1 prob

−1 prob

1

2

1

2

other prob 0

is the D-optimal design measure. Hence for any even N

N/2 u0 s = +1

U =

N/2 u0 s = −1

is D-optimal among all discrete (exact) designs. For odd N , we need to round η∗ ; we might

guess that

U=

(N + 1)/2 u0 s = +1

(N − 1)/2 u0 s = −1

2

is “D-good”, but this result doesn’t prove it. (Actually, we did prove this the hard way for

N = 3 earlier.)

Some Assurances against what should be your Worst Fears

Question: Might it be possible that for some problem, the only optimal design measure η∗

is, say, the uniform distribution over all of a continuous space X , so that no exact design in

finite N approximates it well?

Answer: Let M be the class of all design moment matrices generated by H over X :

M(η) ∈ M

η ∈ H.

iff

M is a convex set, i.e.

• If M1 ∈ M and M2 ∈ M and 0 < t < 1

• then tM1 + (1 − t)M2 ∈ M

This can be proved immediately by simple construction; the “mixture M” belongs to the

design measure that is the indicated weighted average of η1 and η2 . This is what you need

to invoke a form of Caratheodory’s Theorem:

Any M ∈ M can be expressed as:

M=

PI

i=1

for xi ∈ X , λi > 0, i = 1, 2, 3, ..., I, and

λi xi x0i

PI

i=1

λi = 1 (i.e. a distribution with I

points of support), and such an arrangement exists for which:

• I ≤ k(k + 1)/2 + 1 if M is on the interior of M

• I ≤ k(k + 1)/2 if M is on the boundary of M

So, while there may design measures that cannot be well-approximated by exact designs,

for any value of φ (including the maximum one), there will always be at least one optimal

design measure on no more than the indicated finite number of support points.

Example: SLR again

Let η1 assign uniform probability across U = [−1, +1], or across X here since x0 = (1, u).

For this design measure

M(η1 ) =

R

X

xx0 η(x)dx = R 1

1

1

−1 2 udu

Now also define

3

R1 1

udu

−1 2

R1 1 2

−1 2 u

du

1 0

=

0 13

η2 : u =

q

1

q3

− 13

+

prob

prob

1

2

1

2

other prob 0

It is not difficult to show that M(η2 ) = M(η1 ). Caratheodory’s result guarantees an equivalent design measure exists on no more than 4 points; η2 satisfies the requirement in one

fewer points.

Preparing for some Theory

A basic requirement that will be needed in order to develop a more powerful mathematical

threory of optimal design is that φ be a concave function of M, that is, for any M1 and

M2 ∈ M, and for any 0 < t < 1, we require that:

φ(tM1 + (1 − t)M2 ) ≥ tφ(M1 ) + (1 − t)φ(M2 )

Note that the “mixed” M on the left side is guaranteed to be an element of M by our

requirement that M be a convex set. The concavity requirement says that the mixture of

two designs is at least as good as the same mixture of φ values applied to the two designs

individually. Again, this is true of most commonly used optimality criteria. The most

important exception is that |M| is not concave in all cases, and we repair this by changing

the D-optimality criterion function to log|M|, which is concave.

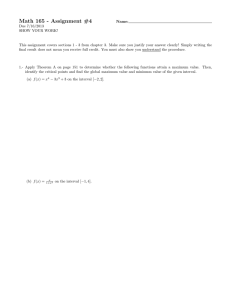

Now, define for any φ and design moment matrices M1 and M2 from M, a quantity we

will call a Frechet difference:

D (M1 , M2 ) = 1 [φ{(1 − )M1 + M2 } − φ{M1 }], ∈ [0, 1]

In the following, we shall use the fact that requiring φ to be concave implies that D (M1 , M2 )

is a non-increasing function of ∈ [0, 1]:

slope = Dϵ(M1,M2)

φ

(1-ϵ)M1+ϵM2

ϵ

M2

M1

4

This can be proven as follows:

φ((1 − )M1 + M2 ) ≥ (1 − )φ(M1 ) + φ(M2 )

1

[φ((1

(concave φ)

− )M1 + M2 ) − φ(M1 )] ≥ φ(M2 ) − φ(M1 )

(algebra)

D (M1 , M2 ) ≥ D1 (M1 , M2 )

(defn of D )

D (M1 , M2 ) = 1 D1 (M1 , (1 − )M1 + M2 )

(defn of D )

1

D (M1 , (1 − )M1 + M2 ) ≥ 1 D1 (M1 , (1 −

δ

1

D (M1 , (1 − )M1 + M2 ) ≥ D (M1 , M2 )

δ

1

[φ((1 − δ)M1 + δM2 ) − φ(M1 )] ≥ 1 [φ((1

δ

)M1 + M2 ),

δ ∈ [0, 1]

(same argument)

(combining)

− )M1 + M2 ) − φ(M1 )]

(algebra)

Dδ (M1 , M2 ) ≥ D (M1 , M2 )

Introduction of the “Frechet difference” is really an intermediate step to introducing the

Frechet derivative of φ at M1 , in the direction of M2 . (It should not be surprising that

our desire to optimize a function would involve a derivative of some kind, especially after

we’ve gone to the trouble of generalizing the design problem to a continuum setting.) This

is one of a collection of so-called “directional derivatives”, and is the one most commonly

used in continuous design optimality arguments. (There is also a simpler Gateaux derivative

discussed by Silvey in Chapter 3; some results depending on F-derivatives can be made using

intermediate results based on G-derivatives.)

Def ’n: The Frechet derivative of φ at M1 , in the direction of M2 , is

Fφ (M1 , M2 ) = lim→0+ D (M1 , M2 ).

Frechet Derivative: D-optimality

φ = log|M|.

Fφ (M1 , M2 )

= lim→0+ 1 [φ {(1 − )M1 + M2 } − φ {M1 }]

[−]

= log|(1 − )M1 + M2 | − log|M1 |

[−]

= log|(1 − )M1 + M2 | + log|M−1

1 |

[−]

= log|(1 − )M1 + M2 ||M−1

1 |

[−]

= log|((1 − )M1 + M2 )M−1

1 | (prod of det’s is det of prod)

[−] = log|(1 − )I + M2 M−1

1 |

Now think about structure of this matrix. The ith diagonal element is 1 − + di where

di is the ith diagonal element of M2 M−1

1 . The off-diagonal elements are all of order .

So, the O(1) term in the determinant is 1, and the O() terms are − + di . Therefore,

2

[−] = log(1 − k + trace[M2 M−1

1 ] + O( ))

[−]

Fφ (M1 , M2 )

= −k + trace[M2 M−1

1 ] for small = trace[M2 M−1

1 ]−k

5

Frechet Derivative: A-optimality

φ(M) = −trace[AM−1 ]

= lim→0+ 1 [φ {(1 − )M1 + M2 } − φ {M1 }]

Fφ (M1 , M2 )

= lim

1

n

−trace[A((1 − )M1 + M2 )−1 ] + trace[AM−1

1 ]

o

−1

= trace A lim 1 [M−1

1 − ((1 − )M1 + M2 ) ]

Write the last inverse as M−1

1 + R, solve for R:

((1 − )M1 + M2 )(M−1

1 + R) = I

→ R = ((1 − )M1 + M2 )−1 (I − M2 M−1

1 )

Fφ (M1 , M2 )

= trace A lim

1

o

n

−1

−1

−1

M−1

1 − M1 − ((1 − )M1 + M2 ) (I − M2 M1 )

= trace A lim − 1 ((1 − )M1 + M2 )−1 (I − M2 M−1

1 )

−1

−1

= −traceAM−1

1 + traceAM1 M2 M1

−1

= traceAM−1

1 M2 M1 + φ(M1 )

Frechet Derivative: Exercise

Suppose we decide to make M−1 as “small as possible” by minimizing the sum of squares

of its elements. What is Fφ for this criterion? (There is no obvious statistical meaning, but

it isn’t entierly silly, and makes an interesting exercise. Hint: φ(M) = −trace(M−1 M−1 ).

Review the form of the argument for A-optimality.)

Some Theory

The following results are numbered as in (and closely follow) Silvey’s presentation.

Theorem 3.6: For φ concave on M, η∗ is φ-optimal iff:

Fφ (M(η∗ ), M(η)) ≤ 0 for all η ∈ H

Proof of “if”:

• Fφ (M(η∗ ), M(η)) ≤ 0 (for all η ∈ H)

•

1

[φ {(1

0

− 0 )M(η∗ ) + 0 M(η)} − φ {M(η∗ )}] ≤ 0 (D is nonincreasing)

• first term ≥ (1 − 0 )φ {M(η∗ )} + 0 φ {M(η)} (concave function)

•

1 0

[ φ {M(η)}

0

− 0 φ {M(η∗ )}] ≤ 0 (substitution)

• η∗ is φ-optimal

Proof of “only if”:

6

• η∗ is φ-optimal

• φ{M((1 − )η∗ + η)} ≤ φ{M(η∗ )} (any fixed )

• φ{(1 − )M(η∗ ) + M(η)} ≤ φ{M(η∗ )} (same thing)

• φ{(1 − )M(η∗ ) + M(η)} − φ{M(η∗ )} ≤ 0

• divide by and take the limit, Fφ (M(η∗ ), M(η)) ≤ 0

Really, this says “the obvious”; you are on top of the hill if and only if every direction takes

you “down”. Note that this result is not an especially powerful tool in direct application,

because proving that η∗ is optimal requires that you compare it to every η ∈ H. We’ll have

a more powerful result in the next theorem, but first:

Lemma: Let η1 ...ηs be any set of design measures from H, and λi > 0,

P

i

λi = 1, i = 1...s.

Let φ be finite at M(η). If φ is differentiable at M(η) then:

Fφ (M(η),

Ps

i=1

λi M(ηi )) =

Ps

i=1

λi Fφ (M(η), M(ηi ))

i.e. the derivative in the direction of a weighted average, is the weighted average of the

derivatives in each direction.

Theorem 3.7: For φ concave on M and differentiable at η∗ , η∗ is φ-optimal iff:

Fφ (M(η∗ ), xx0 ) ≤ 0 for all x ∈ X

Proof of “if”:

• Fφ (M(η∗ ), xx0 ) ≤ 0

• Any M(η) =

P

λi xi x0i (and the sum has a finite number of terms, Caratheodory)

• Fφ (M(η∗ ), M(η)) =

P

λi Fφ (M(η∗ ), xi x0i ) (Differentiability Lemma)

• ≤ 0, so η∗ is φ-optimal (Theorem 3.6)

Proof of “only if”:

• by Thm 3.6, since every x is a one-point design

Direct application of Theorm 3.7 requires comparison of η∗ to every x ∈ X , rather than to

every η ∈ H (as in Theorem 3.6).

Example: QLR Consider quadratic linear regression in one predictor variable and set U =

[−1, +1], so that:

7

X = {x = (1, u, u2 ) : −1 ≤ u ≤ +1}

We would like to show that a particular design in D-optimal, and we use the log form of the

criterion to satisfy the concavity requirement:

φ(M) = log|M|.

Theorem 3.7 does not tell us how to construct an optimal design (although we will later

see that it can be used to motivate construction algorithms). So at this point, we have to

“guess” what an optimal design might be, and use the theorem to (hopefully) prove that we

are correct. Since we know that any discrete design will need at least 3 distinct treatments

in order to support estimation of the model, try:

η0 : u =

+1 prob

−1 prob

0 prob

1

3

1

3

1

3

For this design,

3 0 2

1

M(η0 ) = 3 0 2 0

2 0 2

For our chosen criterion function, we’ve shown that the Frechet derivative at any design, in

the direction of any one-point design, is:

Fφ (M, xx0 ) = trace(xx0 M) − k = x0 Mx − k

So Theorem 3.7 shows that our design is D-optimal if x0 M(η0 )x ≤ 3 for all x ∈ X . Doing

the algebra shows that for η0 , the quadratic form is 43 [4 − 6u2 (1 − u2 )], which can be no more

than 3. Hence η0 is a D-optimal (continuous) design, and a discrete D-optimal design for

any N that is a multiple of 3 can be formed by putting

1

3

of the points at each of u = −1,

0, and +1.

There is a hint of something worth noticing here! Theorem 3.7 and the example make

a connection between the D-optimality criterion and the maximum value of its Frechet

derivitive “in the direction of” any one-point design:

log|M| and maxx∈X x0 Mx − k

Theorem 3.7 says that the first is maximized (the design is D-optimal) iff the second is no

more than zero. But note that the Frechet derivative for D-opimality is equivalent to the

criterion function for G-optimality. Hence this theorem suggests a link between the two

criteria, although it doesn’t quite say that the two criteria are equivalent (because it doesn’t

8

say you have to minimize the G-criterion ... just that it must be less than zero.) However,

the next result does complete this link.

Theorem 3.9: For φ differentiable on M+ (the subset of M where φ(M) > −∞), and an

optimal design exists, η∗ is φ-optimal iff:

maxx∈X Fφ (M(η∗ ), xx0 ) = minη∈H maxx∈X Fφ (M(η), xx0 ) = 0

(Note that the “= 0” part of this is implied by Silvey’s proof, but is not included in the

theorem statement; he adds this as part of a following corollary.)

Proof:

• For any η, we’ve shown Fφ (M(η),

P

λi M(ηi )) =

P

λi Fφ (M(η), M(ηi ))

• So Fφ (M(η), Eλ (xx0 )) = Eλ Fφ (M(η), xx0 )

• If λ = η, the first is zero, so the second is also zero

• So maxx∈X Fφ (M(η), xx0 ) ≥ 0 for any η, including the one that minimizes maxx∈X Fφ (M(η), xx0 )

• But by Thm 3.7, η is φ-optimal iff maxx∈X Fφ (M(η), xx0 ) ≤ 0

• So minη∈H maxx∈X Fφ (M(η), xx0 ) = 0, where the minimizer is η∗ .

Stated this way, Silvey’s Theorem 3.9 is “part a” of Whittle’s (1973) version of the

General Equivalence Theorem:

For φ concave and differentiable on M+ , if a φ-optimal design exists, the following statements

are equivalent:

1. η∗ is φ-optimal

2. η∗ minimizes maxx∈X Fφ (M(η), xx0 )

3. maxx∈X Fφ (M(η∗ ), xx0 ) = 0

Kiefer and Wolfowitz (1960) gave the first version of an Equivalence Theorem, specifically for

D-optimality (where the Frechet derivative is, apart from “−k”, the quantity to be minimized

for G-optimality) which says that the following statements are equivalent:

1. η∗ is D-optimal

2. η∗ minimizes maxx∈X x0 M(η)−1 x, i.e. is G-optimal

3. maxx∈X x0 M(η∗ )−1 x = k

9

An important reminder: (General) Equivalence Theory holds only for continuous design

measures, so for example, discrete D- and G-optimal designs are not always the same.

Corollary: If η∗ is optimal and φ is differentiable at M(η∗ ), then:

• maxx∈X Fφ (M(η∗ ), xx0 ) = 0 (bottom line of Thm 3.9)

• Eη∗ Fφ (M(η∗ ), xx0 ) = 0 (by letting the λ’s be the weights associated with η∗ in the

second line of the proof)

Where η∗ is discrete (and it always can be thanks to Caratheodory’s Theorem), this says

that the largest F-derivative in the direction of any x is zero, and the average F-derivative

in the direction of the x’s included in the design is zero, which implies that the F-derivative

in the direction of each point in the design is zero, that is:

Fφ (M(η∗ ), xi x0i ) = 0, i = 1, 2, 3, ..., I

The last point can be used as a check on a “candidate” optimal design, but isn’t sufficient to

prove optimality if it holds. That is, the F-derivative in another direction might be positive

even if those in the direction of the design support points are zero.

Example: MLR

Here’s an example of how optimal designs can be constructed or verified based on the theory presented to this point. Consider the 3-predictor first-order multiple regression problem

with:

E(y) = θ0 + θ1 u1 + θ2 u2 + θ3 u3 U = [−1, +1]3 , X = 1 × [−1, +1]3

and think about what form an exact D-optimal design for the whole parameter vector might

take. To use the theory we’ve discussed, start with a general probability measure η instead;

what do we know?

• At η∗ , maxx∈X x0 M−1 x = 4 (from equiv. theory)

Without much thought, we know that M = I achieves this; so, for what η’s does this happen?

• M=

PI

i=1

λi xi x0i = I

→

PI

i=1

λi u21,i = 1, etc. (diag’s)

So a D-optimal design can be constructed with mass only on the 8 corner points; what

8-point distributions do this? Order the 8 corner points as:

10

1 + + + +

2

+ + + −

3

+

−

+

+

4

+ + − −

5

−

+

+

+

6

+ − + −

7

+

−

−

+

8

+ − − −

In coded form, this means:

X0 X =

1

1234

1256

5678

3478

1357

2468

1278

1

1368

3456

2457

1458

1

2367

1

where, e.g., the (1,2) element means λ1 + λ2 + λ3 + λ4 − λ5 − λ6 − λ7 − λ8 . If we require

that M be an identity matrix, including the “diagonal” implication that the sum of the λ’s

must be 1, this yields a system of 7 equations in 8 unknowns:

+ + + + + + + +

+ + + + − − − −

+ + − − + + − −

λ=

+ − + − + − + −

+ + − − − − + +

+ − + − − + − +

+ − − + + − − +

0

1

0

0

0

0

0

Solutions to this system are of form:

λ1 = λ4 = λ6 = λ7 ,

λ 2 = λ3 = λ5 = λ8 ,

P8

i=1

λi = 1

So, design measures that put equal weight on the points of I = +ABC, and equal weight

on the points of I = −ABC are D-optimal. When N is any multiple of 4, an exact design

can be constructed that reflects this measure, and so it is D-optimal among all exact design.

(And for large N , there are lots of choices, e.g. 3 points at each of I = +ABC and 5 points

at each of I = −ABC when N = 64, et cetera.) Note that in this argument, we’ve not

explicitly included the requirement that each λi be non-negative ... that’s a “side condition”

that can’t be expressed as a linear equation.

11

The Complication of Singular M

Theorem 3.7 is quite powerful, but depends on φ being differentiable at M(η∗ ). In this

context, differentiability implies that:

Fφ (M(η∗ ), M(η)) =

R

x

Fφ (M(η∗ ), xx0 )η(x)dx

This is true for D- and A- and many other criteria that require nonsingular M, but consider

what happens when we try to do what seems reasonable in a subset-estimation example.

Consider the model y = θ0 + θ1 u1 + θ2 u2 + , with U = the quadrilateral with corners:

(−1, 0), (+1, 0), (−2, +1), (+2, +1):

u2

-2

-1

0

1

2

u1

Suppose want to estimate (θ0 , θ1 ) well; θ2 is a nuisance parameter. A partitioning of the

design moment matrix that reflects this is:

M11 m21

M= 0

m21 m22

The inverse of the upper left corner of M−1 is

M11 −

and we could use φ(M) = log|M11 −

1

m m0

m22 21 21

1

m m0 |.

m22 21 21

But this requires m22 6= 0, which isn’t

really necessary to estimate (θ0 , θ1 ). To see this, let

η1 : u =

(+1, 0) prob

(−1, 0) prob

1

2

1

2

1 0 0

M(η1 ) = 0 1 0

0 0 0

Since u2 = 0 in this design, (θ0 , θ1 ) can be estimated as if this were a simple linear regression

problem, and in fact, this design is D-optimal for the one-predictor model if you restrict U

to u1 in [−1, +1].

But we don’t know that η1 is optimal, so instead, we can define our optimality criterion

to be:

12

φ(M) = log|M11 −

1

m m0 |

m22 21 21

(“form 1”) if M is of full rank

= log|M11 | (“form 2”) if M11 is of full rank and m22 = 0

(Note here that φ(M(η1 )) = 0; this is needed in comparisons to come next.)

Now define an alternative design measure:

(+1, 0) prob

(−1, 0) prob

η2 : u =

(+2, 1) prob

(−2, 1) prob

1

4

1

4

1

4

1

4

1 0 12

M(η2 ) = 0 52 0

1

1

0 2

2

Intuition is hard to use here; this design is on the corners of U, so the entire parameter vector

is estimable. θ̂2 is not orthogonal to θ̂0 , and other things being equal, this should mean that

we won’t be able to estimate (θ0 , θ1 ) as well as with η1 . But by using all of U, this design

“spreads out” the values of u1 more which, other things being equal, should mean that we

can estimate (θ0 , θ1 ) better.

We are interested in looking at the Frechet derivative of φ at η1 in the direction of η2 ,

and in the direction of each of its points of support. First, note that

(1 − )M(η1 ) + M(η2 ) =

1

0

0

1 + 32 1

2

0

1

2

0

1

2

The optimality criterion (form 1, since this matrix is nonsingular for non-zero ) is

φ(above) =

log

1

0

0 1+

3

2

− 1

/2

2

/4 0

0

0

From this, the Frechet derivative is gotten by dividing by and taking the limit (remembering

the φ(M(η1 )) = 0, and after applying l’Hospital’s rule):

Fφ (M(η1 ), M(η2 )) = lim 1 log(1 + − 43 2 ) = 1

But now look at Fφ (M(η1 ), −) for each of the points of support of η2 individually:

1 + 0

• (1 − )M(η1 ) + M(+1, 0) = + 1 0

0

0 0

– φ(above) =

log

1

+

+ 1 (form 2)

– Fφ (M(η1 ), M(+1, 0)) = lim 1 log(1 − 2 ) = 0

• Similarly, Fφ (M(η1 ), M(−1, 0)) = 0

13

1

+2

+

• (1 − )M(η1 ) + M(+2, 1) = +2 1 + 3 +2

+

+2

+

– φ(above) =

log

+22

+2 1 2

−

+2 1 + 3

+22 +42 1

(form 1)

– Fφ (M(η1 ), M(+2, 1)) = lim 1 log((1 − )(1 − )) = −2

• Similarly Fφ (M(η1 ), M(−2, 1)) = −2

So, Fφ at M(η1 ) in the direction of each support point of η2 is non-positive, while Fφ (M(η1 ), M(η2 ))

is positive, so Fφ (M1 ,

P

λi xi x0i ) 6=

P

λi Fφ (M1 , xi x0i ). Theorem 3.7 cannot be used at η1 , but

Thm 3.6 can since it does not depend on differentiability.

What more can be said about this problem? It turns out that design measures that put

all mass on u2 = 0 can’t be optimal; if they were, our η1 would be the best (because it is the

optimal s.l.r. design allowed on this segment of the line), but it isn’t because, for example,

φ(M(η2 )) = log 54 . Further, design measures that put all mass on any other single value of u2

can’t be optimal, because they confound θ0 and θ2 , so at least two values of u2 are needed.

A good guess might be:

ηg : u =

(+1 + d, d) prob π1

(−1 − d, d) prob π1

(+2, 1) prob π2

(−2, 1) prob π2

One could numerically or analytically optimize φ over π1 and d. Since φ will be differentiable

at M(ηg ), one could then ask whether Fφ (M(ηg ), xx0 ) ≤ 0 for all x.

References

Silvey, S.D. (1980). Optimal Design: An Introduction to the Theory for Parameter Estimation, Chapman and Hall, London.

14