TWO-LEVEL FACTORIAL EXPERIMENTS (Basics) • Treatments defined by f binary “bits” treatments

advertisement

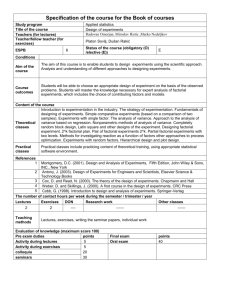

STAT 512 2-Level Factorial Experiments: Basics

TWO-LEVEL FACTORIAL EXPERIMENTS (Basics)
• Treatments defined by $f$ binary “bits” → $2^f$ treatments
• For (a classical) example, fertilizer treatments defined by including:
  – N (nitrogen) at level $i$ (1 or 2)
  – P (phosphorus) at level $j$ (1 or 2)
  – K (potassium) at level $k$ (1 or 2)
[Figure: the 8 treatments drawn as the corners of a cube with axes N (N1, N2), P (P1, P2), and K (K1, K2)]

• Cell Means Model: $y_{ijkm} = \mu_{ijk} + \epsilon_{ijkm}$, $m = 1, \ldots, r$
• Factorial Effects Model (as we’ve been using with two factors; the “overparameterized” form, with over-dots in the book):
$$y_{ijkm} = \mu + \alpha_i + \beta_j + \gamma_k + (\alpha\beta)_{ij} + (\alpha\gamma)_{ik} + (\beta\gamma)_{jk} + (\alpha\beta\gamma)_{ijk} + \epsilon_{ijkm}$$
• Model matrix columns for main effects, e.g. $\alpha_1$: 1 for the four N1 cells, 0 for the four N2 cells
• Two-factor interactions, e.g. $(\alpha\beta)_{11}$: 1 for the two N1P1 cells (K1 and K2), 0 elsewhere
• Three-factor interactions, e.g. $(\alpha\beta\gamma)_{111}$: 1 for cell N1P1K1 only, 0 elsewhere

• As usual for this approach, there are more parameters than are really needed:
  – 2 first-order terms for each factor ... $2f$ in all
  – 4 second-order terms for each pair of factors ... $4\binom{f}{2}$ in all
  – $2^q$ $q$th-order terms for each $q$-tuple of factors ... $2^q\binom{f}{q}$ in all
• $3^f$ of them in all (counting $\mu$), but we really only need $2^f$ parameters to model the entire mean structure

One reparameterization that works well for 2-level factorials is a “full-rank” form (with no over-dots in the book). Below, $(\cdot)$ stands for any factorial effect symbol.
• Replace each group of:
  – 2 main-effect parameters, e.g. $\alpha_1, \alpha_2$
  – 4 two-factor interaction parameters, e.g. $(\alpha\beta)_{11}, \ldots, (\alpha\beta)_{22}$
  ... with a single parameter as follows:
• Replace $(\cdot)_{22\ldots2}$ with $(\cdot)$
• Replace $(\cdot)_{121\ldots2}$ with $(\cdot) \times (-1)^s$, where $s$ = number of 1’s in the subscript
• Example:
$$\begin{aligned} \mu_{121} &= \mu + \alpha_1 + \beta_2 + \gamma_1 + (\alpha\beta)_{12} + (\alpha\gamma)_{11} + (\beta\gamma)_{21} + (\alpha\beta\gamma)_{121} \\ &= \mu - \alpha + \beta - \gamma - (\alpha\beta) + (\alpha\gamma) - (\beta\gamma) + (\alpha\beta\gamma) \end{aligned}$$
• Now, a total of $2^f$ parameters ... the same as the number of cell means

Also, this parameterization corresponds to contrasts in cell means:
• Model matrix columns for main effects, e.g. $\alpha$: $+1$ for the four N2 cells, $-1$ for the four N1 cells
• Two-factor interactions, e.g. $(\alpha\beta)$: $+1$ where N and P are at the same level (N1P1, N2P2), $-1$ where they differ

• Managing +1’s and −1’s ... let:
  – $x_1 = -1$ for $i = 1$, $+1$ for $i = 2$
  – $x_2 = -1$ for $j = 1$, $+1$ for $j = 2$
  – $x_3 = -1$ for $k = 1$, $+1$ for $k = 2$
• Then:
$$\mu_{ijk} = \mu + x_1\alpha + x_2\beta + x_3\gamma + x_1x_2(\alpha\beta) + x_1x_3(\alpha\gamma) + x_2x_3(\beta\gamma) + x_1x_2x_3(\alpha\beta\gamma)$$

• For a complete unreplicated $2^3$ factorial design:
  – $y = X\theta + \epsilon$, where “+” and “−” stand for $+1$ and $-1$:
$$\begin{pmatrix} y_{111}\\ y_{112}\\ y_{121}\\ y_{122}\\ y_{211}\\ y_{212}\\ y_{221}\\ y_{222} \end{pmatrix} = \begin{pmatrix} + & - & - & - & + & + & + & - \\ + & - & - & + & + & - & - & + \\ + & - & + & - & - & + & - & + \\ + & - & + & + & - & - & + & - \\ + & + & - & - & - & - & + & + \\ + & + & - & + & - & + & - & - \\ + & + & + & - & + & - & - & - \\ + & + & + & + & + & + & + & + \end{pmatrix} \begin{pmatrix} \mu\\ \alpha\\ \beta\\ \gamma\\ (\alpha\beta)\\ (\alpha\gamma)\\ (\beta\gamma)\\ (\alpha\beta\gamma) \end{pmatrix} + \begin{pmatrix} \epsilon_{111}\\ \vdots\\ \epsilon_{222} \end{pmatrix}$$
  – Columns of $X$ are the values of $1, x_1, x_2, \ldots, x_1x_2x_3$ for each experimental run.
  – The basic pattern is the same for all $2^f$ designs.

• $y = X\theta + \epsilon$, with dimensions $(N \times 1) = (N \times 2^f)(2^f \times 1) + (N \times 1)$; $N = 2^f$ if unreplicated, $N = r2^f$ with $r$ units per cell
• The number of parameters is $2^f$ because each is specified by the presence or absence of each of $f$ symbols.
• Note the odd notation ... $\theta$ contains one nuisance parameter ($\mu$).
  – Could partition the model as before: $y = 1\mu + X_2\phi + \epsilon \to H_1 = \frac{1}{N}J$; but since all columns of $X_2$ have zero sums, $H_1X_2 = 0$ and $X_{2|1} = X_2$.
  – This means “correction for the mean” (the intercept) is automatic in this parameterization.

• $X$ has mutually orthogonal columns: $X'X = r2^fI$, so $\hat\theta = (r2^f)^{-1}X'y$
• If $r \ge 1$: $\hat\theta = 2^{-f}M'\bar{y}$, where $M$ is the model matrix for an unreplicated design and $\bar{y}$ is the $2^f$-element vector of treatment means
• Any linear combination of elements of $\theta$ is estimable (not just those that are treatment contrasts) because $M$ is square and of full rank.
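The orthogonality of $M$ and the shortcut $\hat\theta = 2^{-f}M'\bar{y}$ can be checked numerically. The following is a minimal Python sketch using only the standard library; the function names `effect_labels`, `model_matrix`, `gram`, and `estimate_effects` are hypothetical, the letters A, B, C stand for $\alpha$, $\beta$, $\gamma$ (N, P, K) with the empty label for $\mu$, and the effect values used to build $\bar{y}$ are made up for illustration. It builds $M$ in the row order 111, 112, ..., 222 and column order $\mu, \alpha, \beta, \gamma, (\alpha\beta), \ldots$ used above, confirms $M'M = 2^fI$, and recovers $\theta$ from exact cell means $\mu = M\theta$.

```python
from itertools import combinations, product

def effect_labels(f):
    """Effect labels in the slides' column order; for f = 3 this is
    '' (the mean), 'A', 'B', 'C', 'AB', 'AC', 'BC', 'ABC'."""
    letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"[:f]
    labels = [""]
    for q in range(1, f + 1):
        labels += ["".join(c) for c in combinations(letters, q)]
    return labels

def model_matrix(f):
    """Rows: cells 11...1, 11...2, ..., 22...2 (last factor varies fastest).
    Columns: products of the +/-1 codes x_j over the factors in each label."""
    labels = effect_labels(f)
    M = []
    for x in product((-1, 1), repeat=f):   # x_j = -1 at level 1, +1 at level 2
        row = []
        for lab in labels:
            val = 1                        # empty product gives the column of 1's
            for ch in lab:
                val *= x[ord(ch) - ord("A")]
            row.append(val)
        M.append(row)
    return labels, M

def gram(M):
    """Return M'M as a list of lists."""
    k = len(M[0])
    return [[sum(row[a] * row[b] for row in M) for b in range(k)] for a in range(k)]

def estimate_effects(ybar, f):
    """theta_hat = 2^{-f} M' ybar; no inversion needed because M'M = 2^f I."""
    labels, M = model_matrix(f)
    n = 2 ** f
    theta = [sum(M[r][c] * ybar[r] for r in range(n)) / n for c in range(n)]
    return dict(zip(labels, theta))

# Usage: check orthogonality, then build cell means mu = M theta from
# made-up effects and recover them exactly.
labels, M = model_matrix(3)
G = gram(M)
assert all(G[a][b] == (8 if a == b else 0) for a in range(8) for b in range(8))

theta_true = [10, 2, -1, 0.5, 0.25, 0, 0, 0]   # mu, A, B, C, AB, AC, BC, ABC (hypothetical)
ybar = [sum(m * t for m, t in zip(row, theta_true)) for row in M]
print(estimate_effects(ybar, 3))
```

Because $M'M = 2^fI$, each estimate is just a signed average of the cell means, so no general-purpose matrix inversion is needed.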
• Note: we generally don’t regard any function involving the first element of $\theta$ ($\mu$) as meaningful, even though it is estimable, since it includes experiment-specific effects.

Estimation of Functions of Treatment Means: Full Model
• Let $\mu = (\mu_{11\ldots1}, \ldots, \mu_{22\ldots2})'$, with the same size and order of elements as $\bar{y}$
• By our definition of factorial effects, $\mu = M\theta$
• So: $\widehat{c'\mu} = c'M\hat\theta = c'M2^{-f}M'\bar{y} = c'\bar{y}$ (because $MM' = 2^fI$)
• Why? $M'M = 2^fI$ and $M$ is square and of full rank, so $2^{-f}M' = M^{-1}$; then $M(2^{-f}M') = I$, i.e. $MM' = 2^fI$
• $E[\widehat{c'\mu}] = c'E(\bar{y}) = c'\mu$, $\quad \mathrm{Var}[\widehat{c'\mu}] = (\sigma^2/r)c'c$

Estimation of Treatment Means: Reduced Model
• Returning to the $f = 3$ example, suppose we know (or are willing to assume) that interactions involving $\gamma$ don’t exist: $(\alpha\gamma) = (\beta\gamma) = (\alpha\beta\gamma) = 0$
• Now (columns $1, x_1, x_2, x_3, x_1x_2$):
$$\mu = \begin{pmatrix} + & - & - & - & + \\ + & - & - & + & + \\ + & - & + & - & - \\ + & - & + & + & - \\ + & + & - & - & - \\ + & + & - & + & - \\ + & + & + & - & + \\ + & + & + & + & + \end{pmatrix} \begin{pmatrix} \mu\\ \alpha\\ \beta\\ \gamma\\ (\alpha\beta) \end{pmatrix} = M_1\theta_1$$

• Now: $\widehat{c'\mu} = c'M_1\hat\theta_1 = c'M_1(M_1'M_1)^{-1}M_1'\bar{y} = 2^{-f}c'M_1M_1'\bar{y}$ ... but this is not $c'\bar{y}$
• The difference now is that $M_1M_1' \ne 2^fI$
• $E[\widehat{c'\mu}] = 2^{-f}c'M_1M_1'(M_1\theta_1) = 2^{-f}2^fc'M_1\theta_1 = c'\mu$ ... if the reduced model is correct
• Variance (using $M_1'M_1 = 2^fI$ and $\mathrm{Var}[\bar{y}] = (\sigma^2/r)I$):
$$\begin{aligned} \mathrm{Var}[\widehat{c'\mu}] &= c'M_1\,\mathrm{Var}[\hat\theta_1]\,M_1'c \\ &= c'M_1(M_1'M_1)^{-1}M_1'\,\mathrm{Var}[\bar{y}]\,M_1(M_1'M_1)^{-1}M_1'c \\ &= (\sigma^2/r)2^{-2f}c'M_1M_1'M_1M_1'c \\ &= (\sigma^2/r)2^{-f}c'M_1M_1'c \end{aligned}$$

• For the full model, $MM' = 2^fI$, so $\mathrm{Var}[\widehat{c'\mu}] = (\sigma^2/r)c'c$
  – Here, let $M = (M_1, M_2)$, and rewrite the variance for the FULL model:
$$\begin{aligned} \mathrm{Var}[\widehat{c'\mu}]_{\text{full}} &= (\sigma^2/r)2^{-f}c'MM'c = (\sigma^2/r)2^{-f}(c'M_1,\ c'M_2)\begin{pmatrix} M_1'c\\ M_2'c \end{pmatrix} \\ &= (\sigma^2/r)2^{-f}c'M_1M_1'c + (\sigma^2/r)2^{-f}c'M_2M_2'c \\ &= \mathrm{Var}[\widehat{c'\mu}]_{\text{reduced}} + \text{something non-negative} \end{aligned}$$
  – So the reduced-model variance is no greater than, and sometimes less than, what we would have for the full model

Examples:
• $c = (0, 0, \ldots, 1, \ldots, 0)'$, i.e. $c'\mu$ is a single cell mean
  – $c'M_1$ is a single row of $M_1$
  – $c'M_1M_1'c$ = number of parameters included in the model, say $p_1$
  – $c'MM'c = 2^f$ for the full model
  – the reduced model gives a smaller variance for estimating a single cell mean
  – (not generally interesting, since it isn’t a treatment contrast)
• $c = (0, \ldots, 1, \ldots, -1, \ldots, 0)'$, i.e. $c'\mu$ is a difference (contrast) between two cell means
  – $c'M_1$ contains zeros and $v_1$ elements equal to $\pm 2$
  – $c'M_1M_1'c = 4v_1$
  – $c'M$ contains zeros and $v_2$ elements equal to $\pm 2$, with $v_2 \ge v_1$
  – $c'MM'c = 4v_2 \ge 4v_1$
• $c$ = a column of $M_1$ (i.e. a contrast associated with a factorial effect in the reduced model)
  – $c'M_1 = (0, \ldots, 0, 2^f, 0, \ldots, 0)$
  – $c'M_1M_1'c = (2^f)^2$
  – $c'MM'c = (2^f)^2$
  – the reduced model gives the SAME variance for estimating an included factorial effect
• $c$ = a column of $M_2$ (i.e. a contrast associated with a factorial effect EXCLUDED from the model)
  – $c'M_1 = 0$! ... why?
  – so the reduced-model variance is zero: it is the variance for estimating something YOU SAID WAS ZERO
• Potential benefits of a reduced model are improved precision and power for some treatment comparisons.
• BUT, the associated risk is that estimates are biased, and tests potentially invalid, if the omitted effects are actually present.

TESTING HIGHER-ORDER EFFECTS: r > 1, individual terms
• $Hyp_0: (\cdot) = 0$, where “$(\cdot)$” is any of the factorial effects
• $\widehat{(\cdot)} = 2^{-f}m_{(\cdot)}'\bar{y}$
  – because $(M'M)^{-1} = 2^{-f}I$
  – $m_{(\cdot)}$ is the corresponding column of $M$
• $\mathrm{Var}[\widehat{(\cdot)}] = 2^{-2f}m_{(\cdot)}'\,\mathrm{Var}[\bar{y}]\,m_{(\cdot)} = 2^{-2f}(\sigma^2/r)\,m_{(\cdot)}'m_{(\cdot)} = \sigma^2/N$ (the last inner product is $2^f$)
• $\hat\sigma^2 = s^2_{pooled} = \sum_{ij\ldots} s^2_{ij\ldots}/2^f$
• $t = \widehat{(\cdot)}\big/\sqrt{s^2_{pooled}/N}$ ... the same form for any $(\cdot)$; compare to $t[1 - \alpha/2,\ 2^f(r - 1)]$

TESTING HIGHER-ORDER EFFECTS: r > 1, multiple terms
• $Hyp_0: \mu = M_1\theta_1$
• $Hyp_A: \mu = M\theta = M_1\theta_1 + M_2\theta_2$
• Group tests are useful to address:
  – inflated type-I error rates from multiple testing
  – hypotheses about groups of effects, e.g. “all interactions involving $\gamma$”
• For any model,
$$\begin{aligned} SSE &= (y - X\hat\theta)'(y - X\hat\theta) \\ &= y'(I - X(X'X)^-X')y \\ &= y'y - (y'X(X'X)^-X')(X(X'X)^-X'y) \\ &= y'y - (\hat\theta'X')(X\hat\theta) \\ &= y'y - r2^f\hat\theta'\hat\theta \end{aligned}$$
• SST, the sum of squares for the test, is SSE($Hyp_0$) − SSE($Hyp_A$):
$$\begin{aligned} SSE(Hyp_0) - SSE(Hyp_A) &= y'y - N\hat\theta_1'\hat\theta_1 - y'y + N\hat\theta'\hat\theta \\ &= r2^f(\hat\theta'\hat\theta - \hat\theta_1'\hat\theta_1) = r2^f\hat\theta_2'\hat\theta_2 \end{aligned}$$
• Changing the model doesn’t change individual estimates IN THIS CASE.
• $F = (r2^f\hat\theta_2'\hat\theta_2/p_2)/s^2_{pooled}$; compare to $F[1 - \alpha,\ p_2,\ 2^f(r - 1)]$
• e.g., testing $(\alpha\gamma) = (\beta\gamma) = 0$ uses the numerator mean square $N[\widehat{(\alpha\gamma)}^2 + \widehat{(\beta\gamma)}^2]/2$

UNREPLICATED EXPERIMENTS: individual terms
• Unreplicated experiments are more common in factorial settings because of the large number of possible treatments
• If the full model is correct: $\widehat{(\cdot)} \sim$ indep. $N((\cdot), \sigma^2/N)$
• For effects that are actually zero: $\widehat{(\cdot)} \sim$ i.i.d. $N(0, \sigma^2/N)$
• If most effects are actually zero (sometimes called “effect sparsity”), a diagnostic graph for outliers can be used to identify the (few) effects that appear to be “real”:

• For any group of effects, $(\cdot)_i$, $i = 1, \ldots, p$, usually excluding $\mu$:
  – Normal plot: sorted $\widehat{(\cdot)}_{[i]}$ versus $\Phi^{-1}((i - \frac{1}{2})/p)$
  – Half-normal plot: sorted $|\widehat{(\cdot)}|_{[i]}$ versus $\Phi^{-1}(\frac{1}{2} + (i - \frac{1}{2})/(2p))$ (without the absolute value, the plot isn’t invariant to changing the “high” and “low” levels of factors)
  – can get $\Phi^{-1}$ values from qnorm in R ...
• Reiterate: This is a useful technique only under effect sparsity, because it relies on the few “real” effects (outliers) being different from the many “absent” effects.
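A minimal sketch of the plotting coordinates for the normal and half-normal plots, written in Python rather than R: `statistics.NormalDist().inv_cdf` (standard library, Python 3.8+) plays the role of `qnorm`. The function names and the effect estimates in the usage lines are hypothetical and made up for illustration; they are not the simulated example from these notes. Each function returns (quantile, effect name, plotted value) triples that any plotting tool could draw.

```python
from statistics import NormalDist

def normal_plot_points(estimates):
    """Normal plot: sorted estimates versus Phi^{-1}((i - 1/2)/p), i = 1..p."""
    items = sorted(estimates.items(), key=lambda kv: kv[1])
    p = len(items)
    q = NormalDist().inv_cdf              # standard normal quantile function (R: qnorm)
    return [(q((i - 0.5) / p), name, est)
            for i, (name, est) in enumerate(items, start=1)]

def half_normal_plot_points(estimates):
    """Half-normal plot: sorted |estimates| versus Phi^{-1}(1/2 + (i - 1/2)/(2p))."""
    items = sorted(estimates.items(), key=lambda kv: abs(kv[1]))
    p = len(items)
    q = NormalDist().inv_cdf
    return [(q(0.5 + (i - 0.5) / (2 * p)), name, abs(est))
            for i, (name, est) in enumerate(items, start=1)]

# Hypothetical estimates (mu excluded), made up for illustration only.
est = {"A": 5.1, "B": -3.9, "AB": 1.8, "C": 0.3, "AC": -0.4, "BC": 0.1, "ABC": -0.2}
for quantile, name, value in half_normal_plot_points(est):
    print(f"{quantile:6.3f}  {name:>3}  {value:5.2f}")
```

Sorting on absolute values before pairing with the half-normal quantiles is what makes the second display invariant to swapping the “high” and “low” levels of a factor.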
• Example:
  – simulated data for an unreplicated $2^4$, with all factorial effects zero except $\alpha = 6$, $\beta = -4$, and $(\alpha\beta) = 2$; and $\sigma = 3$
[Figure: half-normal plot of |estimates| (vertical axis, 0 to 6) versus nonnegative normal quantiles (horizontal axis, 0.0 to 2.0); the points labeled A, B, AB, and ABC stand apart from the rest]
  – so 3 “real” effects are detected, but there is also 1 false alarm

An algorithmic alternative to normal plots – Lenth’s Method:
• Let $B$ be the set of absolute values of the estimated coefficients of interest.
• Compute an initial robust estimate of $\sigma/\sqrt{N}$: $s_0 = 1.5 \times \text{median}\ B$
• Let $B^*$ be the subset of $B$ less than $2.5 \times s_0$
• Compute a refined estimate of $\sigma/\sqrt{N}$, the “pseudo standard error”: $PSE = 1.5 \times \text{median}\ B^*$
• Treat a coefficient as significant if its absolute value is greater than $t \times PSE$
• Lenth published a table of values of $t$; for $\alpha = 0.05$ and $B$ not too small, $t = 2$ works reasonably well as a rule of thumb

ADDITIONAL GUIDELINES FOR MODEL CONSTRUCTION
• Half-normal plots and tests help you find a model form. BUT, what if the model “makes no sense”, e.g. A and BCD only ...
• EFFECT HIERARCHY PRINCIPLE
  – If an interaction involving a given set of factors is included in the model, all main effects and interactions involving subsets of these factors should also be included.
• EFFECT HEREDITY PRINCIPLE
  – If an interaction involving a given set of factors is included in the model, at least one effect of the next smallest order involving a subset of these factors should also be included.
• For example:
[Figures: for each case below, cell means µ plotted at A1 and A2, with one line for B1 and one for B2]
  – Case 1: A, B, not AB
    + Heredity → OK
    + Hierarchy → OK
  – Case 2: A, AB, not B
    + Heredity → OK
    + Hierarchy → add B
  – Case 3: AB, not A or B
    + Heredity → add A or B
    + Hierarchy → add A and B

WHY?
• If AB is present, A and B are clearly important ... E(y) changes when they are changed
• Given the right collection of factor levels, at least one of the two main effects would likely be present
• Hierarchy (conservative) is generally more popular than Heredity, except when considerable knowledge about the action of factors is available so that informed choices can be made

Last example:
• 4 factors; ABC and AD are significant
• + Heredity → add A and AB, minimally
• + Hierarchy → add A, B, C, D; AB, AC, BC
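The two principles can be applied mechanically to a candidate set of effects. Below is a minimal Python sketch, assuming each effect is written as a string of single-letter factor names (e.g. "ABC"); the function names `hierarchy_closure` and `satisfies_heredity` are hypothetical. It reproduces the last example: hierarchy adds A, B, C, D, AB, AC, BC to {ABC, AD}, while {ABC, AD} alone violates heredity and {ABC, AD, A, AB} satisfies it.

```python
from itertools import combinations

def hierarchy_closure(terms):
    """Effect hierarchy: every main effect and interaction formed from a
    nonempty subset of the factors in an included term is also included."""
    closed = set()
    for term in terms:
        factors = sorted(term)
        for q in range(1, len(factors) + 1):
            closed.update("".join(c) for c in combinations(factors, q))
    return closed

def satisfies_heredity(terms):
    """Effect heredity: each included interaction of order q >= 2 needs at least
    one included effect of order q - 1 built from a subset of its factors."""
    model = {"".join(sorted(t)) for t in terms}
    for term in model:
        if len(term) >= 2:
            parents = {"".join(c) for c in combinations(term, len(term) - 1)}
            if not parents & model:
                return False
    return True

# The "last example": 4 factors, ABC and AD significant.
significant = {"ABC", "AD"}
added = hierarchy_closure(significant) - significant
print(sorted(added, key=lambda t: (len(t), t)))       # ['A', 'B', 'C', 'D', 'AB', 'AC', 'BC']
print(satisfies_heredity(significant))                # False
print(satisfies_heredity(significant | {"A", "AB"}))  # True
```

The same functions agree with the two-factor cases: {A, AB} passes the heredity check, but its hierarchy closure adds B; {AB} alone fails heredity.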