ANALYSIS: CRDs & ORTHOGONALLY BLOCKED DESIGNS DIAGNOSTICS

advertisement

STAT 512

Analysis

1

ANALYSIS: CRDs & ORTHOGONALLY BLOCKED DESIGNS

DIAGNOSTICS

• Results depend on the assumption y ∼ M V N (XA β A , σ 2 I)

• Checks are based on r = y − ŷ = (I − HA )y

• Under HypA , E[r] = 0 V ar[r] = σ 2 (I − HA )

• e.g. for CRD, HA = diag( n11 Jn1 ×n1 ,

1

J

,

n2 n2 ×n2

...

1

J

)

nt nt ×nt

– individual entries are “small” if ni are “large”

– V ar(r) ≈ σ 2 I

– approximation is generally (but not always) better as rank(XA )/N

is smaller

• Primary concern about model form is lack-of fit, check with plots

– elements of r versus time (trend)

– interaction plots for CBD’s

STAT 512

Analysis

• Primary concern about variances is equality, check with plots of

the elements of r versus:

– treatment group (compare spread within groups)

– time (“wedge”)

– corresponding elements of ŷ (“wedge”)

• Bartlett Test (exact)

– not robust to non-normal errors

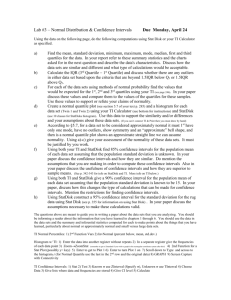

• Modified Levene Test (approx.)

– for CRD and other designs that include true replication

– for data group i, and observation j within the group,

– compute zi,j = |yi,j − mediani |

– apply one-way ANOVA F to z-values

– rejection implies that means of z’s vary, suggests that the

spread of y’s varies with group.

2

STAT 512

Analysis

3

3

0

2

1

2

z

4

y

6

4

8

5

6

10

Example:

1

2

group

3

1

2

group

3

STAT 512

Analysis

POWER TRANSFORMS (for variance-related-to-mean)

• Generally used with non-negative data.

• Suppose V ar(y) isn’t constant, but varies as E(y)q .

• Then, via the delta method:

V ar(y p ) ≈ V ar(y) × {py p−1 |y=E(y) }2 ∝ E(y)q+2p−2

• This suggests transformation, e.g.:

– q = 1 (e.g. Poisson) → p =

1

2

– q = 2 (e.g. exponential) → p = 0 (e.g. log)

limp→0 (y p − 1)/p = ln(y)

4

STAT 512

Analysis

• Actually, better (but related) transformations exist for both

Poisson and exponential distributions, and when we know these

are appropriate we would likely use a generalized linear model

anyay. But for empirical modeling, the Box-Cox transform:

p

y

−1

∗

y =

p

is popular and easy to fit:

QN

1. compute the geometric mean of all data, ỹ = [ i=1 yi ]1/N

2. fit y ∗∗ = y ∗ /ỹ p to your intended model form for several value

of p

3. the value of p that minimizes the residual sum of squares is the

MLE under a model that says y ∗ has mean structure as you

claim, plus i.i.d. normal errors.

5

STAT 512

Analysis

TEST OF EQUAL TREATMENT MEANS

• The bottom line here is that for all orthogonally blocked designs

Pt

– the numerator sum of squares is i=1 ni (ȳi. − ȳ)2

Pt

– the noncentrality parameter is i=1 ni (τi − τ̄ )2 /σ 2

– the denominator sum of squares comes from the fit of the full

model

– power analysis differs from what we did for CRDs only in

counting denominator df

6

STAT 512

Analysis

CONFIDENCE INTERVALS FOR C0 τ

• General interest is in invidual intervals for individual contrasts:

sX

√

α

0 τ ± t(1 −

d

c

, df) M SE

c2i /ni

2

i

• In large experiments (large t and many contrasts of interest),

multiple inference risk can be a problem, e.g.

• Suppose you have a design with t = 10 treatment groups, and

want to estimate all (45) pair-wise differences of treatment effects:

CI for τ1 − τ2 (5% risk)

CI for τ − τ (5% risk)

1

3

...

CI for τ9 − τ10 (5% risk)

so the risk of making at least one error is much greater than 5%

7

STAT 512

Analysis

8

• Simultaneous CI’s are constructed so that, with 95% confidence

(or whatever you pick), ALL intervals are correct.

• This works by replacing the t quantile in the usual CI by one from

another distribution, e.g.:

intervals of interest

procedure

distribution/quantile

all τi − τj

Tukey intevals

studentized max|xi − xj |

all τi − τ1

Dunnett intervals

studentized max|xi − x1 |

all contrasts

Scheffe’ intervals

specified contrasts

Edwards & Berry

p

(t − 1)F (1 − α, t − 1, df)

simulation

• Intervals are typically larger than usual t-based intervals

• Intervals are typically larger for procedures made for larger

collections of intervals (e.g. Scheffe’) than for those made for

smaller collections (e.g. Dunnett)