Discrimination and Classification - Introduction


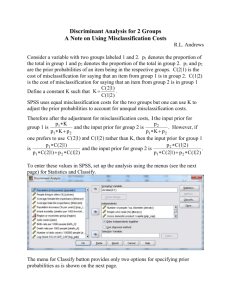

• The main objectives of discrimination and classification are:
  1. Separate distinct sets of objects: discrimination.
  2. Classify new objects into well-defined populations: classification.
• An example of discrimination: given measurements of the concentrations of five elements in bullet lead, find combinations of those concentrations that best describe bullets made by Cascade, Federal, Winchester and Remington.
• An example of classification: using information on prisoners eligible for parole (good behavior, history of drug use, job skills, etc.), can we successfully allocate a prisoner eligible for parole to one of two groups: those who will commit another crime and those who will not?

Discrimination and Classification

• In practice, the two objectives often overlap:
  – A function of the p variables that serves as a discriminant is also used for classifying a new object into one of the populations.
  – An allocation or classification rule can often serve as a discriminant.
• The setup is the usual one: p variables are measured on n sample units or subjects. We wish to find a function of the variables that optimizes the discrimination between units belonging to different populations, that is, one that minimizes classification errors.

Two Populations

• We wish to separate two populations and also allocate new units to one of the two populations.
• Let π1, π2 denote the two populations and let X = [X1, X2, ..., Xp]′ denote the p-dimensional measurement vector for a unit.
• We assume that the densities f1(x) and f2(x) describe the variability in the two populations.
• For example, individuals who are good credit risks may constitute population 1 (π1) and those who are bad credit risks may constitute population 2 (π2). Variables that may be used to discriminate between the populations, or to classify individuals into one of them, might include income, age, number of credit cards, family size and education.
• To develop a classification rule, we need a training (or learning) sample of individuals from each population.
• The training samples can be examined for differences: which characteristics of individuals best distinguish between the two populations?
• Let R1 and R2 denote the regions defined by the sets of characteristics of objects in each of the training samples. A new object (whose group membership we do not know) that falls into Rj is classified into πj.
• We can make mistakes by misclassifying some units.
• When is classification of a new unit necessary?
  – When we have incomplete knowledge of future performance, e.g., student success in graduate school.
  – When obtaining perfect information requires destroying the object, e.g., checking whether products meet specifications.
  – When obtaining information is impossible or very expensive. Examples are determining the authorship of the unsigned Federalist papers (Madison or Hamilton?), or diagnosing a medical problem with certainty when this can only be done through invasive and expensive surgery.
• The bigger the overlap between the characteristics of units from π1 and π2, the larger the probability of a classification error.

Example: Owners of Riding Mowers

• A manufacturer of riding lawn mowers wishes to target an ad campaign to the families most likely to purchase one.
• Are income and lot size enough to discriminate between owners and non-owners of riding lawn mowers?
• A sample of n1 = 12 current owners and n2 = 12 current non-owners was obtained, and X1 = income and X2 = lot size were measured for each.
• Owners tend to have higher incomes and larger lots, but income appears to be the better discriminator.
• Note that the two populations, separated by the line in the figure, are not perfectly discriminated, so some classification errors are likely to occur.
[Figure: Owners and Non-owners of Riding Mowers]

Priors and Misclassification Costs

• In addition to the observed characteristics of units, classification rules also take into account:
  1. Prior probabilities: if one population is more prevalent than the other, a new unit is more likely to have come from the larger population. Stronger evidence is then needed to allocate the unit to the population with the smaller prior probability.
  2. Costs of misclassification:
     – It may be more costly to misclassify a seriously ill subject as healthy than to misclassify a healthy subject as ill.
     – It may be more costly for a lender to misclassify a bad credit risk as a good credit risk and award a loan than to misclassify a good credit risk as a bad credit risk and deny a loan.

Classification Regions

• Let f1(x) and f2(x) be the probability density functions associated with the random vector X for populations π1 and π2, respectively.
• Let Ω denote the sample space (the collection of all possible values of x). R1 is the set of values of x for which we classify objects into π1, and R2 = Ω − R1 is the set of values of x for which we classify objects into π2.
• Since every object belongs to one of the two populations, we have Ω = R1 ∪ R2.

[Figure: Classification Regions]

• Ignore for now the prior probabilities of each population and the potentially different misclassification costs.
• The probability of misclassifying an object into π2 when it belongs to π1 is
$$P(2|1) = P(X \in R_2 \mid \pi_1) = \int_{R_2 = \Omega - R_1} f_1(x)\,dx,$$
and the probability of misclassifying an object into π1 when it belongs to π2 is
$$P(1|2) = P(X \in R_1 \mid \pi_2) = \int_{R_1} f_2(x)\,dx.$$
• In the next figure, the solid shaded region is P(2|1) and the striped region is P(1|2).

[Figure: Classification Regions]

Probability of Misclassification

• Let p1, p2 denote the prior probabilities of π1, π2, respectively.
• Then the probabilities of the four possible outcomes are:
$$\begin{aligned}
P(\text{correctly classified as } \pi_1) &= P(X \in R_1 \mid \pi_1)P(\pi_1) = P(1|1)\,p_1\\
P(\text{incorrectly classified as } \pi_1) &= P(X \in R_1 \mid \pi_2)P(\pi_2) = P(1|2)\,p_2\\
P(\text{correctly classified as } \pi_2) &= P(X \in R_2 \mid \pi_2)P(\pi_2) = P(2|2)\,p_2\\
P(\text{incorrectly classified as } \pi_2) &= P(X \in R_2 \mid \pi_1)P(\pi_1) = P(2|1)\,p_1
\end{aligned}$$
• Further, let c(1|2) be the cost of misclassifying a π2 object into π1, and let c(2|1) be the cost of misclassifying a π1 object into π2.

Expected Cost of Misclassification

• Classification rules are often evaluated in terms of the expected cost of misclassification (ECM):
$$\mathrm{ECM} = c(2|1)\,P(2|1)\,p_1 + c(1|2)\,P(1|2)\,p_2.$$
• There is no cost when units are correctly classified.
• We seek rules that minimize the ECM. This leads to an optimal classification rule: classify an object into π1 if
$$\frac{f_1(x)\,c(2|1)\,p_1}{f_2(x)\,c(1|2)\,p_2} > 1.$$
This follows from writing P(2|1) = 1 − ∫R1 f1(x)dx, so that
$$\mathrm{ECM} = c(2|1)\,p_1 + \int_{R_1}\left[c(1|2)\,p_2\,f_2(x) - c(2|1)\,p_1\,f_1(x)\right]dx.$$
The ECM is smallest when R1 contains exactly those x for which the integrand is negative.

Classification Rule

• Equivalently, the regions R1, R2 that minimize the ECM are defined by the values of x for which
$$R_1:\ \frac{f_1(x)}{f_2(x)} > \left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right), \qquad R_2:\ \frac{f_1(x)}{f_2(x)} < \left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right).$$
• Implementing the minimum ECM rule for a new unit requires evaluating f1 and f2 at the new vector of observations x0. It does not require knowing the two costs or the two prior probabilities, only their ratios.
• When the prior probabilities or the misclassification costs are equal, the classification rule above simplifies correspondingly.

Special Cases of Minimum ECM Regions

$$\begin{aligned}
p_1 = p_2:&\quad R_1:\ \frac{f_1(x)}{f_2(x)} > \frac{c(1|2)}{c(2|1)}, && R_2:\ \frac{f_1(x)}{f_2(x)} < \frac{c(1|2)}{c(2|1)}\\
c(1|2) = c(2|1):&\quad R_1:\ \frac{f_1(x)}{f_2(x)} > \frac{p_2}{p_1}, && R_2:\ \frac{f_1(x)}{f_2(x)} < \frac{p_2}{p_1}\\
p_1 = p_2,\ c(1|2) = c(2|1):&\quad R_1:\ \frac{f_1(x)}{f_2(x)} > 1, && R_2:\ \frac{f_1(x)}{f_2(x)} < 1
\end{aligned}$$

If x is on the boundary between R1 and R2, toss a coin or classify it randomly in some other way.

Other Criteria for Choosing a Classification Rule

• The total probability of misclassification (TPM) ignores the costs of misclassification. It is defined as the probability of either misclassifying a π1 observation or misclassifying a π2 observation:
$$\mathrm{TPM} = p_1 \int_{R_2} f_1(x)\,dx + p_2 \int_{R_1} f_2(x)\,dx.$$
Minimizing the TPM is equivalent to minimizing the ECM when the costs are equal, and an optimal rule in this sense minimizes the TPM.
• The optimal TPM regions are therefore given by the c(1|2) = c(2|1) case among the special cases of minimum ECM regions listed above.
• Alternatively, we might classify a new unit with observation x0 into the population with the highest posterior probability P(πi|x0). By Bayes' rule,
$$P(\pi_1 \mid x_0) = \frac{P(\text{observe } x_0 \mid \pi_1)\,p_1}{P(\text{observe } x_0 \mid \pi_1)\,p_1 + P(\text{observe } x_0 \mid \pi_2)\,p_2} = \frac{p_1 f_1(x_0)}{p_1 f_1(x_0) + p_2 f_2(x_0)}.$$
• Clearly, P(π2|x0) = 1 − P(π1|x0).
• Using the posterior probability criterion, we classify a unit with measurements x0 into π1 when P(π1|x0) > P(π2|x0). This happens exactly when p1 f1(x0) > p2 f2(x0), so this criterion gives the same regions as the minimum TPM rule.

Two Multivariate Normal Populations with Σ1 = Σ2

• We now assume that f1(x) = Np(µ1, Σ) and f2(x) = Np(µ2, Σ).
• Note that
$$\frac{f_1(x)}{f_2(x)} = \exp\left[-\frac{1}{2}(x - \mu_1)'\Sigma^{-1}(x - \mu_1) + \frac{1}{2}(x - \mu_2)'\Sigma^{-1}(x - \mu_2)\right].$$
• Then R1 is given by the set of x values for which
$$\exp\left[-\frac{1}{2}(x - \mu_1)'\Sigma^{-1}(x - \mu_1) + \frac{1}{2}(x - \mu_2)'\Sigma^{-1}(x - \mu_2)\right] > \left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right),$$
and R2 is the complementary set (< instead of >).

Multivariate Normal Populations

• Given the definitions of R1, R2, an allocation that minimizes the ECM is the following. Allocate x0 to π1 if
$$(\mu_1 - \mu_2)'\Sigma^{-1}x_0 - \frac{1}{2}(\mu_1 - \mu_2)'\Sigma^{-1}(\mu_1 + \mu_2) > \ln\left[\left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right)\right],$$
and allocate x0 to π2 otherwise.
• Since µ1, µ2 and Σ are typically unknown, in practice we use x̄1, x̄2 as estimators of the population means and Spool as the estimator of the common covariance matrix Σ.
• The classification rule is a linear function of x0 and is known as Fisher's linear classification rule.
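The minimum-ECM rule, and the claim that it minimizes the ECM, can be checked numerically. The sketch below assumes two hypothetical univariate normal populations, π1 = N(2, 1) and π2 = N(0, 1), with made-up priors p1 = 0.3, p2 = 0.7 and costs c(1|2) = 1, c(2|1) = 5. The function names are illustrative, not from any library.

With a common variance, R1 is a half-line {x > cut}. So the sketch compares the closed-form likelihood-ratio cutoff with a brute-force grid search over cutoffs. It also uses `posterior_pi1` to confirm that, with equal costs, the rule reduces to "pick the larger posterior probability".

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def normal_cdf(x, mu, sigma):
    """Distribution function of N(mu, sigma^2) evaluated at x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def min_ecm_classify(x, f1, f2, p1, p2, c12, c21):
    """Return 1 if x lies in the minimum-ECM region R1, otherwise 2.

    c12 = c(1|2): cost of classifying a pi_2 unit into pi_1.
    c21 = c(2|1): cost of classifying a pi_1 unit into pi_2.
    """
    return 1 if f1(x) * c21 * p1 > f2(x) * c12 * p2 else 2


def posterior_pi1(x, f1, f2, p1, p2):
    """Posterior probability P(pi_1 | x) from Bayes' rule."""
    return p1 * f1(x) / (p1 * f1(x) + p2 * f2(x))


def ecm_of_cutoff(cut, mu1, mu2, sigma, p1, p2, c12, c21):
    """ECM of the rule R1 = {x > cut}, assuming mu1 > mu2."""
    p21 = normal_cdf(cut, mu1, sigma)        # P(2|1) = P(X <= cut | pi_1)
    p12 = 1.0 - normal_cdf(cut, mu2, sigma)  # P(1|2) = P(X > cut | pi_2)
    return c21 * p21 * p1 + c12 * p12 * p2


# Hypothetical populations, priors and costs (not from the source).
mu1, mu2, sigma = 2.0, 0.0, 1.0
p1, p2 = 0.3, 0.7
c12, c21 = 1.0, 5.0


def f1(x):
    return normal_pdf(x, mu1, sigma)


def f2(x):
    return normal_pdf(x, mu2, sigma)


# With a common variance, f1/f2 increases in x, so R1 is {x > cut}.
# Solving f1/f2 = (c12/c21)(p2/p1) gives the minimum-ECM cutoff in closed form.
ratio = (c12 / c21) * (p2 / p1)
cut_star = (mu1 + mu2) / 2 + sigma ** 2 * math.log(ratio) / (mu1 - mu2)

# Brute-force check: no cutoff on a fine grid should give a smaller ECM.
grid = [-3.0 + 0.001 * i for i in range(8001)]
best_cut = min(grid, key=lambda c: ecm_of_cutoff(c, mu1, mu2, sigma, p1, p2, c12, c21))
print(round(cut_star, 3), round(best_cut, 3))
```

Other densities can be used in `min_ecm_classify` by replacing f1 and f2. The closed-form cutoff, however, relies on the equal-variance normal assumption.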
Multivariate Normal Populations

• In the special case in which
$$\left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right) = 1,$$
we have ln(1) = 0 and the rule simplifies. Let
$$\hat{m} = \frac{1}{2}(\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}(\bar{x}_1 + \bar{x}_2) = \frac{1}{2}(\bar{y}_1 + \bar{y}_2),$$
with
$$\bar{y}_1 = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}\bar{x}_1 = \hat{a}'\bar{x}_1, \qquad \bar{y}_2 = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}\bar{x}_2 = \hat{a}'\bar{x}_2.$$
• For a new observation x0, we allocate the object to π1 if ŷ0 > m̂ and to π2 otherwise, where
$$\hat{y}_0 = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}x_0 = \hat{a}'x_0.$$
• In this simple case, we just compare ŷ0 to the midpoint of ȳ1 and ȳ2.
• Note that the classification rule computed using estimated parameters does not guarantee minimum ECM. However, if the sample sizes are large enough and the two populations are reasonably normal, the actual ECM will tend to be close to the minimum.

Example: Hemophilia A Carriers

• The objective was to detect individuals who are hemophilia A carriers.
• Blood samples from women known to be carriers and women known to be non-carriers were assayed. Measurements were taken on two variables: X1 = log10(AHF activity) and X2 = log10(AHF-like antigen).
• Samples of n1 = 30 normal women and n2 = 22 carrier women were obtained.
• The pairs of measurements for each woman are plotted in the figure below, together with the 50% and 95% probability contours estimated under the bivariate normal assumption.

[Figure: Hemophilia A Carriers]

• The sample statistics were:
$$\bar{x}_1 = \begin{bmatrix} -0.0065 \\ -0.0390 \end{bmatrix}, \qquad \bar{x}_2 = \begin{bmatrix} -0.2483 \\ 0.0262 \end{bmatrix}, \qquad S_{\text{pool}}^{-1} = \begin{bmatrix} 131.158 & -90.423 \\ -90.423 & 108.147 \end{bmatrix}.$$
• Assuming (for now) equal prior probabilities and costs, the discriminant function is
$$\hat{y} = \hat{a}'x = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}x = 37.61x_1 - 28.92x_2.$$
• Further,
$$\bar{y}_1 = \hat{a}'\bar{x}_1 = 37.61 \times (-0.0065) - 28.92 \times (-0.0390) = 0.88,$$
$$\bar{y}_2 = \hat{a}'\bar{x}_2 = 37.61 \times (-0.2483) - 28.92 \times 0.0262 = -10.10.$$
• Therefore, m̂ = (ȳ1 + ȳ2)/2 = −4.61.
• Suppose that a new patient has measurements x1 = −0.210 and x2 = −0.044.
  Is she normal or is she a carrier? Using Fisher's linear discriminant function, we would allocate the woman to the normal group if ŷ0 = â′x0 ≥ m̂.
• In this case, ŷ0 = 37.61 × (−0.210) − 28.92 × (−0.044) = −6.62 < −4.61. Therefore, she would be classified as an obligatory carrier.
• Suppose now that our patient is a maternal first cousin of a hemophiliac. From genetics, we know that she then has probability p2 = 0.25 of being a hemophilia A carrier, so p1 = 0.75.
• Assume equal costs of misclassification. The discriminant is
$$\hat{w} = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}x_0 - \frac{1}{2}(\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}(\bar{x}_1 + \bar{x}_2) = \hat{y}_0 - \hat{m}.$$
For this patient, ŵ = −6.62 − (−4.61) = −2.01.
• We classify the patient as an obligatory carrier because
$$\hat{w} = -2.01 < \ln\left(\frac{p_2}{p_1}\right) = \ln\left(\frac{0.25}{0.75}\right) = -1.10.$$
This holds even though, a priori, she was more likely to be normal.

Fisher, Classification and Hotelling's T²

• For the two-population case, R. A. Fisher arrived at the linear discriminant function in a different way.
• Given p-dimensional observations x from populations π1 and π2, he proposed finding the linear combinations of the elements of x,
$$\hat{y}_{1i} = \hat{a}'x_{1i}, \qquad \hat{y}_{2j} = \hat{a}'x_{2j}, \qquad i = 1, \ldots, n_1;\ j = 1, \ldots, n_2,$$
that maximize
$$\text{separation} = \frac{(\bar{y}_1 - \bar{y}_2)^2}{s_y^2}.$$
Here the denominator is the pooled estimate of the variance of the ŷ values. A new observation x0 is then classified into π1 if ŷ0 = â′x0 > â′(x̄1 + x̄2)/2.
• The separation is maximized for â′ = (x̄1 − x̄2)′Spool⁻¹, and the maximum of the ratio is
$$D^2 = (\bar{x}_1 - \bar{x}_2)'S_{\text{pool}}^{-1}(\bar{x}_1 - \bar{x}_2).$$
• Therefore, for two populations and a linear rule, the maximum relative separation that can be obtained equals the squared (Mahalanobis) distance between the sample mean vectors.
• Since the two-sample Hotelling statistic is T² = [n1n2/(n1 + n2)]D², a test of H0: µ1 = µ2 is also a test of the hypothesis of no separation. If Hotelling's T² test fails to reject H0, then the data will not provide a useful classification rule.

The Normal Case when Σ1 ≠ Σ2

• When Σ1 ≠ Σ2, we can no longer use a simple linear classification rule.
• Under normality, the terms involving |Σi|^{1/2} do not cancel and the exponential terms do not combine easily.
• Using the definition of R1 given earlier and expressing the likelihood ratio on the log scale, we now have
$$R_1:\ -\frac{1}{2}x'(\Sigma_1^{-1} - \Sigma_2^{-1})x + (\mu_1'\Sigma_1^{-1} - \mu_2'\Sigma_2^{-1})x - k > \ln\left[\left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right)\right],$$
with R2 the complement, where
$$k = \frac{1}{2}\ln\left(\frac{|\Sigma_1|}{|\Sigma_2|}\right) + \frac{1}{2}\left(\mu_1'\Sigma_1^{-1}\mu_1 - \mu_2'\Sigma_2^{-1}\mu_2\right).$$
• The classification regions are now defined by quadratic functions of x.
• The classification rule for a new observation x0 is: allocate x0 to π1 if
$$-\frac{1}{2}x_0'(\Sigma_1^{-1} - \Sigma_2^{-1})x_0 + (\mu_1'\Sigma_1^{-1} - \mu_2'\Sigma_2^{-1})x_0 - k > \ln\left[\left(\frac{c(1|2)}{c(2|1)}\right)\left(\frac{p_2}{p_1}\right)\right],$$
and allocate x0 to π2 otherwise.
• In practice, we estimate the classification rule by replacing the unknown population parameters (µ1, µ2, Σ1, Σ2) with sample estimates (x̄1, x̄2, S1, S2).

Features of Quadratic Rules

• Disjoint classification regions are possible.
• Quadratic rules are not robust to departures from normality.

More on Quadratic Discriminant Functions

• Sometimes the quadratic discriminant rule is written as: classify an observation into π1 if
$$\ln\left[\frac{f_1(x)\,p_1}{f_2(x)\,p_2}\right] > \ln\left[\frac{c(1|2)}{c(2|1)}\right].$$
• In the normal case with equal misclassification costs, the estimated discriminant function is
$$\left[-\frac{1}{2}\ln|S_1| - \frac{1}{2}(x - \bar{x}_1)'S_1^{-1}(x - \bar{x}_1) + \ln(p_1)\right] - \left[-\frac{1}{2}\ln|S_2| - \frac{1}{2}(x - \bar{x}_2)'S_2^{-1}(x - \bar{x}_2) + \ln(p_2)\right].$$
• The term quadratic discriminant score, $d_k^Q(x)$, is sometimes used to denote the bracketed terms in this expression. That is,
$$\hat{d}_1^Q(x) = -\frac{1}{2}\ln|S_1| - \frac{1}{2}(x - \bar{x}_1)'S_1^{-1}(x - \bar{x}_1) + \ln(p_1),$$
$$\hat{d}_2^Q(x) = -\frac{1}{2}\ln|S_2| - \frac{1}{2}(x - \bar{x}_2)'S_2^{-1}(x - \bar{x}_2) + \ln(p_2).$$
• With equal misclassification costs, we allocate an observation to the population with the higher score $\hat{d}_k^Q(x)$.
• We will see later that the quadratic discriminant scores can be used to allocate objects to more than two populations.
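The hemophilia arithmetic can be reproduced with a short pure-Python sketch. The same sketch checks the link between the quadratic scores and Fisher's linear rule. It uses only the summary statistics quoted in the example. The helper names (`fisher_rule`, `quadratic_score` and the 2 × 2 matrix helpers) are illustrative assumptions.

Spool itself is not reported, so it is recovered by inverting the quoted Spool⁻¹. Setting S1 = S2 = Spool is a device for the check, not the estimated quadratic rule, since S1 and S2 are not given. When S1 = S2, the log-determinant terms cancel, so the score difference should equal ŵ + ln(p1/p2). Carrying full precision gives values that can differ from the rounded hand calculations in the second decimal.

```python
import math


def mat_vec(m, v):
    """Multiply a 2x2 matrix by a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def inv2(m):
    d = det2(m)
    return [[m[1][1] / d, -m[0][1] / d], [-m[1][0] / d, m[0][0] / d]]


def fisher_rule(xbar1, xbar2, s_pool_inv):
    """Return (a_hat, m_hat) for Fisher's linear rule.

    a_hat = S_pool^{-1}(xbar1 - xbar2), which equals the row vector
    (xbar1 - xbar2)' S_pool^{-1} because S_pool^{-1} is symmetric.
    """
    diff = [xbar1[0] - xbar2[0], xbar1[1] - xbar2[1]]
    a_hat = mat_vec(s_pool_inv, diff)
    m_hat = 0.5 * (dot(a_hat, xbar1) + dot(a_hat, xbar2))  # midpoint of ybar1, ybar2
    return a_hat, m_hat


def quadratic_score(x, xbar, s, prior):
    """Estimated quadratic discriminant score d_k^Q(x)."""
    dev = [x[0] - xbar[0], x[1] - xbar[1]]
    return -0.5 * math.log(det2(s)) - 0.5 * dot(dev, mat_vec(inv2(s), dev)) + math.log(prior)


xbar1 = [-0.0065, -0.0390]   # normal women (pi_1)
xbar2 = [-0.2483, 0.0262]    # obligatory carriers (pi_2)
s_pool_inv = [[131.158, -90.423], [-90.423, 108.147]]
x0 = [-0.210, -0.044]        # the new patient

a_hat, m_hat = fisher_rule(xbar1, xbar2, s_pool_inv)
y0 = dot(a_hat, x0)
w_hat = y0 - m_hat

p1, p2 = 0.75, 0.25          # maternal first cousin of a hemophiliac
label = 1 if w_hat > math.log(p2 / p1) else 2

# Check: with S1 = S2 = S_pool, the quadratic score difference equals w_hat + ln(p1/p2).
s_pool = inv2(s_pool_inv)
d_diff = quadratic_score(x0, xbar1, s_pool, p1) - quadratic_score(x0, xbar2, s_pool, p2)
print([round(a, 3) for a in a_hat], round(m_hat, 3), round(y0, 3), round(w_hat, 3), label)
```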
Selecting a Discriminant Function

• If the populations are normal, how do we decide whether to use a linear or a quadratic discriminant function?
  – Use Bartlett's test to test for equality of the covariance matrices (in SAS, PROC DISCRIM performs this test; this is not done in R).
  – The test is sensitive to departures from normality.
• If the data are not normally distributed:
  – Neither the linear nor the quadratic discriminant function is optimal, but either may still perform well if the populations are well separated.
  – Quadratic rules tend to work better on non-normal samples, even when the covariance matrices are homogeneous.
• Transform the variables to better approximate samples from normal distributions, or use ranks.
• Evaluate the proposed rules on new observations with known group membership (or use cross-validation).

Estimating Misclassification Probabilities

• To evaluate the performance of a classification rule, we wish to obtain estimates of P(1|2) and P(2|1).
• When the parent population densities f1(x), f2(x) are not known, we limit ourselves to evaluating the performance of the sample classification rule.
• We discuss several approaches to estimating the actual error rate (AER):
  – The apparent error rate method
  – Data splitting, or the set-aside method
  – Cross-validation
  – The bootstrap approach

Apparent Error Rates

• Use the classification rule estimated from the training sample (either the linear or the quadratic rule) to classify each member of the training sample.
• Compute the proportion of sample units that are misclassified into the wrong population.
• These proportions are called apparent error rates. P̂(1|2) is the proportion of π2 units classified into π1, and P̂(2|1) is the proportion of π1 units classified into π2. They provide estimates of the misclassification probabilities.
• As estimates of misclassification probabilities, they can be substantially biased toward zero, since we are re-using the same data to estimate the rule and to assess its performance.
• The bias decreases as the size of the training sample increases.
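A minimal sketch of the apparent error rate computation, assuming a single measured variable and equal priors and costs. In one dimension, Fisher's rule allocates x to π1 when (x̄1 − x̄2)(x − midpoint) > 0; the pooled variance only rescales both sides, so it drops out. The samples and function names are hypothetical, chosen only to illustrate the calculation.

```python
from statistics import mean


def fit_midpoint_rule(sample1, sample2):
    """Fit a univariate Fisher-type rule (equal priors and costs).

    Returns a function mapping x to 1 (pi_1) or 2 (pi_2).
    """
    xbar1, xbar2 = mean(sample1), mean(sample2)
    midpoint = (xbar1 + xbar2) / 2
    return lambda x: 1 if (xbar1 - xbar2) * (x - midpoint) > 0 else 2


def apparent_error_rates(sample1, sample2):
    """Classify the training data with the rule fitted to that same data.

    Returns (P_hat(1|2), P_hat(2|1)).
    """
    rule = fit_midpoint_rule(sample1, sample2)
    n2_into_1 = sum(1 for x in sample2 if rule(x) == 1)  # pi_2 units sent to pi_1
    n1_into_2 = sum(1 for x in sample1 if rule(x) == 2)  # pi_1 units sent to pi_2
    return n2_into_1 / len(sample2), n1_into_2 / len(sample1)


# Hypothetical training samples (not from the source).
pi1 = [2.1, 3.4, 1.9, 2.8, 0.7, 3.0]
pi2 = [0.2, 1.1, -0.5, 2.2, 0.9, 0.0]
p12_hat, p21_hat = apparent_error_rates(pi1, pi2)
print(p12_hat, p21_hat)
```

Here one unit from each sample falls on the wrong side of the midpoint. Because the same data fix the midpoint, these rates tend to understate the error on new cases.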
Data Splitting

• If the training sample is large enough, we can randomly split the sample into a training set and a validation set. About 25% to 35% of the sample should be set aside for validation.
• Estimate the classification rule from the training sub-sample.
• Then use the rule to classify the cases in the validation sub-sample and compute the proportion of them that are misclassified.
• The resulting estimates of P(1|2) and P(2|1) are less biased but tend to have larger variances, because a smaller sample of units is used to estimate the misclassification probabilities.
• Further, the rule evaluated is not the one that will be used in practice, which will be estimated using all observations.

Cross-validation or Hold-out Methods

• This is similar to the set-aside method, except that instead of setting aside an entire validation sample, we randomly split the training sample into g groups.
• We set aside one group at a time and estimate the rule from the observations in the other g − 1 groups.
• We then classify the cases in the group that was set aside and estimate the proportion of misclassified cases.
• Repeat these steps g times, each time setting a different group aside.
• The estimated probabilities of misclassification are computed as the average of the g estimates.
• Notice that a different rule is used to estimate the misclassification probabilities for each of the g subsets of data.
• The estimates of misclassification probabilities are less biased and have smaller variance than the estimates obtained using the single set-aside method.
• The bias will increase when more variables are used.
• When g = n1 + n2 (i.e., we set aside one case at a time), the method is called a "holdout" or "leave-one-out" method. It is sometimes (incorrectly) called a jackknife method.

Bootstrap Estimation

• First, estimate the apparent error rates from the complete sample: P̂(1|2) and P̂(2|1).
• Next, create B new samples of sizes n1 and n2 by randomly sampling observations with replacement from the original training samples.
• From each of the bootstrap samples, estimate a classification rule and compute its apparent error rates. Label them P̂i(1|2) and P̂i(2|1), for i = 1, ..., B.
• Use the B bootstrap estimates P̂i(1|2) and P̂i(2|1) to estimate the bias of the apparent error rates as
$$\widehat{\text{bias}}(1|2) = B^{-1}\sum_{i=1}^{B}\hat{P}_i(1|2) - \hat{P}(1|2), \qquad \widehat{\text{bias}}(2|1) = B^{-1}\sum_{i=1}^{B}\hat{P}_i(2|1) - \hat{P}(2|1).$$
• A bootstrap-corrected estimate of P(1|2) is given by
$$\hat{P}^c(1|2) = \hat{P}(1|2) - \widehat{\text{bias}}(1|2) = \hat{P}(1|2) - \left(B^{-1}\sum_{i=1}^{B}\hat{P}_i(1|2) - \hat{P}(1|2)\right) = 2\hat{P}(1|2) - B^{-1}\sum_{i=1}^{B}\hat{P}_i(1|2).$$
• Similarly,
$$\hat{P}^c(2|1) = \hat{P}(2|1) - \widehat{\text{bias}}(2|1) = \hat{P}(2|1) - \left(B^{-1}\sum_{i=1}^{B}\hat{P}_i(2|1) - \hat{P}(2|1)\right) = 2\hat{P}(2|1) - B^{-1}\sum_{i=1}^{B}\hat{P}_i(2|1).$$

Bootstrap Confidence Intervals

• We can also compute bootstrap confidence intervals for the misclassification probabilities.
• An approximate 100(1 − α)% confidence interval for P(1|2) is given by
$$\hat{P}^c(1|2) \pm z_{\alpha/2}\sqrt{\frac{\sum_{k=1}^{B}\left(\hat{P}_k(1|2) - B^{-1}\sum_{i=1}^{B}\hat{P}_i(1|2)\right)^2}{B - 1}}.$$
Here z_{α/2} is the upper α/2 percentile (the 1 − α/2 quantile) of the standard normal distribution.

Percentile Bootstrap Confidence Intervals

• A more direct approach to estimating the confidence limits takes two values from the B bootstrap estimates:
  – the lower limit is the P̂i(1|2) value exceeded by a proportion 1 − α/2 of the estimates;
  – the upper limit is the P̂i(1|2) value exceeded by a proportion α/2 of the estimates.
• This direct approach to confidence limits works better when B is large.
• Reference: Efron, B. and Tibshirani, R. J. (1993). An Introduction to the Bootstrap. Chapman & Hall, New York. (Chapter 17)

Variable Selection

• We must often decide how many variables (out of p) to include in the classification rule.
• In general, the apparent error rate will not increase as more variables are used for classification.
• However, the probability of misclassifying a new case can increase as the number of variables used to construct the classification rule increases.
• We can use PROC STEPDISC in SAS to help select a "good" set of variables, using either
  – backward elimination: start with all p variables and sequentially eliminate variables that do not improve the probability of correct classification;
  – forward selection: sequentially add variables to the classification rule; or
  – stepwise selection.
• The STEPDISC procedure uses the p-value of a partial F-test to determine the "significance" of adding or deleting a variable.
• The partial F-test for including a new variable is computed as follows:
  – regress each variable not in the model on all variables currently in the model;
  – compute the residuals;
  – compute the F-test for no difference in population means in a one-way ANOVA of the residuals;
  – the variable with the most significant partial F-test is added to the classification model.
• Re-estimate the misclassification probabilities as each variable is added to or deleted from the model.
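The leave-one-out and bootstrap estimators are the quantities to re-estimate as variables enter or leave the model. The sketch below shows both for a hypothetical univariate two-population problem with equal priors and costs. It uses the one-dimensional version of Fisher's rule (allocate to π1 when (x̄1 − x̄2)(x − midpoint) > 0).

The helper names (`fit_midpoint_rule`, `rates`, `leave_one_out_rates`, `bootstrap_p12`) and the data are illustrative assumptions. Only P(1|2) is bootstrapped; P(2|1) is handled the same way. The bias correction and the two interval types follow the formulas given in the bootstrap slides.

```python
import random
from statistics import NormalDist, mean, stdev


def fit_midpoint_rule(sample1, sample2):
    """Univariate Fisher-type rule with equal priors and costs; returns x -> 1 or 2."""
    xbar1, xbar2 = mean(sample1), mean(sample2)
    midpoint = (xbar1 + xbar2) / 2
    return lambda x: 1 if (xbar1 - xbar2) * (x - midpoint) > 0 else 2


def rates(rule, sample1, sample2):
    """(P_hat(1|2), P_hat(2|1)) for a fixed rule applied to the given samples."""
    p12 = sum(1 for x in sample2 if rule(x) == 1) / len(sample2)
    p21 = sum(1 for x in sample1 if rule(x) == 2) / len(sample1)
    return p12, p21


def leave_one_out_rates(sample1, sample2):
    """Hold out each case in turn, refit on the rest, and classify the held-out case."""
    n1_into_2 = sum(
        fit_midpoint_rule(sample1[:i] + sample1[i + 1:], sample2)(x) == 2
        for i, x in enumerate(sample1))
    n2_into_1 = sum(
        fit_midpoint_rule(sample1, sample2[:j] + sample2[j + 1:])(x) == 1
        for j, x in enumerate(sample2))
    return n2_into_1 / len(sample2), n1_into_2 / len(sample1)


def bootstrap_p12(sample1, sample2, b=1000, alpha=0.05, seed=1):
    """Bias-corrected estimate, normal-theory CI and percentile CI for P(1|2)."""
    rng = random.Random(seed)
    apparent = rates(fit_midpoint_rule(sample1, sample2), sample1, sample2)[0]
    boot = []
    for _ in range(b):
        # Resample with replacement within each population, keeping n1 and n2 fixed.
        s1 = rng.choices(sample1, k=len(sample1))
        s2 = rng.choices(sample2, k=len(sample2))
        # Apparent error rate of the rule fitted to this bootstrap sample.
        boot.append(rates(fit_midpoint_rule(s1, s2), s1, s2)[0])
    corrected = 2 * apparent - mean(boot)          # P_hat^c(1|2)
    z = NormalDist().inv_cdf(1 - alpha / 2)        # upper alpha/2 normal percentile
    se = stdev(boot)                               # uses the B - 1 denominator
    ordered = sorted(boot)
    percentile = (ordered[round(alpha / 2 * b)], ordered[round((1 - alpha / 2) * b) - 1])
    return corrected, (corrected - z * se, corrected + z * se), percentile


pi1 = [2.1, 3.4, 1.9, 2.8, 0.7, 3.0]   # hypothetical data, not from the source
pi2 = [0.2, 1.1, -0.5, 2.2, 0.9, 0.0]
loo = leave_one_out_rates(pi1, pi2)
corrected, normal_ci, pct_ci = bootstrap_p12(pi1, pi2)
print(loo, corrected, normal_ci, pct_ci)
```

With a fixed `seed`, the bootstrap results are reproducible. In practice B should be large, especially for the percentile interval.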