Estimating Variability in Functional Images Using a Synthetic Resampling Approach


Ranjan Maitra and Finbarr O’Sullivan

Statistics and Data Analysis Research Group, Bellcore, Morristown, NJ 07960-6438, USA

Department of Statistics, University of Washington, Seattle WA 98195, USA

Abstract

Functional imaging of biologic parameters like in vivo

tissue metabolism is made possible by Positron Emission

Tomography (PET). Many techniques, such as mixture

analysis, have been suggested for extracting such images from

dynamic sequences of reconstructed PET scans. Methods

for assessing the variability in these functional images are

of scientific interest. The nonlinearity of the methods used

in the mixture analysis approach makes analytic formulae

for estimating variability intractable. The usual resampling

approach is infeasible because of the prohibitive computational

effort in simulating a number of sinogram datasets, applying

image reconstruction, and generating parametric images

for each replication. Here we introduce an approach that

approximates the distribution of the reconstructed PET images

by a Gaussian random field and generates synthetic realizations

in the imaging domain. This eliminates the reconstruction steps

in generating each simulated functional image and is therefore

practical. Results of experiments done to evaluate the approach

on a model one-dimensional problem are very encouraging.

Post-processing of the estimated variances is seen to improve

the accuracy of the estimation method. Mixture analysis is

used to estimate functional images; however, the suggested

approach is general enough to extend to other parametric

imaging methods.

I. INTRODUCTION

The potential to quantitate tissue metabolism from a

sequence of PET scans is one of the most powerful features of

this imaging modality. In this context, the protocol typically

consists of injecting a patient with a radio-tracer and recording

the emissions at discrete time-points. From emissions recorded

at each time-point, the tissue isotope concentration or source

distribution is estimated, giving us a time-course sequence

of reconstructed PET scans. These scans form the input

for algorithms that output pixel-wise estimates of biologic

parameters like metabolic rate, phosphorylation ratio, etc. A

number of techniques have been developed to generate these

images; we have been using a mixture analysis technique

(O’Sullivan [12]) in our experiments.

Assessing variability in these estimated functional images is of scientific importance because variability estimates can be used to develop inference tools, such as significance levels for tests of hypotheses about biologic activity in different regions in single-patient studies. The problem of developing

practical variability measures for reconstructed PET scans at

fixed time-points has been studied extensively ([3],[7],[8]).

Blomqvist et al. [2] noted the desirability of extending these

results to functional images. Unfortunately, the nonlinear

formulations used in constructing the biologic parameter

estimates make analytic variance formulae intractable.

Extending the resampling approach of Haynor and Woods

[7] would involve simulating a number of sinogram data sets,

applying image reconstruction, mixture analysis and generating

parametric images. This approach is impractical because of the

excessive computational effort required to replicate dynamic

reconstructed PET sequences.

In this paper, we suggest a simulation approach via the

parametric bootstrap [5] executed in the imaging domain.

We use the result in Maitra [11] that with increased count

rate, each reconstructed PET scan has an approximate

multivariate Gaussian distribution. The mean is estimated

by the reconstructed image. Computationally feasible and

accurate dispersion estimates are suggested. This model is

used to simulate dynamic PET sequences, from each of which

biologic parameters are extracted. This yields a bootstrap

sample of the functional images, which can be used to assess

variability. The advantage of this synthetic approach over the

usual one is that it eliminates the computationally expensive

step of reconstructing time-course sequences after simulating

from the observation process.

In the sequel, Section 2 formulates the problem and

outlines the theory and develops the methodology behind our

approach. Section 3 details the experimental evaluations that

were carried out to examine the performance of our suggested

approach. Since it is not possible to validate our methods in

a two-dimensional PET setup, the suggestions are evaluated

on experiments performed in a model one-dimensional

deconvolution problem with reconstruction characteristics

similar to PET. The results are presented in Section 4. Finally,

Section 5 summarizes the contributions of this paper and poses

questions for future research.

II. THEORY AND METHODS

A. Problem Formulation

1) Image Reconstruction

The standard reconstruction methodology for PET is

an algorithm known as filtered backprojection (FBP). In

convolution form, this method involves filtering of the data

from each projection angle followed by back-projection. The

equation for the $i$'th reconstructed pixel value $\hat\lambda_i$ is,

$$\hat\lambda_i = \sum_{\theta}\sum_{d} e_h(x_{i1}\cos\theta + x_{i2}\sin\theta - d)\, y_{\theta d}. \tag{1}$$

Here, $(x_{i1}, x_{i2})$ are the coordinates of the $i$'th pixel, $\theta$ denotes angle, $d$ distance, $y_{\theta d}$ is the corrected sinogram data and $e_h$ is the convolution filter with resolution size (FWHM) $h$.

In matrix notation, the reconstruction equation can be written in terms of the expression,

$$\hat\lambda = S_h(\mathcal{K}'\mathcal{K})^{-1}\mathcal{K}'y, \tag{2}$$

where $y$ is the vector of corrected projection data, $\mathcal{K}$ is the discretized version of the Radon transform, and $S_h$ represents the smoothing operation of FWHM $h$ that is applied to the raw reconstructions in order to obtain acceptable solutions [13].

2) Functional Imaging via Mixture Analysis

Local tissue metabolism has usually been assessed from

dynamic PET scans by modeling locally averaged time-course

measurements [15]. Functional imaging techniques, like

mixture analysis [12], generate more comprehensive pixel-wise

representations.

Let $\lambda_i(t)$ represent the true source distribution in the $t$'th time-bin at the $i$'th pixel in the PET imaging domain. The vector $\lambda_i = \{\lambda_i(t);\ t = 1, 2, \ldots, T\}$ is called the true time-activity curve (TAC) at the $i$'th pixel. A $K$-component mixture model represents the $i$'th pixel TAC as a weighted average of $K$ underlying curves (sub-TACs), $\mu_k$, $k = 1, 2, \ldots, K$:

$$\lambda_i(t) = \sum_{k=1}^{K} \pi_{ik}\,\mu_k(t), \tag{3}$$

where the mixing proportions $\{\pi_{ik};\ k = 1, 2, \ldots, K\}$ lie in the $K$-dimensional simplex. The physical basis for such a representation is that the sub-TACs ($\mu$'s) correspond to the different tissue types represented in the image and the underlying $\pi_{ik}$'s indicate the anatomic tissue composition of the underlying pixel.

Functional imaging maps a metabolic parameter of interest, $\theta_i$, at each pixel in the image. The mixture analysis approach fits the metabolic parameter $\theta^{(k)}$ to each tissue sub-TAC $\mu_k$ and, following (3), regards each pixel biologic parameter as a composition of the component tissue parameters,

$$\theta_i = \sum_{k=1}^{K} \pi_{ik}\,\theta^{(k)}. \tag{4}$$

In estimating the $\theta_i$'s, the data are the time-course sequence of reconstructed PET scans $\{\hat\lambda_i(t);\ t = 1, 2, \ldots, T\}$. The number of tissue types, $K$, the sub-TACs $\mu_k$, and the mixing proportions $\pi_{ik}$'s have to be determined. $K$ is obtained from anatomic considerations or through clustering or other sophisticated algorithms ([12],[14]). Estimation of the $\mu$'s and the $\pi$'s is usually done alternately to fit the model,

$$\hat\lambda_i(t) \approx \sum_{k=1}^{K} \pi_{ik}\,\mu_k(t), \quad t = 1, 2, \ldots, T. \tag{5}$$

The problem of estimating the $\mu$'s, given the $\pi$'s, is a low-dimensional problem and usually robust to the choice of the estimation method. On the other hand, the dimensionality of the $\pi_{ik}$'s is high and so the estimation problem is delicate. Many methods have been proposed: among them is a quadratic (weighted) least-squares algorithm which constrains the $\pi_{ik}$'s to belong to the $K$-dimensional simplex.

The tissue metabolic parameters $\theta^{(k)}$'s are estimated from the $\hat\mu_k$'s and the pixel metabolic parameters are estimated following (4),

$$\hat\theta_i = \sum_{k=1}^{K} \hat\pi_{ik}\,\hat\theta^{(k)}. \tag{6}$$

3) Assessing Variability

Analytic expressions for Var($\hat\theta_i$) are intractable because of the nonlinear methods used in the extraction. The prohibitive cost of generating time-course sequences from realizations in the observation domain makes the usual resampling approach impractical. This suggests the need for development of variance estimation strategies.

B. A Synthetic Variability Estimation Strategy

1) Approximate Distribution of $\hat\lambda$

Maitra [11] shows that under idealized projection conditions of no detector effects such as scatter, attenuation, etc., the distribution of the reconstructed PET scan $\hat\lambda$ at any fixed time-point can be approximately and adequately specified by a multivariate Gaussian distribution. The mean of this distribution is $S_h\lambda$ while, from (2), the dispersion matrix $\Sigma$ of $\hat\lambda$ is given by,

$$\Sigma = S_h(\mathcal{K}'\mathcal{K})^{-1}\mathcal{K}'\,\mathrm{Var}(y)\,\mathcal{K}(\mathcal{K}'\mathcal{K})^{-1}S_h, \tag{7}$$

where, as in (2), $S_h$ is the smoothing operator, $\mathcal{K}$ the discretized Radon transform and $y$ the corrected projection data.

Reconstruction in PET is practical because Fast Fourier Transforms are used in the implementation. This is not readily possible in the case of (7). So, we need approximation methods whereby reconstruction-type convolution procedures can be used in calculating dispersion.

Theoretically, using the Poisson nature of the observed counts, one can develop exact formulae for the variances of the reconstructed pixel values directly from (1) and obtain unbiased estimates by repeating the reconstruction procedure after replacing the kernel $e_h$ with its square $e_h^2$:

$$\widehat{\mathrm{Var}}(\hat\lambda_i) = \sum_{\theta}\sum_{d} e_h^2(x_{i1}\cos\theta + x_{i2}\sin\theta - d)\, y_{\theta d}. \tag{8}$$

This was suggested by Alpert et al. [1]. In practice, (1) is implemented via discrete convolution through Fast Fourier Transforms. Interpolation steps are required during backprojection. Studies show that ignoring these over-approximates variances and can be corrected with negligible added computational effort ([9],[10]).
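The squared-kernel idea behind (8) can be illustrated in a simplified one-dimensional setting. The sketch below is not filtered backprojection: it assumes independent Poisson counts, a linear estimate obtained by circular convolution with a Gaussian kernel, and periodic boundaries. The function names, the kernel and the Poisson means are all hypothetical choices made for illustration. Because the estimate is a weighted sum of independent Poisson counts, re-running the same filter with the squared kernel gives an unbiased estimate of its variance.

```python
import numpy as np


def gaussian_kernel(n, fwhm):
    """Gaussian smoothing kernel of the given FWHM, centred at index n // 2."""
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    x = np.arange(n) - n // 2
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def circular_filter(kernel, y):
    """Circular convolution of y with a kernel centred at index n // 2."""
    k0 = np.fft.ifftshift(kernel)  # move the kernel centre to index 0
    return np.real(np.fft.ifft(np.fft.fft(k0) * np.fft.fft(y)))


def reconstruct_with_variance(kernel, counts):
    """Return the linear estimate and its squared-kernel variance estimate."""
    estimate = circular_filter(kernel, counts)
    # Same filtering step, with the kernel replaced by its square.
    variance = circular_filter(kernel ** 2, counts)
    return estimate, variance


rng = np.random.default_rng(0)
n = 64
# Hypothetical Poisson means, chosen only for illustration.
expected = 50.0 + 30.0 * np.sin(2.0 * np.pi * np.arange(n) / n)
kernel = gaussian_kernel(n, fwhm=6.0)
counts = rng.poisson(expected)
estimate, variance = reconstruct_with_variance(kernel, counts)
```

The variance estimate costs one extra FFT-based filtering pass, which is the property that makes the approach attractive for reconstruction-type procedures.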

To approximate correlations, notice that if the variances of $y$ are assumed uniform, (7) reduces to,

$$\Sigma = \sigma^2 S_h(\mathcal{K}'\mathcal{K})^{-1}S_h, \tag{9}$$

where $\sigma^2$ is the assumed common variance of the observables. The approximately Toeplitz/Fourier form of $\mathcal{K}'\mathcal{K}$ (and hence, of $S_h(\mathcal{K}'\mathcal{K})^{-1}S_h$) means that the correlation between the $i$'th and $j$'th reconstructed pixel values is,

$$\mathrm{Corr}(\hat\lambda_i, \hat\lambda_j) = \rho_{|i-j|}, \tag{10}$$

where $\rho = (\rho_0 = 1, \rho_1, \ldots)$ is the first row of $S_h(\mathcal{K}'\mathcal{K})^{-1}S_h$, scaled to have unit leading element. (This correlation structure is equivalent to that developed by Carson et al. [3] and may also be regarded as a quick and ready implementation of their approach.) Writing $\hat\sigma = (\hat\sigma_1, \hat\sigma_2, \ldots)$ for the estimated standard deviations of the reconstructed pixel values and $R$ for the correlation matrix with elements given by (10), the estimated dispersion matrix is,

$$\hat\Sigma = \mathrm{diag}(\hat\sigma)\, R\, \mathrm{diag}(\hat\sigma). \tag{11}$$

The approximately Toeplitz form of the correlation matrix also means that discrete convolution via Fast Fourier Transforms may be used in simulating from the multi-Gaussian distribution.
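Simulation from a Gaussian distribution with a spatially invariant correlation structure can be sketched with FFTs as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Toeplitz correlation matrix is replaced by a circulant one (periodic boundaries), and the correlation row is derived from a smoothing kernel alone. The function names and the kernel are hypothetical.

```python
import numpy as np


def correlation_from_kernel(kernel):
    """First row of the circulant correlation matrix induced by a smoothing kernel."""
    eig = np.abs(np.fft.fft(kernel)) ** 2   # eigenvalues of S S', non-negative
    cov_row = np.real(np.fft.ifft(eig))     # first row of S S'
    return cov_row / cov_row[0]             # scale so that rho[0] == 1


def apply_sqrt(rho, w):
    """Multiply w by the symmetric square root of the circulant matrix with first row rho."""
    eig = np.clip(np.real(np.fft.fft(rho)), 0.0, None)  # clip rounding noise
    return np.real(np.fft.ifft(np.sqrt(eig) * np.fft.fft(w)))


def simulate_field(mean, sd, rho, rng):
    """One draw from N(mean, diag(sd) R diag(sd)), with R circulant with first row rho."""
    white = rng.standard_normal(mean.shape[0])
    return mean + sd * apply_sqrt(rho, white)


rng = np.random.default_rng(1)
n = 32
# Hypothetical Gaussian smoothing kernel centred at n // 2.
kernel = np.exp(-0.5 * ((np.arange(n) - n // 2) / 2.0) ** 2)
rho = correlation_from_kernel(kernel)
sample = simulate_field(np.zeros(n), np.ones(n), rho, rng)
```

Each draw costs two FFTs of length n, so no matrix square root or Cholesky factor of the full dispersion matrix is ever formed.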

C. Synthetic Bootstrap for Estimating Variances

Since the reconstructed PET images are independent over

time, the distribution of a time-course PET sequence can be

approximated by a Gaussian random field. This suggests

development of a practical variance estimation strategy via

resampling in the imaging domain. The exact implementation

is as follows:

1. Obtain time-course reconstructed images of radio-tracer

uptake from the PET study. Also, obtain the variance

estimates of the reconstructed pixel values for each

of these scans and the approximate spatially invariant

Fourier correlation structure. From this reconstructed

PET sequence, obtain a functional image of the estimated

pixel-wise tissue biologic parameters.

2. Simulate from a Gaussian random field with mean

estimated by the above reconstructed time-course

sequence. The spatially invariant correlation structure

means that Fourier methods can be used in the simulation

of correlated multivariate Gaussian realizations. From

each simulated PET sequence, obtain pixel-wise

simulated images of the desired biologic parameters.

3. Estimate variability of the estimated functional image

from this synthetic bootstrap sample.

The suggested variance estimation strategy is practical because

it resamples in the imaging domain and thus eliminates the

cumulative computational burden of the many reconstruction

steps that would be needed in an extension of the resampling

techniques in Haynor and Woods [7].
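The three steps above can be sketched in a one-dimensional setting as follows. This is an illustration under assumptions rather than the authors' code: the correlation is taken to be circulant, the extraction step is passed in as a user-supplied function, and the `time_average` function used here is only a placeholder standing in for mixture analysis. All names and numerical values are hypothetical.

```python
import numpy as np


def simulate_scan(mean, sd, rho, rng):
    """One draw from N(mean, diag(sd) R diag(sd)); R is circulant with first row rho."""
    eig = np.clip(np.real(np.fft.fft(rho)), 0.0, None)
    white = rng.standard_normal(mean.shape[0])
    correlated = np.real(np.fft.ifft(np.sqrt(eig) * np.fft.fft(white)))
    return mean + sd * correlated


def synthetic_bootstrap_sd(mean_seq, sd_seq, rho, extract, n_boot, rng):
    """Pixel-wise bootstrap standard deviations of extract(sequence).

    mean_seq, sd_seq: arrays of shape (T, n) holding the reconstructed scans
    and their estimated pixel standard deviations at each of T time-points.
    extract: function mapping a (T, n) sequence to n pixel-wise parameter values.
    """
    replicates = []
    for _ in range(n_boot):
        # Step 2: scans are simulated independently over time.
        seq = np.stack([simulate_scan(m, s, rho, rng)
                        for m, s in zip(mean_seq, sd_seq)])
        replicates.append(extract(seq))
    # Step 3: variability across the synthetic bootstrap sample.
    return np.std(np.array(replicates), axis=0, ddof=1)


def time_average(seq):
    """Placeholder extraction step (NOT mixture analysis): average over time."""
    return seq.mean(axis=0)


rng = np.random.default_rng(2)
T, n = 5, 16
mean_seq = np.full((T, n), 10.0)   # hypothetical reconstructed sequence
sd_seq = np.full((T, n), 2.0)      # hypothetical pixel standard deviations
kernel = np.exp(-0.5 * ((np.arange(n) - n // 2) / 1.5) ** 2)
rho = np.real(np.fft.ifft(np.abs(np.fft.fft(kernel)) ** 2))
rho /= rho[0]                      # circulant correlation row with rho[0] == 1
boot_sd = synthetic_bootstrap_sd(mean_seq, sd_seq, rho, time_average, 2000, rng)
```

No reconstruction is performed inside the loop; each replicate needs only FFT-based simulation and one call to the extraction step, which is where the computational saving comes from.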

III. EXPERIMENTS

A. One-dimensional Convolution Model

Experiments were conducted to assess the performance of the suggested approach in estimating the pixel-wise variances of the $\hat\theta_i$'s. Since it was not possible to estimate the true variances in a two-dimensional PET setup under existing computer resources, evaluations were done in a simplified one-dimensional deconvolution setting [13] with characteristics matching PET reconstruction. A 6-component mixture model was specified. The source distribution $\lambda$ (Figure 1) was specified using (3) with mixing proportions ($\pi_{ik}$'s) that were blurred step functions [4].

Fig. 1 Perspective plot of the source distribution $\lambda$ used in the experiments. (Axes: pixel number, time bin number, activity.)

Time activity curves over 60 time-points were reconstructed at 216 bins (pixels) from realizations of an inhomogeneous Poisson process in the observation domain [4]. The reconstructions were smoothed by a Gaussian kernel with bandwidth preset to correspond to smoothing parameters that are reasonable for the given total expected number of emissions. Since, as explained earlier, most of the variability is in the estimation of the mixing proportions, the component sub-time activity curves $\mu_k$'s (and hence the $\theta^{(k)}$'s) were assumed known. The relationship between the $\mu_k$'s and the $\theta^{(k)}$'s was specified by the equation

$$\mu_k(t) = \theta^{(k)} e^{-h_k t}, \quad k = 1, 2, \ldots, K. \tag{12}$$

This implies that $\mu_k(0) = \theta^{(k)}$. This is called the “amplitude parameter”. $h_k$ is another functional parameter (the “half-life”) but this parameter was not of interest in this experiment.

The target functional parameter was defined using the $\pi_{ik}$'s and the $\theta^{(k)}$'s in (4). The $\pi_{ik}$'s were estimated from $\hat\lambda$ and used to obtain the $\hat\theta_i$'s. Figure 2 is a plot of the functional parameter (the “amplitude”) along with a sample estimate obtained using the mixture analysis approach.

Fig. 2 True amplitude (broken line) and a sample estimate (bold line) obtained using mixture analysis. (Axes: pixel number, amplitude.)

1000 simulated reconstructions of the TAC were obtained by simulating the observed process, and $\hat\theta_i$'s were extracted from each $\hat\lambda$. Sample pixel-wise standard deviations of these $\hat\theta_i$'s are assumed to be the truth in our performance evaluations. Realizations were simulated from the approximate multi-Gaussian model for the estimated TACs $\hat\lambda$. Bootstrap samples of $\hat\theta_i$'s were obtained as outlined in Section II-C and standard deviations calculated. The experiment was done with bootstrap sample sizes of 10 and 30 and replicated 500 times in order to study the distributional properties of these bootstrapped standard deviations.

The above experiments were performed for low (1.02), medium (2.05) and high (4.10) expected total counts, $\sum_{i,t}\lambda_i(t)$, each expressed in the power-of-ten units of Table 1. Corresponding bandwidths for the smoothing kernel were set at 9.7, 8.4 and 7.8 pixels. (Different expected total counts can be interpreted as different dosage levels of the radiotracer.)
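The mixture-analysis step used in these experiments (estimating a pixel's mixing proportions with the sub-TACs known, then composing the pixel parameter as in (4)) can be sketched as below. This is a hedged illustration, not the algorithm of [12]: it uses unweighted least squares solved by projected gradient descent with a standard sort-based projection onto the simplex, which is a stand-in for the quadratic (weighted) least-squares algorithm mentioned earlier. The sub-TACs, tissue parameters and function names are hypothetical.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {p : p >= 0, sum(p) = 1}."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    k = np.arange(1, v.size + 1)
    keep = u - css / k > 0
    tau = css[keep][-1] / k[keep][-1]
    return np.maximum(v - tau, 0.0)


def fit_mixing_proportions(tac, sub_tacs, n_iter=500):
    """Least-squares fit of tac ~ pi @ sub_tacs with pi constrained to the simplex.

    tac: (T,) pixel time-activity curve; sub_tacs: (K, T) known sub-TACs.
    Uses projected gradient descent (an assumption made for this sketch).
    """
    gram = sub_tacs @ sub_tacs.T
    step = 1.0 / np.linalg.eigvalsh(gram)[-1]   # 1 / largest eigenvalue
    pi = np.full(sub_tacs.shape[0], 1.0 / sub_tacs.shape[0])
    for _ in range(n_iter):
        grad = gram @ pi - sub_tacs @ tac
        pi = project_to_simplex(pi - step * grad)
    return pi


def pixel_parameter(pi, tissue_params):
    """Pixel parameter as the pi-weighted composition of tissue parameters, as in (4)."""
    return float(pi @ tissue_params)


# Hypothetical, well-conditioned sub-TACs (K = 3 tissues, T = 4 time-points).
sub_tacs = np.array([[1.0, 0.0, 0.0, 1.0],
                     [0.0, 1.0, 0.0, 1.0],
                     [0.0, 0.0, 1.0, 1.0]])
tissue_params = np.array([2.0, 5.0, 9.0])   # hypothetical tissue parameters
true_pi = np.array([0.2, 0.5, 0.3])
tac = true_pi @ sub_tacs                     # noise-free pixel TAC
pi_hat = fit_mixing_proportions(tac, sub_tacs)
theta_hat = pixel_parameter(pi_hat, tissue_params)
```

With realistic sub-TACs the least-squares problem can be poorly conditioned, so many more iterations or a dedicated quadratic-programming solver may be needed; the fixed iteration count here suits only the toy example.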

•

•• •

•

•

•••

••

••

•

0

•

•

•

•••• •

•

•••

•

••

•

••••• •

• ••

•

••••••

•• •

•

•

••

••

• •

•

••

•

• •

•

• ••

•

• •

• • • ••

•

•• •

•

•• •

•

••

••

50

100

pixels

•

••• ••

• ••

•

••

••

•

•• •

•

• • • ••

••

•

••

••

• ••• ••

• • •• •• •• • •

•

• •

•

•

•

•

•

•

•

• • ••

••

•• •

•

••

•

• •••• •

••

••

•

•

150

200

Fig. 3 True standard deviation (broken line) and its unsmoothed

(points), and smoothed (bold line) bootstrap estimates (10 bootstrap

replications).

absolute biases are not altered appreciably as a result of the

smoothing; however, the variability measures are considerably

improved. It is observed that the bias and error rates do not

differ appreciably for different total expected counts. However,

as expected, the error rates decrease with increasing bootstrap

sample size.

IV. R ESULTS

V. D ISCUSSION

The percent relative absolute bias, averaged over pixels

was about 4-5% for all count rates and bootstrap sample sizes.

Figure 3 shows a set of pixel-wise bootstrapped standard

deviation estimates (points). Here =10. The estimates were

post-processed by smoothing with the variable-span smoother

of Friedman [6] which uses a local cross-validation scheme

to obtain the smoothing parameter. The smoothed estimate

(Figure 3, bold line) is shown to give a better fit. Variability of

the estimates was measured by the average, over pixels, of the

mean percent absolute error in estimating standard deviation.

Table 1 summarizes the bias and the variability measures of the

estimated bootstrap standard deviations. The percent relative

This paper suggests a practical approach towards variability

assessment in functional images. Preliminary results reported

here are very encouraging. In our evaluations, we have

concentrated on the problem of estimating pixel-wise variances

of functional images obtained using the mixture analysis

approach; the technique can be easily extended to assess other

variability measures like correlations. Also, the method can be

applied to functional imaging techniques other than mixture

analysis.

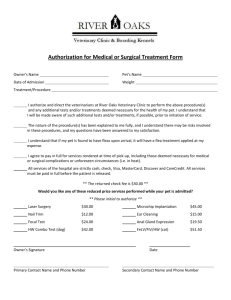

Table 1

Bias and variability measures for smoothed bootstrap standard

deviation estimates over different total expected counts and bootstrap

sample sizes. The bias measure is the percent relative bias averaged

over pixels and the variability measure is the mean relative percent

absolute error in estimating standard deviations averaged over pixels.

Corresponding measures for unsmoothed estimates are in parenthesis.

Counts

( G y$ )

1.02

Bias

10 rep

30 rep

4.6 (5.6) 4.8 (3.7)

Variability

10 rep

30 rep

17.3 (27.7) 14.0 (21.6)

2.05

5.1 (3.8)

5.1 (5.4)

16.1 (27.6)

12.9 (21.3)

4.10

5.1 (5.3)

4.9 (3.7)

15.5 (27.3)

12.3 (21.0)

A number of issues remain to be addressed. In smoothing

our synthetic bootstrap standard deviation estimates, the

variable-span fitting algorithm of Friedman [6] was used to

obtain the resolution size (FWHM) of the smoothing filter.

This strategy chooses the smoothing parameter adaptively

by local cross-validation. As a result, the estimates may

be under-smoothed in the presence of positively correlated

variates. Hence, the obtained error rates may potentially

be decreased by a more sophisticated choice of smoothing

parameter. Another question of interest is determining the

number of bootstrap samples. We also need to evaluate

this scheme in the context of two-dimensional PET images.

While it does not seem possible to do a complete evaluation

given existing computational resources, it may be possible to

evaluate the performance in a limited frame-work. Hence,

while this seems a promising new technique towards variability

estimation in functional images, a number of issues remain to

be investigated.

VI. REFERENCES

[1] Alpert, N. M., Chesler, D. A., Correia, J. A., Ackerman, R. H.,

Chang, J. H., Finklestein, S., Davis, S. M., Brownell, G. L.,

and Taveras, J. M., “Estimation of the local statistical noise in

emission computed tomography”, IEEE Trans. Med. Imag., MI-1:142-146, 1982.

[2] Blomqvist, G., Eriksson L., Rosenqvist G., “The effect of

spatial correlation on the quantification in Positron Emission

Tomography” Neuroimage, 2:2, 1995.

[3] Carson, R. E., Yan, Y., Daube-Witherspoon, M. E., Freedman,

N., Bacharach, S. L. and Herscovitch, P., “An Approximation

Formula for the Variance of PET Region-of-Interest Values”,

IEEE Trans. Med. Imag., 12:240-50, 1993.

[4] Choudhury, K. R. and O’Sullivan, F., “A Statistical Examination

of FBP and ML for Estimating Mixture Models from Dynamic

PET Data”, 1995 IEEE Nucl. Sci. Symp. and Med. Imag. Conf.

Record, 3:1237-41, 1995.

[5] Efron, B., “The Jackknife, the Bootstrap and Other Resampling

Plans”, SIAM, 1982.

[6] Friedman, J. H., “A variable span smoother”, Tech. Rep. No. 5,

Lab. for Comp. Stat., Dept. of Stat., Stanford Univ., Stanford, CA,

1984.

[7] Haynor, D. R. and Woods, S. D., “Resampling Estimates of

Precision in Emission Tomography”, IEEE Trans. Med. Imag.,

8:337-43, 1989.

[8] Huesman, R. H., “A new fast algorithm for the evaluation

of regions of interest and statistical uncertainty in computed

tomography”, Phys. Med. Biol., 29:543-52, 1984.

[9] Maitra, R. and O’Sullivan, F., “Estimating the Variability of

Reconstructed PET Data : A Technique Based on Approximating

the Reconstruction Filter by a sum of Gaussian kernels”, 1995

IEEE Nucl. Sci. Symp. and Med. Imag. Conf. Record, 3:1411-14,

1995.

[10] Maitra, R., Variability Estimation in Linear Inverse Problems.

Ph. D. dissertation, Department of Statistics, University of

Washington, 1996.

[11] Maitra, R., “Estimating Precision in Functional Images”, J.

Comp. and Graph. Stats., to appear, 1997.

[12] O’Sullivan, F., “Imaging radiotracer model parameters in PET :

A mixture analysis approach”, IEEE Trans. Med. Imag., 12:399-412, 1993.

[13] O’Sullivan, F., Pawitan, Y. and Haynor, D., “Reducing Negativity

Artifacts in Emission Tomography: Post-Processing Filtered

Backprojection Solutions”, IEEE Trans. Med. Imag., 12:653-663,

1993.

[14] O’Sullivan, F., “Metabolic images from dynamic Positron

Emission Tomography studies”, Stat. Meth. in Med. Res., 3:87-101, 1994.

[15] Phelps, M. E., Huang, S. C., Hoffman, E. J., Selin, C., Sokoloff,

L., and Kuhl, D. E., “Tomographic measurement of local cerebral

glucose metabolic rate in humans with [F-18]2-Fluoro-2-deoxy-D-glucose: validation of method”, Ann. Neurol. 6:371-388, 1979.