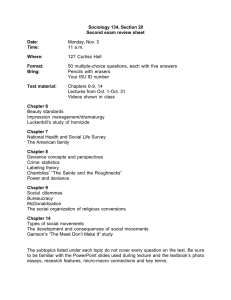


STAT 557

Solutions to Assignment 1

Fall 2002

1. (a) Adults in the State of Iowa with telephones

(b) An adult from the population

(c) a binary (yes, no) response

(d) nominal

(e) Since the sample size ($n = 825$) is fairly large and the point estimate of $p$ is not too extreme ($n\hat{p} > 5$ and $n(1-\hat{p}) > 5$), the normal approximation would be reasonable if the random digit dialing approximately produces a simple random sample from the population of adults with telephones in Iowa. This should be the case if they talk to only one adult on each call. Then, with $\hat{p} = 0.4630$, an approximate 95% confidence interval is $(0.429, 0.497)$. If they talk to every adult present in the household on each call, however, the design would be closer to a one-stage cluster sample than a simple random sample, and the number of "yes" responses may not have a binomial distribution. In that case, using the binomial variance formula in the construction of a confidence interval would not be appropriate.
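The interval in part (e) is $\hat{p} \pm 1.96\sqrt{\hat{p}(1-\hat{p})/n}$. The R sketch below reproduces it; `wald.ci` is a hypothetical helper name, and the calculation assumes the count is binomial (approximately simple random sampling, one adult per call).

```r
# Hypothetical helper: Wald (normal-approximation) CI for a binomial proportion.
# Assumes a binomial count, i.e. approximately simple random sampling.
wald.ci <- function(phat, n, level = 0.95) {
  z  <- qnorm(1 - (1 - level) / 2)   # 1.959964 for a 95% interval
  se <- sqrt(phat * (1 - phat) / n)  # estimated standard error of phat
  c(lower = phat - z * se, upper = phat + z * se)
}

phat <- 0.4630
n    <- 825
c(n * phat, n * (1 - phat))          # both counts are well above 5
round(wald.ci(phat, n), 3)           # lower 0.429, upper 0.497
```

Under the one-stage cluster design described above, the same function would still run but its standard error would no longer be valid.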

2. (a) Patients in the national registry of records for individuals who have been diagnosed as suffering from a certain liver disease

(b) a patient from the population

(c) disease severity measured on a five-point scale

(d) ordinal

(e) Since the sample size is fairly large and the point estimate of $p$ is not too extreme ($n\hat{p} > 5$ and $n(1-\hat{p}) > 5$), the normal approximation would be reasonable. With $\hat{p} = 0.171$, the approximate 95% confidence interval is $(0.124, 0.218)$.

3. (a) Iowa State students

(b) A student and his or her parents

(c) There are three potentially correlated binary response variables corresponding to right- or left-handedness for a student and his or her two parents. For each student/parent trio you could think of this as a multinomial response variable, where each trio would belong to exactly one of the 8 categories, if information on both parents is available.

(d) nominal

(e) The Pearson chi-square test can be used to compare proportions obtained from independent binomial

random variables. Since the binomial variables in this problem are correlated, the use of the Pearson

chi-square test statistic is not reasonable.
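Part (c) treats each student/parent trio as a single multinomial observation with $2^3 = 8$ categories. A small R sketch that lists those categories; the labels `student`, `parent1`, `parent2`, `Right`, and `Left` are illustrative assumptions, not names taken from the assignment.

```r
# Illustrative labels (assumed): each person is right- or left-handed.
hand <- c("Right", "Left")

# Every combination of handedness for a student and his or her two parents.
trio <- expand.grid(student = hand, parent1 = hand, parent2 = hand)

nrow(trio)   # 2^3 = 8 multinomial categories
trio
```

Counting trios in these 8 cells keeps the three handedness responses of a family together, rather than treating them as three independent binomial observations.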

4. (a) Schools in Iowa with sixth grade classes

(b) This is a two-stage sampling scheme where schools are sampled from the population of schools in Iowa with sixth-grade students. Then one class is randomly selected from the sixth-grade classes in each of the selected schools. An entire sixth-grade class is the unit of response. Since students in a class are influenced by the same teacher and they largely share the same educational background, two students from the same class will tend to respond in a more similar way than two students from different schools. To use sixth-grade students as the units of response, correlations among responses from classmates would have to be taken into account. This could be difficult because it would require a model that would allow for different types or strengths of relationships between different pairs of classmates.

(c) For each of the 25 animals there are two response variables (each with five categories) corresponding to attitudes toward the animal before and after the visit to the wildlife center.

(d) ordinal

(e) The original data were used to create a binary response for each student (did their attitude toward snakes become more positive?). As mentioned in part (b), students' responses may not be independent, and the total number of positive responses may not have a binomial distribution. Thus, $\hat{p}(1-\hat{p})/n$ may underestimate the variance of the sample proportion, and $\hat{p} \pm 1.96\sqrt{\hat{p}(1-\hat{p})/n}$ may produce intervals that are too short to provide 95% confidence.
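To illustrate the point in part (e), here is a small simulation sketch. Every setting is an assumption made only for illustration: 25 classes of 25 students, with each class having its own probability of a more positive attitude drawn from a Beta(2, 2) distribution (an intraclass correlation of $1/(2+2+1) = 0.2$ among classmates). The binomial-variance interval ignores this clustering, so it covers the true overall proportion far less often than 95% of the time.

```r
# Simulation sketch; all settings below are illustrative assumptions.
set.seed(557)
n.class <- 25                       # classes sampled
n.stud  <- 25                       # students per class
n.sim   <- 2000                     # simulated studies
true.p  <- 0.5                      # mean of Beta(2, 2), the overall proportion
n       <- n.class * n.stud         # total students per study

covered <- logical(n.sim)
for (s in seq_len(n.sim)) {
  p.class <- rbeta(n.class, 2, 2)                     # one probability per class
  y <- rbinom(n.class, size = n.stud, prob = p.class) # positives in each class
  phat <- sum(y) / n
  half <- 1.96 * sqrt(phat * (1 - phat) / n)          # binomial-variance half-width
  covered[s] <- abs(phat - true.p) <= half
}
mean(covered)   # empirical coverage, well below the nominal 0.95
```

Setting the class probabilities all equal to `true.p` removes the clustering, and the coverage then returns to roughly 95%.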

5. Let $\pi_1$ and $\pi_2$ be the survival rates of the standard treatment and the new treatment, respectively. Then the testing problem is $H_0: \pi_1 = \pi_2$ versus $H_A: \pi_1 < \pi_2$. The alternative is one-sided because the objective of the study is to demonstrate that the new treatment is more effective. Here, $\alpha = 0.05$, $\beta = 0.20$, $z_\alpha = 1.64485$, $z_\beta = 0.84162$, $\pi_1 = 0.55$, and $\pi_2 = 0.75$.

$$\bar{p} = (\pi_1 + \pi_2)/2 = 0.65$$

$$r = \sqrt{\frac{2\bar{p}(1-\bar{p})}{\pi_1(1-\pi_1) + \pi_2(1-\pi_2)}} = 1.02273$$

$$n = \frac{(z_\beta + z_\alpha r)^2\,[\pi_1(1-\pi_1) + \pi_2(1-\pi_2)]}{(\pi_1 - \pi_2)^2} = 69.27$$

Rounding up, 70 subjects are needed in each treatment group.
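As a cross-check, `power.prop.test()` from R's `stats` package solves the same one-sided sample-size problem numerically; with its default `strict = FALSE`, its power expression uses the same pooled-variance form as the formula above, so it should return about 69.27 per group. The helper `power.at` is a hypothetical name; it inverts the formula to show that 69 subjects per group falls just short of 80% power while 70 reaches it.

```r
# Cross-check with stats::power.prop.test (one-sided test, 80% power).
res <- power.prop.test(p1 = 0.55, p2 = 0.75, sig.level = 0.05,
                       power = 0.80, alternative = "one.sided")
res$n                                   # about 69.27, so 70 per group

# Hypothetical helper: power of the one-sided test with n subjects per group,
# obtained by solving the sample-size formula above for the power.
power.at <- function(n, pi1, pi2, alpha) {
  pbar <- (pi1 + pi2) / 2
  num  <- sqrt(n) * abs(pi1 - pi2) - qnorm(1 - alpha) * sqrt(2 * pbar * (1 - pbar))
  pnorm(num / sqrt(pi1 * (1 - pi1) + pi2 * (1 - pi2)))
}
power.at(c(69, 70), 0.55, 0.75, 0.05)   # just below, then just above 0.80
```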

6. (a) Note that $\hat{p} = 4/933 = 0.004287$ and $n\hat{p} = 4 < 5$. Thus a normal approximation would not be reasonable. The exact confidence interval is computed as (0.001169, 0.010940) using the formulas on pages 73-76 of the lecture notes.

(b) As in part (a), the normal approximation would not be reasonable. The exact confidence interval is (0.0000585, 0.0017427).
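The F-distribution formulas in the R function `binci.f` are algebraically equivalent to the Clopper-Pearson beta-quantile form, $p_L = B^{-1}(\alpha/2;\, x,\, n-x+1)$ and $p_U = B^{-1}(1-\alpha/2;\, x+1,\, n-x)$. A minimal R sketch of that form; `cp.ci` is a hypothetical helper name, and base R's `binom.test()` reports the same exact interval, so the two can be compared.

```r
# Hypothetical helper: Clopper-Pearson "exact" interval via beta quantiles.
cp.ci <- function(x, n, level = 0.95) {
  a <- 1 - level
  lower <- if (x == 0) 0 else qbeta(a / 2, x, n - x + 1)
  upper <- if (x == n) 1 else qbeta(1 - a / 2, x + 1, n - x)
  c(lower = lower, upper = upper)
}

cp.ci(4, 933)                  # part (a)
cp.ci(2, 4143)                 # part (b)
binom.test(4, 933)$conf.int    # built-in exact interval, for comparison
```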

7. (a) The table of counts would have a multinomial distribution if simple random sampling with replacement was used. Since the population is large, a multinomial distribution would provide a good approximation to the distribution of the counts if simple random sampling without replacement was used. The log-likelihood function is

$$\ell(\boldsymbol{\pi}; \mathbf{Y}) = \log(n!) - \sum_{i=1}^{2}\sum_{j=1}^{2}\log(Y_{ij}!) + \sum_{i=1}^{2}\sum_{j=1}^{2} Y_{ij}\log(\pi_{ij})$$
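As a numerical check on this log-likelihood, the formula can be evaluated directly and compared with `dmultinom(..., log = TRUE)` from base R, which returns the same multinomial log-probability. The sketch evaluates both at the unrestricted estimates $\hat{\pi}_{ij} = Y_{ij}/n$, an illustrative choice of $\boldsymbol{\pi}$; `loglik.mult` is a hypothetical helper name.

```r
Y <- matrix(c(784, 236, 311, 66), 2, 2, byrow = TRUE)

# Hypothetical helper: the multinomial log-likelihood from part (a).
loglik.mult <- function(pi, Y) {
  n <- sum(Y)
  lfactorial(n) - sum(lfactorial(Y)) + sum(Y * log(pi))
}

pi.hat <- Y / sum(Y)   # unrestricted MLE of the cell probabilities
loglik.mult(pi.hat, Y)
dmultinom(as.vector(Y), prob = as.vector(pi.hat), log = TRUE)   # same value
```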

(b) Under the independence model, $\pi_{ij} = \pi_{i+}\pi_{+j}$. Substituting this into the log-likelihood function shown above, we have

$$\ell(\boldsymbol{\pi}; \mathbf{Y}) = \log(n!) - \sum_{i=1}^{2}\sum_{j=1}^{2}\log(Y_{ij}!) + \sum_{i=1}^{2} Y_{i+}\log(\pi_{i+}) + \sum_{j=1}^{2} Y_{+j}\log(\pi_{+j})$$

Maximizing this log-likelihood subject to the constraints $\pi_{1+} + \pi_{2+} = 1$ and $\pi_{+1} + \pi_{+2} = 1$ gives the maximum likelihood estimates of the expected counts:

$$\hat{m}^{B}_{ij} = \frac{Y_{i+}Y_{+j}}{Y_{++}}, \qquad i = 1, 2 \text{ and } j = 1, 2,$$

and

$$(\hat{m}^{B}_{11}, \hat{m}^{B}_{12}, \hat{m}^{B}_{21}, \hat{m}^{B}_{22}) = (799.5, 220.5, 295.5, 81.5).$$

(c) Under the general alternative, the MLEs of the expected counts are the same as the observed counts; i.e.,

$$(\hat{m}^{C}_{11}, \hat{m}^{C}_{12}, \hat{m}^{C}_{21}, \hat{m}^{C}_{22}) = (784, 236, 311, 66).$$

(d)

$$G^2 = 2\sum_{i=1}^{2}\sum_{j=1}^{2} Y_{ij}\log\left(\frac{Y_{ij}}{\hat{m}^{B}_{ij}}\right) = 5.321$$

on 1 d.f. with p-value = .021, and

$$X^2 = \sum_{i=1}^{2}\sum_{j=1}^{2}\frac{(Y_{ij} - \hat{m}^{B}_{ij})^2}{\hat{m}^{B}_{ij}} = 5.15$$

on 1 d.f. with p-value = .023.

The data suggest that opinions on gun registration are not held independently of opinions on the death penalty. In particular, people who oppose the death penalty are more likely to favor gun registration than people who favor the death penalty.
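The interpretation at the end of part (d) can be read off the column proportions of the observed table, that is, the share favoring gun registration within each death-penalty group. A short sketch using base R's `prop.table()`:

```r
Y <- matrix(c(784, 236, 311, 66), 2, 2, byrow = TRUE,
            dimnames = list(c("GR.Favor", "GR.Oppose"), c("DP.Favor", "DP.Oppose")))

# Proportions favoring / opposing gun registration within each death-penalty column
col.prop <- prop.table(Y, margin = 2)
round(col.prop, 3)
# GR.Favor row: about 0.716 among DP.Favor and 0.781 among DP.Oppose
```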

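(e) The log-likelihood function is

$$\begin{aligned}
\ell(\theta; \mathbf{Y}) &= \log(n!) - \sum_{i=1}^{2}\sum_{j=1}^{2}\log(Y_{ij}!) + \sum_{i=1}^{2}\sum_{j=1}^{2} Y_{ij}\log(\pi_{ij}) \\
&= \log(n!) - \sum_{i=1}^{2}\sum_{j=1}^{2}\log(Y_{ij}!) + Y_{11}\log(\theta^2) + (Y_{12} + Y_{21})\log(\theta(1-\theta)) + Y_{22}\log((1-\theta)^2) \\
&= \log(n!) - \sum_{i=1}^{2}\sum_{j=1}^{2}\log(Y_{ij}!) + (2Y_{11} + Y_{12} + Y_{21})\log(\theta) + (Y_{12} + Y_{21} + 2Y_{22})\log(1-\theta)
\end{aligned}$$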

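(f) Solve the likelihood equation

$$0 = \frac{\partial \ell(\theta; \mathbf{Y})}{\partial \theta} = \frac{2Y_{11} + Y_{12} + Y_{21}}{\theta} - \frac{Y_{12} + Y_{21} + 2Y_{22}}{1-\theta}.$$

The maximum likelihood estimate is

$$\hat{\theta} = \frac{2Y_{11} + Y_{12} + Y_{21}}{2Y_{++}} = \frac{Y_{1+} + Y_{+1}}{2Y_{++}} = 0.757.$$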
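A quick numerical check on part (f): maximizing the part (e) log-likelihood with R's one-dimensional optimizer `optimize()` should land on the closed-form $\hat{\theta}$. The terms $\log(n!) - \sum\sum\log(Y_{ij}!)$ do not involve $\theta$, so they are dropped; `loglik.A` is a hypothetical helper name.

```r
Y <- matrix(c(784, 236, 311, 66), 2, 2, byrow = TRUE)

# Kernel of the Model A log-likelihood (terms free of theta omitted).
loglik.A <- function(theta) {
  (2 * Y[1, 1] + Y[1, 2] + Y[2, 1]) * log(theta) +
    (Y[1, 2] + Y[2, 1] + 2 * Y[2, 2]) * log(1 - theta)
}

theta.closed <- (2 * Y[1, 1] + Y[1, 2] + Y[2, 1]) / (2 * sum(Y))
theta.num <- optimize(loglik.A, interval = c(0.001, 0.999), maximum = TRUE)$maximum
c(closed.form = theta.closed, numerical = theta.num)   # both about 0.757
```

The numerical answer agrees only up to `optimize()`'s default tolerance (about 1e-4), which is why the closed form is preferred when it exists.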

(g) Compute MLEs for the expected counts:

$$\hat{m}^{A}_{11} = Y_{++}\hat{\theta}^2 = 800.5055$$

$$\hat{m}^{A}_{12} = \hat{m}^{A}_{21} = Y_{++}\hat{\theta}(1-\hat{\theta}) = 256.9945$$

$$\hat{m}^{A}_{22} = Y_{++}(1-\hat{\theta})^2 = 82.5055$$

(h) The deviance statistic is

$$G^2 = 2\sum_{i=1}^{2}\sum_{j=1}^{2} Y_{ij}\log\left(\frac{Y_{ij}}{\hat{m}^{A}_{ij}}\right) = 16.282$$

with 2 d.f. and p-value = .0003. It is not surprising that the data do not support this model, because the independence model was rejected in part (d) and this model is a restricted form of the independence model.

(i) An analysis of deviance table:

| Comparison | d.f. | Deviance | p-value |
|---|---|---|---|
| Model A vs. Model B | 1 | 10.961 | .0009 |
| Model B vs. Model C | 1 | 5.321 | .021 |
| Model A vs. Model C | 2 | 16.282 | .0003 |

Note that the deviance statistic for "Model A vs. Model B" is given by

$$G^2 = 2\sum_{i=1}^{2}\sum_{j=1}^{2} Y_{ij}\log\left(\frac{\hat{m}^{B}_{ij}}{\hat{m}^{A}_{ij}}\right) = 10.961,$$

which is also the difference $16.282 - 5.321$ between the other two deviances. Although Model B is a significant improvement over Model A, neither Model A nor Model B is appropriate in this case.

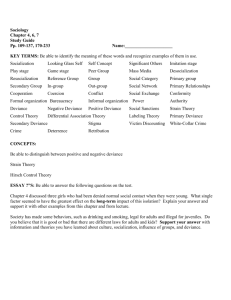

```r
> #=========================================================================#
> #                               Problem 5                                 #
> #=========================================================================#
> pi1 <- .55
> pi2 <- .75
>
> alpha <- .05
> beta <- .20
> z.alpha <- qnorm( 1 - alpha )
> z.beta <- qnorm( 1 - beta )
>
> p <- (pi1+pi2)/2
> r <- sqrt( 2*p*(1-p)/(pi1*(1-pi1)+pi2*(1-pi2)) )
> n <- (z.beta+z.alpha*r)^2*(pi1*(1-pi1)+pi2*(1-pi2))/(pi1-pi2)^2
> c(p,r,n)
[1]  0.65000  1.02273 69.27248
```

```r
> #=========================================================================#
> #                               Problem 6                                 #
> #=========================================================================#
> # The following function uses the F-distribution to construct a
> # 100*a% "exact" confidence interval for the probability of
> # success in a binomial distribution.
> binci.f <- function(x, n, a){
+   # x = observed number of successes;
+   # n = number of trials;
+   # a = level of confidence (e.g. 0.95);
+   p <- x/n
+   a2 <- 1-((1-a)/2)
+   if (x > 0)
+     f1 <- qf(a2, 2*(n-x+1), 2*x)
+   else
+     f1 <- 1
+   if (n > x)
+     f2 <- qf(a2, 2*(x+1), 2*(n-x))
+   else
+     f2 <- 1
+   plower <- x / (x + (n-x+1)*f1)
+   pupper <- (x+1)*f2 / ((n-x)+(x+1)*f2)
+   cbind( plower=plower, pupper=pupper )
+ }
> binci.f(x=4, n=933, a=0.95)
          plower     pupper
[1,] 0.001169328 0.01094038
> binci.f(x=2, n=4143, a=0.95)
            plower      pupper
[1,] 0.00005846764 0.001742731
```

> #=========================================================================#

> #

Problem 7

#

> #=========================================================================#

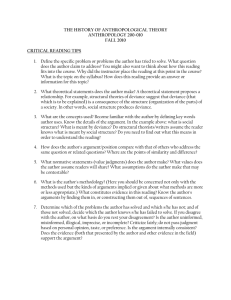

> #-----------------------------------------------------------------------(b)

> Y <- matrix( c( 784, 236, 311, 66), 2, 2, byrow=T )

> dimnames(Y) <- list( c("GR.Favor","GR.Oppose"), c("DP.Favor","DP.Oppose") )

> Y

DP.Favor DP.Oppose

GR.Favor

784

236

GR.Oppose

311

66

>

> row.total <- Y["GR.Favor",] + Y["GR.Oppose",]

> col.total <- Y[,"DP.Favor"] + Y[,"DP.Oppose"]

> total <- sum(Y)

>

#------------------------------------#

>

# Expected counts; Independence Model#

>

#------------------------------------#

> mB.hat <- (col.total %o% row.total) / total

> mB.hat

DP.Favor DP.Oppose

GR.Favor 799.4989 220.50107

GR.Oppose 295.5011 81.49893

> #------------------------------------------------------------------------(d)

>

#------------------------------------#

>

# Pearson X^2 Test: ModelB vs ModelC #

>

#------------------------------------#

> chisq.test(Y, correct=F)

Pearson's chi-square test without Yates' continuity correction

data: Y X-square = 5.1503, df = 1, p-value = 0.0232

>

# See (i) for deviance G^2 test

4

```r
> #------------------------------------------------------------------------(f)
>
> #------------------------------------#
> # MLE of theta                       #
> #------------------------------------#
> theta <- (2 * Y[1,1] + Y[1,2] + Y[2,1]) / ( 2 * sum(Y) )
> theta
[1] 0.7569792
> #------------------------------------------------------------------------(g)
>
> #------------------------------------#
> # Expected counts by Model A         #
> #------------------------------------#
> piA.hat <- matrix( c(theta^2, theta*(1-theta),
+                      theta*(1-theta), (1-theta)^2 ), 2, 2 )
> mA.hat <- total * piA.hat
> mA.hat
         [,1]      [,2]
[1,] 800.5055 256.99445
[2,] 256.9945  82.50555
```

```r
> #------------------------------------------------------------------------(i)
> table2 <- matrix(0, 3, 3)
> dimnames(table2) <- list( c( "A vs B", "B vs C", "A vs C"),
+                           c("df", "deviance", "pvalue") )
>
> #------------------------------------#
> #        MODEL A vs MODEL B          #
> #------------------------------------#
> table2["A vs B", "deviance"] <- 2 * sum( Y * log( mB.hat/mA.hat ) )
> table2["A vs B", "df"] <- 1
> table2["A vs B", "pvalue"] <- 1 - pchisq(table2["A vs B", "deviance"],
+                                          table2["A vs B", "df"] )
>
> #------------------------------------#
> #        MODEL B vs MODEL C          #
> #------------------------------------#
> table2["B vs C", "deviance"] <- 2 * sum( Y * log( Y / mB.hat ) )
> table2["B vs C", "df"] <- 1
> table2["B vs C", "pvalue"] <- 1 - pchisq(table2["B vs C", "deviance"],
+                                          table2["B vs C", "df"] )
>
> #------------------------------------#
> #        MODEL A vs MODEL C          #
> #------------------------------------#
> table2["A vs C", "deviance"] <- 2 * sum( Y * log( Y / mA.hat ) )
> table2["A vs C", "df"] <- 2
> table2["A vs C", "pvalue"] <- 1 - pchisq(table2["A vs C", "deviance"],
+                                          table2["A vs C", "df"] )
>
> #------------------------------------#
> # Analysis of Deviance Table         #
> #------------------------------------#
> table2
       df deviance       pvalue
A vs B  1 10.96130 0.0009303443
B vs C  1  5.32065 0.0210741513
A vs C  2 16.28195 0.0002913527
```