Fractional Imputation in Survey Sampling: A Comparative Review Shu Yang Jae-Kwang Kim

Fractional Imputation in Survey Sampling:

A Comparative Review

Shu Yang

Jae-Kwang Kim

Iowa State University

Joint Statistical Meetings, August 2015

Outline

Introduction

Fractional imputation

Features

Numerical illustration

Conclusion

Yang & Kim (Iowa State University )

Fractional Imputation

2015 JSM

2 / 29

Introduction

Basic Setup

U = {1, 2, · · · , N}: Finite population

A ⊂ U: sample (selected by a probability sampling design).

Under complete response, suppose that

$$\hat{\eta}_{n,g} = \sum_{i \in A} w_i \, g(y_i)$$

is an unbiased estimator of $\eta_g = N^{-1} \sum_{i=1}^{N} g(y_i)$. Here, $g(\cdot)$ is a known function.

For example, g (y ) = I (y < 3) leads to ηg = P(Y < 3).
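As a small illustration of the complete-response estimator, the sketch below evaluates the weighted sum for g(y) = I(y < 3). The data, the helper name `weighted_sum_of_g`, and the choice w_i = 1/n (simple random sampling, with the factor 1/N already folded into the weights) are assumptions made only for this example.

```python
def weighted_sum_of_g(y, w, g):
    """Return sum_i w_i * g(y_i) over the sample."""
    return sum(wi * g(yi) for yi, wi in zip(y, w))

# Hypothetical sample of n = 5 units; under simple random sampling the
# weight w_i = 1 / (N * pi_i) reduces to 1 / n.
y = [1.2, 4.0, 2.5, 3.7, 0.8]
w = [1 / len(y)] * len(y)

def indicator(v):
    """g(y) = I(y < 3)."""
    return 1.0 if v < 3 else 0.0

eta_hat = weighted_sum_of_g(y, w, indicator)  # estimates P(Y < 3)
```

Three of the five hypothetical values fall below 3, so the estimate is the weighted share of those units.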

Introduction

Basic Setup (Cont’d)

$A = A_R \cup A_M$, where $y_i$ is observed for $i \in A_R$ and missing for $i \in A_M$.

$R_i = 1$ if $i \in A_R$ and $R_i = 0$ if $i \in A_M$.

$y_i^*$: imputed value for $y_i$, $i \in A_M$.

Imputed estimator of $\eta_g$:

$$\hat{\eta}_{I,g} = \sum_{i \in A_R} w_i \, g(y_i) + \sum_{i \in A_M} w_i \, g(y_i^*)$$

Need $E\{g(y_i^*) \mid R_i = 0\} = E\{g(y_i) \mid R_i = 0\}$.

Introduction

ML estimation under missing data setup

Often, find $x$ (always observed) such that missing at random (MAR) holds: $f(y \mid x, R = 0) = f(y \mid x)$.

Imputed values are created from $f(y \mid x)$.

Computing the conditional expectation can be a challenging problem.

1. Do not know the true parameter $\theta$ in $f(y \mid x) = f(y \mid x; \theta)$: $E\{g(y_i) \mid x_i\} = E\{g(y_i) \mid x_i; \theta\}$.

2. Even if we know $\theta$, computing the conditional expectation can be numerically difficult.

Introduction

Imputation

Imputation: Monte Carlo approximation of the conditional expectation (given the observed data).

$$E\{g(y_i) \mid x_i\} \cong \frac{1}{M} \sum_{j=1}^{M} g\left(y_i^{*(j)}\right)$$

1. Bayesian approach: generate $y_i^*$ from

$$f(y_i \mid x_i, y_{obs}) = \int f(y_i \mid x_i, \theta) \, p(\theta \mid x_i, y_{obs}) \, d\theta$$

2. Frequentist approach: generate $y_i^*$ from $f(y_i \mid x_i; \hat{\theta})$, where $\hat{\theta}$ is a consistent estimator.
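The frequentist version of this Monte Carlo approximation can be sketched as follows. The normal regression model, the parameter values in `theta_hat`, and the function name are assumptions chosen only so that the approximation can be compared with a closed-form answer.

```python
import math
import random

def mc_conditional_expectation(g, x, theta, M, rng):
    """Approximate E{g(y) | x} by averaging g over M draws from f(y | x; theta)."""
    b0, b1, sigma = theta
    draws = [rng.gauss(b0 + b1 * x, sigma) for _ in range(M)]
    return sum(g(v) for v in draws) / M

rng = random.Random(2015)
theta_hat = (1.0, 0.5, 1.0)   # hypothetical (beta0, beta1, sigma)

def g(v):
    """g(y) = I(y < 3)."""
    return 1.0 if v < 3 else 0.0

approx = mc_conditional_expectation(g, x=2.0, theta=theta_hat, M=20000, rng=rng)

# Closed form for comparison: Phi((3 - mu) / sigma) with mu = 1 + 0.5 * 2 = 2.
exact = 0.5 * (1 + math.erf((3 - 2.0) / math.sqrt(2)))
```

The gap between `approx` and `exact` shrinks at the usual Monte Carlo rate as M grows, which is the cost that motivates using weighted (fractional) draws instead.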

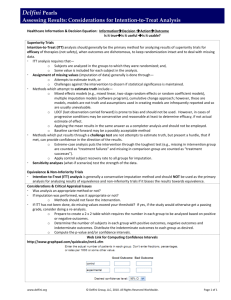

Comparison

| | Bayesian | Frequentist |
|---|---|---|
| Model | Posterior distribution $f(\text{latent}, \theta \mid \text{data})$ | Prediction model $f(\text{latent} \mid \text{data}, \theta)$ |
| Computation | Data augmentation | EM algorithm |
| Prediction | I-step | E-step |
| Parameter update | P-step | M-step |
| Parameter est'n | Posterior mode | ML estimation |
| Imputation | Multiple imputation | Fractional imputation |
| Variance estimation | Rubin's formula | Linearization or Resampling |

Fractional Imputation: Basic Idea

Approximate $E\{g(y_i) \mid x_i\}$ by

$$E\{g(y_i) \mid x_i\} \cong \sum_{j=1}^{M_i} w_{ij}^* \, g\left(y_i^{*(j)}\right)$$

where $w_{ij}^*$ is the fractional weight assigned to $y_i^{*(j)}$, the $j$-th imputed value of $y_i$.

The fractional weights satisfy $\sum_{j=1}^{M_i} w_{ij}^* = 1$.

Fractional Imputation for categorical data

If $y_i$ is a categorical variable, we can use

$M_i$ = total number of possible values of $y_i$

$y_i^{*(j)}$ = the $j$-th possible value of $y_i$

$w_{ij}^* = P(y_i = y_i^{*(j)} \mid x_i; \hat{\theta})$,

where $\hat{\theta}$ is the pseudo MLE of $\theta$.
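For a binary outcome the categorical scheme takes a particularly simple form, sketched below. The logistic model, the fitted values in `theta_hat`, and the helper name are illustrative assumptions, not part of the slides.

```python
import math

def fractional_weights_binary(x, theta):
    """Return [(value, weight)] for binary y under P(y = 1 | x) = expit(a + b * x)."""
    a, b = theta
    p1 = 1.0 / (1.0 + math.exp(-(a + b * x)))
    # M_i = 2 possible values; each gets its fitted conditional probability.
    return [(0, 1.0 - p1), (1, p1)]

theta_hat = (-1.0, 0.8)   # hypothetical fitted (a, b)
cells = fractional_weights_binary(x=1.25, theta=theta_hat)

# E{g(y) | x} is the fractionally weighted sum over both cells; here g(y) = y.
cond_mean = sum(wt * val for val, wt in cells)
```

Because every possible value is imputed, no Monte Carlo error is introduced: the weighted sum equals the fitted conditional expectation exactly.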

Parametric Fractional Imputation (Kim, 2011)

More generally, we can write $y_i = (y_{i1}, \cdots, y_{ip})$ and $y_i$ can be partitioned into $(y_{i,obs}, y_{i,mis})$.

1. More than one (say $M$) imputed values of $y_{i,mis}$, denoted by $y_{i,mis}^{*(1)}, \cdots, y_{i,mis}^{*(M)}$, are generated from some density $h(y_{i,mis} \mid y_{i,obs})$.

2. Create the weighted data set

$$\left\{ \left( w_i w_{ij}^*, \; y_{ij}^* \right) ; \; j = 1, 2, \cdots, M; \; i \in A \right\}$$

where $\sum_{j=1}^{M} w_{ij}^* = 1$, $y_{ij}^* = (y_{i,obs}, y_{i,mis}^{*(j)})$,

$$w_{ij}^* \propto f(y_{ij}^*; \hat{\theta}) / h(y_{i,mis}^{*(j)} \mid y_{i,obs}),$$

$\hat{\theta}$ is the (pseudo) maximum likelihood estimator of $\theta$, and $f(y; \theta)$ is the joint density of $y$.

3. The weights $w_{ij}^*$ are the normalized importance weights and can be called fractional weights.
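The normalized importance weights can be sketched for a single unit with a scalar missing value. The target N(1, 1), the proposal N(0, 2^2), and the function names are assumptions chosen for illustration only.

```python
import math
import random

def normal_pdf(v, mu, sigma):
    """Density of N(mu, sigma^2) at v."""
    return math.exp(-0.5 * ((v - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def fractional_weights(draws, target, proposal):
    """Normalize the ratios target(y) / proposal(y) so that they sum to one."""
    ratios = [target(v) / proposal(v) for v in draws]
    total = sum(ratios)
    return [r / total for r in ratios]

rng = random.Random(1)
M = 5000
# Hypothetical proposal h = N(0, 2^2); hypothetical target f(y | x; theta_hat) = N(1, 1).
draws = [rng.gauss(0.0, 2.0) for _ in range(M)]
w_star = fractional_weights(
    draws,
    target=lambda v: normal_pdf(v, 1.0, 1.0),
    proposal=lambda v: normal_pdf(v, 0.0, 2.0),
)

# The fractionally weighted mean approximates the target mean, which is 1 here.
weighted_mean = sum(w * v for w, v in zip(w_star, draws))
```

Only the weights depend on the parameter estimate, so the draws themselves can be reused when the estimate is updated.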

EM algorithm using PFI

EM algorithm by fractional imputation

1. Initial imputation: generate $y_{i,mis}^{*(j)} \sim h(y_{i,mis} \mid y_{i,obs})$.

2. E-step: compute

$$w_{ij(t)}^* \propto f(y_{ij}^*; \hat{\theta}_{(t)}) / h(y_{i,mis}^{*(j)} \mid y_{i,obs})$$

where $\sum_{j=1}^{M} w_{ij(t)}^* = 1$.

3. M-step: update $\hat{\theta}_{(t+1)}$ as the solution to

$$\sum_{i \in A} \sum_{j=1}^{M} w_i \, w_{ij(t)}^* \, S(\theta; y_{ij}^*) = 0,$$

where $S(\theta; y) = \partial \log f(y; \theta) / \partial \theta$ is the score function of $\theta$.

4. Repeat Step 2 and Step 3 until convergence.

We may add an optional step that checks if $w_{ij(t)}^*$ is too large for some $j$. In this case, $h(y_{i,mis} \mid y_{i,obs})$ needs to be changed.
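The four steps can be traced in a deliberately small setting. The sketch below assumes a toy model y ~ N(mu, 1) with no covariates, a proposal h = N(mu0, 2^2), and equal sampling weights; the function `em_by_pfi` and all numbers are hypothetical and only meant to show that the imputed values stay fixed while the fractional weights are recomputed.

```python
import math
import random

def npdf(v, mu, sd):
    """Density of N(mu, sd^2) at v."""
    return math.exp(-0.5 * ((v - mu) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def em_by_pfi(y_obs, n_mis, w_obs, w_mis, M=200, iters=50, seed=0):
    """EM by parametric fractional imputation for mu in the toy model y ~ N(mu, 1)."""
    rng = random.Random(seed)
    mu0 = sum(y_obs) / len(y_obs)      # crude starting value
    h_mu, h_sd = mu0, 2.0              # proposal h = N(mu0, 2^2), an assumption
    # Step 1: imputed values are generated once and then kept fixed.
    draws = [[rng.gauss(h_mu, h_sd) for _ in range(M)] for _ in range(n_mis)]
    mu = mu0
    for _ in range(iters):
        num = sum(w * y for w, y in zip(w_obs, y_obs))
        den = sum(w_obs) + sum(w_mis)
        for wi, yi_star in zip(w_mis, draws):
            # Step 2 (E-step): fractional weights proportional to f / h, normalized.
            ratio = [npdf(v, mu, 1.0) / npdf(v, h_mu, h_sd) for v in yi_star]
            total = sum(ratio)
            num += wi * sum(r / total * v for r, v in zip(ratio, yi_star))
        # Step 3 (M-step): with score S(mu; y) = y - mu, the weighted score
        # equation has the closed-form solution below.
        mu = num / den
    return mu

y_obs = [2.1, 1.7, 2.9, 2.4, 1.9, 2.6]
mu_hat = em_by_pfi(y_obs, n_mis=4, w_obs=[10.0] * 6, w_mis=[10.0] * 4)
```

In this toy setting the missing values carry no extra information, so the result should settle near the mean of the observed values, up to Monte Carlo error from the M draws per unit.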

Approximation: Calibration Fractional imputation

In large scale survey sampling, we prefer to have smaller M.

Two-step method for fractional imputation:

1. Create a set of fractionally imputed data with size $nM$ (say $M = 1000$).

2. Use an efficient sampling and weighting method to get a final set of fractionally imputed data with size $nm$ (say $m = 10$).

Thus, we treat the step-one imputed data as a finite population and the step-two imputed data as a sample. We can use an efficient sampling technique (such as systematic sampling or stratification) to get the final imputed data and use a calibration technique for fractional weighting.
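One way the two-step idea could look for a single unit is sketched below: systematic sampling proportional to the step-one fractional weights, followed by a linear calibration that makes the final weights reproduce the step-one weighted mean. Both helper functions, the choice of a single calibration constraint, and the simulated step-one values are assumptions for illustration, not the authors' procedure.

```python
import random
from bisect import bisect_right
from itertools import accumulate

def systematic_pps(values, weights, m, rng):
    """Select m values systematically with probability proportional to weights."""
    order = sorted(range(len(values)), key=lambda k: values[k])  # implicit stratification
    cum = list(accumulate(weights[k] for k in order))
    total = cum[-1]
    start = rng.random() * total / m
    picks = []
    for k in range(m):
        idx = bisect_right(cum, start + k * total / m)
        picks.append(values[order[min(idx, len(order) - 1)]])
    return picks

def calibrate_to_mean(sample, target_mean):
    """Linear calibration: weights sum to one and reproduce target_mean."""
    m = len(sample)
    ybar = sum(sample) / m
    ss = sum((v - ybar) ** 2 for v in sample)
    lam = (target_mean - ybar) / ss
    return [1.0 / m + lam * (v - ybar) for v in sample]

rng = random.Random(7)
M, m = 1000, 10
step_one = [rng.gauss(0.0, 1.0) for _ in range(M)]   # hypothetical step-one imputed values
w_one = [1.0 / M] * M                                # their fractional weights
target = sum(w * v for w, v in zip(w_one, step_one))

step_two = systematic_pps(step_one, w_one, m, rng)
w_two = calibrate_to_mean(step_two, target)
```

With the calibrated weights, the m retained values give the same weighted mean as the full set of M values; further constraints (for example a second moment) would need a larger calibration system.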

Why Fractional Imputation?

1. To improve the efficiency of the point estimator (vs. single imputation)

2. To obtain valid frequentist inference without congeniality condition (vs. multiple imputation)

3. To handle informative sampling mechanism

We will discuss the third issue first.

Informative sampling design

Let f (y | x) be the conditional distribution of y given x.

x is always observed but y is subject to missingness.

A sampling design is called noninformative (w.r.t. $f$) if it satisfies

$$f(y \mid x, I = 1) = f(y \mid x) \qquad (1)$$

where $I_i = 1$ if $i \in A$ and $I_i = 0$ otherwise.

If (1) does not hold, then the sampling design is informative.

Missing At Random

Two versions of Missing At Random (MAR)

1. PMAR (Population Missing At Random): $Y \perp R \mid X$

2. SMAR (Sample Missing At Random): $Y \perp R \mid (X, I)$

Fractional imputation assumes PMAR while multiple imputation assumes SMAR.

Under noninformative sampling design, PMAR = SMAR.

Imputation under informative sampling

Two approaches under informative sampling when PMAR holds.

1. Weighting approach: Use weighted score equation to estimate $\theta$ in $f(y \mid x; \theta)$. The imputed values are generated from $f(y \mid x; \hat{\theta})$.

2. Augmented model approach: Include $w$ into model covariates to get the augmented model $f(y \mid x, w; \phi)$. The augmented model makes the sampling design noninformative in the sense that $f(y \mid x, w) = f(y \mid x, w, I = 1)$. The imputed values are generated from $f(y \mid x, w; \hat{\phi})$, where $\hat{\phi}$ is computed from unweighted score equation.
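For a normal linear model, the weighted score equation for the regression coefficients reduces to weighted least squares, which the sketch below solves in closed form for a single covariate. The function name and the toy respondent data are assumptions; the weights play the role of the sampling weights w_i.

```python
def weighted_ls(x, y, w):
    """Solve sum_i w_i (y_i - b0 - b1 * x_i) * (1, x_i) = 0 for (b0, b1)."""
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ybar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - xbar) * (yi - ybar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

# Hypothetical respondents lying exactly on y = 1 + 2 x, with unequal weights.
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]
w = [12.0, 18.0, 43.0, 47.0]
b0_hat, b1_hat = weighted_ls(x, y, w)
```

The augmented model approach would instead add w as a covariate and drop the weights from the estimating equation; that variant is not shown here.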

Berg, Kim, and Skinner (2015)

Figure: A Directed Acyclic Graph (DAG) for a setup where PMAR holds but SMAR does not hold. Variable U is latent in the sense that it is never observed. (Graph not reproduced; its nodes are R, Y, W, X, I, and U.)

$f(y \mid x, R) = f(y \mid x)$ holds but $f(y \mid x, w, R) \neq f(y \mid x, w)$.

Imputation under informative sampling

Weighting approach generates imputed values from $f(y \mid x, R = 1)$ and

$$\hat{\theta}_I = \sum_{i \in A} w_i \{R_i y_i + (1 - R_i) y_i^*\} \qquad (2)$$

is unbiased under PMAR.

The augmented model approach generates imputed values from $f(y \mid x, w, I = 1, R = 1)$ and (2) is unbiased when

$$f(y \mid x, w, I = 1, R = 1) = f(y \mid x, w, I = 1, R = 0) \qquad (3)$$

holds. PMAR does not necessarily imply SMAR in (3).

Fractional imputation is based on weighting approach.

Numerical illustration

A pseudo finite population constructed from a single month of data in the Monthly Retail Trade Survey (MRTS) at US Bureau of Census

$N = 7{,}260$ retail business units in five strata

Three variables in the data:

$h$: stratum

$x_{hi}$: inventory values

$y_{hi}$: sales

Box plot of log sales and log inventory values by strata

[Figure: two panels, "Box plot of inventory data by strata" and "Box plot of sales data by strata". Horizontal axis: strata (1 to 5); vertical axis: log scale.]

Imputation model

$$\log(y_{hi}) = \beta_{0h} + \beta_1 \log(x_{hi}) + e_{hi}$$

where $e_{hi} \sim N(0, \sigma^2)$.

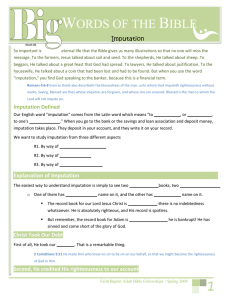

Residual plot and residual QQ plot

Regression model of log(y) against log(x) and strata indicator

[Figure: two diagnostic panels. "Residuals vs Fitted" plots residuals against fitted values; "Normal Q-Q" plots standardized residuals against theoretical quantiles. Observations 4, 6424, and 6658 are labelled in both panels.]

Stratified random sampling

Table: The sample allocation in stratified simple random sampling.

| Strata | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Strata size $N_h$ | 352 | 566 | 1963 | 2181 | 2198 |
| Sample size $n_h$ | 28 | 32 | 46 | 46 | 48 |
| Sampling weight | 12.57 | 17.69 | 42.67 | 47.41 | 45.79 |
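The sampling weights in the allocation table are the ratios N_h / n_h of stratified simple random sampling, which the short check below recomputes from the table entries. The dictionary names are illustrative.

```python
# Strata sizes and sample sizes copied from the allocation table.
strata_size = {1: 352, 2: 566, 3: 1963, 4: 2181, 5: 2198}
sample_size = {1: 28, 2: 32, 3: 46, 4: 46, 5: 48}

# Under stratified simple random sampling, each sampled unit in stratum h
# represents N_h / n_h population units.
weights = {h: strata_size[h] / sample_size[h] for h in strata_size}

population_size = sum(strata_size.values())
total_sample = sum(sample_size.values())
```

The strata sizes add up to the population size of 7,260 stated for the pseudo finite population, and the total sample size is 200.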

Response mechanism: PMAR

Variable $x_{hi}$ is always observed and only $y_{hi}$ is subject to missingness.

PMAR: $R_{hi} \sim \text{Bernoulli}(\pi_{hi})$, $\pi_{hi} = 1/[1 + \exp\{4 - 0.3 \log(x_{hi})\}]$.

The overall response rate is about 0.6.
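The response mechanism can be simulated directly from the stated formula. In the sketch below the inventory values are hypothetical (their logs are drawn around 14.7, a value picked only so that the response rate lands near the reported 0.6); the real MRTS data are not used.

```python
import math
import random

def response_prob(x):
    """pi = 1 / [1 + exp{4 - 0.3 * log(x)}], as stated for the PMAR mechanism."""
    return 1.0 / (1.0 + math.exp(4.0 - 0.3 * math.log(x)))

rng = random.Random(24)
# Hypothetical inventory values; log(x) ~ N(14.7, 1) is an assumption.
x = [math.exp(rng.gauss(14.7, 1.0)) for _ in range(5000)]

# R = 1 (respond) with probability pi(x), independently across units.
r = [1 if rng.random() < response_prob(xi) else 0 for xi in x]
rate = sum(r) / len(r)
```

Because the response probability depends only on x, which is always observed, the mechanism satisfies PMAR by construction.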

Simulation Study

Table 1: Monte Carlo bias and variance of the point estimators.

| Parameter | Estimator | Bias | Variance | Std Var |
|---|---|---|---|---|
| $\theta = E(Y)$ | Complete sample | 0.00 | 0.42 | 100 |
| | MI | 0.00 | 0.59 | 134 |
| | FI | 0.00 | 0.58 | 133 |

Table 2: Monte Carlo relative bias of the variance estimator.

| Parameter | Imputation | Relative bias (%) |
|---|---|---|
| $V(\hat{\theta})$ | MI | 18.4 |
| | FI | 2.7 |

Discussion

Rubin's formula is based on the following decomposition:

$$V(\hat{\theta}_{MI}) = V(\hat{\theta}_n) + V(\hat{\theta}_{MI} - \hat{\theta}_n)$$

where $\hat{\theta}_n$ is the complete-sample estimator of $\theta$. Basically, the $W_M$ term estimates $V(\hat{\theta}_n)$ and the $(1 + M^{-1}) B_M$ term estimates $V(\hat{\theta}_{MI} - \hat{\theta}_n)$.

For the general case, we have

$$V(\hat{\theta}_{MI}) = V(\hat{\theta}_n) + V(\hat{\theta}_{MI} - \hat{\theta}_n) + 2\,Cov(\hat{\theta}_{MI} - \hat{\theta}_n, \hat{\theta}_n)$$

and Rubin's variance estimator ignores the covariance term. Thus, a sufficient condition for Rubin's variance estimator to be unbiased is $Cov(\hat{\theta}_{MI} - \hat{\theta}_n, \hat{\theta}_n) = 0$.

Meng (1994) called the condition congeniality of $\hat{\theta}_n$.

Congeniality holds when $\hat{\theta}_n$ is the MLE of $\theta$ (self-efficient estimator).
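Rubin's combining rule, with W_M the average within-imputation variance and B_M the between-imputation variance of the point estimates, can be written in a few lines. The five point estimates and variance estimates below are made-up numbers used only to exercise the formula.

```python
def rubin_variance(estimates, variances):
    """Return the MI point estimate and Rubin's variance W_M + (1 + 1/M) * B_M."""
    M = len(estimates)
    theta_bar = sum(estimates) / M
    W = sum(variances) / M                                       # within-imputation part
    B = sum((e - theta_bar) ** 2 for e in estimates) / (M - 1)   # between-imputation part
    return theta_bar, W + (1 + 1 / M) * B

# Hypothetical results from M = 5 imputed data sets.
est = [10.0, 10.4, 9.8, 10.2, 9.6]
var = [0.40, 0.42, 0.38, 0.41, 0.39]
theta_mi, v_mi = rubin_variance(est, var)
```

Nothing in this rule accounts for the covariance term in the general decomposition, which is why its validity depends on that term being zero.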

Discussion

For example, there are two estimators of $\theta = E(Y)$ when $\log(Y)$ follows $N(\beta_0 + \beta_1 x, \sigma^2)$.

1. Maximum likelihood method:

$$\hat{\theta}_{MLE} = n^{-1} \sum_{i=1}^{n} \exp\{\hat{\beta}_0 + \hat{\beta}_1 x_i + 0.5 \hat{\sigma}^2\}$$

2. Method of moments:

$$\hat{\theta}_{MME} = n^{-1} \sum_{i=1}^{n} y_i$$

The MME of $\theta = E(Y)$ does not satisfy congeniality, and Rubin's variance estimator is biased (R.B. = 58.5%).

Rubin's variance estimator is essentially unbiased for the MLE of $\theta$ (R.B. = -1.9%), but the MLE is rarely used in practice.
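The two complete-sample estimators can be compared on simulated data. The sketch below assumes a lognormal regression with hypothetical parameters (0.5, 1.0, sigma = 0.5) and a single covariate; it illustrates the two formulas only and does not reproduce the reported relative biases.

```python
import math
import random

def fit_simple_ols(x, z):
    """Fit z = b0 + b1 * x by least squares; sigma2 is the ML estimate (divides by n)."""
    n = len(x)
    xbar, zbar = sum(x) / n, sum(z) / n
    sxz = sum((a - xbar) * (b - zbar) for a, b in zip(x, z))
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sxz / sxx
    b0 = zbar - b1 * xbar
    sigma2 = sum((b - b0 - b1 * a) ** 2 for a, b in zip(x, z)) / n
    return b0, b1, sigma2

rng = random.Random(27)
n = 2000
x = [rng.uniform(0.0, 2.0) for _ in range(n)]
# Hypothetical data: log(y) = 0.5 + 1.0 * x + e, with e ~ N(0, 0.5^2).
y = [math.exp(0.5 + 1.0 * xi + rng.gauss(0.0, 0.5)) for xi in x]

b0, b1, s2 = fit_simple_ols(x, [math.log(v) for v in y])
theta_mle = sum(math.exp(b0 + b1 * xi + 0.5 * s2) for xi in x) / n   # model-based
theta_mme = sum(y) / n                                               # sample mean
```

Both estimators target the same quantity when the model holds; they differ in how much they rely on the lognormal assumption, which is what drives the congeniality issue above.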

Summary

Fractional imputation is developed as a frequentist imputation.

Multiple imputation is motivated from a Bayesian framework. The

frequentist validity of multiple imputation requires congeniality.

Fractional imputation does not require the congeniality condition and

works well for Method of Moments estimators.

For informative sampling, augmented model approach does not

necessarily achieve SMAR. Fractional imputation uses weighting

approach for informative sampling.

The end
