Transport problems 18.S995 - L20



dunkel@math.mit.edu

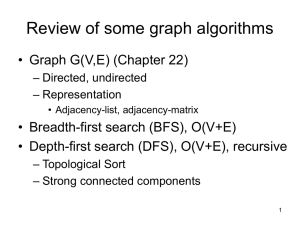

Root systems

Katifori lab, MPI Goettingen

Maze solving

[Figure: maze at time = 0 and after 8 hours; scale bar 1 cm. Nakagaki et al (2000) Nature]


Tree graphs

Spanning trees

Minimal spanning tree

Kirchhoff’s theorem

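Number of spanning trees

L: Laplacian of the graph. L_11: L with its first row and first column deleted.

The number of spanning trees equals det L_11. It is sufficient to consider L_11, since the row and column sums of the Laplacian are zero.

det L_11 = 8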
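A minimal sketch of the spanning-tree count, assuming the slide's example is the "diamond" graph (K4 minus one edge). The slide image is not available here, so the edge list is a guess that happens to reproduce det L_11 = 8. The helper names are illustrative.

```python
from fractions import Fraction

def laplacian(n, edges):
    """Return the n x n Laplacian L = D - A of an undirected simple graph."""
    L = [[0] * n for _ in range(n)]
    for u, v in edges:
        L[u][u] += 1
        L[v][v] += 1
        L[u][v] -= 1
        L[v][u] -= 1
    return L

def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result  # a row swap flips the sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def count_spanning_trees(n, edges):
    """Kirchhoff's theorem: delete row 0 and column 0, take the determinant."""
    L = laplacian(n, edges)
    minor = [row[1:] for row in L[1:]]
    return int(det(minor))

# Assumed example graph (not taken from the slide): K4 minus the edge (0, 3).
diamond_edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]
print(count_spanning_trees(4, diamond_edges))  # 8
```

Deleting any other row and column pair with the same index would give the same count, which is what the zero row and column sums guarantee.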

Max-Flow Min-Cut

An example of a flow network with a maximum flow. The source is s, and the sink t. The numbers denote flow and capacity.

Deterministic transport

DU/~cos226

Maximum Flow and Minimum Cut

Max flow and min cut.

Max Flow, Min Cut

!

Two very rich algorithmic problems.

!

Cornerstone problems in combinatorial optimization.

!

Beautiful mathematical duality.

Nontrivial applications / reductions.

!

!

!

!

!

!

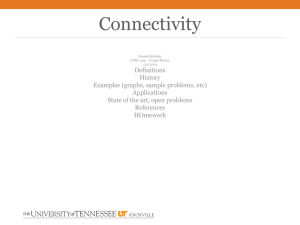

Network connectivity.

Bipartite matching.

Data mining.

Open-pit mining.

Airline scheduling.

Image processing.

!

Project selection.

!

Baseball elimination.

Source:

Minimum cut

Maximum flow

Network reliability.

Max-flow min-cut theorem

!

Security of statistical data.

Ford-Fulkerson

augmenting path algorithm

Distributed computing.

!

!

Edmonds-Karp

heuristics

Egalitarian stable

matching.

!

Bipartite

matching

Distributed

computing.

!

!

Many many more . . .

2

Princeton University • COS 226 • Algorithms and Data Structures • Spring 2004 • Kevin Wayne •

http://www.P

Soviet Rail Network, 1955

Source: On the history of the transportation and maximum flow problems. Alexander Schrijver in Math Programming, 91: 3, 2002.

Minimum Cut Problem

Network: abstraction for material FLOWING through the edges.
- Directed graph.
- Capacities on edges.
- Source node s, sink node t.

Min cut problem. Delete "best" set of edges to disconnect t from s.

[Figure: example network with source s, sink t, intermediate nodes 2 to 7, and a capacity on each edge.]

Cuts

A cut is a node partition (S, T) such that s is in S and t is in T.
- capacity(S, T) = sum of weights of edges leaving S.

[Figure: an example cut (S, T) in the network. Capacity = 30]

[Figure: a second example cut (S, T) in the same network. Capacity = 62]

Minimum Cut Problem

A cut is a node partition (S, T) such that s is in S and t is in T.
- capacity(S, T) = sum of weights of edges leaving S.

Min cut problem. Find an s-t cut of minimum capacity.

[Figure: a minimum cut (S, T) in the example network. Capacity = 28]
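The cut definition can be sketched in a few lines. The edge list below is an assumption: the figures did not survive extraction, so `CAPACITY` is a hypothetical network chosen to reproduce the three capacities quoted on the slides (30, 62 and 28). The brute-force search is for illustration only, since it is exponential in the number of nodes.

```python
from itertools import combinations

# Hypothetical edge list (assumption), chosen to agree with the cut
# capacities 30, 62 and 28 quoted on the slides.
CAPACITY = {
    ("s", "2"): 10, ("s", "3"): 5, ("s", "4"): 15,
    ("2", "3"): 4, ("2", "5"): 9, ("2", "6"): 15,
    ("3", "4"): 4, ("3", "6"): 8,
    ("4", "7"): 30,
    ("5", "6"): 15, ("5", "t"): 10,
    ("6", "7"): 15, ("6", "t"): 10,
    ("7", "3"): 6, ("7", "t"): 10,
}

def cut_capacity(capacity, S):
    """Sum the capacities of edges leaving S (tail in S, head outside S)."""
    S = set(S)
    return sum(c for (u, v), c in capacity.items() if u in S and v not in S)

def min_cut_brute_force(capacity, s, t):
    """Try every node set S with s in S and t not in S; return (capacity, S)."""
    inner = sorted({v for edge in capacity for v in edge} - {s, t})
    best = None
    for k in range(len(inner) + 1):
        for extra in combinations(inner, k):
            S = {s, *extra}
            cap = cut_capacity(capacity, S)
            if best is None or cap < best[0]:
                best = (cap, S)
    return best

print(cut_capacity(CAPACITY, {"s"}))                  # 30
print(cut_capacity(CAPACITY, {"s", "2", "3", "4"}))   # 62
print(min_cut_brute_force(CAPACITY, "s", "t")[0])     # 28
```

Edges entering S (such as 7 → 3 when 7 is outside S) are deliberately not counted, matching "edges leaving S" in the definition.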

Maximum Flow Problem

Network: abstraction for material FLOWING through the edges.
- Directed graph.
- Capacities on edges.
- Source node s, sink node t.
(same input as min cut problem)

Max flow problem. Assign flow to edges so as to:
- Equalize inflow and outflow at every intermediate vertex.
- Maximize flow sent from s to t.

[Figure: example network with source s, sink t, and a capacity on each edge.]

Flows

A flow f is an assignment of weights to edges so that:
- Capacity: 0 ≤ f(e) ≤ u(e).
- Flow conservation: flow leaving v = flow entering v (except at s or t).

[Figure: example network with each edge labelled by capacity and flow. Value = 4]
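The two flow conditions can be checked mechanically. This is a sketch under assumptions: `CAPACITY` is a hypothetical edge list consistent with the cut capacities on the slides, and `FLOW` is one feasible flow of value 28 worked out by hand, not the flow drawn in the figure.

```python
# Hypothetical network (assumption), consistent with the slides' capacities.
CAPACITY = {
    ("s", "2"): 10, ("s", "3"): 5, ("s", "4"): 15,
    ("2", "3"): 4, ("2", "5"): 9, ("2", "6"): 15,
    ("3", "4"): 4, ("3", "6"): 8,
    ("4", "7"): 30,
    ("5", "6"): 15, ("5", "t"): 10,
    ("6", "7"): 15, ("6", "t"): 10,
    ("7", "3"): 6, ("7", "t"): 10,
}

# Hand-built flow (assumption); edges not listed carry zero flow.
FLOW = {
    ("s", "2"): 10, ("s", "3"): 4, ("s", "4"): 14,
    ("2", "5"): 9, ("2", "6"): 1,
    ("3", "6"): 8,
    ("4", "7"): 14,
    ("5", "t"): 9,
    ("6", "t"): 9,
    ("7", "3"): 4, ("7", "t"): 10,
}

def is_flow(capacity, flow, s, t):
    """Check 0 <= f(e) <= u(e) on every edge and conservation off s and t."""
    for edge, f in flow.items():
        if edge not in capacity or not 0 <= f <= capacity[edge]:
            return False
    nodes = {v for edge in capacity for v in edge}
    for v in nodes - {s, t}:
        inflow = sum(f for (a, b), f in flow.items() if b == v)
        outflow = sum(f for (a, b), f in flow.items() if a == v)
        if inflow != outflow:
            return False
    return True

def flow_value(flow, s):
    """Net flow leaving the source."""
    out_of_s = sum(f for (a, b), f in flow.items() if a == s)
    into_s = sum(f for (a, b), f in flow.items() if b == s)
    return out_of_s - into_s

print(is_flow(CAPACITY, FLOW, "s", "t"))  # True
print(flow_value(FLOW, "s"))              # 28
```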

[Figure: a second flow in the same network, each edge labelled by capacity and flow. Value = 24]

Maximum Flow Problem

Max flow problem: find flow that maximizes net flow into sink.

[Figure: a flow in the example network, each edge labelled by capacity and flow. Value = 28]

Flows and Cuts

Observation 1. Let f be a flow, and let (S, T) be any s-t cut. Then, the net flow sent across the cut is equal to the amount reaching t.

[Figure: a flow with an s-t cut (S, T) marked. Value = 24]
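Observation 1 can be checked numerically. The sketch below uses a hand-built flow of value 28 on a hypothetical network (both are assumptions, not the value-24 flow drawn on the slide) and evaluates the net flow across three different cuts.

```python
# Hand-built flow on a hypothetical network (assumption); edges not listed
# carry zero flow. Its value (net flow out of s) is 28.
FLOW = {
    ("s", "2"): 10, ("s", "3"): 4, ("s", "4"): 14,
    ("2", "5"): 9, ("2", "6"): 1,
    ("3", "6"): 8,
    ("4", "7"): 14,
    ("5", "t"): 9,
    ("6", "t"): 9,
    ("7", "3"): 4, ("7", "t"): 10,
}

def net_flow_across(flow, S):
    """Flow on edges leaving S minus flow on edges entering S."""
    S = set(S)
    leaving = sum(f for (u, v), f in flow.items() if u in S and v not in S)
    entering = sum(f for (u, v), f in flow.items() if u not in S and v in S)
    return leaving - entering

for S in ({"s"}, {"s", "2", "3", "4"}, {"s", "3", "4", "7"}):
    print(sorted(S), net_flow_across(FLOW, S))  # net flow is 28 for each cut
```

For the second cut the edge 7 → 3 enters S, so its flow of 4 is subtracted: 32 − 4 = 28.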

[Figures: the same flow shown with two other s-t cuts (S, T); in each case the net flow across the cut equals the flow value. Value = 24]

Flows and Cuts

Observation 2. Let f be a flow, and let (S, T) be any s-t cut. Then the value of the flow is at most the capacity of the cut.

Cut capacity = 30 ⇒ Flow value ≤ 30

[Figure: example network with a cut (S, T) of capacity 30.]
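Observation 2 follows from Observation 1 and the capacity constraint in one chain of inequalities. The notation val(f) for the flow value is introduced here for convenience and does not appear on the slides.

```latex
\begin{aligned}
\mathrm{val}(f)
  &= \sum_{e \text{ out of } S} f(e) \;-\; \sum_{e \text{ into } S} f(e)
     && \text{(Observation 1)} \\
  &\le \sum_{e \text{ out of } S} f(e)
     && \text{(since } f(e) \ge 0\text{)} \\
  &\le \sum_{e \text{ out of } S} u(e)
     && \text{(since } f(e) \le u(e)\text{)} \\
  &= \mathrm{capacity}(S, T).
\end{aligned}
```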

Max Flow and Min Cut

Observation 3. Let f be a flow, and let (S, T) be an s-t cut whose capacity equals the value of f. Then f is a max flow and (S, T) is a min cut.

Cut capacity = 28 ⇒ Flow value ≤ 28
Flow value = 28

[Figure: a flow of value 28 together with a cut (S, T) of capacity 28.]

Max-Flow Min-Cut Theorem

Max-flow min-cut theorem. (Ford-Fulkerson, 1956): In any network, the value of max flow equals capacity of min cut.
- Proof IOU: we find flow and cut such that Observation 3 applies.

Min cut capacity = 28 ⇒ Max flow value = 28

[Figure: a max flow of value 28 together with a min cut (S, T) of capacity 28.]

Towards an Algorithm

Find s-t path where each arc has f(e) < u(e) and "augment" flow along it.
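A minimal sketch of an augmenting-path computation, under two assumptions. First, the slide fragment only mentions forward arcs with f(e) < u(e); the backward (residual) arcs used below are the standard completion of the Ford-Fulkerson method and are not taken from this excerpt. Second, paths are chosen by breadth-first search (the Edmonds-Karp rule), and `CAPACITY` is a hypothetical edge list consistent with the capacities on the slides.

```python
from collections import deque

# Hypothetical network (assumption), consistent with the slides' capacities.
CAPACITY = {
    ("s", "2"): 10, ("s", "3"): 5, ("s", "4"): 15,
    ("2", "3"): 4, ("2", "5"): 9, ("2", "6"): 15,
    ("3", "4"): 4, ("3", "6"): 8,
    ("4", "7"): 30,
    ("5", "6"): 15, ("5", "t"): 10,
    ("6", "7"): 15, ("6", "t"): 10,
    ("7", "3"): 6, ("7", "t"): 10,
}

def max_flow_min_cut(capacity, s, t):
    """Return (max flow value, source side S of a min cut)."""
    residual, adj = {}, {}
    for (u, v), c in capacity.items():
        residual[(u, v)] = residual.get((u, v), 0) + c
        residual.setdefault((v, u), 0)      # backward arc, initially empty
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    value = 0
    while True:
        # Breadth-first search for a shortest path with spare residual capacity.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v in adj.get(u, ()):
                if v not in parent and residual[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            # No augmenting path: nodes reached from s form S of a min cut.
            return value, set(parent)
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(residual[e] for e in path)
        for u, v in path:
            residual[(u, v)] -= bottleneck  # use up forward capacity
            residual[(v, u)] += bottleneck  # allow the flow to be undone later
        value += bottleneck

value, S = max_flow_min_cut(CAPACITY, "s", "t")
cut = sum(c for (u, v), c in CAPACITY.items() if u in S and v not in S)
print(value, cut)  # 28 28
```

The final line is Observation 3 in action: the flow value equals the capacity of the cut found, so both are optimal.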

Random transport

L. Lovász, Random Walks on Graphs: A Survey. Bolyai Society Mathematical Studies, 2. Combinatorics, Paul Erdős is Eighty (Volume 2), Keszthely (Hungary), 1993, pp. 1–46.

Dedicated to the marvelous random walk of Paul Erdős through universities, continents, and mathematics

Various aspects of the theory of random walks on graphs are surveyed. In particular, estimates on the important parameters of access time, commute time, cover time and mixing time are discussed. Connections with the eigenvalues of graphs and with electrical networks, and the use of these connections in the study of random walks is described. We also sketch recent algorithmic applications of random walks, in particular to the problem of sampling.

0. Introduction

Given a graph and a starting point, we select a neighbor of it at random, and move to this neighbor; then we select a neighbor of this point at random, and move to it etc. The (random) sequence of points selected this way is a random walk on the graph.

A random walk is a finite Markov chain that is time-reversible (see below). In fact, there is not much difference between the theory of random walks on graphs and the theory of finite Markov chains; every Markov chain can be viewed as random walk on a directed graph, if we allow weighted edges. Similarly, time-reversible Markov chains can be viewed as random walks on undirected graphs, and symmetric Markov chains, as random walks on regular symmetric graphs. In this paper we'll formulate the results in terms of random walks, and mostly restrict our attention to the undirected case.

1. Basic notions and facts

Let $G = (V, E)$ be a connected graph with $n$ nodes and $m$ edges. Consider a random walk on $G$: we start at a node $v_0$; if at the $t$-th step we are at a node $v_t$, we move to each neighbor of $v_t$ with probability $1/d(v_t)$. Clearly, the sequence of random nodes $(v_t : t = 0, 1, \dots)$ is a Markov chain. The node $v_0$ may be fixed, but may itself be a random node drawn from some initial distribution $P_0$. We denote by $P_t$ the distribution of $v_t$:

$$P_t(i) = \mathrm{Prob}(v_t = i).$$

We denote by $M = (p_{ij})_{i,j \in V}$ the matrix of transition probabilities of this Markov chain. So

$$p_{ij} = \begin{cases} 1/d(i), & \text{if } ij \in E, \\ 0, & \text{otherwise.} \end{cases} \qquad (1.1)$$

Let $A_G$ be the adjacency matrix of $G$ and let $D$ denote the diagonal matrix with $(D)_{ii} = 1/d(i)$, then $M = D A_G$. If $G$ is $d$-regular, then $M = (1/d) A_G$.

The rule of the walk can be expressed by the simple equation

$$P_{t+1} = M^T P_t,$$

(the distribution of the $t$-th point is viewed as a vector in $\mathbb{R}^V$), and hence

$$P_t = (M^T)^t P_0.$$

It follows that the probability $p^t_{ij}$ that, starting at $i$, we reach $j$ in $t$ steps is given by the $ij$-entry of the matrix $M^t$.

If $G$ is regular, then this Markov chain is symmetric: the probability of moving to $u$, given that we are at node $v$, is the same as the probability of moving to node $v$, given that we are at node $u$. For a non-regular graph $G$, this property is replaced by time-reversibility: a random walk considered backwards is also a random walk. More exactly, this means that if we look at all random walks $(v_0, \dots, v_t)$, where $v_0$ is from some initial distribution $P_0$, then we get a probability distribution $P_t$ on $v_t$. We also get a probability distribution $Q$ on the sequences $(v_0, \dots, v_t)$. If we reverse each sequence, we get another probability distribution $Q'$ on such sequences. Now time-reversibility means that this distribution $Q'$ is the same as the distribution obtained by looking at random walks starting from the distribution $P_t$. (We'll formulate a more handy characterization of time-reversibility a little later.)

The probability distributions $P_0, P_1, \dots$ are of course different in general. We say that the distribution $P_0$ is stationary (or steady-state) for the graph $G$ if $P_1 = P_0$. In this case, of course, $P_t = P_0$ for all $t \ge 0$; we call this walk the stationary walk.

A one-line calculation shows that for every graph $G$, the distribution

$$\pi(v) = \frac{d(v)}{2m}$$

is stationary.

… $1/\pi(i) = 2m/d(i)$. If $G$ is regular, then this "return time" is just $n$, the number of nodes.
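The "one-line calculation" for the stationary distribution can be checked with exact arithmetic. This is a sketch on a small hypothetical graph (a triangle with one pendant node, chosen here because it is non-regular and non-bipartite). The function names are illustrative.

```python
from fractions import Fraction

def transition_matrix(n, edges):
    """M[i][j] = 1/d(i) if ij is an edge, else 0, as in equation (1.1)."""
    neighbors = [set() for _ in range(n)]
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    return [[Fraction(1, len(neighbors[i])) if j in neighbors[i] else Fraction(0)
             for j in range(n)] for i in range(n)]

def step(M, P):
    """One step of the walk: P_{t+1} = M^T P_t."""
    n = len(M)
    return [sum(M[i][j] * P[i] for i in range(n)) for j in range(n)]

# Hypothetical example: triangle 0-1-2 with pendant node 3 attached to node 2.
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
n, m = 4, len(edges)
M = transition_matrix(n, edges)
degree = [sum(1 for p in row if p != 0) for row in M]
pi = [Fraction(d, 2 * m) for d in degree]

print(step(M, pi) == pi)  # True: pi(v) = d(v)/(2m) is stationary

# Starting from node 0, the distribution approaches pi on this graph.
P = [Fraction(1), Fraction(0), Fraction(0), Fraction(0)]
for _ in range(50):
    P = step(M, P)
print([float(p) for p in P], [float(p) for p in pi])
```

The exact check works because each neighbor i of j contributes pi(i)/d(i) = 1/(2m), and j has d(j) neighbors.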

2. Main parameters

We now formally introduce the measures of a random walk that play the most important role in the quantitative theory of random walks, already mentioned in the introduction.

(a) The access time or hitting time $H_{ij}$ is the expected number of steps before node $j$ is visited, starting from node $i$. The sum

$$\kappa(i, j) = H(i, j) + H(j, i)$$

is called the commute time: this is the expected number of steps in a random walk starting at $i$, before node $j$ is visited and then node $i$ is reached again. There is also a way to express access times in terms of commute times, due to Tetali [63]:

$$H(i, j) = \frac{1}{2} \left( \kappa(i, j) + \sum_u \pi(u) \left[ \kappa(u, j) - \kappa(u, i) \right] \right). \qquad (2.1)$$

This formula can be proved using either eigenvalues or the electrical resistance formulas (sections 3 and 4).

(b) The cover time (starting from a given distribution) is the expected number of steps to reach every node. If no starting node (starting distribution) is specified, we mean the worst case, i.e., the node from which the cover time is maximum.

(c) The mixing rate is a measure of how fast the random walk converges to its limiting distribution. This can be defined as follows. If the graph is non-bipartite, then $p^{(t)}_{ij} \to d_j/(2m)$ as $t \to \infty$, and the mixing rate is

$$\mu = \limsup_{t \to \infty} \max_{i,j} \left| p^{(t)}_{ij} - \frac{d_j}{2m} \right|^{1/t}.$$

(For a bipartite graph with bipartition $\{V_1, V_2\}$, the distribution of $v_t$ oscillates between "almost proportional to the degrees on $V_1$" and "almost proportional to the degrees on $V_2$". The results for bipartite graphs are similar, just a bit more complicated to state, so we ignore this case.)

One could define the notion of "mixing time" as the number of steps …
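Access times can be computed exactly on a small graph by first-step analysis: h(j) = 0 and h(i) = 1 + (1/d(i)) Σ h(k) over the neighbors k of i. The sketch below solves these linear equations with rational arithmetic and then checks formula (2.1) for every ordered pair. The path graph on four nodes is a hypothetical test case and the helper names are illustrative.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b exactly by Gauss-Jordan elimination (A assumed invertible)."""
    n = len(A)
    aug = [[Fraction(x) for x in row] + [Fraction(y)] for row, y in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        aug[col] = [x / aug[col][col] for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n] for row in aug]

def hitting_times(neighbors):
    """H[i][j] = expected number of steps to first reach j, starting from i."""
    n = len(neighbors)
    H = [[Fraction(0)] * n for _ in range(n)]
    for j in range(n):
        others = [i for i in range(n) if i != j]
        index = {v: k for k, v in enumerate(others)}
        A = [[Fraction(0)] * (n - 1) for _ in range(n - 1)]
        b = [Fraction(1)] * (n - 1)
        for i in others:
            A[index[i]][index[i]] += 1
            for k in neighbors[i]:
                if k != j:  # h(j) = 0, so that term drops out
                    A[index[i]][index[k]] -= Fraction(1, len(neighbors[i]))
        for i, h in zip(others, solve(A, b)):
            H[i][j] = h
    return H

# Hypothetical test graph: the path 0 - 1 - 2 - 3.
neighbors = [[1], [0, 2], [1, 3], [2]]
n = len(neighbors)
H = hitting_times(neighbors)
two_m = sum(len(nb) for nb in neighbors)
pi = [Fraction(len(nb), two_m) for nb in neighbors]
kappa = [[H[i][j] + H[j][i] for j in range(n)] for i in range(n)]

def tetali(i, j):
    """Right-hand side of formula (2.1)."""
    correction = sum(pi[u] * (kappa[u][j] - kappa[u][i]) for u in range(n))
    return (kappa[i][j] + correction) / 2

print(H[0][3])  # 9
print(all(tetali(i, j) == H[i][j] for i in range(n) for j in range(n)))  # True
```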

… length $n$. This leads to the recurrence

$$f(n) = f(n - 1) + (n - 1),$$

and through this, to the formula $f(n) = n(n - 1)/2$.

Example 2. As another example, let us determine the access times and cover times for a complete graph on nodes $\{0, \dots, n - 1\}$. Here of course we may assume that we start from 0, and to find the access times, it suffices to determine $H(0, 1)$. The probability that we first reach node 1 in the $t$-th step is clearly $\left(\frac{n-2}{n-1}\right)^{t-1} \frac{1}{n-1}$, and so the expected time this happens is

$$H(0, 1) = \sum_{t=1}^{\infty} t \left( \frac{n-2}{n-1} \right)^{t-1} \frac{1}{n-1} = n - 1.$$

The cover time for the complete graph is a little more interesting, and is closely related to the so-called Coupon Collector Problem (if you want to collect each of $n$ different coupons, and you get every day a random coupon in the mail, how long do you have to wait?). Let $\tau_i$ denote the first time when $i$ vertices have been visited. So $\tau_1 = 0 < \tau_2 = 1 < \tau_3 < \dots < \tau_n$. Now $\tau_{i+1} - \tau_i$ is the number of steps while we wait for a new vertex to occur (an event with probability $(n - i)/(n - 1)$, independently of the previous steps). Hence

$$E(\tau_{i+1} - \tau_i) = \frac{n-1}{n-i},$$

and so the cover time is

$$E(\tau_n) = \sum_{i=1}^{n-1} E(\tau_{i+1} - \tau_i) = \sum_{i=1}^{n-1} \frac{n-1}{n-i} \approx n \log n.$$

A graph with particularly bad random walk properties is obtained by taking a clique of size $n/2$ and attaching to it an endpoint of a path of length $n/2$. Let $i$ be any node of the clique and $j$, the "free" endpoint of the path. Then

$$H(i, j) = \Omega(n^3).$$
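The closed forms in this example are easy to evaluate exactly. The sketch below computes H(0, 1) from the first-step equation H = 1 + ((n−2)/(n−1)) H, which is equivalent to summing the series, evaluates the cover-time sum, and unrolls the recurrence f(n) = f(n−1) + (n−1). The base case f(1) = 0 is an assumption, since the excerpt does not state one.

```python
import math
from fractions import Fraction

def access_time_complete(n):
    """H(0, 1) on the complete graph K_n, from H = 1 + (n-2)/(n-1) * H."""
    miss = Fraction(n - 2, n - 1)  # probability that a step does not hit node 1
    return 1 / (1 - miss)

def cover_time_complete(n):
    """E(tau_n) = sum over i = 1..n-1 of (n-1)/(n-i)."""
    return sum(Fraction(n - 1, n - i) for i in range(1, n))

def f(n):
    """Unroll f(n) = f(n-1) + (n-1), assuming the base case f(1) = 0."""
    total = 0
    for k in range(2, n + 1):
        total += k - 1
    return total

print(access_time_complete(5))  # 4
print(cover_time_complete(5))   # 25/3
print(f(10), 10 * 9 // 2)       # 45 45

# Compare the exact cover time with n log n for a larger n.
n = 1000
print(float(cover_time_complete(n)), n * math.log(n))
```

The two numbers on the last line differ by a lower-order term (roughly 0.58 n), which is what the ≈ sign in the text allows for.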