Escape Problems & Stochastic Resonance 18.S995 - L05

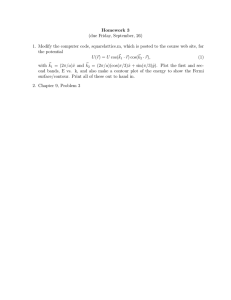


dunkel@mit.edu

1.4 Escape problem

Escape problems are ubiquitous in biological, biophysical and biochemical processes. Prominent examples include, but are not restricted to,

• unbinding of molecules from receptors,

• chemical reactions,

• transfer of ions through pores,

• evolutionary transitions between different fitness optima.

Their mathematical treatment typically involves models that are structurally very similar to the one-dimensional examples discussed in this section (footnote 13).

1.4.1 Generic minimal model

Consider the over-damped SDE

$$ dx(t) = -\partial_x U\, dt + \sqrt{2D} * dB(t) \tag{1.68a} $$

with a confining potential $U(x)$,

$$ \lim_{x \to \pm\infty} U(x) \to \infty, \tag{1.68b} $$

that has two (or more) minima and maxima. A typical example is the bistable quartic double well

$$ U(x) = -\frac{a}{2} x^2 + \frac{b}{4} x^4, \qquad a, b > 0. \tag{1.68c} $$
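To get a feeling for Eq. (1.68a), one can integrate it numerically. The following is a minimal sketch using the Euler-Maruyama scheme for the quartic double well (1.68c); the function name, the parameter values, the step size and the seed are illustrative assumptions, not part of the notes.

```python
import math
import random

def simulate_double_well(a=1.0, b=1.0, D=0.25, x0=-1.0,
                         dt=1e-3, n_steps=20000, seed=0):
    """Euler-Maruyama integration of dx = -U'(x) dt + sqrt(2 D) dB
    for U(x) = -a x^2/2 + b x^4/4. Returns the list of positions."""
    rng = random.Random(seed)
    x = x0
    traj = [x]
    noise_scale = math.sqrt(2.0 * D * dt)   # std. dev. of one noise increment
    for _ in range(n_steps):
        drift = a * x - b * x**3            # -U'(x)
        x = x + drift * dt + noise_scale * rng.gauss(0.0, 1.0)
        traj.append(x)
    return traj

# Start in the left minimum x = -sqrt(a/b) and record the path.
traj = simulate_double_well()
```

Counting sign changes of `traj` over a long run gives a rough empirical estimate of how often the particle crosses the barrier at x = 0.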

Examples (slide): transport & evolution as stochastic escape problems. Illustration by J.P. Cartailler. Copyright 2007, Symmation LLC. Source: http://evolutionarysystemsbiology.org/intro/

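Arrhenius law (slide)

Stochastic resonance (slide)

Gammaitoni et al., FIG. 1. Stochastic resonance in a symmetric double well. (a) Sketch of the double-well potential $V(x) = (1/4) b x^4 - (1/2) a x^2$. The minima are located at $\pm x_m$, where $x_m = (a/b)^{1/2}$. These are separated by a potential barrier with the height given by $\Delta V = a^2/(4b)$. The barrier top is located at $x_b = 0$. In the presence of periodic driving, the double-well potential $V(x,t) = V(x) - A_0 x \cos(\Omega t)$ is tilted back and forth, thereby raising and lowering successively the potential barriers of the right and the left well, respectively, in an antisymmetric ...

Video: https://www.youtube.com/watch?v=4pdiAneMMhU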

Paddle Fish (slides)

Freund et al (2002) J Theor Biol, FIG. 1. (a) A juvenile paddlefish. (b) Close-up view of clusters of electroreceptor pores on the rostrum.

Freund et al (2002) J Theor Biol, FIG. 2. (a) A single Daphnia. (b) Electric potential from a tethered Daphnia measured at a distance of 0.5 cm. The oscillations result from higher frequency feeding motions of the legs and lower frequency swimming motions of the antennae. (c) ...

Russell et al (1999) Nature, figure: three panels labelled Control, Optimal noise (0.5 µV cm^-1 r.m.s.) and High noise (20 µV cm^-1 r.m.s.), each plotting vertical distance (mm) against horizontal distance (mm) over the range -60 to 60 (left/right, above/below); the panels carry the sample sizes n=312, n=373 and n=46.

Freund et al (2002) J Theor Biol

Institut für Physik, Humboldt-Universität zu Berlin, Invalidenstr. 110, D-10115 Berlin, Germany; Center for Limnology, University of Wisconsin-Madison, Madison, WI 53706, U.S.A.; and Center for Neurodynamics, University of Missouri at St. Louis, St. Louis, MO 63121, U.S.A.

(Received on 21 May 2001, Accepted in revised form on 18 August 2001)

Zooplankton emit weak electric fields into the surrounding water that originate from their own muscular activities associated with swimming and feeding. Juvenile paddlefish prey upon single zooplankton by detecting and tracking these weak electric signatures. The passive electric sense in this fish is provided by an elaborate array of electroreceptors, Ampullae of Lorenzini, spread over the surface of an elongated rostrum. We have previously shown that the fish use stochastic resonance to enhance prey capture near the detection threshold of their sensory system. However, stochastic resonance requires an external source of electrical noise in order to function. A swarm of plankton, for example Daphnia, can provide the required noise. We hypothesize that juvenile paddlefish can detect and attack single Daphnia as outliers in the vicinity of the swarm by using noise from the swarm itself. From the power spectral density of the noise plus the weak signal from a single Daphnia, we calculate the signal-to-noise ratio, Fisher information and discriminability at the surface of the paddlefish's rostrum. The results predict a specific attack pattern for the paddlefish that appears to be experimentally testable.

© 2002 Academic Press


The quartic double well (1.68c) has minima at $x = \pm\sqrt{a/b}$.

Generally, we are interested in characterizing the transitions between neighboring minima in terms of a rate $k$ (units of time$^{-1}$) or, equivalently, by the typical time required for escaping from one of the minima. To this end, we shall first discuss the general structure of the time-dependent solution of the FPE (footnote 14) for the corresponding PDF $p(t,x)$, which reads

$$ \partial_t p = -\partial_x j, \qquad j(t,x) = -[(\partial_x U) p + D \partial_x p], \tag{1.68d} $$

and has the stationary zero-current ($j \equiv 0$) solution

$$ p_s(x) = \frac{e^{-U(x)/D}}{Z}, \qquad Z = \int_{-\infty}^{+\infty} dx\, e^{-U(x)/D}. \tag{1.69} $$

Footnote 13: Although things usually get more complicated in higher dimensions.

Footnote 14: FPEs for over-damped processes are sometimes referred to as Smoluchowski equations.
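As a quick numerical check of Eq. (1.69), the sketch below tabulates $p_s(x)$ for the quartic double well and evaluates the current $j$ of Eq. (1.68d) with central differences. The grid, the parameter values and the helper names are assumptions chosen for illustration; the current should vanish only up to discretization error.

```python
import math

def stationary_density(a=1.0, b=1.0, D=0.25, L=3.0, n=601):
    """Return grid xs, spacing h and p_s(x) = exp(-U/D)/Z on [-L, L]."""
    h = 2.0 * L / (n - 1)
    xs = [-L + i * h for i in range(n)]
    w = [math.exp(-(-0.5 * a * x**2 + 0.25 * b * x**4) / D) for x in xs]
    Z = h * (sum(w) - 0.5 * (w[0] + w[-1]))      # trapezoidal rule
    return xs, h, [wi / Z for wi in w]

def max_abs_current(xs, h, p, a=1.0, b=1.0, D=0.25):
    """Largest |j| on interior grid points, with j = -[(U') p + D p']."""
    worst = 0.0
    for i in range(1, len(xs) - 1):
        dU = -a * xs[i] + b * xs[i]**3
        dp = (p[i + 1] - p[i - 1]) / (2.0 * h)   # central difference
        worst = max(worst, abs(dU * p[i] + D * dp))
    return worst

xs, h, p = stationary_density()
norm = h * (sum(p) - 0.5 * (p[0] + p[-1]))       # should be 1 by construction
j_max = max_abs_current(xs, h, p)                # should be close to 0
```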


To find the time-dependent solution, we can make the ansatz

$$ p(t,x) = \varrho(t,x)\, e^{-U(x)/(2D)}, \tag{1.70} $$

which leads to a Schrödinger equation in imaginary time

$$ -\partial_t \varrho = \left[-D \partial_x^2 + W(x)\right] \varrho =: H \varrho, \tag{1.71a} $$

with an effective potential

$$ W(x) = \frac{1}{4D} (\partial_x U)^2 - \frac{1}{2} \partial_x^2 U. \tag{1.71b} $$

Assuming the Hamilton operator $H$ has a discrete non-degenerate spectrum, the general solution $p(t,x)$ may be written as

$$ p(t,x) = e^{-U(x)/(2D)} \sum_{n=0}^{\infty} c_n\, \phi_n(x)\, e^{-\lambda_n t}, \qquad \lambda_0 < \lambda_1 < \ldots, \tag{1.72a} $$

where the eigenfunctions $\phi_n$ of $H$ satisfy

$$ \int dx\, \phi_n^*(x)\, \phi_m(x) = \delta_{nm}, \tag{1.72b} $$

and the constants $c_n$ are determined by the initial conditions

$$ c_n = \int dx\, \phi_n^*(x)\, e^{U(x)/(2D)}\, p(0,x). \tag{1.72c} $$


At large times, $t \to \infty$, the solution (1.72a) must approach the stationary solution (1.69), implying that

$$ \lambda_0 = 0, \qquad c_0 = \frac{1}{\sqrt{Z}}, \qquad \phi_0(x) = \frac{e^{-U(x)/(2D)}}{\sqrt{Z}}. \tag{1.73} $$

Note that $\lambda_0 = 0$ in particular means that the first non-zero eigenvalue $\lambda_1 > 0$ dominates the relaxation dynamics at large times and, therefore,

$$ \tau_* = 1/\lambda_1 \tag{1.74} $$

is a natural measure of the escape time. In practice, the eigenvalue $\lambda_1$ can be computed by various standard methods (WKB approximation, Ritz method, techniques exploiting supersymmetry, etc.) depending on the specifics of the effective potential $W$.
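Besides the analytical methods listed above, $\lambda_1$ can also be estimated by brute force. The sketch below is one such possibility and not the method used in the notes: it discretizes $H$ from Eq. (1.71) with second-order finite differences for $U = -x^2/2 + x^4/4$ (that is, $a = b = 1$) and finds the two lowest eigenvalues of the resulting tridiagonal matrix by Sturm-sequence bisection. The grid size, the box length `L`, the Dirichlet boundary condition and $D = 0.1$ are assumptions.

```python
import math

def tridiagonal_H(D=0.1, L=3.0, n=601):
    """Finite-difference H = -D d^2/dx^2 + W(x) on [-L, L] (Dirichlet ends)
    for U = -x^2/2 + x^4/4. Returns (diagonal, off-diagonal)."""
    h = 2.0 * L / (n - 1)
    diag = []
    for i in range(1, n - 1):                    # interior points only
        x = -L + i * h
        dU, d2U = x**3 - x, 3.0 * x**2 - 1.0
        W = dU**2 / (4.0 * D) - 0.5 * d2U        # effective potential (1.71b)
        diag.append(2.0 * D / h**2 + W)
    off = [-D / h**2] * (len(diag) - 1)
    return diag, off

def count_below(diag, off, x):
    """Sturm count: number of eigenvalues strictly below x."""
    count, q = 0, diag[0] - x
    if q < 0.0:
        count += 1
    for i in range(1, len(diag)):
        if q == 0.0:
            q = 1e-200                           # avoid division by zero
        q = diag[i] - x - off[i - 1]**2 / q
        if q < 0.0:
            count += 1
    return count

def eigenvalue(diag, off, k, iters=100):
    """k-th smallest eigenvalue (k = 0, 1, ...) by bisection."""
    e = abs(off[0])
    lo, hi = min(diag) - 2.0 * e, max(diag) + 2.0 * e   # Gershgorin bounds
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if count_below(diag, off, mid) > k:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

D = 0.1
diag, off = tridiagonal_H(D)
lam0 = eigenvalue(diag, off, 0)   # expected close to 0, cf. Eq. (1.73)
lam1 = eigenvalue(diag, off, 1)   # first non-zero eigenvalue
tau_star = 1.0 / lam1             # escape-time measure, Eq. (1.74)
```

The value of `lam0` deviates from zero only through the discretization, which gives a simple handle on the accuracy of the grid.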

1.4.2 Two-state approximation

We next illustrate a commonly used simplified description of escape problems, which can be related to (1.74). As a specific example, we can again consider the escape of a particle from the left well of a symmetric quartic double-well potential

$$ U(x) = -\frac{a}{2} x^2 + \frac{b}{4} x^4, \qquad p(0,x) = \delta(x - x_-), \tag{1.75a} $$

where

$$ x_- = -\sqrt{a/b} \tag{1.75b} $$

is the location of the left minimum, but the general approach is applicable to other types of potentials as well.

The basic idea of the two-state approximation is to project the full FPE dynamics onto a simpler set of master equations by considering the probabilities $P_\pm(t)$ of the coarse-grained particle-states 'left well' ($-$) and 'right well' ($+$), defined by

$$ P_-(t) = \int_{-\infty}^{0} dx\, p(t,x), \tag{1.76a} $$

$$ P_+(t) = \int_{0}^{\infty} dx\, p(t,x). \tag{1.76b} $$

If all particles start in the left well, then

$$ P_-(0) = 1, \qquad P_+(0) = 0. \tag{1.77} $$

Whilst the exact dynamics of $P_\pm(t)$ is governed by the FPE (1.68d), the two-state approximation assumes that this dynamics can be approximated by the set of master equations (footnote 15)

$$ \dot P_- = -k_+ P_- + k_- P_+, \qquad \dot P_+ = k_+ P_- - k_- P_+. \tag{1.78} $$


For a symmetric potential, $U(x) = U(-x)$, forward and backward rates are equal, $k_+ = k_- = k$, and in this case, the solution of Eq. (1.78) is given by

$$ P_\pm(t) = \frac{1}{2} \mp \frac{1}{2} e^{-2kt}. \tag{1.79} $$

For comparison, from the FPE solution (1.72a), we find in the long-time limit

$$ p(t,x) \simeq p_s(x) + c_1\, e^{-U(x)/2D}\, \phi_1(x)\, e^{-\lambda_1 t}. \tag{1.80} $$

Due to the symmetry of $p_s(x)$, we then have

$$ P_-(t) \simeq \frac{1}{2} + C_1 e^{-\lambda_1 t}. \tag{1.81a} $$

Footnote 15: Note that Eqs. (1.78) conserve the total probability, $P_-(t) + P_+(t) = 1$.


The coefficient $C_1$ in Eq. (1.81a) is given by

$$ C_1 = c_1 \int_{-\infty}^{0} dx\, e^{-U(x)/2D}\, \phi_1(x), \qquad c_1 = \phi_1^*(x_-)\, e^{U(x_-)/(2D)}. \tag{1.81b} $$

Since Eq. (1.81a) neglects higher-order eigenfunctions, $C_1$ is in general not exactly equal but usually close to $1/2$. But, by comparing the time-dependence of (1.81a) and (1.79), it is natural to identify

$$ k \simeq \frac{\lambda_1}{2} = \frac{1}{2\tau_*}. \tag{1.82} $$

We next discuss, by considering a slightly different setting, how one can obtain an explicit result for the rate $k$ in terms of the parameters of the potential $U$.
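The two-state solution (1.79) is easy to confirm numerically. The sketch below integrates the master equations (1.78) with a standard fourth-order Runge-Kutta step and compares the result with the closed form; the rate value, time horizon and step size are arbitrary illustrative choices.

```python
import math

def integrate_two_state(k_plus, k_minus, t_end, dt=1e-3, P_minus0=1.0):
    """Integrate Eqs. (1.78) with a fourth-order Runge-Kutta scheme.
    Returns (P_minus, P_plus) at time t_end."""
    def rhs(Pm, Pp):
        dPm = -k_plus * Pm + k_minus * Pp
        return dPm, -dPm                       # total probability is conserved
    Pm, Pp = P_minus0, 1.0 - P_minus0
    for _ in range(int(round(t_end / dt))):
        a1, b1 = rhs(Pm, Pp)
        a2, b2 = rhs(Pm + 0.5 * dt * a1, Pp + 0.5 * dt * b1)
        a3, b3 = rhs(Pm + 0.5 * dt * a2, Pp + 0.5 * dt * b2)
        a4, b4 = rhs(Pm + dt * a3, Pp + dt * b3)
        Pm += dt * (a1 + 2 * a2 + 2 * a3 + a4) / 6.0
        Pp += dt * (b1 + 2 * b2 + 2 * b3 + b4) / 6.0
    return Pm, Pp

k, t = 0.3, 2.0
Pm_num, Pp_num = integrate_two_state(k, k, t)   # symmetric case k+ = k- = k
Pm_exact = 0.5 + 0.5 * math.exp(-2.0 * k * t)   # Eq. (1.79), lower sign
Pp_exact = 0.5 - 0.5 * math.exp(-2.0 * k * t)   # Eq. (1.79), upper sign
```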


1.4.3 Constant-current solution

Consider a bistable potential as in Eq. (1.75), but now with a particle source at $x_0 < x_- < 0$ and a sink at $x_1 > x_b = 0$. Assuming that particles are injected at $x_0$ at constant flux $j(t,x) \equiv J = \text{const}$, the escape rate can be defined by

$$ k := \frac{J}{P_-}, \tag{1.83} $$

with $P_-$ denoting the probability of being in the left well, as defined in Eq. (1.76a) above. To compute the rate from Eq. (1.83), we need to find a stationary constant flux solution $p_J(x)$ of Eq. (1.68d), satisfying $p_J(x_1) = 0$ and

$$ J = -(\partial_x U)\, p_J - D \partial_x p_J \tag{1.84} $$

for some constant $J$. This solution is given by [HTB90]

$$ p_J(x) = \frac{J}{D}\, e^{-U(x)/D} \int_x^{x_1} dy\, e^{U(y)/D}, \tag{1.85} $$

as one can verify by differentiation

$$
\begin{aligned}
-(\partial_x U)\, p_J - D \partial_x p_J
&= -(\partial_x U)\, p_J - D \partial_x \left[ \frac{J}{D}\, e^{-U(x)/D} \int_x^{x_1} dy\, e^{U(y)/D} \right] \\
&= -(\partial_x U)\, p_J - \left[ -(\partial_x U)\, \frac{J}{D}\, e^{-U(x)/D} \int_x^{x_1} dy\, e^{U(y)/D} - J \right] \\
&= J.
\end{aligned}
\tag{1.86}
$$
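The verification in Eq. (1.86) can be repeated numerically. The following sketch evaluates $p_J(x)$ from Eq. (1.85) on a grid for $U = -x^2/2 + x^4/4$ and checks that the left-hand side of Eq. (1.84) returns the prescribed flux. The sink position `x1 = 1`, the left end of the grid, $D = 0.25$ and $J = 1$ are assumptions made for the example.

```python
import math

def U(x):
    return -0.5 * x**2 + 0.25 * x**4

def dU(x):
    return -x + x**3

def constant_flux_density(J=1.0, D=0.25, x_left=-2.0, x1=1.0, n=3001):
    """Evaluate p_J(x) of Eq. (1.85) on a grid; the inner integral
    int_x^{x1} exp(U/D) dy is accumulated from the sink backwards."""
    h = (x1 - x_left) / (n - 1)
    xs = [x_left + i * h for i in range(n)]
    g = [math.exp(U(x) / D) for x in xs]
    tail = [0.0] * n                       # tail[i] = int_{x_i}^{x1} g dy
    for i in range(n - 2, -1, -1):
        tail[i] = tail[i + 1] + 0.5 * h * (g[i] + g[i + 1])
    pJ = [(J / D) * math.exp(-U(x) / D) * t for x, t in zip(xs, tail)]
    return xs, h, pJ

def flux(xs, h, pJ, D=0.25):
    """Left-hand side of Eq. (1.84) at interior points (central differences)."""
    return [-dU(xs[i]) * pJ[i] - D * (pJ[i + 1] - pJ[i - 1]) / (2.0 * h)
            for i in range(1, len(xs) - 1)]

xs, h, pJ = constant_flux_density()
J_num = flux(xs, h, pJ)                    # should be close to J = 1 everywhere
```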

Therefore, the inverse rate $k^{-1}$ from Eq. (1.83) can be formally expressed as

$$ k^{-1} = \frac{P_-}{J} = \frac{1}{D} \int_{-\infty}^{x_1} dx\, e^{-U(x)/D} \int_x^{x_1} dy\, e^{U(y)/D}, \tag{1.87} $$

and a partial integration yields the equivalent representation

$$ k^{-1} = \frac{1}{D} \int_{-\infty}^{x_1} dx\, e^{U(x)/D} \int_{-\infty}^{x} dy\, e^{-U(y)/D}. \tag{1.88} $$

Assuming a sufficiently steep barrier, the integrals in Eq. (1.88) may be evaluated by adopting steepest descent approximations near the potential minimum at $x_-$ and near the maximum at the barrier position $x_b$. More precisely, taking into account that $U'(x_-) = U'(x_b) = 0$, one can replace the potentials in the exponents by the harmonic approximations

$$ U(x) \simeq U(x_b) - \frac{1}{2\tau_b} (x - x_b)^2, \tag{1.89a} $$

$$ U(y) \simeq U(x_-) + \frac{1}{2\tau_-} (y - x_-)^2, \tag{1.89b} $$

where

$$ \tau_- = \frac{1}{U''(x_-)} > 0, \qquad \tau_b = -\frac{1}{U''(x_b)} > 0 \tag{1.90} $$

carry units of time. Inserting (1.89) into (1.88) and replacing the upper integral boundaries by $+\infty$, one thus obtains the so-called Kramers rate [HTB90, GHJM98]

$$ k \simeq \frac{e^{-\Delta U/D}}{2\pi \sqrt{\tau_- \tau_b}} =: k_K, \qquad \Delta U = U(x_b) - U(x_-). \tag{1.91} $$

This result agrees with the well-known empirical Arrhenius law. Note that, because typically $D \propto k_B T$ for thermal noise, binding/unbinding rates depend sensitively on temperature; this is one of the reasons why many organisms tend to function properly only within a limited temperature range.
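To see how good the steepest descent step is, one can compare the Kramers rate (1.91) with a direct quadrature of Eq. (1.88). The sketch below does this for the quartic double well with $a = b = 1$, for which $\tau_- = 1/(2a)$, $\tau_b = 1/a$ and $\Delta U = a^2/(4b)$. The sink position `x1 = 1`, the finite lower cutoff `x_left` that stands in for $-\infty$, and $D = 0.1$ are assumptions; the two rates are expected to agree only to leading order in $D$.

```python
import math

def U(x):
    return -0.5 * x**2 + 0.25 * x**4           # a = b = 1

def kramers_rate(D, a=1.0, b=1.0):
    """Eq. (1.91) for the quartic double well, with
    tau_minus = 1/U''(x_-) = 1/(2a) and tau_b = -1/U''(x_b) = 1/a."""
    tau_minus, tau_b = 1.0 / (2.0 * a), 1.0 / a
    barrier = a**2 / (4.0 * b)                 # Delta U
    return math.exp(-barrier / D) / (2.0 * math.pi * math.sqrt(tau_minus * tau_b))

def rate_from_integral(D, x_left=-3.0, x1=1.0, n=4001):
    """Trapezoidal evaluation of Eq. (1.88); x_left stands in for -infinity."""
    h = (x1 - x_left) / (n - 1)
    xs = [x_left + i * h for i in range(n)]
    inner, outer = 0.0, 0.0                    # running inner and outer integrals
    prev_f = 0.0                               # outer integrand at the previous x
    for i in range(1, n):
        inner += 0.5 * h * (math.exp(-U(xs[i - 1]) / D) + math.exp(-U(xs[i]) / D))
        f = math.exp(U(xs[i]) / D) * inner
        outer += 0.5 * h * (prev_f + f)
        prev_f = f
    return D / outer                           # k = 1 / k^{-1}

D = 0.1
k_K = kramers_rate(D)
k_num = rate_from_integral(D)
```

Repeating the comparison for smaller `D` shows how the harmonic approximation behaves as the barrier becomes large compared with the noise strength.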


1.5 Stochastic resonance

Noise typically impairs signal transduction, but under certain conditions randomness may actually help to enhance weak signals [GHJM98]. This phenomenon is known as stochastic resonance (SR). Whilst SR was originally proposed as a possible explanation for periodically recurring climate cycles [NN81, BPS...], experiments suggest [FSGB+02] that some organisms like juvenile paddlefish might use SR to enhance signal detection while foraging for food.

The occurrence of SR requires three main 'ingredients':

1. a nonlinear measurement device (footnote 17),

2. a periodic signal weaker than the threshold of the measurement device,

3. additional input noise, uncorrelated with the signal of interest.

Footnote 17: That is, the input-output relationship between the input signal and the observable must be nonlinear.

To provide some intuition, assume that a weak periodic signal (frequency $\Omega$) is detected by a particle that can move in the bistable double-well potential (1.75). For weak noise, the particle will remain trapped in one of the minima and we will be unable to infer the signal from the particle's motion. Similarly, not much information about the underlying signal can be gained if the noise is too strong, for in this case the particle will jump randomly back and forth between the minima. If, however, the noise strength is tuned such that the Kramers escape rate (1.91) is of the order of the driving frequency,

$$ k_K \sim \Omega, \tag{1.92} $$

then it is plausible to expect that the particle's escape dynamics will be closely correlated with the driving frequency, thus exhibiting SR.

1.5.1 Generic model

To illustrate SR more quantitatively, consider the periodically driven SDE

$$ dX(t) = -\partial_x U\, dt + A \cos(\Omega t)\, dt + \sqrt{2D} * dB(t), \tag{1.93a} $$

where $A$ is the signal amplitude and

$$ U(x) = -\frac{a}{2} x^2 + \frac{b}{4} x^4 \tag{1.93b} $$

a symmetric double-well potential with minima at $\pm x_* = \pm\sqrt{a/b}$ and barrier height $\Delta U = a^2/(4b)$. Introducing rescaled variables

$$ x' = x/x_*, \qquad t' = a t, \qquad A' = A/(a x_*), \qquad D' = D/(a x_*^2), \qquad \Omega' = \Omega/a, $$

and dropping primes, we can rewrite (1.93a) in the dimensionless form

$$ dX(t) = (x - x^3)\, dt + A \cos(\Omega t)\, dt + \sqrt{2D} * dB(t), \tag{1.93c} $$

with a rescaled barrier height $\Delta U = 1/4$. The associated FPE reads

$$ \partial_t p = -\partial_x \{ [-(\partial_x U) + A \cos(\Omega t)]\, p - D \partial_x p \}. \tag{1.94} $$

For our subsequent discussion, it is useful to rearrange terms on the rhs. as

$$ \partial_t p = \partial_x [(\partial_x U)\, p + D \partial_x p] - A \cos(\Omega t)\, \partial_x p. \tag{1.95} $$
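The rescaling that leads to Eq. (1.93c) can be checked with a few lines of arithmetic. The sketch below computes the primed parameters for one arbitrary, purely illustrative choice of $a$, $b$, $A$, $D$ and $\Omega$, and confirms that the barrier height becomes $1/4$ when energies are measured in units of $a x_*^2$, the same unit that appears in $D' = D/(a x_*^2)$.

```python
import math

def rescale(a, b, A, D, Omega):
    """Dimensionless parameters (A', D', Omega') of Eq. (1.93c)."""
    x_star = math.sqrt(a / b)                  # position of the minima
    return A / (a * x_star), D / (a * x_star**2), Omega / a

def U_dimensional(x, a, b):
    """Double-well potential of Eq. (1.93b) in original units."""
    return -0.5 * a * x**2 + 0.25 * b * x**4

a, b = 2.0, 0.5
x_star = math.sqrt(a / b)
barrier = U_dimensional(0.0, a, b) - U_dimensional(x_star, a, b)   # a^2/(4b)
barrier_rescaled = barrier / (a * x_star**2)                       # expected 1/4
A_p, D_p, Omega_p = rescale(a, b, A=0.4, D=0.5, Omega=0.2)
```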


To solve Eq. (1.95) perturbatively, we insert the series ansatz

$$ p(t,x) = \sum_{n=0}^{\infty} A^n p_n(t,x), \tag{1.96} $$

which gives

$$ \sum_{n=0}^{\infty} A^n \partial_t p_n = \sum_{n=0}^{\infty} \left\{ A^n \partial_x [(\partial_x U)\, p_n + D \partial_x p_n] - A^{n+1} \cos(\Omega t)\, \partial_x p_n \right\}. \tag{1.97} $$

Focussing on the linear response regime, corresponding to powers $A^0$ and $A^1$, we find

$$ \partial_t p_0 = \partial_x [(\partial_x U)\, p_0 + D \partial_x p_0], \tag{1.98a} $$

$$ \partial_t p_1 = \partial_x [(\partial_x U)\, p_1 + D \partial_x p_1] - \cos(\Omega t)\, \partial_x p_0. \tag{1.98b} $$

Equation (1.98a) is just an ordinary time-independent FPE, and we know its stationary solution is just the Boltzmann distribution

$$ p_0(x) = \frac{e^{-U(x)/D}}{Z_0}, \qquad Z_0 = \int dx\, e^{-U(x)/D}. \tag{1.99} $$

To obtain a formal solution to Eq. (1.98b), we make use of the following ansatz


$$ p_1(t,x) = e^{-U(x)/(2D)} \sum_{m=1}^{\infty} a_{1m}(t)\, \phi_m(x), \tag{1.100} $$

where $\phi_m(x)$ are the eigenfunctions of the unperturbed effective Hamiltonian, cf. Eq. (1.71),

$$ H_0 = -D \partial_x^2 + \frac{1}{4D} (\partial_x U)^2 - \frac{1}{2} \partial_x^2 U. \tag{1.101} $$

Inserting (1.100) into Eq. (1.98b) gives

$$ \sum_{m=1}^{\infty} \dot a_{1m}\, \phi_m = -\sum_{m=1}^{\infty} \lambda_m a_{1m}\, \phi_m - \cos(\Omega t)\, e^{U(x)/(2D)}\, \partial_x p_0. \tag{1.102} $$

Multiplying this equation by $\phi_n(x)$, and integrating from $-\infty$ to $+\infty$ while exploiting the orthonormality of the system $\{\phi_m\}$, we obtain the coupled ODEs

$$ \dot a_{1m} = -\lambda_m a_{1m} - M_{m0} \cos(\Omega t), \tag{1.103} $$

with 'transition matrix' elements

$$ M_{m0} = \int dx\, \phi_m\, e^{U(x)/(2D)}\, \partial_x p_0. \tag{1.104} $$


The asymptotic solution of Eq. (1.103) reads

$$ a_{1m}(t) = -M_{m0} \left[ \frac{\Omega}{\lambda_m^2 + \Omega^2} \sin(\Omega t) + \frac{\lambda_m}{\lambda_m^2 + \Omega^2} \cos(\Omega t) \right]. \tag{1.105} $$

Note that, because $\partial_x p_0$ is an antisymmetric function, the matrix elements $M_{m0}$ vanish (footnote 18) for even values $m = 0, 2, 4, \ldots$, so that only the contributions from odd values $m = 1, 3, 5, \ldots$ are asymptotically relevant.

Focussing on the leading order contribution, m = 1, and noting that p0 (x) = p0 ( x),

we can estimate the position mean value

Z

E[X(t)] = dx p(t, x) x

(1.106)

from

$$
\begin{aligned}
E[X(t)] &\simeq A \int dx\, p_1(t,x)\, x \\
&\simeq A \int dx\, e^{-U(x)/(2D)}\, a_{11}(t)\, \phi_1(x)\, x \\
&= -A M_{10}\left[\frac{\Omega}{\lambda_1^2+\Omega^2}\,\sin(\Omega t) + \frac{\lambda_1}{\lambda_1^2+\Omega^2}\,\cos(\Omega t)\right] \int dx\, e^{-U(x)/(2D)}\, \phi_1(x)\, x .
\end{aligned}
$$

Using $\lambda_1 = 2k_K$, where $k_K$ is the Kramers rate from Eq. (1.91), we can rewrite this more compactly as

$$
E[X(t)] = \bar X \cos(\Omega t - \varphi) \tag{1.107a}
$$

with phase shift

$$
\varphi = \arctan\left(\frac{\Omega}{2k_K}\right) \tag{1.107b}
$$

and amplitude

$$
\bar X = -\frac{M_{10}\, A}{(4k_K^2 + \Omega^2)^{1/2}} \int dx\, e^{-U(x)/(2D)}\, \phi_1(x)\, x. \tag{1.107c}
$$

The amplitude $\bar X$ depends on the noise strength $D$ through $k_K$, through the integral factor, and also through the matrix element

$$
M_{10} = \int dx\, \phi_1\, e^{U(x)/(2D)}\, \partial_x p_0. \tag{1.108}
$$
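The step from the sine-cosine form of $E[X(t)]$ to the amplitude-phase form (1.107) relies on the harmonic addition identity. A short worked version, with $\varphi$ defined through its sine and cosine:

```latex
\lambda_1\cos(\Omega t) + \Omega\sin(\Omega t)
  = \sqrt{\lambda_1^2+\Omega^2}\,\bigl[\cos\varphi\,\cos(\Omega t) + \sin\varphi\,\sin(\Omega t)\bigr]
  = \sqrt{\lambda_1^2+\Omega^2}\,\cos(\Omega t - \varphi),
\qquad
\cos\varphi = \frac{\lambda_1}{\sqrt{\lambda_1^2+\Omega^2}},\quad
\sin\varphi = \frac{\Omega}{\sqrt{\lambda_1^2+\Omega^2}},\quad
\tan\varphi = \frac{\Omega}{\lambda_1}.
```

Dividing by $\lambda_1^2 + \Omega^2$ and setting $\lambda_1 = 2k_K$ yields the prefactor $(4k_K^2+\Omega^2)^{-1/2}$ in (1.107c) and the phase shift (1.107b).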


Footnote 18: The potential $U(x)$ is symmetric and, therefore, the effective Hamiltonian $H_0$ commutes with the parity operator, implying that the eigenfunctions $\phi_{2k}$ are symmetric under $x \mapsto -x$, whereas the eigenfunctions $\phi_{2k+1}$ are antisymmetric under this map.

To compute $\bar X$, one first needs to determine the eigenfunction $\phi_1$ of $H_0$ as defined in Eq. (1.101). For the quartic double-well potential (1.93b), this cannot be done analytically, but there exist standard techniques (e.g., the Ritz method) for approximating $\phi_1$ by functions that are orthogonal to $\phi_0 = \sqrt{p_0}$. Depending on the method employed, analytical estimates for $\bar X$ may vary quantitatively but always show a non-monotonic dependence on the noise strength $D$ for fixed potential parameters $(a, b)$. As discussed in [GHJM98], a reasonably accurate estimate for $\bar X$ is given by

$$
\bar X \simeq \frac{A a}{D b}\left(\frac{4k_K^2}{4k_K^2+\Omega^2}\right)^{1/2}, \tag{1.109}
$$

which exhibits a maximum for a critical value $D_*$ determined by

$$
4k_K^2 = \Omega^2\left(\frac{\Delta U}{D_*} - 1\right). \tag{1.110}
$$

That is, the value $D_*$ corresponds to the optimal noise strength, for which the mean value $E[X(t)]$ shows maximal response to the underlying periodic signal, hence the name 'stochastic resonance' (SR).
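The optimal noise strength can be located numerically. The sketch below solves condition (1.110) by bisection and evaluates the estimate (1.109). Eq. (1.91) is not reproduced here, so the code assumes the standard overdamped Kramers form $k_K = \frac{a}{\sqrt{2}\pi}\, e^{-\Delta U/D}$ with $\Delta U = a^2/(4b)$ for the double well $U(x) = -a x^2/2 + b x^4/4$. The function names and all parameter values are hypothetical.

```python
import math

def kramers_rate(D, a=1.0, b=1.0):
    """Assumed Kramers rate for the quartic double well (standard overdamped form)."""
    delta_U = a * a / (4.0 * b)                 # barrier height
    prefactor = a / (math.sqrt(2.0) * math.pi)  # sqrt(U''(x_*) |U''(0)|) / (2 pi)
    return prefactor * math.exp(-delta_U / D)

def amplitude(D, A=0.1, Omega=0.1, a=1.0, b=1.0):
    """Amplitude estimate (1.109) as a function of the noise strength D."""
    k = kramers_rate(D, a, b)
    return (A * a) / (D * b) * math.sqrt(4.0 * k * k / (4.0 * k * k + Omega**2))

def optimal_noise(Omega=0.1, a=1.0, b=1.0, tol=1e-12):
    """Solve 4 k_K^2 = Omega^2 (Delta U / D - 1), Eq. (1.110), by bisection."""
    delta_U = a * a / (4.0 * b)

    def f(D):
        k = kramers_rate(D, a, b)
        return 4.0 * k * k - Omega**2 * (delta_U / D - 1.0)

    # f < 0 for very small D and f > 0 at D = Delta U, so the root is bracketed.
    lo, hi = 1e-3 * delta_U, delta_U
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    D_star = optimal_noise()
    print("D_* =", D_star, " amplitude at D_* =", amplitude(D_star))
```

Because the assumed prefactor of $k_K$ does not depend on $D$, the root of (1.110) coincides with the maximum of (1.109). Evaluating `amplitude` slightly below and above the returned value is a simple way to confirm this.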

1.5.2

Master equation approach


Similar to the case of the escape problem, one can obtain an alternative description of SR by projecting the full FPE dynamics onto a simpler set of master equations for the probabilities $P_\pm(t)$ of the coarse-grained particle states 'left well' ($-$) and 'right well' ($+$), as defined by Eq. (1.76). This approach leads to the following two-state master equations with time-dependent rates

$$
\dot P_-(t) = -k_+(t)\, P_- + k_-(t)\, P_+, \tag{1.111a}
$$

$$
\dot P_+(t) = k_+(t)\, P_- - k_-(t)\, P_+. \tag{1.111b}
$$

The general solution of this pair of ODEs is given by [GHJM98]

$$
P_\pm(t) = g(t)\left[P_\pm(t_0) + \int_{t_0}^{t} ds\, \frac{k_\pm(s)}{g(s)}\right] \tag{1.112a}
$$

where

$$
g(t) = \exp\left\{-\int_{t_0}^{t} ds\, \bigl[k_+(s) + k_-(s)\bigr]\right\}. \tag{1.112b}
$$
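The general solution (1.112) follows from a standard integrating-factor argument. A brief sketch, assuming the two probabilities are normalized, $P_+ + P_- = 1$ (the master equations (1.111) conserve this sum):

```latex
\dot P_\pm = k_\pm - (k_+ + k_-)\,P_\pm ,
\qquad
\dot g = -(k_+ + k_-)\,g , \quad g(t_0) = 1
\quad\Longrightarrow\quad
\frac{d}{dt}\!\left(\frac{P_\pm}{g}\right) = \frac{k_\pm}{g}
\quad\Longrightarrow\quad
\frac{P_\pm(t)}{g(t)} - P_\pm(t_0) = \int_{t_0}^{t} ds\, \frac{k_\pm(s)}{g(s)} .
```

Multiplying the last relation by $g(t)$ reproduces Eq. (1.112a).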

To discuss SR within this framework, it is plausible to postulate time-dependent Arrhenius-type rates,

$$
k_\pm(t) = k_K \exp\left[\pm\frac{A x_*}{D}\cos(\Omega t)\right]. \tag{1.113}
$$

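Adopting these rates and considering the asymptotic limit $t_0 \to -\infty$, one can Taylor-expand the rates for $A x_* \ll D$,

$$
k_\pm(t) = k_K\left[1 \pm \frac{A x_*}{D}\cos(\Omega t) + \frac{1}{2}\left(\frac{A x_*}{D}\right)^2\cos^2(\Omega t) \pm \ldots\right], \tag{1.114}
$$

and insert the expansion into the exact solution (1.112).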

These approximations are valid for slow driving (adiabatic regime), and they allow us to compute expectation values to leading order in $A x_*/D$. In particular, one then finds for the mean position the asymptotic linear response result [GHJM98]

$$
E[X(t)] = \bar X\cos(\Omega t - \varphi) \tag{1.115a}
$$

where

$$
\bar X = \frac{A x_*^2}{D}\left(\frac{4k_K^2}{4k_K^2+\Omega^2}\right)^{1/2}, \qquad \varphi = \arctan\left(\frac{\Omega}{2k_K}\right) \tag{1.115b}
$$

with $k_K$ denoting the Kramers rate as defined in Eq. (1.91). Note that Eqs. (1.115) are consistent with our earlier result (1.107).
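The linear-response amplitude (1.115b) can be compared with a direct numerical integration of the two-state master equations (1.111) using the rates (1.113). The sketch below assumes $P_+ + P_- = 1$ and $E[X] \approx x_*(P_+ - P_-)$, and it integrates the difference $n = P_+ - P_-$ with a classical Runge-Kutta scheme. The Kramers rate is passed in directly as a parameter rather than computed from Eq. (1.91). The function names, the shorthand `z` for $A x_*/D$, and all numerical values are hypothetical.

```python
import math

def simulate_amplitude(k_K=0.05, Omega=0.1, z=0.05, x_star=1.0,
                       n_periods=20, dt=0.05):
    """Late-time amplitude of x_* (P_+ - P_-) from RK4 integration of (1.111)."""
    def rhs(t, n):
        k_plus = k_K * math.exp(+z * math.cos(Omega * t))   # rate (1.113), '+' sign
        k_minus = k_K * math.exp(-z * math.cos(Omega * t))  # rate (1.113), '-' sign
        # d(P_+ - P_-)/dt from (1.111), using P_+ + P_- = 1
        return (k_plus - k_minus) - (k_plus + k_minus) * n

    period = 2.0 * math.pi / Omega
    steps = int(n_periods * period / dt)
    t, n, peak = 0.0, 0.0, 0.0
    for _ in range(steps):
        k1 = rhs(t, n)
        k2 = rhs(t + 0.5 * dt, n + 0.5 * dt * k1)
        k3 = rhs(t + 0.5 * dt, n + 0.5 * dt * k2)
        k4 = rhs(t + dt, n + dt * k3)
        n += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        t += dt
        if t > (n_periods - 1) * period:  # record the peak over the last period only
            peak = max(peak, abs(n))
    return x_star * peak

def linear_response_amplitude(k_K=0.05, Omega=0.1, z=0.05, x_star=1.0):
    """Amplitude (1.115b), written with z = A x_* / D so that A x_*^2 / D = z x_*."""
    return z * x_star * math.sqrt(4.0 * k_K**2 / (4.0 * k_K**2 + Omega**2))

if __name__ == "__main__":
    print("simulated      :", simulate_amplitude())
    print("linear response:", linear_response_amplitude())
```

For small `z` the two numbers should agree up to corrections of relative order `z**2`. Increasing `z` makes the deviation from linear response visible.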

1.6

Brownian motors

Many biophysical processes, from muscular contractions to self-propulsion of microorganisms or intracellular transport, rely on biological motors. These are, roughly speaking,