The Identification Zoo: Meanings of Identification in Econometrics

Arthur Lewbel

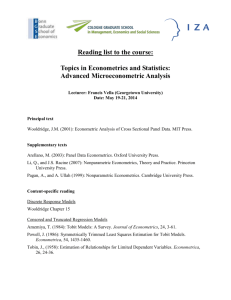
