Some Remarks on Sufficiency & Completeness Sufficiency Remarks 1. If X

advertisement

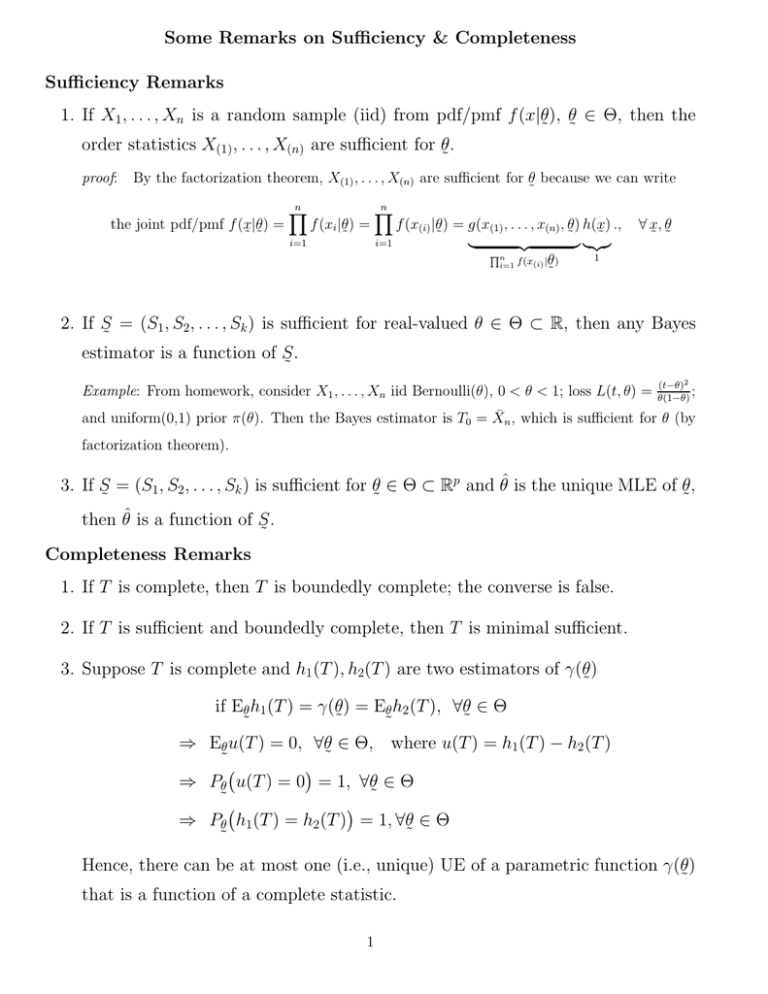

Some Remarks on Sufficiency & Completeness

Sufficiency Remarks

1. If $X_1, \dots, X_n$ is a random sample (iid) from a pdf/pmf $f(x \mid \boldsymbol{\theta})$, $\boldsymbol{\theta} \in \Theta$, then the order statistics $X_{(1)}, \dots, X_{(n)}$ are sufficient for $\boldsymbol{\theta}$.

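Proof: By the factorization theorem, $(X_{(1)}, \dots, X_{(n)})$ is sufficient for $\boldsymbol{\theta}$ because, for every $\mathbf{x}$ and every $\boldsymbol{\theta}$, the joint pdf/pmf can be written as

$$f(\mathbf{x} \mid \boldsymbol{\theta}) = \prod_{i=1}^{n} f(x_i \mid \boldsymbol{\theta}) = \underbrace{\prod_{i=1}^{n} f(x_{(i)} \mid \boldsymbol{\theta})}_{g(x_{(1)}, \dots, x_{(n)},\, \boldsymbol{\theta})} \cdot \underbrace{1}_{h(\mathbf{x})}.$$

The second equality holds because $(x_{(1)}, \dots, x_{(n)})$ is a rearrangement of $(x_1, \dots, x_n)$, and a product does not depend on the order of its factors.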
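As a numerical sanity check of this argument, the sketch below evaluates the log-likelihood of an iid sample and of its sorted version (the observed order statistics) at a few parameter values and confirms that they coincide. The Exponential(rate) model and the sample values are assumptions chosen only for illustration.

```python
import math

def exp_loglik(sample, rate):
    """Log-likelihood of an iid Exponential(rate) sample: sum of log(rate) - rate * x_i."""
    # math.fsum is correctly rounded, so the result does not depend on term order
    return math.fsum(math.log(rate) - rate * x for x in sample)

# Hypothetical observed sample (not from the notes)
sample = [2.7, 0.4, 1.9, 0.05, 3.3]
order_stats = sorted(sample)  # x_(1) <= x_(2) <= ... <= x_(n)

for rate in (0.1, 0.5, 1.0, 2.0):
    same = exp_loglik(sample, rate) == exp_loglik(order_stats, rate)
    print(f"rate={rate}: likelihood unchanged by sorting -> {same}")
```

Any other iid model would do; the point is only that the product (or sum of logs) is invariant under reordering the observations.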

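2. If $\mathbf{S} = (S_1, S_2, \dots, S_k)$ is sufficient for a real-valued parameter $\theta \in \Theta \subset \mathbb{R}$, then any Bayes estimator is a function of $\mathbf{S}$. (By the factorization theorem, the posterior $\pi(\theta \mid \mathbf{x}) \propto g(\mathbf{S}(\mathbf{x}), \theta)\, \pi(\theta)$ depends on the data only through $\mathbf{S}$.)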

Example: From homework, consider $X_1, \dots, X_n$ iid Bernoulli($\theta$), $0 < \theta < 1$, with loss $L(t, \theta) = \dfrac{(t-\theta)^2}{\theta(1-\theta)}$ and a uniform(0,1) prior $\pi(\theta)$. Then the Bayes estimator is $T_0 = \bar{X}_n$, which is sufficient for $\theta$ (by the factorization theorem).
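Under a weighted squared-error loss $w(\theta)(t-\theta)^2$, minimizing the posterior expected loss gives $t^* = E[\theta\, w(\theta) \mid \mathbf{x}] / E[w(\theta) \mid \mathbf{x}]$. Here $w(\theta) = 1/[\theta(1-\theta)]$ and, with the uniform prior, the posterior is proportional to $\theta^{s}(1-\theta)^{n-s}$ with $s = \sum x_i$, so the data enter only through $s$. The sketch below approximates both integrals with a midpoint rule; the grid size and the data are illustrative assumptions, and it assumes $0 < s < n$ so that both integrals are finite.

```python
def bayes_estimate(xs, grid=200_000):
    """Bayes estimate of theta for iid Bernoulli data, uniform(0,1) prior,
    loss (t - theta)^2 / (theta * (1 - theta)).

    Minimizer of posterior expected weighted loss:
        t* = integral(theta * w * post) / integral(w * post),  w = 1 / (theta (1 - theta)).
    Only s = sum(xs) and n = len(xs) are used, i.e. the sufficient statistic.
    Assumes 0 < s < n so both integrals are finite.
    """
    n, s = len(xs), sum(xs)
    if not 0 < s < n:
        raise ValueError("sketch assumes 0 < sum(xs) < len(xs)")
    num = den = 0.0
    h = 1.0 / grid
    for k in range(grid):
        theta = (k + 0.5) * h  # midpoint of the k-th subinterval
        # unnormalized posterior times weight: theta^(s-1) * (1-theta)^(n-s-1)
        wpost = theta ** (s - 1) * (1.0 - theta) ** (n - s - 1)
        num += theta * wpost
        den += wpost
    return num / den  # the common factor h cancels in the ratio

xs = [1, 0, 0, 1, 0, 1, 0, 0, 0, 0]  # hypothetical data: s = 3, n = 10
print(bayes_estimate(xs), sum(xs) / len(xs))
```

Analytically the ratio is $B(s+1, n-s)/B(s, n-s) = s/n = \bar{x}_n$, so both printed values should agree to within the quadrature error.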

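3. If $\mathbf{S} = (S_1, S_2, \dots, S_k)$ is sufficient for $\boldsymbol{\theta} \in \Theta \subset \mathbb{R}^p$ and $\hat{\boldsymbol{\theta}}$ is the unique MLE of $\boldsymbol{\theta}$, then $\hat{\boldsymbol{\theta}}$ is a function of $\mathbf{S}$. (The likelihood factors as $g(\mathbf{S}(\mathbf{x}), \boldsymbol{\theta})\, h(\mathbf{x})$, and $h(\mathbf{x})$ does not involve $\boldsymbol{\theta}$, so maximizing over $\boldsymbol{\theta}$ depends on $\mathbf{x}$ only through $\mathbf{S}$.)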
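For a concrete instance, take iid Bernoulli($\theta$) data, where $S = \sum X_i$ is sufficient. Two different samples with the same value of $S$ have identical log-likelihood functions, so their maximizers must agree. The sketch below locates the maximizer by a grid search over $\theta$; the grid and the two samples are illustrative assumptions.

```python
import math

def bernoulli_loglik(xs, theta):
    """Bernoulli log-likelihood; depends on xs only through s = sum(xs) and n = len(xs)."""
    n, s = len(xs), sum(xs)
    return s * math.log(theta) + (n - s) * math.log(1.0 - theta)

def grid_mle(xs, steps=1000):
    """Maximize the log-likelihood over theta = k/steps, k = 1, ..., steps - 1."""
    grid = [k / steps for k in range(1, steps)]
    return max(grid, key=lambda t: bernoulli_loglik(xs, t))

x = [1, 0, 1, 0, 0]  # hypothetical sample, s = 2
y = [0, 0, 1, 1, 0]  # a different sample with the same s = 2
print(grid_mle(x), grid_mle(y))  # 0.4 0.4, i.e. s/n for both
```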

Completeness Remarks

1. If T is complete, then T is boundedly complete; the converse is false.

2. If T is sufficient and boundedly complete, then T is minimal sufficient (provided a minimal sufficient statistic exists; this is Bahadur's theorem).

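3. Suppose $T$ is complete and $h_1(T)$, $h_2(T)$ are two estimators of $\gamma(\boldsymbol{\theta})$. Then

$$\begin{aligned}
& E_{\boldsymbol{\theta}}\, h_1(T) = \gamma(\boldsymbol{\theta}) = E_{\boldsymbol{\theta}}\, h_2(T), \quad \forall \boldsymbol{\theta} \in \Theta \\
\Rightarrow\ & E_{\boldsymbol{\theta}}\, u(T) = 0, \quad \forall \boldsymbol{\theta} \in \Theta, \quad \text{where } u(T) = h_1(T) - h_2(T) \\
\Rightarrow\ & P_{\boldsymbol{\theta}}\big(u(T) = 0\big) = 1, \quad \forall \boldsymbol{\theta} \in \Theta \quad \text{(by completeness of } T\text{)} \\
\Rightarrow\ & P_{\boldsymbol{\theta}}\big(h_1(T) = h_2(T)\big) = 1, \quad \forall \boldsymbol{\theta} \in \Theta.
\end{aligned}$$

Hence there can be at most one (i.e., unique, up to events of probability zero) unbiased estimator (UE) of a parametric function $\gamma(\boldsymbol{\theta})$ that is a function of a complete statistic.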
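To make the completeness step concrete, consider $T \sim \text{Binomial}(n, \theta)$, a standard example of a complete statistic. Since $E_\theta u(T) = \sum_{t=0}^{n} u(t)\binom{n}{t}\theta^t(1-\theta)^{n-t}$, requiring $E_\theta u(T) = 0$ at $n+1$ distinct values of $\theta$ is a linear system $P\mathbf{u} = \mathbf{0}$, where row $j$ of $P$ holds the binomial probabilities at $\theta_j$. The sketch below checks in exact rational arithmetic that $P$ has full rank, so the only solution is $u \equiv 0$. The choice $n = 5$ and the particular $\theta_j$ values are illustrative assumptions.

```python
from fractions import Fraction
from math import comb

def binomial_pmf_matrix(n, thetas):
    """Row j holds P_theta_j(T = t) for t = 0..n, with T ~ Binomial(n, theta_j)."""
    return [[comb(n, t) * th**t * (1 - th)**(n - t) for t in range(n + 1)]
            for th in thetas]

def rank(matrix):
    """Rank by Gaussian elimination in exact rational arithmetic."""
    m = [row[:] for row in matrix]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no nonzero entry in this column below row r
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

n = 5
thetas = [Fraction(j, n + 2) for j in range(1, n + 2)]  # n + 1 distinct values in (0, 1)
P = binomial_pmf_matrix(n, thetas)
# Full rank: E_theta u(T) = 0 at every theta_j forces u(t) = 0 for all t
print(rank(P) == n + 1)
```

This mirrors the usual proof: $E_\theta u(T)$ is $(1-\theta)^n$ times a polynomial of degree at most $n$ in $\theta/(1-\theta)$, which cannot have $n+1$ distinct roots unless all of its coefficients $u(t)\binom{n}{t}$ vanish.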
