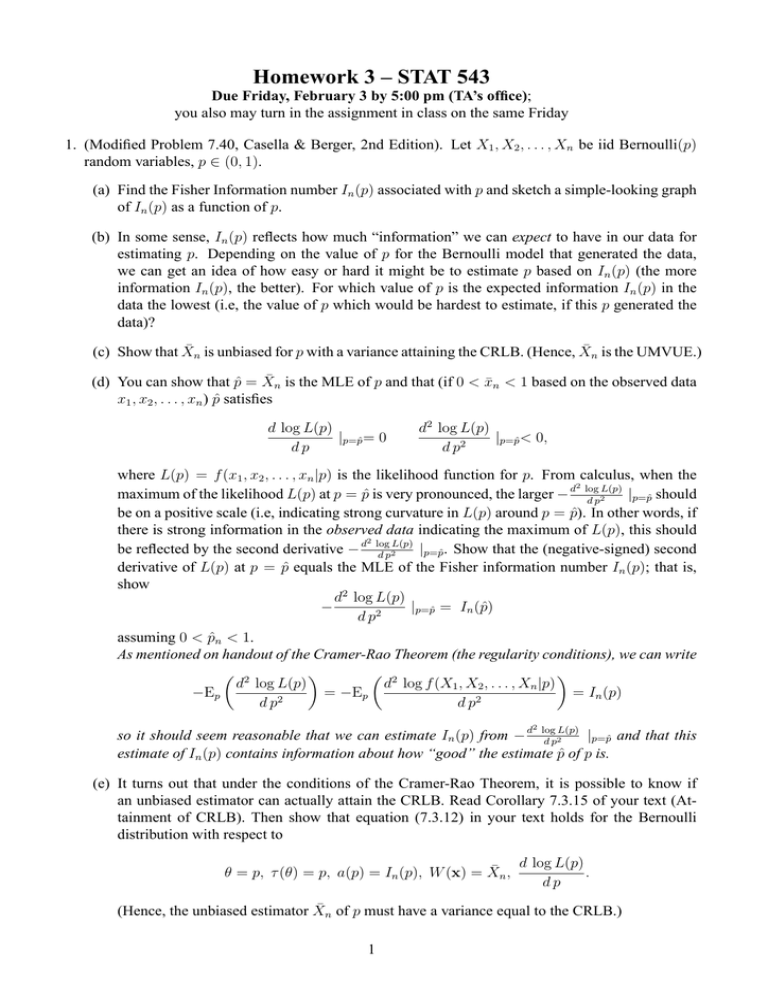

Homework 3 – STAT 543


Due Friday, February 3 by 5:00 pm (TA’s office); you may also turn in the assignment in class on the same Friday.

1. (Modified Problem 7.40, Casella & Berger, 2nd Edition.) Let $X_1, X_2, \ldots, X_n$ be iid Bernoulli($p$) random variables, $p \in (0, 1)$.

(a) Find the Fisher information number $I_n(p)$ associated with $p$ and sketch a simple-looking graph of $I_n(p)$ as a function of $p$.

(b) In some sense, $I_n(p)$ reflects how much “information” we can expect to have in our data for estimating $p$. Depending on the value of $p$ for the Bernoulli model that generated the data, $I_n(p)$ gives an idea of how easy or hard it might be to estimate $p$ (the more information $I_n(p)$, the better). For which value of $p$ is the expected information $I_n(p)$ in the data lowest (i.e., which value of $p$ would be hardest to estimate if it generated the data)?

(c) Show that $\bar{X}_n$ is unbiased for $p$ with a variance attaining the CRLB. (Hence, $\bar{X}_n$ is the UMVUE.)

(d) You can show that $\hat{p} = \bar{X}_n$ is the MLE of $p$ and that, if $0 < \bar{x}_n < 1$ for the observed data $x_1, x_2, \ldots, x_n$, $\hat{p}$ satisfies
$$\frac{d}{dp} \log L(p)\Big|_{p=\hat{p}} = 0, \qquad \frac{d^2}{dp^2} \log L(p)\Big|_{p=\hat{p}} < 0,$$
where $L(p) = f(x_1, x_2, \ldots, x_n \mid p)$ is the likelihood function for $p$. From calculus, the more pronounced the maximum of the likelihood $L(p)$ at $p = \hat{p}$, the larger $-\frac{d^2}{dp^2} \log L(p)\big|_{p=\hat{p}}$ should be on a positive scale (i.e., indicating strong curvature in $L(p)$ around $p = \hat{p}$). In other words, if the observed data carry strong information about where $L(p)$ is maximized, this should be reflected by the second derivative $-\frac{d^2}{dp^2} \log L(p)\big|_{p=\hat{p}}$. Show that the (negative-signed) second derivative of $\log L(p)$ at $p = \hat{p}$ equals the MLE of the Fisher information number $I_n(p)$; that is, show
$$-\frac{d^2}{dp^2} \log L(p)\Big|_{p=\hat{p}} = I_n(\hat{p}),$$
assuming $0 < \hat{p} < 1$. As mentioned on the handout on the Cramer-Rao Theorem (the regularity conditions), we can write
$$-E_p\left(\frac{d^2}{dp^2} \log L(p)\right) = -E_p\left(\frac{d^2}{dp^2} \log f(X_1, X_2, \ldots, X_n \mid p)\right) = I_n(p),$$
so it should seem reasonable that we can estimate $I_n(p)$ by $-\frac{d^2}{dp^2} \log L(p)\big|_{p=\hat{p}}$ and that this estimate of $I_n(p)$ contains information about how “good” the estimate $\hat{p}$ of $p$ is.

(e) It turns out that, under the conditions of the Cramer-Rao Theorem, it is possible to know whether an unbiased estimator can actually attain the CRLB. Read Corollary 7.3.15 of your text (Attainment of CRLB). Then show that equation (7.3.12) in your text holds for the Bernoulli distribution with $\theta = p$, $\tau(\theta) = p$, $a(p) = I_n(p)$, $W(x) = \bar{X}_n$, and log-likelihood derivative $\frac{d}{dp} \log L(p)$. (Hence, the unbiased estimator $\bar{X}_n$ of $p$ must have a variance equal to the CRLB.)

2. The last part of the problem above should make you suspect that unbiased estimators must fulfill specific conditions for their variances to equal the CRLB. In other words, a UMVUE can often be found whose variance does not attain the CRLB (i.e., its variance is strictly greater). The next problem points out the limitation of always trying to use the CRLB to find UMVUEs.

(Modified from Problem 7.44, Casella & Berger, 2nd Edition.) Suppose $X_1, X_2, \ldots, X_n$ are iid normal $N(\theta, 1)$, $\theta \in \mathbb{R}$. It turns out that $T = \bar{X}_n^2 - 1/n$ is the UMVUE of $\gamma(\theta) = \theta^2$. (Don’t worry about showing this fact; we need more tools for this, which we will discuss.)

(a) Show that $T$ is unbiased for $\gamma(\theta)$ and find the variance of $T$ as a function of $\theta$.

(b) Find the CRLB for estimating $\gamma(\theta) = \theta^2$.

(c) Show that the variance of $T$ is strictly greater than the CRLB for estimating $\gamma(\theta) = \theta^2$.

3. Problem 7.58, Casella & Berger (2nd Edition).

4. Let $X_1, X_2, \ldots, X_n$ be iid Bernoulli($\theta$), $\theta \in (0, 1)$. Find the Bayes estimator of $\theta$ with respect to the uniform$(0, 1)$ prior under the loss function
$$L(t, \theta) = \frac{(t - \theta)^2}{\theta(1 - \theta)}.$$
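Problem 1(d) can be sanity-checked numerically, though this is not a proof. The sketch below approximates the observed information $-\frac{d^2}{dp^2}\log L(p)$ at $\hat{p} = \bar{x}_n$ with a central finite difference. It compares that value with $I_n(p)$ evaluated at $\hat{p}$, where $I_n(p)$ is computed directly from the definition $n\,E_p[(\frac{d}{dp}\log f(X \mid p))^2]$ by summing over $X \in \{0, 1\}$. The data vector `xs`, the step size `h`, and the function names are illustrative assumptions and are not part of the assignment.

```python
import math

def log_lik(p, xs):
    """Bernoulli log-likelihood: log L(p) = s*log(p) + (n - s)*log(1 - p), with s = sum(xs)."""
    s, n = sum(xs), len(xs)
    return s * math.log(p) + (n - s) * math.log(1 - p)

def observed_info(xs, h=1e-4):
    """Central-difference estimate of -d^2/dp^2 log L(p) at the MLE p_hat = x-bar.

    Assumes 0 < p_hat < 1 and h < min(p_hat, 1 - p_hat), so every evaluation stays in (0, 1).
    """
    p_hat = sum(xs) / len(xs)
    f0 = log_lik(p_hat, xs)
    fp = log_lik(p_hat + h, xs)
    fm = log_lik(p_hat - h, xs)
    return -(fp - 2 * f0 + fm) / h**2

def fisher_info(p, n):
    """I_n(p) = n * E_p[(d/dp log f(X|p))^2], with the expectation taken over X in {0, 1}."""
    score_1 = 1 / p           # d/dp log f(1|p) = d/dp log(p)
    score_0 = -1 / (1 - p)    # d/dp log f(0|p) = d/dp log(1 - p)
    return n * (p * score_1**2 + (1 - p) * score_0**2)

# Hypothetical data: n = 10 observations with x-bar = 0.3.
xs = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
p_hat = sum(xs) / len(xs)
print(observed_info(xs), fisher_info(p_hat, len(xs)))
```

The two printed numbers should agree up to finite-difference error. Trying other data vectors with $0 < \bar{x}_n < 1$ is a cheap way to check a hand derivation.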
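For Problem 2, a Monte Carlo simulation can make the gap between $\operatorname{Var}_\theta(T)$ and the CRLB visible before you derive it. The sketch samples $\bar{X}_n$ directly from its exact distribution $N(\theta, 1/n)$ and forms $T = \bar{X}_n^2 - 1/n$. It then compares the simulated mean with $\theta^2$ and the simulated variance with the bound $[\gamma'(\theta)]^2 / I_n(\theta)$, assuming the standard fact $I_n(\theta) = n$ for an iid $N(\theta, 1)$ sample. The values $\theta = 1$ and $n = 5$, the seed, and the number of replicates are arbitrary, and the helper names are hypothetical. Simulation can suggest the inequality in part (c), but it cannot prove it.

```python
import random
import statistics

def simulate_T(theta, n, reps, seed=0):
    """Draw `reps` replicates of T = Xbar_n^2 - 1/n for X_1, ..., X_n iid N(theta, 1).

    Xbar_n is sampled directly from its exact distribution N(theta, 1/n).
    """
    rng = random.Random(seed)
    sd = 1 / n ** 0.5  # standard deviation of Xbar_n
    return [rng.gauss(theta, sd) ** 2 - 1 / n for _ in range(reps)]

def crlb(theta, n):
    """Cramer-Rao lower bound [gamma'(theta)]^2 / I_n(theta) for gamma(theta) = theta^2,
    assuming I_n(theta) = n for an iid N(theta, 1) sample."""
    return (2 * theta) ** 2 / n

theta, n = 1.0, 5
ts = simulate_T(theta, n, reps=100_000)
print(statistics.fmean(ts), theta ** 2)         # Monte Carlo mean of T vs gamma(theta)
print(statistics.variance(ts), crlb(theta, n))  # Monte Carlo variance of T vs CRLB
```

Try $\theta = 0$ as well. There the bound is zero, yet $T$ still varies from sample to sample.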
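For Problem 4, recall that under a weighted squared-error loss $w(\theta)(t - \theta)^2$, the posterior expected loss is minimized at $t^* = E[\theta\, w(\theta) \mid \mathbf{x}] \,/\, E[w(\theta) \mid \mathbf{x}]$. This follows from setting the derivative in $t$ to zero. Here $w(\theta) = 1/(\theta(1 - \theta))$, and with a uniform prior the posterior is proportional to $\theta^{s}(1 - \theta)^{n - s}$, where $s = \sum x_i$. The sketch below approximates both posterior integrals with a midpoint rule so that a closed-form answer can be checked numerically. The function name, grid size, and example data are illustrative assumptions, and the code assumes $0 < s < n$ so that both integrals are finite.

```python
def bayes_weighted(xs, grid=20_000):
    """Bayes estimate under L(t, theta) = (t - theta)^2 / (theta (1 - theta)) with a uniform(0, 1) prior.

    Minimizing posterior expected loss gives
        t* = E[theta * w(theta) | x] / E[w(theta) | x],  w(theta) = 1 / (theta (1 - theta)).
    Both expectations are approximated by the midpoint rule on (0, 1); the posterior's
    normalizing constant and the grid spacing cancel in the ratio. Assumes 0 < sum(xs) < n.
    """
    s, n = sum(xs), len(xs)
    num = den = 0.0
    for k in range(grid):
        th = (k + 0.5) / grid  # midpoint of the k-th subinterval
        # unnormalized posterior (uniform prior times Bernoulli likelihood) times the weight w(th)
        wpost = th ** s * (1 - th) ** (n - s) / (th * (1 - th))
        num += th * wpost
        den += wpost
    return num / den

# Hypothetical data: n = 10 observations with s = 3.
xs = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(bayes_weighted(xs))
```

Comparing the printed value with simple summaries of the data, for several data vectors, is a quick way to test a hand-derived formula.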