Estimable Functions and Their Least Squares Estimators

Copyright © 2012 Dan Nettleton (Iowa State University)

Statistics 611

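Consider the general linear model (GLM)

$$\underset{n\times 1}{y} = \underset{n\times p}{X}\,\underset{p\times 1}{\beta} + \underset{n\times 1}{\varepsilon},$$

where $E(\varepsilon) = 0$.

Suppose we wish to estimate $c'\beta$ for some fixed and known $c \in \mathbb{R}^p$.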

An estimator $t(y)$ is an unbiased estimator of the function $c'\beta$ iff

$$E[t(y)] = c'\beta \quad \forall\, \beta \in \mathbb{R}^p.$$

An estimator $t(y)$ is a linear estimator in $y$ iff

$$t(y) = d + a'y$$

for some known constants $d, a_1, \ldots, a_n$, where $a = (a_1, \ldots, a_n)'$.

A function $c'\beta$ is linearly estimable iff there exists a linear estimator that is an unbiased estimator of $c'\beta$.

Henceforth, we will use estimable as a synonym for linearly estimable.

A function $c'\beta$ is said to be nonestimable if there does not exist a linear estimator that is an unbiased estimator of $c'\beta$.

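Result 3.1:

Under the GLM, $c'\beta$ is estimable iff the following equivalent conditions hold:

(i) $\exists\, a$ such that $E(a'y) = c'\beta \;\; \forall\, \beta \in \mathbb{R}^p$

(ii) $\exists\, a$ such that $c' = a'X$ (equivalently, $X'a = c$)

(iii) $c \in \mathcal{C}(X')$.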

Show conditions (i), (ii), and (iii) are equivalent.
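
One possible argument is sketched below. It uses only $E(y) = X\beta$ from the GLM; the symbols $e_1, \ldots, e_p$ (the standard basis vectors of $\mathbb{R}^p$) are notation introduced here for the sketch.

$$
\begin{aligned}
\text{(i)} &\iff \exists\, a:\ a'X\beta = c'\beta \;\; \forall\, \beta \in \mathbb{R}^p && \text{since } E(a'y) = a'E(y) = a'X\beta \\
&\iff \exists\, a:\ a'X = c' && \text{take } \beta = e_1, \ldots, e_p \text{ for } \Rightarrow \\
&\iff \exists\, a:\ X'a = c && \text{transpose both sides; this is (ii)} \\
&\iff c \in \mathcal{C}(X') && \text{definition of column space; this is (iii)}
\end{aligned}
$$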

Show that any of the equivalent conditions is equivalent to $c'\beta$ being estimable.
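
A sketch of one way to argue this, working with condition (i):

$$
\begin{aligned}
\text{(i)} \Rightarrow \text{estimable:}\quad & t(y) = a'y \text{ is linear (take } d = 0\text{) and } E[t(y)] = c'\beta \;\; \forall\, \beta. \\
\text{estimable} \Rightarrow \text{(i):}\quad & \exists\, d, a \text{ with } E(d + a'y) = d + a'X\beta = c'\beta \;\; \forall\, \beta \in \mathbb{R}^p. \\
& \text{Setting } \beta = 0 \text{ gives } d = 0, \text{ so } E(a'y) = c'\beta \;\; \forall\, \beta \in \mathbb{R}^p.
\end{aligned}
$$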

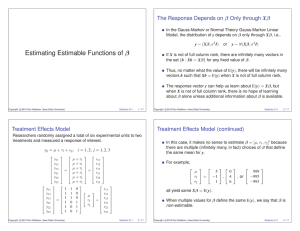

Example:

Suppose that when team $i$ competes against team $j$, the expected margin of victory for team $i$ over team $j$ is $\mu_i - \mu_j$, where $\mu_1, \ldots, \mu_5$ are unknown parameters.

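Suppose we observe the following outcomes.

| Winner | Loser | Margin of victory (points) |
|--------|--------|----------------------------|
| Team 1 | Team 2 | 7 |
| Team 3 | Team 1 | 3 |
| Team 3 | Team 2 | 14 |
| Team 3 | Team 5 | 17 |
| Team 4 | Team 5 | 10 |
| Team 4 | Team 1 | 1 |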

Determine $y$, $X$, $\beta$.
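
One natural encoding can be sketched in Python with NumPy. It assumes each observed margin is modeled as $y_k = \mu_{\text{winner}} - \mu_{\text{loser}} + \varepsilon_k$ with $\beta = (\mu_1, \ldots, \mu_5)'$; the variable names (`games`, `X`, `y`) are illustrative and not part of the original notes.

```python
import numpy as np

# (winner, loser, margin) for the six observed games, in the order listed.
games = [(1, 2, 7), (3, 1, 3), (3, 2, 14), (3, 5, 17), (4, 5, 10), (4, 1, 1)]

n, p = len(games), 5

# Response vector: the observed margins of victory.
y = np.array([margin for _, _, margin in games], dtype=float)

# Design matrix: row k has +1 in the winner's column and -1 in the loser's,
# so that E(y_k) = mu_winner - mu_loser.
X = np.zeros((n, p))
for row, (winner, loser, _) in enumerate(games):
    X[row, winner - 1] = 1.0
    X[row, loser - 1] = -1.0

print(y)
print(X)
```

Every row of `X` sums to zero, which is what later makes $\mu_1$ alone nonestimable.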

Is $\mu_1 - \mu_2$ estimable?

Is $\mu_1 - \mu_3$ estimable?

Is $\mu_1 - \mu_5$ estimable?

Is $\mu_1$ estimable?
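
These four questions can be checked numerically against condition (ii) of Result 3.1. The sketch below assumes the design matrix has one row per game, with $+1$ for the winner and $-1$ for the loser; the helper `is_estimable` is a hypothetical name, and it simply tests whether $X'a = c$ has an exact solution.

```python
import numpy as np

# Assumed design matrix for the six games (columns correspond to mu_1..mu_5).
X = np.array([
    [ 1, -1,  0,  0,  0],   # team 1 beat team 2
    [-1,  0,  1,  0,  0],   # team 3 beat team 1
    [ 0, -1,  1,  0,  0],   # team 3 beat team 2
    [ 0,  0,  1,  0, -1],   # team 3 beat team 5
    [ 0,  0,  0,  1, -1],   # team 4 beat team 5
    [-1,  0,  0,  1,  0],   # team 4 beat team 1
], dtype=float)

def is_estimable(X, c, tol=1e-10):
    """Return True if X'a = c has an exact solution, i.e. c is in C(X')."""
    a, *_ = np.linalg.lstsq(X.T, c, rcond=None)   # best least squares fit
    return bool(np.linalg.norm(X.T @ a - c) < tol)

candidates = {
    "mu1 - mu2": [1, -1, 0, 0, 0],
    "mu1 - mu3": [1, 0, -1, 0, 0],
    "mu1 - mu5": [1, 0, 0, 0, -1],
    "mu1":       [1, 0, 0, 0, 0],
}
results = {name: is_estimable(X, np.array(c, dtype=float))
           for name, c in candidates.items()}
print(results)
```

Under this encoding the three differences come out estimable and $\mu_1$ alone does not. A numerical check is not a proof, but it is a quick way to confirm a hand argument.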

Result 3.1 tells us that $c'\beta$ is estimable iff $\exists\, a$ such that $c'\beta = a'X\beta \;\; \forall\, \beta \in \mathbb{R}^p$.

Recall that $E(y) = X\beta$.

Thus, $c'\beta$ is estimable iff it is a linear combination (LC) of the elements of $E(y)$.

This leads to Method 3.1:

LCs of expected values of observations are estimable.

$c'\beta$ is estimable iff $c'\beta$ is a LC of the elements of $E(y)$; i.e.,

$$c'\beta = \sum_{i=1}^{n} a_i E(y_i) \quad \text{for some } a_1, \ldots, a_n.$$

Use Method 3.1 to show that $\mu_2 - \mu_4$ is estimable in our previous example.
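
One candidate combination is $-E(y_1) - E(y_6) = -(\mu_1 - \mu_2) - (\mu_4 - \mu_1) = \mu_2 - \mu_4$, where $y_1$ is the Team 1 over Team 2 game and $y_6$ is the Team 4 over Team 1 game. The sketch below verifies this choice of $a$ numerically, again assuming the $+1$/$-1$ encoding of the design matrix.

```python
import numpy as np

# Assumed design matrix for the six games (columns correspond to mu_1..mu_5).
X = np.array([
    [ 1, -1,  0,  0,  0],   # E(y1) = mu1 - mu2
    [-1,  0,  1,  0,  0],   # E(y2) = mu3 - mu1
    [ 0, -1,  1,  0,  0],   # E(y3) = mu3 - mu2
    [ 0,  0,  1,  0, -1],   # E(y4) = mu3 - mu5
    [ 0,  0,  0,  1, -1],   # E(y5) = mu4 - mu5
    [-1,  0,  0,  1,  0],   # E(y6) = mu4 - mu1
], dtype=float)

# Weights on E(y1), ..., E(y6): minus the first game, minus the sixth game.
a = np.array([-1, 0, 0, 0, 0, -1], dtype=float)

# Target coefficient vector for mu2 - mu4.
c = np.array([0, 1, 0, -1, 0], dtype=float)

# a'X should reproduce c', which shows c'beta = sum_i a_i E(y_i).
print(X.T @ a)
print(np.array_equal(X.T @ a, c))
```

The choice of $a$ is not unique. For example, $E(y_5) - E(y_4) + E(y_3)$ is $(\mu_4 - \mu_5) - (\mu_3 - \mu_5) + (\mu_3 - \mu_2) = \mu_4 - \mu_2$, so its negative also works.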

Method 3.2:

$c'\beta$ is estimable iff $c \in \mathcal{C}(X')$.

Thus, find a basis for $\mathcal{C}(X')$, say $\{v_1, \ldots, v_r\}$, and determine if

$$c = \sum_{i=1}^{r} d_i v_i \quad \text{for some } d_1, \ldots, d_r.$$
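
A numerical version of Method 3.2 can be sketched with the singular value decomposition, which is one of several ways to obtain a basis for $\mathcal{C}(X')$ (row reduction by hand works equally well). The design matrix is the assumed $+1$/$-1$ encoding of the team example, and `in_row_space` is a hypothetical helper name.

```python
import numpy as np

# Assumed design matrix for the six games (columns correspond to mu_1..mu_5).
X = np.array([
    [ 1, -1,  0,  0,  0],
    [-1,  0,  1,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0,  1,  0, -1],
    [ 0,  0,  0,  1, -1],
    [-1,  0,  0,  1,  0],
], dtype=float)

# The rows of Vt paired with nonzero singular values form an orthonormal
# basis v_1, ..., v_r of the row space of X, which is C(X').
_, s, Vt = np.linalg.svd(X)
r = int(np.sum(s > 1e-10))
V = Vt[:r].T                      # p x r matrix whose columns are v_1..v_r

def in_row_space(c):
    """Check whether c = sum_i d_i v_i for some d_1, ..., d_r."""
    d = V.T @ c                   # coefficients (the basis is orthonormal)
    return bool(np.allclose(V @ d, c))

print(r)
print(in_row_space(np.array([0, 1, 0, -1, 0], dtype=float)))   # mu2 - mu4
print(in_row_space(np.array([1, 0, 0, 0, 0], dtype=float)))    # mu1
```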

Method 3.3:

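By Result A.5, we know that $\mathcal{C}(X')$ and $\mathcal{N}(X)$ are orthogonal complements in $\mathbb{R}^p$.

Thus,

$$c \in \mathcal{C}(X') \quad \text{iff} \quad c'd = 0 \;\; \forall\, d \in \mathcal{N}(X),$$

which is equivalent to

$$Xd = 0 \;\Rightarrow\; c'd = 0.$$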

Reconsider our previous example.

Use Method 3.3 to show that $\mu_1$ is nonestimable.
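
To show nonestimability with Method 3.3, it is enough to exhibit a single $d$ with $Xd = 0$ but $c'd \neq 0$. Assuming the $+1$/$-1$ encoding of the design matrix, the vector of ones is such a witness, as the following sketch checks.

```python
import numpy as np

# Assumed design matrix for the six games (columns correspond to mu_1..mu_5).
X = np.array([
    [ 1, -1,  0,  0,  0],
    [-1,  0,  1,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0,  1,  0, -1],
    [ 0,  0,  0,  1, -1],
    [-1,  0,  0,  1,  0],
], dtype=float)

d = np.ones(5)                               # candidate null vector
c = np.array([1, 0, 0, 0, 0], dtype=float)   # c'beta = mu_1

# Each row of X has one +1 and one -1, so X d = 0 and d is in N(X).
print(X @ d)

# But c'd = 1, which is nonzero, so c is not orthogonal to N(X).
print(c @ d)
```

Intuitively, adding the same constant to every $\mu_i$ leaves all expected margins unchanged, so the data cannot identify the level of any single team.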

Now use Method 3.3 to establish that

$$c'\beta = c_1\mu_1 + c_2\mu_2 + c_3\mu_3 + c_4\mu_4 + c_5\mu_5$$

is estimable iff

$$\sum_{i=1}^{5} c_i = 0.$$
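
The key step is that $\mathcal{N}(X)$ is spanned by the vector of ones $\mathbf{1}$: then $c'd = 0 \;\forall\, d \in \mathcal{N}(X)$ reduces to $c'\mathbf{1} = \sum_i c_i = 0$. The sketch below checks this numerically for the assumed $+1$/$-1$ design matrix by confirming that $\operatorname{rank}(X) = 4$ (so $\mathcal{N}(X)$ has dimension 1) and that $X\mathbf{1} = 0$; the helper `in_row_space` is a hypothetical name.

```python
import numpy as np

# Assumed design matrix for the six games (columns correspond to mu_1..mu_5).
X = np.array([
    [ 1, -1,  0,  0,  0],
    [-1,  0,  1,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0,  1,  0, -1],
    [ 0,  0,  0,  1, -1],
    [-1,  0,  0,  1,  0],
], dtype=float)

rank = int(np.linalg.matrix_rank(X))
null_dim = X.shape[1] - rank          # dimension of N(X)
ones = np.ones(5)

print(rank, null_dim)                 # N(X) is one-dimensional ...
print(X @ ones)                       # ... and contains the vector of ones

def in_row_space(c):
    """c is in C(X') iff appending c as a row does not raise the rank."""
    return int(np.linalg.matrix_rank(np.vstack([X, c]))) == rank

# Coefficients summing to zero are estimable; others are not.
c_zero_sum = np.array([2, -1, -1, 0, 0], dtype=float)
c_nonzero_sum = np.array([1, 1, 0, 0, 0], dtype=float)
print(in_row_space(c_zero_sum), in_row_space(c_nonzero_sum))
```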

The least squares estimator of an estimable function $c'\beta$ is $c'\hat{\beta}$, where $\hat{\beta}$ is any solution to the normal equations (NE), $X'Xb = X'y$.

Result 3.2:

If $c'\beta$ is estimable, then $c'\hat{\beta}$ is the same for all solutions $\hat{\beta}$ to the NE.
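
The invariance can be illustrated on the team example, assuming the $+1$/$-1$ design matrix and the observed margins as $y$. The sketch builds two different solutions to the NE (the second shifts every component by an arbitrary constant, which is allowed because $X\mathbf{1} = 0$) and compares $c'\hat{\beta}$ for an estimable and a nonestimable $c$.

```python
import numpy as np

# Assumed design matrix and response for the six games.
X = np.array([
    [ 1, -1,  0,  0,  0],
    [-1,  0,  1,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0,  1,  0, -1],
    [ 0,  0,  0,  1, -1],
    [-1,  0,  0,  1,  0],
], dtype=float)
y = np.array([7, 3, 14, 17, 10, 1], dtype=float)

# One solution to the NE: the minimum-norm least squares solution.
beta1, *_ = np.linalg.lstsq(X, y, rcond=None)

# Another solution: shift by a multiple of the ones vector, which is in N(X).
beta2 = beta1 + 100.0 * np.ones(5)

# Both satisfy X'X b = X'y.
print(np.allclose(X.T @ X @ beta1, X.T @ y), np.allclose(X.T @ X @ beta2, X.T @ y))

c_est = np.array([1, -1, 0, 0, 0], dtype=float)   # mu1 - mu2 (estimable)
c_non = np.array([1, 0, 0, 0, 0], dtype=float)    # mu1 (nonestimable)

print(c_est @ beta1, c_est @ beta2)   # same value for both solutions
print(c_non @ beta1, c_non @ beta2)   # values differ between solutions
```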

Result 3.3:

The least squares estimator of an estimable function $c'\beta$ is a linear unbiased estimator of $c'\beta$.
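
A sketch of one possible argument follows. It assumes two standard facts that are not restated in this section: $X\hat{\beta} = P_X y$ for every solution $\hat{\beta}$ to the NE, where $P_X$ denotes the orthogonal projection matrix onto $\mathcal{C}(X)$, and $P_X X = X$.

$$
\begin{aligned}
c'\hat{\beta} &= a'X\hat{\beta} = a'P_X y = (P_X a)'y && \text{for some } a \text{ with } c' = a'X \text{ (Result 3.1), so } c'\hat{\beta} \text{ is linear in } y, \\
E(c'\hat{\beta}) &= a'P_X E(y) = a'P_X X\beta = a'X\beta = c'\beta \;\; \forall\, \beta \in \mathbb{R}^p && \text{so } c'\hat{\beta} \text{ is unbiased.}
\end{aligned}
$$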

Consider again our previous example.

Recall that $y_1$ is a linear unbiased estimator of $\mu_1 - \mu_2$.

Is this the least squares estimator?
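
A direct computation suggests the answer is no. The sketch below assumes the $+1$/$-1$ design matrix and uses the Moore-Penrose pseudoinverse of $X'X$ to pick one solution to the NE; by Result 3.2 any other solution would give the same value of $c'\hat{\beta}$.

```python
import numpy as np

# Assumed design matrix and response for the six games.
X = np.array([
    [ 1, -1,  0,  0,  0],
    [-1,  0,  1,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0,  1,  0, -1],
    [ 0,  0,  0,  1, -1],
    [-1,  0,  0,  1,  0],
], dtype=float)
y = np.array([7, 3, 14, 17, 10, 1], dtype=float)

# One solution to X'X b = X'y, obtained from a generalized inverse of X'X.
beta_hat = np.linalg.pinv(X.T @ X) @ X.T @ y

c = np.array([1, -1, 0, 0, 0], dtype=float)   # mu1 - mu2
lse = c @ beta_hat

print(lse)     # least squares estimate of mu1 - mu2, approximately 8
print(y[0])    # the single-game estimate y1 = 7
```

Solving the NE by hand with the constraint $\mu_5 = 0$ gives $\hat{\beta} = (11, 3, 16, 11, 0)'$, so $\hat{\mu}_1 - \hat{\mu}_2 = 8$, whereas $y_1 = 7$. The least squares estimator pools information from all six games rather than using only the head-to-head result.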

Suppose $y = X\beta + \varepsilon$, where $E(\varepsilon) = 0$ and $\operatorname{rank}(\underset{n\times p}{X}) = p$.

Show that $c'\beta$ is estimable $\forall\, c \in \mathbb{R}^p$.
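
One way to see this, sketched via condition (ii) of Result 3.1: when $\operatorname{rank}(X) = p$, the $p \times p$ matrix $X'X$ also has rank $p$ and is therefore nonsingular, so an explicit $a$ can be written down for any $c$.

$$
\begin{aligned}
a &= X(X'X)^{-1}c \\
\Rightarrow\; X'a &= X'X(X'X)^{-1}c = c \\
\Rightarrow\; c &\in \mathcal{C}(X') \quad \forall\, c \in \mathbb{R}^p.
\end{aligned}
$$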
