
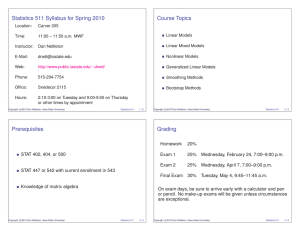

advertisement

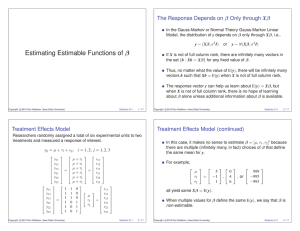

Introduction to the General Linear Model

© Copyright 2012 Dan Nettleton (Iowa State University), Statistics 611

Consider the General Linear Model

$$y = X\beta + \varepsilon,$$

where the four components are defined as follows.

$y$ is an $n \times 1$ random vector of responses that can be observed. The values in $y$ are values of the response variable.

$X$ is an $n \times p$ matrix of known constants. Each column of $X$ contains the values of an explanatory variable, which is also known as a predictor variable or a regressor variable in the context of multiple regression. The matrix $X$ is sometimes referred to as the design matrix.

$\beta$ is an unknown parameter vector in $\mathbb{R}^p$. We are often interested in estimating a linear combination (LC) of the elements in $\beta$, written $c'\beta$, or multiple LCs, written $C\beta$, for some known $c$ or $C$.

$\varepsilon$ is an $n \times 1$ random vector that cannot be observed. The values in $\varepsilon$ are called errors. Initially, we assume only $E(\varepsilon) = 0$.

Note that

$$E(\varepsilon) = 0 \;\Rightarrow\; E(y) = E(X\beta + \varepsilon) = X\beta + E(\varepsilon) = X\beta \in \mathcal{C}(X).$$

Thus, this general linear model simply says that $y$ is a random vector with mean $E(y)$ in the column space of $X$.

Example: Suppose 5 hogs are fed diet 1 for three weeks. Let $y_i$ be the weight gain of the $i$th hog for $i = 1, \ldots, 5$.

Suppose that we assume $E(y_i) = \mu \;\; \forall\; i = 1, \ldots, 5$. Then

$$
\begin{bmatrix} y_1 \\ y_2 \\ y_3 \\ y_4 \\ y_5 \end{bmatrix}
=
\begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} \mu
+
\begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \varepsilon_3 \\ \varepsilon_4 \\ \varepsilon_5 \end{bmatrix},
$$

where $E(\varepsilon_i) = 0 \;\; \forall\; i = 1, \ldots, 5$.
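As a small numerical illustration of the one-mean hog model, the following Python sketch assembles y = Xβ + ε with X a single column of ones. It is not part of the original slides: the value of µ and the error values are hypothetical, picked only to make the arithmetic easy to follow, and `mat_vec` is a helper name introduced here.

```python
# Sketch of the one-mean model y = X*beta + eps for 5 hogs.
# mu and the error values below are hypothetical, for illustration only.

def mat_vec(X, beta):
    """Multiply an n x p matrix (list of rows) by a length-p vector."""
    return [sum(x_ij * b_j for x_ij, b_j in zip(row, beta)) for row in X]

n = 5
X = [[1.0] for _ in range(n)]        # n x 1 design matrix: a column of ones
beta = [20.0]                        # hypothetical mu (mean weight gain)
eps = [1.5, -0.5, 0.0, -2.0, 1.0]    # one hypothetical realization of the errors

mean_y = mat_vec(X, beta)            # E(y) = X*beta, which lies in C(X)
y = [m + e for m, e in zip(mean_y, eps)]

print(mean_y)  # every entry equals mu
print(y)       # observed responses: mean plus error
```

Every entry of `mean_y` is the same number because the column space of a single column of ones consists of constant vectors, which is what the assumption E(y_i) = µ for all i says.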
Example: Suppose 10 hogs are randomly divided into two groups of 5 hogs each. Group 1 is fed diet 1 for three weeks; group 2 is fed diet 2 for three weeks.

Let $y_{ij}$ denote the weight gain of the $j$th hog in the $i$th diet group ($i = 1, 2$; $j = 1, \ldots, 5$). If we assume that $E(y_{ij}) = \mu_i$ for $i = 1, 2$; $j = 1, \ldots, 5$, then

$$
\begin{bmatrix} y_{11} \\ y_{12} \\ y_{13} \\ y_{14} \\ y_{15} \\ y_{21} \\ y_{22} \\ y_{23} \\ y_{24} \\ y_{25} \end{bmatrix}
=
\begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 1 & 0 \\ 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \\ 0 & 1 \\ 0 & 1 \\ 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}
+
\begin{bmatrix} \varepsilon_{11} \\ \varepsilon_{12} \\ \varepsilon_{13} \\ \varepsilon_{14} \\ \varepsilon_{15} \\ \varepsilon_{21} \\ \varepsilon_{22} \\ \varepsilon_{23} \\ \varepsilon_{24} \\ \varepsilon_{25} \end{bmatrix},
$$

where $E(\varepsilon_{ij}) = 0 \;\; \forall\; i = 1, 2;\; j = 1, \ldots, 5$.

Alternatively, we could assume $E(y_{ij}) = \mu + \tau_i \;\; \forall\; i = 1, 2;\; j = 1, \ldots, 5$. Then the design matrix and parameter vector become

$$
\begin{bmatrix} \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_1 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \\ \mu + \tau_2 \end{bmatrix}
=
\begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix}.
$$

These models are equivalent because both design matrices have the same column space:

$$
\left\{ [a, a, a, a, a, b, b, b, b, b]' : a, b \in \mathbb{R} \right\}.
$$

Example: 10 steer carcasses were assigned to be measured for pH at one of five times after slaughter. Data are as follows (Schwenke & Milliken (1991), Biometrics, 47, 563-573).

| Steer | Hours after Slaughter | pH   |
|-------|-----------------------|------|
| 1     | 1                     | 7.02 |
| 2     | 1                     | 6.93 |
| 3     | 2                     | 6.42 |
| 4     | 2                     | 6.51 |
| 5     | 3                     | 6.07 |
| 6     | 3                     | 5.99 |
| 7     | 4                     | 5.59 |
| 8     | 4                     | 5.80 |
| 9     | 5                     | 5.51 |
| 10    | 5                     | 5.36 |

$\forall\; i = 1, \ldots, 10$, let

$x_i$ = measurement time (hours after slaughter) for steer $i$,

$y_i$ = pH for steer $i$.

Suppose $y_i = \beta_0 + \beta_1 \log(x_i) + \varepsilon_i$, where $E(\varepsilon_i) = 0 \;\; \forall\; i = 1, \ldots, 10$. Determine $y$, $X$, and $\beta$.

$$
\begin{bmatrix} y_1 \\ y_2 \\ y_3 \\ y_4 \\ y_5 \\ y_6 \\ y_7 \\ y_8 \\ y_9 \\ y_{10} \end{bmatrix}
=
\begin{bmatrix} 1 & \log(x_1) \\ 1 & \log(x_2) \\ 1 & \log(x_3) \\ 1 & \log(x_4) \\ 1 & \log(x_5) \\ 1 & \log(x_6) \\ 1 & \log(x_7) \\ 1 & \log(x_8) \\ 1 & \log(x_9) \\ 1 & \log(x_{10}) \end{bmatrix}
\begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix}
+
\begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \varepsilon_3 \\ \varepsilon_4 \\ \varepsilon_5 \\ \varepsilon_6 \\ \varepsilon_7 \\ \varepsilon_8 \\ \varepsilon_9 \\ \varepsilon_{10} \end{bmatrix}
$$

$$
y = \begin{bmatrix} 7.02 \\ 6.93 \\ 6.42 \\ 6.51 \\ 6.07 \\ 5.99 \\ 5.59 \\ 5.80 \\ 5.51 \\ 5.36 \end{bmatrix},
\quad
X = \begin{bmatrix} 1 & \log(1) \\ 1 & \log(1) \\ 1 & \log(2) \\ 1 & \log(2) \\ 1 & \log(3) \\ 1 & \log(3) \\ 1 & \log(4) \\ 1 & \log(4) \\ 1 & \log(5) \\ 1 & \log(5) \end{bmatrix},
\quad
\beta = \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix}
$$

Chapter 1 of our text contains many more examples. Please read the entire chapter.
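For the steer pH example, y and X can also be built directly from the data table. The Python sketch below is an illustration rather than part of the original slides. It assumes that log denotes the natural logarithm (the slides do not state the base), and the variable names are introduced here for convenience.

```python
import math

# (steer, hours after slaughter, pH), copied from the data table.
data = [
    (1, 1, 7.02), (2, 1, 6.93),
    (3, 2, 6.42), (4, 2, 6.51),
    (5, 3, 6.07), (6, 3, 5.99),
    (7, 4, 5.59), (8, 4, 5.80),
    (9, 5, 5.51), (10, 5, 5.36),
]

# Response vector y: the observed pH values (n x 1).
y = [ph for _, _, ph in data]

# Design matrix X (n x 2): a column of ones and a column of log(x_i).
# Assumption: natural log, since the slides do not specify the base.
X = [[1.0, math.log(hours)] for _, hours, _ in data]

n, p = len(X), len(X[0])
print(f"y is {len(y)} x 1, X is {n} x {p}")
for y_i, row in zip(y, X):
    print(f"{y_i:5.2f}   {row[0]:.0f}  {row[1]:.4f}")
```

The parameter vector β = (β0, β1)' does not appear in the code because it is unknown. Only y and X are determined by the data.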