Exploration of Colleges in the United States

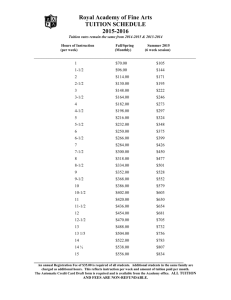

advertisement

Exploration of Colleges in the United States Brenna Curley, Karsten Maurer, Dave Osthus, and Bryan Stanfill 1 Introduction There is a vast amount of colleges and universities in the United States and distinguishing between all of them can seem a daunting task. Data on over 1300 schools were collected for the 1995 U.S. News & World Report’s Guide to America’s Best Colleges and can help provide insight into various characteristics of each school. From these data we use both R and SAS to explore differences and trends between these colleges. Before starting on any analyses of these data, we checked for assumption of normality on certain variables of interest. This data set also includes variables with a large amount of missing values. For certain variables we imputed data to increase the number of observations we were able to work with for later analyses. If there were too many missing values in one variable, however, it was not used in our analysis. Hotelling’s T 2 as well as discriminate analysis were used to explore differences between public and private schools and differences between regions. Trends associated with instate tuition and graduation rate were also explored using multiple linear regression. Since the dataset had over 30 variables, reducing the dimensionality using principal components was also desirable. The accuracy of estimates using principal components was compared to those estimates taken from the original data. 2 Data Description Our data set consists of 32 qualitative and quantitative variables for 1302 universities and colleges, representing all 50 states, as well as Washington, DC. All 32 variables are listed below: 1. Public/private indicator 15. Pct. new students from top 10% of H.S. class 2. Average Math SAT score 16. Pct. new students from top 25% of H.S. class 3. Average Verbal SAT score 17. Number of full time undergraduates 4. Average Combined SAT score 18. Number of part time undergraduates 5. Average ACT score 19. In-state tuition 6. First quartile - Math SAT 20. Out-of-state tuition 7. Third quartile - Math SAT 21. Room and board costs 8. First quartile - Verbal SAT 22. Room costs 9. Third quartile - Verbal SAT 23. Board costs 10. First quartile - ACT 24. Additional fees 11. Third quartile - ACT 25. Estimated book costs 12. Number of applications received 26. Estimated personal spending 13. Number of applicants accepted 27. Pct. of faculty with Ph.D.’s 14. Number of new students enrolled 28. Pct. of faculty with terminal degree 1 29. Student/faculty ratio 31. Instructional expenditure per student 30. Pct.alumni who donate 32. Graduation rate Though our data set began with 32 variables, we had to deal with missing data of various severity levels. All ten of the ACT and SAT related variables had missing data issues deemed severe. For each one of these variables, over 40% of the colleges and universities were missing data. Thus, the decision was made to remove these variables from our data set. We also removed “Room costs” and “Board costs,” due to a large proportion of the colleges and universities missing data. Data imputation was performed on the remaining 20 variables using the MI procedure in SAS. The Markov Chain Monte Carlo method was employeed to imput the missing data. One variable was created for use in our analysis, called “Region”. This variable classified all schools into four geographical regions, based on state. The states and regions are listed below: • The Midwest: IA, IL, IN, KS, MI, MN, MO, ND, NE, OH, SD, WI • The Northeast: CT, MA, ME, NE, NH, NJ, NY, PA, RI, VT • The South: AL, AR, DC, DE, FL, GA, KY, LA, MD, MS, NC, OK, SC, TN, TX, VA, WV • The West: AK, AZ, CA, CO, HI, ID, MT, NM, NV, OR, UT, WA, WY Thus, we were left with a data set of 21 variables. Two universities were removed from our data set, based on suspect data entries for the Student/faculty ratio. St. Leo University of Florida and Northwood University of Michigan had outlier student/faculty ratios of 72.4:1 and 91.8:1 respectively. They were removed for two main reasons. One, these ratios, though taken in 1995, seem unlikely. St. Leo University’s homepage claims they have a 15:1 student to faculty ratio, and Northwood University’s states theirs is approximately 30:1. Though 15 years have elapsed, it’s doubtful their student to faculty ratio’s have changed that significantly. The second reason is, these two schools were found to be influential points in our multiple regression analysis, based on high dffits values. Thus, they were removed from the data. Our final data set consisted of 1300 schools and 21 variables. 3 Exploratory Analyses With such a large data set we felt the assumption of normality would be easily taken care of by the Central Limit Theorem. In the interest of completeness, however, we decided to explore the variables of interest more closely namely cost per student, percent of students who graduated in the top 25%, percent faculty with PHDs, graduation rate and instate tuition. Looking at the diagonal elements of figure 1 you can see that each of these elements have reasonably normal marginal distributions with a little left skewness in FacWPHD and right skewness in cost per student. The variable that deviates most obviously from the normal distribution is instate tuition with it’s bi-modal histogram, but here we will defer to the Central Limit Theorem and assume approximate normality. The off diagonal elements above are the bivariate distributions of the variables and they seem to be approximately normal as well. 2 Figure 1: Marginal and Bivariate distributions of variables 4 4.1 Methods Comparing Mean Vectors Within this dataset are included both public and private schools from all around the united states. However there may be significant differences between types of schools or regions from where they are located. We will first explore the differences between public and private colleges using Hotelling’s T 2 statistic to compare means between the two populations. The variables we are interested in are: instate tuition, percent of new students from top 25% of their highschool class, percent of faculty with Ph.D.’s, instructional expenditure per student, and graduation rate. Will will also compare these same variables across regions of the country–Midwest, West, Northeast, and South. 4.2 Multivariate Multiple Regression Applying multiple linear regression (MLR) techniques will be the next step in our analysis of the College data set. The goal is to be able to use a set of variables from the data to predict the values of more than one response variable using regression. In our case we are interested in predicting the instate tuition and the graduation rate of a college using the student-faculty ratio, the percentage of students from the top 25 percent of their high school class, and the number of full time undergraduates for each college as explanatory variables. The goal is to create a model that allows us to predict unknown tuition costs and unknown graduation rates for a school outside of the data set. To simulate this situation we will subset out a small group of schools and use to main body of remaining colleges to build a model for prediction. We will be using Iowa State University, University of Texas-Austin, University of Minnesota at Morris, Luther College and Grinnell College (each of the group members’ collegiate schools) as the removed subset of colleges. First we will need to check the model fit to see if the assumptions hold. The major assumptions 3 for MLR are that the schools are independent, have constant variance and that errors are normally distributed. The independence of responses between schools is an assumption that we are going to make based on the belief that knowing an attribute for a given school will not tell you anything about that attribute for another. Residual plots will allow us to check for non-constant variance as well as dependence of residuals on the predicted values of the response. We will check for any distinct patterns that would indicate an issue with the variance. Lastly, we will check histograms and normal qq-plots of the residual values to assess their normality. Once the assumptions have been checked then we can move on to using the model we construct for prediction. Using multivariate techniques will result in the same estimated regression coefficients as would be obtained by separate simple regressions, and therefore the same estimates for response variables. However, it will allow us to utilize the correlation between variables to properly adjust for simultaneous interpretation of the regression results. This applies to constructing confidence intervals for regression coefficients and expected mean responses, as well as adjusting prediction intervals for an individual response vector. Since we are interested in making predictions on the tuition and graduation rates for specific schools we will focus on constructing simultaneous prediction intervals. We will be using Scheffe’s adjusted F-statistic to construct our prediction intervals that will allow us to interpret the intervals for tuition and for graduation rate simultaneously. An additional adjustment must be made since we are interested in creating these pairs of prediction intervals for all five removed schools at the same time. For this we will take the conservative Bonferroni approach and divide our α by 10; therefore, we will use an α = .005 to maintain 95% confidence on all our intervals. 4.3 Principal Components Since this dataset includes over 30 variables, reducing the dimensionality before any analyses are done could be helpful. After removing variables with too many missing values–such as all the test scores–as well as the variables we wish to regress on, we will still be left with 12 variables which we wish to reduce. In order to minimize any loss of variability we look at principal components before proceeding with further analysis. Due to the large differences in the variances we use the correlation matrix to derive our principal components. Viewing the relative size of the eigenvalues for each variable as well as looking at scree plots we should be able to limit all our variables to just a few principal components. Once we have reduced the dimensionality of the data, we are able to continue using these principal components to do multiple linear regression. Since normality is an important assumption for regression, we first check univariate and bivariate scatter plots of our principal components. Once normality is established, we will regress on both out of state tuition and graduation rate. The assumptions of regression will also be checked by plotting the residuals against the predicted values for both out of state tuition and graduation rate. 4.4 Discriminate analysis After some initial graphical exploration of our data set we realized there was a distinct difference in public and private schools in terms of tuition, percent of faculty with Ph.D.’s, cost per student, the percent of students who graduated in the top 25% of their graduating class, and the universities graduation rate. The best way to formaly define this distinction is with discriminate analysis. A few of the most interesting plots are in figure ??. 4 (a) instate tuition (b) Graduation Rate Figure 2: Tuition and Graduation Rate by Public Discriminate analysis is a method designed to allow you to classify a new observation into the appropriate group when only given a few variables. In this case we want to be able to determine if an unknown school is public or private given only the variables listed above. Before we decide on a rule we need to decide the form of the rule, should it be linear or quadratic? To decide this we need to test for the equality of covariance matrices of the explanatory variables. In the event of unequal covariance matrices we should use a quadratic discriminate rule and if the covariance matrices are close to equal we can use a linear discriminate rule. We also need to decide on the prior probabilities of public and private schools, which will be determined by the proportion of each type of school in the dataset as well as on line resources. Once we have developed a rule, we would like to determine if that rule is useful. To do this we need to approximate our apparent error rate via re substitution and cross-validation. Once we’ve decided on a rule, we would like to use that rule to classify our own universities into public or private as a second measure of accuracy (University of Texas-Austin, Luther College, University of Minnesota-Morris, Grinnell College and Iowa State University). Of course, we would leave our own schools out of the determination of the discriminate rule in order to ensure accuracy. Classifying into public and private universities is interesting, but we would also like to classify colleges by geographic regions such as West, South, Midwest and Northeast using the same variables as above. We have included a few box plots to demonstrate their discriminating power by region in figure ??. Obviously this classification deals with multiple populations which are less well defined so we don’t expect as accurate a result as with the public and private classification, but it still should be useful. 5 5.1 Results Comparing Mean Vectors One of the possible distinguishing characteristics between schools is whether or not they are a public or private institution. To see whether our variables of interest actually differ significantly between 5 (a) Instate Tuition (b) Graduation Rate Figure 3: Tuition and Graduation Rate by Region these two types of schools we used Hotelling’s T 2 . The variables of interest included: instate tuition, percent of new students from top 25% of their highschool class, percent of faculty with Ph.D.’s, instructional expenditure per student, and graduation rate. Simply looking at the means of each variable we can already see that there may be a difference between public and private. Variable Instate Tuition Top 25 HS Faculty w/ Ph.D. Cost per Student Grad Rate Public Mean 2325.46 46.37 72.26 7237.43 49.90 Private Mean 10968.86 53.082 66.66 9903.60 65.30 P-Value for H0 : µ1 = µ2 ≤.0001 ≤.0001 ≤.0001 ≤.0001 ≤.0001 For example, the mean graduation rate for a public is around 49.9% while for private schools the rate is much higher–at around 65.3%. Looking at the actual test statistics and pvalues for each variable, we see the difference in means is significant for all five variables. Another possible factor that may lead to differences in schools is where the college is located in the United States. To explore this idea we started by splitting up the schools into four different regions of the country–Midwest, West, Northeast, and South. Then we compared these four means to each other for each of the five variables of interest. Looking at our F statistic for each of the five variables we see that the p-value is significantly small. Therefore at least one of the regions differs for each of the variables. Variable Instate Tuition Top 25 HS Faculty w/ Ph.D. Cost per Student Grad Rate MW 7959.79 48.22 64.67 8327.30 60.52 NE 10612.19 52.44 72.29 10480.79 68.66 South 5914.18 49.19 67.30 7923.39 54.15 6 West 7204.91 56.04 73.56 9844.03 55.07 P-Value for H0 : µ1 = µ2 = µ3 = µ4 ≤.0001 .0001 ≤.0001 ≤.0001 ≤.0001 Looking closer at instate tuition, we see that all the regions differ significantly except the Midwest and the West where the average instate tuition is $7959.79 and $7204.91, respectively. For the percent of students in the top 25% of their highschool class, the Midwest and Northeast are significantly different as well as the Midwest and the West. The Northeast and the West have only slightly significantly different mean percents that came from the top 25%. Differences in the percent of faculty that have a Ph.D. are sigificantly different across all regions except between the Northeast and the West where 72.29% and 73.56% have a Ph.D., respectively. The mean expenditure per student does not differ significantly between the Midwest and the South nor between the Northeast and the West. All regions have significantly different mean graduation rates except between the South, with a mean graduation rate of 54.15%, and the West, with only a slightly higher rate of 55.07%. Instate Tuition P-Value for H0 : µi = µj Region MW NE South West MW ≤ .0001 ≤ .0001 0.1165 NE ≤.0001 ≤ .0001 ≤ .0001 South ≤ .0001 ≤.0001 West 0.1165 ≤ .0001 0.0055 0.0055 Top 25 HS P-Value for H0 : µi = µj Region MW NE South West MW 0.0075 0.5125 ≤ .0001 NE 0.0075 0.0292 0.0653 South 0.5125 0.0292 West ≤ .0001 0.0003 0.0003 0.0003 Faculty w/ Ph.D. P-Value for H0 : µi = µj Region MW NE South West MW ≤ .0001 0.0361 ≤ .0001 NE ≤ .0001 ≤ .0001 0.4451 South 0.0361 ≤ .0001 West ≤ .0001 0.4451 ≤ .0001 ≤ .0001 Cost per Student P-Value for H0 : µi = µj Region MW NE South West MW ≤ .0001 0.2768 0.0019 NE ≤ .0001 ≤ .0001 0.1957 7 South 0.2768 ≤ .0001 ≤ .0001 West 0.0019 0.1957 ≤ .0001 Grad Rate P-Value for H0 : µi = µj Region MW NE South West 5.2 MW ≤ .0001 ≤ .0001 0.0012 NE ≤ .0001 ≤ .0001 ≤ .0001 South ≤ .0001 ≤ .0001 West 0.0012 ≤ .0001 0.5669 0.5669 Multiple Linear Regression MLR was run on three variables, student/faculty ratio, top 25% in high school, and fulltime undergrads, to predict graduation rate and instate tuition. Plots were created and all assumptions of MLR were met. The histograms for the residuals of both instate tuition and graduation rates were unimodal and symmetric, showed no signs of violating normality. The residuals plotted against the predicted values of graduation rate were well behaved and showed no pattern indicating dependence, nor non-constant variance. The plot of residuals against the predicted values of instate tuition seemed peculiar since the points were all scattered randomly except that all points seemed to be forced above the line of y = −x, or Resid = −Predicted Tuition. This is ok however because it is possible to get negative predicted values of tuition and in the data set there were no observed values of tuition that fell below zero, so this pattern was expected. Since the plots indicated no violation of our model assumptions, we carried on with our predictions. A few of the plots are shown in figures 4 and 5. (a) Graduation Rate (b) Instate Tuition Figure 4: Residuals vs. Predicted Values All three explanatory variables were found to be significant at the α = .05 level, as seen in the tables below: Simultaneous prediction intervals were calculated for Grinnell College, Luther College, Iowa State University, University of Texas - Austin, and University of Minnesota - Morris. The intervals for instate tuition and graduation rate are in the table below: 8 Variable Intercept StFacRat Top25HS FullUnd DF 1 1 1 1 Parameter Estimate 10410 -412.61571 103.62050 -0.46245 Std Error 527.11138 26.02709 5.50179 0.02535 t Value 19.75 -15.85 18.83 -18.24 Pr > |t| <.0001 <.0001 <.0001 <.0001 Table 1: Beta estimates Instate Tuition Variable Intercept StFacRat Top25HS FullUnd DF 1 1 1 1 Parameter Estimate 50.47994 -0.75232 0.43883 -0.00050861 Std Error 2.15421 0.10637 0.02248 0.00010359 t Value 23.43 -7.07 19.52 -4.91 Pr > |t| <.0001 <.0001 <.0001 <.0001 Table 2: Beta estimates for Graduation Rate College/University Grinnell College Luther College Iowa State University U of Texas - Austin U of Minnesota - Morris Act. Grad Rate 83 77 65 65 51 Pred. Grad Rate 82.59 70.54 52.43 57.69 76.3 L. Bound 26.68 14.71 -3.62 1.14 20.41 U. Bound 138.5 126.37 108.47 114.24 132.19 Table 3: Prediction Intervals for Graduation Rate (MLR) College/University Grinnell College Luther College Iowa State University U of Texas - Austin U of Minnesota - Morris Act. Instate Tuition ($) 15,688 13,240 2,291 840 3,330 Pred. Instate Tuition ($) 15,303.17 11,127.28 -35.33 -2,792.15 12,443.49 Table 4: Prediction Intervals for Instate Tuition (MLR) 9 L. Bound ($) 1,623.37 -2,534.64 -13,748.94 -16,629.63 -1,232.05 U. Bound ($) 28,982.97 24,789.2 13,678.28 11,045.33 26,119.03 (a) Graduation Rate (b) Instate Tuition Figure 5: Histogram of residuals are normally distributed There are a couple things to be noted. One, the estimates for instate tuition are negative for both Iowa State University and the University of Texas - Austin. There was nothing in our model that bounds the instate tuition at $0. Thus, we saw negative instate tuition estimates for these two schools. Two, the β̂ for full time undergrads partially explains the negative instate tuition estimates. β̂F ullU nd is -0.46245. Thus, holding all else constant, our model predicted the instate tuition to decrease by 46 cents for every additional full time undergraduate. Iowa State and U of Texas are by far the largest of the five schools used for prediction. Thus, it seems our model under estimated instate tuition for large schools. 5.3 Principal Components To choose whether we would be using the covariance or the correlation matrix we first examined the variances of all the variables of interest. From the variance-covariance matrix we notice that there are large differences–some as low as 19.9 or some over 28 million. This also makes sense because of the large differences in scale between variables such as book costs or cost per student. Because of these discrepancies in variances, the correlation matrix will be used to calculate the principal components. In order to decide how many principle components to keep we can look at the size of the eigenvalues and the proportion of the variance explained by each–both numerically and graphically using scree plots. Below we see that the first three principal components are all above one and combined explain almost 74% of the variance. The fourth eigenvalue explains less than 8% of the variance. Similarly in the scree plot, figure 6, we see that the slope changes drastically after the first three eigenvalues. Therefore using just the first three eigenvalues should be adequate. 10 PC 1 2 3 4 5 Eigenvalue 4.82 2.95 1.11 0.98 0.79 Proportion 0.40 0.25 0.09 0.17 0.08 Cumulative 0.40 0.65 0.74 0.82 0.89 Figure 6: Scree Plot We have now reduced the dimensionality of our data and can proceed with our analyses of multiple linear regression. Before doing so we need to check that our assumption of normality is met. Looking at the bivariate scatter plots, the first three principal components appear fairly normal. Also, due to our large sample we can assume normality and continue with our regression. Two variables that would be interesting to predict for a new school are instate tuition and graduation rate. Regressing on our three principal components for instate tuition we get the line: tuition = 7860.52 + −25.628yˆ1 + 2325.215yˆ2 + −396.32yˆ3 We get an R2 value for this regression line of just 0.5517 so only 55.17% of the data is explained by our linear regression line suggesting a linear fit may not be the best option in this case. We also graphically checked the assumptions of this regression by looking at the residual plots for instate tuition and each of the principal components. Overall all of the plots showed randomly scatter plots around zero, so the regression assumptions are met and we could use it to predict instate tuition for any new school’s principal components. Next for graduation rate we get a linear regression line of: gradrate = 59.696 + 2.048yˆ1 + 5.712yˆ2 + −0.624yˆ3 We get an R2 value for the line predicting graduation rate of just .3317 so only 33.17% of the data is explained by our line. Again after checking the residual plots for graduation rate and the three principal components, the assumptions of our regression hold. 11 Figure 7: Bivariate Scatterplot of PCs Figure 8: Instate Tuition Residuals 5.4 Discriminate Analysis The first discriminate analysis we ran we let the priors be 50%/50%, which we quickly realized wasn’t reasonable. In our dataset we found 66% of the schools to be public and 34% of the schools to be private. Then we did a little online research to find that in 1995 the proportions were closer to 70%/30%. To reconcile this discrepency we made our priors 68% and 32% respectively. 12 Figure 9: Graduation Rate Residuals College/University Grinnell College Luther College Iowa State University U of Texas - Austin U of Minnesota - Morris Act. Grad Rate 83 77 65 65 51 Pred. Grad Rate 82.00 67.91 54.76 56.00 70.55 L. Bound 26.70 12.71 -0.58 0.34 15.34 U. Bound 137.29 123.12 110.10 111.67 125.77 Table 5: Prediction Intervals for Graduation Rate (PC) With our priors set we tested for equality of equal variance matrices which proved to be quite different: χ215 = 510.1 with a p-value< 0.0001. Therefore we developed a quadratic discriminate rule. This rule had the following re substitution and cross validation error rates: Public Private Total Re substitution 0.03 0.04 0.04 Cross-validation 0.03 0.04 0.04 When we tried to classify each of our schools using this rule we correctly assigned each school into their respective categories either public or private. Again relying on the size of the data set we decided to force SAS to assume equal covariance matrices and create a linear discriminate rule. Before we fit that, however, we ran a stepwise variable selection process to see which of the variables actually is useful in classifying schools into public or private at the 0.05 significance level to enter and stay. The process selected instate tuition, percent of faculty with Ph.D. and cost per student. Then the linear discriminate rule on these variables had the following, slightly different, re substitution and cross-validation error rates: 13 College/University Grinnell College Luther College Iowa State University U of Texas - Austin U of Minnesota - Morris Act. Instate Tuition ($) 5,688 13,240 2,291 840 3,330 Pred. Instate Tuition ($) 15,730.09 10,965.90 1,905.45 -1,194.95 11,932.83 L. Bound ($) 2,688.81 -2,054.66 -11,147.15 -14,324.03 -1,089.88 U. Bound ($) 28,771.37 23,986.46 14,958.04 11,934.12 24,955.53 Table 6: Prediction Intervals for Instate Tuition (PC) Public Private Total Re substitution 0.02 0.06 0.05 Cross-validation 0.02 0.06 0.05 Again we used our rule to classify our schools into public or private and the linear rule correctly classified each one. Next we tried to classify schools into their regions from the same variables as before. Following the same process as with public and private first we tried to determine the correct prior probabilities for a school being in each region. At first we thought we could count the number of states in each region and apply priors accordingly then we realized the number of states in each region doesn’t reflect the number of schools in each region correctly, so we decided to apply prior probabilities according to the number of schools in each region in our dataset. With the priors set, next we needed to determine if the covariance matrices were equal across our explanatory variables. Again they proved to be quite different with a test statistic of χ245 = 211.9 and a p-value< 0.001. This indicates we should use a quadratic rule to classify our data. The error rates of our quadratic classification rule are as follows: Midwest Northeast South West Total Re substitution 0.56 0.55 0.36 0.88 0.53 Cross-validation 0.59 0.57 0.38 0.90 0.55 Obviously these error rate are extremely high and make the classification impossible. As expected, when we tried to classify our schools into their regions we correctly classified only two schools the University of Texas-Austin and Luther College. Despite these high error rates we thought we’d try to force SAS to fit a linear discriminate rule to see if it would do any better. The error rates of the linear rule are: Midwest Northeast South West Total Re substitution 0.74 0.46 0.29 0.98 0.55 14 Cross-validation 0.74 0.47 0.30 0.98 0.55 These error rates are even higher so, as expected, when we tried to classify each of our schools we only classified University of Texas-Austin correctly. 6 6.1 Conclusion Comparing Mean Vectors Using Hotelling’s T 2 we were able to explore differences in means between public and private colleges as well as compare regions of the country. Differences between public and private schools was very significant for all the variables of interest–such as instate tuition, percent of new students from top 25% of their highschool class, percent of faculty with Ph.D.’s, instructional expenditure per student, and graduation rate. Differences between regions of the country, however, were more varied depending on which variable was looked at. In general most differences were fairly significant between pairs of regions, but in every variable there were some exceptions. One such instance was in comparing mean graduation rate between the South and the West where we only got a high p-value of .5667 giving strong evidence of no difference in graduation rates between those two regions. 6.2 Multivariate Multiple Regression Using multiple linear regression we set out to see if we could use a college’s student-faculty ratio, percentage of students from the top 25% of their high school class and the number of fulltime undergraduate students to build a model that could predict instate tuition and graduation rate simultaneously. Five schools were taken out of the data set during the construction of the model and then used as ”new schools” to have response variables predicted for. Prediction intervals for graduation rate and instate tuition for the five schools in question were attained using MLR methods. The resulting intervals were very wide in relation to the variables they were predicting. The prediction intervals for graduation rate on each school had ranges of greater than 100% and the intervals on tuition had widths of near $26,000. These intervals prove unacceptable for serious interpretation because they are far too wide to allow us to have any faith whatsoever in the predictions that they circumscribe. 6.3 Principal Components Due to the large number of variables in this data set, we tried to reduce the dimensionality using principal components. Since there were large differences in the variances, the eigenvectors and eigenvalues were computed using the correlation matrix. After calculating the principal components we looked at the proportion of variance each eigenvalue accounted for in the variation. Examining this both numerically and graphically, using scree plots, we decided an appropriate number of principal components to keep would be three. After successfully reducing the dimensionality of our data, we were able to do multiple linear regression on using our newly acquired principal components. We then obtained linear regression lines predicting both instate tuition and graduation rate. We had already regressed three explanatory variables from the data upon the values for instate tuition and graduation rate to try to create a predictive model. This proved to create predictions with standard errors far too large to be viable for any sort of conclusions. So, we also tried regressing our first three principle component scores on these variables and created another set of prediction 15 intervals, adjusted using MLR methods. This was only a negligible improvement over the previous prediction intervals and were again not useful for any serious conclusions. 6.4 Discriminate Analysis Our initial suspisions that schools could be classified into public or private according a few key variables was confirmed with our 100% classification rate in our discriminate analysis section. The distiction is so clear, in fact, that even if we ignore the wildly unequal covariance matrices we can effectively classify schools with no loss of accuracy. Classifying by region, however, proved impossible to do by any measure of accuracy. Not only did we have a 98% error rate for some regions, we also classified only two of our schools correcly with the quadratic rule. Then when we used the linear rule, ignoring the unequal covariance matrices, we could only classify one school correctly. Therefore it is safe to say that the schools in this data set can be classified into public or private schools effectively, but not by regions. 16 Citations ”Index of /datasets/colleges.” StatLib :: Data, Software and News from the Statistics Community. (accessed April 22, 2010). http://lib.stat.cmu.edu/datasets/colleges/usnews.data. ”U.S. Census Regions and Divisions Map.” Energy Information Administration - EIA - Official Energy Statistics from the U.S. Government. (accessed April 22, 2010). http://www.eia.doe. gov/emeu/reps/maps/us_census.html. 17