Orléans, France SDA 2015 November 17 – 19 Symbolic Data Analysis Workshop

advertisement

Symbolic Data Analysis Workshop

SDA 2015

November 17 – 19

Orléans, France

University of Orléans

Symbolic Data Analysis Workshop

SDA 2015

November 17 – 19

Orléans University, France

SPONSORS

CNRS

MAPMO Mathematics Laboratory

University of Orléans

Denis Poisson Federation

Centre-Val de Loire Region Council

Loiret District Council

STEERING COMMITTEE

Paula BRITO, University of Porto, Portugal

Monique NOIRHOMME, University of Namur, Belgium

ORGANISING COMMITTEE

Guillaume CLEUZIOU, Richard EMILION, Christel VRAIN

Secretary: Marie-France GRESPIER

University of Orléans, France

SCIENTIFIC COMMITTEE

Javier ARROYO, Spain

Lynne BILLARD, USA

Paula BRITO, Portugal

Chun-houh CHEN, Taiwan

Guillaume CLEUZIOU, France

Francisco DE CARVALHO, Brazil

Edwin DIDAY, France

Richard EMILION, France

Manabu ICHINO, Japan

Yves LECHEVALLIER, France

Monique NOIRHOMME, Belgium

Rosanna VERDE, Italy

Gilles VENTURINI, France

Christel VRAIN, France

Huiwen WANG, China

Symbolic Data Analysis Workshop

SDA 2015

November 17 – 19

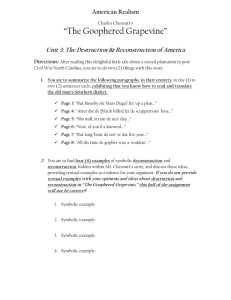

Tuesday, November 17

Orléans University Campus

IIIA Computer Science Building

TUTORIAL

1rst floor, Room E19

09:00 - 09:50 Introduction to Symbolic Data Analysis

Paula BRITO, FEP & LIAAD-INESC TEC, Univ. Porto, Portugal

09:50 - 10:15 Coffee Break

10:15 - 11:05 The Quantile Method for Symbolic Data Analysis

Manabu ICHINO, SSE, Tokyo Denki University, Japan

11:05 - 11:55 The R SDA Package

Oldemar RODRIGUEZ, University of Costa Rica

12:00 - 13:45 Welcome, Registration, Lunch

L'Agora Restaurant, Orléans University Campus

IIIA, Herbrand Amphitheatre

13:55 - 14:00 Workshop Opening

14:00 - 17:25 Workshop Talks

19:30

Workshop Dinner.

'Le Martroi' restaurant, 12 Place du Martroi, Orléans.

Tram stop: 'De Gaulle' or 'République'

Introduction to Symbolic Data Analysis

Paula Brito

FEP & LIAAD-INESC TEC, Univ. Porto, Portugal

Symbolic Data, introduced by E. Diday is concerned with analysing data presenting intrinsic

variability, which is to be explicitly taken into account. In classical Statistics and Multivariate Data

Analysis, the elements under analysis are generally individual entities for which a single value is

recorded for each variable - e.g., individuals, described by their age, salary, education level, marital

status, etc.; cars each described by its weight, length, power, engine displacement, etc.; students for

each of which the marks at different subjects were recorded. But when the elements of interest are

classes or groups of some kind - the citizens living in given towns; teams, consisting of individual

players; car models, rather than specific vehicles; classes and not individual students - then there is

variability inherent to the data. To reduce this variability by taking central tendency measures mean values, medians or modes - obviously leads to a too important loss of information.

Symbolic Data Analysis provides a framework allowing representing data with variability,

using new variable types. Also, methods have been developed which suitably take data variability

into account. Symbolic data may be represented using the usual matrix-form data arrays, where

each entity is represented in a row and each column corresponds to a different variable - but now

the elements of each cell are generally not single real values or categories, as in the classical case,

but rather finite sets of values, intervals or, more generally, distributions.

In this talk we shall introduce and motivate the field of Symbolic Data Analysis, present into

some detail the new variable types that have been introduced to represent variability, illustrating

with some examples. We shall furthermore discuss some issues that arise when analysing data that

does not follow the usual classical model, and present data representation models for some variable

types.

The Quantile Method for Symbolic Data Analysis

Manabu Ichino

School of Science and Engineering, Tokyo Denki University

ichino@mail.dendai.ac.jp

Keywords: Quantiles, Monotonicity, Visualization, PCA, Clustering

Abstract

The quantile method transforms the given (N objects)×(d variables) symbolic data table to a

standard {N×(m+1) sub-objects}×(d variables) numerical data table, where m is a preselected

integer number that controls the granularity to represent symbolic objects. Therefore, a set of (m+1)

d-dimensional numerical vectors, called the quantile vectors, represents each symbolic object.

According to the monotonicity of quantile vectors, we present the following three methods for

symbolic data analysis.

Visualization: We visualize each symbolic object by m+1 parallel monotone line graphs [Ichino and

Brito 2014]. Each line graph is composed of d-1 line segments accumulating the d zero-one

normalized variable values.

PCA: When the given symbolic objects have a monotone structure in the representation space, the

structure confines the corresponding quantile vectors to a similar geometrical shape. We apply

the PCA to the quantile vectors based on the rank order correlation coefficients. We reproduce

each symbolic object as m series of arrow lines that connect from the minimum quantile vector

to the maximum quantile vector in the factor planes [Ichino 2011].

Clustering: We present a hierarchical conceptual clustering based on the quantile vectors. We define

the concept sizes of d-dimensional hyper-rectangles spanned by quantile vectors. The concept

size plays the role of the similarity measure between sub-objects, i.e., quantile vectors, and it

plays also the role of the measure for cluster quality [Ichino and Brito 2015].

References

H-H. Bock and E. Diday (2000). Analysis of Symbolic Data - Exploratory Methods for Extracting

Statistical Information from Complex Data. Heidelberg: Springer.

L. Billard and E. Diday (2007). Symbolic Data Analysis - Conceptual Statistics and Data Mining.

Chichester: Wiley.

E. Diday and M. Noirhomme-Fraiture (2008). Symbolic Data Analysis and the SODAS Software.

Chichester: Wiley.

M. Ichino and P. Brito (2014). The data accumulation graph (DAG) to visualize multi-dimensional

symbolic data. Workshop in Symbolic Data Analysis. Taipei, Taiwan.

M. Ichino (2011). The quantile method for symbolic principal component analysis. Statistical

Analysis and Data Mining, 4, 2, pp. 184-198.

M. Ichino and P. Brito (2015). A hierarchical conceptual clustering based on the

quantile method for mixed feature-type data. (Submitted to the IEEE Trans.

SMC).

Latest developments of the RSDA: An R package for

Symbolic Data Analysis

Oldemar Rodrı́guez

⇤

June 26, 2015

Abstract

This package aims to execute some models on Symbolic Data Analysis. Symbolic Data

Analysis was propose by the professor E. DIDAY in 1987 in his paper “Introduction à l’ approche

symbolique en Analyse des Données”. Premiére Journées Symbolique-Numérique. Université

Paris IX Dauphine. Décembre 1987. A very good reference to symbolic data analysis can be

found in “From the Statistics of Data to the Statistics of Knowledge: Symbolic Data Analysis”

of L. Billard and E. Diday that is the journal American Statistical Association Journal of the

American Statistical Association June 2003, Vol. 98.

The main purpose of Symbolic Data Analysis is to substitute a set of rows (cases) in a

data table for an concept (second order statistical unit). For example, all of the transactions

performed by one person (or any object) for a single “transaction” that summarizes all the

original ones (Symbolic-Object) so that millions of transactions could be summarized in only

one that keeps the customary behavior of the person. This is achieved thanks to the fact that

the new transaction will have in its fields, not only numbers (like current transactions), but

can also have objects such as intervals, histograms, or rules. This representation of an object

as a conjunction of properties fits within a data analytic framework concerning symbolic data

and symbolic objects, which has proven useful in dealing with big databases.

In RSDA version 1.2, methods like centers interval principal components analysis, histogram principal components analysis, multi-valued correspondence analysis, interval multidemensional scaling (INTERSCAL), symbolic hierarchical clustering, CM, CRM, Lasso, Ridge

and Elastic Net Linear regression model to interval variables have been implemented. This

new version also includes new features to manipulate symbolic data through a new data structure that implements Symbolic Data Frames and methods for converting SODAS and XML

SODAS files to RSDA files.

Keywords

Symbolic data analysis, R package, RSDA, interval principal components analysis, Lasso, Ridge,

Elastic Net, Linear regression.

⇤

University of Costa Rica, San José, Costa Rica; E-Mail: oldemar.rodriguez@ucr.ac.cr

1

References

[1] Billard, L., Diday, E., (2003). From the statistics of data to the statistics of knowledge: symbolic

data analysis. J. Amer. Statist. Assoc. 98 (462), 470-487.

[2] Billard, L. & Diday, E. (2006) Symbolic Data Analysis: Conceptual Statistics and Data Mining,

John Wiley & Sons Ltd, United Kingdom.

[3] Bock, H.-H., and Diday, E. (eds.) (2000). Analysis of Symbolic Data: Exploratory Methods for

Extracting Statistical Information From Complex Data, Berlin: Springer-Verlag.

[4] Diday E. (1987): “Introduction à l’approche symbolique en Analyse des Données”. Premières

Journées Symbolique-Numérique. Université Paris IX Dauphine. Paris, France.

[5] Lima-Neto, E.A., De Carvalho, F.A.T., (2008). Centre and range method to fitting a linear

regression model on symbolic interval data. Computational Statistics and Data Analysis 52,

1500-1515.

[6] Lima-Neto, E.A., De Carvalho, F.A.T., (2010). Constrained linear regression models for symbolic interval-valued variables. Computational Statistics and Data Analysis 54, 333-347.

[7] Rodrı́guez, O. (2000). Classification et Modèles Linéaires en Analyse des Données Symboliques.

Ph.D. Thesis, Paris IX-Dauphine University.

[8] Rodrı́guez,

O. with contributions from Olger Calderon and Roberto Zuniga (2014). RSDA - R to Symbolic Data Analysis. R package version 1.2.

[http://CRAN.R-project.org/package=RSDA]

2

Symbolic Data Analysis Workshop

SDA 2015

November 17 – 19

Session Speakers

SDA 2015

November 17 – 19

University of Orléans, France

Tuesday, November 17, afternoon

12:00 - 13:45 Welcome, Registration, Lunch

L'Agora Restaurant, Orléans University Campus

_______________

IIIA Computer Science Building

Herbrand Amphitheatre

13:55 - 14:00 Workshop Opening

Session 1: VARIABLE DEPENDENCIES

Chair: Rosanna VERDE

14:00 – 14:25 Explanatory Power of a Symbolic Data Table

Edwin DIDAY, University Paris-Dauphine, France

14:25 – 14:50 Methods for Analyzing Joint Distribution Valued Data

and Actual Data Sets

Masahiro MIZUTA, Hiroyuki MINAMI, IIC, Hokkaido University, Japan

14:50 – 15:15 Advances in regression models for interval variables: a copula based model

Eufrasio LIMA NETO, Ulisses DOS ANJOS, Univ. Paraiba, Joao Pessoa, Brasil

15:15 – 15:40 Symbolic Bayesian Networks

Edwin DIDAY, University Paris-Dauphine

Richard EMILION, MAPMO, University of Orléans, France

15:40 – 16:10 Coffee Break

Session 2: STATISTICAL APPROACHES

Chair: Didier CHAUVEAU

16:10 – 16:35 Maximum Likelihood Estimations for Interval-Valued Variables

Lynne BILLARD, University of Georgia, USA

16:35 – 17:00 Function-valued Image Segmentation using Functional Kernel Density Estimation

Laurent DELSOL, Cecile LOUCHET, MAPMO, University of Orléans, France

17:00 – 17:25 Outlier Detection in Interval Data

A. Pedro DUARTE SILVA, UCP Porto, Portugal

Peter FILZMOSER, TU Vienna, Austria

Paula BRITO, FEP & LIAAD-INESC TEC, Univ. Porto, Portugal

19:30

Workshop Dinner. 'Le Martroi' restaurant. 12, Place du Martroi. Orléans

Tram stop: 'De Gaulle' or 'République'

Explanatory Power of a Symbolic Data Table

Edwin Diday (Paris-Dauphine University)

The main aim of this talk is to study the « explanatory » quality of a symbolic data table. We give

criterion based on entropy and discrimination which are shown to be complementary. We show that

under some conditions the best descriptive variables of the concepts are also the best predictive one

of the concepts.

Methods for Analyzing Joint Distribution Valued Data

and Actual Data Sets

Masahiro Mizuta1*, Hiroyuki Minami1*

1. Advanced Data Science Laboratory, Information Initiative Center, Hokkaido University, JAPAN

*Contact author: mizuta@iic.hokudai.ac.jp

Keywords: Simultaneous Distribution Valued Data, Parkinsons Telemonitoring Data

Analysis of distribution valued data is one of the hottest topics in SDA: especially, joint distribution

(or, simultaneous distribution) valued data. In this talk, we introduce methods for them and show an

open actual data. We assume that we have n concepts (or, objects) and each of concepts is described

by distribution. Many methods are proposed. Key ideas are summarized as follows: (1) Use of

distances between concepts, (2) Use o f parameters of distributions, (3) Use o f quantile function.

When the concepts are described by joint distributions, the approach (1) is natural. Igarashi (2015)

proposed a method based on it. There is room to adopt the approaches (2) and (3).

In order to study methods for data analysis, good actual data sets are helpful. But, there are not so

many good datasets of joint distribution valued data. Mizuta (2014) showed a dataset; Monitoring

Post Data in around Fukushima Prefecture. Another good data set is Parkinsons Telemonitoring data

set, which can be gotten from Web

(https://archive.ics.uci.edu/ml/datasets/Parkinsons+Telemonitoring). I will introduce them.

Acknowledgment: I wish to thank Mr. Igarashi. A part of this work is based on the results of

Igarashi (2015).

References

M. Mizuta (2012). Analysis of Distribution Valued Dissimilarity Data. In Challenges at the

Interface of Data Analysis, Computer Science, and Optimization. Springer, 23-28.

M. Mizuta (2014). Symbolic Data Analysis for Big Data. Proceedings of 2014 Workshop in

Symbolic Data Analysis 59.

K. Igarashi, H. Minami, M. Mizuta (2015). Exploratory Methods for Joint Distribution Valued Data

and Their Application. Communications for Statistical Applications and Methods, 2015, Vol.

22, No. 3, 265–276, DOI: http://dx.doi.org/10.5351/CSAM.2015.22.3.265.

A. Irpino, R. Verde (2015). Basic Statistics for Distributional Symbolic Variables: A New Metricbased Approach. Advances in Data Analysis and Classification, Vol.9, No.2, 143-175.

Advances in regression models for interval variables: a copula

based model

Eufrásio Lima Neto1,*, Ulisses dos Anjos1

1. Department of Statistics, Federal University of Paraíba, João Pessoa, PB, Brazil.

*Contact author: eufrasio@de.ufpb.br

Keywords: Inference, Copulas, Regression, Interval Variable.

Regression models are widely used to solve problems in many fields. However, the uses of inferential

techniques play an important role in order to validate these models. Recently, some contributions were

presented in order to fit a regression model for interval-valued variables. We start this talk discussing

about some of these techniques. Then, it is stated a regression model for interval-valued variables based

on copula theory that allows more flexibility for the model’s random component. In this way, the main

advance of the new approach is that is possible to consider inferential procedures over the parameters

estimates as well as goodness-of-fit measures and residual analysis based on general probabilistic

background. A Monte Carlo simulation study demonstrated asymptotic properties for the maximum

likelihood estimates obtained form the copula regression model. Applications to real data sets are also

considered.

!

References

Brito, P. and Duarte Silva, A.P. (2012). Modelling interval data with normal and skew-normal

distributions. Journal of Applied Statistics 39, 3–20.

Blanco-Fernández, A., Corral, N. and González-Rodríguez, G. (2011). Estimation of a flexible simple

linear model for interval data based on set arithmetic. Computational Statistics Data Analysis 55, 2568–

2578.

Diday, E. and Vrac, M. (2005). Mixture decomposition of distributions by copulas in the symbolic data

analysis framework. Discrete Applied Mathematics 147(1), 27–41.

Lima Neto, E.A. and Anjos, U.U. (2015). Regression model for interval-valued variables based on

copulas. Journal of Applied Statistics, 1–20.

Lima Neto, E.A., Cordeiro, G.M. and De Carvalho, F.A.T. (2011). Bivariate symbolic regression

models for interval-valued variables, Journal of Statistical Computation and Simulation 81, 1727–1744.

Symbolic Bayesian Networks

Edwin DIDAY1 , Richard EMILION2,

?

1. CEREMADE, University Paris-Dauphine

2. MAPMO, University of Orléans, France

? Contact author: richard.emilion@univ-orleans.fr

Keywords: Bayesian network, Conditional distribution, Dirichlet distribution, Independence test.

Bayesian networks, see e.g. [1], are probabilistic directed acyclic graphs used

for system behavior modelling through conditional distributions. They generally

deal with coorelated categorical or real-valued random variables. We consider

Bayesian networks dealing with probability-distribution-valued random variables.

1. Statistical setting

Le X = (X1 , . . . , Xj , . . . , Xp ) be a random vector, p 1 being a integer and each

Xj taking values in the space of probability measures defined on a measurable

space (Vj , Vj ), j = 1, . . . , p. Let (Xk,1 , . . . , Xk,j , . . . , Xk,p ) k = 1, . . . , K be a

sample of size K of X. Consider k as a row index and j as a column one.

2. Motivation

Actually the sample (Xk,1 , . . . , Xk,j , . . . , Xk,p ) k = 1, . . . , K is not observed but

only estimated from observed data. In symbolic data analysis (SDA), each observed data belong toQa class among K disjoint classes, say c1 , . . . , cK . They can

be either vectors in pj=1 Vj or in some Vj as seen in the two examples below

which illustrate two different situations. The empirical distribution of the data in

Vj which belong to class ck is an estimation of the probability distribution Xk,j .

This distribution is considered as the j-th descriptor of class ck .

2.1 Paired Samples

In the well-known Fisher’s iris data set, K = 3, c1 = ’setosa’, c2 = ’versicolor, c3

= ’virginica’, p = 4. The observations are 50 iris in each of these 3 classes. The

observed samples are paired since each iris is described by a vector of 4 data. As

an example, X3,2 is the probability distribution of sepal width in ’virginica’ class.

2.2 Unpaired Samples

Let c1 , . . . , ck be K students and p professors that grade several students’ exams.

Let Xk,j be the distribution of student ck grades given by professor j. It is seen

here that the samples are unpaired since the exams and the number of exams can

differ from one professor to another.

2.3 Dependencies

Clearly, in the case of paired samples, within each class, data of descriptor j are

correlated to data of descriptor j0 while this correlation is meaningless in the case

of unpaired samples. However considering the K pairs of estimated distributions

(Xk,j , Xk,j0 ), k = 1, . . . , K, j, j0 = 1, . . . , p, j 6= j0, it is seen that the random

distributions Xj and Xj0 can be correlated. This motivates us to consider Bayesian

networks dealing with probability distributions.

3. The case of finite sets

Assume Vj finite so that Xk,j is a probability vector of frequencies which size

can depend on j. Therefore, Bayesian networks are built by testing the independence (resp. the correlation) between the two random vectors Xj and Xj0 . We

have used the indep.etest() function implemented in the ’energy’ package for R

[3]. Distributions and conditional distributions are estimated using kernels in the

nonparametric case while Dirichlet distributions are used in the parametric case.

4. The case of densities

Assume that each Vj is a measurable subsets of some Rdj and that Xk,j has a

density fk,j w.r.t. the Lebesgue measure. Independence tests can be performed

and conditional distributions can be estimated using some functional data analysis

methods either using a finite number of coordinates on some basis to be in the

finite sets case, or using kernel estimators w.r.t. a distance on a function space [2].

References

[1] Darwich, A. (2009). Modeling and Reasoning with Bayesian Networks. Cambridge University Press.

[2] Ramsey, J.O. - Silverman, B.W. (2005) Functional Data Analysis. Springer.

[3] Szekely, G.J. - Rizzo, M.L. (2013). The distance correlation t-test of independence in high dimension. J. Mult. Variate Anal. 17, 193-213. http:

//dx.doi.org/10.1016/j.jmva.2013.02.012

Maximum Likelihood Estimation

for Interval-valued Data

Lynne BILLARD, University of Georgia

Bertrand and Goupil (2000) obtained empirical formulas for the mean and variance of interval-valued observations. Billard (2008) obtained empirical formulas

for the covariance of interval-valued observations. These are in effect moment estimators. We show how, under certain probability assumptions, these are the same

as the maximum likelihood estimators for the corresponding population parameters.

Function-valued image segmentation

using functional kernel density estimation

Laurent Delsol1 , Cécile Louchet1,

?

1. MAPMO, UMR CNRS 7349, Université d’Orléans, Fédération Denis Poisson FR CNRS 2964

? Contact author: cecile.louchet@univ-orleans.fr

Keywords: Hyperspectral imaging, functional kernel density estimation, minimal partition.

Introduction. More and more image acquisition systems record high-dimensional vectors for

each pixel, as it is the case for hyperspectral imaging or dynamic PET imaging, to cite only them.

In hyperspectral imaging, each pixel is associated with a light-spectrum containing up to several hundreds of radiance values, each corresponding to narrow spectral bands. In dynamic PET

images, each pixel is associated with a time activity curve giving a radioactivity measurement

throughout the (discretized) time after radiotracer injection. In both cases, the data accessible for

each pixel is a vector coming from the discretization of a function with physical meaning. It is thus

interesting to extend classical image processing techniques on function-valued images.

Image segmentation is the task of partitioning the pixel grid of an image such that the obtained

regions correspond to distinct physical objects; it can be described as automatic search of regions

of interest. It is based on the assumption that each region has different effects on the image values.

Many research works have been about segmenting single- or color-valued images, but little has

been done on function-valued images.

In symbolic data analysis terminology, each pixel is an object whose symbolic description is a

function, and image segmentation can be seen as a symbolic clustering method.

In our work, we recall the most famous single-valued image segmentation method, namely the

minimal partition model (Mumford, Shah, 1989), and adapt it to our case using functional estimation tools, namely functional kernel density estimation, depending on a distance between functions.

We discuss the choice of this distance, and test it on a hyperspectral image and give an application

to gray-level texture image segmentation.

Function-valued image segmentation. Let y be an image defined on a finite grid ⌦, with values

in a function set F. We aim at segmenting y into L regions, that is at finding x : ⌦ ! {1, . . . , L}

such that each region {s 2 ⌦ : xs = l} (1 l L) corresponds to one object. A Bayesian

approach is to consider the segmentation x̂ that maximizes P (X = x|Y = y), or equivalently,

that maximizes fY |X=x (y)PX (x) among x : ⌦ ! {1, . . . , L}. The widely used minimal partition

model estimates the data term fY |X=x using an isotropic Gaussian model. For the regularity term,

it uses a parameter > 0 and chooses the Potts (1952) model

PX (x) / e

P

s⇠t

1xs 6=xt

,

(1)

where ⇠ refers

P to a neighborhood system in ⌦, typically the 4 or the 8 nearest neighbor system. The

potential s⇠t 1xs 6=xt corresponds to a discrete boundary length between regions: short boundary

segmentations are preferred.

In our context, the isotropic Gaussian assumption on the data term is not admissible because it is

not able to account for any function regularity. The only assumption we make is that the functions

Q

(ys )s2⌦ are independent conditionally to the segmentation, yielding fY |X=x (y) = s2⌦ fYs |Xs =xs (ys ),

each term of which can be estimated using functional kernel density estimation (Dabo-Niang,

2004)

✓

◆

X

d(y

,

y

)

t

s

fˆYs |Xs =xs (ys ) /

K

,

(2)

h

t:x =x

t

s

where K is a positive kernel, h > 0 is the K’s bandwidth, and d is a distance on the space of

functions F. The normalizing factor, not written here, is linked to the small ball probability, but

luckily enough does not depend on the segmentation.

Results. The maximization algorithm details can be found in Delsol, Louchet (2014), so we prefer focusing here on the choice of the distance d. In many hyperspectral images, the L2 distance

between the derivatives of the functions (Tsai, Philpot, 1998) is able to accurately measure a discrepancy between functions because it is more robust to vertical shifts of the functions, even if it

is more sensitive to noise than usual L2 distance. This distance was used in Figure 1 (a-c) where a

fake lemon slice was well distinguished from a real one.

Another application to gray-level image texture segmentation is proposed. A texture will be described by the density of gray levels estimated at each pixel’s neighborhood: a simple gray-level

image becomes a function-valued image, where each function is a density. In this particular case,

natural choices of distance are transport distances: they allow for small horizontal shifts, and are

straighforward to compute in dimension 1. The L2 transport distance was used in a mouse brain

MRI to extract the cerebellum region which is more textured than the others (Figure 1 (d-e)).

(a)

(b)

(c)

(d)

(e)

Figure 1: (a) One slice of hyperspectral image (fake and real lemon) (b) Functions attached to a

sample of pixels (c) Segmentation using the distance between derivatives (d) MRI of mouse brain

(e) Cerebellum segmentation using L2 transport distance.

References

S. Dabo-Niang (2004). Kernel density estimator in an infinite-dimensional space with a rate of

convergence in the case of diffusion process. Applied Mathematics Letters, 17(4), pp. 381–386.

L. Delsol, C. Louchet (2014). Segmentation of hyperspectral images from functional kernel density

estimation. Contributions in infinite-dimensional statistics and related topics, 101–105.

D. Mumford, J. Shah (1989). Optimal approximations by piecewise smooth functions and associated variational problems. Comm. on Pure and Appl. Math., XLII (5), 577–685.

R. B. Potts (1952). Some generalized order-disorder transformations. Mathematical Proceedings,

48(1), 106–109.

F. Tsai, W. Philpot (1998). Derivative analysis of hyperspectral data. Remote Sensing of Environment, 66(1), 41–51.

Outlier Detection in Interval Data

?

A. Pedro Duarte Silva1 , Peter Filzmoser2 , Paula Brito3, ,

1. Faculdade de Economia e Gestão & CEGE, Universidade Católica Portuguesa, Porto, Portugal

2. Institute of Statistics and Mathematical Methods in Economics, Vienna University of Technology, Vienna,

Austria

3. Faculdade de Economia & LIAAD-INESC TEC, Universidade do Porto, Porto, Portugal

? Contact author: mpbrito@fep.up.pt

Keywords: Mahalanobis distance, Modeling interval data, Robust estimation, Symbolic Data

Analysis

In this work we are interested in identifying outliers in multivariate observations that are consisting

of interval data. The values of an interval-valued variable may be represented by the corresponding

lower and upper bounds or, equivalently, by their mid-points and ranges. Parametric models have

been proposed which rely on multivariate Normal or Skew-Normal distributions for the mid-points

and log-ranges of the interval-valued variables. Different parameterizations of the joint variancecovariance matrix allow taking into account the relation that might or might not exist between

mid-points and log-ranges of the same or different variables Brito and Duarte Silva (2012).

Here we use the estimates for the joint mean t and covariance matrix C for multivariate outlier

detection. The Mahalanobis distances D based on these estimates provide information on how

different individual multivariate interval data are from the mean with respect to the overall covariance structure. A critical value based on the Chi-Square distribution allows distinguishing outliers

from regular observations. The outlier diagnostics is particularly interesting when the covariance

between the mid-points and the log-ranges in restricted to be zero. Then, Mahalanobis distances

can be computed separately for mid-points and log-ranges, and the resulting distance-distance plot

identifies outliers that can be due to deviations with respect to the mid-point, or with respect to

the range of the interval data, or both. However, if t and C are chosen to be the classical sample

mean vector and covariance matrix this procedure is not reliable, as D may be strongly affected

by atypical observations. Therefore, the Mahalanobis distances should be computed with robust

estimates of location and scatter.

Many robust estimators for location and covariance have been proposed. The minimum covariance

determinant (MCD) estimator Rousseeuw (1984, 1985) uses a subset of the original sample, consisting of the h points in the dataset for which the determinant of the covariance matrix is minimal.

Weighted trimmed likelihood estimators Hadi and Luceño (1997) are also based on a sample subset, formed by the h observations that contribute most to the likelihood function. In either case, the

proportion of data points to be used needs to be specified a priori. For multivariate Gaussian data,

the two approaches lead to the same estimators Hadi and Luceño (1997); Neykov et al (2007).

In this work we consider the Gaussian model for interval data, and employ the above approach

based on minimum (restricted) covariance esimators, with the correction suggested in Pison et

al (2002) and with the trimming percentage selected by a two-stage procedure. We evaluate our

proposal with an extensive simulation study for different data structures and outlier contamination

levels, showing that the proposed approach generally outperforms the method based on simple

maximum likelihood estimators. Our methodology is illustrated by an application to a real dataset.

References

Brito, P. and Duarte Silva, A.P. (2012) Modelling interval data with Normal and Skew-Normal

distributions. Journal of Applied Statistics 39 (1), 3–20.

Hadi, A.S., and Luceño, A. (1997) Maximum trimmed likelihood estimators: a unified approach,

examples, and algorithms. Computational Statistics & Data Analysis 25 (3), 251–272.

Neykov, N., Filzmoser, P., Dimova, R. and Neytchev, P. (2007) Robust fitting of mixtures using

the trimmed likelihood estimator. Computational Statistics & Data Analysis 52 (1), 299–308.

Pison, G., Van Aelst, S. and Willems, G. (2002) Small sample corrections for LTS and MCD.

Metrika 55(1-2), 111–123.

Rousseeuw, P.J. (1984) Least median of squares regression. Journal of the American Statistical

Association 79 (388), 871–880.

Rousseeuw, P.J. (1985) Multivariate estimation with high breakdown point. Mathematical Statistics and Applications 8, 283–297.

SDA 2015

November 17 – 19

University of Orléans, France

Wednesday, November 18

Morning

IIIA Computer Science Building

Herbrand Amphitheatre

Session 3: PRINCIPAL COMPONENT ANALYSIS

Chair: Edwin DIDAY

09:00 – 09:25 The Data Accumulation Method

Manabu ICHINO, SSE,Tokyo Denki University, Japan

09:25 – 09:50 Principal Curves and Surfaces for Interval-Valued Variables

Jorge Arce G., Oldemar RODRIGUEZ, University of Costa Rica, Costa Rica

09:50 – 10:15 Principal Component Analyses of Interval Data

using Patterned Covariance Structures

Anuradha ROY, University of Texas, USA

10:15 – 10:40 Population and Robust Symbolic PCA

Rosario OLIVEIRA, Margarida VILELA, Rui VALADAS

IST, University of Lisbon, Portugal,

Paulo SALVADOR, TI, University of Aveiro, Portugal

10:40 – 11:05 Coffee Break

Session 4: CLUSTERING 1

Chair: Yves LECHEVALLIER

11:05 – 11:30 A Topological Clustering Method for Histogram Data

Guénaël CABANES, Younès Bennani, LIPN-CNRS, Univ. Paris 13, France

Rosanna VERDE, Antonio IRPINO, Second University, Naples, Italy

11:30 – 11:55 A Co-Clustering Algorithm for Interval-Valued Data

Francisco DE CARVALHO, Roberto C. FERNANDES, UFPE, Recife, Brazil

11:55 – 12:20 Dynamic Clustering with Hibrid L1, L2 and L∞ Distances

Leandro SOUZA, Renata M. C. R. SOUZA, Getulio J. A. AMARAL

UFPE, Recife, Brazil

12:20

Lunch. L'Agora restaurant, Campus

The Data Accumulation Method: Dimensionality Reduction,

PCA, and PCA Like Visualization

Manabu Ichino

School of Science and Engineering, Tokyo Denki University

ichino@mail.dendai.ac.jp

Keywords: Data Accumulation, Monotonicity, Dimensionality Reduction, PCA,

Visualization

Abstract

The data accumulation method is based on the accumulation of feature values after the

zero-one normalization of feature variables. Let x1, x2,..., xd be the normalized feature

values that describe an object. The data accumulation generate new values as y1 = x1, y2 =

x1 + x2,..., yd = x1 + x2 + ··· + xd. These new values define a monotone line graph, called

the accumulated concept function. We regard the value yd as the maximum concept size

of the object. The roughest approximation of the concept function for the object is the

selection of the minimum and the maximum values y1 and yd. On the other hand, the

selection of all of d-accumulated values yields the finest approximation for the function.

By the sampling of the accumulated values we achieve various approximation level.

From this viewpoint, this paper describes data accumulation of categorical multi-valued

data, PCA and PCA like visualization for symbolic data, and the dimensionality reduction

of high-dimensional data and PCA.

References

H-H. Bock and E. Diday (2000). Analysis of Symbolic Data - Exploratory Methods for

Extracting Statistical Information from Complex Data. Heidelberg: Springer.

L. Billard and E. Diday (2007). Symbolic Data Analysis - Conceptual Statistics and Data

Mining. Chichester: Wiley.

E. Diday and M. Noirhomme-Fraiture (2008). Symbolic Data Analysis and the SODAS

Software. Chichester: Wiley.

M. Ichino and P. Brito (2014). The data accumulation graph (DAG) to visualize multidimensional symbolic data. Workshop in Symbolic Data Analysis. Taipei, Taiwan.

P. Brito and M. Ichino (2014). The data accumulation graph (DAG): visualization of high

dimensional complex data. 21st International Conference on Computational

Statistics. Geneva, Swiss.

M. Ichino and P. Brito (2015). A hierarchical conceptual clustering based on the quantile

method for mixed feature-type data. (Submitted to the IEEE Trans. SMC).

M. Ichino and P. Brito (2015). The data accumulation PCA to analyze periodically

summarized multiple data tables. (Submitted to the IEEE Trans. SM

Principal Curves and Surfaces to Interval Valued Variables

Jorge Arce G.

⇤

Oldemar Rodrı́guez

†

June 26, 2015

Abstract

In this paper we propose a generalization to symbolic interval valued variables of the

Principal Curves and Surfaces method proposed by T. Hastie in [4]. Given a data set X with n

observations and m continuos variables the main idea of Principal Curves and Surfaces method

is to generalize the principal component line, providing a smooth one-dimensional curved

approximation to a set of data points in Rm . A principal surface is more general, providing a

curved manifold approximation of dimension 2 or more. In our case we are interested in finding

the main principal curve that approximates better symbolic interval data variables. In [2] and

[3], the authors proposed the Centers and the Vertices Methods to extend the well known

principal components analysis method to a particular kind of symbolic objects characterized

by multi-valued variables of interval type. In this paper we generalize both, the Centers and

the Vertices Methods, finding a smooth curve that passes through the middle of the data

X in an orthogonal sense. Some comparisons of the proposed method regarding the Centers

and the Vertices Methods are made, these was done using the RSDA package using Ichino

and Interval Iris Data sets, see [8] and [1]. To make these comparisons we have used the

cumulative variance and the correlation index.

Keywords

Interval-valued variables, Principal Curves and Surfaces, Symbolic Data Analysis.

References

[1] Billard, L. & Diday, E. (2006) Symbolic Data Analysis: Conceptual Statistics and Data Mining,

John Wiley & Sons Ltd, United Kingdom.

[2] Cazes P., Chouakria A., Diday E. et Schektman Y. (1997). Extension de l’analyse en composantes principales à des données de type intervalle, Rev. Statistique Appliquée, Vol. XLV

Num. 3 pag. 5-24, France.

⇤

†

University of Costa Rica, San José, Costa Rica & Banco Nacional de Costa Rica;E-Mail: jarceg@bncr.fi.cr

University of Costa Rica, San José, Costa Rica; E-Mail: oldemar.rodriguez@ucr.ac.cr

1

[3] Douzal-Chouakria A., Billard L., Diday E. (2011). Principal component analysis for intervalvalued observations. Statistical Analysis and Data Mining, Volume 4, Issue 2, pages 229-246.

Wiley.

[4] Hastie,T. (1984) Principal Curves and Surface, Ph.D Thesis Stanford University.

[5] Hastie,T. & Weingessel,A. (2014). princurve - Fits a Principal Curve in Arbitrary Dimension.

R package version 1.1-12

[http://cran.r-project.org/web/packages/princurve/index.html]

[6] Hastie,T. & Stuetzle, W. (1989). Principal Curves, Journal of the American Statistical Association, Vol. 84 406: 502–516.

[7] Hastie, T., Tibshirani, R. and Friedman, J. (2008). The Elements of Statistical Learning; Data

Mining, Inference and Prediction. New York: Springer.

[8] Rodrı́guez,

O. with contributions from Olger Calderon and Roberto Zuniga (2014). RSDA - R to Symbolic Data Analysis. R package version 1.2.

[http://CRAN.R-project.org/package=RSDA]

[9] Rodrı́guez, O. (2000). Classification et Modèles Linéaires en Analyse des Données Symboliques.

Ph.D. Thesis, Paris IX-Dauphine University

2

Principal component analyses of interval data using

patterned covariance structures

Anuradha Roy

Department of Management Science and Statistics

The University of Texas at San Antonio

One UTSA Circle, San Antonio, TX 78249, USA

Contact author: Anuradha.Roy@utsa.edu

Keywords: Equicorrelated covariance structure, Jointly equicorrelated covariance structure, Interval data

New approaches to derive the principal components of interval data (Billard and Diday, 2006) are

proposed by using block variance-covariance matrices, namely equicorrelated and jointly equicorrelated covariance structures (Leiva and Roy, 2011). This is accomplished by considering each

interval as two repeated measures at the lower and upper bounds of the interval (two-level multivariate data), and then by assuming equicorrelated covariance structure for the data. That is,

for interval data with p intervals, the data no more belong to the p dimensional space, but in

2p dimensional space, and the set of p lower bounds is correlated with the set of p upper bounds.

The second set of p points is another possible realization of the first set of p points. So, we start

with random points in dimension p and the computations are done as if we are in dimension 2p.

Eigenblocks and eigenmatrices are obtained based on the eigendecomposition of the block variancecovariance matrix of the data. We then analyze these eigenblocks and the corresponding principal vectors together in some seemly sense to get the adjusted eigenvalues and the corresponding

eigenvectors of the interval data. It is shown that the (p ⇥ 1) dimensional first principal vector

corresponding to the first eigenblock represents the midpoints of the lower bounds and the corresponding upper bounds of the intervals. Similarly, the (p ⇥ 1) dimensional second principal

vector corresponding to the second eigenblock represents the midranges of the lower bounds and

the corresponding upper bounds of the intervals.

We then work independently with these principal vectors (the components of whom are midpoints

and midranges of the intervals respectively) and their corresponding variance-covariance matrices,

i.e., the corresponding eigenblocks to get the eigenvalues and eigenvectors of the interval data. If

there is some additional information (like brands etc.) in the interval data, the interval data can be

considered as three-level multivariate data and one can analyze it by assuming jointly equicorrelated covariance structure. In this case, it is shown that the grand midpoints and grand midranges

are the first and third principal vectors. The second principal vector turns out to be the additional

information in the interval data. The proposed methods are illustrated with a real data set.

References

Billard L. and Diday, E. (2006). Symbolic Data Analysis: Conceptual Statistics and Data Mining

John Wiley & Sons Ltd., England.

Leiva, R. and Roy, A. (2011). Linear Discrimination for Multi-level Multivariate Data with Separable Means and Jointly Equicorrelated Covariance Structure. J. Statist. Plann. Inference 141,

1910–1924.

Population and Robust Symbolic Principal

Component Analysis

?

M. Rosário Oliveira1 , Margarida Vilela1 , Rui Valadas2 , Paulo Salvador3

1. CEMAT and Departmento de Matemática, Instituto Superior Técnico, Universidade de Lisboa, Portugal

2. DEEC and Instituto de Telecomunicações, Instituto Superior Técnico, Universidade de Lisboa, Portugal

3. DETI and Instituto de Telecomunicações, Universidade de Aveiro, Portugal

? Contact author: rosario.oliveira@tecnico.ulisboa.pt

Keywords: Principal component analysis,

Internet traffic.

Interval symbolic data,

Robust methods,

Principal component analysis (PCA) is one of the most used statistical methods in the analysis of

real problems. In the symbolic data analysis (SDA) context there have been several proposals to

extend this methodology. The methods CPCA (centers) and VPCA (vertices), pioneers in symbolic

PCA and proposes by Cazes (1997) (vide Billard, 2006) are the best known examples of this family

of methods. However, in recent years many other alternatives have emerged in the literature (vide

e.g. Wang, 2012).

In this work, we present the population formulations corresponding to three of the symbolic PCA

algorithms for interval-data: method of the centers (CPCA), method of the vertices (VPCA), and

complete information PCA (CIPCA) (Wang, 2012). The theoretical formulations define a general

method which allows substantial improvements on the existing algorithms in terms of time and

number of operations, making them easily applicable to datasets with large number of symbolic

variables and high number of objects. Moreover, this formulation enables the definition of the

population symbolic components even when one or more variables are degenerate.

Furthermore, analogously to conventional (non-symbolic) data, we have verified that the existence

of atypical observations could distort the sample symbolic principal components and correspondent

scores. To overcome this problem in the context of SDA, we defined two families of robust methods

for symbolic PCA: one based on robust covariance matrices (Filzmoser, 2011) and another based

on Projection Pursuit (Croux, 2007).

To make this new statistical tools easily used in the analysis of real problems, we also developed a

web application, using the Shiny web application framework for R, which includes several tools to

analyse, represent and perform symbolic (classical and robust) PCA in interval data, in an interactive manner. In this app it is possible to compare the classical symbolic PCA methods with all the

new robust approaches proposed in this work and its operation will be illustrated with telecommunications data.

For conventional data, PCA is frequently used as an intermediate step in the analysis of complex

problems (Johnson, 2007), and is commonly used as input for other multivariate methods. To

pursue this goal, we designed R routines to make conversions between different representations

of interval-valued data, making easier to use several R SDA packages consecutively, in the same

analysis. These packages were developed independently and each one requires reading the data in

a specific format.

Acknowledgment

This work was partially funded by Fundação para a Ciência e a Tecnologia (FCT) through projects

PTDC/EEI-TEL/5708/2014 and UID/Multi/04621/2013.

References

Billard, L., Diday, E. (2006). Symbolic Data Analysis: Conceptual Statistics and Data Analysis

John Wiley and Sons, Chichester.

Cazes, P., Chouakria, A., Diday, E., Schektman, Y. (1997). Extensions de l’analyse en composantes

principales à des données de type intervalle. Revue de Statistique Appliquée 45(3), 5-24.

Croux, C., Filzmoser, P., Oliveira, M. R. (2007). Algorithms for Projection - Pursuit robust principal component analysis. Chemometr. Intell. Lab. 87(2), 218–225.

Filzmoser, P., Todorov, V. (2011). Review of robust multivariate statistical methods in high dimension. Anal. Chim. Acta 705, 2 – 14.

Johnson, R. A. and Wichern, D. W. (2007). Applied Multivariate Statistical Analysis Prentice-Hall,

Inc., Upper Saddle River, NJ, USA.

Wang, H., Guan, R., Wu, J. (2012). CIPCA: Complete-Information-based Principal Component

Analysis for Interval-valued Data. Neurocomputing 86, 158–169.

A topological clustering method for histogram data

?

Guénaël Cabanes 1 , Younès Bennani1 , Rosanna Verde2 , Antonio Irpino2

1. LIPN-CNRS, UMR 7030, Université Paris 13, France

2. Dip. Scienze Politiche “Jean Monnet” Seconda Università di Napoli, Italia

? Contact author: cabanes@lipn.univ-paris13.fr

Keywords: Clustering, Self-Organising Map, Histogram data, Wasserstein distance

We present a clustering algorithm for histogram data based on a Self-Organising Map (SOM)

learning. SOM for symbolic data was firstly proposed by Bock (Bock and Diday, 2000) to visualise in a reduced subspace the structure of symbolic data. Further SOM method for particular

symbolic data, the interval data, have been developed using suitable distances for interval data, like

Hausdorff distance; L2 distance, adaptive distances (Irpino and Verde, 2008). In the analysis of

histogram data, that represent another representation of symbolic data by empirical distributions,

SOM has been proposed by De Carvalho et al. (2013) based on the Wasserstein L2 distance to

clustering distributions. Adaptive Wasserstein distance has been also developped in this context

to find, automatically, weights for the variables as well as for the clusters. However, the most

part of these methods can provide a quantification and a visualization of symbolic data (intervals,

histograms) but cannot be used directly to obtained a clustering of the data. The recent algorithm

proposed by Cabanes et al. (2013): S2L-SOM learning for interval data, is a two-level clustering

algorithm based on SOM that combine the dimension reduction by SOM and the clustering of the

data in a reduced space in a certain number of homogeneous clusters. Here, we propose an extension of this approach to histogram data. In the clustering phase is used the L2 Wasserstein distance

according to the dynamic clustering algorithm proposed by Verde and Irpino (2006). The number

of cluster is not a priori fixed as parameter of the clustering algorithm but it is automatically found

according to an estimation of local density and connectivity of the data in the original space, as in

Cabanes et al. (2012).

References

Bock, H.-H. and E. Diday, Eds. (2000). Analysis of Symbolic Data. Exploratory methods for

extracting statistical information from complex data. Springer Verlag, Heidelberg.

Cabanes, G., Y. Bennani, R. Destenay, and A. Hardy (2013). A new topological clustering algorithm for interval data. Pattern Recognition 46(11), 3030–3039.

Cabanes, G., Y. Bennani, and D. Fresneau (2012). Enriched topological learning for cluster detection and visualization. Neural Networks (32), 186–195.

De Carvalho, F. d. A., A. Irpino, and R. Verde (2013). Batch self organizing maps for interval and

histogram data (ISI ed.)., pp. 143–154. Curran Associates, Inc. (2013).

Irpino, A. and R. Verde (2008). Dynamic clustering of interval data using a wasserstein-based

distance. Pattern Recogn. Lett. 29(11), 1648–1658.

Verde, R. and A. Irpino (2006). Dynamic clustering of histograms using wasserstein metric. In

A. Rizzi and M. Vichi (Eds.), Proceedings in Computational Statistics, COMPSTAT 2006, Heidelberg, pp. 869–876. Compstat 2006: Physica Verlag.

A co-clustering algorithm for interval-valued data

?

Francisco de A. T. de Carvalho1, , Roberto C. Fernandes 1

1. Centro de Informatica - CIn/UFPE

? Contact author: fatc@cin.ufpe.bf

Keywords: Co-clustering, Double k-means, Interval-valued data, Symbolic data analysis

Co-clustering, also known as bi-clustering or block clustering, methods aim simultaneously cluster objects and variables of a data set. They resume the initial data matrix into a much smaller

matrix representing homogeneous blocks or co-clusters of similar objects and variables (Govaert,

1995; Govaert and Nadif, 2013). A clear advantage of this approach, Rather than the traditional

sequential approach, is that the simultaneous clustering of objects and variables may provide news

insights about the association between isolated clusters of objects and isolated clusters of variables

(Mechelen et al., 2004). Co-clustering is being used successfully in many different areas such as

text mining, bioinformatics, etc.

This presentation aims at giving a co-clustering algorithm able to simultaneously cluster objects

and interval-valued variables. Interval-valued variables are needed, for example, when an object

represents a group of individuals and the variables used to describe it need to assume a value which

express the variability inherent to the description of a group. Interval-valued data arise in practical

situations such as recording monthly interval temperatures at meteorological stations, daily interval

stock prices, etc. Another source of interval-valued data is the aggregation of huge databases into

a reduced number of groups, the properties of which are described by interval-valued variables.

Therefore, tools for interval-valued data analysis are very much required (Bock and Diday, 2000) .

Symbolic data analysis has provided suitable tools for clustering objects described by intervalvalued variables: agglomerative (Gowda and Diday, 1991; Guru et al, 2004) and divisive (Gowda

and Ravi, 1995; Chavent, 2000) hierarchical methods, partitioning hard (Chavent and Lechevallier,

2002; De Souza and De Carvalho, 2004; De Carvalho et al., 2006) and fuzzy (Yang et al, 2004; De

Carvalho, 2007) cluster algorithms. However, much more less attention was given for simultaneous

clustering of objects and symbolic variables (Verde and Lechevallier, 2001).

This presentation gives a co-clustering algorithm for interval-valued data using suitable Euclidean,

City-Block and Hausdorff distances. The presented algorithm is a double k-means type (Govaert, 1995; Govaert and Nadif, 2013). It means that it is an iterative three steps (representation,

allocation of variables, allocation of objects) relocation algorithm that looks, simultaneously, for

the representatives of the co-clusters (blocks), for a partition of the set of variables into a fixed

number of clusters and for a partition of the set of objects also in a fixed number of clusters, such

that a clustering criterion (objective function) measuring the fit between the initial data matrix and

the matrix of co-clusters representatives is locally minimized. These steps are repeated until the

algorithm convergence that can be proved (Diday and Coll, 1980).

In this presentation it is given the clustering criterion (objective function) and the main steps of

the algorithm (the computation of the co-clusters representatives, the determination of the best

partition of the variables and the determination of the best partition of the objects). The usefulness of the presented co-clustering algorithm is illustrated with its execution on some benchmark

interval-valued data sets.

References

H-.H. Bock and E. Diday (2000). Analysis of Symbolic Data, Springer, Berlin et al.

M. Chavent (2000). Criterion-based divisive clustering for symbolic objects. In in H.-H. Bock,

and E. Diday (Eds.), Analysis of symbolic data, exploratory methods for extracting statistical

information from complex data (Springer, Berlin), pp. 291–311.

M. Chavent and Y. Lechevallier (2002). Dynamical clustering algorithm of interval data: optimization of an adequacy criterion based on Hausdorff distance. In IFCS 2002, 8th Conference

of the International Federation of Classification Societies (Cracow, Poland), pp. 53–59.

F.A.T. De Carvalho (2007). Fuzzy c-means clustering methods for symbolic interval data. Pattern

Recognition Letters, 28, 423–437.

F.A.T. De Carvalho, P. Brito, and H.-H. Bock (2006). Dynamic clustering for interval data based

on L2 distance. Computational Statistics, 2, 231–250.

R.M.C.R. de Souza and F.A.T. de Carvalho (2004). Clustering of interval data based on City-Block

distances. Pattern Recognition Letters, 25, 353–365.

Diday and Coll (1980). Optimisation en Classification Automatique INRIA, Le Chesnay.

Y. El-Sonbaty and M.A. Ismail (1998). Fuzzy clustering for symbolic data. IEEE Transactions on

Fuzzy Systems, 6, 195–204.

G. Govaert (1991). Simultaneous clustering of rows and columns. Control and Cybernetics 24,

437–458.

G. Govaert and M. Nadif (2013). Co-clustering: models, algorithms and applications, Wiley, New

York.

K.C. Gowda and E. Diday (1991). Symbolic clustering using a new dissimilarity measure. Pattern

Recognition 24, 567–578.

K.C. Gowda and T.R. Ravi (1995). Divisive clustering of symbolic objects using the concepts of

both similarity and dissimilarity. Pattern Recognition 28, 1277–1282.

D.S. Guru, B.B. Kiranagi, and P. Nagabhushan (2004). Multivalued type proximity measure and

concept of mutual similarity value useful for clustering symbolic patterns. Pattern Recognition

Letters 25, 1203–1213.

I. V. Mechelen, H. H. Bock, and P. D. Boeck (2004). Two-mode clustering methods: a structured

overview. Statistical methods in medical research, 13, 363–394.

R. Verde and Y. Lechevallier (2005). Crossed Clustering Method on Symbolic Data Tables. In

Proceedings of the Meeting of the Classification and Data Analysis Group (CLADAG) of the

Italian Statistical Society - CLADAG 2003 (Bologna, Italy), pp. 87–94.

M.-S. Yang, P.-Y. Hwang, and D.-H. Chen (2004). Fuzzy clustering algorithms for mixed feature

variables. Fuzzy Sets and Systems, 141, 301–317.

Dynamic clustering of interval data based on hibrid

L1, L2 and L1 distances

?

Leandro C. Souza1,2, , Renata M. C. R. Souza1 , Getúlio J. A. Amaral3

1. Universidade Federal de Pernambuco (UFPE), Cin, Recife - PE, Brazil

2. Universidade Federal Rural do Semi-Árido (UFERSA), DCEN, Mossoró - RN, Brazil

3. Universidade Federal de Pernambuco (UFPE), DE, Recife - PE, Brazil

? Contact author: lcs6@cin.ufpe.br

Keywords: Interval Distance, Interval Symbolic Data, Dynamic Clustering

Cluster analysis is a traditional approach to provide exploratory discovery of knowledge. Dynamic

Partitional clustering approach does partitions on data and associates prototypes to each partition.

Distances measures are necessary to perform the clustering. In literature, a variety of distances are

proposed for clustering interval data, as L1 , L2 and L1 distances. Therefore, interval data has an

extra kind of information, which is not verified on the point one, and it is related with the variation

or uncertain represented by them. In this way, we propose a mapping from intervals to points,

which preserves their location and internal variation and allows formulating the new hybrid L1 , L2

and L1 distances, all based on the Lq distance for point data. These distances are used to perform

dynamic clustering for interval data. Let a set of p-dimensional interval data with N observations,

such as = {I1 , I2 , · · · , In , · · · , IN }. An interval multivariate instance representation In 2 is

given by

In = [a1n , b1n ], [a2n , b2n ], · · · , [apn , bpn ] ,

(1)

where n = 1, 2, · · · , N and ajn bjn , for j = 1, · · · , p. Consider the partition of the set in K

clusters. Let Gk the p-dimensional interval prototype of class k and Ck the k th class. Partitional

dynamic clustering over is proposed to minimize the criterion J , defined by

J =

K X

X

(2)

(In , Gk ),

k=1 In 2Ck

where is a distance function. For the generic interval instance In , the mapping M which preserves location and internal variation generates one point and one vector(both p-dimensional) and

it is given by

([a1n , b1n ], · · · , [apn , bpn ]) ! {(a1n , · · · , apn ), ( 1n , · · · , pn )},

(3)

M

with jn = bjn ajn . As two different kind of information are used, occurs a hybridism on mapping.

The hybrid L1 (HL1 ) distance formulation is given by

dHL1 (In , Gk ) =

p

X

j=1

|ajn

ajGk | + |

j

n

j

Gk |

ajGk |2 + |

j

n

j 2

Gk |

(4)

.

The hybrid L2 (HL2 ) distance has the expression

dHL2 (In , Gk ) =

p

X

j=1

|ajn

.

(5)

The hybrid L1 distance (HL1 ) distance is proposed as follows

p

dHL1 (In , Gk ) = max{|ajn

j=1

p

ajGk |} + max{|

j

n

j=1

j

Gk |},

(6)

where max{·} is the maximum function. To compare the quality of the clustering results, adjusted

rand index (ARI) is used associated with a synthetic dataset. ARI values more close to 1 indicates a

strong agreement between the obtained clusters and a known partition. Bootstrap statistical method

constructs non-parametric confidence intervals for the mean of ARI values for the distances L1 , L2 ,

L1 , HL1 , HL2 and HL1 distances, with 95% of confidence. In the synthetic dataset, intervals

are constructed sorting randomly values for centers and ranges, which delivers three clusters, two

ellipsoidal (with 150 elements) and the third one spherical (with 50 elements). The centers, with

coordinates

(cx , cy ),

bivariate

normal distributions with parameters µ and ⌃, with µ =

✓

◆

✓ follow

◆

2

0

µx

x

and ⌃ =

, with the following values : Cluster 1: µx = 30, µy = 10, x2 =

2

0

µy

y

100 e y2 = 25; Cluster 2: µx = 50, µy = 30, x2 = 36 e y2 = 144; and Cluster 3: µx = 30,

µy = 35, x2 = 16 e y2 = 16; The range is generated using uniform distributions over an interval

[v, u], represented by U n(v, u). The rectangle with center coordinates in the point (cxi , cyi ) has

ranges represented by xi and yi , for x and y, respectively . Interval data is constructed by

([cxi

xi /2, cxi

+

xi /2], [cyi

yi /2, cyi

+

(7)

yi /2]).

A general configuration is used for the ranges, where the uniform distributions are different for

clusters and dimensions. Table 1 shows these distributions. Table 2 presents the non-parametric

Table 1: Uniform distributions for interval ranges

Cluster

1

2

3

x

distribution

U n(4, 7)

U n(1, 2)

U n(2, 3)

y

distribution

U n(1, 3)

U n(6, 9)

U n(3, 6)

confidence intervals for this synthetic configuration. 100 datasets were generated. For each one,

the clustering was applied 100 times. The solution which has the lowest criterion was selected,

resulting in 100 ARI values. The bootstrap method is applied to the ARI values with 2000 repetitions and confidence of 95%. The confidence intervals for the ARI means revel the better adjust of

Table 2: Non-parametric confidence intervals for the comparison of distances

Distance

ARI confidence

interval

L1

HL1

L2

HL2

L1

HL1

[0.72, 0.77]

[0.85, 0.87]

[0.51, 0.56]

[0.51, 0.55]

[0.81, 0.84]

[0.78, 0.82]

HL1 , instead its limits are greater than the other distances.

References

[1] Chavent, M., Lechevallier, Y. (2002). Dynamical clustering of interval data: Optimization of an adequacy criterion based on hausdorff distance, Classification, Clustering, and Data Analysis, 53–60.

[2] Souza, R. M. C. R., De Carvalho, F. D. A. T. (2004). Clustering of interval data based on city-block

distances, Pattern Recognition Letters 25, 353–365.

[3] De Carvalho, F. D. A. T., Brito, P., Bock, H.-H. (2006). Dynamic clustering for interval data based on

l2 distance, Computational Statistics 21, 231–250.

SDA 2015

November 17 – 19

University of Orléans, France

Wednesday, November 18

Afternoon

IIIA Computer Science Building,

Herbrand Amphitheatre

Session 5: NETWORKS

Chair: Christel VRAIN

14:00 – 14:25 Symbolic Data Analysis for Social Networks

Fernando PEREZ, University of Mexico, Mexico

14:25 – 14:50 Symbolic Data Analysis of Large Scale Spatial Network Data

Carlo DRAGO, Alessandra REALE

Niccolo Cusano University, Rome & ISTATN, Italy

Session 6: VISUALIZATION

Chair: Monique NOIRHOMME

14:50 – 15:15 Matrix Visualization for Big Data

Chun-houh CHEN, Chiun-How KAO, Academia Sinica, Taipei, Taiwan

Yin-Jing TIEN, UST, Taiwan

15:15 – 15:40 Exploration on Audio-Visual Mappings

Daniel DEFAYS, University of Liège, Belgium

15:40 – 16:10 Coffee Break

Session 7: COMPOSITIONAL DATA

Chair: Paula BRITO

16:10 – 16:40 Sample Space Approach of Compositional Data

Vera PAWLOWSKY, University of Girona, Spain

16:40 – 17:10 Compositional Data of Contingency Tables

Juan José EGOZCUE, University of Catalonia, Spain

17:10 – 17:35 Distributional Modeling Using the Logratio Approach

Karel HRON, Palacky University of Olomouc, Czech Republic

Peter FILZMOSER, TU Vienna, Austria

Alessandra MENAFOGLIO, Polytechnic University, Milan, Italy

19:30

Workshop Dinner. 'Au Bon Marché' Restaurant, 12 Place du Châtelet,

Orléans. Tram stop: Royale-Châtelet

Symbolic Data Analysis for Social Networks

Fernando Pérez1,

?

1. IIMAS, National Autonomous University of Mexico

? Contact author: fernando@sigma.iimas.unam.mx

Keywords: Symbolic Data, Social Network Analysis, Public Health.

Public Health problems are one of the most interesting topics in Social Network Analysis given

its repercusions and variety of contexts (Shaefer & Simpkins, 2014). We seek to study “infection”

by customs and values, so we will be looking into non traditional structures. This is interesting

because of the unusual nature and the complexity of quantifying a relationship of this abstract

nature, but it is precisely this that allows the incorporation of Symbolic Data analysis (Diday &

Noirhomme-Fraiture, 2008) (Giordano & Brito, 2014).

References

Giordano, G. & Brito, P (2014). Social Networks as Symbolic Data. Analysis and Modeling of

Complex Data in Behavioral and Social Sciences, Springer

Schaefer, D. & Simpkins, S. (2014). Using Social Nework Analysis to Clarify the Role of Obesity

in Selection of Adolescent Friends. American Journal of Public Health. 104, 1223–1229.

Diday, E. & Noirhomme-Fraiture, M. (2008). Symbolic Data Analysis and the SODAS Software.

Symbolic Data Analysis and the SODAS Software Wiley, England.

Symbolic Data Analysis of Large Scale Spatial Network Data

Carlo Drago1,* , Alessandra Reale1

1. University of Rome “Niccolò Cusano” and Italian National Institute of Statistics (ISTAT)

*Contact author: c.drago@mclink.it

Keywords: Symbolic Data Analysis, Social Network Analysis, Community Detection, Spatial Data

Mining

Modern spatial networks are ubiquitous in various different contexts and are increasingly massive on

their size. The challenges for large-scale spatial networks call for new methodologies and approaches

which can allow to extract the relevant patterns on data. In this work we will examine spatial networks

data, taking into account their characteristics, and we will consider different approaches in order to

represent and analyze these networks by means of Symbolic Data. From the representations of the

networks, we will show different Symbolic Data Analysis approaches to detect the different patterns is

possible to find on data. We will conduct a simulation study and an application on real data.

References

Billard, L., & Diday, E. (2003). From the statistics of data to the statistics of knowledge: symbolic data

analysis. Journal of the American Statistical Association, 98(462), 470-487.

Diday, E., & Noirhomme-Fraiture, M. (Eds.). (2008). Symbolic data analysis and the SODAS software.

J. Wiley & Sons.

Drago C. (2015) Large Network Analysis: Representing the Community Structure by Means of Interval

Data. Fifth International Workshop on Social Network Analysis (ARS 2015); 04/2015

Giordano G., Brito M. P. , (2014) Social Networks as Symbolic Data, in: Analysis and Modeling of

Complex Data in Behavioral and Social Sciences, Edited by Vicari, D, Okada, A, Ragozini, G,

Weihs, C. (Eds, 06/2014; Springer Series: Studies in Classification, Data Analysis, and Knowledge

Organization., ISBN: 978-3-319-06691-2

Giordano G., Signoriello S. and Vitale M.P. (2008) Comparing Social Networks in the framework of

Complex Data Analysis. CLEUP Editore, Padova: pp.1- 2, In: XLIV Riunione Scientifica della

Società Italiana di Statistica

Matrix Visualization for Big Data

Chun-houh Chen1,*, Chiun-How Kao1,2,3, Yin-Jing Tien2

1. Academia Sinica

2. National Taiwan University of Science and Technology

3. Institute for Information Industry

*Contact author: cchen@stat.sinica.edu.tw

Keywords: Exploratory Data Analysis (EDA), Generalized Association Plots (GAP), Symbolic Data

Analysis (SDA)

“It is important to understand what you CAN DO before you learn to measure how WELL you seem to

have DONE it” (Exploratory Data Analysis: John Tukey, 1977). Data analysts and statistics

practitioners nowadays are facing difficulties in understanding higher and higher dimensional data with

more and more complex nature while conventional graphics/visualization tools do not answer the needs.

It is more difficult a challenge for understanding overall structure in big data sets so good and

appropriate Exploratory Data Analysis (EDA) practices are going to play more important roles in

understand what one can do in the big data era.

Matrix Visualization (MV) has been shown to be more efficient than conventional EDA tools such as

Boxplot, Scatterplot (with dimension reduction techniques), and Parallel Coordinate Plot for extracting

information embedded in moderate to large data sets of binary, continuous, ordinal, and nominal nature.

In this study we plan to investigate feasibility and potential difficulties for applying related MV

techniques for visualizing and exploring structure from big data: 1). Memory/computation (permutation

with clustering) of proximity matrices for variables and subject; 2). Display of data and proximity

matrices for variables and subject. We shall integrate techniques from Hadoop computing environment,

Image scaling, and Symbolic Data Analysis into the framework of GAP (Generalized Association Plots)

in coming up with an appropriate package for conducting Big Data EDA with visualization.

!

References

Tukey, John W (1977). Exploratory Data Analysis. Addison-Wesley.

Exploration on audio-visual mappings

Daniel Defays1

Keywords: Dissimilarities, Distances,

Metricon

spaces,

Isometric

mapping, Pattern recognition

Exploration

audio-visual

mappings

Abstract

Summary

WhichWhichofthesevisualsequencesofimagescorrespondstotheHappyBirthdaysong?

of these 5 visual sequences of images corresponds to the Happy Birthday song

?

!

!

!

!

!

!

!

!

!

!!

Sequence 1

Sequence 2

Sequence 3 Sequence 4 Sequence 5

1. Which of these visual images corresponds best to the Happy BIrthday song? And why?

Figure

Most people will probably choose the third sequence, with the cake, glasses of Champaign, birds and

balloons,

which evokes a birthday party. But those familiar with music notation could choose

Mostpeoplewillprobablychosethethirdsequenceinthemiddle,withthecake,glassesof

sequence

1 or 2 which code the music of the song. It is unlikely that the last two sequences will be

Champaign,threebirdsandballoons.Somefamiliarwithmusicnotationcouldchoosesequence

very appealing

for anybody, despite of the fact that they are linked to the four parts of the song, as it

1or2anditiisunlikelythathelasttwosequenceswillbeveryappealing.

will be

explained

in the talk.

choosethescore,

!

2 0 1 5 -0 2 -0 7 1 9 : 2 7 :0 0

1 /1

!

P a rtitio n H B (# 2 3 )

The figure 1 illustrates the topic of an exploration on trans-sensory mappings: how can different

sensorial inputs be mapped into each other. More specifically here, how can a set of images be

associated with a piece of music ?

Numerous facts suggest that different inputs to our sensorial channels share some common patterns

or at least at some stage of their processing by the nervous system activates the same areas. If this is

the case, matching between songs, images and odours could make some sense. In fact, software

already exists to bridge music and images, like in the work of the Analema group or in the Media

Player application.

The paper will focus on one particular aspect of that exploration : the « mathematical » mapping of

images on songs.

The songs are decomposed into segments that are then represented in a multidimensional space

through a kind of spectral analysis (with the use of Mell frequency Cepstral Coefficients) widely used

in automatic and speaker recognition [Berenzweig et al, 2003]. In the area of automated processing of

images, Nguyen-Khang Pham, Annie Morin, Patrick Gros and Quyet-Thang Le have used local

descriptors obtained through filters to quantify the content of images and Factorial Correspondence

1UniversityofLiege,ddefays@ulg.ac.be

Analysis (FCA) to reduce the number of dimensions [Pham et al, 2009]. This makes it possible to

represent images into Cartesian spaces as well.

Once the two sets have been wrapped into structures, a morphism between the two sets can be

elaborated.

A method will be presented which makes it possible to find the subset of images (S) - characterized

by their dissimilarities - which matches in an optimal way the musical segments (C) of a song also

characterized by dissimilarities. The quality of the match is assessed by comparing the dissimilarities

of the elements of the target C with the corresponding dissimilarities in S. The closer they are, the

better the fit is. Two different algorithms will be presented and commented.

The images in the 5th sequence of figure 1 have been extracted from a set of 55 photos using that

method.

References

Berenzweig A., Ellis D., Lawrence S. (2003). Anchor space for classification and similarity

measurement of music, http://www.ee.columbia.edu/~dpwe/pubs/icme03-anchor.pdf/.

Defays D. (1978). « A short note on a method of seriation » , British Journal of Mathematical

Psychology 31, pp. 49-53

Diday E., et Noirhomme-Fraiture M. (eds) (2008). Symbolic Data Analysis and the SODAS Software,

Wiley.

Pham N-K., Morin A., Gros P., Le Q-T. (2009). « Utilisation de l’analyse factorielle des

correspondances pour la recherche d’images à grande échelle », Actes d'EGC, RNTI-E-15, Revue des

Nouvelles Technologies de l'Information - Série Extraction et Gestion des Connaissances, Cépaduès

Editions, pp. 283 - 294.

Widmer G., Dixon S., Goebl W., Pampalk E., Tobudic A. (2003). « In search of the Horowitz factor »,

AI Magazine Volume 24 Number 3, pp. 111-130.

SAMPLE SPACE APPROACH

TO COMPOSITIONAL DATA

Vera PAWLOSKY-GLAHN

University of Girona, Spain

The analysis of compositional data based on the Euclidean space structure of their

sample space is presented. Known as the Aitchison geometry of the simplex, it is

based on the group operation known as perturbation, the external multiplication

known as powering, and the simplicial inner product with the induced distance and

norm. These basic operations are introduced, together with their geometric

interpretation. To work with standard statistical methods within this geometry, it is

necessary to build in a sensible way coordinates which are interpretable. This is done

using Sequential Binary Partitions, which can be illustrated with the CoDadendrogram. Tools like the biplot and the variation array are used as a previous

exploratory analysis to guide this construction.

COMPOSITIONAL ANALYSIS

OF CONTINGENCY TABLES

J. Juan José EGOZCUE and Vera PAWLOSKY-GLAHN

University of Catalonia and University of Girona, Spain

Contingency tables contain in each cell counts of the corresponding events. In a

multinomial sampling scenario, the join distribution of counts in the cells can be

parametrised by a table of probabilities, which are a joint probability function of two

categorical variables. These probabilities can be assumed to be a composition. The

goal of the analysis is to decompose the probability table into an independent table

and an interaction table. The optimal independent table, in the sense of the Aitchison

geometry of the simplex, has been shown to be the product of geometric marginals,