LightSpeed: A Many-core Scheduling Algorithm 1 Introduction Karl Naden

advertisement

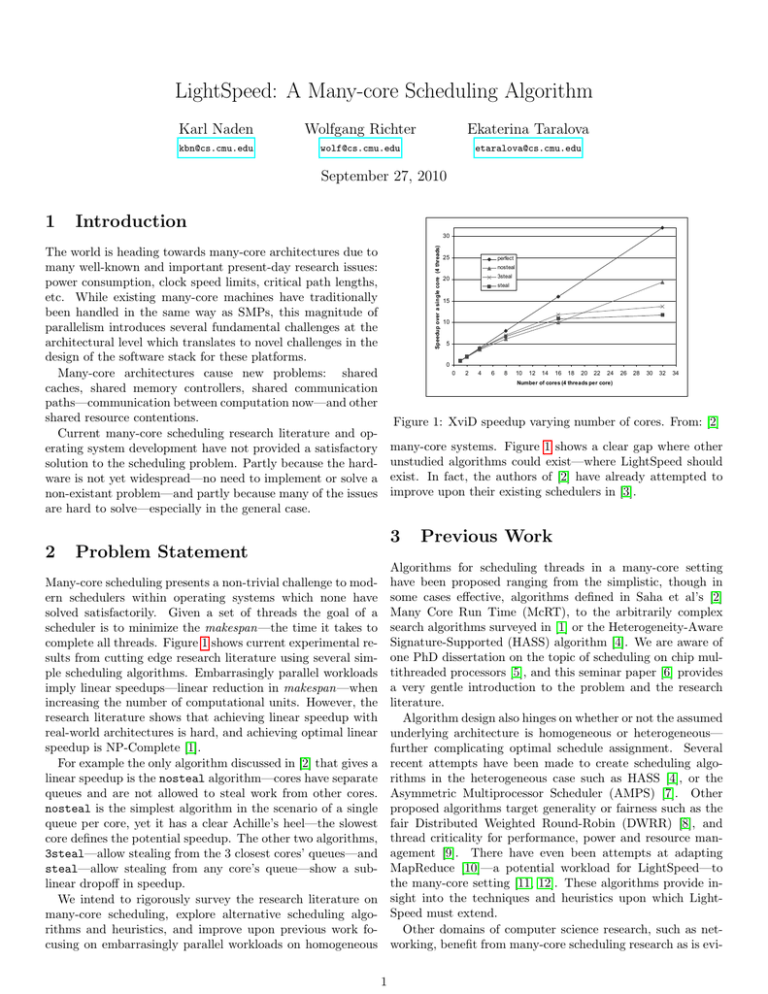

LightSpeed: A Many-core Scheduling Algorithm Karl Naden Wolfgang Richter Ekaterina Taralova kbn@cs.cmu.edu wolf@cs.cmu.edu etaralova@cs.cmu.edu September 27, 2010 1 Figure 6 shows the speedup seen for the RMS workloads Introduction on our many core platform. Once again, these experiments demonstrate that McRT can scale almost linearly The world is heading towards many-core architectures dueuptoto 64 HW threads. We plot the number of cores on the x-axis many well-known and important present-day research issues: with each core containing 4 HW threads. We compute the power consumption, clock criticaltime path speedup with speed respect limits, to the execution onlengths, a single core etc. While existing many-core machines haveoftraditionally (4 threads). Again, the serial version the workloads had been handled in the same SMPs,time thisasmagnitude of essentially the way same as execution the single-threaded parallel version. parallelism introduces several fundamental challenges at the 25 perfect nosteal 3steal 20 steal 15 10 5 Speedup over a single core (4 threads) 0 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30 32 34 Number of cores (4 threads per core) Figure 8: Effect of scheduling on XviD speedup Figure 1: 1 XviD speedup varying number of cores. From: [2] 3.2.3 Statement Configurability Problem Relative Processor Utilization many-core systems. Figure 1 shows a clear gap where other 0.9 unstudied algorithms could exist—where LightSpeed should exist. In0.8fact, the authors of [2] have already attempted to XviD improve upon their existing schedulers in [3]. Equake Figure 7: Effect of different scheduling and locking policies 2 Speedup over a single core (4 threads) architectural level which translates to novel challenges in the 14 1queue/core 1queue/core no stealing design of the software stack for thesestealing platforms. 1 queue (queued locks) 1 queue (unqueued locks) 12 Many-core architectures cause new problems: shared 10 caches, shared memory controllers, shared communication paths—communication between computation now—and other 8 shared resource contentions. 6 Current many-core scheduling research literature and op4 erating system development have not provided a satisfactory solution to the scheduling problem. Partly because the hard2 ware is not yet widespread—no need to implement or solve a 0 1 corepartly 2 core 8 core 16 core non-existant problem—and because4 core many of the issues Number of cores (4 threads per core) are hard to solve—especially in the general case. 30 3 0.7 0.6 Previous Work 0.5 1 3 5 7 9 11 13 15 17 19 21 23 25 27 29 31 This section demonstrates the need for configurability in Algorithms a for scheduling threads in a many-core setting Thread Number large scale CMP runtime. For the sake of discussion, this Many-core scheduling presents a non-trivial challenge to mod- have been proposed ranging from the simplistic, though in section primarily concentrates on experiences with XviD. Figure 9: Per thread load balancing in Xvid and Equake ern schedulers within operating systems which none have some cases effective, algorithms defined in Saha et al’s [2] Figure 7 shows the performance of XviD with different thisTime result,(McRT), it would to appear though workCore on Run the asarbitrarily complex solved satisfactorily. Given a set of threads the goal of a Many Based McRT policies. The figure compares the performance with stealing is the optimal policy for or XviD. Figure 8 shows the search algorithms surveyed in [1] the Heterogeneity-Aware scheduler is to aminimize the makespan—the time it takes to single scheduling queue using a TTS (test & test & set) XviD speedup as we increase the number of HW threads to (HASS) algorithm [4]. We are aware of complete all threads. Figure 1 shows queue current experimental lock, a single scheduling using a ticket lock, reusing Signature-Supported 1 128. At 128 HW threads, the performance of work stealing one PhD dissertation of scheduling on upon chip mulsults from cutting edge research literature using several simscheduling queue/core (ticket lock) without any work is worse than thaton of the a notopic stealing configuration; tithreaded processors [5], and this seminar paper [6] stealing, and using 1 schedulingparallel queue/core (ticket lock) ple scheduling algorithms. Embarrasingly workloads inspection it turned out that work stealing introduces provides an with work stealing. There isinnomakespan—when perceptible difference a veryoverhead gentle that introduction the problem andnumber the research imply linear speedups—linear reduction grows moreto than linearly with the of between the policies till 16 HW threads (4 cores).the At 32 HW threads. At 128 threads, the overhead of stealing hurts literature. increasing the number of computational units. However, HW threads, the distributed scheduling queue (1 more than the load imbalance. Towhether address this, we added a research literature shows that achieving linear speedup with Algorithm design also hinges on or not the assumed queue/core) without work stealing performs the worst. The restricted form of stealing where a processor isheterogeneous— allowed to real-world architectures is hard, and achieving optimal linear underlying architecture is homogeneous or distributed queue reduces contention; however the absence steal work only from the queues of its neighbors (denoted speedup is NP-Complete [1]. hurts load balance. By definition, a singlefurther complicating optimal schedule assignment. Several of work stealing as “3steal” in the graph). The restricted stealing performs recentbetter attempts have been made to atcreate For example the only algorithm discussed [2]balance. that gives scheduling queue provides perfect in load At 32aHW than the unrestricted stealing all datascheduling points, but algothreads, the load imbalance hurts more than contention.rithmseven in that the does heterogeneous case such as HASS linear speedup is the nosteal algorithm—cores havethe separate not perform as well as with no stealing[4], in aor the Asymmetric Multiprocessor Scheduler (AMPS) [7]. Other 128 HW thread configuration. This shows that a runtime queues and areOn not allowed to steal work from other cores. the other hand, at 64 HW threads, contention becomes system must be configurable to enable or different policies pronounced, andin mitigates the of effect of load proposed algorithms target generality fairness suchtoas the nosteal is the more simplest algorithm the scenario a single optimize application performance. the distributed queue performs fair Distributed Weighted Round-Robin (DWRR) [8], and queue per core,imbalance: yet it hasthus a clear Achille’sscheduling heel—the slowest well as the single queue. The effect of contention is an image the amount of computation required manthreadGiven criticality forframe, performance, power and resource core defines theaspotential speedup. The other two algorithms, highlighted further by the fact that the queued locking for encoding different parts of it been can vary significantly. For agement [9]. There have even attempts at adapting 3steal—allow (ticket stealing from the 3 closest cores’ queues—and lock) starts to help compared to a TTS lock. Not example,[10]—a the partspotential of the frameworkload comprisingfor the LightSpeed—to background MapReduce steal—allow stealing from any core’s queue—show a subsurprisingly, the distributed queue with work stealing take significantly less computation since they remain static the many-core setting [11, 12]. These algorithms provide inlinear dropoff inprovides speedup. the best result – the load imbalance is removed between frames. On the other hand, the parts of the frame sight into the techniques and heuristics upon which Lightdue to the stealing, and the contention is reduced since the We intend to rigorously survey the research literature on containing objects in motion require more computation. queues are distributed. Speed must extend. many-core scheduling, explore alternative scheduling algoOther application domains do not exhibit such imbalance. Figure 9 compares the load science balance between XviD Other domains of computer research, suchandas netrithms and heuristics, and improve upon previous work focusing on embarrasingly parallel workloads on homogeneous working, benefit from many-core scheduling research as is evi1 80 References denced by the attempt of Mallik et al. [13] to create a scheduling algorithm using statistical analysis in network processors. Their use of statistical techniques encourages a line of research that could lead to using machine learning within scheduling algorithms for many-core architectures. Our work may explore this potential synergy between machine learning and thread schedulers in the many-core setting. 4 [1] S. Jin, G. Schiavone, and D. Turgut, “A performance study of multiprocessor task scheduling algorithms,” The Journal of Supercomputing, vol. 43, pp. 77–97, 2008, 10.1007/s11227-007-0139-z. [Online]. Available: http://dx.doi.org/10.1007/s11227-007-0139-z [2] B. Saha, A.-R. Adl-Tabatabai, A. Ghuloum, M. Rajagopalan, R. L. Hudson, L. Petersen, V. Menon, B. Murphy, T. Shpeisman, E. Sprangle, A. Rohillah, D. Carmean, and J. Fang, “Enabling scalability and performance in a large scale cmp environment,” SIGOPS Oper. Syst. Rev., vol. 41, no. 3, pp. 73–86, 2007. [Online]. Available: http://doi.acm.org/10.1145/1272998.1273006 Algorithm Exploration, Experimental Methods and Plan [3] M. Rajagopalan, B. T. Lewis, and T. A. Anderson, “Thread scheduling for multi-core platforms,” in HOTOS’07: Proceedings of the 11th USENIX workshop on Hot topics in operating systems. Berkeley, CA, USA: USENIX Association, 2007, pp. 1–6. We will implement thread scheduling algorithms explored in prior works on a simulator for many-core systems in a MapReduce-like application for highly parallelizable jobs. We will evaluate weaknesses and strengths of these algorithms and propose modifications to increase performance when the number of cores in the system increases. We will explore scheduling algorithms like the ones used in the McRT system [2], which is a software prototype of an integrated language runtime that was designed to explore configurations of the software stack for enabling performance and scalability on large scale many-core platforms. We will use a simulator for the Intel Single-chip Cloud Computer, (SCC), or similar. The SCC is a research microprocessor containing the most Intel Architecture cores ever integrated on a silicon CPU chip: 48 cores. It incorporates technologies intended to scale multi-core processors to 100 cores and beyond, such as an on-chip network, advanced power management technologies and support for message-passing. The potential workloads and benchmarks, in addition to the standard ones used in prior work [10] and [14], will include using existing implementations of MapReduce for various applications that lend themselves to high parallelization. By the Milestone 1 deadline, October 13th, we will: [4] D. Shelepov, S. Alcaide, J. Carlos, S. Jeffery, A. Fedorova, N. Perez, Z. F. Huang, S. Blagodurov, and V. Kumar, “Hass: a scheduler for heterogeneous multicore systems,” SIGOPS Oper. Syst. Rev., vol. 43, no. 2, pp. 66–75, 2009. [Online]. Available: http://doi.acm.org/10.1145/1531793.1531804 [5] A. Fedorova, “Operating system scheduling for chip multithreaded processors,” Ph.D. dissertation, Cambridge, MA, USA, 2006, adviserMargo I. Seltzer. [6] T. Fahringer, “Optimisation: Operating system scheduling on multi-core architectures,” Website, August 2008, http://www.scribd.com/doc/4838281/ Operating-System-Scheduling-on-multicore-architectures. [7] T. Li, D. Baumberger, D. A. Koufaty, and S. Hahn, “Efficient operating system scheduling for performance-asymmetric multi-core architectures,” in SC ’07: Proceedings of the 2007 ACM/IEEE conference on Supercomputing. New York, NY, USA: ACM, 2007, pp. 1–11. [Online]. Available: http://doi.acm.org/10.1145/1362622.1362694 [8] T. Li, D. Baumberger, and S. Hahn, “Efficient and scalable multiprocessor fair scheduling using distributed weighted round-robin,” SIGPLAN Not., vol. 44, no. 4, pp. 65–74, 2009. [Online]. Available: http://doi.acm.org/10.1145/1594835.1504188 [9] A. Bhattacharjee and M. Martonosi, “Thread criticality predictors for dynamic performance, power, and resource management in chip multiprocessors,” in ISCA ’09: Proceedings of the 36th annual international symposium on Computer architecture. New York, NY, USA: ACM, 2009, pp. 290–301. [Online]. Available: http://doi.acm.org/10.1145/1555754.1555792 [10] J. Dean and S. Ghemawat, “Mapreduce: simplified data processing on large clusters,” Commun. ACM, vol. 51, no. 1, pp. 107–113, 2008. [Online]. Available: http://doi.acm.org/10.1145/1327452.1327492 • Explore simulation tools. Ideally, we will try to obtain a simulator for the Intel SCC 48-core research architecture [15], or similar (for example the simulator of [16] for multicore simulation). We will have a simulator chosen and running. [11] C. Ranger, R. Raghuraman, A. Penmetsa, G. Bradski, and C. Kozyrakis, “Evaluating mapreduce for multi-core and multiprocessor systems,” in HPCA ’07: Proceedings of the 2007 IEEE 13th International Symposium on High Performance Computer Architecture. Washington, DC, USA: IEEE Computer Society, 2007, pp. 13–24. [Online]. Available: http://dx.doi.org/10.1109/HPCA.2007.346181 • Research existing implementations of libraries for MapReduce-like [10] functionality. For instance, Phoenix [11, 12], developed at Stanford, is a potentially suitable implementation of MapReduce for sharedmemory systems. [12] R. Yoo, A. Romano, and C. Kozyrakis, “Phoenix rebirth: Scalable mapreduce on a large-scale shared-memory system,” October 2009, pp. 198 –207. [13] A. Mallik, Y. Zhang, and G. Memik, “Automated task distribution in multicore network processors using statistical analysis,” in ANCS ’07: Proceedings of the 3rd ACM/IEEE Symposium on Architecture for networking and communications systems. New York, NY, USA: ACM, 2007, pp. 67–76. [Online]. Available: http://doi.acm.org/10.1145/1323548.1323563 • Review literature on scheduling jobs in many-core systems - Saha et al. [2], Jin et al. [1], Mallik et al. [13], Rajagopalan et al. [3], and other research that we come across. [14] L. Huston, R. Sukthankar, R. Wickremesinghe, M. Satyanarayanan, G. R. Ganger, E. Riedel, and A. Ailamaki, “Diamond: A storage architecture for early discard in interactive search,” in FAST ’04: Proceedings of the 3rd USENIX Conference on File and Storage Technologies. Berkeley, CA, USA: USENIX Association, 2004, pp. 73–86. By the Milestone 2 deadline, November 1st, we will: • Reproduce baseline cases or prior results [15] Intel Corporation, “The scc platform overview,” Website, September 2010, http://communities.intel.com/servlet/JiveServlet/ downloadBody/5512-102-1-8627/SCC Platform Overview 90110.pdf. • Evaluate performance of existing scheduling algorithms • Determine weaknesses in existing algorithms, propose and implement improvements [16] H. Zeng, M. Yourst, K. Ghose, and D. Ponomarev, “Mptlsim: a cycle-accurate, full-system simulator for x86-64 multicore architectures with coherent caches,” SIGARCH Comput. Archit. News, vol. 37, no. 2, pp. 2–9, 2009. [Online]. Available: http://doi.acm.org/10.1145/ 1577129.1577132 • Provide results for some of the improved algorithms and continue evaluation of results 2