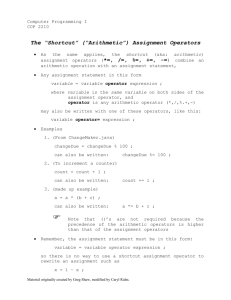

Modeling Operator Performance in
