6: THE ACCELERATION OF SOR


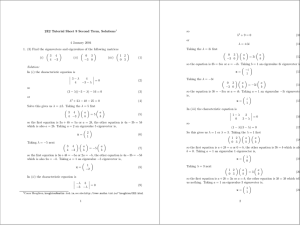

Math 639 (updated: January 2, 2012)

We shall see that a judicious choice of the parameter $\omega$ can lead to a significantly faster converging algorithm. Let us first set the stage. Suppose that we have a linear iterative method with reduction matrix $G$ satisfying $\rho(G) < 1$. Then we know there is a norm $\|\cdot\|_*$ such that the induced matrix norm satisfies $\|G\|_* = \gamma$ with $\gamma < 1$. Actually, $\gamma$ can be taken arbitrarily close to $\rho(G)$. We then have

$$\|e_k\|_* \le \|G\|_*^k \, \|e_0\|_* = \gamma^k \, \|e_0\|_*.$$

Now, to reduce the $\|\cdot\|_*$-error by a factor of $\epsilon$, we need $\gamma^k \le \epsilon$, i.e.,

$$k \ge \frac{\ln(\epsilon^{-1})}{\ln(\gamma^{-1})}.$$

Setting $\delta = 1 - \gamma$, we find that

$$\ln(\gamma^{-1}) = -\ln(\gamma) = -\ln(1 - \delta) \approx \delta,$$

where we used Taylor's series for the approximation. Thus, the number of iterations for a fixed reduction should grow proportionally with $\delta^{-1} = (1 - \gamma)^{-1} \approx (1 - \rho(G))^{-1}$, i.e., $k \cdot (1 - \gamma)$ should behave like a constant.

Recall that for $A_3$, we computed the spectral radius of $G_{jac}$ (the Jacobi method) and found that

$$\rho(G_{jac}) = \cos\left(\frac{\pi}{n+1}\right) \approx 1 - \frac{\pi^2}{2(n+1)^2}.$$

We again used Taylor's series for the approximation. Thus, one should expect that $k$ grows like a constant times $n^2$ for Jacobi (compare this with your homework results). Our goal is to show that $\rho(G_{sor}) = 1 - O(n^{-1})$ for an appropriate choice of $\omega$ (this is illustrated in Programming 4).

To analyze the SOR method we start by setting $\beta = \rho(L + U)$. Note that the Jacobi method in the case when $A = I + L + U$ is

$$x_{i+1} = -(L + U)x_i + b$$

and the corresponding reduction matrix is $G_J = -(L + U)$, so $\beta$ is nothing more than the spectral radius of $G_J$. We introduce the following hypotheses:

(A.1) $0 < \omega < 2$.

(A.2) $G_J$ only has real eigenvalues and $\rho(G_J) = \beta$ with $0 < \beta < 1$.

(A.3) The matrix $A = I + L + U$ satisfies Property A.

Remark 1. The case when $\beta = 0$ is not interesting since this means that $\rho(G_J) = 0$ and we should use the Jacobi method.

Under the above hypotheses, we have the following theorem.

Theorem 1. (D. Young) Assume that (A.1)-(A.3) hold and let $G_{SOR}$ be the reduction matrix corresponding to SOR. Then,

$$\rho(G_{SOR}) = \begin{cases} \omega - 1 & \text{if } \omega \in [\omega_{opt}, 2), \\ 1 - \omega + \frac{1}{2}\omega^2\beta^2 + \omega\beta\sqrt{1 - \omega + \frac{\omega^2\beta^2}{4}} & \text{if } \omega \in (0, \omega_{opt}], \end{cases}$$

where

$$\omega_{opt} = \frac{2}{1 + \sqrt{1 - \beta^2}}.$$

Before proving the theorem, we should investigate the estimate which it gives. We start by assuming that the Jacobi method requires a large number of iterations, i.e., $\beta$ is positive and close to one. First, $\omega - 1$ is an increasing function of $\omega$, so its smallest value on the interval $[\omega_{opt}, 2)$ is achieved at $\omega = \omega_{opt}$, where its value is

$$\rho(G_{SOR}) = \frac{1 - \sqrt{1 - \beta^2}}{1 + \sqrt{1 - \beta^2}} \approx 1 - 2\sqrt{1 - \beta^2}.$$

It is also a consequence of the proof below that the expression for $\rho(G_{SOR})$ in the second case is always greater than or equal to $\omega_{opt} - 1$, and hence $\omega = \omega_{opt}$ gives the lowest possible spectral radius. Now if Jacobi requires a lot of iterations, $\beta = 1 - \gamma$ with $\gamma$ small. In this case,

$$\sqrt{1 - \beta^2} = \sqrt{2\gamma - \gamma^2} \approx 2^{1/2}\gamma^{1/2}, \quad \text{i.e.,} \quad \rho(G_{SOR}) \approx 1 - 2^{3/2}\gamma^{1/2}.$$

Thus, the number of iterations for SOR with a good parameter choice should grow like $\gamma^{-1/2}$ instead of the $\gamma^{-1}$ growth for Jacobi. This slower growth in the number of iterations was illustrated in Program 4. Even though we only used a ballpark estimate for $\omega_{opt}$, we still achieved considerable acceleration.

Proof. Let $\lambda$ be a nonzero eigenvalue for $G_{SOR}$ with eigenvector $e$. Then

$$\lambda(\omega^{-1}I + L)e = ((\omega^{-1} - 1)I - U)e \tag{6.1}$$

or

$$(\lambda\omega L + \omega U)e = (1 - \lambda - \omega)e.$$

Dividing by $\pm\omega\sqrt{\lambda}$ gives

$$\left(\frac{1}{z}L + zU\right)e = \frac{1 - \lambda - \omega}{\pm\omega\sqrt{\lambda}}\, e,$$

where $z = \pm 1/\sqrt{\lambda}$. Note that in general $\lambda$ is a complex number and so must be $z$. It follows by Property A that

$$\mu = \frac{1 - \lambda - \omega}{\pm\omega\sqrt{\lambda}} \tag{6.2}$$

is an eigenvalue of $-G_J = L + U$. By Property A, the eigenvalues of $J_{-1} = -L - U$ are the same as those of $J_1 = L + U$, so that if $\mu$ is an eigenvalue of $G_J$, so is $-\mu$. It follows that equation (6.2) has the same solutions (we think of $\lambda$ as being the unknown here) as

$$(\lambda + \omega - 1)^2 = \omega^2\lambda\mu^2. \tag{6.3}$$

Note that if $\lambda \ne 0$ satisfies (6.3) for some eigenvalue $\mu$ of $G_J$, then going through the equations in reverse order implies that there is an eigenvector $e$ satisfying (6.1) with this value of $\lambda$. Thus, if $\lambda \ne 0$ and $\mu$ solve (6.2) or (6.3), then $\lambda$ is an eigenvalue of $G_{SOR}$ if and only if $\mu$ is an eigenvalue of $G_J$.

Now, when $\omega = 1$, $\lambda = 0$ is an eigenvalue of $G_{SOR}(1) = G_{GS}$. However, any eigenvalue $\mu$ of $G_J$ leads to an eigenvalue $\lambda = \mu^2$ for $G_{SOR}(1)$. It follows in this case that $\rho(G_{SOR}(1)) = \beta^2$, which is in agreement with the theorem. Examining (6.1), we see that for all other $\omega$, $G_{SOR}$ has only nonzero eigenvalues and we can proceed with our assumption of $\lambda \ne 0$.

The equation (6.3) can be rewritten

$$\lambda^2 + 2\left[(\omega - 1) - \frac{\omega^2\mu^2}{2}\right]\lambda + (\omega - 1)^2 = 0.$$

The roots are

$$\lambda = (1 - \omega) + \frac{\omega^2\mu^2}{2} \pm \sqrt{\left(1 - \omega + \frac{\omega^2\mu^2}{2}\right)^2 - (\omega - 1)^2} = (1 - \omega) + \frac{\omega^2\mu^2}{2} \pm \omega\mu\sqrt{1 - \omega + \frac{\omega^2\mu^2}{4}}. \tag{6.4}$$

First, the only way that we can get a complex root is if

$$\omega - 1 > \frac{\omega^2\mu^2}{4}. \tag{6.5}$$

In this case, the roots are complex conjugates of each other and a straightforward computation using the second equality of (6.4) shows that they have absolute value $\omega - 1$.

We next consider the case when both roots are real. We fix $\mu < 1$ and $\omega \in (0, 2)$. Then the solutions $\lambda$ of (6.2) occur at the intersection of the two curves

$$f_1(x) = \frac{x + \omega - 1}{\omega} \quad \text{and} \quad f_2(x) = \pm\mu\sqrt{x}.$$

Note that $f_1$ is a straight line with slope $1/\omega$ which passes through the point $(1, 1)$. Two such lines with different values of $\omega$ ($\omega = 1.25$ and $\omega = .7$) are illustrated in Figure 1, as well as the graph of $f_2$ with $\mu = .8$. The line corresponding to $\omega = .7$ intersects $f_2$ in two places while the line corresponding to $\omega = 1.25$ is tangent to $f_2$. Note that $f_1(1) = 1$ for every value of $\omega$, and changing $\omega$ simply changes the slope. It is clear that one can increase $\omega$ (which decreases the slope $1/\omega$) until $f_1$ is tangent to $f_2$, and we define $\omega_{opt}(\mu)$ to be the value of $\omega$ for which this happens.
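The behavior of the roots in (6.4) can be checked numerically. The following is a minimal sketch using only the Python standard library; the function name `sor_eigenvalue_pair` is an illustrative choice and not part of the notes. It evaluates the second form of (6.4) with a complex square root and looks at the three situations discussed above for $\mu = .8$: two real roots ($\omega = .7$), a double root at the tangency ($\omega = 1.25$), and a complex conjugate pair ($\omega = 1.5$).

```python
import cmath

def sor_eigenvalue_pair(omega, mu):
    """Return both roots lambda of (lambda + omega - 1)^2 = omega^2 * lambda * mu^2.

    Uses the second form of (6.4); cmath.sqrt handles a negative radicand.
    """
    center = (1.0 - omega) + 0.5 * omega**2 * mu**2   # (1 - omega) + omega^2 mu^2 / 2
    radicand = 1.0 - omega + 0.25 * omega**2 * mu**2  # 1 - omega + omega^2 mu^2 / 4
    offset = omega * mu * cmath.sqrt(radicand)
    return center + offset, center - offset

mu = 0.8
for omega in (0.7, 1.25, 1.5):
    lam_plus, lam_minus = sor_eigenvalue_pair(omega, mu)
    # Residual of (6.3) for the first root; it should vanish up to rounding.
    residual = abs((lam_plus + omega - 1.0) ** 2 - omega**2 * lam_plus * mu**2)
    print(f"omega={omega}: roots {lam_plus:.6f}, {lam_minus:.6f}, "
          f"|lambda| = {abs(lam_plus):.6f}, residual = {residual:.1e}")
```

For $\omega = 1.5$ condition (6.5) holds ($.5 > .36$), so the roots are complex with modulus $\omega - 1 = .5$. In every case the product of the two roots equals $(\omega - 1)^2$, as can be read off from the quadratic form of (6.3).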
Let $\omega_{opt}(\mu)$ be the value of $\omega$ which makes $f_1$ tangent to $f_2$ and let $\lambda_{opt}(\mu)$ be the value of $x$ where they meet (see Figure 1, where $\lambda_{opt}(\mu) = .25$). The curves are tangent when $f_1'(x) = f_2'(x)$, or

$$\frac{1}{\omega_{opt}(\mu)} = \frac{\mu}{2\sqrt{\lambda_{opt}(\mu)}} \quad \text{or} \quad \lambda_{opt}(\mu) = \frac{\mu^2\,\omega_{opt}(\mu)^2}{4}. \tag{6.6}$$

Substituting this into $f_1(x) = f_2(x)$ gives

$$\frac{\mu^2\,\omega_{opt}(\mu)^2}{4} + \omega_{opt}(\mu) - 1 = \frac{\mu^2\,\omega_{opt}(\mu)^2}{2}$$

or

$$\frac{\mu^2\,\omega_{opt}(\mu)^2}{4} = \omega_{opt}(\mu) - 1. \tag{6.7}$$

Comparing this with (6.5) shows that $\omega_{opt}(\mu)$ is precisely the place where the roots switch from real to complex.

Figure 1. $f_1$ corresponding to $\omega = .7$ and $\omega = \omega_{opt}(.8) = 1.25$, and $f_2$ with $\mu = .8$.

Note that if $\omega < \omega_{opt}(\mu)$ then one of the intersection points leads to a value of $\lambda$ greater than $\lambda_{opt}(\mu)$. In contrast, $\omega > \omega_{opt}(\mu)$ leads to complex $\lambda$ of absolute value $\omega - 1$ (corresponding to this $\mu$). We get an optimal choice over all $\mu \in \sigma(G_J)$ by setting

$$\omega_{opt} = \omega_{opt}(\beta). \tag{6.8}$$

Indeed, for this choice, any eigenvalue $\mu$ of $G_J$ with $|\mu| < \beta$ gives rise to complex $\lambda$ since $\omega_{opt}(\beta) > \omega_{opt}(\mu)$, and hence $|\lambda| = \omega_{opt} - 1$. It follows from (6.6) and (6.7) that for $\mu = \pm\beta$, $\lambda = \lambda_{opt} = \omega_{opt} - 1$. Thus, every eigenvalue of $G_{SOR}$ with this choice of $\omega_{opt}$ has the same absolute value as the spectral radius $\rho(G_{SOR}) = \omega_{opt} - 1$. Finally, if we use a larger $\omega$ then the spectral radius increases to $\omega - 1$. Thus, we have shown that (6.8) gives the optimal choice of $\omega$ and leads to a reduction matrix with all eigenvalues of absolute value equal to $\rho(G_{SOR}) = \omega_{opt} - 1$.

The value of $\omega_{opt}$ appearing in the theorem results from computing the smaller solution of (6.7) (with $\mu = \beta$) (check it!). The result for $\rho(G_{SOR})$ in the theorem when $\omega \in [\omega_{opt}, 2)$ is immediate from the above discussion. Finally, the result for when $\omega \in (0, \omega_{opt}]$ is the larger of the two roots in (6.4) with $\mu = \beta$. This completes the proof of the theorem.
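To see what the theorem predicts in practice, the sketch below evaluates its formula and the iteration estimate $k \ge \ln(\epsilon^{-1})/\ln(\rho^{-1})$ for a Jacobi spectral radius of the form $\beta = \cos(\pi/(n+1))$, the value recalled earlier for $A_3$. It uses only the Python standard library. The function names (`omega_opt`, `rho_sor`, `iterations_needed`, `compare`) and the tolerance `eps = 1e-6` are illustrative assumptions, and the iteration counts are estimates derived from the spectral radius alone, not measured counts from an actual solver run.

```python
import math

def omega_opt(beta):
    """Optimal relaxation parameter of Theorem 1: 2 / (1 + sqrt(1 - beta^2))."""
    return 2.0 / (1.0 + math.sqrt(1.0 - beta**2))

def rho_sor(omega, beta):
    """Spectral radius of G_SOR as stated in Theorem 1 (0 < omega < 2, 0 < beta < 1)."""
    if omega >= omega_opt(beta):
        return omega - 1.0
    # Clamp at zero to guard against rounding noise just below omega_opt.
    radicand = max(1.0 - omega + 0.25 * omega**2 * beta**2, 0.0)
    return 1.0 - omega + 0.5 * omega**2 * beta**2 + omega * beta * math.sqrt(radicand)

def iterations_needed(rho, eps=1e-6):
    """Smallest integer k with k >= ln(1/eps) / ln(1/rho)."""
    return math.ceil(math.log(1.0 / eps) / math.log(1.0 / rho))

def compare(n, eps=1e-6):
    """Estimated iteration counts for Jacobi and for SOR with omega = omega_opt."""
    beta = math.cos(math.pi / (n + 1))
    w = omega_opt(beta)
    return iterations_needed(beta, eps), iterations_needed(rho_sor(w, beta), eps), w

for n in (10, 20, 40, 80):
    k_jacobi, k_sor, w = compare(n)
    print(f"n={n:3d}  omega_opt={w:.4f}  Jacobi ~{k_jacobi:6d}  SOR ~{k_sor:4d}")
```

Doubling $n$ should roughly quadruple the Jacobi estimate and roughly double the SOR estimate, in line with the $\gamma^{-1}$ versus $\gamma^{-1/2}$ growth discussed before the proof. Calling `rho_sor(1.0, beta)` returns $\beta^2$, the Gauss-Seidel value obtained in the proof for $\omega = 1$.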