18.303 Problem Set 5 Solutions

Problem 1: (5+10+10)

advertisement

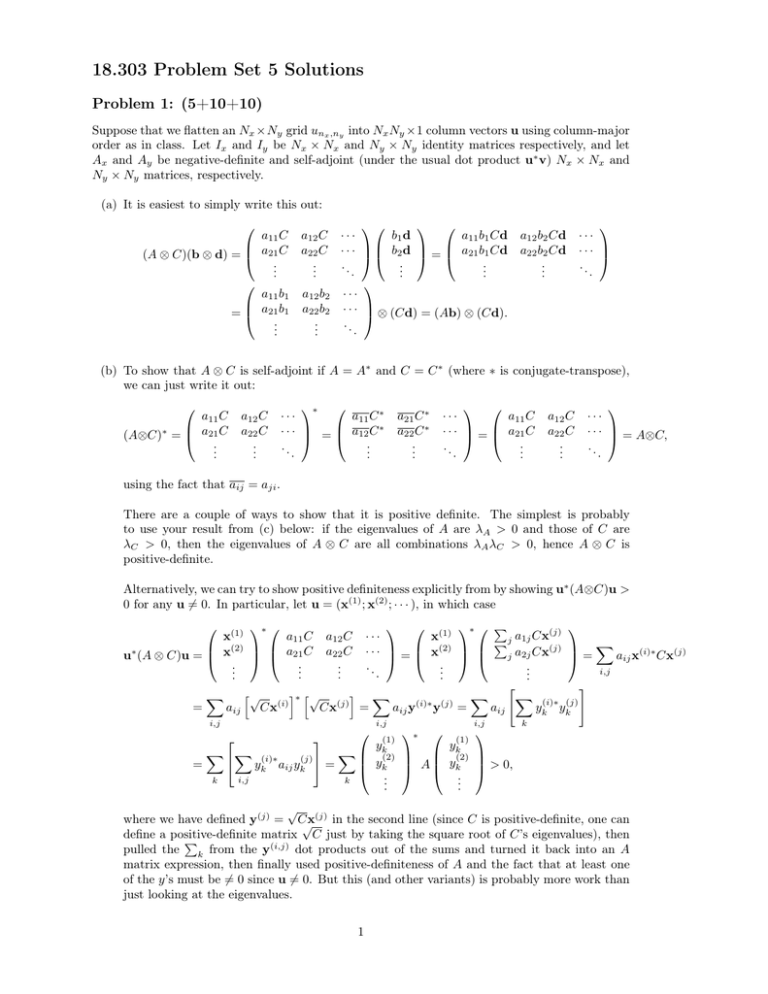

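Suppose that we flatten an $N_x \times N_y$ grid $u_{n_x,n_y}$ into $N_xN_y \times 1$ column vectors $u$ using column-major order, as in class. Let $I_x$ and $I_y$ be the $N_x \times N_x$ and $N_y \times N_y$ identity matrices, respectively, and let $A_x$ and $A_y$ be negative-definite and self-adjoint (under the usual dot product $u^*v$) $N_x \times N_x$ and $N_y \times N_y$ matrices, respectively.

(a) It is easiest to simply write this out:

$$
(A\otimes C)(b\otimes d) =
\begin{pmatrix} a_{11}C & a_{12}C & \cdots \\ a_{21}C & a_{22}C & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}
\begin{pmatrix} b_1 d \\ b_2 d \\ \vdots \end{pmatrix}
=
\begin{pmatrix} a_{11}b_1 Cd + a_{12}b_2 Cd + \cdots \\ a_{21}b_1 Cd + a_{22}b_2 Cd + \cdots \\ \vdots \end{pmatrix}
=
\begin{pmatrix} a_{11}b_1 + a_{12}b_2 + \cdots \\ a_{21}b_1 + a_{22}b_2 + \cdots \\ \vdots \end{pmatrix} \otimes (Cd)
= (Ab)\otimes(Cd).
$$

(b) To show that $A\otimes C$ is self-adjoint if $A = A^*$ and $C = C^*$ (where $^*$ denotes the conjugate transpose), we can just write it out:

$$
(A\otimes C)^* =
\begin{pmatrix} a_{11}C & a_{12}C & \cdots \\ a_{21}C & a_{22}C & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}^*
=
\begin{pmatrix} \bar{a}_{11}C^* & \bar{a}_{21}C^* & \cdots \\ \bar{a}_{12}C^* & \bar{a}_{22}C^* & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}
=
\begin{pmatrix} a_{11}C & a_{12}C & \cdots \\ a_{21}C & a_{22}C & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}
= A\otimes C,
$$

using $C^* = C$ and the fact that $\bar{a}_{ji} = a_{ij}$.

There are a couple of ways to show that $A\otimes C$ is also positive-definite when $A$ and $C$ are. The simplest is probably to use your result from (c) below: if the eigenvalues of $A$ are $\lambda^A > 0$ and those of $C$ are $\lambda^C > 0$, then the eigenvalues of $A\otimes C$ are all the products $\lambda^A\lambda^C > 0$, hence $A\otimes C$ is positive-definite. Alternatively, we can show positive-definiteness explicitly by showing that $u^*(A\otimes C)u > 0$ for any $u \neq 0$. In particular, let $u = (x^{(1)}; x^{(2)}; \cdots)$, in which case

$$
\begin{aligned}
u^*(A\otimes C)u
&= \begin{pmatrix} x^{(1)} \\ x^{(2)} \\ \vdots \end{pmatrix}^*
\begin{pmatrix} a_{11}C & a_{12}C & \cdots \\ a_{21}C & a_{22}C & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}
\begin{pmatrix} x^{(1)} \\ x^{(2)} \\ \vdots \end{pmatrix}
= \begin{pmatrix} x^{(1)} \\ x^{(2)} \\ \vdots \end{pmatrix}^*
\begin{pmatrix} \sum_j a_{1j}Cx^{(j)} \\ \sum_j a_{2j}Cx^{(j)} \\ \vdots \end{pmatrix}
= \sum_{i,j} a_{ij}\, x^{(i)*}Cx^{(j)} \\
&= \sum_{i,j} a_{ij}\left[\sqrt{C}\,x^{(i)}\right]^*\left[\sqrt{C}\,x^{(j)}\right]
= \sum_{i,j} a_{ij}\, y^{(i)*}y^{(j)}
= \sum_{i,j} a_{ij}\sum_k \bar{y}^{(i)}_k y^{(j)}_k \\
&= \sum_k \sum_{i,j} \bar{y}^{(i)}_k a_{ij} y^{(j)}_k
= \sum_k \begin{pmatrix} y^{(1)}_k \\ y^{(2)}_k \\ \vdots \end{pmatrix}^* A \begin{pmatrix} y^{(1)}_k \\ y^{(2)}_k \\ \vdots \end{pmatrix} > 0,
\end{aligned}
$$

where in the second line we have defined $y^{(j)} = \sqrt{C}\,x^{(j)}$ (since $C$ is positive-definite, one can define a positive-definite matrix $\sqrt{C}$ just by taking the square roots of $C$'s eigenvalues). We then pulled the $\sum_k$ from the $y^{(i)*}y^{(j)}$ dot products out of the sums and turned it back into an $A$ matrix expression, and finally used the positive-definiteness of $A$ and the fact that at least one of the $y$'s must be nonzero, since $u \neq 0$ and $\sqrt{C}$ is invertible. But this (and other variants) is probably more work than just looking at the eigenvalues.

Figure 1: A few of the smallest-$|\lambda|$ eigenfunctions of $A$. (Blue/white/red = negative/zero/positive.)

(c) Form an eigenvector $u = y\otimes x$, where $A_x x = \lambda_x x$ and $A_y y = \lambda_y y$. Applying part (a) to each term of $A = \alpha\, I_y\otimes A_x + \beta\, A_y\otimes I_x + \gamma\, A_y\otimes A_x$,

$$
\begin{aligned}
Au &= \alpha(I_y y)\otimes(A_x x) + \beta(A_y y)\otimes(I_x x) + \gamma(A_y y)\otimes(A_x x) \\
&= \alpha\, y\otimes(\lambda_x x) + \beta(\lambda_y y)\otimes x + \gamma(\lambda_y y)\otimes(\lambda_x x) \\
&= (\alpha\lambda_x + \beta\lambda_y + \gamma\lambda_x\lambda_y)\,u,
\end{aligned}
$$

so $u$ is an eigenvector with eigenvalue $\alpha\lambda_x + \beta\lambda_y + \gamma\lambda_x\lambda_y$. Since there are $N_x$ independent eigensolutions of $A_x$ and $N_y$ of $A_y$ (which are Hermitian, hence diagonalizable), this gives $N_xN_y$ eigensolutions of $A$. These must be all of the eigensolutions of $A$ (an $N_xN_y \times N_xN_y$ matrix) as long as the different $u$'s are independent. It is easy to check that they are not only independent but orthogonal. Denote the eigenvectors of $A_x$ and $A_y$ by $x_n$ and $y_m$, and suppose that these were chosen orthonormal (possible since $A_x$ and $A_y$ are Hermitian). Then, applying part (a),

$$
(y_m\otimes x_n)^*(y_{m'}\otimes x_{n'}) = (y_m^* y_{m'})\otimes(x_n^* x_{n'}) = 0 \quad \text{if } m \neq m' \text{ or } n \neq n',
$$

so the different vectors $u_{m,n} = y_m\otimes x_n$ are all orthogonal, hence independent, as desired.

Since $\lambda_x$ and $\lambda_y$ are both $< 0$ ($A_x$ and $A_y$ are negative-definite), $A$ is clearly positive-definite if $\alpha \le 0$, $\beta \le 0$, and $\gamma \ge 0$, with at least one of them nonzero. However, it is possible to come up with slightly looser conditions. For example, one can allow $\alpha > 0$ if $\alpha(\min\lambda_x) + \beta(\max\lambda_y) + \gamma(\min\lambda_x)(\max\lambda_y) > 0$ (if $\beta < 0$ is of large enough magnitude, or if $\gamma > 0$ is large enough).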
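The identities in parts (a) through (c) are easy to spot-check numerically. Below is a minimal MATLAB sketch that checks three things: the mixed-product rule from (a), the Hermitian property of $A\otimes C$ from (b), and the claim from (c) that the eigenvalues of $\alpha\, I_y\otimes A_x + \beta\, A_y\otimes I_x + \gamma\, A_y\otimes A_x$ are exactly the combinations $\alpha\lambda_x + \beta\lambda_y + \gamma\lambda_x\lambda_y$. The matrix sizes, the random test matrices, the use of unscaled second-difference matrices for $A_x$ and $A_y$, and the coefficient values are all arbitrary assumptions made for illustration.

```matlab
% Numerical spot-check of the Kronecker-product results of Problem 1.
% All sizes, test matrices, and coefficients are arbitrary illustrative choices.
Nx = 5; Ny = 4;

% (a) Mixed-product rule: (A kron C)(b kron d) = (A b) kron (C d)
A = randn(3); C = randn(2); b = randn(3,1); d = randn(2,1);
err_a = norm(kron(A, C)*kron(b, d) - kron(A*b, C*d));

% (b) A kron C is Hermitian when A and C are Hermitian
H1 = randn(3) + 1i*randn(3); H1 = H1 + H1';   % ' is the conjugate transpose
H2 = randn(2) + 1i*randn(2); H2 = H2 + H2';
K = kron(H1, H2);
err_b = norm(K - K');

% (c) Self-adjoint, negative-definite Ax and Ay (unscaled second differences)
secdiff = @(N) -2*eye(N) + diag(ones(N-1,1), 1) + diag(ones(N-1,1), -1);
Ax = secdiff(Nx); Ay = secdiff(Ny);
Ix = eye(Nx); Iy = eye(Ny);
c_alpha = -1; c_beta = -0.5; c_gamma = 2;     % alpha, beta <= 0 and gamma >= 0
Abig = c_alpha*kron(Iy, Ax) + c_beta*kron(Ay, Ix) + c_gamma*kron(Ay, Ax);

lx = eig(Ax); ly = eig(Ay);
[LX, LY] = meshgrid(lx, ly);                   % all (lambda_x, lambda_y) pairs
predicted = sort(c_alpha*LX(:) + c_beta*LY(:) + c_gamma*LX(:).*LY(:));
computed  = sort(eig(Abig));
err_c = norm(predicted - computed);
is_posdef = all(computed > 0);

fprintf('err_a = %.2e, err_b = %.2e, err_c = %.2e, positive-definite = %d\n', ...
        err_a, err_b, err_c, is_posdef);
```

All three errors should be at the level of floating-point roundoff. With $\alpha, \beta \le 0$ and $\gamma \ge 0$, every computed eigenvalue comes out positive, as the argument in (c) predicts.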
Similarly, one can have $\beta > 0$ or $\gamma < 0$ if the other terms are sufficiently large.

Problem 2: (5+5+10+10)

(a) The first and 10th eigenfunctions (sorted by $|\lambda|$) correspond to $\lambda_{1,2}$ from pset 4. They are plotted along with a few other modes in figure 1. We also plot these two eigenfunctions (which are independent of $\theta$) versus $r$ in figure 2, along with the corresponding “exact” (very high accuracy) eigenfunctions from pset 4, which we have rescaled to match our finite-difference eigenfunctions at $r = 0$. An excellent (though not perfect) match is evident.

Figure 2: First two $m = 0$ ($\theta$-independent) finite-difference eigenfunctions of $A$ (dots) along with the exact eigenfunctions (lines) from pset 4 (scaled to match at $r = 0$). [Plot versus $r \in [0, 1]$; legend: $u_1(r)$, exact $u_1$, $u_{10}(r)$, exact $u_{10}$.]

Figure 3: Error in the first eigenvalue $\lambda_1$ versus the resolution $N_{x,y}$ (with the exact values of $|\Delta\lambda_1|$ given as labels), along with a $\sim 1/N_{x,y}$ line for reference. The errors clearly decrease in proportion to $1/N_{x,y}$, i.e. $O(\Delta x)$ convergence. [Log-log plot; legend: |error| in $\lambda_1$, $10/N_{x,y}$; point labels: 0.2655, 0.1208, 0.0619, 0.0297.]

(b) The errors $|\Delta\lambda_1|$ for $N_x = N_y = 100, 200, 400, 800$ are plotted in figure 3 (with the values given as labels). Each time the resolution doubles, the error roughly halves, and the convergence is clearly proportional to $1/N_{x,y}$ on the plot, i.e. $O(\Delta x)$. Even though we are using 2nd-order accurate center differences to approximate the derivatives, we introduce a first-order error through the way we impose the boundary conditions. We are setting $u = 0$ outside a “circle” that is approximated by pixels on a square grid. As a result, our boundary $\partial\Omega$ on the grid is only within $\sim\Delta x$ of the true boundary, which introduces an error $\sim\Delta x$. [Thinking about the 1d function $u(r)$ for simplicity: if $u(R)$ is supposed to be zero but we actually set $u(R + \Delta x) = 0$, then $u(R) \approx -\Delta x\, u'(R + \Delta x) \neq 0$, an error $\sim\Delta x$.]
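The "error roughly halves" observation can be turned into an estimated convergence order $p$ in $|\Delta\lambda_1| \sim N_{x,y}^{-p}$. The minimal MATLAB sketch below assumes that the four labels in figure 3, read from largest to smallest, belong to $N_x = N_y = 100, 200, 400, 800$ respectively. This ordering is inferred from the halving described in the text; it is not stated explicitly. The sketch computes a local order for each doubling and a least-squares slope on a log-log scale:

```matlab
% Observed convergence order of lambda_1 from the |error| labels in figure 3.
% ASSUMPTION: the labels, largest to smallest, belong to Nx = Ny = 100, 200, 400, 800.
N   = [100 200 400 800];
err = [0.2655 0.1208 0.0619 0.0297];

% local order for each doubling of the resolution: log2(err(k)/err(k+1))
local_order = log2(err(1:end-1) ./ err(2:end));

% least-squares slope of log(err) versus log(N), assuming err ~ N^(-p)
coef = polyfit(log(N), log(err), 1);
p = -coef(1);

fprintf('local orders: %s, fitted order p = %.2f\n', mat2str(local_order, 3), p);
```

Both estimates come out close to $p = 1$. This is consistent with $O(\Delta x)$ convergence limited by the pixelated boundary, rather than the $O(\Delta x^2)$ one would expect from the center differences alone.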
(c) The key point is that $\partial u/\partial x$ and $\partial u/\partial y$ are not evaluated on the same grid as $u$. As depicted in figure 4, $\partial u/\partial x$ is evaluated on an $(N_x+1)\times N_y$ grid that is shifted by $\Delta x/2$ in the $x$ direction. Conversely, $\partial u/\partial y$ is evaluated on an $N_x\times(N_y+1)$ grid that is shifted by $\Delta y/2$ in the $y$ direction. This is a direct consequence of our center-difference scheme for computing derivatives, and is much like what we saw in 2d.

Figure 4: Depiction of an $N_x\times N_y$ (here, $4\times 3$) grid of $u$ values, plus the $u = 0$ values on the $\partial\Omega$ boundary (grey dots). When $\partial u/\partial x$ is computed by a center-difference approximation, it results in $\partial u/\partial x$ on the $(N_x+1)\times N_y$ grid at the locations given by the vertical blue slashes. Similarly, $\partial u/\partial y$ is computed on an $N_x\times(N_y+1)$ grid formed by the horizontal red slashes.

The corresponding $G$ matrix to approximate the gradient is $[(N_x+1)N_y + N_x(N_y+1)]\times(N_xN_y)$, and is given in Kronecker-product form by

$$
G = \begin{pmatrix} G_x \\ G_y \end{pmatrix} = \begin{pmatrix} I_y\otimes D_x \\ D_y\otimes I_x \end{pmatrix}
$$

in terms of the $(N_x+1)\times N_x$ finite-difference matrix $D_x$ for differentiation along the $x$ direction with Dirichlet boundaries (exactly like when we did the 1d $d/dx$ in class), and similarly for the $(N_y+1)\times N_y$ matrix $D_y$. We can now write

$$
A_0 = -G^T C_g G = -G^T \begin{pmatrix} C_x & 0 \\ 0 & C_y \end{pmatrix} G = -G^T \begin{pmatrix} C_x G_x \\ C_y G_y \end{pmatrix},
$$

where $C_x$ and $C_y$ are the diagonal matrices that multiply $\partial u/\partial x$ and $\partial u/\partial y$, respectively, by $c$ evaluated on those grids.
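The matrices $D_x$ and $D_y$ come from class and are not written out here. As a hedged sketch, we can assume that $D_x$ is the $(N_x+1)\times N_x$ matrix whose row $i$ computes $(u_i - u_{i-1})/\Delta x$ with $u_0 = u_{N_x+1} = 0$. Under that assumption, one can assemble $G$ as above and check that, for constant $c = 1$, $A_0 = -G^T C_g G$ reduces to the familiar 5-point Laplacian $I_y\otimes D^{(2)}_x + D^{(2)}_y\otimes I_x$, where $D^{(2)}$ is the tridiagonal $(1, -2, 1)/\Delta x^2$ second-difference matrix. The helpers `diffmat` and `secdiff`, the grid sizes, and $L_x = L_y = 1$ are hypothetical choices for this illustration, not part of the original code.

```matlab
% Sketch: assemble G = [Iy kron Dx; Dy kron Ix] and check that, for c = 1,
% A0 = -G'*Cg*G equals the standard 5-point Laplacian.
% ASSUMPTION (hypothetical helper): row i of the (N+1) x N matrix computes
% (u(i) - u(i-1))/h with Dirichlet values u(0) = u(N+1) = 0.
diffmat = @(N, h) sparse((eye(N+1, N) - [zeros(1, N); eye(N)]) / h);
secdiff = @(N, h) (-2*eye(N) + diag(ones(N-1,1), 1) + diag(ones(N-1,1), -1)) / h^2;

Nx = 6; Ny = 5; Lx = 1; Ly = 1;        % arbitrary small test grid
dx = Lx/(Nx+1); dy = Ly/(Ny+1);
Dx = diffmat(Nx, dx); Dy = diffmat(Ny, dy);

Gx = kron(speye(Ny), Dx);               % d/dx on the (Nx+1) x Ny grid
Gy = kron(Dy, speye(Nx));               % d/dy on the Nx x (Ny+1) grid
G  = [Gx; Gy];

% constant c = 1: the diagonal Cx and Cy are identity matrices
Cx = speye((Nx+1)*Ny); Cy = speye(Nx*(Ny+1));
A0 = -G' * [Cx*Gx; Cy*Gy];

% reference: 1d second differences combined with Kronecker products
L5 = kron(eye(Ny), secdiff(Nx, dx)) + kron(secdiff(Ny, dy), eye(Nx));
rel_err = norm(full(A0) - L5, 1) / norm(L5, 1);
fprintf('size(G) = %d x %d, relative difference from 5-point Laplacian = %.2e\n', ...
        size(G, 1), size(G, 2), rel_err);
```

The check works because $-D^T D$ is exactly the $(1, -2, 1)/\Delta x^2$ matrix, and $(I\otimes D)^T(I\otimes D) = I\otimes D^T D$ by the mixed-product rule from Problem 1(a). A nonconstant $c$ only changes the diagonal $C_x$ and $C_y$.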
The corresponding code for $c$, $C_x$, $C_y$, and $A_0$ is listed here:

```matlab
% (assumes Nx, Ny, Lx, Ly, dx, dy and the 1d difference matrices Dx, Dy
%  are already defined)
% form (x,y) grids corresponding to x and y derivatives:
[yx,xx] = meshgrid([1:Ny]*dy - Ly/2, [0:Nx]*dx + dx/2 - Lx/2);
rx = sqrt(xx.^2 + yx.^2);
[yy,xy] = meshgrid([0:Ny]*dy + dy/2 - Ly/2, [1:Nx]*dx - Lx/2);
ry = sqrt(xy.^2 + yy.^2);
% c = 5 for r < 0.5 and c = 1 otherwise, evaluated on each derivative grid:
cx = 5 * (rx < 0.5) + 1 * (rx >= 0.5);
cy = 5 * (ry < 0.5) + 1 * (ry >= 0.5);
% Make diagonal C matrices to multiply by c on these grids:
Cx = spdiags(reshape(cx,(Nx+1)*Ny,1), 0, (Nx+1)*Ny,(Nx+1)*Ny);
Cy = spdiags(reshape(cy,Nx*(Ny+1),1), 0, Nx*(Ny+1),Nx*(Ny+1));
% Put it all together to make A0:
Gx = kron(speye(Ny,Ny),Dx);
Gy = kron(Dy, speye(Nx,Nx));
A0 = -[Gx; Gy]' * [Cx * Gx; Cy * Gy];
```

(d) Using this new $A_0$, we can compute $u_1$ with `eigs` as above; $u_1(r) = u_1(x, 0)$ is plotted in figure 5. To have a finite $\nabla\cdot c\nabla u$ across the discontinuity in $c$ at $r = 0.5$, two conditions must hold. First, $u$ must be continuous (to get a finite $\nabla u$). Second, $c\nabla u$ must be continuous: at $r = 0.5$, $c(r)\,\partial u/\partial r$ is continuous. Since $c$ drops from 5 to 1 as $r$ increases past 0.5, $\partial u/\partial r$ (which is negative here) jumps discontinuously to 5 times its value just inside. The slope therefore becomes 5 times steeper, as visible in the plot.

Figure 5: Smallest-$|\lambda|$ eigenfunction $u_1(r)$ for $\hat{A} = \nabla\cdot c\nabla$. Note the discontinuous slope at $r = 0.5$, where $c$ is discontinuous. [Plot of $u_1$ versus $r \in [0, 1]$.]

Figure 6: Plot of the Rayleigh quotient $R(u_a)$ versus $a$ (for $0.6 \le a \le 2$) for a trial function $u_a(r) = (1 - r)^a$. As expected, $R(u_a)$ is always $\ge \lambda_1 \approx 5.783$ (horizontal dashed line), the exact minimal eigenvalue.

Problem 3: (10+12)

(a) The numerator of $R(u_a)$ is

$$
\int_0^1 r|u_a'|^2\,dr = a^2\int_0^1 r(1-r)^{2a-2}\,dr
= a^2\left[-\frac{(1-r)^{p+1}(pr+r+1)}{p^2+3p+2}\right]_0^1
= \frac{a^2}{(2a-2)^2 + 3(2a-2) + 2} = \frac{a^2}{4a^2 - 2a} = \frac{a}{4a-2}
$$

for $p = 2a - 2$ (the integral converges for $a > 1/2$). Similarly, the denominator is

$$
\int_0^1 r|u_a|^2\,dr = \int_0^1 r(1-r)^{2a}\,dr
= \left[-\frac{(1-r)^{p+1}(pr+r+1)}{p^2+3p+2}\right]_0^1
= \frac{1}{4a^2 + 6a + 2}
$$

for $p = 2a$. So,

$$
R(u_a) = \frac{a(4a^2+6a+2)}{4a-2} = a\,\frac{2a^2+3a+1}{2a-1}.
$$

We can plot this in Matlab with the command `fplot(@(a) a.*(2*a.^2+3*a+1)./(2*a-1), [0.6,2])`, resulting in figure 6. If we zoom in on the plot, we find the minimum at $a \approx 0.94$ with $R \approx 5.97$. This is a reasonable estimate for $\lambda_1$ (though $R > \lambda_1$ always, as expected).

Figure 7: First four (lowest-$\lambda$) eigenfunctions of a triangular domain $\Omega$ with Neumann boundary conditions. [Four panels over $0 \le x, y \le 1$: $u_1$ (constant), $u_2$, $u_3$, $u_4$.]

Alternatively, Matlab has a function `fminbnd` that will minimize a function for us. We can just do `[a,R] = fminbnd(@(a) a.*(2*a.^2+3*a+1)./(2*a-1), 0.6, 2)` to find $a \approx 0.9397$ and $R \approx 5.9681$ at the minimum. We could even minimize $R$ analytically: setting $dR/da = 0$ gives a cubic equation in the numerator, $8a^3 - 6a - 1 = 0$. Its roots can be found with the (messy) cubic formula, or numerically with the Matlab `roots` function. But this seems like overdoing it.

(b) To minimize the oscillations (the numerator of the Rayleigh quotient), we should clearly have:

- the first eigenfunction be a constant (for $\lambda_1 = 0$);
- the second oscillate once along the long direction of the triangle;
- the third oscillate along the “short” direction;
- the fourth oscillate twice in the long direction.

These are shown in figure 7. (As usual, the larger $|\lambda|$ becomes, the more oscillatory the eigenfunction and the harder it is to guess. You have to think a little about the orthogonality requirement with $u_3$ to realize that $u_4$ must have an oscillation in both diagonal directions.) Because of the Neumann conditions, it is critical that you show the maxima/minima of the eigenfunctions at the boundary.

Actually, it turns out that we could even solve this particular problem analytically, although in this problem I prefer that you just sketch your guesses for the solutions.
If the domain were a square with Neumann conditions, we know that the eigenfunctions would be $\cos(n_x\pi x)\cos(n_y\pi y)$ with eigenvalues $\pi^2(n_x^2 + n_y^2)$. The eigenfunctions of the triangle domain are just the square eigenfunctions restricted to solutions that have even mirror symmetry across the diagonal. That is, the first four eigenfunctions and eigenvalues are actually:

- $\lambda_1 = 0$, $u_1 = 1$ ($n_x = n_y = 0$);
- $\lambda_2 = \pi^2$, $u_2 = \cos(\pi x) - \cos(\pi y)$ [a combination of $(n_x, n_y) = (1, 0)$ and $(0, 1)$];
- $\lambda_3 = 2\pi^2$, $u_3 = \cos(\pi x)\cos(\pi y)$ ($n_x = n_y = 1$);
- $\lambda_4 = 4\pi^2$, $u_4 = \cos(2\pi x) + \cos(2\pi y)$ [a combination of $(n_x, n_y) = (2, 0)$ and $(0, 2)$].

These exact solutions are what is plotted in figure 7.
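These exact eigenfunctions can be spot-checked numerically. The sketch below assumes the triangle is $x \ge 0$, $y \ge 0$, $x + y \le 1$, so that the mirror diagonal is the hypotenuse $x + y = 1$. This is an inference, not stated explicitly above: it is the choice for which $u_2 = \cos(\pi x) - \cos(\pi y)$ is even under the mirror $(x, y) \to (1 - y, 1 - x)$. Each mode is a sum of terms $c\cos(n\pi x)\cos(m\pi y)$, so the Neumann conditions on $x = 0$ and $y = 0$ hold automatically. The code therefore checks two things for each mode: that all of its terms share the eigenvalue $\pi^2(n^2 + m^2)$, and that its normal derivative vanishes on the hypotenuse. For contrast, it also shows that $\cos(\pi x) + \cos(\pi y)$ fails the condition there.

```matlab
% Spot-check of the exact triangle eigenfunctions under Neumann conditions.
% ASSUMPTION: triangle x >= 0, y >= 0, x + y <= 1 (mirror line x + y = 1).
% Each mode is a sum of terms c*cos(n*pi*x).*cos(m*pi*y), stored as rows [n m c].
modes  = {[0 0 1], ...              % u1 = 1
          [1 0 1; 0 1 -1], ...      % u2 = cos(pi x) - cos(pi y)
          [1 1 1], ...              % u3 = cos(pi x) cos(pi y)
          [2 0 1; 0 2 1]};          % u4 = cos(2 pi x) + cos(2 pi y)
lambda = pi^2 * [0 1 2 4];

s = linspace(0, 1, 101)';           % hypotenuse points (x, y) = (s, 1 - s)
x = s; y = 1 - s;
eig_ok = false(1, 4); max_flux = zeros(1, 4);
for k = 1:4
  T = modes{k};
  % every term must be an eigenfunction of -Laplacian with the same eigenvalue
  eig_ok(k) = all(abs(pi^2*(T(:,1).^2 + T(:,2).^2) - lambda(k)) < 1e-12);
  ux = zeros(size(s)); uy = zeros(size(s));
  for j = 1:size(T, 1)
    n = T(j,1); m = T(j,2); c = T(j,3);
    ux = ux - c*n*pi*sin(n*pi*x).*cos(m*pi*y);
    uy = uy - c*m*pi*cos(n*pi*x).*sin(m*pi*y);
  end
  max_flux(k) = max(abs(ux + uy)) / sqrt(2);   % outward normal is (1,1)/sqrt(2)
end

% For contrast, cos(pi x) + cos(pi y) violates the condition on x + y = 1:
bad_flux = max(abs(-pi*sin(pi*x) - pi*sin(pi*y))) / sqrt(2);
disp(eig_ok); disp(max_flux); disp(bad_flux);
```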