18.303 Problem Set 2 Solutions Problem 1: (10+10)

advertisement

18.303 Problem Set 2 Solutions

Problem 1: (10+10)

(a) Since we want to compute u0 (x), we should Taylor expand around x, obtaining:

i

h

i

h

2

8∆x3 000

∆x2 00

∆x3 000

00

0

u

+

u

+

b

u

+

∆xu

+

u

+

u

−u

a u + 2∆xu0 + 4∆x

2

6

2

6

,

u0 (x) ≈

c∆x

where all the u terms on the right are evaluated at x. We want this to give u0 plus something

proportional to ∆x2 , so the u and u00 terms must cancel while the u0 terms must have a

coefficient of 1. Writing down the equations for the u, u0 , and u00 coefficients, we get:

0=a+b−1

c = 2a + b

0 = 4a + b,

which is 3 equations in 3 unknowns. This is easily solved to obtain a = −1/3, b = 4/3, c = 2/3 .

You were not required to do this, but we can also get an error estimate by looking at the u000

2

∆x2 000

000

term: the error is ≈ (8a + b) ∆x

6c u (x) = − 3 u (x).

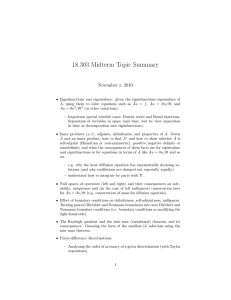

(b) Similar to the handout from class, we can check the accuracy of this for u(x) = sin(x) at

u0 (1) = cos(1) by the Matlab code below. For convenience, I also plot the error estimate

∆x2

2

3 cos(1) so that we can check that the errors are ∼ ∆x , but it would suffice for you to

2

plot any line ∼ ∆x in order to check the slope of the calculated errors. The resulting plot is

shown in Fig. , and demonstrates the predicted error convergence (until the error becomes so

small that it is limited by roundoff errors due to the computer’s finite precision).

x=1;

dx = logspace(-8,-1,50);

a = -1/3; b = 4/3; c = 2/3;

deriv = (a * sin(x+2*dx) + b * sin(x+dx) - sin(x)) ./ (c * dx);

loglog(dx, abs(cos(x) - deriv), ’o’, dx, abs(cos(x) * dx.^2 / 3), ’k-’)

xlabel(’{\Delta}x’)

ylabel(’|error| in derivative’)

legend(’computed |error|’, ’estimated |error|’)

Problem 2: (10+10)

Here, we consider inner products hu, vi on some vector space V of real-valued functions and the

corresponding adjoint Â∗ of real-valued operators Â, where the transpose is defined, as in class, by

whatever satisfies hu, Âvi = hÂ∗ u, vi for all u and v in the vector space (usually, Â∗ is obtained

from  by some kind of integration by parts).

(a) The proof of self-adjointness is almost exactly as in class. Integrating by parts:

Z

hu, Âvi =

−

0

=

L

L

0

uv 00 dx = −uv

|0 +

L

u0

v|0 −

Z

Z

L

u0 v 0 dx

0

L

00

u v dx = hÂu, vi,

0

1

−2

10

computed |error|

estimated |error|

−4

10

−6

10

|error| in derivative

−8

10

−10

10

−12

10

−14

10

−16

10

−18

10

−8

10

−7

10

−6

10

−5

−4

10

∆x

10

−3

10

−2

10

−1

10

Figure 1: Computed and estimated errors in approximate derivative u0 (1) of u(x) = sin(x), for a

second-order forward-difference approximation.

where the boundary terms cancel on the left because either u(0) or v(0) is zero, and cancel on

the right because either u0 (L) or v 0 (L) is zero. Furthermore, if we examine the middle step,

RL

we see that hu, Âui = 0 |u0 |2 dx, which is > 0 for any u 6= 0, since the only way u0 = 0 is if

u is a constant, and the left boundary condition means that the only constant u can be is zero.

This implies that −Â = d2 /dx2 is also self-adjoint and is negative-definite. Therefore d2 /dx2

on this V has orthogonal eigenfunctions, which is what we found in pset 1 when we explicitly

computed un = sin[(n + 0.5)πx/L]. Furthermore, inspection of these un from pset 1 reveals

eigenvalues λn = −[(n + 0.5)π/L]2 , which are real and negative as one would predict from a

negative-definite self-adjoint operator. Hence, this is consistent with what we found in pset 1,

but much more powerful in that we didn’t have to solve the eigenproblem explicitly.

RL

RL

(b) Since hu, qvi = 0 uqv dx = 0 quv dx = hqu, vi, the operator q(x) is self-adjoint, and we already showed above that −d2 /dx2 is self-adjoint, so their sum is self-adjoint. More explicitly:

hu, Âvi = hu, −v 00 + qvi = hu, −v 00 i + hu, qvi = h−u00 , vi + hqu, vi = h−u00 + qu, vi = hÂu, vi.

(By the same argument, the sum of any two self-adjoint operators is self-adjoint.)

Following the hint, we show that  − q0 is positive definite: hu, ( − q0 )ui = hu, −u00 i +

RL

hu, (q − q0 )ui = 0 |u0 |2 + (q − q0 )|u|2 dx > 0 for u 6= 0, since the first (|u0 |2 ) term is > 0

from above, and the second term is ≥ 0 since q ≥ q0 . Now, suppose λ is an eigenvalue of Â,

i.e. Âu = λu. Then ( − q0 )u = (λ − q0 )u, so λ − q0 is an eigenvalue of  − q0 . But  − q0

is positive definite, so its eigenvalues λ − q0 are > 0, so λ > q0 . Q.E.D.

Problem 3: (10+5+5)

(a) I found it clearest to plot the eigenvalues + 100 on a log–log scale: we want to use a vertical

log scale since the eigenvalues differ by many orders of magnitude, but have to add 100 so

2

5

10

0.2

−d2/dx2 + q(x)

u

1

−d2/dx2

(kπ/L)2 + 100

u2

0.15

u3

4

eigenfunctions (arbitrary units)

0.1

k

eigenvalues λ + 100

10

3

10

0.05

0

−0.05

2

10

−0.1

−0.15

1

10

0

10

1

10

k (k=1,2,... is index of λk)

2

10

−0.2

2

0

0.1

0.2

0.3

0.4

0.5

x

0.6

0.7

0.8

0.9

1

2

d

d

Figure 2: Left: Eigenvalues λk of the discretized − dx

2 + q and − dx2 operators, plotted as λk + 100

(since we expect λk > −100) versus k on a log–log scale. Also plotted are the exact eigenvalues

d2

d2

(kπ/L)2 (plus 100) of − dx

2 . Right: Eigenfunctions for smallest three eigenvalues of − dx2 + q.

that we are taking the logs of positive numbers, while a horizontal log scale seems helpful to

“spread out” the smallest few eigenvalues (the most interesting ones because they differ the

d2

d2

most between − dx

2 + q and − dx2 ). I also found it interesting to plot the exact eigenvalues

(kπ/L)2 of −d2 /dx2 . The plotting code is given below, and the result is shown in Fig. 1(left).

There are several points to observe:

(i) First, the eigenvalues of  are clearly > −100, as otherwise there would be missing dots

(negative values wouldn’t plot on a log scale). We could also check this explicitly in

Matlab by typing all(diag(S) > -100), which checks whether all the eigenvalues (the

diagonals of S) are > −100, returning 1 if true and 0 if false: this prints ans = 1 as

desired. Alternatively, you can check this by printing out diag(S) and inspecting them.

In the same way, you can check that they are all real, or alternatively you can type

all(imag(diag(S)) == 0) and see that it prints ans = 1.

(ii) The eigenvalues of  only differ slightly from the eigenvalues of −d2 /dx2 , except for the

first few eigenvalues. The basic reason for this is that q(x) changes the eigenvalues by

at most ∼ 100, which is significant for small eigenvalues but is insignificant for the large

eigenvalues ∼ 104 .

(iii) The eigenvalues of both  and −d2 /dx2 from the discrete approximations differ from the

exact (kπ/L)2 for large k. The reason for this, as discussed in class, is that the largest

eigenvalues correspond to eigenfunctions that oscillate almost as fast as the discretization

∆x, and in for these functions the finite-difference approximation is not accurate.

(b) The eigenfunctions are plotted in Fig. 1(right). They vaguely resemble sin(nπx/L), and certainly have the same number of sign oscillations (as they must in order to be orthogonal!), but

are more “bunched” in the center. (Later in 18.303, we will be able to see that this is because

q(x) is smallest there, because we will show that the eigenfunctions want to concentrate in

the small q regions.)

One thing that is not a significant difference (you will have points taken off if you say it

is), is the eigenfunctions go down from x = 0 rather than up. For example, the first eigenfunction is negative, but sin(πx/L) from class is positive. Remember that you can multiply

3

eigenfunctions by any constant and they are still eigenfunctions, so multiplication by −1 is

just an arbitrary normalization/sign choice.

(c) These dot products give values with magnitudes < 10−15 , which are effectively zero up to the

accuracy limitations of computer arithmetic.

% Part (a)

k = [1:100];

loglog(k, sort(diag(S))+100, ’bo’, k, sort(eig(D’*D/dx^2))+100, ’r*’, k, (k*pi/L).^2 + 100,

xlabel(’k (k=1,2,... is index of \lambda_k)’)

ylabel(’eigenvalues \lambda_k + 100’)

legend(’-d^2/dx^2 + q(x)’, ’-d^2/dx^2’, ’(k\pi/L)^2 + 100’, ’Location’, ’NorthWest’)

Problem 4: (10+5+5+10)

2

d

For  = dx

2 on functions u(x) with Dirichlet boundary conditions u(0) = u(L) = 0 on the

domain Ω = [0, L], we discretized it in class, with center-difference approximations, to obtain the

approximate “discrete Laplacian” matrix A:

2 −1

−1 2 −1

1

.

.

.

.

.

.

A=

.

.

.

∆x2

−1 2 −1

−1 2

operating on vectors of M points u1 , . . . , uM , where ∆x = L/(M + 1) and u(m∆x) ≈ um . Let’s

say M = 11 and L = 12 (so that ∆x = 1).

Now, we will restrict our vector space even further. We will consider only mirror-symmetric

functions u(x) with u(L − x) = u(x). That is, u(x) is even-symmetric around x = L/2. This will

throw out half of our eigenfunctions sin(nπx/L) of Â, leaving us only with the odd n = 1, 3, 5, . . .

eigensolutions (which are mirror-symmetric). You will now consider the finite-difference matrix

version of this symmetry.

m −um−1

(a) The center-difference rule from which each row of A is derived is −u00m = −um+1 +2u

.

∆x2

T

When applied to a symmetric vector u = (u1 , u2 , u3 , u4 , u5 , u6 , u5 , u4 , u3 , u2 , u1 ) this is unchanged for the first five rows (−u001 through −u005 ), but is modified for the sixth row (−u006 )

5 +2u6

since we set u7 = u5 . That is, −u006 = −2u∆x

. Notice also that A multipled by a symmetric

2

u gives a symmetric Au; the matrix A does not break the symmetry. In matrix form, this

gives

2 −1

−1 2 −1

−1 2 −1

.

Asym =

−1 2 −1

−1 2 −1

−2 2

[More technically, our symmetric u vectors live in a subspace of the original vector space, and

A preserves this subspace. Our Asym matrix is just the matrix form of the linear operator A

acting on the subspace of symmetric u’s.]

(b) Asym is obviously not equal to its transpose (in the usual sense of swapping rows and

columns), because the −2 in the last row does not match the −1 in the last column. We

4

can construct A in Matlab by the command given, and construct Asym by (for example):

Asym = diag([2 2 2 2 2 2]) - diag([1 1 1 1 1], 1) - diag([1 1 1 1 2], -1)

Now, computing the eigenvalues by eig(Asym), we find that they are λsym ≈ 3.931852, 3.414214, 2.517638, 1.482362, 0.

which by comparison with eig(A) are indeed real (and positive), and are indeed 6 of the eigenvalues of A. In fact, they are every other eigenvalue of A as we might expect from “skipping”

the odd eigenfunctions!

(c) If we had two symmetric vectors u = (u1 , u2 , u3 , u4 , u5 , u6 , u5 , u4 , u3 , u2 , u1 )T and v = (v1 , v2 , v3 , v4 , v5 , v6 , v5 , v4 , v3 , v2 , v

their ordinary inner product would be uT v = 2(u1 v1 + u2 v2 + u3 v3 + u4 v4 + u5 v5 ) + u6 v6 :

the symmetry would cause each of the first five elements, but not the sixth element, to be

counted twice. The corresponding inner product on our 6-component vectors is simply:

husym , vsym i =

2(u1 v1 + u2 v2 + u3 v3 + u4 v4 + u5 v5 ) + u6 v6

2

2

2

T

vsym = uTsym W vsym ,

= usym

2

2

1

defining a diagonal “weight” matrix W . Note that, by inspection, this still satisfies the three

key properties of inner products (positivity, symmetry, and bilinearity), and in particular the

positivity property (husym , usym i > 0 for usym 6= 0) relies on the fact that W is (obviously)

positive-definite. [In Matlab, W = diag([2 2 2 2 2 1]).]

(d) To be self-adjoint, we must have husym , Asym vsym i = uTsym W Asym vsym = hAsym usym , vsym i =

uTsym ATsym W vsym for all vectors usym , vsym . Therefore, we must have W Asym = ATsym W .

We can check this explicitly, either by hand or in Matlab (in Matlab, compute W*Asym and

Asym’*W):

4

−2

W Asym =

−2

4

−2

−2

4

−2

−2

4

−2

−2

4

−2

= W Asym T = ATsym W T = ATsym W,

−2

2

where we can save some work by noticing (once we compute it) that W Asym is symmetric.

(Recall that multipling by a diagonal matrix on the left multiplies each row by the corresponding diagonal, and multiplying by a diagonal matrix on the right multiplies each column.)

Here is an alternative approach: the adjoint A∗sym under this inner product (not the conjugatetranspose) must be defined so that husym , Asym vsym i = uTsym W Asym vsym = hA∗sym usym , vsym i =

T

T

T

uTsym A∗sym W vsym . Hence, we must have W Asym = A∗sym W , or A∗sym

=

T

W Asym W −1 , or A∗sym = W Asym W −1

and hence A∗sym = W −1 ATsym W (since W =

5

W T ). By explicit computation:

A∗sym = W −1 ATsym W

1/2

1/2

1/2

=

2

−1

1/2

1/2

−1

2

−1

1

2

−1

=

−1

2

−1

−1

2

−1

−1

2

−1

−1

2

−1

−2

2

2

2

2

2

−1

2

−1

−1

2

−1

−1

2

−2

= Asym ,

−1

2

since the 2 and 1/2 factors cancel out except in the last row and the last column where they

are needed.

Thus, by picking the right inner product, we get a new definition of the “transpose” (adjoint) of a matrix. In this new definition, we see that Asym is self-adjoint. This explains why

it has real eigenvalues. (With a little more work we could show it is positive-definite under

this inner product too.) Furthermore, it must be diagonalizable and the eigenvectors must be

orthogonal, but orthogonal under the new inner product.

Moral: “swapping rows and columns” is not the be-all and end-all of transposition. It is

the consequence of a particular choice of inner products, and that particular choice is not

always the right choice. A different choice of inner products gives a different “transpose”.

6

2

1