THE CENTER OF MASS OF THE ISE AND THE WIENER

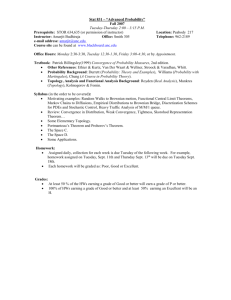

advertisement

Elect. Comm. in Probab. 9 (2004), 178–187

ELECTRONIC COMMUNICATIONS in PROBABILITY

THE CENTER OF MASS OF THE ISE AND THE WIENER INDEX OF TREES

SVANTE JANSON

Department of Mathematics, Uppsala University, P. O. Box 480, S-751 06 Uppsala, Sweden.

email: svante.janson@math.uu.se

PHILIPPE CHASSAING

Institut Élie Cartan, Université Henri Poincaré-Nancy I, BP 239, 54506 Vandœuvre-lès-Nancy, France.

email: chassain@iecn.u-nancy.fr

Submitted 31 October 2003, accepted in final form 17 November 2004

AMS 2000 Subject classification: 60K35 (primary), 60J85 (secondary).

Keywords: ISE, Brownian snake, Brownian excursion, center of mass, Wiener index.

Abstract

We derive the distribution of the center of mass S of the integrated superBrownian excursion

(ISE) from the asymptotic distribution of the Wiener index for simple trees. Equivalently, this

is the distribution of the integral of a Brownian snake. A recursion formula for the moments

and asymptotics for moments and tail probabilities are derived.

1 Introduction

The Wiener index $w(G)$ of a connected graph $G$ with set of vertices $V(G)$ is defined as

$$w(G) = \sum_{(u,v) \in V(G)} d(u,v),$$

in which $d(u,v)$ denotes the distance between $u$ and $v$ in the graph.
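As an illustration of the definition, the following minimal Python sketch computes the Wiener index of a small graph by breadth-first search. It assumes that the sum runs over unordered pairs of vertices (with ordered pairs the value would double); the function name `wiener_index` and the adjacency-list representation are choices made for this example only.

```python
from collections import deque


def wiener_index(adj):
    """Sum of graph distances over unordered vertex pairs of a connected graph.

    `adj` maps each vertex to the list of its neighbours (illustrative format).
    """
    total = 0
    for source in adj:
        # Breadth-first search gives the distances from `source`.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
    return total // 2  # every unordered pair was counted twice


# A path and a star, both trees on four vertices.
path4 = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
star4 = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
print(wiener_index(path4), wiener_index(star4))
```

Among trees of a given size, the path spreads its vertices farthest apart and the star keeps them closest, which is visible in the two values printed.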

The ISE (integrated superBrownian excursion) is a random variable, which we shall denote $J$, with values in the set of probability measures on $\mathbb{R}^d$. The ISE was introduced by David Aldous [1] as a universal limit object for random distributions of mass in $\mathbb{R}^d$: for instance, Derbez & Slade [11] proved that the ISE is the limit of lattice trees for $d > 8$.

A motivation for this paper comes from a tight relation established between some models of random geometries, called fluid lattices in quantum geometry [3] or random quadrangulations in combinatorics, and the one-dimensional ISE [6]: let $(Q_n, (b_n, e_n))$ denote the random uniform choice of a quadrangulation $Q_n$ with $n$ faces, and of a marked oriented edge $e_n$ with root vertex $b_n$ in $Q_n$, and set

$$r_n = \max_{x \in V(Q_n)} d(x, b_n), \qquad W_n = \sum_{x \in V(Q_n)} d(x, b_n),$$

$r_n$ and $W_n$ being the radius and the total path length of $Q_n$,¹ respectively. As a consequence of [6], $\left(n^{-1/4} r_n,\ n^{-5/4} W_n\right)$ converges weakly to $(8/9)^{1/4} \cdot (R - L, S - L)$, in which

$$S = \int x \, J(dx)$$

stands for the center of mass of the ISE, while, in the case d = 1, [L, R] denotes the support

of J . In [8], a modified Laplace transform is given, that determines the joint law of (R, L).

As a consequence, the first two moments of R − L are derived, and this is pretty much all the

information known about the joint law of (R, L, S), to our knowledge. In this paper, we prove

that

Theorem 1.1. For $k \ge 0$,

$$E\left[S^{2k}\right] = \frac{(2k)!\,\sqrt{\pi}}{2^{(9k-4)/2}\,\Gamma((5k-1)/2)}\, a_k, \tag{1}$$

in which $a_k$ is defined by $a_1 = 1$, and, for $k \ge 2$,

$$a_k = 2(5k-4)(5k-6)\, a_{k-1} + \sum_{i=1}^{k-1} a_i a_{k-i}. \tag{2}$$
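The recursion (2) and formula (1) are straightforward to evaluate numerically. The following Python sketch is an illustration only; the helper names `a_sequence` and `even_moment_S` are introduced here and are not part of the paper. It computes the integers $a_k$ exactly and the even moments of $S$ in floating point, for $k \ge 1$.

```python
from math import factorial, gamma, pi, sqrt


def a_sequence(n):
    """Return [None, a_1, ..., a_n] from recursion (2), as exact integers."""
    a = [None, 1]
    for k in range(2, n + 1):
        conv = sum(a[i] * a[k - i] for i in range(1, k))
        a.append(2 * (5 * k - 4) * (5 * k - 6) * a[k - 1] + conv)
    return a


def even_moment_S(k, a):
    """E[S^(2k)] from formula (1), in floating point (k >= 1)."""
    return (factorial(2 * k) * sqrt(pi) * a[k]
            / (2 ** ((9 * k - 4) / 2) * gamma((5 * k - 1) / 2)))


a = a_sequence(5)
print(a[1:4])                # first coefficients given by recursion (2)
print(even_moment_S(1, a))   # second moment of S
print(even_moment_S(2, a))   # fourth moment of S
```

The two printed moments can be compared with the closed-form values of $E[S^2]$ and $E[S^4]$ quoted in Section 3.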

Since S has a symmetric distribution, the odd moments vanish. Also, we have the following

asymptotics for the moments.

Theorem 1.2. For some constant $\beta = 0.981038\ldots$ we have, as $k \to \infty$,

$$E\left[S^{2k}\right] \sim \frac{2\pi^{3/2}\beta}{5}\,(2k)^{1/2}\left(10e^3\right)^{-2k/4}(2k)^{\frac{3}{4}\cdot 2k}. \tag{3}$$

As a consequence, Carleman’s condition holds, and the distribution of S is uniquely determined

by its moments.

In Section 2, we recall the description of $J$ in terms of the Brownian snake, following [14, Ch. IV.6], and we derive a distributional identity for $S$ in terms of the statistic

$$\eta = 4 \iint_{0 \le s < t \le 1} \min_{s \le u \le t} e(u) \, ds \, dt \tag{4}$$

of the normalized Brownian excursion $(e(t))_{0 \le t \le 1}$. In [12], the joint moments of $(\eta, \xi)$, in which

$$\xi = 2 \int_0^1 e(t) \, dt, \tag{5}$$

are computed with the help of explicit formulas for the joint moments of the Wiener index

and the total path length of random binary trees. In Section 3, we use the results of [12] to

derive Theorems 1.1 and 1.2. As a byproduct we also obtain that

Theorem 1.3. We have, as $k \to \infty$,

$$E\left[\eta^k\right] \sim \frac{2\pi^{3/2}\beta}{5}\, k^{1/2} (5e)^{-k/2} k^{k/2}. \tag{6}$$

Finally, Section 4 deals with tail estimates for $S$.

¹ Incidentally, $n E[W_n \mid Q_n]/2$ is the Wiener index of $Q_n$.

2 The ISE and the Brownian snake

For the convenience of non-specialists, we briefly recall the description of $J$ in terms of the Brownian snake, from [14, Ch. IV.6] (see also [5, 10, 16], and the survey [17]). The ISE can be seen as the limit of a suitably renormalized spatial branching process (cf. [6, 15]), or equivalently, as an embedding of the continuum random tree (CRT) in $\mathbb{R}^d$.

As for the Brownian snake, it can be seen as a description of a "continuous" population $T$, through its genealogical tree and the positions of its members. Given the lifetime process $\zeta = (\zeta(s))_{s \in T}$ of the Brownian snake, a stochastic process with values in $[0, +\infty)$, the Brownian snake with lifetime $\zeta$ is a family

$$W = \left(W_s(t)\right)_{0 \le s \le 1,\ 0 \le t \le \zeta(s)}$$

of stochastic processes $W_s(\cdot)$ with respective lifetimes $\zeta(s)$. The lifetime $\zeta$ describes the genealogical tree of the population $T$, and $W$ describes the spatial motions of the members of $T$. A member of the population is encoded by the time $s$ at which it is visited by the contour traversal of the genealogical tree, $\zeta(s)$ being the height of member $s \in T$ in the genealogical tree ($\zeta(t)$ can be seen as the "generation" $t$ belongs to, or the time when $t$ is living). Let

$$C(s,t) = \min_{s \le u \le t} \zeta(u), \qquad s \wedge t = \operatorname*{argmin}_{s \le u \le t} \zeta(u).$$

Due to the properties of the contour traversal of a tree, any element of $s \wedge t$ is a label for the most recent common ancestor of $s$ and $t$, and the distance between $s$ and $t$ in the genealogical tree is

$$d(s,t) = \zeta(s) + \zeta(t) - 2C(s,t).$$
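The distance formula can be tried out on a discrete analogue of the contour process. In the Python sketch below, the list `zeta` holds the successive heights met by the contour traversal of a small finite tree; this discrete setting and the function name `tree_distance` are assumptions made for illustration, not the continuous objects of the paper.

```python
def tree_distance(zeta, s, t):
    """d(s, t) = zeta(s) + zeta(t) - 2 * min_{s <= u <= t} zeta(u)."""
    lo, hi = min(s, t), max(s, t)
    c = min(zeta[lo:hi + 1])  # C(s, t): height of the most recent common ancestor
    return zeta[s] + zeta[t] - 2 * c


# Contour heights of a rooted tree: the root has two children A and B,
# and A has one child of its own. Visits: root, A, child of A, A, root, B, root.
zeta = [0, 1, 2, 1, 0, 1, 0]

print(tree_distance(zeta, 2, 5))  # from the child of A to B, through the root
print(tree_distance(zeta, 1, 3))  # two labels of the same vertex A
```

Labels 1 and 3 both correspond to the vertex A, which is why their distance vanishes, in line with the remark on multiple labels that follows.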

If it is not a leaf of the tree, a member of the population is visited several times (k + 1 times

if it has k sons), so it has several labels: s and t are two labels of the same member of the

population if d(s, t) = 0, or equivalently if s ∧ t ⊃ {s, t}. Finally, s is an ancestor of t iff

$s \in s \wedge t$. In this interpretation, $W_s(u)$ is the position of the ancestor of $s$ living at time $u$, and

$$\widehat{W}_s = W_s(\zeta(s)), \qquad s \in T,$$

is the position of $s$. Before time $m = C(s_1, s_2)$, $s_1$ and $s_2$ share the same ancestor, entailing that

$$\left(W_{s_1}(t)\right)_{0 \le t \le m} = \left(W_{s_2}(t)\right)_{0 \le t \le m}. \tag{7}$$

Obviously there is some redundancy in this description: it turns out that the full Brownian snake can be recovered from the pair $\left(\widehat{W}_s, \zeta(s)\right)_{0 \le s \le 1}$ (see [15] for a complete discussion of this).

In the general setting [14, Ch. IV], the spatial motion of a member of the population is any Markov process with càdlàg paths. In the special case of the ISE, this spatial motion is a $d$-dimensional Brownian motion:

a) for all $0 \le s \le 1$, $t \mapsto W_s(t)$ is a standard linear Brownian motion started at 0, defined for $0 \le t \le \zeta(s)$;

b) conditionally, given $\zeta$, the mapping $s \mapsto W_s(\cdot)$ is a path-valued Markov process with transition function defined as follows: for $s_1 < s_2$, conditionally given $W_{s_1}(\cdot)$, $\left(W_{s_2}(m+t)\right)_{0 \le t \le \zeta(s_2) - m}$ is a standard Brownian motion starting from $W_{s_1}(m)$, independent of $W_{s_1}(\cdot)$.

The lifetime ζ is usually a reflected linear Brownian motion [14], defined on T = [0, +∞).

However, in the case of the ISE,

ζ = 2e,

in which e denotes the normalized Brownian excursion, or 3-dimensional Bessel bridge, defined

on T = [0, 1]. With this choice of ζ, the genealogical tree is the CRT (see [1]), and the Brownian

snake can be seen as an embedding of the CRT in $\mathbb{R}^d$. We can now give the definition of the

ISE in terms of the Brownian snake with lifetime 2e [14, Ch. IV.6]:

Definition 2.1. The ISE $J$ is the occupation measure of $\widehat{W}$.

Recall that the occupation measure $J$ of a process $\widehat{W}$ is defined by the relation

$$\int_{\mathbb{R}} f(x) \, J(dx) = \int_0^1 f\left(\widehat{W}_s\right) ds, \tag{8}$$

holding for any measurable test function $f$.

Remark 2.2. It might seem more natural to consider the Brownian snake with lifetime $e$ instead of $2e$, but we follow the normalization in Aldous [1]. If we used the Brownian snake with lifetime $e$ instead, $W$, $J$ and $S$ would be scaled by $1/\sqrt{2}$.

Based on the short account on the Brownian snake given in this Section, we obtain:

Theorem 2.3. Let $N$ be a standard Gaussian random variable, independent of $\eta$. Then

$$S \overset{\text{law}}{=} \sqrt{\eta}\, N.$$

Proof. Specializing (8) to $f(x) = x$, we obtain a representation of $S$:

$$S = \int_0^1 \widehat{W}_s \, ds,$$

which is the starting point of our proof. We have also, directly from the definition of the Brownian snake,

Proposition 2.4. Conditionally, given $e$, $\left(\widehat{W}_s\right)_{0 \le s \le 1}$ is a Gaussian process whose covariance is $C(s,t) = 2 \min_{s \le u \le t} e(u)$, $s \le t$.

Proof. With the notation $\zeta = 2e$ and $m = C(s_1, s_2) = 2 \min_{s_1 \le u \le s_2} e(u)$, we have, conditionally, given $e$, for $s_1 \le s_2$,

$$\begin{aligned}
\operatorname{Cov}\left(\widehat{W}_{s_1}, \widehat{W}_{s_2}\right) &= \operatorname{Cov}\left(W_{s_1}(\zeta(s_1)),\ W_{s_2}(\zeta(s_2)) - W_{s_2}(m) + W_{s_2}(m)\right) \\
&= \operatorname{Cov}\left(W_{s_1}(\zeta(s_1)),\ W_{s_2}(m)\right) \\
&= \operatorname{Cov}\left(W_{s_1}(\zeta(s_1)),\ W_{s_1}(m)\right) \\
&= m,
\end{aligned}$$

in which b) yields the second equality, (7) yields the third one, and a) yields the fourth equality.

As a consequence of Proposition 2.4, conditionally given $e$, $S$ is centered Gaussian with variance

$$\int_{[0,1]^2} C(s,t) \, ds \, dt = \eta.$$

This last statement is equivalent to Theorem 2.3.
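The conditional variance above is a double integral of a running minimum, which is easy to approximate on a grid. The following Python sketch is a rough illustration under stated assumptions: the path is given by its values at the midpoints of $n$ equal cells, the integral is replaced by a Riemann sum, and the names `eta_functional` and `sample_S_given_e` are introduced here. The test path is a deterministic tent function, not a sample of the Brownian excursion.

```python
import math
import random


def eta_functional(e_values):
    """Riemann-sum approximation of the integral over [0,1]^2 of 2 * min_{[s,t]} e.

    e_values[i] is the path sampled at the midpoint of the i-th of n cells.
    """
    n = len(e_values)
    total = 0.0
    for i in range(n):
        running_min = e_values[i]
        total += running_min              # diagonal cell (s and t in the same cell)
        for j in range(i + 1, n):
            running_min = min(running_min, e_values[j])
            total += 2 * running_min      # cells (i, j) and (j, i)
    return 2 * total / n ** 2


def sample_S_given_e(e_values, rng=random):
    """Draw from the conditional law of S given the path: sqrt(eta) times a Gaussian."""
    return math.sqrt(eta_functional(e_values)) * rng.gauss(0.0, 1.0)


n = 400
tent = [min((i + 0.5) / n, 1 - (i + 0.5) / n) for i in range(n)]
print(eta_functional(tent))
```

For the tent function the minimum over $[s,t]$ is attained at an endpoint, so the double integral can be computed by hand and equals $1/3$; the printed value should be close to that.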

Remark 2.5. The d-dimensional analog of Theorem 2.3 is also true, by the same proof.

3 The moments

Proof of Theorem 1.1. From Theorem 2.3, we derive at once that

$$E\left[S^{2k}\right] = E\left[\eta^k\right] E\left[N^{2k}\right].$$

As a special case of [12, Theorem 3.3] (where $a_k$ is denoted $\omega^*_{0k}$),

$$E\left[\eta^k\right] = \frac{k!\,\sqrt{\pi}}{2^{(7k-4)/2}\,\Gamma((5k-1)/2)}\, a_k, \tag{9}$$

and the result follows since $E\left[N^{2k}\right] = (2k)!/(2^k k!)$.

In particular, see again [12, Theorem 3.3],

$$E\left[S^2\right] = E[\eta] = \sqrt{\pi/8}, \qquad E\left[S^4\right] = 3E\left[\eta^2\right] = 7/5,$$

as computed by Aldous [1] by a different method. (Aldous’ method extends to higher moments

too, but the calculations quickly become complicated.) The asymptotics for the moments are

obtained as follows.

Proof of Theorems 1.2 and 1.3. It suffices to prove that

$$a_k \sim \beta\, 50^{k-1} (k-1)!^2. \tag{10}$$

Set

$$b_k = \frac{a_k}{50^{k-1} (k-1)!^2}.$$

We have $b_1 = a_1 = 1$ and, from (2), $b_2 = b_3 = 49/50 = 0.98$. For $k \ge 4$, (2) translates to

$$\begin{aligned}
b_k &= \left(1 - \frac{1}{25(k-1)^2}\right) b_{k-1} + \frac{\sum_{i=1}^{k-1} a_i a_{k-i}}{50^{k-1}(k-1)!^2} \\
&= b_{k-1} + \frac{\sum_{i=2}^{k-2} a_i a_{k-i}}{50^{k-1}(k-1)!^2}.
\end{aligned}$$

Thus $b_k$ increases for $k \ge 3$. We will show that $b_k < 1$ for all $k > 1$; thus $\beta = \lim_{k\to\infty} b_k$ exists and (10) follows. Stirling's formula and (1), (9) then yield (6) and (3).

More precisely, we show by induction that for $k \ge 3$,

$$b_k \le 1 - \frac{1}{25(k-1)}.$$

This holds for $k = 3$ and $k = 4$. For $k \ge 5$ we have by the induction assumption $b_j \le 1$ for $1 \le j < k$ and thus

$$\begin{aligned}
s_k = \frac{\sum_{i=2}^{k-2} a_i a_{k-i}}{50^{k-1}(k-1)!^2}
&= \frac{1}{50} \sum_{i=2}^{k-2} \frac{(i-1)!^2 (k-i-1)!^2}{(k-1)!^2}\, b_i b_{k-i} \\
&\le \frac{1}{50(k-1)^2(k-2)^2} \left(2 + (k-5)\frac{4}{(k-3)^2}\right) \\
&\le \frac{1}{25(k-1)(k-2)^2(k-3)}.
\end{aligned} \tag{11}$$

The induction follows. Moreover, it follows easily from (11) that $b_k < \beta < b_k + \frac{1}{75}(k-2)^{-3}$.

To obtain the numerical value of $\beta$, we write, somewhat more sharply, where $0 \le \theta \le 1$,

$$s_k = \frac{1}{25(k-1)^2(k-2)^2}\, b_2 b_{k-2} + \frac{4}{25(k-1)^2(k-2)^2(k-3)^2}\, b_3 b_{k-3} + \theta (k-7) \frac{36}{25(k-1)^2(k-2)^2(k-3)^2(k-4)^2}$$

and sum over $k > n$ for $n = 10$, say, using $b_{n-1} < b_{k-2} < \beta$ and $b_{n-2} < b_{k-3} < \beta$ for $k > n$. It follows (with Maple) by this and exact computation of $b_1, \ldots, b_{10}$ that $0.981038 < \beta < 0.9810385$; we omit the details.
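The numerical value of $\beta$ can also be approached by brute force. The Python sketch below does not reproduce the Maple computation mentioned above; as an alternative, it evaluates $b_k$ exactly with rational arithmetic up to a moderately large index $n$ and uses the enclosure $b_n < \beta < b_n + \frac{1}{75}(n-2)^{-3}$. The function name `b_sequence` and the choice $n = 200$ are assumptions of this example.

```python
from fractions import Fraction
from math import factorial


def b_sequence(n):
    """Return [None, b_1, ..., b_n] with b_k = a_k / (50^(k-1) (k-1)!^2), exactly."""
    a = [None, 1]
    for k in range(2, n + 1):
        conv = sum(a[i] * a[k - i] for i in range(1, k))
        a.append(2 * (5 * k - 4) * (5 * k - 6) * a[k - 1] + conv)
    return [None] + [Fraction(a[k], 50 ** (k - 1) * factorial(k - 1) ** 2)
                     for k in range(1, n + 1)]


n = 200
b = b_sequence(n)
lower = float(b[n])                      # b_n is a lower bound for beta
upper = lower + 1 / (75 * (n - 2) ** 3)  # upper bound from the enclosure above
print(b[2], b[3])
print(lower, upper)
```

Working with exact integers avoids any overflow in $50^{k-1}(k-1)!^2$; only the final conversion to floating point is approximate.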

Remark 3.1. For comparison, we give the corresponding result for $\xi$ defined in (5). There is a simple relation, discovered by Spencer [18] and Aldous [2], between its moments and Wright's constants in the enumeration of connected graphs with $n$ vertices and $n + k$ edges [19], and the well-known asymptotics of the latter lead, see [12, Theorem 3.3 and (3.8)], to

$$E\left[\xi^k\right] \sim 3\sqrt{2}\, k\, (3e)^{-k/2} k^{k/2}. \tag{12}$$

4 Moment generating functions and tail estimates

The moment asymptotics yield asymptotics for the moment generating function $E\left[e^{tS}\right]$ and the tail probabilities $P(S > t)$ as $t \to \infty$. For completeness and comparison, we also include corresponding results for $\eta$. We begin with a standard estimate.

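Lemma 4.1.

(i) If $\gamma > 0$ and $b \in \mathbb{R}$, then, as $x \to \infty$,

$$\sum_{k=1}^{\infty} k^b k^{-\gamma k} x^k \sim \left(\frac{2\pi}{\gamma}\right)^{1/2} \left(e^{-\gamma} x\right)^{(b+1/2)/\gamma} e^{\gamma (e^{-\gamma} x)^{1/\gamma}}.$$

(ii) If $-\infty < \gamma < 1$ and $b \in \mathbb{R}$, then, as $x \to \infty$,

$$\sum_{k=1}^{\infty} \frac{k^b k^{\gamma k} x^k}{k!} \sim (1-\gamma)^{-1/2} \left(e^{\gamma} x\right)^{b/(1-\gamma)} e^{(1-\gamma)(e^{\gamma} x)^{1/(1-\gamma)}}.$$

The sums over even $k$ only are asymptotic to half the full sums.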

Sketch of proof. (i). This is standard, but since we have not found a precise reference, we sketch the argument. Write $k^b k^{-\gamma k} x^k = e^{f(k)} = k^b e^{g(k)}$, where $g(y) = -\gamma y \ln y + y \ln x$ and $f(y) = g(y) + b \ln y$. The function $g$ is concave with a maximum at $y_0 = y_0(x) = e^{-1} x^{1/\gamma}$. A Taylor expansion yields

$$\begin{aligned}
y_0^{-1/2} e^{-f(y_0)} \sum_{k=1}^{\infty} k^b k^{-\gamma k} x^k
&= y_0^{-1/2} \int_0^{\infty} e^{f(\lceil y \rceil) - f(y_0)} \, dy \\
&= \int_{-y_0^{1/2}}^{\infty} e^{f(\lceil y_0 + s y_0^{1/2} \rceil) - f(y_0)} \, ds \\
&\to \int_{-\infty}^{\infty} e^{-\gamma s^2/2} \, ds = \left(\frac{2\pi}{\gamma}\right)^{1/2},
\end{aligned}$$

in which $\lceil y \rceil$ denotes the smallest integer larger than, or equal to, $y$.

(ii). Follows by (i) and Stirling's formula.
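Part (i) of the lemma can be checked numerically for moderate values of $x$. The Python sketch below is illustrative only: the function name `lemma_ratio` and the truncation point of the series are choices made here. It sums the series in log space and divides by the claimed asymptotic expression, so that the result should approach 1 as $x$ grows.

```python
import math


def lemma_ratio(b, gamma_, x):
    """Ratio of sum_{k>=1} k^b k^(-gamma k) x^k to the asymptotic form of Lemma 4.1(i)."""
    y0 = (math.exp(-gamma_) * x) ** (1 / gamma_)   # location of the maximum of g
    log_asym = (0.5 * math.log(2 * math.pi / gamma_)
                + (b + 0.5) * math.log(y0) + gamma_ * y0)
    k_max = int(20 * y0) + 100                     # later terms are negligible
    total = 0.0
    for k in range(1, k_max + 1):
        log_term = (b - gamma_ * k) * math.log(k) + k * math.log(x)
        total += math.exp(log_term - log_asym)     # each term scaled by the asymptotic form
    return total


print(lemma_ratio(0.0, 1.0, 200.0))
print(lemma_ratio(0.5, 0.5, 10.0))
```

Dividing term by term in log space keeps every exponential in floating-point range, even though the individual terms of the series are very large.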

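Combining Lemma 4.1 with the moment asymptotics of Theorems 1.2 and 1.3, we find the following asymptotics for $E\left[e^{t\eta}\right] = \sum_k E\left[\eta^k\right] t^k/k!$ and $E\left[e^{tS}\right] = \sum_{k \text{ even}} E\left[S^k\right] t^k/k!$.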

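Theorem 4.2. As $t \to \infty$,

$$E\left[e^{t\eta}\right] \sim \frac{(2\pi)^{3/2}\beta}{5^{3/2}}\, t\, e^{t^2/10}, \tag{13}$$

$$E\left[e^{tS}\right] \sim \frac{2^{1/2}\pi^{3/2}\beta}{5^{3/2}}\, t^2 e^{t^4/40}. \tag{14}$$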

Proof. For $\eta$ we take $b = 1/2$, $\gamma = 1/2$, $x = (5e)^{-1/2} t$ in Lemma 4.1(ii); for $S$ we take $b = 1/2$, $\gamma = 3/4$, $x = (10e^3)^{-1/4} t$.

The standard argument with Markov’s inequality yields upper bounds for the tail probabilities

from Theorem 1.2 or 4.2.

Theorem 4.3. For some constants $K_1$ and $K_2$ and all $x \ge 1$, say,

$$P(\eta > x) \le K_1\, x \exp\left(-\tfrac{5}{2} x^2\right), \tag{15}$$

$$P(S > x) \le K_2\, x^{2/3} \exp\left(-\tfrac{3}{4}\, 10^{1/3} x^{4/3}\right). \tag{16}$$

Proof. For any even $k$ and $x > 0$, $P(|S| > x) \le x^{-k} E\left[S^k\right]$. We use (3) and optimize the resulting exponent by choosing $k = 10^{1/3} x^{4/3}$, rounded to an even integer. This yields (16); we omit the details. (15) is obtained similarly from (6), using $k = \lfloor 5x^2 \rfloor$.
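The optimization over $k$ in the Markov bound can be carried out numerically with the exact moments of $\eta$. The following Python sketch is an illustration under stated assumptions: the helper names `log_moments_eta` and `markov_log_bound`, the cut-off of 120 moments and the test point $x = 3$ are all choices made here. It evaluates $\log\left(x^{-k} E[\eta^k]\right)$ from (2) and (9) and minimizes over $k$.

```python
from math import factorial, lgamma, log, pi


def log_moments_eta(n):
    """Return [None, log E[eta^1], ..., log E[eta^n]] computed from (2) and (9)."""
    a = [None, 1]
    for k in range(2, n + 1):
        conv = sum(a[i] * a[k - i] for i in range(1, k))
        a.append(2 * (5 * k - 4) * (5 * k - 6) * a[k - 1] + conv)
    out = [None]
    for k in range(1, n + 1):
        out.append(log(factorial(k)) + 0.5 * log(pi) + log(a[k])
                   - 0.5 * (7 * k - 4) * log(2) - lgamma((5 * k - 1) / 2))
    return out


def markov_log_bound(x, log_m):
    """Minimum over k of log(x^(-k) E[eta^k]), together with the minimizing k."""
    return min((log_m[k] - k * log(x), k) for k in range(1, len(log_m)))


x = 3.0
log_m = log_moments_eta(120)
best, k_best = markov_log_bound(x, log_m)
print(k_best, int(5 * x * x))   # numerical optimizer versus the choice floor(5 x^2)
print(best, -2.5 * x * x)       # log of the bound versus the leading exponent in (15)
```

The gap between the two numbers on the last line reflects the polynomial prefactor $K_1 x$ in (15).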

Remark 4.4. The proof of Theorem 4.3 shows that any $K_1 > 2\pi^{3/2}\beta/5^{1/2} \approx 4.9$ and $K_2 > 10^{1/6}\beta\pi^{3/2}/5 \approx 1.6$ will do for large $x$. Alternatively, we could use Theorem 4.2 and $P(S > x) < e^{-tx} E\left[e^{tS}\right]$ for $t > 0$ and so on; this would yield another proof of Theorem 4.3 with somewhat inferior values of $K_1$ and $K_2$.


The bounds obtained in Theorem 4.3 are sharp up to factors 1 + o(1) in the exponent, as

is usually the case for estimates derived by this method. For convenience we state a general

theorem, reformulating results by Davies [7] and Kasahara [13].

Theorem 4.5. Let $X$ be a random variable, let $p > 0$ and let $a$ and $b$ be positive real numbers related by $a = 1/(p e b^p)$ or, equivalently, $b = (pea)^{-1/p}$.

(i) If $X \ge 0$ a.s., then

$$-\ln P(X > x) \sim a x^p \quad \text{as } x \to \infty \tag{17}$$

is equivalent to

$$\left(E X^r\right)^{1/r} \sim b r^{1/p} \quad \text{as } r \to \infty. \tag{18}$$

Here $r$ runs through all positive reals; equivalently, we can restrict $r$ in (18) to integers or even integers.

(ii) If $X$ is a symmetric random variable, then (17) and (18) are equivalent, where $r$ in (18) runs through even integers.

(iii) If $p > 1$, then, for any $X$, (17) is equivalent to

$$\ln\left(E e^{tX}\right) \sim c t^q \quad \text{as } t \to \infty, \tag{19}$$

where $1/p + 1/q = 1$ and $c = q^{-1}(pa)^{-(q-1)} = q^{-1} e^{q-1} b^q$. (This can also be written in the more symmetric form $(pa)^q (qc)^p = 1$.) Hence, if $X \ge 0$ a.s., or if $X$ is symmetric and $r$ restricted to even integers, (19) is also equivalent to (18).

Proof. For $X \ge 0$, (i) and (iii) are immediate special cases of more general results by Kasahara [13, Theorem 4 and Theorem 2, Corollary 1], see also [4, Theorem 4.12.7]; the difficult implications (18) $\Longrightarrow$ (17) and (19) $\Longrightarrow$ (17) were earlier proved by Davies [7] (for $p > 1$, which implies the general case of (i) by considering a power of $X$). Moreover, (19) $\Longrightarrow$ (17) follows also from the Gärtner–Ellis theorem [9, Theorem 2.3.6] applied to $n^{-1/p} X$. Note that, assuming $X \ge 0$, $(E X^r)^{1/r}$ is increasing in $r > 0$, which implies that (18) for (even) integers is equivalent to (18) for all real $r$.

(ii) follows from (i) applied to $|X|$, and (iii) for general $X$ follows by considering $\max(X, 0)$.

We thus obtain from Theorem 1.2 or 4.2 the following estimates, less precise than Theorem 4.3

but including both upper and lower bounds.

Theorem 4.6. As $x \to \infty$,

$$\ln\left(P(\eta > x)\right) \sim -\frac{5}{2} x^2, \tag{20}$$

$$\ln\left(P(S > x)\right) \sim -\frac{3}{4}\, 10^{1/3} x^{4/3}. \tag{21}$$

Proof. For $\eta$ we use Theorem 4.5 with $p = q = 2$, $b = (5e)^{-1/2}$, $a = 5/2$ and $c = 1/10$. For $S$ we have $p = 4/3$, $q = 4$, $b = (10e^3)^{-1/4}$, $a = 3 \cdot 10^{1/3}/4$ and $c = 1/40$.
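The constants in this proof follow mechanically from the relations $a = 1/(peb^p)$, $1/p + 1/q = 1$ and $c = q^{-1}(pa)^{-(q-1)}$ of Theorem 4.5. The short Python sketch below recomputes them; the function name `tail_constants` is introduced here for illustration and assumes $p > 1$.

```python
from math import e, isclose


def tail_constants(p, b):
    """Constants (a, q, c) of Theorem 4.5 from the moment growth rate b * r^(1/p)."""
    a = 1 / (p * e * b ** p)
    q = p / (p - 1)                 # conjugate exponent, requires p > 1
    c = (p * a) ** (-(q - 1)) / q
    return a, q, c


# eta: moments grow like (5e)^(-1/2) r^(1/2); S: like (10 e^3)^(-1/4) r^(3/4).
a_eta, q_eta, c_eta = tail_constants(2, (5 * e) ** -0.5)
a_S, q_S, c_S = tail_constants(4 / 3, (10 * e ** 3) ** -0.25)
print(a_eta, q_eta, c_eta)
print(a_S, q_S, c_S)
```

The same function applied to $p = 2$ and $b = (3e)^{-1/2}$ gives the constants used for $\xi$ in Remark 4.9.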

Remark 4.7. (20) can also be proved using the representation (4) and large deviation theory

for Brownian excursions, cf. [9, §5.2] and [10]. The details may perhaps appear elsewhere.


Remark 4.8. (21) can be compared to the tail estimates in [1] for the density function of $\widehat{W}_U$, the value of the Brownian snake at a random point $U$, which in particular gives

$$\ln\left(P\left(\widehat{W}_U > x\right)\right) \sim -3 \cdot 2^{-5/3} x^{4/3}.$$

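Remark 4.9. For $\xi$ we can by (12) use Theorem 4.5 with $p = q = 2$, $b = (3e)^{-1/2}$, $a = 3/2$ and $c = 1/6$. In [12], the variable of main interest is neither $\xi$ nor $\eta$ but $\zeta = \xi - \eta$. By Minkowski's inequality $E\left[\xi^k\right]^{1/k} \le E\left[\zeta^k\right]^{1/k} + E\left[\eta^k\right]^{1/k}$ and (6), (12), it follows that

$$\frac{1}{\sqrt{3e}} - \frac{1}{\sqrt{5e}} \le \liminf_{k\to\infty} \frac{\left(E\left[\zeta^k\right]\right)^{1/k}}{k^{1/2}} \le \limsup_{k\to\infty} \frac{\left(E\left[\zeta^k\right]\right)^{1/k}}{k^{1/2}} \le \frac{1}{\sqrt{3e}}.$$

This leads to asymptotic upper and lower bounds for $\ln(P(\zeta > x))$ too by [7] or [13]. We can show that $\lim_{k\to\infty} k^{-1/2} \left(E\left[\zeta^k\right]\right)^{1/k}$ and $\lim_{x\to\infty} x^{-2} \ln(P(\zeta > x))$ exist, but do not know their value.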

Acknowledgement. We thank Jim Fill and anonymous referees for helpful comments.

References

[1] D. Aldous. Tree-based models for random distribution of mass. Journal of Statistical

Physics 73 (1993), 625-641.

[2] D. Aldous. Brownian excursions, critical random graphs and the multiplicative coalescent.

Ann. Probab. 25 (1997), no. 2, 812–854.

[3] J. Ambjørn, B. Durhuus and T. Jónsson. Quantum gravity, a statistical field theory

approach. Cambridge Monographs on Mathematical Physics, 1997.

[4] N. H. Bingham, C. M. Goldie and J. L. Teugels. Regular variation. First edition. Encyclopedia of Mathematics and its Applications, 27. Cambridge University Press, Cambridge,

1987.

[5] C. Borgs, J. Chayes, R. van der Hofstad and G. Slade. Mean-field lattice trees. On

combinatorics and statistical mechanics. Ann. Comb. 3 (1999), no. 2–4, 205–221.

[6] P. Chassaing and G. Schaeffer. Random Planar Lattices and Integrated SuperBrownian

Excursion. Probab. Theory Related Fields, 128 (2004), no. 2, 161–212.

[7] L. Davies. Tail probabilities for positive random variables with entire characteristic functions of very regular growth. Z. Angew. Math. Mech. 56 (1976), no. 3, T334–T336.

[8] J. F. Delmas. Computation of moments for the length of the one dimensional ISE support.

Electron. J. Probab. 8 (2003), 15 pp.

[9] A. Dembo and O. Zeitouni. Large deviations techniques and applications. Jones and

Bartlett Publishers, Boston, MA, 1993.

[10] A. Dembo and O. Zeitouni. Large deviations for random distribution of mass. Random

discrete structures (Minneapolis, MN, 1993), 45–53, IMA Vol. Math. Appl., 76, Springer,

New York, 1996.


[11] E. Derbez and G. Slade. The scaling limit of lattice trees in high dimensions. Commun.

Math. Phys. 193 (1998), 69–104.

[12] S. Janson. The Wiener index of simply generated random trees. Random Struct. Alg. 22

(2003), no. 4, 337–358.

[13] Y. Kasahara. Tauberian theorems of exponential type. J. Math. Kyoto Univ. 18 (1978),

no. 2, 209–219.

[14] J.-F. Le Gall. Spatial branching processes, random snakes and partial differential equations. Lectures in Mathematics ETH Zürich, Birkhäuser Verlag, Basel, 1999.

[15] J.-F. Marckert and A. Mokkadem. States spaces of the snake and of its tour – Convergence

of the discrete snake. J. Theoret. Probab., 16 (2003), no. 4, 1015–1046.

[16] L. Serlet. A large deviation principle for the Brownian snake. Stochastic Process. Appl.

67 (1997), no. 1, 101–115.

[17] G. Slade. Scaling limits and Super-Brownian motion. Notices of the AMS 49 (2002), no.

9, 1056–1067.

[18] J. Spencer. Enumerating graphs and Brownian motion. Commun. Pure Appl. Math. 50

(1997), 291–294.

[19] E.M. Wright. The number of connected sparsely edged graphs. J. Graph Th. 1 (1977),

317–330.
