J P E n a l

advertisement

J

Electr

on

i

o

u

rn

al

o

f

P

c

r

o

ba

bility

Vol. 14 (2009), Paper no. 5, pages 87–118.

Journal URL

http://www.math.washington.edu/~ejpecp/

On percolation in random graphs with given vertex

degrees

Svante Janson

Department of Mathematics

Uppsala University

PO Box 480

SE-751 06 Uppsala

Sweden

svante.janson@math.uu.se

http://www.math.uu.se/~svante/

Abstract

We study the random graph obtained by random deletion of vertices or edges from a random

graph with given vertex degrees. A simple trick of exploding vertices instead of deleting them,

enables us to derive results from known results for random graphs with given vertex degrees.

This is used to study existence of giant component and existence of k-core. As a variation of the

latter, we study also bootstrap percolation in random regular graphs.

We obtain both simple new proofs of known results and new results. An interesting feature is

that for some degree sequences, there are several or even infinitely many phase transitions for

the k-core.

Key words: random graph, giant component, k-core, bootstrap percolation.

AMS 2000 Subject Classification: Primary 60C05; 05C80.

Submitted to EJP on April 10, 2008, final version accepted December 17, 2008.

87

1 Introduction

One popular and important type of random graph is given by the uniformly distributed random

graph with a given degree sequence, defined as follows. Let n ∈ N and let d = (di )1n be a sequence of

non-negative integers. We let G(n, d) be a random graph with degree sequence d, uniformly

P chosen

among all possibilities (tacitly assuming that there is any such graph at all; in particular, i di has

to be even).

It is well-known that it is often simpler to study the corresponding random

multigraph G ∗ (n, d) with

P

n

given degree sequence d = (di )1 , defined for every sequence d with i di even by the configuration

model (see e.g. Bollobás [3]): take a set of di half-edges for each vertex i, and combine the halfedges into pairs by a uniformly random matching of the set of all half-edges (this pairing is called a

configuration); each pair of half-edges is then joined to form an edge of G ∗ (n, d).

We consider asymptotics as the numbers of vertices tend to infinity, and thus we assume throughout

P (n)

(n)

the paper that we are given, for each n, a sequence d(n) = (di )1n with i di even. (As usual,

we could somewhat more generally assume that we are given a sequence nν → ∞ and for each ν

(ν) n

a sequence d(ν) = (di )1ν .) For notational simplicity we will usually not show the dependency on

n explicitly; we thus write d and di , and similarly for other (deterministic or random) quantities

introduced below. All unspecified limits and other asymptotic statements are for n → ∞. For

p

example, w.h.p. (with high probability) means ’with probability tending to 1 as n → ∞’, and −→

means ’convergence in probability as n → ∞’. Similarly, we use o p and Op in the standard way,

always implying n → ∞. For example, if X is a parameter of the random graph, X = o p (n) means

p

that P(X > ǫn) → 0 as n → ∞ for every ǫ > 0; equivalently, X /n −→ 0.

We may obtain G(n, d) by conditioning the multigraph G ∗ (n, d) on being a (simple) graph, i.e., on

not having any multiple edges or loops. By Janson [9] (with earlier partial results by many authors),

!

n

n

X

X

2

∗

di .

(1.1)

di = O

lim inf P G (n, d) is simple > 0 ⇐⇒

i=1

i=1

In this case, many results transfer immediately from G ∗ (n, d) to G(n, d), for example, every result of

the type P(En ) → 0 for some events En , and thus every result saying that some parameter converges

in probability to some non-random value. This includes every result in the present paper.

We will in this paper study the random multigraph G ∗ (n, d); the reader can think of doing this

either for its own sake or as a tool for studying G(n, d). We leave the statement of corollaries for

G(n, d), using (1.1), to the reader. Moreover, the results for G(n, d) extend to some other random

graph models too, in particular G(n, p) with p ∼ λ/n and G(n, m) with m ∼ λn/2 with λ > 0, by

the standard device of conditioning on the degree sequence; again we omit the details and refer to

[10; 11; 12] where this method is used.

We will consider percolation of these random (multi)graphs, where we first generate a random

graph G ∗ (n, d) and then delete either vertices or edges at random. (From now on, we simply write

’graph’ for ’multigraph’.) The methods below can be combined to treat the case of random deletion

of both vertices and edges, which is studied by other methods in e.g. Britton, Janson and Martin-Löf

[4], but we leave this to the reader.

To be precise, we consider the following two constructions, given any graph G and a probability

π ∈ [0, 1].

88

Site percolation Randomly delete each vertex (together with all incident edges) with probability

1 − π, independently of all other vertices. We denote the resulting random graph by Gπ,v .

Bond percolation Randomly delete each edge with probability 1 − π, independently of all other

edges. (All vertices are left.) We denote the resulting random graph by Gπ,e .

Thus π denotes the probability to be kept in the percolation model. When, as in our case, the

original graph G itself is random, it is further assumed that we first sample G and then proceed as

above, conditionally on G.

The cases π = 0, 1 are trivial: G1,v = G1,e = G, while G0,v = ;, the null graph with no vertices and no

edges, and G0,e is the empty graph with the same vertex set as G but no edges. We will thus mainly

consider 0 < π < 1.

We may generalize the site percolation model by letting the probability depend on the degree of the

vertex. Thus, if π = (πd )∞

0 is a given sequence of probabilities πd ∈ [0, 1], let Gπ,v be the random

graph obtained by deleting vertices independently of each other, with vertex v ∈ G deleted with

probability 1 − πd(v) where d(v) is the degree of v in G.

For simplicity and in order to concentrate on the main ideas, we will in this paper consider only the

case when the probability π (or the sequence π) is fixed and thus does not depend on n, with the

exception of a few remarks where we briefly indicate how the method can be used also for a more

detailed study of thresholds.

The present paper is inspired by Fountoulakis [7], and we follow his idea of deriving results for the

percolation models G ∗ (n, d)π,v and G ∗ (n, d)π,e from results for the model G ∗ (n, d) without deletions,

but for different degree sequences d. We will, however, use another method to do this, which we

find simpler.

Fountoulakis [7] shows that for both site and bond percolation on G ∗ (n, d), if we condition the

resulting random graph on its degree sequence d′ , and let n′ be the number of its vertices, then the

graph has the distribution of G ∗ (n′ , d′ ), the random graph with this degree sequence constructed by

the configuration model. He then proceeds to calculate the distributions of the degree sequence d′

for the two percolation models and finally applies known results to G ∗ (n′ , d′ ).

Our method is a version of this, where we do the deletions in two steps. For site percolation, instead

of deleting a vertex, let us first explode it by replacing it by d new vertices of degree 1, where d

is its degree; we further colour the new vertices red. Then clean up by removing all red vertices.

Note that the (random) explosions change the number of vertices, but not the number of half-edges.

Moreover, given the set of explosions, there is a one-to-one correspondence between configurations

before and after the explosions, and thus, if we condition on the new degree sequence, the exploded

graph is still described by the configuration model. Furthermore, by symmetry, when removing the

red vertices, all vertices of degree 1 are equivalent, so we may just as well remove the right number

of vertices of degree 1, but choose them uniformly at random. Hence, we can obtain G ∗ (n, d)π,v as

follows:

Site percolation For each vertex i, replace it with probability 1 − π by di new vertices of degree 1

(independently of all other vertices). Let d̃π,v be the resulting (random) degree sequence, let

ñ be its length (the number of vertices), and let n+ be the number of new vertices. Construct

the random graph G ∗ (ñ, d̃π,v ). Finish by deleting n+ randomly chosen vertices of degree 1.

The more general case when we are given a sequence π = (πd )∞

0 is handled in the same way:

89

Site percolation, general For each vertex i, replace it with probability 1 − πdi by di new vertices

of degree 1. Let d̃π,v be the resulting (random) degree sequence, let ñ be its length (the

number of vertices), and let n+ be the number of new vertices. Construct the random graph

G ∗ (ñ, d̃π,v ). Finish by deleting n+ randomly chosen vertices of degree 1.

Remark 1.1. We have here assumed that vertices are deleted at random, independently of each

other. This is not essential for our method, which may be further extended to the case when we

remove a set of vertices determined by any random procedure that is independent of the edges in

G(n, d) (but may depend on the vertex degrees). For example, we may remove a fixed number m of

vertices, chosen uniformly at random. It is easily seen that if m/n → π, the results of Subsection 2.1

below still hold (with all π j = π), and thus the results of the later sections hold too. Another,

deterministic, example is to remove the first m vertices.

p

For bond percolation, we instead explode each half-edge with probability 1 − π, independently of

all other half-edges; to explode a half-edge means that we disconnect it from its vertex and transfer

it to a new, red vertex of degree 1. Again this does not change the number of half-edges, and there

is a one-to-one correspondence between configurations before and after the explosions. We finish

by removing all red vertices and their incident edges. Since an edge consists of two half-edges, and

p

each survives with probability π, this gives the bond percolation model G ∗ (n, d)π,e where edges

are kept with probability π. This yields the following recipe:

Bond percolation Replace the degrees di in the sequence d by independent random

Pn degrees d̃i ∼

p

p

Bi(di , π). (I.e., d̃i has the binomial distribution Bi(di , π).) Add n+ := i=1 (di − d̃i ) new

degrees 1 to the sequence (d̃i )1n , and let d̃π,e be the resulting degree sequence and ñ = n + n+

its length. Construct the random graph G ∗ (ñ, d̃π,e ). Finish by deleting n+ randomly chosen

vertices of degree 1.

In both cases, we have reduced the problem to a simple (random) modification of the degree sequence, plus a random removal of a set of vertices of degree 1. The latter is often more or less

trivial to handle, see the applications below. We continue to call the removed vertices red when

convenient.

Of course, to use this method, it is essential to find the degree sequence d̃ after the explosions. We

study this in Section 2. We then apply this method to three different problems:

Existence of a giant component in the percolated graph, i.e., what is called percolation in random

graph theory (Section 3). Our results include and extend earlier work by Fountoulakis [7], which

inspired the present study, and some of the results by Britton, Janson and Martin-Löf [4].

Existence of a k-core in the percolated graph (Section 4). We obtain a general result analogous to

(and extending) the well-known result by Pittel, Spencer and Wormald [17] for G(n, p). We study

the phase transitions that may occur in some detail and show by examples that it is possible to have

several, and even an infinite number of, different phase transitions as the probability π increases

from 0 to 1.

Bootstrap percolation in random regular graphs (Section 5), where we obtain a new and simpler

proof of results by Balogh and Pittel [1].

For a graph G, let v(G) and e(G) denote the numbers of vertices and edges in G, respectively, and

let v j (G) be the number of vertices of degree j, j ≥ 0. We sometimes use G ∗ (n, d)π to denote any of

the percolation models G ∗ (n, d)π,v , G ∗ (n, d)π,v or G ∗ (n, d)π,e .

90

2 The degree sequence after explosions

P∞

Let n j := #{i ≤ n : di = j}. Thus j=0 n j = n, and n j equals the number v j (G ∗ (n, d)) of vertices of

degree j in G ∗ (n, d). We assume for simplicity the following regularity condition.

Condition 2.1. There exists a probability distribution (p j )∞

j=0 with finite positive mean λ :=

P

j jp j ∈ (0, ∞) such that (as n → ∞)

n j /n → p j ,

and

P∞

j=0

n

jn j

j ≥ 0,

→ λ :=

∞

X

jp j .

(2.1)

(2.2)

j=0

Note that, in order to avoid trivialities, we assume that λ > 0, which is equivalent to p0 < 1. Thus,

there is a positive fraction of vertices of degree at least 1.

P

P

Note that j jn j = i di equals twice the number of edges in G ∗ (n, d), and that (2.2) says that the

average degree in G ∗ (n, d) converges to λ.

Let the random variable D̂ = D̂n be the degree of a random vertex in G ∗ (n, d), thus D̂n has the

distribution P( D̂n = j) = n j /n, and let D be a random variable with the distribution (p j )∞

0 . Then

d

(2.1) is equivalent to D̂n −→ D, and (2.2) is E D̂n → λ = E D. Further, assuming (2.1), (2.2)

is equivalent to uniform integrability of D̂n , or equivalently uniform summability (as n → ∞) of

P

j jn j /n, see for example Gut [8], Theorem 5.5.9 and Remark 5.5.4.

P

Remark 2.2. The uniform summability of j jn j /n is easily seen to imply that if H is any (random

or deterministic) subgraph on G ∗ (n, d) with v(H) = o(n), then e(H) = o(n), and similarly with

o p (n).

We will also use the probability generating function of the asymptotic degree distribution D:

D

g D (x) := E x =

∞

X

pj x j,

(2.3)

j=0

defined at least for |x| ≤ 1.

We perform either site or bond percolation as in Section 1, by the explosion method described there,

and let ñ j := #{i ≤ ñ : d̃i = j} be the number of vertices of degree j after the explosions. Thus

∞

X

ñ j = ñ.

j=0

It is easy to find the distribution of (ñ j ) and its asymptotics for our two percolation models.

91

(2.4)

2.1 Site percolation

We treat the general version with a sequence π. Let n◦j be the number of vertices of degree j that

are not exploded. Then

n◦j ∼ Bi(n j , π j )

n+ =

∞

X

j=0

ñ1 =

(2.5)

j(n j − n◦j ),

(2.6)

j 6= 1,

(2.7)

ñ j = n◦j ,

n◦1

(independent of each other),

+ n+ .

(2.8)

By the law of large numbers, n◦j = n j π j + o p (n) and thus, using the assumption (2.1) and the

P

uniform summability of j jn j /n (which enables us to treat the infinite sums in (2.10) and (2.13)

by a standard argument),

n◦j = n j π j + o p (n) = π j p j n + o p (n),

n+ =

∞

X

j=0

(2.9)

j(1 − π j )p j n + o p (n),

(2.10)

ñ j = π j p j n + o p (n), j 6= 1,

∞

X

ñ1 = π1 p1 +

j(1 − π j )p j n + o p (n),

(2.11)

(2.12)

j=0

ñ =

∞

X

j=0

π j + j(1 − π j ) p j n + o p (n).

We can write (2.13) as

ñ

n

p

−→ ζ :=

∞

X

j=0

(2.13)

π j + j(1 − π j ) p j > 0.

(2.14)

Further, by (2.11) and (2.12),

ñ j

ñ

(

p

−→ p̃ j :=

ζ−1 π j p j ,

ζ

−1

π1 p1 +

P∞

j=1

j(1 − π j )p j ,

j 6= 1,

j = 1.

(2.15)

Since ñ j ≤ n j for j ≥ 2 and ñ ≥ n − n0 , the uniform summability of jn j /n implies uniform summability of j ñ j /ñ, and thus also

P∞

∞

X

j=0 j ñ j p

−→ λ̃ :=

jp̃ j < ∞.

(2.16)

ñ

j=0

Hence Condition 2.1 holds, in probability, for the random degree sequence d̃ too. Further, the total

number of half-edges is not changed by the explosions, and thus also, by (2.14) and (2.2),

P∞

P∞

P∞

j

ñ

jn

n

j

j

j=0

j=0

j=0 jn j p

−→ ζ−1 λ;

(2.17)

=

= ·

n

ñ

ñ

ñ

92

hence (or by (2.15)),

λ̃ = ζ−1 λ.

(2.18)

In the proofs below it will be convenient to assume that (2.15) and (2.16) hold a.s., and not just

in probability, so that Condition 2.1 a.s. holds for d̃; we can assume this without loss of generality

by the Skorohod coupling theorem [13, Theorem 4.30]. (Alternatively, one can argue by selecting

suitable subsequences.)

Let D̃ have the probability distribution (p̃ j ), and let g D̃ be its probability generating function. Then,

by (2.15),

ζg D̃ (x) =

∞

X

ζp̃ j x j =

∞

X

πj pj x j +

j=0

j=0

j=0

∞

X

j(1 − π j )p j x = λx +

∞

X

j=0

π j p j (x j − j x).

(2.19)

In particular, if all π j = π,

ζg D̃ (x) = πg D (x) + (1 − π)λx,

(2.20)

where now ζ = π + (1 − π)λ.

2.2 Bond percolation

For bond percolation, we have explosions that do not destroy the vertices, but they may reduce their

degrees.

vertices that had degree l before the explosions and j after. Thus

P Let ñl j be the number ofP

ñ j = l≥ j ñl j for j 6= 1 and ñ1 = l≥1 ñl1 + n+ . A vertex of degree l will after the explosions have

a degree with the binomial distribution Bi(l, π1/2 ), and thus the probability that it will become a

vertex of degree j is the binomial probability bl j (π1/2 ), where we define

bl j (p) := P Bi(l, p) = j =

l

j

p j (1 − p)l− j .

(2.21)

Since explosions at different vertices occur independently, this means that, for l ≥ j ≥ 0,

ñl j ∼ Bi nl , bl j (π1/2 )

and thus, by the law of large numbers and (2.1),

ñl j = bl j (π1/2 )pl n + o p (n).

Further, the number

vertices equals the number of explosions, and thus has the binomial

P n+ of new

1/2

distribution

Bi( l lnl , 1 − π ). Consequently, using also (2.2) and the uniform summability of

P

jn

/n,

j

j

n+ =

X

l

ñ j =

X

l≥ j

ñ1 =

X

l≥1

lnl (1 − π1/2 ) + o p (n) = (1 − π1/2 )λn + o p (n),

ñl j =

X

bl j (π1/2 )pl n + o p (n),

l≥ j

ñl1 + n+ =

X

l≥1

j 6= 1,

bl1 (π1/2 )pl n + 1 − π1/2 λn + o p (n),

93

(2.22)

(2.23)

(2.24)

ñ = n + n+ = n + 1 − π1/2 λn + o p (n).

(2.25)

In analogy with site percolation we thus have, by (2.25),

ñ

n

p

−→ ζ := 1 + 1 − π1/2 λ

(2.26)

and further, by (2.23) and (2.24),

P

1/2

)pl ,

l≥ j bl j (π

P

−→ p̃ j :=

1/2

1/2

−1

ζ

ñ

b

(π

)p

+

1

−

π

λ ,

l

l≥1 l1

ñ j

ζ−1

p

j 6= 1,

j = 1.

(2.27)

Again, the uniform summability of jn j /n implies uniform summability of j ñ j /ñ, and the total number of half-edges is not changed; thus (2.16), (2.17) and (2.18) hold, now with ζ given by (2.26).

Hence Condition 2.1 holds in probability for the degree sequences d̃ in bond percolation too, and

by the Skorohod coupling theorem we may assume that it holds a.s.

The formula for p̃ j is a bit complicated, but there is a simple formula for the probability generating

P

function g D̃ . We have, by the binomial theorem, j≤l bl j (π)x j = (1 − π + πx)l , and thus (2.27)

yields

ζg D̃ (x) =

∞

X

l=0

(1 − π1/2 + π1/2 x)l pl + (1 − π1/2 )λx

= g D (1 − π

1/2

+π

1/2

x) + (1 − π

1/2

(2.28)

)λx.

3 Giant component

The question of existence of a giant component in G(n, d) and G ∗ (n, d) was answered by Molloy and

Reed [16], who showed that (under some weak technical assumptions) a giant component exists

w.h.p. if and only if (in the notation above) E D(D − 2) > 0. (The term giant component is in this

paper used, somewhat informally, for a component containing at least a fraction ǫ of all vertices,

for some small ǫ > 0 that does not depend on n.) They further gave a formula for the size of this

giant component in Molloy and Reed [15]. We will use the following version of their result, given by

Janson and Luczak [12], Theorem 2.3 and Remark 2.6. Let, for any graph G, Ck (G) denote the k:th

largest component of G. (Break ties by any rule. If there are fewer that k components, let Ck := ;,

the null graph.)

Theorem 3.1 ([15; 12]). Consider G ∗ (n, d), assuming that Condition 2.1 holds and p1 > 0. Let

Ck := Ck (G ∗ (n, d)) and let g D (x) be the probability generating function in (2.3).

P

(i) If E D(D − 2) = j j( j − 2)p j > 0, then there is a unique ξ ∈ (0, 1) such that g D′ (ξ) = λξ, and

p

v(C1 )/n −→ 1 − g D (ξ) > 0,

(3.1)

v j (C1 )/n −→ p j (1 − ξ j ), for every j ≥ 0,

(3.2)

p

p

e(C1 )/n −→ 12 λ(1 − ξ2 ).

p

p

Furthermore, v(C2 )/n −→ 0 and e(C2 )/n −→ 0.

94

(3.3)

(ii) If E D(D − 2) =

P

p

j

p

j( j − 2)p j ≤ 0, then v(C1 )/n −→ 0 and e(C1 )/n −→ 0.

Remark 3.2. E D2 = ∞ is allowed in Theorem 3.1(i).

Remark 3.3. In Theorem 3.1(ii), where E D(D − 2) ≤ 0 and p1 > 0, for 0 ≤ x < 1

λx − g D′ (x) =

∞

X

j=1

jp j (x − x j−1 ) = p1 (x − 1) + x

≤ p1 (x − 1) + x

<

∞

X

j=1

∞

X

j=2

∞

X

j=2

jp j (1 − x j−2 )

jp j ( j − 2)(1 − x)

j( j − 2)p j x(1 − x) = E D(D − 2)x(1 − x) ≤ 0.

Hence, in this case the only solution in [0, 1] to g D′ (ξ) = λξ is ξ = 1, which we may take as the

definition in this case.

Remark 3.4. Let D∗ be a random variable with the distribution

P(D∗ = j) = ( j + 1) P(D = j + 1)/λ,

j ≥ 0;

this is the size-biased distribution of D shifted by 1, and it has a well-known natural interpretation

as follows. Pick a random half-edge; then the number of remaining half-edges at its endpoint has

asymptotically the distribution of D∗ . Therefore, the natural (Galton–Watson) branching process

approximation of the exploration of the successive neighbourhoods of a given vertex is the branching

process X with offspring distributed as D∗ , but starting with an initial distribution given by D. Since

g D∗ (x) =

∞

X

j=1

∗

P(D = j − 1)x

j−1

=

∞

X

jp j

j=1

λ

x

j−1

=

g D′ (x)

λ

,

the equation g D′ (ξ) = λξ in Theorem 3.1(i) can be written g D∗ (ξ) = ξ, which shows that ξ has an

interpretation as the extinction probability of the branching process X with offspring distribution

D∗ , now starting with a single individual. (This also agrees with the definition in Remark 3.3 for the

case Theorem 3.1(ii).) Thus g D (ξ) in (3.1) is the extinction probability of X . Note also that

E D∗ =

E D(D − 1)

λ

=

E D(D − 1)

ED

,

so the condition E D(D − 2) > 0, or equivalently E D(D − 1) > E D, is equivalent to E D ∗ > 1, the

classical condition for the branching process to be supercritical and thus have a positive survival

probability.

The intuition behind the branching process approximation of the local structure of a random graph

at a given vertex is that an infinite approximating branching process corresponds to the vertex being

in a giant component. This intuition agrees also with the formulas (3.2) and (3.3), which reflect the

fact that a vertex of degree j [an edge] belongs to the giant component if and only if one of its j

attached half-edges [one of its two constituent half-edges] connects to the giant component. (It is

rather easy to base rigorous proofs on the branching process approximation, see e.g. [4], but in the

present paper we will only use the branching process heuristically.)

95

Consider one of our percolation models G ∗ (n, d)π , and construct it using explosions and an intermediate random graph G ∗ (ñ, d̃) as described in the introduction. (Recall that d̃ is random, while d

and the limiting probabilities p j and p̃ j are not.) Let C j := C j G ∗ (n, d)π and C˜j := C j G ∗ (ñ, d̃)

denote the components of G ∗ (n, d)π , and G ∗ (ñ, d̃), respectively.

As remarked in Section 2, we may assume that G ∗ (ñ, d̃) too satisfies Condition 2.1, with p j replaced

by p̃ j . (At least a.s.; recall that d̃ is random.) Hence, assuming p̃1 > 0, if we first condition on

d̃, then Theorem 3.1 applies immediately to the exploded graph G ∗ (ñ, d̃). We also have to remove

n+ randomly chosen “red” vertices of degree 1, but luckily this will not break up any component.

Consequently, if E D̃( D̃ − 2) > 0, then G ∗ (ñ, d̃) w.h.p. has a giant component C˜1 , with v(C˜1 ), v j (C˜1 )

and e(C˜1 ) given by Theorem 3.1 (with p j replaced by p̃ j ), and after removing the red vertices,

the remainder of C˜1 is still connected and forms a component C in G ∗ (n, d)π . Furthermore, since

E D̃( D̃ − 2) > 0, p̃ j > 0 for at least one j > 2, and it follows by (3.2) that C˜1 contains cn +

o p (n) vertices of degree j, for some c > 0; all these belong to C (although possibly with smaller

degrees), so C contains w.h.p. at least cn/2 vertices. Moreover, all other components of G ∗ (n, d)π

are contained in components of G ∗ (ñ, d̃) different from C˜1 , and thus at most as large as C˜2 , which by

Theorem 3.1 has o p (ñ) = o p (n) vertices. Hence, w.h.p. C is the largest component C1 of G ∗ (n, d)π ,

and this is the unique giant component in G ∗ (n, d)π .

Since we remove a fraction n+ /ñ1 of all vertices of degree 1, we remove by the law of large numbers

(for a hypergeometric distribution) about the same fraction of the vertices of degree 1 in the giant

component C˜1 . More precisely, by (3.2), C˜1 contains about a fraction 1 − ξ of all vertices of degree

1, where g D̃′ (ξ) = λ̃ξ; hence the number of red vertices removed from C˜1 is

(1 − ξ)n+ + o p (n).

(3.4)

v(C1 ) = v(C˜1 ) − (1 − ξ)n+ + o p (n) = ñ 1 − g D̃ (ξ) − n+ + n+ ξ + o p (n).

(3.5)

By (3.1) and (3.4),

Similarly, by (3.3) and (3.4), since each red vertex that is removed from C1 also removes one edge

with it,

e(C1 ) = e(C˜1 ) − (1 − ξ)n+ + o p (n) = 12 λ̃ñ(1 − ξ2 ) − (1 − ξ)n+ + o p (n).

(3.6)

The case E D̃( D̃ − 2) ≤ 0 is even simpler; since the largest component C1 is contained in some

component C˜j of G ∗ (ñ, d̃), it follows that v(C1 ) ≤ v(C˜j ) ≤ v(C˜1 ) = o p (ñ) = o p (n).

This leads to the following results, where we treat site and bond percolation separately and add

formulas for the asymptotic size of C1 .

Theorem 3.5. Consider the site percolation model G ∗ (n, d)π,v , and suppose that Condition 2.1 holds

and that π = (πd )∞

0 with 0 ≤ π d ≤ 1; suppose further that there exists j ≥ 1 such that p j > 0 and

π j < 1. Then there is w.h.p. a giant component if and only if

∞

X

j=0

j( j − 1)π j p j > λ :=

∞

X

jp j .

(3.7)

j=0

(i) If (3.7) holds, then there is a unique ξ = ξv (π) ∈ (0, 1) such that

∞

X

j=1

jπ j p j (1 − ξ j−1 ) = λ(1 − ξ)

96

(3.8)

and then

p

v(C1 )/n −→ χv (π) :=

∞

X

j=1

π j p j (1 − ξ j ) > 0,

∞

X

p

e(C1 )/n −→ µv (π) := (1 − ξ)

p

j=1

jπ j p j −

(3.9)

∞

(1 − ξ)2 X

2

jp j .

(3.10)

j=1

p

Furthermore, v(C2 )/n −→ 0 and e(C2 )/n −→ 0.

p

p

(ii) If (3.7) does not hold, then v(C1 )/n −→ 0 and e(C1 )/n −→ 0.

Proof. We apply Theorem 3.1 to G ∗ (ñ, d̃) as discussed above. Note that p̃1 > 0 by (2.15) and the

assumption (1 − π j )p j > 0 for some j. By (2.15),

ζ E D̃( D̃ − 2) = ζ

=

∞

X

j=0

∞

X

j=1

j( j − 2)p̃ j =

∞

X

j=1

j( j − 1)π j p j −

j( j − 2)π j p j −

∞

X

∞

X

j=1

j(1 − π j )p j

jp j .

j=1

Hence, the condition E D̃( D̃ − 2) > 0 is equivalent to (3.7).

In particular, it follows that v(C2 ) = o p (n) in (i) and v(C1 ) = o p (n) in (ii). That also e(C2 ) = o p (n)

in (i) and e(C1 ) = o p (n) in (ii) follows by Remark 2.2 applied to G ∗ (ñ, d̃).

It remains only to verify the formulas (3.8)–(3.10). The equation g D̃′ (ξ) = λ̃ξ is by (2.18) equivalent

to ζg D̃′ (ξ) = λξ, which can be written as (3.8) by (2.15) and a simple calculation.

By (3.5), using (2.10), (2.14) and (2.19),

p

v(C1 )/n −→ ζ − ζg D̃ (ξ) − (1 − ξ)

=

∞

X

j=0

=

∞

X

j=0

πj pj −

∞

X

j=0

∞

X

j=1

j(1 − π j )p j

∞

X

j(1 − π j )p j

π j p j ξ j + j(1 − π j )p j ξ + ξ

j=0

π j p j (1 − ξ j ).

Similarly, by (3.6), (2.18), (2.14) and (2.10),

p

e(C1 )/n −→ 21 λ(1 − ξ2 ) − (1 − ξ)

= (1 − ξ)

∞

X

j=1

jπ j p j −

∞

X

j=1

j(1 − π j )p j

(1 − ξ)2

2

λ.

In the standard case when all πd = π, this leads to a simple criterion, which earlier has been shown

by Britton, Janson and Martin-Löf [4] and Fountoulakis [7] by different methods. (A modification

of the usual branching process argument for G ∗ (n, d) in [4] and a method similar to ours in [7].)

97

Corollary 3.6 ([4; 7]). Suppose that Condition 2.1 holds and 0 < π < 1. Then there exists w.h.p. a

giant component in G ∗ (n, d)π,v if and only if

π > πc :=

ED

E D(D − 1)

(3.11)

.

Remark 3.7. Note that πc = 0 is possible; this happens if and only if E D2 = ∞. (Recall that we

assume 0 < E D < ∞, see Condition 2.1.) Further, πc ≥ 1 is possible too: in this case there is w.h.p.

no giant component in G ∗ (n, d) (except possibly in the special case when p j = 0 for all j 6= 0, 2),

and consequently none in the subgraph G ∗ (n, d)π .

Note that by (3.11), πc ∈ (0, 1) if and only if E D < E D(D − 1) < ∞, i.e., if and only if 0 <

E D(D − 2) < ∞.

Remark 3.8. Another case treated in [4] (there called E1) is πd = αd for some

P∞ α ∈ (0, 1). Theorem 3.5 gives a new proof that then there is a giant component if and only if j=1 j( j − 1)α j p j > λ,

which also can be written α2 g D′′ (α) > λ = g D′ (1). (The cases E2 and A in [4] are more complicated

and do not follow from the results in the present paper.)

For edge percolation we similarly have the following; this too has been shown by Britton, Janson

and Martin-Löf [4] and Fountoulakis [7]. Note that the percolation threshold π is the same for site

and bond percolation, as observed by Fountoulakis [7].

Theorem 3.9 ([4; 7]). Consider the bond percolation model G ∗ (n, d)π,e , and suppose that Condition 2.1 holds and that 0 < π < 1. Then there is w.h.p. a giant component if and only if

π > πc :=

ED

E D(D − 1)

.

(3.12)

(i) If (3.12) holds, then there is a unique ξ = ξe (π) ∈ (0, 1) such that

π1/2 g D′ 1 − π1/2 + π1/2 ξ + (1 − π1/2 )λ = λξ,

(3.13)

and then

p

v(C1 )/n −→ χe (π) := 1 − g D 1 − π1/2 + π1/2 ξ > 0,

(3.14)

e(C1 )/n −→ µe (π) := π1/2 (1 − ξ)λ − 12 λ(1 − ξ)2 .

(3.15)

p

p

p

Furthermore, v(C2 )/n −→ 0 and e(C2 )/n −→ 0.

p

p

(ii) If (3.12) does not hold, then v(C1 )/n −→ 0 and e(C1 )/n −→ 0.

Proof. We argue as in the proof of Theorem 3.5, noting that p̃1 > 0 by (2.27). By (2.28),

ζ E D̃( D̃ − 2) = ζg D̃′′ (1) − ζg D̃′ (1) = πg D′′ (1) − π1/2 g D′ (1) − (1 − π1/2 )λ

= π E D(D − 1) − λ,

which yields the criterion (3.12). Further, if (3.12) holds, then the equation g D̃′ (ξ) = λ̃ξ, which by

(2.18) is equivalent to ζg D̃′ (ξ) = ζλ̃ξ = λξ, becomes (3.13) by (2.28).

98

By (3.5), (2.26), (2.22) and (2.28),

p

v(C1 )/n −→ ζ − ζg D̃ (ξ) − (1 − ξ)(1 − π1/2 )λ = 1 − g D 1 − π1/2 + π1/2 ξ) ,

which is (3.14). Similarly, (3.6), (2.26), (2.18) and (2.22) yield

p

e(C1 )/n −→ 12 λ(1 − ξ2 ) − (1 − ξ)(1 − π1/2 )λ = π1/2 (1 − ξ)λ − 21 λ(1 − ξ)2 ,

which is (3.15). The rest is as above.

Remark 3.10. It may come as a surprise that we have the same criterion (3.11) and (3.12) for

site and bond percolation, since the proofs above arrive at this equation in somewhat different

ways. However, remember that all results here are consistent with the standard branching process

approximation in Remark 3.4 (even if our proofs use different arguments) and it is obvious that both

site and bond percolation affect the mean number of offspring in the branching process in the same

way, namely by multiplication by π. Cf. [4], where the proofs are based on such branching process

approximations.

Define

ρv = ρv (π) := 1 − ξv (π)

ρe = ρe (π) := 1 − ξe (π);

and

(3.16)

recall from Remark 3.4 that ξv and ξe are the extinction probabilities in the two branching processes

defined by the site and bond percolation models, and thus ρv and ρe are the corresponding survival

probabilities. For bond percolation, (3.13)–(3.15) can be written in the somewhat simpler forms

(3.17)

π1/2 g D′ 1 − π1/2 ρe = λ(π1/2 − ρe ),

p

(3.18)

v(C1 )/n −→ χe (π) := 1 − g D 1 − π1/2 ρe (π) ,

p

e(C1 )/n −→ µe (π) := π1/2 λρe (π) − 12 λρe (π)2 .

Note further that if we consider site percolation with all π j = π, (3.8) can be written

π λ − g D′ (1 − ρv ) = λρv

(3.19)

(3.20)

and it follows by comparison with (3.17) that

ρv (π) = π1/2 ρe (π).

Furthermore, (3.9), (3.10), (3.18) and (3.19) now yield

χv (π) = π 1 − g D (ξv (π)) = π 1 − g D (1 − ρv (π)) = πχe (π),

µv (π) = πλρv (π) −

1

λρv (π)2

2

= πµe (π).

(3.21)

(3.22)

(3.23)

We next consider how the various parameters above depend on π, for both site percolation and

bond percolation, where for site percolation we in the remainder of this section consider only the

case when all π j = π.

We have so far defined the parameters for π ∈ (πc , 1) only; we extend the definitions by letting

ξv := ξe := 1 and ρv := ρe := χv := χe := µv := µe := 0 for π ≤ πc , noting that this is compatible

with the branching process interpretation of ξ and ρ in Remark 3.4 and that the equalities in (3.8)–

(3.23) hold trivially.

99

Theorem 3.11. Assume Condition 2.1. The functions ξv , ρv , χv , µv , ξe , ρe , χe , µe are continuous functions of π ∈ (0, 1) and are analytic except at π = πc . (Hence, the functions are analytic in (0, 1) if and

only if πc = 0 or πc ≥ 1.)

Proof. It suffices to show this for ξv ; the result for the other functions then follows by (3.16) and

(3.21)–(3.23). Since the case π ≤ πc is trivial, it suffices to consider π ≥ πc , and we may thus

assume that 0 ≤ πc < 1.

If π ∈ (πc , 1), then, as shown above, g D̃′ (ξv ) = λ̃ξv , or, equivalently, G(ξv , π) = 0, where G(ξ, π) :=

g D̃′ (ξ)/ξ− λ̃ is an analytic function of (ξ, π) ∈ (0, 1)2 . Moreover, G(ξ, π) is a strictly convex function

¯

∂ G(ξ,π) ¯

< 0. The implicit

of ξ ∈ (0, 1] for any π ∈ (0, 1), and G(ξ , π) = G(1, π) = 0; hence

v

ξ=ξv

∂ξ

function theorem now shows that ξv (π) is analytic for π ∈ (πc , 1).

For continuity at πc , suppose πc ∈ (0, 1) and let ξ̂ = limn→∞ ξv (πn ) for some sequence πn → πc .

′

(ξ (πn )) = λ̃(πn )ξv (πn ) and thus

Then, writing D̃(π) and λ̃(π) to show the dependence on π, g D̃(π

) v

n

′

(ξ̂) = λ̃(πc )ξ̂. However, for π ≤ πc , we have E D̃( D̃ − 2) ≤ 0

by continuity, e.g. using (2.28), g D̃(π

)

c

and then ξ = 1 is the only solution in (0, 1] of g D̃′ (ξ) = λ̃ξ; hence ξ̂ = 1. This shows that ξv (π) → 1

as π → πc , i.e., ξv is continuous at πc .

Remark 3.12. Alternatively, the continuity of ξv in (0, 1) follows by Remark 3.4 and continuity of

the extinction probability as the offspring distribution varies, cf. [4, Lemma 4.1]. Furthermore, by

the same arguments, the parameters are continuous also at π = 0 and, except in the case when

p0 + p2 = 1 (and thus D̃ = 1 a.s.), at π = 1 too.

At the threshold πc , we have linear growth of the size of the giant component for (slightly) larger

π, provided E D3 < ∞, and thus a jump discontinuity in the derivative of ξv , χv , . . . . More precisely,

the following holds. We are here only interested in the case 0 < πc < 1, which is equivalent to

0 < E D(D − 2) < ∞, see Remark 3.7.

Theorem 3.13. Suppose that 0 < E D(D − 2) < ∞; thus 0 < πc < 1. If further E D3 < ∞, then as

ǫ ց 0,

ρv (πc + ǫ) ∼

2 E D(D − 1)

2

ǫ

E D · E D(D − 1)(D − 2)

2 E D · E D(D − 1)

χv (πc + ǫ) ∼ µv (πc + ǫ) ∼ πc λρv (πc + ǫ) ∼

ǫ.

E D(D − 1)(D − 2)

πc E D(D − 1)(D − 2)

ǫ=

2 E D(D − 1)

(3.24)

(3.25)

Similar results for ρe , χe , µe follow by (3.21)–(3.23).

Proof. For π = πc + ǫ ց πc , by g D′′ (1) = E D(D − 1) = λ/πc , see (3.11), and (3.20),

ǫ g D′′ (1)ρv = (π − πc )g D′′ (1)ρv = πg D′′ (1)ρv − λρv = π g D′′ (1)ρv − λ + g D′ (1 − ρv ) .

(3.26)

Since E D3 < ∞, g D is three times continuously differentiable on [0, 1], and a Taylor expansion

yields g D′ (1 − ρv ) = λ − ρv g D′′ (1) + ρv2 g D′′′ (1)/2 + o(ρv2 ). Hence, (3.26) yields, since ρv > 0,

ǫ g D′′ (1) = πρv g D′′′ (1)/2 + o(ρv ) = πc ρv g D′′′ (1)/2 + o(ρv ).

100

Thus, noting that g D′′ (1) = E D(D − 1) and g D′′′ (1) = E D(D − 1)(D − 2) > 0 (since E D(D − 2) > 0),

ρv ∼

2g D′′ (1)

ǫ

πc g D′′′ (1)

=

2 E D(D − 1)

πc E D(D − 1)(D − 2)

ǫ,

which yields (3.24). Finally, (3.25) follows easily by (3.22) and (3.23).

If E D3 = ∞, we find in the same way a slower growth of ρv (π), χv (π), µv (π) at πc . As an example,

we consider D with a power law tail, pk ∼ ck−γ , where we take 3 < γ < 4 so that E D2 < ∞ but

E D3 = ∞.

Theorem 3.14. Suppose that pk ∼ ck−γ as k → ∞, where 3 < γ < 4 and c > 0. Assume further that

E D(D − 2) > 0. Then πc ∈ (0, 1) and, as ǫ ց 0,

ρv (πc + ǫ) ∼

E D(D − 1)

1/(γ−3)

cπc Γ(2 − γ)

ǫ 1/(γ−3) ,

χv (πc + ǫ) ∼ µv (πc + ǫ) ∼ πc λρv (πc + ǫ)

E D(D − 1) 1/(γ−3) 1/(γ−3)

ǫ

.

∼ πc λ

cπc Γ(2 − γ)

Similar results for ρe , χe , µe follow by (3.21)–(3.23).

Proof. We have, for example by comparison with the Taylor expansion of 1 − (1 − t)

g D′′′ (1 − t) =

∞

X

k=3

k(k − 1)(k − 2)pk (1 − t)k−3 ∼ cΓ(4 − γ)t γ−4 ,

γ−4

,

t ց 0,

and thus by integration

g D′′ (1) − g D′′ (1 − t) ∼ cΓ(4 − γ)(γ − 3)−1 t γ−3 = c|Γ(3 − γ)|t γ−3 ,

and, integrating once more,

ρv g D′′ (1) − (λ − g D′ (1 − ρv )) ∼ cΓ(2 − γ)ρvγ−2 .

Hence, (3.26) yields

ǫ g D′′ (1)ρv ∼ cπc Γ(2 − γ)ρvγ−2

and the results follow, again using (3.22) and (3.23).

4

k-core

Let k ≥ 2 be a fixed integer. The k-core of a graph G, denoted by Corek (G), is the largest induced

subgraph of G with minimum vertex degree at least k. (Note that the k-core may be empty.) The

question whether a non-empty k-core exists in a random graph has attracted a lot of attention for

various models of random graphs since the pioneering papers by Bollobás [2], Łuczak [14] and

Pittel, Spencer and Wormald [17] for G(n, p) and G(n, m); in particular, the case of G(n, d) and

101

G ∗ (n, d) with given degree sequences have been studied by several authors, see Janson and Luczak

[10, 11] and the references given there.

We study the percolated G ∗ (n, d)π by the exposion method presented in Section 1. For the k-core,

the cleaning up stage is trivial: by definition, the k-core of G ∗ (ñ, d̃) does not contain any vertices of

degree 1, so it is unaffected by the removal of all red vertices, and thus

(4.1)

Corek G ∗ (n, d)π = Corek G ∗ (ñ, d̃) .

Let, for 0 ≤ p ≤ 1, D p be the thinning of D obtained by taking D points and then randomly and

independently keeping each of them with probability p. Thus, given D = d, D p ∼ Bi(d, p). Define,

recalling the notation (2.21),

∞ X

∞

X

h(p) := E D p 1[D p ≥ k] =

jpl bl j (p),

(4.2)

j=k l= j

h1 (p) := P(D p ≥ k) =

∞ X

∞

X

pl bl j (p).

(4.3)

j=k l= j

Note that D p is stochastically increasing in p, and thus both h and h1 are increasing in p, with

P∞

P∞

h(0) = h1 (0) = 0. Note further that h(1) = j=k jp j ≤ λ and h1 (1) = j=k p j ≤ 1, with strict

inequalities unless p j = 0 for all j = 1, . . . , k − 1 or j = 0, 1, . . . , k − 1, respectively. Moreover,

h(p) = E D p − E D p 1[D p ≤ k − 1] = E D p −

= λp −

= λp −

k−1 X

X

jpl

l

j=1 l≥ j

k−1

X

pj

j=1

( j − 1)!

j

k−1

X

j P(D p = j)

j=1

p j (1 − p)l− j

(4.4)

( j)

g D (1 − p).

Since g D (z) is an analytic function in {z : |z| < 1}, (4.4) shows that h(p) is an analytic function in

the domain {p : |p − 1| < 1} in the complex plane; in particular, h is analytic on (0, 1]. (But not

necessarily at 0, as seen by Example 4.13.) Similarly, h1 is analytic on (0, 1].

We will use the following result by Janson and Luczak [10], Theorem 2.3.

Theorem 4.1 ([10]). Suppose that Condition 2.1 holds. Let k ≥ 2 be fixed, and let Core∗k be the k-core

of G ∗ (n, d). Let b

p := max{p ∈ [0, 1] : h(p) = λp2 }.

(i) If h(p) < λp2 for all p ∈ (0, 1], which is equivalent to b

p = 0, then Core∗k has o p (n) vertices and

Pn αd

o p (n) edges. Furthermore, if also k ≥ 3 and i=1 e i = O(n) for some α > 0, then Core∗k is

empty w.h.p.

(ii) If h(p) ≥ λp 2 for some p ∈ (0, 1], which is equivalent to b

p ∈ (0, 1], and further b

p is not a local

maximum point of h(p) − λp 2 , then

p

v(Core∗k )/n −→ h1 (b

p) > 0,

102

(4.5)

v j (Core∗k )/n

p

−→ P(Dbp = j) =

∞

X

pl bl j (b

p),

j ≥ k,

l= j

p

e(Core∗k )/n −→ h(b

p)/2 = λb

p2 /2.

(4.6)

(4.7)

Remark 4.2. The result (4.6) is not stated explicitly in [10], but as remarked in [11, Remark 1.8],

it follows immediately from the proof in [10] of (4.5). (Cf. [5] for the random graph G(n, m).)

Remark 4.3. The extra condition in (ii) that b

p is not a local maximum point of h(p)−λp 2 is actually

stated somewhat differently in [10], viz. as λp2 < h(p) in some interval (b

p − ǫ, b

p). However, since

2

g(p) := h(p) − λp is analytic at b

p, a Taylor expansion at b

p shows that either g(p) = 0 for all p (and

then b

p = 1), or for some such interval (b

p −ǫ, b

p), either g(p) > 0 or g(p) < 0 throughout the interval.

Since g(b

p) = 0 and g(p) < 0 for b

p < p ≤ 1, the two versions of the condition are equivalent.

The need for this condition is perhaps more clearly seen in the percolation setting, cf. Remark 4.8.

There is a natural interpretation of this result in terms of the branching process approximation of

the local exploration process, similar to the one described for the giant component in Remark 3.4.

For the k-core, this was observed already by Pittel, Spencer and Wormald [17], but (unlike for the

giant component), the branching process approximation has so far mainly been used heuristically;

the technical difficulties to make a rigorous proof based on it are formidable, and have so far been

overcome only by Riordan [18] for a related random graph model. We, as most others, avoid this

complicated method of proof, and only identify the limits in Theorem 4.1 (which is proved by other,

simpler, methods in [10]) with quantities for the branching process. Although this idea is not new,

we have, for the random graphs that we consider, not seen a detailed proof of it in the literature, so

for completeness we provide one in Appendix A.

Remark 4.4. If k = 2, then (4.4) yields

h(p) = λp −

and thus

∞

X

l=0

pl l p(1 − p)l−1 = λp − pg D′ (1 − p)

(4.8)

h(p) − λp 2 = p λ(1 − p) − g D′ (1 − p) .

It follows that b

p = 1 − ξ, where ξ is as in Theorem 3.1 and Remark 3.3; i.e., by Remark 3.4, b

p = ρ,

the survival probability of the branching process X with offspring distribution D∗ . (See Appendix A

for further explanations of this.)

We now easily derive results for the k-core in the percolation models. For simplicity, we consider

for site percolation only the case when all πk are equal; the general case is similar but the explicit

formulas are less nice.

Theorem 4.5. Consider the site percolation model G ∗ (n, d)π,v with 0 ≤ π ≤ 1, and suppose that

Condition 2.1 holds. Let k ≥ 2 be fixed, and let Core∗k be the k-core of G ∗ (n, d)π,v . Let

πc = πc(k) := inf

0<p≤1

λp2

h(p)

103

=

sup

0<p≤1

h(p)

λp2

−1

.

(4.9)

(i) If π < πc , then Core∗k has o p (n) vertices and o p (n) edges. Furthermore, if also k ≥ 3 and

Pn αd

i = O(n) for some α > 0, then Core∗ is empty w.h.p.

i=1 e

k

p=b

p(π) is the largest p ≤ 1 such

(ii) If π > πc , then w.h.p. Core∗k is non-empty. Furthermore, if b

2

−1

that h(p)/(λp ) = π , and b

p is not a local maximum point of h(p)/(λp2 ) in (0, 1], then

p

v(Core∗k )/n −→ πh1 (b

p) > 0,

p

v j (Core∗k )/n −→ π P(Dbp = j),

p

j ≥ k,

e(Core∗k )/n −→ πh(b

p)/2 = λb

p2 /2.

Theorem 4.6. Consider the bond percolation model G ∗ (n, d)π,e with 0 ≤ π ≤ 1, and suppose that

Condition 2.1 holds. Let k ≥ 2 be fixed, and let Core∗k be the k-core of G ∗ (n, d)π,e . Let πc = πc(k) be

given by (4.9).

(i) If π < πc , then Core∗k has o p (n) vertices and o p (n) edges. Furthermore, if also k ≥ 3 and

Pn αd

i = O(n) for some α > 0, then Core∗ is empty w.h.p.

i=1 e

k

(ii) If π > πc , then w.h.p. Core∗k is non-empty. Furthermore, if b

p=b

p(π) is the largest p ≤ 1 such

that h(p)/(λp2 ) = π−1 , and b

p is not a local maximum point of h(p)/(λp2 ) in (0, 1], then

p

p) > 0,

v(Core∗k )/n −→ h1 (b

p

v j (Core∗k )/n −→ P(Dbp = j),

p

j ≥ k,

e(Core∗k )/n −→ h(b

p)/2 = λb

p2 /(2π).

For convenience, we define ϕ(p) := h(p)/p2 , 0 < p ≤ 1.

Remark 4.7. Since h(p) is analytic in (0, 1), there is at most a countable number of local maximum

points of ϕ(p) := h(p)/p2 (except when h(p)/p2 is constant), and thus at most a countable number

of local maximum values of h(p)/p2 . Hence, there is at most a countable number of exceptional

values of π in part (ii) of Theorems 4.5 and 4.6. At these exceptional values, we have a discontinuity

of b

p(π) and thus of the relative asymptotic size πh1 (b

p(π)) or h1 (b

p(π)) of the k-core; in other words,

there is a phase transition of the k-core at each such exceptional π. (See Figures 1 and 2.) Similarly,

if ϕ has an inflection point at b

p(π), i.e., if ϕ ′ (b

p) = ϕ ′′ (b

p) = · · · = ϕ (2ℓ) (b

p) = 0 and ϕ (2ℓ+1) (b

p) < 0

for some ℓ ≥ 1, then b

p(π) and h1 (b

p(π)) are continuous but the derivatives of b

p(π) and h1 (b

p(π))

become infinite at this point, so we have a phase transition of a different type. For all other π > πc ,

the implicit function theorem shows that b

p(π) and h1 (b

p(π)) are analytic at π.

Say that p̃ is a critical point of ϕ if ϕ ′ (p̃) = 0, and a bad critical point if further, p̃ ∈ (0, 1), ϕ(p̃) > λ

and ϕ(p̃) > ϕ(p) for all p ∈ (p̃, 1). It follows that there is a 1–1 correspondence between phase

transitions in (πc , 1) or [πc , 1) and bad critical points p̃ of ϕ, with the phase transition occurring at

π̃ = λ/ϕ(p̃). This includes πc if and only if sup(0,1] ϕ(p) is attained and larger than λ, in which case

the last global maximum point is a bad critical point; if this supremum is finite not attained, then

there is another first-order phase transition at πc , while if the supremum is infinite, then πc = 0.

Finally, there may be a further phase transition at π̃ = 1 (with p̃ = 1); this happens if and only if

ϕ(1) = h(1) = λ and ϕ ′ (1) ≤ 0.

104

The phase transitions are first-order when the corresponding p̃ is a bad local maximum point of ϕ,

i.e., a bad critical point that is a local maximum point. (This includes πc when sup(0,1] ϕ is attained,

but not otherwise.) Thus, the phase transition that occur are typically first order, but there are

exceptions, see Examples 4.13 and 4.18.

Remark 4.8. The behaviour at π = πc depends on more detailed properties of the degree sequences

(n)

(di )1n , or equivalently of D̂n . Indeed, more precise results can be derived from Janson and Luczak

(n)

[11], Theorem 3.5, at least under somewhat stricter conditions on (di )1n ; in particular, it then follows that the width of the threshold is of the order n−1/2 , i.e., that there is a sequence πcn depending

(n)

on (di )1n , with πcn → πc , such that G ∗ (n, d)π,v and G ∗ (n, d)π,e w.h.p. have a non-empty k-core if

π = πcn + ω(n)n−1/2 with ω(n) → ∞, but w.h.p. an empty k-core if π = πcn − ω(n)n−1/2 , while

in the intermediate case π = πcn + cn−1/2 with −∞ < c < ∞, P(G ∗ (n, d)π has a non-empty k-core)

converges to a limit (depending on c) in (0, 1). We leave the details to the reader.

The same applies to further phase transitions that may occur.

Remark 4.9. If k = 2, then (4.8) yields

ϕ(p) := h(p)/p2 =

X

j≥2

p j j(1 − (1 − p) j−1 )/p,

which is decreasing on (0, 1] (or constant, when P(D > 2) = 0), with

X

sup ϕ(p) = lim ϕ(p) =

p j j( j − 1) = E D(D − 1) ≤ ∞.

p∈(0,1]

p→0

j

Hence

π(2)

c = λ/ E D(D − 1) = E D/ E D(D − 1),

coinciding with the critical value in (3.11) for a giant component.

Although there is no strict implication in any direction between “a giant component” and “a nonempty 2-core”, in random graphs these seem to typically appear together (in the form of a large

connected component of the 2-core), see Appendix A for branching process heuristics explaing this.

Remark 4.10. We see again that the results for site and bond percolation are almost identical. In fact, they become the same if we measure the size of the k-core in relation to the size

of the percolated graph G ∗ (n, d)π , since v(G ∗ (n, d)π,e ) = n but v(G ∗ (n, d)π,v ) ∼ Bi(n, π), so

p

v(G ∗ (n, d)π,v )/n −→ π. Again, this is heuristically explained by the branching process approximations; see Appendix A and note that random deletions of vertices or edges yield the same result

in the branching process, assuming that we do not delete the root.

Proof of Theorem 4.5. The case P(D ≥ k) = 0 is trivial; in this case h(p) = 0 for all p and

πc = 0 so (i) applies. Further, Theorem 4.1(i) applies to G ∗ (n, d), and the result follows from

Corek (G ∗ (n, d)π,v ) ⊆ Corek (G ∗ (n, d)). In the sequel we thus assume P(D ≥ k) > 0, which implies

h(p) > 0 and h1 (p) > 0 for 0 < p ≤ 1.

We apply Theorem 4.1 to the exploded graph G ∗ (ñ, d̃), recalling (4.1).

G ∗ (n, d)π,v , p̃ j = ζ−1 πp j for j ≥ 2 by (2.15), and thus

P( D̃ p = j) = ζ−1 π P(D p = j),

105

j ≥ 2,

For site percolation,

and, because k ≥ 2,

h̃(p) := E D̃ p 1[ D̃ p ≥ k] = ζ−1 πh(p),

h̃1 (p) := P( D̃ p ≥ k) = ζ

−1

(4.10)

πh1 (p).

(4.11)

Hence, the condition h̃(p) ≥ λ̃p2 can, using (2.18), be written

πh(p) ≥ λp 2 .

(4.12)

If π < πc , then for every p ∈ (0, 1], by (4.9), π < πc ≤ λp 2 /h(p) so (4.12) doesP

not hold and h̃(p) <

n

2

∗

λ̃p . Hence Theorem 4.1(i) applies to G (ñ, d̃), which proves (i); note that if i=1 eαdi = O(n) for

some α > 0, then also

ñ

X

i=1

eαd̃i ≤

n

X

i=1

eαdi + n+ eα ≤

n

X

eαdi + eα

i=1

X

jn j = O(n).

j≥1

If π > πc , then there exists p ∈ (0, 1] such that π > λp2 /h(p) and thus (4.12) holds and Theorem 4.1(ii) applies to G ∗ (ñ, d̃). Moreover, b

p in Theorem 4.1(ii) is the largest p ≤ 1 such that (4.12)

holds. Since πh(1) ≤ h(1) ≤ λ and h is continuous, we have equality in (4.12) for p = b

p, i.e.,

2

2

−1

πh(b

p) = λb

p , so b

p is as asserted the largest p ≤ 1 with h(p)/(λp ) = π .

Further, if b

p is a local maximum point of h̃(p) − λ̃p2 = ζ−1 (πh(p) − λp 2 ), then πh(p) − λp 2 ≤ 0 in

a neighbourhood of b

p and thus h(p)/(λp2 ) ≤ 1/π = h(b

p)/(λb

p2 ) there; thus b

p is a local maximum

2

point of h(p)/(λp ). Excluding such points, we obtain from Theorem 4.1(ii) using (4.1), (2.14),

(2.15), (2.18), (4.10) and (4.11),

v(Core∗k )

ñ v(Core∗k ) p

−→ ζh̃1 (b

p) = πh1 (b

p),

·

ñ

n

n

v j (Core∗k )

ñ v j (Core∗k ) p

−→ ζ P( D̃bp = j) = π P(Dbp = j),

= ·

ñ

n

n

e(Core∗k )

λ̃b

p2

λb

p2

ñ e(Core∗k ) p

−→ ζ

= ·

=

.

ñ

n

n

2

2

=

j ≥ k,

This proves the result when b

p is not a local maximum point of h(p)/(λp2 ). In particular, since

∗

h1 (b

p) > 0, Corek is non-empty w.h.p. when π > πc is not a local maximum value of h(p)/(λp2 ).

Finally, even if π is such a local maximum value, we can find π′ with πc < π′ < π that is not,

because by Remark 4.7 there is only a countable number of exceptional π. By what we just have

shown, G ∗ (n, d)π′ ,v has w.h.p. a non-empty k-core, and thus so has G ∗ (n, d)π,v ⊇ G ∗ (n, d)π′ ,v .

Proof of Theorem 4.6. We argue as in the proof just given of Theorem 4.6, again using (4.1) and

applying Theorem 4.1 to the exploded graph G ∗ (ñ, d̃). We may again assume P(D ≥ k) > 0, and

thus h(p) > 0 and h1 (p) > 0 for 0 < p ≤ 1. We may further assume π > 0.

The main difference from the site percolation case is that for bond percolation G ∗ (n, d)π,e , (2.27)

yields

j ≥ 2,

P( D̃ = j) = ζ−1 P(Dπ1/2 = j),

and hence

P( D̃ p = j) = ζ−1 P(D pπ1/2 = j),

106

j ≥ 2,

(4.13)

and thus

h̃(p) := E D̃ p 1[ D̃ p ≥ k] = ζ−1 h(pπ1/2 ),

h̃1 (p) := P( D̃ p ≥ k) = ζ

−1

h1 (pπ

1/2

(4.14)

).

(4.15)

Consequently, the condition h̃(p) ≥ λ̃p2 can, using (2.18), be written as h(pπ1/2 ) ≥ λp 2 , or

2

πh(pπ1/2 ) ≥ λ pπ1/2 .

(4.16)

If π < πc , then for every p ∈ (0, 1] we have pπ1/2 ∈ (0, 1] and thus by (4.9)

π < πc ≤

λ(pπ1/2 )2

h(pπ1/2 )

so (4.16) does not hold and h̃(p) < λ̃p2 . Hence Theorem 4.1(i) applies to G ∗ (ñ, d̃) as in the proof

of Theorem 4.5.

If π > πc , then there exists p ∈ (0, 1] such that π > λp2 /h(p) and, as before, b

p is the largest such p

2

−1

1/2

and satisfies h(b

p)/(λb

p ) = π . Furthermore, if π < p ≤ 1, then

πh(p) ≤ πh(1) ≤ πλ < λp 2 ,

(4.17)

and thus p 6= b

p. Hence b

p ≤ π1/2 . Let b

p0 := b

p/π1/2 . Then b

p0 ∈ (0, 1] and b

p0 is the largest p ≤ 1 such

2

that (4.16) holds; i.e., the largest p ≤ 1 such that h̃(p) ≥ λ̃p . We thus can apply Theorem 4.1(ii)

to G ∗ (ñ, d̃), with b

p replaced by b

p0 , noting that if b

p0 is a local maximum point of h̃(p) − λ̃p2 , then b

p

is a local maximum point of

h̃(pπ−1/2 ) − λ̃(pπ−1/2 )2 = ζ−1 h(p) − π−1 λp2

and thus of πh(p) − λp 2 , which as in the proof of Theorem 4.5 implies that b

p is a local maximum

point of h(p)/(λp2 ). (The careful reader may note that there is no problem with the special case

b

p0 = 1, when we only consider a one-sided maximum at b

p0 : in this case b

p = π1/2 and πh(b

p) =

2

λb

p = λπ so h(b

p) = λ and b

p = 1, π = 1.) Consequently, when b

p is not a local maximum point of

h(p)/(λp2 ), Theorem 4.1(ii) yields, using (2.26), (4.13), (4.15),

v(Core∗k )

ñ v(Core∗k ) p

−→ ζh̃1 (b

p0 ) = h1 (b

p),

·

ñ

n

n

v j (Core∗k )

ñ v j (Core∗k ) p

−→ ζ P( D̃bp0 = j) = P(Dbp = j),

= ·

ñ

n

n

e(Core∗k )

λ̃b

p2

λb

p2

ñ e(Core∗k ) p

−→ ζ 0 =

= ·

.

ñ

n

n

2

2π

=

j ≥ k,

The proof is completed as before.

Consider now what Theorems 4.5 and 4.6 imply for the k-core as π increases from 0 to 1. (We

will be somewhat informal; the statements below should be interpreted as asymptotic as n → ∞ for

p

fixed π, but we for simplicity omit “w.h.p.’’ and “−→”.)

If k = 2, we have by Remark 4.9 a similar behaviour as for the giant component in Section 3: in the

interesting case 0 < πc < 1, the 2-core is small, o(n), for π < πc and large, Θ(n), for π > πc , with

107

p(π)) that is a continuous function of π also at πc and analytic everywhere else in

a relative size h1 (b

(0, 1), cf. Theorem 3.11.

Assume now k ≥ 3. For the random graph G(n, p) with p = c/n, the classical result by Pittel,

Spencer and Wormald [17] shows that there is a first-order (=discontinuous) phase transition at

some value ck ; for c < ck the k-core is empty and for c > ck it is non-empty and with a relative

size ψk (c) that jumps to a positive value at c = ck , and thereafter is analytic. We can see this as a

percolation result, choosing a large λ and regarding G(n, c/n) as obtained by bond percolation on

G(n, λ/n) with π = c/λ for c ∈ [0, λ]; G(n, λ/n) is not exactly a random graph of the type G ∗ (n, d)

studied in the present paper, but as said in the introduction, it can be treated by our methods by

conditioning on the degree sequence, and it has the asymptotic degree distribution D ∼ Po(λ). In

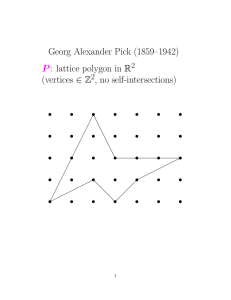

this case, see Example 4.11 and Figure 1, ϕ is unimodal, with ϕ(0) = 0, a maximum at some interior

point p0 ∈ (0, 1), and ϕ ′ < 0 on (p0 , 1). This is a typical case; ϕ has these properties for many other

degree distributions too (and k ≥ 3), and these properties of ϕ imply by Theorems 4.5 and 4.6 that

there is, provided ϕ(p0 ) > λ, a first-order phase transition at π = πc = λ/ϕ(p0 ) where the k-core

suddenly is created with a positive fraction h1 (p0 ) of all vertices, but no other phase transitions since

h1 (b

p(π)) is analytic on (πc , 1). Equivalently, recalling Remark 4.7, we see that p0 is the only bad

critical point of ϕ.

However, there are other possibilities too; there may be several bad critical points of ϕ, and thus

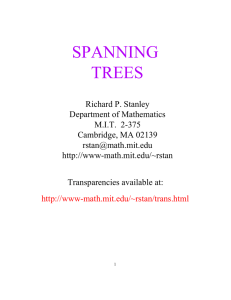

several phase transitions of ϕ. There may even be an infinite number of them. We give some

examples showing different possibilities that may occur. (A similar example with several phase

transitions for a related hypergraph process is given by Darling, Levin and Norris [6].)

30

25

20

15

10

5

0

0.2

0.4

0.6

0.8

1

Figure 1: ϕ(p) = h(p)/p2 for D ∼ Po(10) and k = 3.

Example 4.11. A standard case is when D ∼ Po(λ) and k ≥ 3. (This includes, as said above, the case

G(n, λ/n) by conditioning on the degree sequence, in which case we recover the result by [17].)

Then D p ∼ Po(λp) and a simple calculation shows that h(p) = λp P(Po(λp) ≥ k − 1), see [10, p.

108

40

35

30

25

20

15

0

0.2

0.4

0.6

0.8

1

Figure 2: ϕ(p) = h(p)/p2 for k = 3 and p10i = 99 · 10−2i , i = 1, 2, . . . , (p j = 0 for all other j). Cf.

Example 4.15.

59]. Hence, if ck := minµ>0 µ/ P(Po(µ) ≥ k − 1) and λ > c k , then πc = inf0<p≤1 λp2 /h(p) =

ck /λ. Moreover, it is easily shown that h(p)/p2 is unimodal, see [10, Lemma 7.2] and Figure 1.

Consequently, there is as discussed above a single first-order phase transition at π = ck /λ [17].

Example 4.12. Let k = 3 and consider graphs with only two vertex degrees, 3 and m, say, with

m ≥ 4. Then, cf. (4.4),

h(p) = 3p3 P(D p = 3 | D = 3) + pm E(D p − D p 1[D p ≤ 2] | D = m)

= 3p3 p3 + pm mp − mp(1 − p)m−1 − m(m − 1)p 2 (1 − p)m−2 .

Now, let p3 := 1 − a/m and pm := a/m, with a > 0 fixed and m ≥ a, and let m → ∞. Then, writing

h = hm , hm (p) → 3p3 + ap for p ∈ (0, 1] and thus

ϕm (p) :=

hm (p)

p2

→ ϕ∞ (p) := 3p +

a

p

.

′

′

Since ϕ∞

(1) = 3 − a, we see that if we choose a = 1, say, then ϕ∞

(1) > 0. Furthermore, then

3

′

′

(1), it follows that

ϕ∞ (1/4) = 4 + 4 > ϕ∞ (1) = 4. Since also ϕm (1) = 3p3 − mpm = 3p3 − a → ϕ∞

′

if m is large enough, then ϕm (1) > 0 but ϕm (1/4) > ϕm (1). We fix such an m and note that ϕ = ϕm

is continuous on [0,1] with ϕ(0) = 0, because the sum in (4.2) is finite with each term O(p3 ).

Let p̃0 be the global maximum point of ϕ in [0,1]. (If not unique, take the largest value.) Then,

by the properties just shown, p̃0 6= 0 and p̃0 6= 1, so p̃0 ∈ (0, 1); moreover, 1 is a local maximum

point but not a global maximum point. Hence, πc = λ/ϕ(p̃0 ) is a first-order phase transition where

the 3-core suddenly becomes non-empty and containing a positive fraction h1 (p̃0 ) of all (remaining)

vertices. There is another phase transition at π = 1. We have ϕ(1) = h(1) = λ, but since ϕ ′ (1) > 0,

109

p(π) ր p̃1 and h(b

p(π)) ր

if p̃1 := sup{p < 1 : ϕ(p) > ϕ(1)}, then p̃1 < 1. Hence, as π ր 1, b

h1 (p̃1 ) < 1. Consequently, the size of the 3-core jumps again at π = 1.

For an explicit example, numerical calculations (using Maple) show that we can take a = 1 and

m = 12, or a = 1.9 and m = 6.

Example 4.13. Let k = 3 and let D be a mixture of Poisson distributions:

X

P(D = j) = p j =

qi P(Po(λi ) = j),

j ≥ 0,

(4.18)

i

for some finite or infinite sequences (qi ) and (λi ) with qi ≥ 0,

D ∼ Po(λ) we have, cf. Example 4.11, D p ∼ Po(λp) and thus

P

i

qi = 1 and λi ≥ 0. In the case

h(p) = E D p − P(D p = 1) − 2 P(D p = 2) = λp − λpe −λp − (λp)2 e−λp

= (λp)2 f (λp),

where f (x) := 1 − (1 + x)e−x /x. Consequently, by linearity, for D given by (4.18),

X

h(p) =

qi (λi p)2 f (λi p),

(4.19)

i

and thus

ϕ(p) =

X

qi λ2i f (λi p).

(4.20)

i

−2

i

−2i

As a specific

P example, take λi = 2 and qi = λi = 2 , i ≥ 1, and add q0 = 1 −

to make qi = 1. Then

∞

X

f (2i p).

ϕ(p) =

P

i≥1 qi

and λ0 = 0

(4.21)

i=1

Note that f (x) = O(x) and f (x) = O(x −1 ) for 0 < x < ∞. Hence, the sum in (4.21) converges

uniformly on every compact interval [δ, 1]; moreover, if we define

ψ(x) :=

∞

X

f (2i x),

(4.22)

i=−∞

then the sum converges uniformly on compact intervals of (0, ∞) and |ϕ(p)−ψ(p)| = O(p). Clearly,

ψ is a multiplicatively periodic function on (0, ∞): ψ(2x)

compute the Fourier series of

P∞ = ψ(x); we

2πin y

b

with, using integration by

the periodic function ψ(2 y ) on R and find ψ(2 y ) = n=−∞ ψ(n)e

110

parts,

Z

Z

1

b

ψ(n)

=

y

ψ(2 )e

−2πin y

0

=

=

=

=

j+ y

)e

−2πin y

1

ln 2

f (2 j 2 y )e−2πin y d y

∞

0

∞

f (2 y )e−2πin y d y

−∞

f (x)x −2πin/ ln 2

Z

dy =

0

j=−∞

∞

0

=

Z

1

f (2

Z

∞

X

0 j=−∞

Z

∞

X

1

dy =

dx

x ln 2

1 − (1 + x)e−x x −2πin/ ln 2−2 dx

Z

1

ln 2(2πin/ ln 2 + 1)

Γ(1 − 2πin/ ln 2)

ln 2 + 2πin

∞

x e−x x −2πin/ ln 2−1 dx

0

.

Since these Fourier coefficients are non-zero, we see that ψ is a non-constant continuous function

on (0, ∞) with multiplicative period 2. Let a > 0 be any point that is a global maximum of ψ,

let b ∈ (a, 2a) be a point that is not, and let I j := [2− j−1 b, 2− j b]. Then ψ attains its global

maximum at the interior point 2− j a in I j , and since ϕ(p) − ψ(p) = O(2− j ) for p ∈ I j , it follows

that if j is large enough, then ϕ(2− j a) > max(ϕ(2− j−1 b), ϕ(2− j b)). Hence, if the maximum of ϕ

on I j is attained at p̃ j ∈ I j (choosing the largest maximum point if it is not unique), then, at least

for large j, p̃ j is in the interior of I j , so p̃ j is a local maximum point of ϕ. Further, as j → ∞,

P

P∞

b

ϕ(p̃ j ) → max ψ > ψ(0)

= 1/ ln 2 while λ := E D = i qi λi = 1 2−i = 1, so ϕ(p̃ j ) > λ for large j.

Moreover, p̃ j /2 ∈ I j+1 , and since (4.21) implies

ϕ(p/2) =

∞

X

i=1

f (2

i−1

p) =

∞

X

f (2i p) > ϕ(p),

p > 0,

(4.23)

i=0

thus ϕ(p̃ j ) < ϕ(p̃ j /2) ≤ ϕ(p̃ j+1 ). It follows that if p ∈ I i for some i < j, then ϕ(p) ≤ ϕ(p̃i ) < ϕ(p̃ j ).

Consequently, for large j at least, p̃ j is a bad local maximum point, and thus there is a phase

transition at π j := λ/ϕ(p̃ j ) ∈ (0, 1). This shows that there is an infinite sequence of (first-order)

phase transitions.

Further, in this example ϕ is bounded (with sup ϕ = max ψ), and thus πc > 0. Since, by (4.23),

sup ϕ is not attained, this is an example where the phase transition at πc is continuous and not firstorder; simple calculations show that as π ց πc , b

p(π) = Θ(π − πc ) and h1 (b

p(π)) = Θ((π − πc )2 ).

b

Because of the exponential decrease of |Γ(z)| on the imaginary axis, |ψ(n)|

is very small for n 6= 0;

−6

b

b

we have |ψ(±1)| ≈ 0.78 · 10 and the others much smaller, so ψ(x) deviates from its mean ψ(0)

=

1/ ln 2 ≈ 1.44 by less than 1.6 · 10−6 . The oscillations of ψ and ϕ are thus very small and hard

to observe numerically or graphically unless a large precision is used. (Taking e.g. λi = 10i yields

larger oscillations.)

Note also that in this example, ϕ is not continuous at p = 0; ϕ(p) is bounded but does not converge

as p ց 0. Thus h is not analytic at p = 0.

111

Example 4.14. Taking λi = 2i as in Example 4.13 but modifying qi to 2(ǫ−2)i for some small ǫ > 0,

similar calculations show that pǫ ϕ(p) = ψǫ (p) + O(p) for a non-constant function ψǫ with multiplicative period 2, and it follows again that, at least if ǫ is small enough, there is an infinite number

of phase transitions. In this case, ϕ(p) → ∞ as p → 0, so πc = 0.

Since ϕ is analytic on (0, 1], if there is an infinite number of bad critical points, then we may

order them (and 1, if ϕ ′ (1) ≤ 0 and ϕ(1) = λ) in a decreasing sequence p̃1 > p̃2 > . . . , with

p̃ j → 0. It follows from the definition of bad critical points that then ϕ(p̃1 ) < ϕ(p̃2 ) < . . . , and

sup0<p≤1 ϕ(p) = sup j ϕ(p̃ j ) = lim j→∞ ϕ(p̃ j ). Consequently, if there is an infinite number of phase

transitions, they occur at {π j }∞

1 ∪ {πc } for some decreasing sequence π j ց πc ≥ 0.

Example 4.15. We can modify 4.13 and 4.14 and consider random graphs where all vertex degrees

di are powers of 2; thus D has support on {2i }. If we choose p2i ∼ 2−2i or p2i ∼ 2(ǫ−2)i suitably,

the existence of infinitely many phase transitions follows by calculations similar to the ones above.

(But the details are a little more complicated, so we omit them.) A similar example concentrated on

{10i } is shown in Figure 2.

Example 4.16. We may modify Example 4.13 by conditioning D on D ≤ M for some large M .

If we denote the corresponding h and ϕ by h M and ϕ M , it is easily seen that h M → h unformly

on [0,1] as M → ∞, and thus ϕ M → ϕ uniformly on every interval [δ, 1]. It follows that if we

consider N bad local maximum points of ϕ, then there are N corresponding bad local maximum

points of ϕ M for large M , and thus at least N phase transitions. This shows that we can have any

(n)

finite number of phase transitions with degree sequences (di )1n where the degrees are uniformly

bounded. (Example 4.17 shows that we cannot have infinitely many phase transitions in this case.)

Example 4.17. In Examples 4.13 and 4.14 with infinitely many phase transitions, we have E D2 =

P

2

2

i qi (λi + λi ) = ∞. This is not a coincidence; in fact, we can show that: If E D < ∞, then the

k-core has only finite number of phase transitions.

This is trivial for k = 2, when there never is more than one phase transition. Thus, assume k ≥ 3. If

k = 3, then, cf. (4.4),

X

ϕ(p) = h(p)/p2 =

l pl p − p(1 − p)l−1 − (l − 1)p 2 (1 − p)l−2 p−2

l≥3

=

X

l≥3

l pl

1 − (1 − p)l−1

p

− (l − 1)(1 − p)l−2 .

Each term in the sum is non-negative and bounded by l pl 1−(1−p)l−1 /p ≤ l pl (l−1), and as p → 0

it converges to l pl (l − 1 − (l − 1)) = 0. Hence, by dominated convergence, using the assumption

P

pl l(l − 1) = E D(D − 1) < ∞, we have ϕ(p) → 0 as p → 0. For k > 3, h(p) is smaller than for

k = 3 (or possibly the same), so we have the same conclusion. Consequently, ϕ is continuous on

[0, 1] and has a global maximum point p0 in (0,1]. Every bad critical point has to belong to [p0 , 1].

Since ϕ is analytic on [p0 , 1], it has only a finite number of critical points there (except in the trivial

case ϕ(p) = 0), and thus there is only a finite number of phase transitions.

Example 4.18. We give an example of a continuous (not first-order) phase transition, letting D be

a mixture as in Example 4.13 with two components and carefully chosen weights q1 and q2 .

Let f be as in Example 4.13 and note that f ′ (x) ∼ 1/2 as x → 0 and f ′ (x) ∼ −x −2 as x → ∞.

Hence, for some a, A ∈ (0, ∞), 14 < f ′ (x) < 1 for 0 < x ≤ 4a and 21 x −2 ≤ − f ′ (x) ≤ 2x −2 for

112

x ≥ A. Let f1 (x) := f (Ax) and f2 (x) := f (ax). Then, f1′ (x) < 0 for x ≥ 1. Further, if g(x) :=

f2′ (x)/| f1′ (x)| = (a/A) f ′ (ax)/| f ′ (Ax)|, then

a f ′ (a)

a

= 2aA,

A · A−2 /2

a/4

g(4) =

>

= 2aA,

′

A| f (4A)| A · 2(4A)−2

g(1) =

′ (A)|

A| f

a f ′ (4a)

<

and thus g(1) < g(4). Further, if x ≥ A/a, then f2′ (x) < 0 and thus g(x) < 0. Consequently,

sup x≥1 g(x) = max1≤x≤A/a g(x) < ∞, and if x 0 is the point where the latter maximum is attained

(choosing the largest value if the maximum is attained at several points), then 1 < x 0 < ∞ and

g(x) < g(x 0 ) for x > x 0 . Let β := g(x 0 ) and

ψ(x) := β f 1 (x) + f2 (x) = β f (Ax) + f (ax).

(4.24)

Then ψ′ (x) ≤ 0 for x ≥ 1, ψ′ (x 0 ) = 0 and ψ′ (x 0 ) < 0 for x > x 0 .

Let b be large, to be chosen later, and let D be as in Example 4.13 with q1 := β a2 /(β a2 + A2 ),

q2 := 1 − q1 , λ1 := bA, λ2 := ba. Then, by (4.20),

ϕ(p) = q1 (bA)2 f (bAp) + q2 (ba)2 f (bap) =

b2 a2 A2

β a2 + A2

ψ(bp).

(4.25)

Hence, ϕ ′ (x 0 /b) = 0, ϕ ′ (x) ≤ 0 for x ≥ 1/b and ϕ ′ (x) < 0 for x > x 0 /b. Consequently, x 0 /b

is a critical point but not a local maximum point. Furthermore, ϕ(x 0 /b) = q1 b2 A2 f (Ax 0 ) +

q2 b2 a2 f (ax 0 ) and λ := E D = q1 λ1 + q2 λ2 = b(q1 A + q2 a); hence, if b is large enough, then

ϕ(x 0 /b) > λ. We choose b such that this holds and b > x 0 ; then p̃ := x 0 /b is a bad critical point

which is an inflection point and not a local maximum point. Hence there is a continuous phase

transition at π̃ := λ/ϕ(p̃) ∈ (πc , 1).

We have ϕ ′ (p̃) = ϕ ′′ (p̃) = 0; we claim that, at least if A is chosen large enough, then ϕ ′′′ (p̃) 6= 0.

This implies that, for some c1 , c2 , c3 > 0, ϕ(p) − ϕ(p̃) ∼ −c1 (p − p̃)3 as p → p̃, and b

p(π) − b

p(π̃) ∼

c2 (π − π̃)1/3 and h1 (b

p(π)) − h1 (b

p(π̃)) ∼ c3 (π − π̃)1/3 as π → π̃, so the critical exponent at π̃ is 1/3.

To verify the claim, note that if also ϕ ′′′ (p̃) = 0, then by (4.25) and (4.24), ψ′ (x 0 ) = ψ′′ (x 0 ) =

ψ′′′ (x 0 ) = 0„ and thus

βA j f ( j) (Ax 0 ) + a j f ( j) (ax 0 ) = 0,

j = 1, 2, 3.

(4.26)

Let x 1 := Ax 0 and x 2 := ax 0 . Then x 1 ≥ A, and f ′ (x 2 ) > 0 so x 2 ≤ C for some C. Further, (4.26)

yields

x 12 f ′′′ (x 1 )

x 22 f ′′′ (x 2 )

x 1 f ′′ (x 1 )

x 2 f ′′ (x 2 )

=

and

=

.

(4.27)

f ′ (x 2 )

f ′ (x 1 )

f ′ (x 2 )

f ′ (x 1 )

Recall that x 1 and x 2 depend on our choices of a and A, and that we always can decrease a and

increase A. Keep a fixed and let A → ∞ (along some sequence). Then x 1 → ∞ but x 2 = O(1), so

by selecting a subsequence we may assume x 2 → y ≥ 0. As x → ∞, f ′ (x) ∼ −x −2 , f ′′ (x) ∼ 2x −3 ,

and f ′′′ (x) ∼ −6x −4 . Hence, if (4.27) holds for all large A (or just a sequence A → ∞), we obtain

by taking the limit

y f ′′ ( y)

f ′ ( y)

= lim

x→∞

x f ′′ (x)

f ′ (x)

= −2

and

113

y 2 f ′′′ ( y)

f ′ ( y)

= lim

x→∞

x 2 f ′′′ (x)

f ′ (x)

= 6.

Finally, let F (x) := x f (x) = 1 − (1 + x)e−x . Then F ′′ ( y) = y f ′′ ( y) + 2 f ′ ( y) = 0 and F ′′′ ( y) =

y f ′′′ ( y) + 3 f ′′ ( y) = 6 y −1 f ′ ( y) − 6 y −1 f ′ ( y) = 0. On the other hand, F ′ (x) = x e−x , F ′′ (x) =

(1 − x)e−x , F ′′′ (x) = (x − 2)e−x , so there is no solution to F ′′ ( y) = y F ′′′ ( y) = 0. This contradiction

finally proves that ϕ ′′′ (p̃) 6= 0, at least for large A.

5 Bootstrap percolation in random regular graphs

Bootstrap percolation on a graph G is a process that can be regarded as a model for the spread of an

infection. We start by infecting a subset A0 of the vertices; typically we let A0 be a random subset

of the vertex set V (G) such that each vertex is infected with some given probability q, independently

of all other vertices, but other choices are possible, including a deterministic choice of A0 . Then, for

some given threshold ℓ ∈ N, every uninfected vertex that has at least ℓ infected neighbours becomes

infected. (Infected vertices stay infected; they never recover.) This is repeated until there are no

(ℓ)

further infections. We let A f = A f be the final set of infected vertices. (This is perhaps not a good

model for infectious diseases, but may be reasonable as a model for the spread of rumors or beliefs:

you are skeptical the first time you hear something but get convinced the ℓth time.)

Bootstrap percolation is more or less the opposite to taking the k-core. For regular graphs, there

is an exact correspondence: it is easily seen that if the common vertex degree in G is d, then

(ℓ)

the set V (G) \ A f of finally uninfected vertices equals the (d + 1 − ℓ)-core of the set V (G) \

A0 of initially uninfected vertices. Furthermore, if the initial infection is random, with vertices

infected independently with a common probability q, then the initial infection can be seen as a site