MATH 409 Advanced Calculus I Paul Skoufranis April 29, 2016


MATH 409

Advanced Calculus I

Paul Skoufranis

April 29, 2016

ii

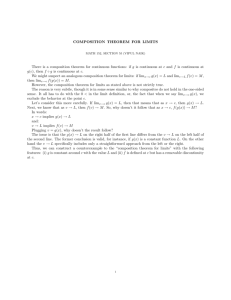

Preface:

This is the first edition of these lecture notes for MATH 409. Consequently, there may be several typographical errors. Furthermore, these notes will not contain much additional material outside the topics covered in class. However, due to time constraints, some subsections may be skipped in class. We leave those subsections as part of these notes for the curious student, but students will not be responsible for those sections.


Contents

1 Axioms of Number Systems

1.1 Set Notation

1.2 The Natural Numbers

1.2.1 Peano’s Axioms

1.2.2 The Principle of Mathematical Induction

1.2.3 The Well-Ordering Principle

1.3 The Real Numbers

1.3.1 Fields

1.3.2 Partially Ordered Sets

1.3.3 The Triangle Inequality

1.3.4 The Least Upper Bound Property

1.3.5 Constructing the Real Numbers

2 Sequences of Real Numbers

2.1 The Limit of a Sequence

2.1.1 Definition of a Limit

2.1.2 Uniqueness of the Limit

2.2 The Monotone Convergence Theorem

2.3 Limit Theorems

2.3.1 Limit Arithmetic

2.3.2 Diverging to Infinity

2.3.3 The Squeeze Theorem

2.3.4 Limit Supremum and Limit Infimum

2.4 The Bolzano–Weierstrass Theorem

2.4.1 Subsequences

2.4.2 The Peak Point Lemma

2.4.3 The Bolzano–Weierstrass Theorem

3 An Introduction to Topology

3.1 Completeness of the Real Numbers

3.1.1 Cauchy Sequences

3.1.2 Convergence of Cauchy Sequences

3.2 Topology of the Real Numbers

3.2.1 Open Sets

3.2.2 Closed Sets

3.3 Compactness

3.3.1 Definition of Compactness

3.3.2 The Heine–Borel Theorem

3.3.3 Sequential Compactness

3.3.4 The Finite Intersection Property

4 Cardinality of Sets

4.1 Functions

4.1.1 The Axiom of Choice

4.1.2 Bijections

4.2 Cardinality

4.2.1 Definition of Cardinality

4.2.2 Cantor–Schröder–Bernstein Theorem

4.2.3 Countable and Uncountable Sets

4.2.4 Zorn’s Lemma

4.2.5 Comparability of Cardinals

5 Continuity

5.1 Limits of Functions

5.1.1 Definition of a Limit

5.1.2 Limit Theorems for Functions

5.1.3 One-Sided Limits

5.1.4 Limits at and to Infinity

5.2 Continuity of Functions

5.3 The Intermediate Value Theorem

5.4 Uniform Continuity

6 Differentiation

6.1 The Derivative

6.1.1 Definition of a Derivative

6.1.2 Rules of Differentiation

6.2 Inverse Functions

6.2.1 Monotone Functions

6.2.2 Inverse Function Theorem

6.3 Extreme Values of Functions

6.4 The Mean Value Theorem

6.4.1 Proof of the Mean Value Theorem

6.4.2 Anti-Derivatives

6.4.3 Monotone Functions and Derivatives

6.4.4 L’Hôpital’s Rule

6.4.5 Taylor’s Theorem

7 Integration

7.1 The Riemann Integral

7.1.1 Riemann Sums

7.1.2 Definition of the Riemann Integral

7.1.3 Some Integrable Functions

7.1.4 Properties of the Riemann Integral

7.2 The Fundamental Theorems of Calculus

Chapter 1

Axioms of Number Systems

To discuss advanced calculus, we must return to many of the basic structures that are taken for granted in previous courses. In particular, what exactly are the natural numbers and the real numbers, and what properties of these number systems may we use?

1.1 Set Notation

All mathematics must contain some notation in order for one to adequately

describe the objects of study. As such, we begin by developing the notation

for one of the ‘simplest’ constructs in mathematics.

Heuristic Definition. A set is a collection of distinct objects.

Our first task is to develop notation to adequately describe sets and symbols to represent sets that will be common in this course. The following table lists several sets, the symbol used to represent each set, and a way to describe each set in set notation.

| Set | Symbol | Set Notation |
|---|---|---|
| natural numbers | N | {1, 2, 3, 4, . . .} |
| integers | Z | {0, 1, −1, 2, −2, 3, −3, . . .} |
| real numbers | R | {real numbers} |
| rational numbers | Q | $\{\frac{a}{b} \mid a, b \in \mathbb{Z},\ b \neq 0\}$ |

Notice two different types of notation are used in the above table to describe

sets: namely {objects} and {objects | conditions on the objects}. Furthermore, the symbol ∅ will denote the empty set; that is, the set with no

elements.

Given a set X and an object x, we need notation to describe when x belongs to X. In particular, we say that x is an element of X, denoted x ∈ X, when x is one of the objects that make up X. We write x ∉ X when x is not an element of X. For example, √2 ∈ R yet √2 ∉ Q, and 0 ∈ Z but 0 ∉ N. Furthermore, given two sets X and Y, we say that Y is a subset of X, denoted Y ⊆ X, if each element of Y is an element of X; that is, if a ∈ Y then a ∈ X. For example, N ⊆ Z ⊆ Q ⊆ R. Note also that if X ⊆ Y and Y ⊆ X, then X = Y.

Given two sets X and Y , there are various operations one can perform

on these two sets. Three such operations are as follows:

• The union of X and Y , denoted X ∪ Y , is the set

X ∪ Y = {a | a ∈ X or a ∈ Y };

that is, the union of X and Y consists of joining the two sets into one.

• The intersection of X and Y , denoted X ∩ Y , is the set

X ∩ Y = {a | a ∈ X and a ∈ Y };

that is, the intersection of X and Y is the set of elements contained in

both X and Y .

• The set difference of X and Y, denoted X \ Y, is the set

X \ Y = {a | a ∈ X and a ∉ Y};

that is, the set of all elements of X that are not elements of Y.

For example, if X = {1, 2, 3} and Y = {2, 4, 6}, then

X ∪ Y = {1, 2, 3, 4, 6},

X ∩ Y = {2},

and

X \ Y = {1, 3}.
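Python’s built-in `set` type supports these three operations directly (`|` for union, `&` for intersection, `-` for set difference), so the example above can be reproduced in a few lines. This is only an illustration with small finite sets; most sets in this course, such as R, cannot be stored this way.

```python
# The sets X and Y from the example above, as Python sets.
X = {1, 2, 3}
Y = {2, 4, 6}

union = X | Y          # X ∪ Y: elements in X or in Y
intersection = X & Y   # X ∩ Y: elements in both X and Y
difference = X - Y     # X \ Y: elements of X that are not in Y

print(union, intersection, difference)
```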

In this course, we will often have a set X (usually R) and will be considering subsets of X. Consequently, given a subset Y of X, the set difference X \ Y will be called the complement of Y (in X) and will be denoted $Y^c$ for convenience.

Sets will play an important role in this course. However, one important question has not been addressed: “What exactly is a set?” This question must be asked since we have not provided a rigorous definition of a set. It leads to some interesting questions, such as, “Does the collection of all sets form a set?”

Let us suppose that there is a set of all sets; that is, suppose

Z = {X | X is a set}

makes sense. Note that Z has the interesting property that Z ∈ Z. Furthermore, if Z exists, then

Y = {X | X is a set and X ∉ X}

would be a valid subset of Z. However, we clearly have two disjoint cases: either Y ∈ Y or Y ∉ Y (that is, either Y is an element of Y or Y is not an element of Y).

If Y ∈ Y, then the definition of Y implies Y ∉ Y, which is a contradiction since we cannot have both Y ∈ Y and Y ∉ Y. Thus Y ∈ Y is false, so it must be the case that Y ∉ Y.

However, Y ∉ Y implies, by the definition of Y, that Y ∈ Y. Again this is a contradiction since we cannot have both Y ∉ Y and Y ∈ Y. This argument is known as Russell’s Paradox and demonstrates that there cannot be a set of all sets.

The above paradox illustrates the necessity of a rigorous definition of a set. However, such a definition takes us beyond the scope of this class. Instead, we will focus on two questions that are easy to overlook: “What are the natural numbers?” and “How do we define the natural numbers?”

1.2 The Natural Numbers

As seen through Russell’s Paradox, rigorous definitions are required to prevent misconceptions about the objects we desire to study. As such, we need to discuss what exactly the natural numbers are.

1.2.1 Peano’s Axioms

The following, known as Peano’s Axioms, completely characterize the natural

numbers.

Definition 1.2.1. The natural numbers, denoted N, are the unique number

system satisfying the following five axioms:

1. There is a number, denoted 1, such that 1 ∈ N.

2. For each number n ∈ N, there is a number S(n) ∈ N called the successor

of n (i.e. S(n) = n + 1).

3. The number 1 is not the successor of any number in N.

4. If m, n ∈ N and S(n) = S(m), then n = m.

5. (Induction Axiom) If X ⊆ N has the properties

(a) 1 ∈ X, and

(b) if k ∈ N and k ∈ X, then S(k) ∈ X,

then X = N.

Each of the above five axioms is necessary. The following examples demonstrate the necessity of the third, fourth, and fifth axioms.

Example 1.2.2. Consider the set X = {1, 2} where we define S(1) = 2

and S(2) = 1. One may verify that X satisfies all but the third of Peano’s

Axioms.

Example 1.2.3. Consider the set X = {1, 2} where we define S(1) = 2 and

S(2) = 2. One may verify that X satisfies all but the fourth of Peano’s

Axioms.

Example 1.2.4. Consider the set N² = {(n, m) | n, m ∈ N} where we define 1 = (1, 1) and S(n, m) = (n + 1, m + 1). One may verify that N² satisfies all but the fifth of Peano’s Axioms since X = {(n, n) | n ∈ N} has properties (a) and (b) but is not all of N².
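For finite systems such as those in Examples 1.2.2 and 1.2.3, the third, fourth, and fifth axioms can be checked mechanically. The helper `check_peano` below is a hypothetical illustration, not part of the notes: it assumes the first two axioms hold and that the successor function is supplied as a Python dictionary. For the fifth axiom it uses the fact that the smallest subset containing 1 and closed under S is the orbit 1, S(1), S(S(1)), . . . ; such a check cannot handle infinite systems like the one in Example 1.2.4.

```python
def check_peano(X, one, S):
    """Report whether Peano's Axioms 3, 4, and 5 hold for a finite system.

    X   -- a finite set of objects
    one -- the element playing the role of 1
    S   -- a dict giving the successor S(n) of each n in X
    Axioms 1 and 2 are assumed: one is in X and S maps X into X.
    """
    # Axiom 3: `one` is not the successor of any element.
    axiom3 = all(S[n] != one for n in X)
    # Axiom 4: S is injective, i.e. S(n) = S(m) forces n = m.
    axiom4 = len({S[n] for n in X}) == len(X)
    # Axiom 5: the orbit of `one` under S (the smallest subset containing
    # `one` and closed under S) must be all of X.
    orbit, current = set(), one
    while current not in orbit:
        orbit.add(current)
        current = S[current]
    axiom5 = orbit == set(X)
    return axiom3, axiom4, axiom5


# Example 1.2.2: S(1) = 2, S(2) = 1.
print(check_peano({1, 2}, 1, {1: 2, 2: 1}))  # prints (False, True, True)
# Example 1.2.3: S(1) = 2, S(2) = 2.
print(check_peano({1, 2}, 1, {1: 2, 2: 2}))  # prints (True, False, True)
```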

The axioms of the natural numbers provide some nice properties. The

next subsection will focus on applications of the fifth axiom. For now, we note

that the other axioms give us a nice ‘ordering’ on N, which is consistent with

the ordering one expects. In particular, for n, m ∈ N, we define n < m if m

can be obtained by taking (possibly multiple) successors of n. Furthermore,

we define n ≤ m if n < m or n = m. The notion of ordering will play an

essential role in the construction of the real numbers (see Subsection 1.3.2).

1.2.2 The Principle of Mathematical Induction

The Induction Axiom of the natural numbers leads to the following principle.

Theorem 1.2.5 (The Principle of Mathematical Induction). For each k ∈ N, let Pₖ be a mathematical statement that is either true or false. Suppose

1. (base case) P₁ is true, and

2. (inductive step) if k ∈ N and Pₖ is true, then Pₖ₊₁ is true.

Then Pₙ is true for all n ∈ N.

Proof. Let

X = {n ∈ N | Pₙ is true}.

By assumption we see that 1 ∈ X as P₁ is true.

Assume that k ∈ X. By the definition of X, we know Pₖ is true. By the assumptions in the statement of the theorem, Pₖ₊₁ is true and hence k + 1 ∈ X by the definition of X. Hence the Induction Axiom in Definition 1.2.1 implies X = N. Hence Pₙ is true for all n ∈ N.

The Principle of Mathematical Induction is an essential method for

proving mathematical statements. The following is a specific example.


Example 1.2.6. For each n ∈ N, we claim that

$$\sum_{m=1}^{n} m = 1 + 2 + 3 + \cdots + n = \frac{n(n+1)}{2}.$$

To see this result is true, for each n ∈ N let Pₙ be the statement that $\sum_{k=1}^{n} k = \frac{n(n+1)}{2}$. To show that Pₙ is true for all n ∈ N, we will apply the Principle of Mathematical Induction. To do so, we must demonstrate the two conditions in Theorem 1.2.5.

Base Case: To see that P₁ is true, notice that when n = 1,

$$\frac{n(n+1)}{2} = \frac{1(1+1)}{2} = 1 = \sum_{m=1}^{1} m.$$

Hence P₁ is true.

Inductive Step: Suppose that Pₖ is true; that is, suppose $\sum_{m=1}^{k} m = \frac{k(k+1)}{2}$ (this assumption is known as the induction hypothesis). To see that Pₖ₊₁ is true, notice

$$\begin{aligned}
\sum_{m=1}^{k+1} m &= (k+1) + \sum_{m=1}^{k} m \\
&= (k+1) + \frac{k(k+1)}{2} && \text{by the induction hypothesis} \\
&= \frac{2(k+1) + (k^2 + k)}{2} = \frac{k^2 + 3k + 2}{2} = \frac{(k+1)(k+2)}{2} = \frac{(k+1)((k+1)+1)}{2}.
\end{aligned}$$

Hence Pₖ₊₁ is true.

Therefore, as we have demonstrated the base case and the inductive step,

the result follows by the Principle of Mathematical Induction.
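A computer can confirm the identity of Example 1.2.6 for any finite list of values of n. This is a useful sanity check but, unlike the induction argument, it says nothing about all n ∈ N. A minimal Python sketch (the function names and the cutoff of 500 are arbitrary choices for illustration):

```python
# Compare both sides of 1 + 2 + ... + n = n(n + 1)/2 for n = 1, ..., 500.
# Finitely many cases never replace the inductive proof above.
def sum_directly(n):
    return sum(range(1, n + 1))


def closed_form(n):
    return n * (n + 1) // 2  # n(n + 1) is always even, so // is exact


mismatches = [n for n in range(1, 501) if sum_directly(n) != closed_form(n)]
print(mismatches)  # prints [] (no counterexamples in this range)
```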

Some people (mainly computer scientists) argue that the Induction Axiom must be false, as it would take an infinite amount of time for a computer to verify that Pₙ is true for all n by using the facts that P₁ is true and that Pₖ being true implies Pₖ₊₁ is true. We will not adopt this view. In fact, one often wants to assume more than just that Pₖ is true in order to show that Pₖ₊₁ is true.

Theorem 1.2.7 (Strong Induction). Suppose X ⊆ N. If

1. 1 ∈ X, and

2. if k ∈ N and {1, 2, . . . , k} ⊆ X, then k + 1 ∈ X,

then X = N.


Proof. For each n ∈ N, let Pₙ be the statement that {1, . . . , n} ⊆ X. We claim that Pₙ is true for all n ∈ N. To show this, we will apply the Principle of Mathematical Induction.

Base Case: As 1 ∈ X by assumption, clearly P₁ is true.

Inductive Step: Suppose that Pₖ is true; that is, {1, 2, . . . , k} ⊆ X. By assumption on X, k + 1 ∈ X. Hence {1, . . . , k, k + 1} ⊆ X, so Pₖ₊₁ is true.

Hence, by the Principle of Mathematical Induction, {1, . . . , n} ⊆ X for all n ∈ N. In particular, n ∈ X for all n ∈ N. Hence X = N.

Theorem 1.2.8 (The Principle of Strong Mathematical Induction). For each k ∈ N, let Pₖ be a mathematical statement that is either true or false. Suppose

1. P₁ is true, and

2. if k ∈ N and Pₘ is true for all m ≤ k, then Pₖ₊₁ is true.

Then Pₙ is true for all n ∈ N.

Proof. The proof of this result is nearly identical to that of Theorem 1.2.5. Let

X = {n ∈ N | Pₙ is true}.

By assumption we see that 1 ∈ X as P₁ is true.

Assume that {1, . . . , k} ⊆ X. By the definition of X, we know Pₘ is true for all m ≤ k. By the assumptions in the statement of the theorem, Pₖ₊₁ is true and hence k + 1 ∈ X by the definition of X. Hence Strong Induction implies X = N. Hence Pₙ is true for all n ∈ N.

1.2.3 The Well-Ordering Principle

There is one additional form of the Principle of Mathematical Induction that

is quite useful.

Theorem 1.2.9 (The Well-Ordering Principle). Every non-empty subset of N has a least element; that is, if Y ⊆ N and Y ≠ ∅, then there is an element m ∈ Y such that m ≤ k for all k ∈ Y.

Proof. Suppose Y is a non-empty subset of N that does not have a least element. Let

X = N \ Y = {n ∈ N | n ∉ Y}.

We will apply Strong Induction to show that X = N. This will complete the proof since X = N implies Y = ∅, which contradicts the fact that Y is non-empty. To apply Strong Induction, we must demonstrate the two necessary assumptions in Theorem 1.2.7.

Base Case: Since Y does not have a least element, we know that 1 ∉ Y, or else 1 would be the least element of Y. Hence 1 ∈ X.

Inductive Step: Suppose k ∈ N and {1, . . . , k} ⊆ X. Then no element of {1, . . . , k} is in Y. Hence k + 1 ∉ Y, for otherwise k + 1 would be the least element of Y since none of 1, . . . , k are in Y. Hence k + 1 ∈ X as k + 1 ∉ Y.

Hence, by Strong Induction, X = N, which, as discussed above, completes the proof.
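When a subset Y ⊆ N is described by a condition that can be tested for each n, the Well-Ordering Principle can be made concrete by a search. The sketch below assumes such a predicate description of Y (an assumption made for illustration; the function name is hypothetical). It tests 1, 2, 3, . . . in order, mirroring the proof above: every k that is skipped is known not to lie in Y, so the first hit is the least element. The search never terminates when Y = ∅.

```python
from itertools import count


def least_element(in_Y):
    """Return the least n in N = {1, 2, 3, ...} for which in_Y(n) is true.

    in_Y is a predicate describing a subset Y of N. The numbers 1, 2, 3, ...
    are tested in order, so the first one in Y is the least element of Y.
    The loop terminates exactly when Y is non-empty.
    """
    for n in count(1):
        if in_Y(n):
            return n


# Y = {n in N | n^2 > 50}; since 7^2 = 49 and 8^2 = 64, the least element is 8.
print(least_element(lambda n: n * n > 50))  # prints 8
```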

In the above, we assumed the Induction Axiom as one of Peano’s Axioms, deduced Strong Induction, and used Strong Induction to deduce the Well-Ordering Principle. In fact, the Induction Axiom and the Well-Ordering Principle are logically equivalent; that is, if one replaces the Induction Axiom with the Well-Ordering Principle in Definition 1.2.1, one may deduce the Induction Axiom (see the homework).

1.3 The Real Numbers

With a rigorous construction of the natural numbers now complete, we turn

our attention to the real numbers. In particular, how does one construct the

real numbers, what properties do the real numbers have, and are there any

number systems with the same properties as the real numbers?

1.3.1 Fields

To begin our discussion of the real numbers, we note there are some common

operations we may apply to the real numbers: namely addition, subtraction,

multiplication, and division. These operations have specific properties that

we shall explore.

We begin with addition and multiplication. Recall that addition and

multiplication are operations on pairs of real numbers; that is, for every

x, y ∈ R there are numbers, denoted x + y and x · y, which are elements

of R. Furthermore, there are two properties we require for addition and

multiplication to behave well, and one property that says addition and

multiplication play together nicely:

(F1) (Commutativity) x + y = y + x and x · y = y · x for all x, y ∈ R.

(F2) (Associativity) (x + y) + z = x + (y + z) and (x · y) · z = x · (y · z) for

all x, y, z ∈ R.

(F3) (Distributivity) x · (y + z) = (x · y) + (x · z) for all x, y, z ∈ R.

To introduce the operations of subtraction and division, we must understand what these operations are and how they may be derived from addition and multiplication. For example, what does subtracting 3 from 4 mean in terms of addition? Well, it really means adding the number −3 to 4. And how are 3 and −3 related? Well, −3 is the unique number x such that 3 + x = 0. And what is 0 in terms of addition? Well, 0 is the unique number y such that adding y to any number z yields z.

Similarly, what does dividing by 7 mean in terms of multiplication? Well, it really means multiplying by 1/7. And how are 7 and 1/7 related? Well, 1/7 is the unique number x such that 7x = 1. And what is 1 in terms of multiplication? Well, 1 is the unique number y such that multiplying any number z by y yields z.

Using the above, we add the following properties to our list of properties defining R:

(F4) (Existence of Identities) There are numbers 0, 1 ∈ R with 0 ≠ 1 such that 0 + x = x and 1 · x = x for all x ∈ R.

(F5) (Existence of Inverses) For all x, y ∈ R with y ≠ 0, there exist −x, y⁻¹ ∈ R such that x + (−x) = 0 and y · y⁻¹ = 1.

Using these two properties, one then defines subtraction and division via x − y = x + (−y) and x ÷ z = x · z⁻¹ for all x, y, z ∈ R with z ≠ 0. Furthermore, it is possible to show that all of the numbers listed in (F4) and (F5) are unique (that is, any number with the same properties as one of 0, 1, −x, or y⁻¹ must be the corresponding number).

Although the real numbers have the above five properties, they are

not the only number system that has all five properties. For example,

clearly the rational numbers Q (which are not equal to the real numbers

by the homework) also satisfy all five properties when we replace R with Q.

Consequently, we make the following definition.

Definition 1.3.1. A field is a set F together with two operations + and ·

such that a + b ∈ F and a · b ∈ F for all a, b ∈ F, and + and · satisfy (F1),

(F2), (F3), (F4), and (F5) as written above (replacing R with F).

Notice that if one is given a field F and a subset E of F that has the property that a + b ∈ E and a · b ∈ E for all a, b ∈ E, then E is a field with the operations + and · provided 0, 1 ∈ E and −x, z⁻¹ ∈ E for all x, z ∈ E with z ≠ 0. In this case, we call E a subfield of F. For example,

Q[√2] := {x + y√2 | x, y ∈ Q}

is a subfield of R.
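The subfield conditions for Q[√2] come down to explicit formulas, which the following Python sketch makes concrete using exact rational arithmetic from the standard `fractions` module. The class name `QSqrt2` is hypothetical; it stores x + y√2 as the rational pair (x, y). Products stay in Q[√2] because (a + b√2)(c + d√2) = (ac + 2bd) + (ad + bc)√2, and the inverse of a non-zero element comes from multiplying by the conjugate a − b√2; the denominator a² − 2b² is non-zero for (a, b) ≠ (0, 0) since √2 is irrational.

```python
from fractions import Fraction


class QSqrt2:
    """An element x + y*sqrt(2) of Q[sqrt(2)], stored as the rational pair (x, y)."""

    def __init__(self, x, y):
        self.x, self.y = Fraction(x), Fraction(y)

    def __add__(self, other):
        return QSqrt2(self.x + other.x, self.y + other.y)

    def __mul__(self, other):
        # (a + b√2)(c + d√2) = (ac + 2bd) + (ad + bc)√2
        a, b, c, d = self.x, self.y, other.x, other.y
        return QSqrt2(a * c + 2 * b * d, a * d + b * c)

    def __neg__(self):
        return QSqrt2(-self.x, -self.y)

    def inverse(self):
        # 1/(a + b√2) = (a - b√2)/(a^2 - 2b^2)
        a, b = self.x, self.y
        norm = a * a - 2 * b * b
        if norm == 0:  # only happens for a = b = 0
            raise ZeroDivisionError("0 has no multiplicative inverse")
        return QSqrt2(a / norm, -b / norm)

    def __eq__(self, other):
        return (self.x, self.y) == (other.x, other.y)

    def __repr__(self):
        return f"{self.x} + {self.y}*sqrt(2)"


u = QSqrt2(1, 2)                # 1 + 2√2
v = QSqrt2(Fraction(1, 3), -1)  # 1/3 - √2
print(u + v, u * v, u.inverse())
```

Working with `Fraction` rather than floating-point numbers keeps every coordinate exactly rational, which is the whole point of the closure property.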

However, there are fields that look strikingly different from R.

Example 1.3.2. Consider Z₂ = {0, 1} with the following rules for addition and multiplication:

| + | 0 | 1 |
|---|---|---|
| 0 | 0 | 1 |
| 1 | 1 | 0 |

| · | 0 | 1 |
|---|---|---|
| 0 | 0 | 0 |
| 1 | 0 | 1 |

(think of 0 as all even numbers and 1 as all odd numbers; an odd plus an odd is even, an odd times an even is even, etc.). One can verify that Z₂ is a field with the above operations.
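Because Z₂ has only two elements, “one can verify” can be taken literally: there are only 2³ = 8 triples to test. The hypothetical check below encodes the two tables as arithmetic modulo 2 (which matches the even/odd interpretation) and tests (F1) through (F5) exhaustively, with 0 and 1 as the identities.

```python
from itertools import product

Z2 = (0, 1)


def add(a, b):
    return (a + b) % 2  # the addition table above


def mul(a, b):
    return (a * b) % 2  # the multiplication table above


def is_field():
    """Brute-force check of (F1)-(F5) for Z2 with 0 and 1 as the identities."""
    pairs = list(product(Z2, repeat=2))
    triples = list(product(Z2, repeat=3))
    f1 = all(add(x, y) == add(y, x) and mul(x, y) == mul(y, x) for x, y in pairs)
    f2 = all(add(add(x, y), z) == add(x, add(y, z))
             and mul(mul(x, y), z) == mul(x, mul(y, z)) for x, y, z in triples)
    f3 = all(mul(x, add(y, z)) == add(mul(x, y), mul(x, z)) for x, y, z in triples)
    f4 = all(add(0, x) == x and mul(1, x) == x for x in Z2)
    # (F5): every x has an additive inverse; every non-zero y a multiplicative one.
    f5 = (all(any(add(x, w) == 0 for w in Z2) for x in Z2)
          and all(any(mul(y, w) == 1 for w in Z2) for y in Z2 if y != 0))
    return f1 and f2 and f3 and f4 and f5


print(is_field())  # prints True
```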

All of the above properties listed are algebraic properties. Are there other

properties of R we can include to distinguish R from other fields?

1.3.2 Partially Ordered Sets

One notion that exists for the real numbers but not for every field is that of an ordering; that is, given two numbers, we have a notion that tells us which number is bigger. We begin with the following concept.

Definition 1.3.3. Let X be a set. A relation ⪯ on the elements of X is called a partial ordering if:

1. (reflexivity) a ⪯ a for all a ∈ X,

2. (antisymmetry) if a ⪯ b and b ⪯ a, then a = b for all a, b ∈ X, and

3. (transitivity) if a, b, c ∈ X are such that a ⪯ b and b ⪯ c, then a ⪯ c.

Clearly ≤ (as usually defined) is a partial ordering on R. Here is another example:

Example 1.3.4. Let

P(R) := {X | X ⊆ R}.

The set P(R) is known as the power set of R and consists of all subsets of R. We define a relation ⪯ on P(R) as follows: given X, Y ∈ P(R),

X ⪯ Y if and only if X ⊆ Y.

It is not difficult to verify that ⪯ is a partial ordering on P(R).

The partial ordering in the previous example is not as nice as our ordering on R. To see this, consider the sets X = {1} and Y = {2}. Then X ⋠ Y and Y ⋠ X; that is, we cannot use the partial ordering to compare X and Y. However, if x, y ∈ R, then either x ≤ y or y ≤ x. Consequently, we desire to add this additional property to our ordering:

Definition 1.3.5. Let X be a set. A partial ordering ⪯ on X is called a total ordering if for all x, y ∈ X, either x ⪯ y or y ⪯ x (or both).

The ordering one usually considers on R is clearly a total ordering. However, it is also easy to place a total ordering on Z₂.

Example 1.3.6. Let Z₂ be as in Example 1.3.2. Define 0 ⪯ 0, 0 ⪯ 1, 1 ⪯ 1, and 1 ⋠ 0. It is easy to verify that ⪯ is a total ordering on Z₂.

The problem with the ordering on Z2 is that addition and multiplication

do not interact well with respect to the ordering. The following describes

fields with ‘nice’ orderings:

Definition 1.3.7. An ordered field is a field F together with a total ordering ⪯ such that for all x, y, z ∈ F with x ⪯ y, the following two properties hold:

• (Additive Property) x + z ⪯ y + z.

• (Multiplicative Property) x · z ⪯ y · z provided 0 ⪯ z, and y · z ⪯ x · z provided z ⪯ 0.

In any ordered field, it must be the case that 0 ⪯ 1. Indeed, if 1 ⪯ 0, then the Multiplicative Property (with x = 1, y = 0, and z = 1 ⪯ 0) implies 0 · 1 ⪯ 1 · 1, so 0 ⪯ 1 and 1 ⪯ 0, and therefore antisymmetry implies 0 = 1, which contradicts (F4). Hence 1 ⋠ 0, so 0 ⪯ 1 since ⪯ is a total ordering.

Note that the ordering on Z₂ given in Example 1.3.6 does not make Z₂ into an ordered field since 0 ⪯ 1 yet 0 + 1 ⋠ 1 + 1 (so this total ordering does not satisfy the Additive Property).
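The failure of the Additive Property can also be found by exhaustive search. In the illustrative sketch below, the ordering of Example 1.3.6 is stored as a set of pairs (a, b) with a ⪯ b, and the search reports every triple (x, y, z) with x ⪯ y but x + z ⋠ y + z. The only one is x = 0, y = 1, z = 1, which is precisely the instance noted above.

```python
def add(a, b):
    return (a + b) % 2  # addition in Z2


# The total ordering of Example 1.3.6, stored as the set of pairs (a, b) with a ⪯ b.
PRECEQ = {(0, 0), (0, 1), (1, 1)}


def additive_property_failures():
    """List all (x, y, z) with x ⪯ y but not x + z ⪯ y + z."""
    return [(x, y, z)
            for (x, y) in PRECEQ
            for z in (0, 1)
            if (add(x, z), add(y, z)) not in PRECEQ]


print(additive_property_failures())  # prints [(0, 1, 1)]
```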

It is clear that R is an ordered field. However, it is also clear that any subfield of R (such as Q and Q[√2]) is then an ordered field as well. Consequently, we still need a way to distinguish R from its subfields.

1.3.3 The Triangle Inequality

Before discussing how R differs from its subfields, we will analyze a useful

concept the ordering on R provides.

Definition 1.3.8. Given x ∈ R, the absolute value of x is

$$|x| = \begin{cases} x & \text{if } x \geq 0, \\ -x & \text{if } x < 0. \end{cases}$$

The absolute value has many important properties. For example, clearly

| − x| = |x|

for all x ∈ R (split the proof into two cases: x ≥ 0 and x < 0). Furthermore,

since x = ±|x| for all x ∈ R, it is not difficult to check that

|xy| = |x||y|

for all x, y ∈ R (split the proof into four cases: the two cases x ≥ 0 and x < 0,

each of which has the two cases y ≥ 0 and y < 0). However, the absolute

value is not important just for its properties, but for what it represents.


Notice that |x| represents the distance from x to 0. Consequently, we

can also see that |b − a| represents the distance from b to a for all a, b ∈ R.

Furthermore, for all a, δ ∈ R with δ > 0, the set

{x ∈ R | |x − a| < δ}

describes all points in R whose distance to a is strictly less than δ. Notice

|x−a| < δ if and only if −δ < x−a < δ if and only if a−δ < x < a+δ, which

provides an alternate description of the above set without using absolute

values. Such sets are quite important in this course, so we introduce the following notation.

Notation 1.3.9. For all a, b ∈ R with a ≤ b, we define

(a, b) := {x ∈ R | a < x < b}

[a, b) := {x ∈ R | a ≤ x < b}

(a, b] := {x ∈ R | a < x ≤ b}

[a, b] := {x ∈ R | a ≤ x ≤ b}.

For the first two, we permit ∞ to replace b, and, for the first and third, we

permit −∞ to replace a. Each of the above sets is called an interval with

(a, b) called an open interval and [a, b] called a closed interval.

In order to have a well-defined notion of distance in mathematics, several

properties need to be satisfied. Notice that if a, b ∈ R, then the distance

from b to a is zero exactly when |b − a| = 0, which is the same as saying

b = a. Furthermore, since |b − a| = | − (b − a)| = |a − b|, the distance from

b to a is the same as the distance from a to b. Finally, the last property

required to have a well-defined notion of distance is as follows:

Theorem 1.3.10 (The Triangle Inequality). Let x, y, z ∈ R. Then

|x − y| ≤ |x − z| + |z − y|.

That is, the distance from x to y is no more than the sum of the distance

from x to z and the distance from z to y.


Proof. If x = y, the result is trivial to verify. Consequently we will assume

x < y (if y < x, we can relabel y with x and x with y to run the following

proof). We have three cases to consider.

[Figure: the three possible positions of z relative to x and y.]

Case 1. z < x: In this case, notice

|x − y| ≤ |z − y| = 0 + |z − y| ≤ |x − z| + |z − y|

as desired.

Case 2. y < z: In this case, notice

|x − y| ≤ |x − z| = |x − z| + 0 ≤ |x − z| + |z − y|

as desired.

Case 3. x ≤ z ≤ y: In this case, we easily see that

|x − y| = |x − z| + |z − y|.

Hence, as we have exhausted all cases (up to flipping x and y), the proof

is complete.

The Triangle Inequality is an incredibly useful tool in analysis. Furthermore, there are many other forms of the Triangle Inequality. For example,

letting x = a, y = −b, and z = 0 produces

|a + b| ≤ |a| + |b|

for all a, b ∈ R.

In addition, if we let x = a, y = 0, and z = b, we obtain

|a| ≤ |a − b| + |b|

so

|a| − |b| ≤ |a − b|,

and if we let x = b, y = 0, and z = a, we obtain

|b| ≤ |a − b| + |a|

so

− (|a| − |b|) ≤ |a − b|.

Consequently, we obtain that

||a| − |b|| ≤ |a − b|

for all a, b ∈ R.
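The Triangle Inequality and the two consequences derived above can be spot-checked numerically. The sketch below is only a sanity check on random integers (chosen to avoid floating-point rounding); the function name `check_triangle_forms` and its parameters are hypothetical, and passing checks are evidence rather than proof.

```python
import random


def check_triangle_forms(trials, bound=1000, seed=0):
    """Spot-check three forms of the Triangle Inequality on random integers.

    Raises AssertionError on a counterexample; returns the number of trials run.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x, y, z, a, b = (rng.randint(-bound, bound) for _ in range(5))
        assert abs(x - y) <= abs(x - z) + abs(z - y)   # Theorem 1.3.10
        assert abs(a + b) <= abs(a) + abs(b)           # x = a, y = -b, z = 0
        assert abs(abs(a) - abs(b)) <= abs(a - b)      # ||a| - |b|| <= |a - b|
    return trials


print(check_triangle_forms(10_000))  # prints 10000
```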

1.3.4 The Least Upper Bound Property

We have seen that R (along with each of its subfields) is an ordered field. We now begin the discussion of how to use this ordering to formulate the final property needed to distinguish R from all other fields!

Definition 1.3.11. Let X ⊆ R. An element α ∈ R is said to be an upper

bound for X if x ≤ α for all x ∈ X. An element α ∈ R is said to be a lower

bound for X if α ≤ x for all x ∈ X. Finally, X is said to be bounded above if

X has an upper bound, bounded below if X has a lower bound, and bounded

if X has both an upper and lower bound.

Example 1.3.12. Let X = (0, 1). Then 1 is an upper bound of X and 0 is

a lower bound of X. Thus X is bounded. Furthermore, note that 5 is also

an upper bound of X and −7 is a lower bound of X.

Example 1.3.13. Let X = ∅. Then every number in R is both an upper

and lower bound of X vacuously (that is, there are no elements of X to

which to check the defining property).

Notice that N is bounded below as 1 is a lower bound (as are −2, 0, 0.5, etc.). Does N have an upper bound? Our intuition says no; that is, N should not be bounded above. However, how do we prove this?

To tackle the above problem (in addition to describing the property required to distinguish R from other ordered fields), note that in the above examples there were special upper and lower bounds that were ‘optimal’.

Definition 1.3.14. Let X ⊆ R. An element α ∈ R is said to be the least

upper bound of X if

• α is an upper bound of X, and

• if β is an upper bound of X, then α ≤ β.

We write lub(X) in place of α, provided α exists.

Similarly, an element α ∈ R is said to be the greatest lower bound of X if

• α is a lower bound of X, and

• if β is a lower bound of X, then β ≤ α.

We write glb(X) in place of α, provided α exists.

In the above definition, notice we have used the term ‘the least upper

bound’ instead of ‘a least upper bound’. This is because it is elementary

to show that a set with a least upper bound has exactly one least upper

bound. Indeed if α1 and α2 are both least upper bounds of a set X, then

α1 ≤ α2 and α2 ≤ α1 by the two defining properties of a least upper bound,

so α1 = α2 .


Example 1.3.15. Let X = [0, 1] and let Y = (0, 1). Then lub(X) = lub(Y) = 1 and glb(X) = glb(Y) = 0. However, notice 0, 1 ∈ X whereas 0, 1 ∉ Y. This demonstrates that the least upper bound and greatest lower bound may or may not be in the set.

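Example 1.3.16. Clearly a set that is not bounded above cannot have a least upper bound, and a set that is not bounded below cannot have a greatest lower bound. Furthermore, although ∅ is bounded (Example 1.3.13), it has neither a least upper bound nor a greatest lower bound: every real number β is an upper bound of ∅, and β − 1 is then a smaller upper bound, so no upper bound is least (and similarly for lower bounds).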

Example 1.3.17. Let

X = {x ∈ Q | x ≥ 0 and x² < 2}.

Clearly glb(X) = 0 and lub(X) = √2.

The above example emphasizes the difference between Q and R. Notice that X ⊆ Q. However, if we only consider numbers in Q, then X does not have a least upper bound in Q since, if b ∈ Q and √2 < b, there is always an r ∈ Q such that √2 < r < b (see homework). The following property guarantees that R does not have such pitfalls.
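To illustrate the homework claim, here is one possible construction of r (our own choice, not necessarily the intended homework solution): for rational b with b > 0 and b² > 2, take r = (b + 2/b)/2. Then r − b = (2 − b²)/(2b) < 0 and r² − 2 = ((b − 2/b)/2)² > 0, so √2 < r < b. The minimal Python sketch below checks this with exact rational arithmetic from the standard `fractions` module; the function name `smaller_rational_upper_bound` is hypothetical.

```python
from fractions import Fraction

def smaller_rational_upper_bound(b: Fraction) -> Fraction:
    """Given a rational b with b > 0 and b*b > 2 (so b > sqrt(2)),
    return a rational r with sqrt(2) < r < b.

    Uses r = (b + 2/b) / 2: then r - b = (2 - b*b) / (2b) < 0 and
    r*r - 2 = ((b - 2/b) / 2)**2 > 0, so r is a smaller rational upper bound.
    """
    if b <= 0 or b * b <= 2:
        raise ValueError("need a rational b with b > 0 and b*b > 2")
    return (b + 2 / b) / 2

# Starting from a rational upper bound of X, we can always find a smaller one.
b = Fraction(2)
for _ in range(4):
    r = smaller_rational_upper_bound(b)
    assert r * r > 2 and r < b   # sqrt(2) < r < b, checked exactly
    print(r, float(r))
    b = r
```

Starting from b = 2, the first three values produced are 3/2, 17/12 and 577/408. Every rational upper bound of X is beaten by a smaller rational one, which is exactly why X has no least upper bound in Q.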

Theorem 1.3.18 (The Least Upper Bound Property). Every nonempty subset of R that is bounded above has a least upper bound.

Note the term ‘non-empty’ must be included because of Example 1.3.16.

Furthermore, this completes our discussion of how to distinguish R from other number systems, since it is possible to show that any ordered field with the Least Upper Bound Property is isomorphic to R! We will not demonstrate this fact as it detours us from the goals of this course.

The Least Upper Bound Property is an amazing property that makes all

of analysis on R possible. In fact, we note the following corollaries of the

Least Upper Bound Property.

Corollary 1.3.19 (The Greatest Lower Bound Property). Every nonempty subset of R that is bounded below has a greatest lower bound.

Proof Sketch. Let X be a non-empty subset of R that is bounded below. Let

Y = {−x | x ∈ X}.

One can verify that if a ∈ R, then a is an upper bound for Y if and only

if −a is a lower bound for X. Consequently Y is bounded above (as X is

bounded below) and thus Y has a least upper bound by the Least Upper

Bound Property. Furthermore, it is not difficult to check that −lub(Y ) is

then the greatest lower bound of X.


Corollary 1.3.20 (The Archimedean Property). The natural numbers

are not bounded above in R.

Proof. Suppose N is bounded above in R. Then N must have a least upper

bound, say α, by the Least Upper Bound Property. Since α is an upper

bound of N, we know that n ≤ α for all n ∈ N. Since n + 1 ∈ N whenever n ∈ N, we also have n + 1 ≤ α and thus n ≤ α − 1 for all n ∈ N. Thus α − 1 is an upper bound for N, which

contradicts the fact that α is the least upper bound of N as α − 1 < α.

1.3.5

Constructing the Real Numbers

In the previous section, we claimed that the real numbers are the unique

ordered field with the Least Upper Bound Property. However, how do we

know the real numbers exist at all? There are two main constructions of the reals. The first uses equivalence classes of Cauchy sequences (see Chapter 3) of rational numbers. The other, via Dedekind cuts, is more complicated and is quickly sketched below. The interested reader may consult https://en.wikipedia.org/wiki/Construction_of_the_real_numbers.

In Section 1.2 we rigorously constructed the natural numbers. From N

we can construct the integers Z by adding a symbol −n for all n ∈ N. One

then must define + and · using the notion of successors in Definition 1.2.1

and verify all of the desired properties. One must also extend the notion of

< from N to Z in the obvious way.

From Z we can then construct Q by defining Q to be the set with elements of the form a/b where a, b ∈ Z with b ≠ 0, where we define a/b = c/d whenever ad = bc. Care must be taken in subsequent definitions as there are multiple ways to write a rational number. One then defines + and · as one does with fractions, and then verifies that Q is a field. To extend < to Q, if a, b, c, d ∈ N are all positive, we define a/b < c/d whenever ad < bc, and similar definitions are provided in other cases. One then verifies that Q is an ordered field.
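The cross-multiplication rules above can be checked mechanically. Below is a minimal Python sketch, assuming a rational a/b is represented by a pair of integers (a, b) with b ≠ 0; the helper names are hypothetical, and Python's standard `fractions.Fraction` is used only as an independent cross-check. For the order, we first rewrite each pair with a positive denominator, which is one way (our choice) of handling the ‘other cases’ mentioned above.

```python
from fractions import Fraction

def same_rational(p, q):
    """(a, b) and (c, d) name the same rational a/b = c/d iff a*d == b*c."""
    (a, b), (c, d) = p, q
    return a * d == b * c

def normalise(p):
    """Rewrite (a, b), b != 0, so that the denominator is positive."""
    a, b = p
    if b == 0:
        raise ValueError("denominator must be nonzero")
    return (a, b) if b > 0 else (-a, -b)

def less_than(p, q):
    """With positive denominators b and d: a/b < c/d iff a*d < b*c."""
    (a, b), (c, d) = normalise(p), normalise(q)
    return a * d < b * c

# (1, 2) and (3, 6) are two ways of writing the same rational number.
assert same_rational((1, 2), (3, 6))

# Cross-check both rules against Fraction on a small grid of pairs.
pairs = [(a, b) for a in range(-4, 5) for b in range(-4, 5) if b != 0]
for p in pairs:
    for q in pairs:
        assert same_rational(p, q) == (Fraction(*p) == Fraction(*q))
        assert less_than(p, q) == (Fraction(*p) < Fraction(*q))
```

Normalising the sign of the denominator reduces every comparison to the positive-denominator rule, since multiplying an inequality by the positive number bd preserves it.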

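The real numbers may then be defined to be the set

$$\mathbb{R} = \left\{ X \subseteq \mathbb{Q} \;\middle|\; \begin{array}{l} X \text{ is non-empty and bounded above,} \\ X \text{ contains no greatest element, and} \\ \text{if } x \in X \text{ then } y \in X \text{ for all } y \in \mathbb{Q} \text{ with } y < x \end{array} \right\}.$$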

It remains to show that R is an ordered field with the Least Upper Bound

Property. This requires defining +, ·, and ≤, and verifying all of the above

properties, which can be quite time consuming. As an example, one defines

addition via

X + Y := {x + y | x ∈ X, y ∈ Y }

and then must check (F1), (F2), and that the zero element of R is

{q ∈ Q | q < 0}.

Furthermore, if a non-empty collection of elements of R (each viewed as a subset of Q) is bounded above, then its least upper bound is obtained by taking the union of these subsets.


Chapter 2

Sequences of Real Numbers

One of the most important concepts in calculus is the notion of converging

sequences. Knowing that a sequence converges to a number allows one to

use elements of the sequence to better and better approximate the number.

However, having a precise definition of a limit allows one to better understand

what limits really are.

2.1

The Limit of a Sequence

2.1.1

Definition of a Limit

Before discussing limits, we must ask, “What is a sequence?”

Definition 2.1.1. A sequence of real numbers is an ordered list of real

numbers indexed by the natural numbers.

If we have ak ∈ R for all k ∈ N, we will use (an )n≥1 or (a1 , a2 , a3 , . . .) to

denote the sequence whose first element is a1 , whose second element is a2 , etc.

Example 2.1.2. If c ∈ R and an = c for all n ∈ N, then the sequence

(an )n≥1 is the constant sequence with value c.

Example 2.1.3. For all n ∈ N, let an = 1/n. Then (an )n≥1 is the sequence (1, 1/2, 1/3, 1/4, . . .).

Example 2.1.4. For all n ∈ N, let an = (−1)ⁿ⁺¹. Then (an )n≥1 is the sequence (1, −1, 1, −1, 1, −1, . . .).

Example 2.1.5. Let a1 = 1 and a2 = 1. For n ∈ N with n ≥ 3, let

an = an−1 + an−2 . Then (an )n≥1 is the sequence

(1, 1, 2, 3, 5, 8, 13, . . .).

This sequence is known as the Fibonacci sequence and is an example of a

recursively defined sequence (a sequence where subsequent terms are defined

using the previous terms under a fixed pattern).
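A recursively defined sequence such as the Fibonacci sequence can be generated term by term from the previous terms. The following minimal Python sketch does exactly this; the generator name `fibonacci_terms` is our own.

```python
from itertools import islice
from typing import Iterator

def fibonacci_terms() -> Iterator[int]:
    """Yield a_1, a_2, a_3, ... where a_1 = a_2 = 1 and a_n = a_{n-1} + a_{n-2} for n >= 3."""
    prev, curr = 1, 1                    # a_1 and a_2
    yield prev
    yield curr
    while True:
        prev, curr = curr, prev + curr   # a_n = a_{n-1} + a_{n-2}
        yield curr

first_seven = list(islice(fibonacci_terms(), 7))
print(first_seven)  # [1, 1, 2, 3, 5, 8, 13]
```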


With the above notion of a sequence, we turn to the notion of limits. If we consider the sequence (1/n)n≥1, we intuitively know that as n gets larger and larger, the terms of the sequence get closer and closer to zero. Thus we would want to say that 0 is the limit of (1/n)n≥1. This may lead us to take the following as our definition of a limit:

“A sequence (an )n≥1 has limit L (as n tends to infinity) if as n gets larger and larger, an gets closer to L.”

However, the fault in the above idea of a limit is that (1/n)n≥1 also gets ‘closer and closer’ to −1. We prefer 0 over −1 as the limit of (1/n)n≥1 since 1/n approximates 0 better and better, whereas |1/n − (−1)| = 1 + 1/n > 1 for all n ∈ N, so (1/n)n≥1 never approximates −1 well. This leads us to the following better idea of what a limit is:

Heuristic Definition. A sequence (an )n≥1 has limit L if the terms of

(an )n≥1 are eventually all as close to L as we would like.

Using the above as a guideline, we obtain a rigorous, mathematical

definition of the limit of a sequence of real numbers.

Definition 2.1.6. Let (an )n≥1 be a sequence of real numbers. A number L ∈ R is said to be the limit of (an )n≥1 if for every ε > 0 there exists an N ∈ N (which depends on ε) such that |an − L| < ε for all n ≥ N .

If (an )n≥1 has limit L, we say that (an )n≥1 converges to L and write

L = limn→∞ an . Otherwise we say that (an )n≥1 diverges.

Example 2.1.7. Consider the constant sequence (an )n≥1 where an = c for all n ∈ N and some c ∈ R. Notice for all ε > 0, |an − c| = 0 < ε for all n ∈ N. Hence (an )n≥1 converges to c.

Example 2.1.8. To see that limn→∞ 1/n = 0 using the definition of the limit, let ε > 0 be arbitrary. Then (by the homework) there exists an N ∈ N such that 0 < 1/N < ε. Therefore, for all n ≥ N we obtain that 0 < 1/n ≤ 1/N < ε. Hence |1/n − 0| < ε for all n ≥ N , so limn→∞ 1/n = 0. Note that (1/n)n≥1 has limit zero, but no term in the sequence is zero.
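The argument in Example 2.1.8 is constructive: for a given ε > 0, any N ∈ N with 1/N < ε will do. The minimal Python sketch below picks N = ⌊1/ε⌋ + 1 (one convenient choice among many, not necessarily the one from the homework) and spot-checks |1/n − 0| < ε on finitely many n ≥ N with exact rational arithmetic. A finite check only illustrates the definition; it does not replace the proof. The name `witness_N` is our own.

```python
from fractions import Fraction
import math

def witness_N(eps: Fraction) -> int:
    """Return an N in N with 1/N < eps, namely N = floor(1/eps) + 1 (> 1/eps)."""
    if eps <= 0:
        raise ValueError("eps must be positive")
    return math.floor(1 / eps) + 1

for eps in (Fraction(1, 2), Fraction(1, 10), Fraction(3, 1000)):
    N = witness_N(eps)
    # Spot-check the defining inequality |1/n - 0| < eps for n = N, ..., N + 999.
    assert all(abs(Fraction(1, n) - 0) < eps for n in range(N, N + 1000))
    print(eps, N)
```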

Example 2.1.9. Using the definition of a limit, we see that a sequence (an )n≥1 does not converge if for all L ∈ R there is an ε > 0 (depending on L) such that for every N ∈ N there is an n ≥ N such that |an − L| ≥ ε.

Using the above paragraph, we can show that ((−1)ⁿ⁺¹)n≥1 does not converge. Indeed let L ∈ R be arbitrary and let ε = 1/2. Suppose there exists an N ∈ N such that |(−1)ⁿ⁺¹ − L| < ε for all n ≥ N . Since there exists an odd number n greater than N , we obtain that |1 − L| < ε. Therefore, since ε = 1/2, we obtain that L ∈ (1/2, 3/2). Similarly, since there exists an even number n greater than N , we obtain that |−1 − L| < ε. Therefore, since ε = 1/2, we obtain that L ∈ (−3/2, −1/2). Hence L ∈ (1/2, 3/2) ∩ (−3/2, −1/2) = ∅, which is absurd. Hence we have a contradiction, so L is not the limit of ((−1)ⁿ⁺¹)n≥1. Therefore, since L ∈ R was arbitrary, ((−1)ⁿ⁺¹)n≥1 does not converge.

2.1.2

Uniqueness of the Limit

Notice that in the definition of the limit of a sequence we used ‘the’ instead of ‘a’; how do we know that a sequence has at most one limit? The

following justifies the use of the word ‘the’ and demonstrates one important

proof technique when dealing with limits.

Proposition 2.1.10. Let (an )n≥1 be a sequence of real numbers. If L and

K are limits of (an )n≥1 , then L = K.

Proof. Suppose that L ≠ K. Let ε = |L − K|/2. Since L ≠ K, we know that ε > 0.

Since L is a limit of (an )n≥1 , we know by the definition of a limit that there exists an N1 ∈ N such that if n ≥ N1 then |an − L| < ε. Similarly, since K is a limit of (an )n≥1 , we know by the definition of a limit that there exists an N2 ∈ N such that if n ≥ N2 then |an − K| < ε.

Let n = max{N1 , N2 }. By the above paragraph, we have that |an − L| < ε and |an − K| < ε. Hence by the Triangle Inequality

$$|L - K| \le |L - a_n| + |a_n - K| < \varepsilon + \varepsilon = 2\varepsilon = |L - K|$$

which is absurd (i.e. x < x is false for all x ∈ R). Thus we have obtained a

contradiction so it must be the case that L = K.

To conclude this section, we note the following result, which demonstrates that |an − L| < ε may be replaced with |an − L| ≤ ε in the definition of the limit of a sequence. This can be useful on occasion and also establishes an important idea in handling limits: ε is simply a constant and may be modified.

Proposition 2.1.11. Let (an )n≥1 be a sequence of real numbers and let L ∈ R. Then (an )n≥1 converges to L if and only if for all ε > 0 there exists an N ∈ N such that |an − L| ≤ ε for all n ≥ N .

Proof. Suppose (an )n≥1 converges to L. Let ε > 0 be arbitrary. By the definition of the limit, there exists an N ∈ N such that |an − L| < ε for all n ≥ N . As this implies |an − L| ≤ ε for all n ≥ N and as ε > 0 was arbitrary, one direction of the proof is complete.

For the other direction, assume that (an )n≥1 and L have the property listed in the statement. Let ε > 0 be arbitrary. Let ε′ = ε/2. Since ε′ > 0, the assumptions of this direction imply that there exists an N ∈ N such that |an − L| ≤ ε′ for all n ≥ N . Hence |an − L| ≤ ε′ < ε for all n ≥ N . As ε > 0 was arbitrary, (an )n≥1 converges to L by the definition of the limit.


Remark 2.1.12. By analyzing the above proof, we see that the definition of the limit can be modified to involve a constant multiple of ε. That is, if (an )n≥1 is a sequence of real numbers, L ∈ R, and k > 0, then L = limn→∞ an if and only if for all ε > 0 there exists an N ∈ N such that |an − L| < kε for all n ≥ N . It is very important to note that the constant k CANNOT depend on n or ε.

2.2

The Monotone Convergence Theorem

With the above, there are two main questions for us to ask: “What types of

sequences converge?” and “How can we find the limits of sequences without

always appealing to the definition?” The goal of this section is to look at

the first question.

First let us ask, “Does the sequence (n)n≥1 converge?” Intuitively the

answer is no since this sequence does not approximate a number. To make

this rigorous, consider the following.

Definition 2.2.1. A sequence (an )n≥1 of real numbers is said to be bounded

if the set {an | n ∈ N} is bounded.

Proposition 2.2.2. Every convergent sequence is bounded.

Proof. Let (an )n≥1 be a sequence of real numbers that converges to a number L ∈ R. Let ε = 1. By the definition of a limit, there exists an N ∈ N such that |an − L| < ε = 1 for all n ≥ N . Hence |an | ≤ |an − L| + |L| < |L| + 1 for all n ≥ N by the Triangle Inequality.

Let M = max{|a1 |, |a2 |, . . . , |aN |, |L| + 1}. Using the above paragraph,

we see that |an | ≤ M for all n ∈ N. Hence −M ≤ an ≤ M for all n ∈ N so

(an )n≥1 is bounded.

The above shows us that boundedness is a requirement for convergence of a sequence. However, a bounded sequence need not converge. Indeed Example 2.1.9 shows that the sequence ((−1)ⁿ⁺¹)n≥1 (which is clearly bounded) does not converge. A natural question to ask is then, “Is there a condition we may place on a sequence so that boundedness implies convergence?” Indeed there is!

Definition 2.2.3. A sequence (an )n≥1 of real numbers is said to be

• increasing if an < an+1 for all n ∈ N,

• non-decreasing if an ≤ an+1 for all n ∈ N,

• decreasing if an > an+1 for all n ∈ N,

• non-increasing if an ≥ an+1 for all n ∈ N, and


• monotone if (an )n≥1 is non-decreasing or non-increasing.

Theorem 2.2.4 (Monotone Convergence Theorem). A monotone sequence (an )n≥1 of real numbers converges if and only if (an )n≥1 is bounded.

Proof. By Proposition 2.2.2, if (an )n≥1 converges, then (an )n≥1 is bounded.

For the other direction, suppose that (an )n≥1 is a monotone sequence

that is bounded. We will assume that (an )n≥1 is a non-decreasing sequence

for the remainder of the proof as the case when (an )n≥1 is non-increasing

can be demonstrated using similar arguments.

Since (an )n≥1 is bounded, {an | n ∈ N} has a least upper bound, say α, by the Least Upper Bound Property (Theorem 1.3.18). We claim that α is the limit of (an )n≥1 . To see this, let ε > 0 be arbitrary. Since α is the least upper bound of {an | n ∈ N}, we know that an ≤ α for all n ∈ N and α − ε is not an upper bound of {an | n ∈ N}. Hence there exists an N ∈ N such that α − ε < aN . Since (an )n≥1 is non-decreasing, we obtain for all n ≥ N that α − ε < aN ≤ an ≤ α, which implies |an − α| < ε for all n ≥ N . Since ε > 0 was arbitrary, we obtain that α is the limit of (an )n≥1 by definition.

Hence (an )n≥1 converges.

Example 2.2.5. Consider the sequence (an )n≥1 defined recursively via a1 = 1 and an+1 = √(3 + 2an) for all n ≥ 1. In the homework, it was

demonstrated that 0 ≤ an ≤ an+1 ≤ 3 for all n ∈ N. Hence (an )n≥1

converges by the Monotone Convergence Theorem. The question remains,

“What is the limit of (an )n≥1 ?” By the proof of the Monotone Convergence

Theorem, we know the answer is lub({an | n ∈ N}), which is at most 3. But

is the answer 3 or a number less than 3?

2.3

Limit Theorems

To answer the above question, and to aid us in the computation of limits in general, we now explore several limit theorems.

2.3.1

Limit Arithmetic

Our first goal is to determine how limits behave with respect to the simplest

operations on R.

Theorem 2.3.1. Let (an )n≥1 and (bn )n≥1 be sequences of real numbers such

that L = limn→∞ an and K = limn→∞ bn exist. Then

a) limn→∞ an + bn = L + K.

b) limn→∞ an bn = LK.

c) limn→∞ can = cL for all c ∈ R.

d) limn→∞ 1/bn = 1/K whenever K ≠ 0 (see proof for technicality).

e) limn→∞ an/bn = L/K whenever K ≠ 0 (see proof for technicality).

Proof. a) Let ε > 0 be arbitrary. Since L = limn→∞ an , there exists an N1 ∈ N such that |an − L| < ε/2 for all n ≥ N1 . Similarly, since K = limn→∞ bn , there exists an N2 ∈ N such that |bn − K| < ε/2 for all n ≥ N2 . Let N = max{N1 , N2 }. Hence, using the Triangle Inequality, for all n ≥ N ,

$$|(a_n + b_n) - (L + K)| \le |a_n - L| + |b_n - K| < \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon.$$

Hence (an + bn )n≥1 converges to L + K by definition.

b) Let ε > 0 be arbitrary. First note that 0 ≤ |K| < |K| + 1 so 0 ≤ |K|/(|K| + 1) ≤ 1 (we will use this later). Next, since (an )n≥1 converges, (an )n≥1 is bounded by Proposition 2.2.2. Hence there exists an M > 0 such that |an | < M for all n ∈ N.

Since L = limn→∞ an , there exists an N1 ∈ N such that |an − L| < ε/(2(|K| + 1)) for all n ≥ N1 (as ε/(2(|K| + 1)) > 0 is a constant). Similarly, since K = limn→∞ bn , there exists an N2 ∈ N such that |bn − K| < ε/(2M) for all n ≥ N2 (as ε/(2M) > 0 is a constant). Let N = max{N1 , N2 }. Hence, using the Triangle Inequality, for all n ≥ N ,

$$\begin{aligned} |a_n b_n - LK| &= |(a_n b_n - a_n K) + (a_n K - LK)| \\ &\le |a_n b_n - a_n K| + |a_n K - LK| \\ &\le |a_n|\,|b_n - K| + |K|\,|a_n - L| \\ &\le M\,|b_n - K| + |K|\,|a_n - L| \\ &< M \cdot \frac{\varepsilon}{2M} + |K| \cdot \frac{\varepsilon}{2(|K| + 1)} \\ &\le \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon. \end{aligned}$$

Hence (an bn )n≥1 converges to LK by definition.

c) Apply part (b) with bn = c for all n ∈ N.

d) The one technicality here is that if bn = 0 for some n, then 1/bn does not make sense. However, since K = limn→∞ bn and since |K|/2 > 0 as K ≠ 0, there exists an N1 ∈ N such that |bn − K| < |K|/2 for all n ≥ N1 . Therefore, by the Triangle Inequality, |bn | ≥ |K| − |bn − K| > |K| − |K|/2 = |K|/2 > 0 for all n ≥ N1 . Hence, if n ≥ N1 we have that |bn | > 0 and thus 1/bn is well-defined for suitably large n. Furthermore, since limits depend only on the behaviour for large n, it makes sense to consider the sequence (1/bn )n≥1 .

Let ε > 0 be arbitrary. In the above paragraph, we saw that |bn | ≥ |K|/2 for all n ≥ N1 and thus 1/|bn | ≤ 2/|K| for all n ≥ N1 (as K ≠ 0). Since K = limn→∞ bn , there exists an N2 ∈ N such that |bn − K| < ε|K|²/2 for all n ≥ N2 (as ε|K|²/2 > 0 is a constant). Therefore, for all n ≥ max{N1 , N2 },

$$\left|\frac{1}{b_n} - \frac{1}{K}\right| = \frac{|K - b_n|}{|b_n|\,|K|} < \frac{\varepsilon |K|^2}{2\,|b_n|\,|K|} = \frac{\varepsilon |K|}{2} \cdot \frac{1}{|b_n|} \le \frac{\varepsilon |K|}{2} \cdot \frac{2}{|K|} = \varepsilon.$$

Hence (1/bn )n≥1 converges to 1/K by definition.

e) By part (d), limn→∞ 1/bn = 1/K. Hence, as limn→∞ an = L, part (b) implies that limn→∞ an · (1/bn ) = L · (1/K) = L/K, completing the proof.

Example 2.3.2. Consider the sequence (an )n≥1 defined recursively via a1 = 1 and an+1 = √(3 + 2an) for all n ≥ 1. In Example 2.2.5, we used the Monotone Convergence Theorem (Theorem 2.2.4) along with the fact that 0 ≤ an ≤ an+1 ≤ 3 to show that (an )n≥1 converges. It remains to compute the limit of this sequence.

Let L = limn→∞ an . Since an+1 = √(3 + 2an) for all n ≥ 1, we have that $a_{n+1}^2 = 3 + 2a_n$ for all n ∈ N. Therefore, using Theorem 2.3.1, we obtain that

$$3 + 2L = \lim_{n\to\infty} (3 + 2a_n) = \lim_{n\to\infty} a_{n+1}^2 = \lim_{n\to\infty} a_n^2 = \left(\lim_{n\to\infty} a_n\right)^2 = L^2,$$

where the third equality holds since an index shift does not change the limit.

Hence L² − 2L − 3 = 0 so (L − 3)(L + 1) = 0 so L = 3 or L = −1. However, since −1 < 0 < 1 = a1 ≤ an for all n ∈ N, |an − (−1)| ≥ 2 for all n ∈ N and thus −1 cannot be the limit of (an )n≥1 by the definition of the limit. Hence limn→∞ an = 3.
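Iterating the recursion numerically is consistent with the conclusion of Example 2.3.2. The following minimal Python sketch uses floating-point arithmetic, so it is an approximate illustration rather than a proof; the function name `recursive_terms` is our own.

```python
import math

def recursive_terms(count: int) -> list[float]:
    """Return a_1, ..., a_count for a_1 = 1 and a_{n+1} = sqrt(3 + 2 a_n)."""
    terms = [1.0]
    while len(terms) < count:
        terms.append(math.sqrt(3 + 2 * terms[-1]))
    return terms

terms = recursive_terms(30)
# The terms are non-decreasing and bounded above by 3, as in Example 2.2.5 ...
assert all(x <= y <= 3 for x, y in zip(terms, terms[1:]))
# ... and they approach the root L = 3 of L^2 - 2L - 3 = 0, not the root L = -1.
print(terms[:4])
print(abs(terms[-1] - 3))
```

Near 3 the distance to the limit shrinks by roughly a factor of 3 per step, so after 30 terms the gap is far below 10⁻¹².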

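Example 2.3.3. Consider the sequence (an )n≥1 where an = (5n² + 2n)/(3n² − n + 4) for all n ∈ N. Does (an )n≥1 converge and, if so, what is its limit?

To answer this question, notice that

$$a_n = \frac{5n^2 + 2n}{3n^2 - n + 4} = \frac{n^2\left(5 + \frac{2}{n}\right)}{n^2\left(3 - \frac{1}{n} + \frac{4}{n^2}\right)} = \frac{5 + \frac{2}{n}}{3 - \frac{1}{n} + \frac{4}{n^2}}.$$

Since limn→∞ 1/n = 0 by Example 2.1.8, and since limn→∞ 1/n² = 0 (see homework), we obtain that

$$\lim_{n\to\infty}\left(5 + \frac{2}{n}\right) = 5 \quad\text{and}\quad \lim_{n\to\infty}\left(3 - \frac{1}{n} + \frac{4}{n^2}\right) = 3, \quad\text{so}\quad \lim_{n\to\infty} a_n = \frac{5}{3}.$$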
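Exact rational arithmetic makes the convergence to 5/3 visible. In the minimal Python sketch below (the function name `a` is our own), the gap |an − 5/3| shrinks as n grows; only finitely many terms are evaluated, so this illustrates the limit rather than proving it.

```python
from fractions import Fraction

def a(n: int) -> Fraction:
    """a_n = (5n^2 + 2n) / (3n^2 - n + 4), computed exactly."""
    return Fraction(5 * n**2 + 2 * n, 3 * n**2 - n + 4)

for n in (1, 10, 100, 10_000):
    gap = abs(a(n) - Fraction(5, 3))
    print(n, a(n), float(gap))
```

A short computation shows an − 5/3 = (11n − 20)/(3(3n² − n + 4)), which behaves like 11/(9n) for large n, matching the slow decay of the printed gaps.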


In part (e) of Theorem 2.3.1, it was required in the proof that the

denominator does not converge to 0. This is due to the fact that there are

many different types of behaviour that may occur when the denominator of

a sequence of fractions tends to zero.

For two examples, first consider the sequences (an )n≥1 and (bn )n≥1 where an = bn = 1/n for all n ∈ N. Then clearly limn→∞ an = 0 = limn→∞ bn , and

$$\frac{a_n}{b_n} = \frac{1/n}{1/n} = 1$$

for all n ∈ N. Hence limn→∞ an/bn = 1.

Alternatively, consider the sequences (an )n≥1 and (bn )n≥1 where an = 1 and bn = 1/n for all n ∈ N. Then clearly limn→∞ an = 1 and limn→∞ bn = 0, yet

$$\frac{a_n}{b_n} = \frac{1}{1/n} = n$$

does not converge as (n)n≥1 is not bounded (see Proposition 2.2.2).

Thus, if (an )n≥1 and (bn )n≥1 are sequences and limn→∞ bn = 0, it is possible that (an/bn)n≥1 does not converge. However, if limn→∞ an/bn exists, then by part (b) of Theorem 2.3.1 we must have that

$$\lim_{n\to\infty} a_n = \lim_{n\to\infty} \frac{a_n}{b_n}\, b_n = \left(\lim_{n\to\infty} \frac{a_n}{b_n}\right)\left(\lim_{n\to\infty} b_n\right) = \left(\lim_{n\to\infty} \frac{a_n}{b_n}\right)(0) = 0.$$

Thus a necessary condition for limn→∞ an/bn to exist when limn→∞ bn = 0 is limn→∞ an = 0.

2.3.2

Diverging to Infinity

We have seen several examples of sequences that do not converge. In particular, Proposition 2.2.2 says that unbounded sequences have no chance to

converge. However, it is useful to discuss specific notions of divergence for

unbounded sequences.

Definition 2.3.4. A sequence (an )n≥1 of real numbers is said to diverge to

infinity, denoted limn→∞ an = ∞, if for every M ∈ R there exists an N ∈ N

such that an ≥ M for all n ≥ N .

Similarly, a sequence (an )n≥1 of real numbers is said to diverge to negative

infinity, denoted limn→∞ an = −∞, if for every M ∈ R there exists an N ∈ N

such that an ≤ M for all n ≥ N .

Example 2.3.5. It is clear that limn→∞ n = ∞.

Using the same proof ideas as in Theorem 2.3.1, we obtain the following.


Theorem 2.3.6. Let (an )n≥1 and (bn )n≥1 be sequences of real numbers.

Suppose that (bn )n≥1 diverges to ∞ (respectively −∞). Then

a) If (an )n≥1 is bounded below (respectively above), then limn→∞ an +bn = ∞

(respectively limn→∞ an + bn = −∞).

b) If there exists an M > 0 such that an ≥ M for all n ∈ N, then

limn→∞ an bn = ∞ (respectively limn→∞ an bn = −∞).

c) If (an )n≥1 is bounded, then limn→∞ an/bn = 0.

Proof. See the homework.

The above theorem aids us in computing limits of fractions where the

denominator grows faster than the numerator.

Example 2.3.7. Consider the sequence (an )n≥1 where an = (2n + 1)/(n² + 3) for all n ∈ N. Then

$$a_n = \frac{n\left(2 + \frac{1}{n}\right)}{n\left(n + \frac{3}{n}\right)} = \frac{2 + \frac{1}{n}}{n + \frac{3}{n}}.$$

Since limn→∞ 3/n = 0, the sequence (3/n)n≥1 is bounded (Proposition 2.2.2); as limn→∞ n = ∞, part (a) of Theorem 2.3.6 gives limn→∞ (n + 3/n) = ∞. Hence, since limn→∞ (2 + 1/n) = 2 so (2 + 1/n)n≥1 is bounded, part (c) of Theorem 2.3.6 gives limn→∞ (2n + 1)/(n² + 3) = 0.

2.3.3

The Squeeze Theorem

Using Theorem 2.3.6, it is possible to show that (cos(n)/n)n≥1 converges to zero. Indeed, if an = cos(n) and bn = n for all n ∈ N, then (an )n≥1 is bounded (above by 1 and below by −1) and limn→∞ bn = ∞, so part (c) of Theorem 2.3.6 implies limn→∞ cos(n)/n = 0. Alternatively, we can show limn→∞ cos(n)/n = 0 by noting that −1/n ≤ cos(n)/n ≤ 1/n for all n ∈ N and by applying the following useful theorem (which may be used to prove part (c) of Theorem 2.3.6).

Theorem 2.3.8 (Squeeze Theorem). Let (an )n≥1 , (bn )n≥1 and (cn )n≥1

be sequences of real numbers such that there exists an N0 ∈ N such that

an ≤ bn ≤ cn

for all n ≥ N0 .

If limn→∞ an = limn→∞ cn = L, then (bn )n≥1 converges and limn→∞ bn = L.

Proof. Let ε > 0 be arbitrary. Since L = limn→∞ an , there exists an N1 ∈ N such that |an − L| < ε for all n ≥ N1 . Hence L − ε < an for all n ≥ N1 . Similarly, since L = limn→∞ cn , there exists an N2 ∈ N such that |cn − L| < ε for all n ≥ N2 . Hence cn < L + ε for all n ≥ N2 . Therefore, for all n ≥ max{N0 , N1 , N2 }, we have that

$$L - \varepsilon < a_n \le b_n \le c_n < L + \varepsilon.$$

Hence −ε < bn − L < ε, and thus |bn − L| < ε, for all n ≥ max{N0 , N1 , N2 }. Hence (bn )n≥1 converges and limn→∞ bn = L by definition.
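The squeeze −1/n ≤ cos(n)/n ≤ 1/n used before Theorem 2.3.8 can be spot-checked numerically. In the minimal Python sketch below (helper names are our own, computations use floating point, and all checks are finite), the bound |cos(n)/n| ≤ 1/n also yields an explicit N for a given ε; we take N = ⌈1/ε⌉ + 1, a slightly generous choice that stays valid despite floating-point rounding.

```python
import math

def squeeze_check(n_max: int) -> bool:
    """Check -1/n <= cos(n)/n <= 1/n for n = 1, ..., n_max (floating point)."""
    return all(-1 / n <= math.cos(n) / n <= 1 / n for n in range(1, n_max + 1))

def squeeze_N(eps: float) -> int:
    """Since |cos(n)/n| <= 1/n, any N with 1/N < eps works; use N = ceil(1/eps) + 1."""
    return math.ceil(1 / eps) + 1

print(squeeze_check(10_000))
eps = 1e-3
N = squeeze_N(eps)
print(N, all(abs(math.cos(n) / n) < eps for n in range(N, N + 10_000)))
```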

2.3.4

Limit Supremum and Limit Infimum

There are many sequences that neither converge nor diverge to ±∞. For example, the sequence ((−1)ⁿ⁺¹)n≥1 has been shown not to converge and

clearly does not diverge to ±∞ as it is bounded. Consequently, we may ask,

“Is it possible to obtain some information about this sequence as n tends to

infinity?”

For the sequence ((−1)ⁿ⁺¹)n≥1, the most useful information (that its terms oscillate between −1 and 1) can be obtained by taking the least upper bound and greatest lower bound

of its elements. Consequently, we extend the notions of least upper bound

and greatest lower bound to include infinities.

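Definition 2.3.9. Let X be a set of real numbers. The supremum of X, denoted sup(X), is defined to be

$$\sup(X) := \begin{cases} -\infty & \text{if } X = \emptyset, \\ \operatorname{lub}(X) & \text{if } X \neq \emptyset \text{ and } X \text{ is bounded above}, \\ \infty & \text{if } X \text{ is not bounded above}. \end{cases}$$

Similarly, the infimum of X, denoted inf(X), is defined to be

$$\inf(X) := \begin{cases} \infty & \text{if } X = \emptyset, \\ \operatorname{glb}(X) & \text{if } X \neq \emptyset \text{ and } X \text{ is bounded below}, \\ -\infty & \text{if } X \text{ is not bounded below}. \end{cases}$$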

The infimum and supremum of the set of terms of a sequence are not the objects we are after, since we are more interested in the behaviour of sequences as n gets large. For example, consider the sequence ((−1)ⁿ⁺¹(1 + 1/n))n≥1. It is not difficult to see that 2 is the supremum of this sequence and −3/2 is the infimum of this sequence. However, as n gets larger and larger, the largest values of the sequence are very close to 1 and the smallest values of the sequence are very close to −1. How can we express this notion mathematically for arbitrary sequences?

Let (an )n≥1 be a sequence. To see how the largest values of (an )n≥1 behave as n grows, we can take the supremum after we ignore the first few terms. Consequently, we define a new sequence (bn )n≥1 by

bn = sup{ak | k ≥ n}.


It is not difficult to see that b1 ≥ b2 ≥ b3 ≥ · · · as the supremum may only

get smaller as we remove terms from the set from which we are taking the

supremum. Consequently we see that (bn )n≥1 is a monotone sequence. Since

(bn )n≥1 is non-increasing, (bn )n≥1 either converges to a number, diverges to

−∞, or bn = ∞ for all n.

Applying the same idea with the sequence (cn )n≥1 where

cn = inf{ak | k ≥ n}

we arrive at the following.

Definition 2.3.10. The limit supremum of a sequence (an )n≥1 of real numbers, denoted lim supn→∞ an , is

$$\limsup_{n\to\infty} a_n = \lim_{n\to\infty} \sup\{a_k \mid k \ge n\} \in \mathbb{R} \cup \{\pm\infty\}.$$

Similarly, the limit infimum of a sequence (an )n≥1 of real numbers, denoted lim inf n→∞ an , is

$$\liminf_{n\to\infty} a_n = \lim_{n\to\infty} \inf\{a_k \mid k \ge n\} \in \mathbb{R} \cup \{\pm\infty\}.$$

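To illustrate some of the possible values, one can check that

$$\limsup_{n\to\infty} (-1)^{n+1}\left(1 + \frac{1}{n}\right) = 1 \quad\text{and}\quad \liminf_{n\to\infty} (-1)^{n+1}\left(1 + \frac{1}{n}\right) = -1,$$

whereas

$$\limsup_{n\to\infty} n = \infty \quad\text{and}\quad \liminf_{n\to\infty} n = \infty.$$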
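For the sequence ak = (−1)ᵏ⁺¹(1 + 1/k), the tail suprema bn and tail infima cn can be computed exactly. The minimal Python sketch below relies on a fact specific to this sequence (not true in general): the odd-indexed terms decrease and the even-indexed terms increase, so the supremum and infimum of {ak | k ≥ n} are attained at k = n or k = n + 1. The function names are our own.

```python
from fractions import Fraction

def a(k: int) -> Fraction:
    """a_k = (-1)^(k+1) * (1 + 1/k)."""
    return (-1) ** (k + 1) * (1 + Fraction(1, k))

def tail_sup_inf(n: int) -> tuple[Fraction, Fraction]:
    """Return (b_n, c_n) = (sup, inf) of {a_k : k >= n} for this particular sequence.

    Odd-indexed terms 1 + 1/k decrease and even-indexed terms -(1 + 1/k) increase,
    so the sup is whichever of a_n, a_{n+1} has odd index and the inf is the other;
    looking only at the window {a_n, a_{n+1}} is therefore exact here.
    """
    window = [a(n), a(n + 1)]
    return max(window), min(window)

for n in (1, 2, 3, 10, 1000):
    b_n, c_n = tail_sup_inf(n)
    print(n, b_n, c_n)
# b_n decreases towards 1 = lim sup, and c_n increases towards -1 = lim inf.
```

For n = 1 this recovers the supremum 2 and infimum −3/2 of the whole sequence, while for large n the values approach 1 and −1.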

Unsurprisingly, there is a close connection between lim inf, lim sup, and

lim. To see this connection, we require the following.

Theorem 2.3.11 (Comparison Theorem). Let (an )n≥1 and (bn )n≥1 be

convergent sequences of real numbers. Suppose that there exists an N0 ∈ N

such that an ≤ bn for all n ≥ N0 . Then limn→∞ an ≤ limn→∞ bn .

Proof. Let L = limn→∞ an and let K = limn→∞ bn . Suppose that K < L. Therefore, if ε = (L − K)/2, then ε > 0.

Since L = limn→∞ an , there exists an N1 ∈ N such that |an − L| < ε for all n ≥ N1 . Hence L < an + ε for all n ≥ N1 . Similarly, since K = limn→∞ bn , there exists an N2 ∈ N such that |bn − K| < ε for all n ≥ N2 . Hence bn ≤ K + ε for all n ≥ N2 .

Therefore, if n ≥ max{N1 , N2 , N0 }, we obtain that

$$L < a_n + \varepsilon \le b_n + \varepsilon \le K + 2\varepsilon = K + |K - L|.$$

Hence L − K < |K − L|. However, this is impossible as we are assuming that K < L, which would imply |K − L| = L − K. Hence we have obtained a contradiction in the case that K < L, so it must be the case that L ≤ K.


Proposition 2.3.12. Let (an )n≥1 be a sequence of real numbers such that

$$\liminf_{n\to\infty} a_n,\ \limsup_{n\to\infty} a_n \in \mathbb{R}.$$

Then

$$\liminf_{n\to\infty} a_n \le \limsup_{n\to\infty} a_n.$$

In addition, (an )n≥1 converges if and only if lim inf n→∞ an = lim supn→∞ an . In this case

$$\lim_{n\to\infty} a_n = \liminf_{n\to\infty} a_n = \limsup_{n\to\infty} a_n.$$

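Proof. For the remainder of the proof, for each n ∈ N let

bn = sup{ak | k ≥ n} and cn = inf{ak | k ≥ n}.

Since lim supn→∞ an and lim inf n→∞ an are real numbers, (an )n≥1 is bounded (otherwise bn = ∞ for all n or cn = −∞ for all n), so bn , cn ∈ R for all n ∈ N. Clearly

$$\limsup_{n\to\infty} a_n = \lim_{n\to\infty} b_n, \quad \liminf_{n\to\infty} a_n = \lim_{n\to\infty} c_n, \quad\text{and}\quad c_n \le a_n \le b_n \text{ for all } n.$$

Hence, the Comparison Theorem (Theorem 2.3.11) implies lim inf n→∞ an ≤ lim supn→∞ an .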

Next, suppose that lim inf n→∞ an = lim supn→∞ an . Therefore, since cn ≤ an ≤ bn for all n ∈ N, we obtain that (an )n≥1 converges and

$$\lim_{n\to\infty} a_n = \liminf_{n\to\infty} a_n = \limsup_{n\to\infty} a_n$$

by the Squeeze Theorem (Theorem 2.3.8).

Finally, suppose L = limn→∞ an exists. Let ε > 0. Hence there exists an N ∈ N such that |an − L| < ε for all n ≥ N . Thus L − ε ≤ an ≤ L + ε for all n ≥ N . Therefore L − ε ≤ cn ≤ bn ≤ L + ε for all n ≥ N by the definition of bn and cn . Hence, the Comparison Theorem (Theorem 2.3.11) implies that

$$L - \varepsilon \le \lim_{n\to\infty} c_n \le \lim_{n\to\infty} b_n \le L + \varepsilon$$

for all ε > 0. In particular,

$$L - \frac{1}{m} \le \lim_{n\to\infty} c_n \le \lim_{n\to\infty} b_n \le L + \frac{1}{m}$$

for all m ∈ N. Therefore, since limm→∞ 1/m = 0, the above is only possible (for example, by the Squeeze Theorem (Theorem 2.3.8)) if

$$L = \lim_{n\to\infty} b_n = \lim_{n\to\infty} c_n.$$

2.4

The Bolzano–Weierstrass Theorem

We have seen that many sequences do not converge. The lim inf and lim sup

do provide us with some information about the sequence. However, one

natural question to ask is, “If we have a sequence that does not converge,

can we remove terms from the sequence to make it converge?” Of course for

convergence, our new sequence must be bounded by Proposition 2.2.2. Thus

perhaps a better question is, “If we have a bounded sequence that does not

converge, can we remove terms from the sequence to make it converge?”

2.4.1

Subsequences

To answer the above question, we must describe what we mean by ‘remove

terms from a sequence’. This is made precise by the mathematical notion of

a subsequence.

Definition 2.4.1. A subsequence of a sequence (an )n≥1 of real numbers is

any sequence (bn )n≥1 of real numbers such that there exists an increasing

sequence of natural numbers (kn )n≥1 so that bn = akn for all n ∈ N.

For example, if (an )n≥1 is our favourite sequence an = (−1)ⁿ⁺¹ for all n ∈ N and if we choose kn = 2n − 1 for all n ∈ N, then (akn )n≥1 is the sequence (1, 1, 1, . . .). Similarly, if (bn )n≥1 is the sequence where bn = 1/n for all n ∈ N and if we choose kn = n² for all n ∈ N, then (bkn )n≥1 is the sequence (1, 1/4, 1/9, . . .) = (1/n²)n≥1.
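Definition 2.4.1 translates directly into code: a subsequence is obtained by composing the sequence with an increasing sequence of indices. The minimal Python sketch below reproduces the two examples above; the names `subsequence`, `a` and `b` are our own.

```python
from fractions import Fraction
from typing import Callable, List

def subsequence(seq: Callable[[int], object], k: Callable[[int], int], count: int) -> List[object]:
    """Return the first `count` terms (a_{k_1}, a_{k_2}, ...) of the subsequence (a_{k_n}).

    `seq` maps n to a_n and `k` maps n to k_n; Definition 2.4.1 requires k_n to be increasing.
    """
    ks = [k(n) for n in range(1, count + 1)]
    if any(k1 >= k2 for k1, k2 in zip(ks, ks[1:])):
        raise ValueError("the indices k_n must be increasing")
    return [seq(i) for i in ks]

def a(n: int) -> int:
    return (-1) ** (n + 1)        # a_n = (-1)^(n+1)

def b(n: int) -> Fraction:
    return Fraction(1, n)         # b_n = 1/n

print(subsequence(a, lambda n: 2 * n - 1, 5))   # k_n = 2n - 1 (odd indices)
print(subsequence(b, lambda n: n * n, 4))       # k_n = n^2 (square indices)
```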

In the above paragraph, notice that the sequence (an )n≥1 diverges whereas

the given subsequence converges. Thus it is possible that divergent sequences

have convergent subsequences. Furthermore, (bn )n≥1 and the given subsequence both converge to 0. It is not difficult to see that every subsequence

of (bn )n≥1 converges to zero and this is no coincidence.

Proposition 2.4.2. Let (an )n≥1 be a sequence of real numbers that converges

to L. Every subsequence of (an )n≥1 converges to L.

Proof. Let (akn )n≥1 be a subsequence of (an )n≥1 . Let ε > 0. Since L = limn→∞ an , there exists an N ∈ N such that |an − L| < ε for all n ≥ N . Since (kn )n≥1 is an increasing sequence of natural numbers, there exists an N0 ∈ N such that kn ≥ N for all n ≥ N0 (for instance, since kn+1 ≥ kn + 1, induction gives kn ≥ n for all n, so N0 = N works). Hence |akn − L| < ε for all n ≥ N0 . Therefore, as ε > 0 was arbitrary, we obtain that limn→∞ akn = L by the definition of the limit.

2.4.2

The Peak Point Lemma

It is natural to ask, “Given a sequence, is there a ‘nice’ subsequence?” Of

course ‘nice’ is ambiguous, but the following demonstrates a specific form of

subsequence we may always construct.


Lemma 2.4.3 (The Peak Point Lemma). Every sequence of real numbers

has a monotone subsequence.

To prove the above lemma, we will use the following notion (from which the lemma gets its name):

Definition 2.4.4. Let (an )n≥1 be a sequence of real numbers. An index

n0 ∈ N is said to be a peak point for the sequence (an )n≥1 if an ≤ an0 for all

n ≥ n0 .

Proof of Lemma 2.4.3. Let (an )n≥1 be a sequence of real numbers. The

proof is divided into two cases:

Case 1. (an )n≥1 has an infinite number of peak points: By assumption there exist indices k1 < k2 < k3 < · · · such that kj is a peak point for all

j ∈ N. Therefore, we have by the definition of a peak point that akn ≥ akn+1

for all n ∈ N. Hence (akn )n≥1 is a non-increasing subsequence of (an )n≥1 .

Case 2. (an )n≥1 has a finite number (or no) peak points: Let n0 be the

largest peak point of (an )n≥1 (or n0 = 1 if (an )n≥1 has no peak points),

and let k1 = n0 + 1. Thus k1 is not a peak point of (an )n≥1 . Therefore

there exists a k2 > k1 = n0 + 1 such that ak2 > ak1 . Subsequently, since

k2 > k1 > n0 , k2 is not a peak point. Therefore there exists a k3 > k2 such

that ak3 > ak2 . Repeating this process ad nauseam, we obtain a sequence

of indices k1 < k2 < k3 < · · · such that akn+1 > akn for all n ∈ N. Hence

(akn )n≥1 is an increasing subsequence of (an )n≥1 .

Since a monotone subsequence can be constructed in either case, the result follows.
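Case 2 of the proof is essentially a search procedure. Starting just after the last peak point, we repeatedly look ahead for a strictly larger term. The following Python sketch carries out that search for a sequence given as a function of $n$. The helper name `increasing_subsequence_indices` and the `max_search` cutoff are hypothetical choices of ours. The search is only guaranteed to terminate under the Case 2 assumption that no index from `start` onward is a peak point. The cutoff merely stops the loop if that assumption fails.

```python
from fractions import Fraction


def increasing_subsequence_indices(a, start, count, max_search=10**6):
    """Follow the greedy construction in Case 2 of the Peak Point Lemma.

    a: function mapping n >= 1 to the term a_n.
    start: the first index k_1; assumes no index >= start is a peak point.
    count: number of indices k_1 < k_2 < ... to return.
    max_search: hypothetical safety cutoff on how far ahead to look.
    """
    indices = [start]
    while len(indices) < count:
        k = indices[-1]
        # k is not a peak point, so some n > k has a_n > a_k; find the first one.
        n = k + 1
        while a(n) <= a(k):
            n += 1
            if n > k + max_search:
                raise RuntimeError(f"no larger term within {max_search} steps of index {k}")
        indices.append(n)
    return indices


def sample(n):
    """a_n = (-1)^n - 1/n, a sequence with no peak points."""
    return (-1) ** n - Fraction(1, n)


print(increasing_subsequence_indices(sample, start=2, count=5))  # → [2, 4, 6, 8, 10]
```

The sample sequence $a_n = (-1)^n - 1/n$ has no peak points. Its even-indexed terms $1 - 1/n$ increase, and each odd-indexed term is negative and hence smaller than the next term. The proof therefore takes $n_0 = 1$ and $k_1 = 2$. Since the code inspects only finitely many terms, it illustrates the construction rather than proving anything.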

2.4.3 The Bolzano–Weierstrass Theorem

Combining the Peak Point Lemma with the Monotone Convergence Theorem, we easily obtain the following.

Theorem 2.4.5 (The Bolzano–Weierstrass Theorem). Every bounded sequence of real numbers has a convergent subsequence.

Proof. Let $(a_n)_{n\geq 1}$ be a bounded sequence of real numbers. By the Peak Point Lemma (Lemma 2.4.3), there exists a monotone subsequence $(a_{k_n})_{n\geq 1}$ of $(a_n)_{n\geq 1}$. Since $(a_n)_{n\geq 1}$ is bounded, $(a_{k_n})_{n\geq 1}$ is also bounded and thus converges by the Monotone Convergence Theorem (Theorem 2.2.4).
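To see the proof in action on a concrete bounded sequence (an example of our choosing), take $a_n = (-1)^n + 1/n$. Each even-indexed term $1 + \frac{1}{2m}$ is at least every later term, because later even-indexed terms are smaller and all odd-indexed terms are at most $0$. No odd index is a peak point. So Case 1 of the Peak Point Lemma applies with $k_m = 2m$. The Monotone Convergence Theorem then identifies the limit as the greatest lower bound of the subsequence:

```latex
\begin{align*}
a_n &= (-1)^n + \frac{1}{n}, \qquad |a_n| \leq 1 + \frac{1}{n} \leq 2 \quad \text{(bounded)},\\
a_{2m} &= 1 + \frac{1}{2m} \geq a_n \ \text{ for all } n \geq 2m
  \quad \text{(every even index is a peak point)},\\
a_{2m+1} &= -1 + \frac{1}{2m+1} \leq 0 < a_{2m+2}
  \quad \text{(no odd index is a peak point)},\\
a_{2} &\geq a_{4} \geq a_{6} \geq \cdots \geq 1
  \implies \lim_{m\to\infty} a_{2m} = \inf_{m \geq 1} \left(1 + \frac{1}{2m}\right) = 1.
\end{align*}
```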

Chapter 3

An Introduction to Topology

With the above study of sequences complete, we can turn our attention to analyzing properties of the real numbers and their subsets through convergent sequences. One of the most important properties of the real numbers is completeness, which guarantees the convergence of certain types of sequences. Furthermore, when a subset of the real numbers has certain properties, the limit of any convergent sequence of elements from that subset must also lie in the subset.

3.1 Completeness of the Real Numbers

At present, one difficulty in determining whether a sequence converges is that we must have an idea of what its limit is in order to prove convergence. This holds even for bounded monotone sequences, as intuition (and the Monotone Convergence Theorem) tells us that the limit is either the least upper bound or the greatest lower bound of the sequence. Thus it is natural to ask, “Is there a way to determine whether a sequence converges without knowing its limit?”

3.1.1 Cauchy Sequences

If a sequence converges, then eventually all of its terms are as close to the limit as we would like. In particular, by the Triangle Inequality, eventually all terms of the sequence are as close to each other as we would like. This leads us to the notion of a Cauchy sequence.

Heuristic Definition. A sequence $(a_n)_{n\geq 1}$ is said to be Cauchy if the terms of $(a_n)_{n\geq 1}$ are as close to each other as we would like, provided their indices are large enough.

As with the definition of the limit of a sequence, the notion of a Cauchy sequence can be made mathematically precise.


Definition 3.1.1. A sequence $(a_n)_{n\geq 1}$ of real numbers is said to be Cauchy if for all $\epsilon > 0$ there exists an $N \in \mathbb{N}$ such that $|a_n - a_m| < \epsilon$ for all $n, m \geq N$.
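As a quick check of the definition (an illustrative example, not taken from the notes), the sequence $a_n = 1/n$ is Cauchy. Given $\epsilon > 0$, choose $N \in \mathbb{N}$ with $1/N < \epsilon$, which is possible by the Archimedean property. When $n, m \geq N$, both $1/n$ and $1/m$ lie in $(0, 1/N]$, so their difference is at most the larger of the two:

```latex
\begin{align*}
|a_n - a_m| = \left|\frac{1}{n} - \frac{1}{m}\right|
  \leq \max\left\{\frac{1}{n}, \frac{1}{m}\right\}
  \leq \frac{1}{N} < \epsilon
  \qquad \text{for all } n, m \geq N.
\end{align*}
```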

Note that a sequence $(a_n)_{n\geq 1}$ can satisfy $\lim_{n\to\infty} (a_{n+1} - a_n) = 0$ without being Cauchy. Indeed, if $a_n = \sum_{k=1}^{n} \frac{1}{k}$ for each $n \in \mathbb{N}$, then $a_{n+1} - a_n = \frac{1}{n+1}$, which clearly converges to zero. However, it is possible to show that $(a_n)_{n\geq 1}$ diverges to infinity. Although we cannot prove this divergence at this time, many students will have seen series in previous courses, and the techniques of the last chapter of this course will enable this proof.
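The harmonic example can be explored numerically. The Python sketch below uses `harmonic`, a helper of our own, to compute the partial sums exactly with `fractions.Fraction`. The consecutive differences $a_{n+1} - a_n$ shrink. However, the gap $a_{2n} - a_n = \sum_{k=n+1}^{2n} \frac{1}{k}$ never drops below $\frac{1}{2}$, because each of its $n$ terms is at least $\frac{1}{2n}$. Taking $\epsilon = \frac{1}{2}$, $n = 2N$ and $m = N$ in Definition 3.1.1 therefore shows directly that $(a_n)_{n\geq 1}$ is not Cauchy. The code only spot-checks a few values of $n$.

```python
from fractions import Fraction


def harmonic(n):
    """Return the partial sum a_n = 1 + 1/2 + ... + 1/n as an exact fraction."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


for n in [1, 10, 100, 1000]:
    step = harmonic(n + 1) - harmonic(n)  # equals 1/(n+1), which tends to 0
    gap = harmonic(2 * n) - harmonic(n)   # stays at least 1/2 for every n
    print(n, float(step), float(gap))
```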

As our definition of a Cauchy sequence was motivated by convergence, the