Lectures 15-20: Dynamic Programming CSE 431/531: Analysis of Algorithms Lecturer: Shi Li


CSE 431/531: Analysis of Algorithms

Lectures 15-20: Dynamic Programming

Lecturer: Shi Li

Department of Computer Science and Engineering

University at Buffalo

Spring 2016

MoWeFr 3:00-3:50pm

Knox 110

Different Paradigms for Designing Algorithms

Greedy algorithm

Make a greedy choice

Reduce the problem to a sub-problem and solve it iteratively

Divide-and-conquer

Break a problem into many independent sub-problems

Solve each sub-problem

Combine solutions for sub-problems to form a solution for the original one

Dynamic programming

Break up a problem into many overlapping sub-problems

Build solutions for larger and larger sub-problems

Use a table to store solutions for sub-problems for reuse

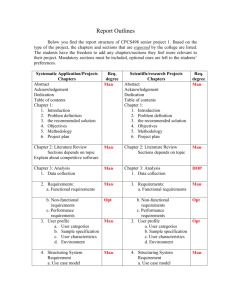

Outline

1. Weighted Interval Scheduling
2. Subset Sum Problem
3. Knapsack Problem
4. Longest Common Subsequence
5. Longest Common Subsequence in Linear Space
6. Matrix Chain Multiplication

Recall: Interval Scheduling

Input: n jobs, job i with start time si and finish time fi

each job has a weight (or value) vi > 0

i and j are compatible if [si , fi ) and [sj , fj ) are disjoint

Output: a maximum-weight subset of mutually compatible jobs

[Figure: nine weighted jobs on a timeline]

Optimum value = 220

Hard to Design a Greedy Algorithm

Job with the earliest finish time? No, we are ignoring weights

Job with the largest weight? No, we are ignoring times

Job with the largest weight/length? No, with uniform weights, we are choosing the shortest job

[Figure: example jobs on a timeline with ticks 0 to 9]

Designing a Dynamic Programming Algorithm

[Figure: the nine jobs, numbered 1 to 9 by finish time, with their weights]

Sort jobs according to non-decreasing order of finish times

opt(i): optimal value for instance defined by jobs

{1, 2, · · · , i}

opt(0) = 0, opt(1) = 80, opt(2) = 100, opt(3) = 100

opt(4) = 105, opt(5) = 150, opt(6) = 170, opt(7) = 185


In the optimum solution for {1, 2, 3, · · · , i},

if job i is not selected, then opt(i) = opt(i − 1)

if job i is selected, then opt(i) = vi + opt(pi )

pi : the largest j such that fj ≤ si

opt(i) = max{opt(i − 1), vi + opt(pi )}


opt(0) = 0

opt(1) = max{opt(0), 80 + opt(0)} = 80

opt(2) = max{opt(1), 100 + opt(0)} = 100

opt(3) = max{opt(2), 90 + opt(0)} = 100

opt(4) = max{opt(3), 25 + opt(1)} = 105

opt(5) = max{opt(4), 50 + opt(3)} = 150


opt(6) = max{opt(5), 70 + opt(3)} = 170

opt(7) = max{opt(6), 80 + opt(4)} = 185

opt(8) = max{opt(7), 50 + opt(6)} = 220

opt(9) = max{opt(8), 30 + opt(7)} = 220

Recursive Algorithm

    sort and compute p
    return compute-opt(n)

compute-opt(i)

    if i = 0 then
        return 0
    else
        return max{compute-opt(i − 1), vi + compute-opt(pi)}

Running time can be exponential in n

Dynamic Programming

    sort and compute p
    opt[0] ← 0
    for i ← 1 to n
        opt[i] ← max{opt[i − 1], vi + opt[pi]}

Bottleneck is sorting and computing p: O(n lg n).

Memoized Recursive Algorithm

    sort and compute p
    opt[0] ← 0 and opt[i] ← ∞ for every i = 1, 2, 3, · · · , n
    return compute-opt(n)

compute-opt(i)

    if opt[i] ≠ ∞ then
        return opt[i]
    else
        opt[i] ← max{compute-opt(i − 1), vi + compute-opt(pi)}
        return opt[i]

Here ∞ marks an entry that has not been computed yet.

Running time is the same as dynamic programming: O(n lg n)

How Can We Recover the Optimum Schedule?

    sort and compute p
    opt[0] ← 0
    for i ← 1 to n
        if opt[i − 1] ≥ vi + opt[pi]
            opt[i] ← opt[i − 1]
            b[i] ← N
        else
            opt[i] ← vi + opt[pi]
            b[i] ← Y

Then trace back through b to collect the selected jobs:

    i ← n, S ← ∅
    while i ≠ 0
        if b[i] = N
            i ← i − 1
        else
            S ← S ∪ {i}
            i ← pi
    return S
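The table-filling and trace-back steps above can be sketched in Python as follows. This is a minimal sketch, not the lecture's own code: the function name `weighted_interval_scheduling`, the `(start, finish, value)` tuple layout, and the use of binary search to compute p are all assumptions made for illustration.

```python
from bisect import bisect_right


def weighted_interval_scheduling(jobs):
    """jobs: list of (start, finish, value). Returns (optimum value, chosen jobs)."""
    # Sort by non-decreasing finish time; job i (1-based) is jobs[i - 1].
    jobs = sorted(jobs, key=lambda job: job[1])
    n = len(jobs)
    finishes = [finish for _, finish, _ in jobs]

    # p[i] = largest j < i with f_j <= s_i (0 if none), via binary search.
    p = [0] * (n + 1)
    for i in range(1, n + 1):
        p[i] = bisect_right(finishes, jobs[i - 1][0], 0, i - 1)

    opt = [0] * (n + 1)
    take = [False] * (n + 1)  # plays the role of b[i] = Y / N
    for i in range(1, n + 1):
        with_i = jobs[i - 1][2] + opt[p[i]]
        if opt[i - 1] >= with_i:
            opt[i] = opt[i - 1]
        else:
            opt[i] = with_i
            take[i] = True

    # Trace back from i = n to recover the schedule.
    chosen = []
    i = n
    while i != 0:
        if take[i]:
            chosen.append(jobs[i - 1])
            i = p[i]
        else:
            i -= 1
    chosen.reverse()
    return opt[n], chosen


# Hypothetical instance (not the one from the slides' figure).
value, schedule = weighted_interval_scheduling(
    [(0, 3, 80), (1, 4, 100), (3, 6, 50), (4, 7, 70)]
)
print(value, schedule)
```

Sorting and the n binary searches each cost O(n lg n), and the two loops are O(n), matching the bound stated for the dynamic program.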

Longest Path Problem in Node Weighted DAG

Input: a DAG (Directed Acyclic Graph) G = (V, E)

for every v ∈ V , a weight wv ∈ R≥0 ;

two special vertices s, t ∈ V

Output: the path P from s to t that maximizes $\sum_{v \in P} w_v$, i.e., the total weight of nodes in the path

[Figure: example node-weighted DAG with source s and sink t]

OPT=13

Reduction to Longest Path Problem

For every job i, there is a node i with weight vi .

For every two jobs i, j such that fi ≤ sj , there is an edge (i, j)

Add source s and sink t with weight 0, and an edge from s

to every vertex, and add an edge from every vertex to t.

Compute longest path from s to t

[Figure: the DAG built from the nine-job example. Each job is a vertex labelled with its weight; s (weight 0) has edges to all vertices, and all vertices have edges to t (weight 0).]

Ideas of Dynamic Programming

Break up a problem into many overlapping sub-problems

Build solutions for larger and larger sub-problems

Use a table to store solutions for sub-problems for reuse


Subset Sum Problem

Input: an integer bound W > 0

a set of n items, each with an integer weight wi > 0

Output: a subset S of items that

$$\text{maximizes } \sum_{i \in S} w_i \quad \text{s.t.} \quad \sum_{i \in S} w_i \le W.$$

Motivation: you have budget W , and want to buy a subset

of items, so as to spend as much money as possible.

Example:

W = 35, n = 5, w = (14, 9, 17, 10, 13)

Optimum: S = {1, 2, 4} and 14 + 9 + 10 = 33

Greedy Algorithms for Subset Sum

Candidate Algorithm:

Sort according to non-increasing order of weights

Select items in this order as long as the total weight remains at most W

Q: Does candidate algorithm always produce optimal solutions?

A: No. W = 100, n = 3, w = (51, 50, 50).

Q: What if we change “non-increasing” to “non-decreasing”?

A: Still no. W = 100, n = 3, w = (1, 50, 50)

Design a Dynamic Programming Algorithm

Instance: n, W, (w1 , w2 , · · · , wn );

opt[n, W, (w1 , w2 , · · · , wn )]: the optimum value of instance

If we do not choose item n

residual instance: n − 1, W, (w1 , w2 , · · · , wn−1 )

value: opt[n − 1, W, (w1 , w2 , · · · , wn−1 )]

If we choose item n (assuming W ≥ wn )

residual instance: n − 1, W − wn , (w1 , w2 , · · · , wn−1 )

value: opt[n − 1, W − wn , (w1 , w2 , · · · , wn−1 )] + wn

No Need to Remember the w Vector

Fix (w1 , w2 , · · · , wn ). Initial instance: n, W .

opt[n, W ]: the optimum value of instance

If we do not choose item n

residual instance: n − 1, W

value: opt[n − 1, W ]

If we choose item n (assuming W ≥ wn )

residual instance: n − 1, W − wn

value: opt[n − 1, W − wn ] + wn

Dynamic Programming

for every i ∈ {0, 1, 2, 3, · · · , n}, W′ ∈ {0, 1, 2, · · · , W}, let opt[i, W′] be the optimum value of the instance where we have budget W′ and items {1, 2, · · · , i}

If i = 0, then opt[i, W′] = 0

If i > 0 and wi > W′, then opt[i, W′] = opt[i − 1, W′].

If i > 0 and wi ≤ W′, then

$$\mathrm{opt}[i, W'] = \max\{\, \mathrm{opt}[i-1, W'],\ \mathrm{opt}[i-1, W'-w_i] + w_i \,\}.$$

Dynamic Programming

    for W′ ← 0 to W
        opt[0, W′] ← 0
    for i ← 1 to n
        for W′ ← 0 to W
            opt[i, W′] ← opt[i − 1, W′]
            if wi ≤ W′ and opt[i − 1, W′ − wi] + wi ≥ opt[i, W′] then
                opt[i, W′] ← opt[i − 1, W′ − wi] + wi
    return opt[n, W]

Recover the Optimum Set

    for W′ ← 0 to W
        opt[0, W′] ← 0
    for i ← 1 to n
        for W′ ← 0 to W
            opt[i, W′] ← opt[i − 1, W′]
            b[i, W′] ← N
            if wi ≤ W′ and opt[i − 1, W′ − wi] + wi ≥ opt[i, W′] then
                opt[i, W′] ← opt[i − 1, W′ − wi] + wi
                b[i, W′] ← Y
    return opt[n, W]

Recover the Optimum Set

    i ← n, W′ ← W, S ← ∅
    while i > 0
        if b[i, W′] = Y then
            W′ ← W′ − wi
            S ← S ∪ {i}
        i ← i − 1
    return S
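The table-filling and recovery steps for Subset Sum can be sketched together in Python. This is an illustrative sketch under stated assumptions: the function name `subset_sum` and the boolean table `took` (standing in for b[i, W′] = Y) are not from the slides, and the item is taken only when it fits, i.e. when wi ≤ W′.

```python
def subset_sum(weights, W):
    """Return (best total, chosen 1-based item indices) with total <= W."""
    n = len(weights)
    # opt[i][c]: best total using items 1..i with budget c.
    opt = [[0] * (W + 1) for _ in range(n + 1)]
    took = [[False] * (W + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w = weights[i - 1]
        for c in range(W + 1):
            opt[i][c] = opt[i - 1][c]
            # Item i may only be taken when it fits in the budget c.
            if w <= c and opt[i - 1][c - w] + w >= opt[i][c]:
                opt[i][c] = opt[i - 1][c - w] + w
                took[i][c] = True

    # Walk back from (n, W), reducing the budget whenever item i was taken.
    chosen, c = [], W
    for i in range(n, 0, -1):
        if took[i][c]:
            chosen.append(i)
            c -= weights[i - 1]
    chosen.reverse()
    return opt[n][W], chosen


print(subset_sum([14, 9, 17, 10, 13], 35))
print(subset_sum([51, 50, 50], 100))
```

The first call uses the example instance from the slides; the second is the instance on which the greedy candidate fails.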

Running Time of Algorithm


Running time is O(nW )

Running time is pseudo-polynomial because it depends on the values of the input integers, not only on the number of items.


Knapsack Problem

Input: an integer bound W > 0

a set of n items, each with an integer weight wi > 0

a value vi > 0 for each item i

Output: a subset S of items that

$$\text{maximizes } \sum_{i \in S} v_i \quad \text{s.t.} \quad \sum_{i \in S} w_i \le W.$$

Motivation: you have budget W, and want to buy a subset of items of maximum total value.

Greedy Algorithm

    sort items according to non-increasing order of vi/wi
    for each item in the ordering
        take the item if we have enough budget

Bad example: W = 100, n = 2, w = (1, 100), v = (1.1, 100).

Optimum takes item 2 and greedy takes item 1.

Indeed, greedy algorithm “almost” works, except for the last

item we missed

Fractional Knapsack Problem

Input: an integer bound W > 0,

a set of n items, each with an integer weight wi > 0

a value vi > 0 for each item i

Output: a vector (α1, α2, · · · , αn) ∈ [0, 1]^n that

$$\text{maximizes } \sum_{i=1}^{n} \alpha_i v_i \quad \text{s.t.} \quad \sum_{i=1}^{n} \alpha_i w_i \le W.$$

Greedy algorithm: sort items according to non-increasing order of vi/wi; for each item in this order, take as large a fraction of the item as possible.

Greedy is Optimum for Fractional Knapsack

sort items according to non-increasing order of vi/wi,

for each item in this order, take as large a fraction of the item as possible.

W = 100, n = 2, w = (1, 100), v = (1.1, 100).

α1 = 1, α2 = 0.99, value = 1.1 + 99 = 100.1.

Idea of proof: exchange argument.
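As a concrete illustration of the greedy rule for the fractional problem, here is a minimal Python sketch. The function name `fractional_knapsack` and its return shape are assumptions made for this example.

```python
def fractional_knapsack(weights, values, W):
    """Return (alpha, total value); alpha[i] in [0, 1] is the fraction of item i taken."""
    # Consider items in non-increasing order of value per unit weight.
    order = sorted(range(len(weights)),
                   key=lambda i: values[i] / weights[i],
                   reverse=True)
    alpha = [0.0] * len(weights)
    remaining = W
    total = 0.0
    for i in order:
        if remaining <= 0:
            break
        # Take the whole item if it fits, otherwise the fraction that fills the budget.
        fraction = min(1.0, remaining / weights[i])
        alpha[i] = fraction
        total += fraction * values[i]
        remaining -= fraction * weights[i]
    return alpha, total


alpha, total = fractional_knapsack([1, 100], [1.1, 100], 100)
print(alpha, total)
```

On the instance from the slides this takes item 1 fully and 99% of item 2, for a value of about 100.1 (up to floating-point rounding).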

DP for ({0, 1}-)Knapsack Problem

opt[i, W′]: the optimum value when budget is W′ and items are {1, 2, 3, · · · , i}.

If i = 0, opt[i, W′] = 0 for every W′ = 0, 1, 2, · · · , W.

If i > 0 and wi > W′, then opt[i, W′] = opt[i − 1, W′].

If i > 0 and wi ≤ W′, then

$$\mathrm{opt}[i, W'] = \max\{\, \mathrm{opt}[i-1, W'],\ \mathrm{opt}[i-1, W'-w_i] + v_i \,\}.$$
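The recurrence translates directly into a table-filling loop. The following Python sketch is illustrative only; the function name `knapsack` is an assumption, and it returns just the optimum value (recovering the set would follow the same trace-back idea as for Subset Sum).

```python
def knapsack(weights, values, W):
    """Optimum value of the {0, 1}-knapsack instance with integer weights and budget W."""
    n = len(weights)
    # opt[i][c]: best value using items 1..i with budget c.
    opt = [[0] * (W + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w, v = weights[i - 1], values[i - 1]
        for c in range(W + 1):
            opt[i][c] = opt[i - 1][c]           # skip item i
            if w <= c:                          # take item i if it fits
                opt[i][c] = max(opt[i][c], opt[i - 1][c - w] + v)
    return opt[n][W]


# The instance on which the ratio-based greedy algorithm fails.
print(knapsack([1, 100], [1.1, 100], 100))
```

Like Subset Sum, this runs in O(nW) time, which is pseudo-polynomial.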

Path-Structure Dynamic Programming

Weighted Interval Scheduling:

opt[i] = max{opt[i − 1], opt[pi ] + vi }

Knapsack Problem:

opt[i, W′] = max{opt[i − 1, W′], opt[i − 1, W′ − wi] + vi}

[Figure: a path with nodes 0, 1, 2, · · · , n − 1, n]

Cell in the table indexed by (i, C)

i: index of node in the path

C: additional information.

opt(i, C) depends on the values of opt(j, C′) for j < i.

Exercise: Items with 3 Parameters

Input: integer bounds W > 0, S > 0,

a set of n items, each with an integer weight wi > 0

a size si > 0 for each item i

a value vi > 0 for each item i

Output: a subset T of items that

$$\text{maximizes } \sum_{i \in T} v_i \quad \text{s.t.} \quad \sum_{i \in T} w_i \le W \ \text{ and } \ \sum_{i \in T} s_i \le S.$$

Exercise: Maximum-Weight Subsets with Gaps

Input: n, integers w1 , w2 , · · · , wn ≥ 0.

Output: a set S ⊆ {1, 2, 3, · · · , n} that

$$\text{maximizes } \sum_{i \in S} w_i \quad \text{s.t.} \quad \forall i, j \in S,\ i \ne j: \ |i - j| \ge 2.$$

Example: n = 7, w = (10, 80, 100, 90, 30, 50, 70)

Choose item 2, 4, 7: value = 80 + 90 + 70 = 240

Exercise: Counting Number of Domino Coverings

Input: n

Output: number of ways to cover an n × 2 grid using domino tiles

Example: 5 different ways if n = 4.

How about the number of ways to cover an n × 3 grid?


Subsequence

A = bacdca

C = adca

C is a subsequence of A

Def. Given two sequences A[1 .. n] and C[1 .. t] of letters, C is called a subsequence of A if there exist integers 1 ≤ i_1 < i_2 < i_3 < . . . < i_t ≤ n such that A[i_j] = C[j] for every j = 1, 2, 3, · · · , t.

Exercise: how to check if sequence C is a subsequence of A?

Longest Common Subsequence

Input: A[1 .. n] and B[1 .. m]

Output: the longest common subsequence of A and B

Example:

A = bacdca

B = adbcda

LCS(A, B) = adca

Applications: edit distance (diff utility), similarity of DNAs

Matching View of LCS

[Figure: the letters of A = bacdca drawn above the letters of B = adbcda, with lines joining matched letters]

Goal of LCS: find a maximum-size non-crossing matching

between letters in A and letters in B.

Reduce to Subproblems

A = bacdca

B = adbcda

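either the last letter of A is not matched:

need to compute LCS(bacdc, adbcda)

or the last letter of B is not matched:

need to compute LCS(bacdca, adbcd)

or the last letters of A and B are matched to each other (possible only when they are equal, as here):

need to compute LCS(bacdc, adbcd)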

Dynamic Programming for LCS

opt[i, j], 0 ≤ i ≤ n, 0 ≤ j ≤ m: length of longest common

sub-sequence of A[1 .. i] and B[1 .. j].

if i = 0 or j = 0, then opt[i, j] = 0.

if i > 0, j > 0, then

$$\mathrm{opt}[i, j] = \begin{cases} \mathrm{opt}[i-1, j-1] + 1 & \text{if } A[i] = B[j] \\ \max\{\mathrm{opt}[i-1, j],\ \mathrm{opt}[i, j-1]\} & \text{if } A[i] \ne B[j] \end{cases}$$

Dynamic Programming for LCS

    for j ← 0 to m do
        opt[0, j] ← 0
    for i ← 1 to n
        opt[i, 0] ← 0
        for j ← 1 to m
            if A[i] = B[j]
                opt[i, j] ← opt[i − 1, j − 1] + 1, π[i, j] ← “↖”
            elseif opt[i, j − 1] ≥ opt[i − 1, j]
                opt[i, j] ← opt[i, j − 1], π[i, j] ← “←”
            else
                opt[i, j] ← opt[i − 1, j], π[i, j] ← “↑”

Example

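Each cell shows opt[i, j] followed by π[i, j]; rows are indexed by A = bacdca, columns by B = adbcda.

| i | A[i] | j = 0 | j = 1 (a) | j = 2 (d) | j = 3 (b) | j = 4 (c) | j = 5 (d) | j = 6 (a) |
|---|---|---|---|---|---|---|---|---|
| 0 |   | 0 ⊥ | 0 ⊥ | 0 ⊥ | 0 ⊥ | 0 ⊥ | 0 ⊥ | 0 ⊥ |
| 1 | b | 0 ⊥ | 0 ← | 0 ← | 1 ↖ | 1 ← | 1 ← | 1 ← |
| 2 | a | 0 ⊥ | 1 ↖ | 1 ← | 1 ← | 1 ← | 1 ← | 2 ↖ |
| 3 | c | 0 ⊥ | 1 ↑ | 1 ← | 1 ← | 2 ↖ | 2 ← | 2 ← |
| 4 | d | 0 ⊥ | 1 ↑ | 2 ↖ | 2 ← | 2 ← | 3 ↖ | 3 ← |
| 5 | c | 0 ⊥ | 1 ↑ | 2 ↑ | 2 ← | 3 ↖ | 3 ← | 3 ← |
| 6 | a | 0 ⊥ | 1 ↖ | 2 ↑ | 2 ← | 3 ↑ | 3 ← | 4 ↖ |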

Example: Find Common Subsequence

[Figure: the same table for A = bacdca and B = adbcda with the trace-back path highlighted. Starting from cell (6, 6), the path visits (6, 6) ↖, (5, 5) ←, (5, 4) ↖, (4, 3) ←, (4, 2) ↖, (3, 1) ↑, (2, 1) ↖ and stops at (1, 0); the diagonal steps spell out the common subsequence adca.]

Find Common Subsequence

    i ← n, j ← m, S ← “”
    while i > 0 and j > 0
        if π[i, j] = “↖” then
            S ← A[i] followed by S, i ← i − 1, j ← j − 1
        else if π[i, j] = “↑”
            i ← i − 1
        else
            j ← j − 1
    return S
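The table-filling and trace-back steps for LCS can be combined into one short Python sketch. This is illustrative only: the function name `lcs` is an assumption, and instead of storing the arrow table π it re-derives each arrow from opt during the trace-back, using the same tie-breaking rule as the pseudocode.

```python
def lcs(A, B):
    """Return one longest common subsequence of strings A and B."""
    n, m = len(A), len(B)
    # opt[i][j]: length of an LCS of A[1..i] and B[1..j] (row/column 0 stay 0).
    opt = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if A[i - 1] == B[j - 1]:
                opt[i][j] = opt[i - 1][j - 1] + 1
            else:
                opt[i][j] = max(opt[i][j - 1], opt[i - 1][j])

    # Trace back from (n, m); collected letters come out in reverse order.
    letters = []
    i, j = n, m
    while i > 0 and j > 0:
        if A[i - 1] == B[j - 1]:                 # diagonal arrow
            letters.append(A[i - 1])
            i, j = i - 1, j - 1
        elif opt[i][j - 1] >= opt[i - 1][j]:     # left arrow
            j -= 1
        else:                                    # up arrow
            i -= 1
    return "".join(reversed(letters))


print(lcs("bacdca", "adbcda"))
```

Both the time and the space used here are O(nm); the linear-space variant later in these notes reduces the space.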

Variants of Problem

Edit Distance with Insertions and Deletions

Input: strings A and B

each time we can delete a letter from A or insert a letter to A

Output: minimum number of operations (insertions or deletions) we need to change A to B

Example:

A = ocurrance, B = occurrence

3 operations: insert ’c’, remove ’a’ and insert ’e’

Obs. #OPs = length(A) + length(B) - 2 · length(LCS(A, B))

Variants of Problem

Edit Distance with Insertions, Deletions and Replacing

Input: strings A and B,

each time we can delete a letter from A, insert a letter to A or change a letter

Output: how many operations do we need to change A to B?

Example:

A = ocurrance, B = occurrence.

2 operations: insert ’c’, change ’a’ to ’e’

No tight connection to LCS

Edit Distance (with Replacing)

opt[i, j], 0 ≤ i ≤ n, 0 ≤ j ≤ m: edit distance between

A[1 .. i] and B[1 .. j].

if i = 0 then opt[i, j] = j; if j = 0 then opt[i, j] = i.

if i > 0, j > 0, then

$$\mathrm{opt}[i, j] = \begin{cases} \mathrm{opt}[i-1, j-1] & \text{if } A[i] = B[j] \\ \min\{\mathrm{opt}[i-1, j] + 1,\ \mathrm{opt}[i, j-1] + 1,\ \mathrm{opt}[i-1, j-1] + 1\} & \text{if } A[i] \ne B[j] \end{cases}$$

More General Version of Edit Distance

insertion or deletion costs δ

replacing a letter x with a letter y costs c_{x,y} (assume c_{x,x} = 0)

if i = 0 then opt[i, j] = jδ; if j = 0 then opt[i, j] = iδ.

if i > 0, j > 0, then

$$\mathrm{opt}[i, j] = \min\{\, \mathrm{opt}[i-1, j] + \delta,\ \mathrm{opt}[i, j-1] + \delta,\ \mathrm{opt}[i-1, j-1] + c_{A[i],B[j]} \,\}$$
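The general recurrence can be sketched in Python as below. The function name `edit_distance`, the parameter names `delta` and `cost`, and the default unit replacement cost are assumptions made for illustration.

```python
def edit_distance(A, B, delta=1, cost=None):
    """Edit distance from A to B; insert/delete cost delta, replacing x by y costs cost(x, y)."""
    if cost is None:
        # Assumed default: unit cost for replacing a letter by a different one.
        cost = lambda x, y: 0 if x == y else 1
    n, m = len(A), len(B)
    opt = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        opt[i][0] = i * delta          # delete all of A[1..i]
    for j in range(1, m + 1):
        opt[0][j] = j * delta          # insert all of B[1..j]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            opt[i][j] = min(
                opt[i - 1][j] + delta,                              # delete A[i]
                opt[i][j - 1] + delta,                              # insert B[j]
                opt[i - 1][j - 1] + cost(A[i - 1], B[j - 1]),       # match or replace
            )
    return opt[n][m]


print(edit_distance("ocurrance", "occurrence"))
# Pricing a replacement like a deletion plus an insertion gives the
# insertion/deletion-only distance.
print(edit_distance("ocurrance", "occurrence",
                    cost=lambda x, y: 0 if x == y else 2))
```

With unit costs this reproduces the 2-operation example; with replacement cost 2 it reproduces the 3-operation insertion/deletion example, consistent with the observation #OPs = length(A) + length(B) − 2 · length(LCS(A, B)).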

Def. A palindrome is a string which reads the same backward or

forward.

Examples: “racecar”, “wasitacaroracatisaw”, “putitup”

Longest Palindrome Subsequence

Input: a sequence A

Output: the longest subsequence C of A that is a palindrome.

Example:

Input: acbcedeacab

Output: acedeca

Longest Path Problem in Edge Weighted DAG

Input: a DAG (Directed Acyclic Graph) G = (V, E);

for every e ∈ E, a weight we ∈ R≥0 ;

two special vertices s, t ∈ V

Output: the path P from s to t that maximizes $\sum_{e \in P} w_e$, i.e., the total weight of edges in the path

[Figure: example edge-weighted DAG with source s and sink t]

OPT=13

LCS as Longest-Path: Example

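[Figure: grid graph for A = bacdca (rows) and B = adbcda (columns), with s at the top-left corner and t at the bottom-right corner. Vertical and horizontal edges have weight 0; diagonal edges have weight 1.]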

LCS as a Longest-Path Problem

for every i ∈ {0, 1, 2, · · · , n} and j ∈ {0, 1, 2, 3, · · · , m},

there is a vertex vi,j

for every i ∈ {0, 1, 2, · · · , n} and j ∈ {1, 2, 3, · · · , m}, there

is a horizontal edge of value 0 from vi,j−1 to vi,j

for every i ∈ {1, 2, · · · , n} and j ∈ {0, 1, 2, 3, · · · , m}, there

is a vertical edge of value 0 from vi−1,j to vi,j

for every i ∈ {1, 2, · · · , n} and j ∈ {1, 2, 3, · · · , m} such

that A[i] = B[j], there is a diagonal edge of value 1 from

vi−1,j−1 to vi,j

goal is to compute the longest path from v0,0 to vn,m


Computing the Length of LCS

    for j ← 0 to m do
        opt[0, j] ← 0
    for i ← 1 to n
        opt[i, 0] ← 0
        for j ← 1 to m
            if A[i] = B[j]
                opt[i, j] ← opt[i − 1, j − 1] + 1
            elseif opt[i, j − 1] ≥ opt[i − 1, j]
                opt[i, j] ← opt[i, j − 1]
            else
                opt[i, j] ← opt[i − 1, j]

Obs. The i-th row of table only depends on (i − 1)-th row.

Reducing Space to O(n + m)

Obs. The i-th row of table only depends on (i − 1)-th row.

Q: How to use this observation to reduce space?

A: We only keep two rows: the (i − 1)-th row and the i-th row.

Linear Space Algorithm to Compute Length of LCS

    for j ← 0 to m do
        opt[0, j] ← 0
    for i ← 1 to n
        opt[i mod 2, 0] ← 0
        for j ← 1 to m
            if A[i] = B[j]
                opt[i mod 2, j] ← opt[(i − 1) mod 2, j − 1] + 1
            elseif opt[i mod 2, j − 1] ≥ opt[(i − 1) mod 2, j]
                opt[i mod 2, j] ← opt[i mod 2, j − 1]
            else
                opt[i mod 2, j] ← opt[(i − 1) mod 2, j]
    return opt[n mod 2, m]
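A minimal Python sketch of the two-row idea follows. The function name `lcs_length` is an assumption; the row index `i % 2` mirrors the `i mod 2` trick in the pseudocode.

```python
def lcs_length(A, B):
    """Length of an LCS of A and B, keeping only two rows of the table."""
    n, m = len(A), len(B)
    opt = [[0] * (m + 1), [0] * (m + 1)]     # rows i mod 2 and (i - 1) mod 2
    for i in range(1, n + 1):
        cur, prev = opt[i % 2], opt[(i - 1) % 2]
        cur[0] = 0
        for j in range(1, m + 1):
            if A[i - 1] == B[j - 1]:
                cur[j] = prev[j - 1] + 1
            else:
                cur[j] = max(cur[j - 1], prev[j])
    return opt[n % 2][m]


print(lcs_length("bacdca", "adbcda"))
```

The table now uses O(m) cells instead of O(nm), but the arrows needed to recover the subsequence itself are no longer available.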

Linear Space Algorithm to Recover LCS

| · | denotes the length of a sequence

Lemma. There is a q ∈ {0, 1, · · · , m} such that

$$|\mathrm{LCS}(A, B)| = |\mathrm{LCS}(A[1..n/2],\ B[1..q])| + |\mathrm{LCS}(A[n/2+1..n],\ B[q+1..m])|$$

[Figure: A is split between positions n/2 and n/2 + 1, and B between positions q and q + 1; no matching line crosses the split.]

Q: How can we find such a q using linear space?

A: Use both forward and backward DP

Q: After we found such a q, what can we do?

A: Use divide-and-conquer to solve two sub problems

Backward Dynamic Programming for LCS

opt[i, j], 1 ≤ i ≤ n + 1, 1 ≤ j ≤ m + 1: length of longest

common sub-sequence of A[i .. n] and B[j .. m].

if i = n + 1 or j = m + 1, then opt[i, j] = 0.

if i ≤ n, j ≤ m, then

$$\mathrm{opt}[i, j] = \begin{cases} \mathrm{opt}[i+1, j+1] + 1 & \text{if } A[i] = B[j] \\ \max\{\mathrm{opt}[i+1, j],\ \mathrm{opt}[i, j+1]\} & \text{if } A[i] \ne B[j] \end{cases}$$

Backward Dynamic Programming for LCS

    for j ← 1 to m + 1 do
        opt[(n + 1) mod 2, j] ← 0
    for i ← n downto 1
        opt[i mod 2, m + 1] ← 0
        for j ← m downto 1
            if A[i] = B[j]
                opt[i mod 2, j] ← opt[(i + 1) mod 2, j + 1] + 1
            elseif opt[i mod 2, j + 1] ≥ opt[(i + 1) mod 2, j]
                opt[i mod 2, j] ← opt[i mod 2, j + 1]
            else
                opt[i mod 2, j] ← opt[(i + 1) mod 2, j]
    return opt[1, 1]

Q: How can we find such a q using linear space?

A: Use both forward and backward DP

opt: table used for forward DP

opt′: table used for backward DP

forward DP: consider A[1..n/2] and B

backward DP: consider A[n/2 + 1..n] and B

find the q ∈ {0, 1, · · · , m} that maximizes opt[(n/2) mod 2, q] + opt′[(n/2 + 1) mod 2, q + 1]

Algorithm uses O(m) space


linear-space-LCS(A[1..n], B[1..m])

    1  if n = 1, solve the problem directly and return
    2  find q using O(m) space
    3  return concatenation of linear-space-LCS(A[1..n/2], B[1..q]) and linear-space-LCS(A[n/2 + 1..n], B[q + 1..m])

we can reuse the space used for Statement 2

Space usage: O(n + m)

O(m + n) for storing input sequences and output sequence

O(m) for Statement 2

O(lg n) for storing the recursion stack
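The divide-and-conquer scheme above can be sketched in Python. Several choices here are assumptions made to keep the sketch short: the names `lcs_last_row` and `linear_space_lcs` are hypothetical, the backward DP is realized by running the forward DP on reversed strings rather than with a separate backward table, and string slicing copies data, so this version does not strictly meet the O(n + m) space bound (passing index ranges instead of slices would).

```python
def lcs_last_row(A, B):
    """Last row of the forward LCS table for A and B, keeping two rows only."""
    prev = [0] * (len(B) + 1)
    for a in A:
        cur = [0] * (len(B) + 1)
        for j, b in enumerate(B, start=1):
            cur[j] = prev[j - 1] + 1 if a == b else max(cur[j - 1], prev[j])
        prev = cur
    return prev


def linear_space_lcs(A, B):
    """Return one LCS of A and B by divide-and-conquer on A."""
    n, m = len(A), len(B)
    if n == 0 or m == 0:
        return ""
    if n == 1:
        return A if A in B else ""           # solve the base case directly
    half = n // 2
    # forward[q] = |LCS(A[1..n/2], B[1..q])|
    forward = lcs_last_row(A[:half], B)
    # backward[m - q] = |LCS(A[n/2+1..n], B[q+1..m])|, computed on reversed strings
    backward = lcs_last_row(A[half:][::-1], B[::-1])
    q = max(range(m + 1), key=lambda k: forward[k] + backward[m - k])
    return linear_space_lcs(A[:half], B[:q]) + linear_space_lcs(A[half:], B[q:])


print(linear_space_lcs("bacdca", "adbcda"))
```

The result is some longest common subsequence; when several exist, which one is returned depends on how ties for q are broken.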

Analyze Running Time of Linear-Space-LCS


Recurrence for running time:

$$T(n, m) \le cmn + T(n/2, q) + T(n/2, m - q)$$

To get intuition, let m = n and q = n/2:

$$T(n) \le 2T(n/2) + cn^2 \ \Rightarrow\ T(n) = O(n^2)$$

Using the substitution method to prove T(n, m) ≤ c′nm:

$$\begin{aligned} T(n, m) &\le cmn + T(n/2, q) + T(n/2, m - q) \\ &\le cmn + c'(n/2)q + c'(n/2)(m - q) \\ &= cmn + c'(n/2)m \\ &= (c + c'/2)nm = c'nm \quad \text{if } c' = 2c \end{aligned}$$


Matrix Chain Multiplication

Fact Multiplying two matrices of size r × k and k × c takes

r × k × c multiplications.

Matrix Chain Multiplication

Input: n matrices A1 , A2 , · · · , An of sizes

r1 × c1 , r2 × c2 , · · · , rn × cn , such that ci = ri+1 for

every i = 1, 2, · · · , n − 1.

Output: the order of computing A1 A2 · · · An with the minimum

number of multiplications

Example:

A1 : 10 × 100, A2 : 100 × 5, A3 : 5 × 50

(A1 A2)A3: A1 A2 is 10 × 5 and costs 10 · 100 · 5 = 5000; multiplying by A3 costs 10 · 5 · 50 = 2500; cost = 5000 + 2500 = 7500

A1 (A2 A3): A2 A3 is 100 × 50 and costs 100 · 5 · 50 = 25000; multiplying A1 by it costs 10 · 100 · 50 = 50000; cost = 25000 + 50000 = 75000

Exercise: How many different ways are there to multiply n matrices?

n = 4: there are 5 different ways

((A1 A2 )A3 )A4

(A1 (A2 A3 ))A4

(A1 A2 )(A3 A4 )

A1 ((A2 A3 )A4 )

A1 (A2 (A3 A4 ))

Is it O(n^c) for some c, or c^{Ω(n)} for some c > 1?

exact answer: $\binom{2n-2}{n-1} / n$ = (n − 1)-th Catalan number

Catalan numbers: 1, 1, 2, 5, 14, 42, 132, 429, 1430, 4862, 16796, 58786, 208012, 742900, 2674440, 9694845, 35357670, 129644790, 477638700, · · ·

Matrix Chain Multiplication: Design DP

Given A1 , A2 , · · · , An

Assume the last step is (A1 A2 · · · Ai )(Ai+1 Ai+2 · · · An )

Cost of last step: r1 × ci × cn

Optimality for sub-instances: we need to compute

A1 A2 · · · Ai and Ai+1 Ai+2 · · · An optimally

opt[i, j] : the minimum cost of computing Ai Ai+1 · · · Aj

for every i ∈ {1, 2, · · · , n}: opt[i, i] = 0

for every i, j such that 1 ≤ i < j ≤ n:

$$\mathrm{opt}[i, j] = \min_{k:\, i \le k < j} \left( \mathrm{opt}[i, k] + \mathrm{opt}[k+1, j] + r_i c_k c_j \right)$$

Matrix-Chain-Multiplication: DP

    let opt[i, i] ← 0 for every i = 1, 2, · · · , n
    for l ← 2 to n
        for i ← 1 to n − l + 1
            j ← i + l − 1
            opt[i, j] ← ∞
            for k ← i to j − 1
                if opt[i, k] + opt[k + 1, j] + ri ck cj < opt[i, j]
                    opt[i, j] ← opt[i, k] + opt[k + 1, j] + ri ck cj
    return opt[1, n]

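| matrix | A1 | A2 | A3 | A4 | A5 | A6 |
|---|---|---|---|---|---|---|
| size | 30 × 35 | 35 × 15 | 15 × 5 | 5 × 10 | 10 × 20 | 20 × 25 |

Table of opt[i, j] (row i, column j; ⊥ marks unused cells with i > j):

| i \ j | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1 | 0 | 15750 | 7875 | 9375 | 11875 | 15125 |
| 2 | ⊥ | 0 | 2625 | 4375 | 7125 | 10500 |
| 3 | ⊥ | ⊥ | 0 | 750 | 2500 | 5375 |
| 4 | ⊥ | ⊥ | ⊥ | 0 | 1000 | 3500 |
| 5 | ⊥ | ⊥ | ⊥ | ⊥ | 0 | 5000 |
| 6 | ⊥ | ⊥ | ⊥ | ⊥ | ⊥ | 0 |

opt[3, 6] = minimum of

opt[3, 3] + opt[4, 6] + r3 c3 c6 = 0 + 3500 + 15 × 5 × 25 = 5375

opt[3, 4] + opt[5, 6] + r3 c4 c6 = 750 + 5000 + 15 × 10 × 25 = 9500

opt[3, 5] + opt[6, 6] + r3 c5 c6 = 2500 + 0 + 15 × 20 × 25 = 10000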

Recover the Optimum Way of Multiplication

    let opt[i, i] ← 0 for every i = 1, 2, · · · , n
    for l ← 2 to n
        for i ← 1 to n − l + 1
            j ← i + l − 1
            opt[i, j] ← ∞
            for k ← i to j − 1
                if opt[i, k] + opt[k + 1, j] + ri ck cj < opt[i, j]
                    opt[i, j] ← opt[i, k] + opt[k + 1, j] + ri ck cj
                    π[i, j] ← k
    return opt[1, n]

Constructing Optimal Solution

Print-Optimal-Order(i, j)

    if i = j
        print(“A”i)
    else
        print(“(”)
        Print-Optimal-Order(i, π[i, j])
        Print-Optimal-Order(π[i, j] + 1, j)
        print(“)”)
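The DP and the recursive printing routine can be sketched together in Python. The encoding of the input is an assumption made for this example: the sizes are passed as one list `dims` with A_i of size dims[i − 1] × dims[i], so that r_i = dims[i − 1] and c_i = dims[i]. The names `matrix_chain_order`, `split` and `order` are likewise hypothetical.

```python
def matrix_chain_order(dims):
    """dims[i-1] x dims[i] is the size of A_i. Returns (minimum cost, parenthesization)."""
    n = len(dims) - 1
    opt = [[0] * (n + 1) for _ in range(n + 1)]
    split = [[0] * (n + 1) for _ in range(n + 1)]   # plays the role of π[i, j]
    for length in range(2, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            opt[i][j] = float("inf")
            for k in range(i, j):
                # r_i * c_k * c_j = dims[i-1] * dims[k] * dims[j]
                cost = opt[i][k] + opt[k + 1][j] + dims[i - 1] * dims[k] * dims[j]
                if cost < opt[i][j]:
                    opt[i][j] = cost
                    split[i][j] = k

    def order(i, j):
        # Same recursion as Print-Optimal-Order, but building a string.
        if i == j:
            return f"A{i}"
        return "(" + order(i, split[i][j]) + order(split[i][j] + 1, j) + ")"

    return opt[1][n], order(1, n)


print(matrix_chain_order([10, 100, 5, 50]))
print(matrix_chain_order([30, 35, 15, 5, 10, 20, 25])[0])
```

The three nested loops give O(n³) time and the two tables take O(n²) space.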

Similar Problem: Optimum Binary Search Tree

n elements e1 < e2 < e3 < · · · < en

ei has frequency fi

goal: build a binary search tree for {e1 , e2 , · · · , en } with the minimum accessing cost:

$$\sum_{i=1}^{n} f_i \times (\text{depth of } e_i \text{ in the tree})$$

Optimum Binary Search Tree

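Example: f1 = 10, f2 = 5, f3 = 3

[Figure: three of the possible trees]

e1 at the root, e2 below it, e3 at the bottom: 10 × 1 + 5 × 2 + 3 × 3 = 29

e2 at the root with children e1 and e3: 10 × 2 + 5 × 1 + 3 × 2 = 31

e3 at the root, e2 below it, e1 at the bottom: 10 × 3 + 5 × 2 + 3 × 1 = 43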

suppose we decided to let ei be the root

e1 , e2 , · · · , ei−1 are on left sub-tree

ei+1 , ei+2 , · · · , en are on right sub-tree

dj : depth of ej in our tree

C, CL , CR : cost of tree, left sub-tree and right sub-tree respectively

$$\begin{aligned} C = \sum_{j=1}^{n} f_j d_j &= \sum_{j=1}^{n} f_j + \sum_{j=1}^{n} f_j (d_j - 1) \\ &= \sum_{j=1}^{n} f_j + \sum_{j=1}^{i-1} f_j (d_j - 1) + \sum_{j=i+1}^{n} f_j (d_j - 1) \\ &= \sum_{j=1}^{n} f_j + C_L + C_R \end{aligned}$$

The term for j = i vanishes because the root ei has depth di = 1.

In order to minimize C, need to minimize CL and CR respectively

opt[i, j]: the optimum cost for the instance (fi , fi+1 , · · · , fj )

for every i ∈ {1, 2, · · · , n, n + 1}: opt[i, i − 1] = 0

for every i, j such that 1 ≤ i ≤ j ≤ n,

$$\mathrm{opt}[i, j] = \sum_{k=i}^{j} f_k + \min_{k:\, i \le k \le j} \left( \mathrm{opt}[i, k-1] + \mathrm{opt}[k+1, j] \right)$$
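The recurrence can be evaluated by increasing interval length, in the same way as for matrix chain multiplication. The following Python sketch is illustrative only; the function name `optimal_bst_cost` and the prefix-sum array used to obtain the sum of fk in O(1) are assumptions, not part of the slides.

```python
def optimal_bst_cost(f):
    """Minimum accessing cost of a BST on elements with frequencies f[0..n-1]."""
    n = len(f)
    # prefix[j] = f_1 + ... + f_j, so f_i + ... + f_j = prefix[j] - prefix[i-1].
    prefix = [0] * (n + 1)
    for i in range(1, n + 1):
        prefix[i] = prefix[i - 1] + f[i - 1]

    # opt[i][j] for 1 <= i <= n + 1 and i - 1 <= j <= n; empty ranges cost 0.
    opt = [[0] * (n + 1) for _ in range(n + 2)]
    for length in range(1, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            # Try every element e_k in the range as the root.
            best = min(opt[i][k - 1] + opt[k + 1][j] for k in range(i, j + 1))
            opt[i][j] = prefix[j] - prefix[i - 1] + best
    return opt[1][n]


print(optimal_bst_cost([10, 5, 3]))
```

On the example with f = (10, 5, 3) this returns 29, the cost of the tree rooted at e1.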