Mixing Dynamic Linear Models

Kevin R. Keane

krkeane@buffalo.edu

Computer Science & Engineering

University at Buffalo SUNY

Modeling label distributions on a lattice

prior distribution of labels

p(L)

likelihood of observed data given a label

p(D|L)

posterior distribution of labels given data

p(L|D) ∝ p(D|L)p(L)

obtain other distributions of interest

$p(X \mid D) \propto \int p(X \mid L)\, p(L \mid D)\, dL$

Mixing Dynamic Linear Models – 1/33

A slight twist

Usual setup

model distribution of labels at lattice sites

This project

model distribution of linear models at lattice sites


Mixing Models

Why bother modeling distributions over models?

Avoid the need to carefully specify model parameters in advance

use simple components

grid approximation spanning likely parameter values

let the data figure it out . . .

To obtain better models . . .

What’s a better model?

A model with higher posterior density for quantities of interest. For example:

parameters in a stock returns model

parameters describing a plane

...

What’s a better model?

[Figure: posterior density P(Θ | D) versus value of Θ (−3 to 3), with one curve annotated "Better" model.]

Bayesian framework

prior distribution of models

p(M )

likelihood of observed data given a model

p(D|M )

posterior distribution of models given data

p(M |D) ∝ p(D|M )p(M )

obtain other distributions of interest

$p(X \mid D) \propto \int p(X \mid M)\, p(M \mid D)\, dM$

Drilling down further . . .

Linear regression models

$Y = F'\theta + \epsilon$

$Y_i$ is the observed response

$F_i$ is the regression vector / independent variables

$\theta$ is the regression parameter vector

$\epsilon_i$ is the error of fit

FYI, the ordinary least squares estimate of $\theta$ is

$\hat{\theta} = (F F')^{-1} F Y$
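A minimal sketch of the least squares estimate above, assuming NumPy and synthetic data. The sizes, the "true" parameter values, and the noise level are made up for illustration. It assumes column i of F holds the regression vector F_i, so that Y = F′θ + ε.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical problem size and "true" parameters (not from the slides).
n, p = 200, 3
theta_true = np.array([0.5, 1.2, -0.7])

# Column i of F is the regression vector F_i, so F has shape (p, n)
# and the model reads Y = F' theta + eps.
F = np.vstack([np.ones(n), rng.normal(size=(2, n))])
Y = F.T @ theta_true + 0.1 * rng.normal(size=n)

# theta_hat = (F F')^{-1} F Y, solved without forming the inverse explicitly.
theta_hat = np.linalg.solve(F @ F.T, F @ Y)

print("estimate:", theta_hat)
```

Solving the normal equations with `np.linalg.solve` avoids an explicit matrix inverse; `np.linalg.lstsq(F.T, Y, rcond=None)` should give the same estimate.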

Examples of linear models

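Return of a stock is a function of market, industry and stock specific return components

$r = F'\theta + \epsilon, \quad F = \begin{pmatrix} 1 \\ r_M \\ r_I \end{pmatrix}, \quad \theta = \begin{pmatrix} \alpha \\ \beta_M \\ \beta_I \end{pmatrix}.$

Points on a plane

$0 = F'\theta + \epsilon, \quad F = \begin{pmatrix} x \\ y \\ z \\ -1 \end{pmatrix}, \quad \theta = \begin{pmatrix} A \\ B \\ C \\ D \end{pmatrix}.$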
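A small sketch of the plane example, assuming NumPy. The plane coefficients and the sampled points are hypothetical. It checks that noise-free points on the plane Ax + By + Cz = D give F′θ = 0 when F = (x, y, z, −1)′ and θ = (A, B, C, D)′.

```python
import numpy as np

# Hypothetical plane A x + B y + C z = D; the coefficients are made up.
theta = np.array([1.0, -2.0, 0.5, 3.0])   # theta = (A, B, C, D)'

rng = np.random.default_rng(1)
x, y = rng.uniform(-5, 5, size=(2, 100))
# Solve the plane equation for z so every point lies exactly on the plane.
z = (theta[3] - theta[0] * x - theta[1] * y) / theta[2]

# One regression vector F = (x, y, z, -1)' per point, stacked as columns.
F = np.vstack([x, y, z, -np.ones_like(x)])

# The epsilon terms of 0 = F' theta + eps; zero up to rounding for exact points.
residuals = F.T @ theta
print("largest |residual|:", np.abs(residuals).max())
```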

Dynamic Linear Models

Ordinary least squares yields a single estimate θ̂ of the regression parameter vector θ for the entire data set.

We may not have a finite data set, but rather an infinite data stream!

We expect / permit θ to vary (slightly) as we traverse the lattice, θ_s ≈ θ_t for neighboring sites s and t. So, our models are dynamic.

Dynamic linear models (West & Harrison) generalize, and can therefore represent:

Kalman filters (Kalman)

flexible least squares (Kalaba & Tesfatsion)

linear dynamical systems (Bishop)

...


Specifying a dynamic linear model $\{F_t, G, V, W\}$

$F_t'$ is a row in the design matrix

vector of independent variables affecting $Y_t$

$G$ is the evolution matrix

captures deterministic changes to $\theta$

$\theta_t \approx G\theta_{t-1}$

$V$ is the observational variance

a.k.a. $\mathrm{Var}(\epsilon)$ in ordinary least squares

$W$ is the evolution variance matrix

captures random changes to $\theta$

$\theta_t = G\theta_{t-1} + \omega_t, \quad \omega_t \sim N(0, W)$

$G$ and $W$ make the linear model dynamic

Specifying a dynamic linear model $\{F_t, G, V, W\}$

The observation equation is

$Y_t = F_t'\theta_t + \nu_t, \quad \nu_t \sim N(0, V)$

The evolution equation is

$\theta_t = G\theta_{t-1} + \omega_t, \quad \omega_t \sim N(0, W)$

The initial information is summarized

$(\theta_0 \mid D_0) \sim N(m_0, C_0)$

Information at time $t$

$D_t = \{Y_t, D_{t-1}\}$

Experiment #1

Specify 3 DLMs

{F = 1, G = 1, W = 5.0000, V = 1}

{F = 1, G = 1, W = 0.0500, V = 1}

{F = 1, G = 1, W = 0.0005, V = 1}

Generate 1000 observations $Y_M$

Select a model, generate 200 observations

Select a model, generate 200 observations

...
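The generation step could be sketched as follows, assuming NumPy. The function name, the random model selection, the seed, and the starting level θ = 0 are illustrative assumptions; the slides do not say how models were selected or how θ was initialized.

```python
import numpy as np

def simulate_dlm_segments(W_values, V=1.0, n_total=1000, seg_len=200, seed=0):
    """Simulate Y_t = theta_t + nu_t, theta_t = theta_{t-1} + omega_t (F = G = 1),
    picking one evolution variance W at random for each block of seg_len steps."""
    rng = np.random.default_rng(seed)
    theta = 0.0                          # assumed starting level (not in the slides)
    Y = np.empty(n_total)
    model = np.empty(n_total, dtype=int)
    for start in range(0, n_total, seg_len):
        m = rng.integers(len(W_values))  # select a model for this block
        for t in range(start, min(start + seg_len, n_total)):
            theta = theta + rng.normal(0.0, np.sqrt(W_values[m]))  # evolution
            Y[t] = theta + rng.normal(0.0, np.sqrt(V))             # observation
            model[t] = m
    return Y, model

W_values = [5.0, 0.05, 0.0005]
Y, model = simulate_dlm_segments(W_values)
print("models used per block:", model[::200])
```

The returned `model` array records which DLM generated each observation, which gives a ground truth to compare against posterior model probabilities later.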


[Figure: Generated observations $Y_t$ from 3 DLMs {1,1,1,W}. Observed data $Y_t$ versus time $t$ (0 to 1000), with segments labeled W=5, W=.0005, and W=.05.]

Local consistency is a function of $W_m$ . . .

Inference with a dynamic linear model $\{F_t, G, V, W\}$

Posterior at $t-1$,

$(\theta_{t-1} \mid D_{t-1}) \sim N(m_{t-1}, C_{t-1})$

Prior at $t$,

$(\theta_t \mid D_{t-1}) \sim N(a_t, R_t)$

$a_t = G m_{t-1}, \quad R_t = G C_{t-1} G' + W$

One-step forecast at $t$,

$(Y_t \mid D_{t-1}) \sim N(f_t, Q_t)$

$f_t = F_t' a_t, \quad Q_t = F_t' R_t F_t + V$

One-step forecast is the model likelihood

$(Y_t \mid D_{t-1}) = (Y_t, D_{t-1} \mid D_{t-1}, \{F_t, G, V, W\}) = (D \mid M)$

Posterior at $t$,

$(\theta_t \mid D_t) \sim N(m_t, C_t)$

$e_t = Y_t - f_t, \quad A_t = R_t F_t Q_t^{-1}$

$m_t = a_t + A_t e_t, \quad C_t = R_t - A_t Q_t A_t'$
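The prior, forecast, and posterior recursions can be collected into a single update step. This is a sketch assuming NumPy and a scalar observation; the function name `dlm_step`, the argument layout, and the values m_0 = 0, C_0 = 1 in the usage lines are illustrative assumptions. The returned log likelihood is the log density of the one-step forecast N(f_t, Q_t) evaluated at Y_t.

```python
import numpy as np

def dlm_step(m_prev, C_prev, y, F, G, V, W):
    """One filtering step for a DLM {F, G, V, W} with scalar observation y.
    Shapes: m_prev (p,), C_prev (p, p), F (p,), G (p, p), W (p, p); V is a float."""
    a = G @ m_prev                        # prior mean a_t = G m_{t-1}
    R = G @ C_prev @ G.T + W              # prior variance R_t = G C_{t-1} G' + W
    f = F @ a                             # forecast mean f_t = F' a_t
    Q = F @ R @ F + V                     # forecast variance Q_t = F' R_t F + V
    e = y - f                             # forecast error e_t
    A = R @ F / Q                         # A_t = R_t F_t Q_t^{-1}
    m = a + A * e                         # posterior mean m_t
    C = R - np.outer(A, A) * Q            # posterior variance C_t = R_t - A_t Q_t A_t'
    log_lik = -0.5 * (np.log(2 * np.pi * Q) + e ** 2 / Q)  # log N(y; f_t, Q_t)
    return m, C, f, Q, log_lik

# Usage with the middle model of Experiment #1 (F = G = V = 1, W = 0.05)
# and an assumed initial state m_0 = 0, C_0 = 1.
m, C, f, Q, log_lik = dlm_step(
    np.array([0.0]), np.array([[1.0]]), 1.0,
    np.array([1.0]), np.array([[1.0]]), 1.0, np.array([[0.05]]),
)
print(m, C, f, Q, log_lik)
```

Calling the function in a loop over t, feeding each (m, C) back in as (m_prev, C_prev), gives the filtered state, and summing `log_lik` over a window gives a trailing-interval log likelihood for that model.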

Experiment #1 continued / inference about θt

Compute posteriors $(\theta_{t-1} \mid M = m, D_{t-1})$

[Figure: Posterior mean $m_t$ from 3 DLMs {1,1,1,W} (W=5, W=.05, W=.0005) versus time $t$ (0 to 1000).]

$(\theta_t \mid D_t) \sim N(m_t, C_t)$

Experiment #1 continued / inference about models

Assume prior $p(M = m) = \frac{1}{3}$

Compute likelihoods $(D_t \mid M = m)$

Compute posterior model probabilities $p(m \mid D_t)$
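A sketch of the last step, assuming NumPy and that a log likelihood has already been computed for each model. The function name and the three log likelihood values are hypothetical. It applies p(m|D) ∝ p(D|m) p(m) and normalizes in log space, since raw likelihoods over many observations underflow easily.

```python
import numpy as np

def posterior_model_probs(log_liks, prior=None):
    """Posterior p(m | D) from per-model log likelihoods log p(D | m)."""
    log_liks = np.asarray(log_liks, dtype=float)
    if prior is None:
        # Uniform prior over models, i.e. 1/3 each when there are 3 models.
        prior = np.full(log_liks.shape, 1.0 / log_liks.size)
    log_post = log_liks + np.log(prior)   # log p(D | m) + log p(m)
    log_post -= log_post.max()            # shift for numerical stability
    post = np.exp(log_post)
    return post / post.sum()              # normalize so probabilities sum to 1

# Hypothetical trailing-window log likelihoods for W = 5, 0.05, 0.0005.
probs = posterior_model_probs([-310.2, -295.7, -298.1])
print(probs)
```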

[Figure: Trailing interval model log likelihoods. 10-day log likelihood (log-scale axis, −10^1 to −10^3) versus time t (0 to 1000) for W=5, W=.05, and W=.0005.]

[Figure: Posterior model probabilities and ground truth. P(M | D) versus time t (0 to 1000) for W=5, W=.05, and W=.0005.]

Modeling interpretation / tricks

Large W permits the state vector θ to vary abruptly in a stochastic manner

When is this important? Examples:

stock price model: one company acquires another company

planar geometry model: a building’s plane intersects the ground plane

Easy to obtain parameter estimates from a mixture model. For example, evolution variance $W_M$ is obtained from individual model variances, likelihoods, and posterior probabilities

$W_M = \int_M W_m\, p(W_m \mid m)\, p(m \mid D)\, dm = \int_M W_m\, p(m \mid D)\, dm$
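With a finite set of component models, the integral for the mixture evolution variance reduces to a weighted sum. A sketch assuming NumPy, using the three W values from Experiment #1 and made-up posterior probabilities:

```python
import numpy as np

W_m = np.array([5.0, 0.05, 0.0005])   # component evolution variances
p_m = np.array([0.7, 0.2, 0.1])       # hypothetical posterior p(m | D), sums to 1

# Discrete analogue of W_M = integral of W_m p(m | D) dm.
W_M = float(np.sum(W_m * p_m))
print("mixture evolution variance:", W_M)
```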

A statistical arbitrage application

Montana, Triantafyllopoulos, and Tsagaris. Flexible least squares for temporal data mining and statistical arbitrage. Expert Systems with Applications, 36(2):2819-2830, 2009.

Parameter $\delta = \frac{W}{W+V}$ controls adaptiveness.

$W = 0$, $\delta = 0$ equivalent to ordinary least squares.

Published results for 11 constant parameter models

Model S&P 500 Index return as a function of the largest principal component return (score) of the underlying stocks

$r_{\text{s\&p 500}} = F'\theta + \epsilon, \quad F = [\, r_{pca} \,], \quad \theta = [\, \beta_{pca} \,].$
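To give a feel for the adaptiveness parameter, the sketch below evaluates δ = W / (W + V) as defined above. The helper name `adaptiveness` is made up, and the W values with V = 1 are borrowed from Experiment #1 purely for illustration.

```python
def adaptiveness(W, V):
    """delta = W / (W + V), the adaptiveness parameter as defined on the slide."""
    return W / (W + V)

# delta approaches 1 as W grows relative to V, and equals 0 when W = 0.
for W in (5.0, 0.05, 0.0005, 0.0):
    print(f"W = {W:<7} delta = {adaptiveness(W, 1.0):.6f}")
```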

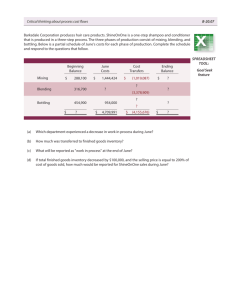

Experiment #2

Data used is the log price return series for SPY and RSP

SPY is the capitalization weighted S&P 500 ETF

RSP is the equal-weighted S&P 500 ETF; our proxy for Montana’s $\beta_{pca}$

$r_{spy} = F'\theta + \epsilon, \quad F = [\, r_{rsp} \,], \quad \theta = [\, \beta_{rsp} \,].$

[Figure: RSP and SPY series, 2004 to 2010 (vertical axis 50 to 250).]

[Figure: sqrt(W) and sqrt(V), 2004 to 2010 (log-scale vertical axis, 0.001 to 0.100).]

Experiment #2 continued

Can a mixture model outperform the ex post best single DLM?

[Figure: Mixture Model, Best DLM {F,1,1,W=221}, and DLMs {F,1,1,W}, 2004 to 2010 (vertical axis −50 to 150).]

[Figure: Mixture Model and DLMs {F,1,1,W} (log-scale horizontal axis, 10 to 1000; vertical axis 1.5 to 3.0).]

Future work

Longer term (after this semester), generalize the one-dimensional DLM framework to permit application to images and video.

For video, would probably need to consider variation W in different directions, $\frac{\delta\theta}{\delta x}$, $\frac{\delta\theta}{\delta y}$, and $\frac{\delta\theta}{\delta t}$ (image coordinates x and y, frame t).

THANK YOU.
