Introduction to Machine Learning 1.2 Kernel Regression

advertisement

1.2 Kernel Regression

1.2

2

Kernel Regression

• Ridge regression estimate:

Introduction to Machine Learning

• Prediction at x∗ :

CSE474/574: Kernel Methods

w = (λID + X> X)−1 X> y

y ∗ = w> x∗ = ((λID + X> X)−1 X> y)> x∗

• Still needs training and test examples as D length vectors

Varun Chandola <chandola@buffalo.edu>

• Rearranging above (Sherman-Morrison-Woodbury formula or Matrix Inversion Lemma [See Murphy

p120, Matrix Cookbook])

y ∗ = y> (λIN + XX> )−1 Xx∗

Outline

The above mentioned “rearrangement” can be obtained using the Matrix Inversion Lemma, which in

general term states for matrices E,F,G,H:

Contents

(E − FH−1 G)−1 FH−1 = E−1 F(H − GE−1 F)−1

1 Kernel Methods

1.1 Extension to Non-Vector Data Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.2 Kernel Regression . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1

1

1

2 Kernel Trick

2.1 Choosing Kernel Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.2 Constructing New Kernels Using Building Blocks . . . . . . . . . . . . . . . . . . . . . . .

3

3

3

Consider the prediction equation for ridge regression (we use the fact that (λID + X X) is a square and

symmetric matrix):

3 Kernels

3.1 RBF Kernel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3.2 Probabilistic Kernel Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3.3 Kernels for Other Types of Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4

4

4

5

Using the result in (1) with a = λ, F = X, and X> = G:

4 More About Kernels

4.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4.2 Gaussian Kernel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5

5

5

5 Kernel Machines

5.1 Generalizing RBF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

6

7

Setting H = I and E = −aI, where a is a scalar value, we get:

(aI + FG)−1 F = F(aI + GF)−1

y ∗ = ((λID + X> X)−1 X> y)> x∗

= y> X(λID + X> X)−1 x∗

y ∗ = y> (λIN + XX> )−1 Xx∗

XX> ?

Xx∗ ?

1

hx1 , x1 i hx1 , x2 i

hx2 , x1 i hx1 , x2 i

>

XX =

..

..

.

.

hxN , x1 i hxN , x2 i

hx1 , x∗ i

hx2 , x∗ i

Xx∗ =

..

.

Extension to Non-Vector Data Examples

• What if x ∈

/ <D ?

• Does w> x make sense?

hxN , x∗ i

• Consider a set of P functions that can be applied on input example x

• How to adapt?

1. Extract features from x

2. Is not always possible

• Sometimes it is easier/natural to compare two objects.

– A similarity function or kernel

• Domain-defined measure of similarity

Example 1. Strings: Length of longest common subsequence, inverse of edit distance

Example 2. Multi-attribute Categorical Vectors: Number of matching values

· · · hx1 , xN i

· · · hx2 , xN i

..

..

.

.

· · · hxN , xN i

Kernel Methods

1.1

(1)

>

• Prediction:

φ = {φ1 , φ2 , . . . , φP }

φ2 (x1 ) · · · φP (x1 )

φ2 (x2 ) · · · φP (x2 )

..

..

..

.

.

.

φ1 (xN ) φ2 (xN ) · · · φP (xN )

φ1 (x1 )

φ1 (x2 )

Φ = ..

.

y ∗ = y> (λIN + ΦΦ> )−1 Φφ(x∗ )

• Each entry in ΦΦ> is hφ(x), φ(x0 )i

We have already seen one such non-linear transformation in which one attribute is expanded to {1, x, x2 , x3 , . . . , xd

2. Kernel Trick

2

3

Kernel Trick

3. Kernels

3

4

Kernels

• Replace dot product hxi , xj i with a function k(xi , xj )

• If K is positive definite - Mercer Kernel

• Replace XX> with K

• K - Gram Matrix

• Radial Basis Function or Gaussian Kernel

1

k(xi , xj ) = exp − 2 kxi − xj k2

2σ

• k - kernel function

• Cosine Similarity

K[i][j] = k(xi , xj )

k(xi , xj ) =

– Similarity between two data objects

Kernel Regression

y ∗ = y> (λIN + K)−1 k(X, x∗ )

x>

i xj

kxi kkxj k

One can start with a Mercer kernel and show through the Mercer’s theorem how it can be expressed

as an inner product. Since K is positive definite we can compute an eigenvector decomposition:

K = U> ΛU

2.1

Choosing Kernel Functions

Each element of K can be rewritten as:

• Already know the simplest kernel function:

k(xi , xj ) =

1

x>

i xj

1

Let φ(xi ) = Λ 2 U:,i . Then we can write:

Kij = φ(xi )> φ(xj )

Approach 1: Start with basis functions

k(xi , xj ) = φ(xi )> φ(xj )

Approach 2: Direct design (good for non-vector inputs)

3.1

RBF Kernel

• Measure similarity between xi and xj

• Gram matrix must be positive semi-definite

• k should be symmetric

For instance, consider the following kernel function for two-dimensional inputs, (x = (x1 , x2 )):

k(x, z) =

=

=

=

(x> z)2

x21 z12 + 2x1 z1 x2 z2 + x22 z22

√

√

(x21 , 2x1 x2 , x22 )> (x2z , 2z1 z2 , z22 )

φ(x)> φ(z)

where the feature mapping φ(x) is defined as:

√

φ(x) = (x21 , 2x1 x2 , x22 )>

2.2

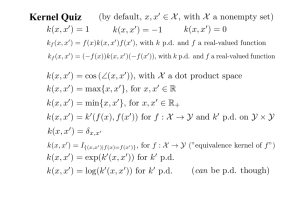

Constructing New Kernels Using Building Blocks

k(xi , xj )

k(xi , xj )

k(xi , xj )

k(xi , xj )

k(xi , xj )

k(xi , xj )

=

=

=

=

=

=

ck1 (xi , xj )

f (x)k1 (xi , xj )f (xj )

q(k1 (xi , xj )) q is a polynomial

exp(k1 (xi , xj ))

k1 (xi , xj ) + k2 (xi , xj )

k1 (xi , xj )k2 (xi , xj )

1

Kij = (Λ 2 U:,i )> (Λ 2 U:,j )

1

k(xi , xj ) = exp − 2 ||xi − xj ||2

2σ

• Mapping inputs to an infinite dimensional space

Whenever presented with a “potential” kernel function, one needs to ensure that it is indeed a valid kernel.

This can be done in two ways, one through functional analysis and second by decomposing the function

into a valid combination of valid kernel functions. For instance, for the Gaussian kernel, one can note that:

||xi − xj ||2 = x> xi + (xj )> xj − 2x>

i xj

Which means that the Gaussian kernel function can be written as:

k(xi , xj ) = exp(

x> xj

(xj )> xj

x>

i xi

)exp( i 2 )exp(

)

2

2σ

2σ

2σ 2

All three individual exponents are valid covariance functions and hence the product of these is also a valid

covariance function.

3.2

Probabilistic Kernel Functions

• Allows using generative distributions in discriminative settings

• Uses class-independent probability distribution for input x

k(xi , xj ) = p(xi |θ)p(xj |θ)

• Two inputs are more similar if both have high probabilities

Bayesian Kernel

k(xi , xj ) =

Z

p(xi |θ)p(xj |θ)p(θ)dθ

3.3 Kernels for Other Types of Data

5

5. Kernel Machines

6

5

0

x

−5

−8 −6 −4 −2

0

2

4

6

8

50

40

30

20

10

0

x2

40

Kernels for Other Types of Data

k(xi , xj ) = exp(−x2i )exp(−x2j )exp(2xi xj )

∞

X

2k xki xkj

= exp(−x2i )exp(−x2j )

k!

k=0

∞

k/2

X 2

2k/2

√ xki exp(−x2i )

√ xkj exp(−x2j )

=

k!

k!

k=0

• Pyramid Kernels

More About Kernels

4.1

Motivation

• x∈<

• Using Maclaurin Series Expansion

• No linear separator

k(xi , xj ) =

• Map x → {x, x2 }

1

21/2 x1 exp(−x2 )

i

2/2 i

2 x2 exp(−x2 )

2 i

i

..

.

• Separable in 2D space

×

1

>

21/2 x1j exp(−x2j )

2/2

2 x2 exp(−x2 )

2 j

j

..

.

One can note above that since computing the Gaussian kernel is same as taking a dot product of two

vectors of infinite length, it is equivalent to mapping the input features into an infinite dimensional space.

• x∈<

2

• No linear separator

√

• Map x → {x21 , 2x1 x2 , x22 }

5

Kernel Machines

• We can use kernel function to generate new features

• A circle as the decision boundary

4.2

0−20

0 20

10

• Assume σ = 1 and x ∈ < (denoted as x)

• String Kernel

4

20

• What about the Gaussian kernel (radial basis function)?

1

k(xi , xj ) = exp − 2 ||xi − xj ||2

2σ

x

3.3

30

• Evaluate kernel function for each input and a set of K centroids

φ(x) = [k(x, µ1 ), k(x, µ2 ), . . . , k(x, µK )]

Gaussian Kernel

• The squared dot product kernel (xi , xj ∈ <2 ):

k(xi , xj ) = xi > xj , φ(xi )> φ(xj )

√

φ(xi ) = {x2i1 , 2xi1 xi2 , x2i2 }

y = w> φ(x),

y ∼ Ber(w> φ(x))

• If k is a Gaussian kernel ⇒ Radial Basis Function Network (RBF)

• How to choose µi ?

– Clustering

– Random selection

5.1 Generalizing RBF

5.1

7

Generalizing RBF

• Another option: Use every input example as a “centroid”

φ(x) = [k(x, x1 ), k(x, x2 ), . . . , k(x, xN )]

References