Introduction to Machine Learning 2 Logistic Regression


2. Logistic Regression

2

2

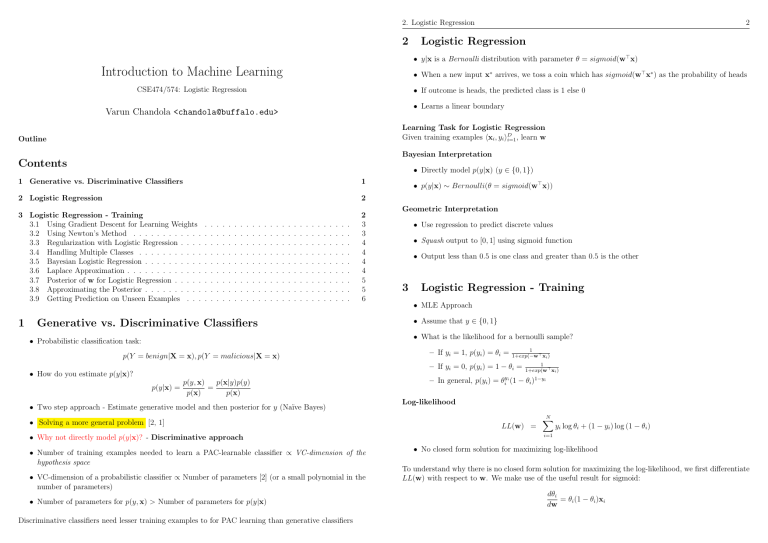

Logistic Regression

• y|x is a Bernoulli distribution with parameter θ = sigmoid(w> x)

Introduction to Machine Learning

• When a new input x∗ arrives, we toss a coin which has sigmoid(w> x∗ ) as the probability of heads

• If outcome is heads, the predicted class is 1 else 0

CSE474/574: Logistic Regression

• Learns a linear boundary

Varun Chandola <chandola@buffalo.edu>

Learning Task for Logistic Regression

Given training examples hxi , yi iD

i=1 , learn w

Outline

Bayesian Interpretation

Contents

1 Generative vs. Discriminative Classifiers

1

2 Logistic Regression

2

3 Logistic Regression - Training

3.1 Using Gradient Descent for Learning Weights

3.2 Using Newton’s Method . . . . . . . . . . . .

3.3 Regularization with Logistic Regression . . . .

3.4 Handling Multiple Classes . . . . . . . . . . .

3.5 Bayesian Logistic Regression . . . . . . . . . .

3.6 Laplace Approximation . . . . . . . . . . . . .

3.7 Posterior of w for Logistic Regression . . . . .

3.8 Approximating the Posterior . . . . . . . . . .

3.9 Getting Prediction on Unseen Examples . . .

2

3

3

4

4

4

4

5

5

6

1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Generative vs. Discriminative Classifiers

• Directly model p(y|x) (y ∈ {0, 1})

• p(y|x) ∼ Bernoulli(θ = sigmoid(w> x))

Geometric Interpretation

• Use regression to predict discrete values

• Squash output to [0, 1] using sigmoid function

• Output less than 0.5 is one class and greater than 0.5 is the other

3

Logistic Regression - Training

• MLE Approach

• Assume that y ∈ {0, 1}

• What is the likelihood for a bernoulli sample?

• Probabilistic classification task:

p(Y = benign|X = x), p(Y = malicious|X = x)

– If yi = 1, p(yi ) = θi =

1

1+exp(−w> xi )

– If yi = 0, p(yi ) = 1 − θi =

• How do you estimate p(y|x)?

p(y|x) =

p(x|y)p(y)

p(y, x)

=

p(x)

p(x)

• Two step approach - Estimate generative model and then posterior for y (Naı̈ve Bayes)

• Solving a more general problem [2, 1]

• Why not directly model p(y|x)? - Discriminative approach

• Number of training examples needed to learn a PAC-learnable classifier ∝ VC-dimension of the

hypothesis space

• VC-dimension of a probabilistic classifier ∝ Number of parameters [2] (or a small polynomial in the

number of parameters)

• Number of parameters for p(y, x) > Number of parameters for p(y|x)

Discriminative classifiers need lesser training examples to for PAC learning than generative classifiers

1

1+exp(w> xi )

– In general, p(yi ) = θiyi (1 − θi )1−yi
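The sigmoid parameter, the coin-toss prediction and the Bernoulli likelihood of a single example can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function names and the numeric values of `w` and `x` are hypothetical and do not come from the notes.

```python
import numpy as np

def sigmoid(a):
    """Logistic function 1 / (1 + exp(-a)), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-a))

def bernoulli_likelihood(w, x, y):
    """p(y | x, w) = theta^y * (1 - theta)^(1 - y), with theta = sigmoid(w^T x)."""
    theta = sigmoid(w @ x)
    return theta**y * (1.0 - theta) ** (1 - y)

def sample_prediction(w, x_new, rng):
    """Toss a coin whose probability of heads (class 1) is sigmoid(w^T x_new)."""
    theta = sigmoid(w @ x_new)
    return int(rng.random() < theta)

# Hypothetical weights and input, chosen so that w^T x = 0 and theta = 0.5.
w = np.array([1.0, -2.0])
x = np.array([0.5, 0.25])
p1 = bernoulli_likelihood(w, x, 1)   # probability assigned to y = 1
p0 = bernoulli_likelihood(w, x, 0)   # probability assigned to y = 0
label = sample_prediction(w, x, np.random.default_rng(0))
```

The two likelihood values sum to one for any `w` and `x`, which is a quick sanity check on the general expression.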

Log-likelihood

$$LL(\mathbf{w}) = \sum_{i=1}^{N} \left[ y_i \log \theta_i + (1 - y_i) \log (1 - \theta_i) \right]$$
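As a rough sketch, the log-likelihood can be evaluated with NumPy as shown here. The function name, the toy data and the use of `np.logaddexp` for numerical stability are assumptions made for illustration, not part of the notes.

```python
import numpy as np

def log_likelihood(w, X, y):
    """LL(w) = sum_i [ y_i log(theta_i) + (1 - y_i) log(1 - theta_i) ]."""
    a = X @ w
    # log(theta) = -log(1 + exp(-a)) and log(1 - theta) = -log(1 + exp(a));
    # np.logaddexp(0, z) evaluates log(1 + exp(z)) without overflow.
    log_theta = -np.logaddexp(0.0, -a)
    log_one_minus_theta = -np.logaddexp(0.0, a)
    return float(np.sum(y * log_theta + (1 - y) * log_one_minus_theta))

# Hypothetical toy data: three examples with two features each.
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y = np.array([1, 0, 1])

ll_zero = log_likelihood(np.zeros(2), X, y)             # theta_i = 0.5 everywhere
ll_better = log_likelihood(np.array([2.0, -2.0]), X, y)  # fits the first two examples
```

With `w = 0` every example has probability 0.5, so the log-likelihood equals N log 0.5; weights that agree with the labels give a larger value.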

• No closed form solution for maximizing log-likelihood

To understand why there is no closed form solution for maximizing the log-likelihood, we first differentiate $LL(\mathbf{w})$ with respect to $\mathbf{w}$. We make use of the following result for the sigmoid:

$$\frac{d\theta_i}{d\mathbf{w}} = \theta_i (1 - \theta_i)\, \mathbf{x}_i$$
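The sigmoid result follows from the chain rule. In the short derivation below, the intermediate symbol $a_i$ is introduced only for this illustration and does not appear in the notes.

```latex
\begin{align*}
a_i &= \mathbf{w}^\top \mathbf{x}_i, \qquad \theta_i = \frac{1}{1 + e^{-a_i}} \\
\frac{d\theta_i}{da_i} &= \frac{e^{-a_i}}{(1 + e^{-a_i})^2}
  = \frac{1}{1 + e^{-a_i}} \cdot \frac{e^{-a_i}}{1 + e^{-a_i}}
  = \theta_i (1 - \theta_i) \\
\frac{d\theta_i}{d\mathbf{w}} &= \frac{d\theta_i}{da_i} \, \frac{da_i}{d\mathbf{w}}
  = \theta_i (1 - \theta_i)\, \mathbf{x}_i
\end{align*}
```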

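Using this result we obtain:

$$\frac{d}{d\mathbf{w}} LL(\mathbf{w}) = \sum_{i=1}^{N} \left[ \frac{y_i}{\theta_i}\, \theta_i (1 - \theta_i)\, \mathbf{x}_i - \frac{1 - y_i}{1 - \theta_i}\, \theta_i (1 - \theta_i)\, \mathbf{x}_i \right]$$

$$= \sum_{i=1}^{N} \left( y_i (1 - \theta_i) - (1 - y_i)\, \theta_i \right) \mathbf{x}_i$$

$$= \sum_{i=1}^{N} (y_i - \theta_i)\, \mathbf{x}_i$$

Since $\theta_i$ is a non-linear function of $\mathbf{w}$, setting this gradient to zero does not yield a closed form solution.

3.1 Using Gradient Descent for Learning Weights

• Compute the gradient of LL with respect to $\mathbf{w}$

• LL is a concave function of $\mathbf{w}$ (equivalently, the negative log-likelihood is convex), so any local maximum is a global maximum

$$\frac{d}{d\mathbf{w}} LL(\mathbf{w}) = \sum_{i=1}^{N} (y_i - \theta_i)\, \mathbf{x}_i$$

• Update rule (gradient ascent on LL, which is gradient descent on the negative log-likelihood):

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \eta \frac{d}{d\mathbf{w}_k} LL(\mathbf{w}_k)$$

3.2 Using Newton's Method

• Setting $\eta$ is sometimes tricky

• Too large – the updates overshoot and give incorrect results

• Too small – slow convergence

• Another way to speed up convergence: Newton's method

$$\mathbf{w}_{k+1} = \mathbf{w}_k - \eta\, \mathbf{H}_k^{-1} \frac{d}{d\mathbf{w}_k} LL(\mathbf{w}_k)$$

• The Hessian $\mathbf{H}$ is the second order derivative of the objective function

• Newton's method belongs to the family of second order optimization algorithms

• For logistic regression, the Hessian is:

$$\mathbf{H} = -\sum_{i} \theta_i (1 - \theta_i)\, \mathbf{x}_i \mathbf{x}_i^\top$$

Because $\mathbf{H}$ is the Hessian of $LL(\mathbf{w})$, it is negative semi-definite, so subtracting $\mathbf{H}_k^{-1}$ times the gradient moves $\mathbf{w}$ uphill on LL.

3.3 Regularization with Logistic Regression

• Overfitting is an issue, especially with a large number of features

• Add a Gaussian prior $\mathbf{w} \sim \mathcal{N}(\mathbf{0}, \tau^2 \mathbf{I})$, which contributes the penalty below with $\lambda = 1/\tau^2$

• Easy to incorporate in the gradient descent based approach

$$LL'(\mathbf{w}) = LL(\mathbf{w}) - \frac{1}{2} \lambda\, \mathbf{w}^\top \mathbf{w}$$

$$\frac{d}{d\mathbf{w}} LL'(\mathbf{w}) = \frac{d}{d\mathbf{w}} LL(\mathbf{w}) - \lambda \mathbf{w}$$

$$\mathbf{H}' = \mathbf{H} - \lambda \mathbf{I}$$

where $\mathbf{I}$ is the identity matrix.

3.4 Handling Multiple Classes

• One vs. Rest and One vs. Other

• $p(y \mid \mathbf{x}) \sim \mathrm{Multinoulli}(\boldsymbol{\theta})$

• The Multinoulli parameter vector $\boldsymbol{\theta}$ is defined as:

$$\theta_j = \frac{\exp(\mathbf{w}_j^\top \mathbf{x})}{\sum_{k=1}^{C} \exp(\mathbf{w}_k^\top \mathbf{x})}$$

• Multiclass logistic regression has $C$ weight vectors to learn

3.5 Bayesian Logistic Regression

• How to get the posterior for $\mathbf{w}$?

• Not easy - Why?

Laplace Approximation

• We do not know what the true posterior distribution for $\mathbf{w}$ is.

• Is there a close-enough (approximate) Gaussian distribution?

One should note that we used a Gaussian prior for $\mathbf{w}$, which is not a conjugate prior for the Bernoulli distribution used in logistic regression. In fact, there is no convenient conjugate prior that may be used for logistic regression.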
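The update rules of Sections 3.1 to 3.3 (gradient ascent, Newton's method, and the Gaussian-prior penalty) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the function names, the synthetic data, the step size and the iteration counts are all hypothetical choices, and `lam` plays the role of λ.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gradient(w, X, y, lam=0.0):
    """d/dw LL'(w) = sum_i (y_i - theta_i) x_i - lam * w."""
    theta = sigmoid(X @ w)
    return X.T @ (y - theta) - lam * w

def hessian(w, X, lam=0.0):
    """H' = -sum_i theta_i (1 - theta_i) x_i x_i^T - lam * I."""
    theta = sigmoid(X @ w)
    s = theta * (1.0 - theta)
    return -(X.T * s) @ X - lam * np.eye(X.shape[1])

def fit_gradient_ascent(X, y, eta=0.01, lam=0.0, n_iter=5000):
    """Repeat w <- w + eta * gradient; eta must be small enough to converge."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        w = w + eta * gradient(w, X, y, lam)
    return w

def fit_newton(X, y, lam=0.0, eta=1.0, n_iter=25):
    """Repeat w <- w - eta * H'^{-1} gradient."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        # Solve H' step = gradient rather than forming the inverse explicitly.
        step = np.linalg.solve(hessian(w, X, lam), gradient(w, X, y, lam))
        w = w - eta * step
    return w

# Hypothetical synthetic data; the first column is a constant bias feature.
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(200), rng.normal(size=(200, 2))])
true_w = np.array([-0.5, 2.0, -1.0])
y = (rng.random(200) < sigmoid(X @ true_w)).astype(float)

w_gd = fit_gradient_ascent(X, y, eta=0.01, lam=1.0)
w_newton = fit_newton(X, y, lam=1.0)
```

Both routines maximize the same penalized objective, so they should agree on the maximizer; Newton's method typically gets there in far fewer iterations at the cost of solving a linear system per step. With `lam > 0` the maximizer is the MAP estimate under the Gaussian prior.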

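3.6 Laplace Approximation

Problem Statement

How to approximate a posterior with a Gaussian distribution?

• When is this needed?

– When direct computation of the posterior is not possible

– No conjugate prior

• Assume that the posterior is:

$$p(\mathbf{w} \mid D) = \frac{1}{Z} e^{-E(\mathbf{w})}$$

$E(\mathbf{w})$ is the energy function, i.e., the negative log of the unnormalized posterior

• Let $\mathbf{w}^*$ be the mode of the posterior distribution of $\mathbf{w}$ (for the Gaussian approximation, the mode and the expected value coincide)

• Taylor series expansion of $E(\mathbf{w})$ around the mode

$$E(\mathbf{w}) = E(\mathbf{w}^*) + (\mathbf{w} - \mathbf{w}^*)^\top E'(\mathbf{w}^*) + \frac{1}{2} (\mathbf{w} - \mathbf{w}^*)^\top E''(\mathbf{w}^*) (\mathbf{w} - \mathbf{w}^*) + \ldots$$

$$\approx E(\mathbf{w}^*) + (\mathbf{w} - \mathbf{w}^*)^\top E'(\mathbf{w}^*) + \frac{1}{2} (\mathbf{w} - \mathbf{w}^*)^\top E''(\mathbf{w}^*) (\mathbf{w} - \mathbf{w}^*)$$

$E'(\mathbf{w}^*) = \nabla E$ is the first derivative of $E(\mathbf{w})$ (the gradient) and $E''(\mathbf{w}^*) = \mathbf{H}$ is the second derivative (the Hessian), both evaluated at $\mathbf{w}^*$

• Since $\mathbf{w}^*$ is the mode, the first derivative or gradient is zero

$$E(\mathbf{w}) \approx E(\mathbf{w}^*) + \frac{1}{2} (\mathbf{w} - \mathbf{w}^*)^\top \mathbf{H} (\mathbf{w} - \mathbf{w}^*)$$

• The posterior $p(\mathbf{w} \mid D)$ may be written as:

$$p(\mathbf{w} \mid D) \approx \frac{1}{Z} e^{-E(\mathbf{w}^*)} \exp\left[ -\frac{1}{2} (\mathbf{w} - \mathbf{w}^*)^\top \mathbf{H} (\mathbf{w} - \mathbf{w}^*) \right] = \mathcal{N}(\mathbf{w}^*, \mathbf{H}^{-1})$$

$\mathbf{w}^*$ is the mode obtained by maximizing the posterior using gradient ascent

3.7 Posterior of w for Logistic Regression

• Prior:

$$p(\mathbf{w}) = \mathcal{N}(\mathbf{0}, \tau^2 \mathbf{I})$$

• Likelihood of data:

$$p(D \mid \mathbf{w}) = \prod_{i=1}^{N} \theta_i^{y_i} (1 - \theta_i)^{1 - y_i}$$

where $\theta_i = \frac{1}{1 + e^{-\mathbf{w}^\top \mathbf{x}_i}}$

• Posterior:

$$p(\mathbf{w} \mid D) = \frac{\mathcal{N}(\mathbf{0}, \tau^2 \mathbf{I}) \prod_{i=1}^{N} \theta_i^{y_i} (1 - \theta_i)^{1 - y_i}}{\int p(D \mid \mathbf{w})\, p(\mathbf{w})\, d\mathbf{w}}$$

3.8 Approximating the Posterior

• Approximate posterior distribution

$$p(\mathbf{w} \mid D) \approx \mathcal{N}(\mathbf{w}_{MAP}, \mathbf{H}^{-1})$$

• $\mathbf{H}$ is the Hessian of the negative log-posterior w.r.t. $\mathbf{w}$

The Hessian $\mathbf{H}$ is the matrix obtained by differentiating a scalar function of a vector twice. In this case the scalar function is the negative log-posterior of $\mathbf{w}$, up to an additive constant that does not depend on $\mathbf{w}$:

$$\mathbf{H} = \nabla^2 \left( -\log p(D \mid \mathbf{w}) - \log p(\mathbf{w}) \right)$$

For the prior and likelihood of Section 3.7 this evaluates to $\mathbf{H} = \sum_{i} \theta_i (1 - \theta_i)\, \mathbf{x}_i \mathbf{x}_i^\top + \frac{1}{\tau^2} \mathbf{I}$ at $\mathbf{w}_{MAP}$, which has the opposite sign from the Hessian of the regularized log-likelihood in Section 3.3.

3.9 Getting Prediction on Unseen Examples

$$p(y \mid \mathbf{x}) = \int p(y \mid \mathbf{x}, \mathbf{w})\, p(\mathbf{w} \mid D)\, d\mathbf{w}$$

1. Use a point estimate of $\mathbf{w}$ (MLE or MAP)

2. Analytical result

3. Monte Carlo approximation

• Numerical integration

• Sample a finite number of "versions" of $\mathbf{w}$ from $p(\mathbf{w} \mid D)$

$$p(\mathbf{w} \mid D) = \mathcal{N}(\mathbf{w}_{MAP}, \mathbf{H}^{-1})$$

• Compute $p(y \mid \mathbf{x}, \mathbf{w})$ for each sample and average the results

Using a point estimate of $\mathbf{w}$ means that the integral disappears from the Bayesian averaging equation.

References

[1] A. Y. Ng and M. I. Jordan. On discriminative vs. generative classifiers: A comparison of logistic regression and naive Bayes. In T. G. Dietterich, S. Becker, and Z. Ghahramani, editors, NIPS, pages 841–848. MIT Press, 2001.

[2] V. Vapnik. Statistical learning theory. Wiley, 1998.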
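The Laplace approximation of Sections 3.6 to 3.8 and the Monte Carlo prediction of Section 3.9 can be sketched together in NumPy. This is an illustrative sketch only: the function names, the synthetic data and the value of `tau2` are hypothetical, and the mode is found here with Newton steps on the negative log-posterior rather than the gradient ascent mentioned in the notes, purely to keep the example short.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def neg_log_posterior_hessian(w, X, tau2):
    """H = sum_i theta_i (1 - theta_i) x_i x_i^T + I / tau2."""
    theta = sigmoid(X @ w)
    return (X.T * (theta * (1.0 - theta))) @ X + np.eye(X.shape[1]) / tau2

def laplace_posterior(X, y, tau2=1.0, n_iter=50):
    """Return (w_map, H) so that p(w | D) is approximated by N(w_map, H^{-1})."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        # Gradient of E(w) = -log p(D|w) - log p(w), ignoring constants.
        grad_E = -X.T @ (y - sigmoid(X @ w)) + w / tau2
        H = neg_log_posterior_hessian(w, X, tau2)
        w = w - np.linalg.solve(H, grad_E)  # Newton step that decreases E(w)
    return w, neg_log_posterior_hessian(w, X, tau2)

def predict_monte_carlo(x_new, w_map, H, n_samples=5000, seed=0):
    """Average p(y = 1 | x_new, w) over samples w ~ N(w_map, H^{-1})."""
    rng = np.random.default_rng(seed)
    samples = rng.multivariate_normal(w_map, np.linalg.inv(H), size=n_samples)
    return float(np.mean(sigmoid(samples @ x_new)))

# Hypothetical synthetic data; the first column is a constant bias feature.
rng = np.random.default_rng(1)
X = np.column_stack([np.ones(100), rng.normal(size=(100, 2))])
y = (rng.random(100) < sigmoid(X @ np.array([0.5, 1.5, -1.0]))).astype(float)

w_map, H = laplace_posterior(X, y, tau2=10.0)
x_new = np.array([1.0, 0.3, -0.2])
p_point = float(sigmoid(w_map @ x_new))          # option 1: plug-in MAP estimate
p_bayes = predict_monte_carlo(x_new, w_map, H)   # option 3: Monte Carlo average
```

The plug-in value `p_point` ignores the uncertainty in w, while `p_bayes` averages over it; the two usually differ most for inputs far from the training data.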