A Color Spatial Display

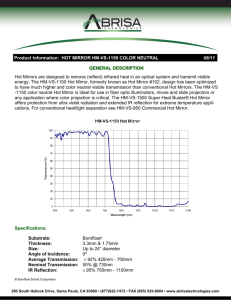
