
Numerische Mathematik

Numer. Math. 55, 137-157 (1989)

© Springer-Verlag 1989

A Monte Carlo Method for High Dimensional Integration

Yosihiko Ogata

The Institute of Statistical Mathematics, Minami-Azabu 4-6-7, Minato-ku, Tokyo 106, Japan

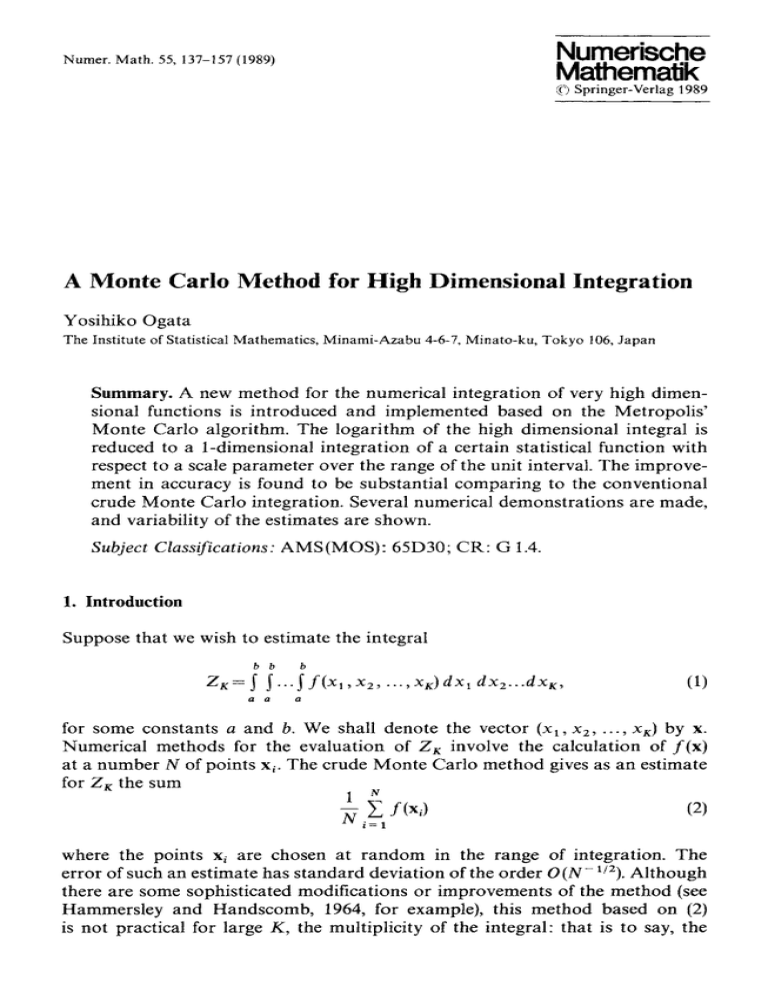

Summary. A new method for the numerical integration of very high dimensional functions is introduced and implemented based on the Metropolis Monte Carlo algorithm. The logarithm of the high dimensional integral is reduced to a 1-dimensional integration of a certain statistical function with respect to a scale parameter over the unit interval. The improvement in accuracy is found to be substantial compared with the conventional crude Monte Carlo integration. Several numerical demonstrations are made, and the variability of the estimates is shown.

Subject Classifications: AMS(MOS): 65D30; CR: G 1.4.

1. Introduction

Suppose that we wish to estimate the integral

$$Z_K = \int_a^b \int_a^b \cdots \int_a^b f(x_1, x_2, \ldots, x_K)\, dx_1\, dx_2 \cdots dx_K, \tag{1}$$

for some constants $a$ and $b$. We shall denote the vector $(x_1, x_2, \ldots, x_K)$ by $x$.

Numerical methods for the evaluation of $Z_K$ involve the calculation of $f(x)$ at a number $N$ of points $x_i$. The crude Monte Carlo method gives as an estimate for $Z_K$ the sum

$$\frac{1}{N} \sum_{i=1}^{N} f(x_i), \tag{2}$$

where the points $x_i$ are chosen at random in the range of integration. The error of such an estimate has standard deviation of the order $O(N^{-1/2})$. Although there are some sophisticated modifications or improvements of the method (see Hammersley and Handscomb, 1964, for example), the method based on (2) is not practical for large $K$, the multiplicity of the integral. That is to say, the integrated values are usually very small or very large, while the range of the function in (2) can be too large to get rid of the biased error caused by the skewness of the function: see Example 1 in Subsection 4.1.

In this paper we are interested in estimating $\log Z_K$ directly rather than $Z_K$ itself. The principal motivation is to provide a solution to the objective Bayesian method described in the companion paper (Ogata 1988). The idea for evaluating $\log Z_K$ is generalized and developed from the papers by Ogata and Tanemura (1981, 1984a, b, 1988), which discuss the maximum likelihood method for estimating the pairwise interaction potential of a Gibbsian distribution for spatial point patterns.

2. The Method

2.1. The Derivation

Suppose that $f(x) = f(x_1, x_2, \ldots, x_K)$ is a function defined and to be integrated on a $K$-dimensional cube $[a, b]^K$ which includes the origin $0 = (0, 0, \ldots, 0)$. Assume also that this function is bounded from below, so that without loss of generality we can hereafter assume positivity of the function. Consider a scaling parameter $\sigma$ such that $0 \le \sigma \le 1$, and let the vector $\sigma x$ denote $(\sigma x_1, \sigma x_2, \ldots, \sigma x_K)$. Define a family of probability densities $\{g_\sigma(x)\}$ on the cube $[a, b]^K$, characterized by the scale parameter $\sigma$, in such a way that

$$g_\sigma(x) = \frac{f(\sigma x)}{Z_K(\sigma)}, \tag{3}$$

where $Z_K(\sigma)$ is the normalizing factor

$$Z_K(\sigma) = \int_a^b \int_a^b \cdots \int_a^b f(\sigma x)\, dx. \tag{4}$$

Suppose that a random vector $\xi = (\xi_1, \xi_2, \ldots, \xi_K)$ is simulated from the density $g_\sigma(x)$. Then, from (3), we have

$$\log g_\sigma(\xi) = \log f(\sigma \xi) - \log Z_K(\sigma). \tag{5}$$

Under very broad regularity conditions, such as continuous differentiability of the function $f(x)$, we can expect

$$E_\sigma\!\left[\frac{\partial}{\partial\sigma} \log g_\sigma(\xi)\right] = 0, \tag{6}$$

where the expectation $E_\sigma[\cdot]$ is taken with respect to the distribution $g_\sigma(x)$. See Appendix 1 for the explicit conditions and the proof of Eq. (6). Together with (5), this equation implies the following equality:

$$E_\sigma\!\left[\frac{\partial}{\partial\sigma} \log f(\sigma \xi)\right] = \frac{\partial}{\partial\sigma} \log Z_K(\sigma). \tag{7}$$


For convenience in the later description, let us denote both sides of the equality in (7) by $\psi(\sigma)$. Replacing the expectation on the left hand side of (7) by the time average, we get the consistent and unbiased estimate of $\psi(\sigma)$

$$\hat\psi(\sigma) = \frac{1}{T} \sum_{t=1}^{T} \frac{\partial}{\partial\sigma} \log f(\sigma x(t)), \tag{8}$$

where $\{x(t) = (x_1(t), x_2(t), \ldots, x_K(t));\ t = 1, 2, \ldots, T\}$ is a vector series of samples following the distribution $g_\sigma(x)$. The practical simulation methods include the so-called Metropolis procedure. For the procedure we define the potential function by

$$U_\sigma(x) = -\log f(\sigma x) \tag{9}$$

for the present case. See Sect. 3 for a brief review of the Metropolis procedure and some notes on its application.

On the other hand, from Eq. (7), the eventual estimate of $\log Z_K = \log Z_K(1)$ is written as

$$\log Z_K(1) = \log Z_K(0+) + \int_0^1 \psi(\sigma)\, d\sigma, \tag{10}$$

where

$$Z_K(0+) = \lim_{\sigma \to 0} \int_a^b \cdots \int_a^b f(\sigma x)\, dx = (b - a)^K f(0), \tag{11}$$

and $0 = (0, 0, \ldots, 0)$.
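As a quick sanity check of (10) and (11), the following sketch uses the product integrand $f(x) = \exp(x_1 + \cdots + x_K)$ on $[0, 1]^K$, for which $\psi(\sigma)$ is known in closed form. The helper names `psi_exact` and `log_z_by_identity` are hypothetical and chosen only for illustration; the sketch integrates the exact $\psi$ numerically rather than simulating it.

```python
import math

def psi_exact(sigma, K):
    """Closed-form psi(sigma) = d/dsigma log Z_K(sigma) for f(x) = exp(sum x_k) on [0, 1]^K."""
    if sigma == 0.0:
        return K / 2.0  # limit of the expression below as sigma -> 0+
    return K * (math.exp(sigma) / math.expm1(sigma) - 1.0 / sigma)

def log_z_by_identity(K, n_nodes=1001):
    """log Z_K(0+) + integral of psi over [0, 1], using the trapezoidal rule."""
    log_z0 = 0.0  # (b - a)^K f(0) = 1 for this integrand, see (11)
    h = 1.0 / (n_nodes - 1)
    values = [psi_exact(j * h, K) for j in range(n_nodes)]
    integral = h * (sum(values) - 0.5 * (values[0] + values[-1]))
    return log_z0 + integral

K = 1000
estimate = log_z_by_identity(K)
exact = K * math.log(math.e - 1.0)  # log of (e - 1)^K
print(estimate, exact)
```

The two printed numbers should agree closely, which illustrates that the $K$-dimensional problem has been reduced to a one-dimensional integral of $\psi$.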

2.2. Numerical Approximation and Error Estimate

Before carrying out the integration in (10), the estimate (8) of $\psi(\sigma_j) = (\partial/\partial\sigma) \log Z_K(\sigma_j)$ should be obtained for some $\sigma_j$ such that $0 \le \sigma_j \le 1$, preferably together with its estimated error. In Ogata and Tanemura (1981, 1984a, b, 1988) polynomials or spline functions are fitted to this sort of experimental data to get a smooth and well fitted function, which is then used as the integrand in (10).

An alternative and simple method to evaluate the integral in (10) is approximation by the trapezoidal rule, for example,

$$\int_0^1 \psi(\sigma)\, d\sigma \approx \sum_{j=1}^{J} \frac{\sigma_{j+1} - \sigma_{j-1}}{2}\, \hat\psi(\sigma_j), \tag{12}$$

with the convention $\sigma_0 = \sigma_1 = 0$ and $\sigma_{J+1} = \sigma_J = 1$. If the estimated errors of the $\hat\psi(\sigma_j)$ for the respective $\sigma_j$ are not highly inhomogeneous, the above sum, for example with the equispaced nodes $\{\sigma_j\}_{j=1,2,\ldots,J}$ for a suitable $J$, is expected to provide an accurate estimate of the required integral in (10). This is because the estimate of $\psi(\sigma_j)$ at each $\sigma_j$ is consistent and unbiased, and further because the random variables $\hat\psi(\sigma_j)$ and $\hat\psi(\sigma_k)$ are mutually independent for $k \ne j$, provided that the random numbers are suitably generated. Thus, if the standard error of each $\hat\psi(\sigma_j)$ is $s_j$, then the error variance of $\log Z_K(1)$ estimated by using (10) and (12) is given by

$$\sum_{j=1}^{J} \left( \frac{\sigma_{j+1} - \sigma_{j-1}}{2} \right)^2 s_j^2. \tag{13}$$
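The trapezoidal sum (12) and its error variance (13) can be sketched as follows, assuming independent node estimates. The function name `trapezoid_with_error` and the input numbers are hypothetical; they are not results from the paper.

```python
import math

def trapezoid_with_error(nodes, psi_hat, std_err):
    """Trapezoidal integral of psi_hat over the nodes, plus its standard error.

    Each node j gets weight (sigma_{j+1} - sigma_{j-1}) / 2, with the end
    nodes using only their single neighbouring interval.
    """
    J = len(nodes)
    weights = []
    for j in range(J):
        left = nodes[j - 1] if j > 0 else nodes[0]
        right = nodes[j + 1] if j < J - 1 else nodes[-1]
        weights.append(0.5 * (right - left))
    integral = sum(w * p for w, p in zip(weights, psi_hat))
    # Independent estimates: variances add with squared weights.
    variance = sum((w * s) ** 2 for w, s in zip(weights, std_err))
    return integral, math.sqrt(variance)

# Hypothetical inputs: 11 equispaced nodes, a linear psi, equal standard errors.
nodes = [j / 10 for j in range(11)]
psi_hat = [500.0 + 82.0 * s for s in nodes]
std_err = [0.07] * 11
integral, se = trapezoid_with_error(nodes, psi_hat, std_err)
print(integral, se)
```

Because the weights are of order $1/J$, the standard error of the integral is considerably smaller than the standard error of any single node estimate.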

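Estimation of $s_j^2$ can be carried out in the following way. Let us set $\eta_\sigma(t) = (\partial/\partial\sigma) \log f(\sigma x(t))$. Then, from (8), we have approximately

$$\mathrm{Var}(\hat\psi(\sigma)) \approx \frac{\mathrm{Var}(\eta_\sigma)}{T} \left\{ 1 + 2 \sum_{\tau=1}^{T_0} \left( 1 - \frac{\tau}{T} \right) \rho_\sigma(\tau) \right\}, \tag{14}$$

where $\rho_\sigma(\tau) = E\{\eta_\sigma(t)\, \eta_\sigma(t+\tau)\} / E\{\eta_\sigma(t)^2\}$ is the auto-correlation, and $\rho_\sigma(\tau) = 0$ is assumed for all $\tau > T_0$ with some $T_0$. Here $\mathrm{Var}(\eta_\sigma)$ is estimated by

$$\frac{1}{T} \sum_{t=1}^{T} \left( \eta_\sigma(t) - \hat\psi(\sigma) \right)^2 = \frac{1}{T} \sum_{t=1}^{T} \eta_\sigma(t)^2 - \hat\psi(\sigma)^2, \tag{15}$$

and $\rho_\sigma(\tau)$ is estimated by the sample auto-correlation

$$\hat\rho_\sigma(\tau) = \frac{\dfrac{1}{T - \tau} \displaystyle\sum_{t=1}^{T-\tau} \left( \eta_\sigma(t) - \hat\psi(\sigma) \right) \left( \eta_\sigma(t+\tau) - \hat\psi(\sigma) \right)}{\dfrac{1}{T} \displaystyle\sum_{t=1}^{T} \left( \eta_\sigma(t) - \hat\psi(\sigma) \right)^2}. \tag{16}$$

Further, the step $T_0$ in (14) is determined by looking at the plotted graph of $\hat\rho_\sigma(\tau)$ in (16) against the time lag $\tau$. These evaluation methods will be used for the numerical examples in later sections.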
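The error estimate built from (14), (15) and (16) can be sketched as below. The function name `time_average_variance` is hypothetical, the cut-off `t0` is supplied by the user after inspecting the sample auto-correlations, and the code assumes the standard form of (14) with a factor 2 on the lagged terms.

```python
def time_average_variance(eta, t0):
    """Approximate variance of the time average of the series eta.

    eta : list of values eta_sigma(t), t = 1..T
    t0  : largest lag at which the auto-correlation is taken to be non-zero
    """
    T = len(eta)
    mean = sum(eta) / T                                  # time average, as in (8)
    var = sum((e - mean) ** 2 for e in eta) / T          # sample variance, as in (15)
    if var == 0.0:
        return 0.0
    correction = 1.0
    for tau in range(1, t0 + 1):
        cov = sum((eta[t] - mean) * (eta[t + tau] - mean)
                  for t in range(T - tau)) / (T - tau)
        rho = cov / var                                  # sample auto-correlation, as in (16)
        correction += 2.0 * (1.0 - tau / T) * rho        # bracketed factor in (14)
    return var / T * correction

series = [1.0, 2.0, 3.0, 4.0]  # tiny hypothetical series, for illustration only
print(time_average_variance(series, 0), time_average_variance(series, 1))
```

With `t0 = 0` the function reduces to the usual variance of a mean of uncorrelated samples; positive auto-correlation inflates it.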

3. Simulation of the Gibbsian Random Field

For a practical method to get a sample from the distribution in (3), let us review a simulation method which uses a particular type of random walk known as a Markov chain. The simulation was originally devised by Metropolis et al. (1953) and developed by Wood (1968) and others for the study of atomic systems. Consider a continuous Gibbs random field on a state space $V^K$ (the cube $[a, b]^K$ in the present case for (1)) whose probability density $g(x)$ has the form

$$g(x) = \frac{1}{Z} \exp\{-U(x)\}, \tag{17}$$

where $U(x)$ is the total potential of $x$, and $Z$ is the normalizing constant.


The most commonly used algorithm is described in the following manner. Assume that, at time $t$, the state of the $K$-particle system is $x(t) = \{x_k(t);\ k = 1, \ldots, K\}$ in $V^K$. A trial state $x'(t+1) = \{x'_k(t+1)\}$ for the next time $t+1$ is then chosen in such a way that the coordinate $x'_r(t+1)$ of a randomly chosen particle $r$ lies in some interval $[x_r(t) - \delta, x_r(t) + \delta]$ (that is to say, $x'_r(t+1) = x_r(t) + \delta(1 - 2\theta)$ for a uniform random number $\theta$), while all other $K - 1$ particles have the same positions as in state $x(t)$, where $\delta > 0$ is a parameter to be discussed below. The corresponding total potential energy $U(x'(t+1))$ in (17) is then calculated and compared with $U(x(t))$ as follows.

1. If $U(x'(t+1)) \le U(x(t))$, then without further ado the next state $x(t+1)$ of the realization is taken as the trial state $x'(t+1)$.

2. If $U(x'(t+1)) > U(x(t))$, then we obtain a uniform random number $\eta$, and (i) if $\eta \le \exp\{U(x(t)) - U(x'(t+1))\}$, state $x(t+1)$ is taken to be the trial state $x'(t+1)$; (ii) otherwise, state $x(t+1)$ is taken to be the previous state $x(t)$.

It should be noticed that the normalizing factor $Z$ in (17) has not been used in the simulation. In essence, the Monte Carlo procedure here is nothing but a selection of the transition probabilities

$$q(x, dy) = \mathrm{Prob}\{x(t+1) \in dy \mid x(t) = x\} \tag{18}$$

of a Markov chain $x(t)$ which satisfy $\int p(dx)\, q(x, dy) = p(dy)$ for all states $y$ of the $K$-particle system in $V^K$ (for the stationary probability $p(dx) = g(x)\, dx$), and which further satisfy the condition that the $n$-step transition probability $q^{(n)}(x, dy)$ converges to the given equilibrium probability $p(dy)$ in (17). Thus, of course, there are many possible algorithms satisfying these conditions other than the above (Wood 1968). For example, Ripley (1979) applies a spatiotemporal birth and death process and uses the rejection method in generating a birth point, but the range of the trial point is the whole state space.

The parameter $\delta$, the maximum single step displacement allowed in passing from one state to the next, ought in principle to be adjusted for the optimum rate of convergence of the Markov chain. Wood (1968) suggests, from his experiments, that a reasonable choice of $\delta$ is a value leading to the rejection of the trial configuration on about half of the time-steps. This is a trade-off, especially for a highly varying Gibbs distribution, between effective transition of the state and avoidance of unnecessary repetition of the same state. This suggestion can be confirmed by comparing how fast the auto-correlation (16) of the series of $\psi$-values or potential values converges to zero as the time lag $\tau$ increases. In addition to the selection of $\delta$, the initial configuration should be suitably chosen in order to attain the equilibrium state in fewer time-steps of the Monte Carlo simulation. We adopt the final equilibrium state $x(T)$ used for the estimate $\hat\psi(\sigma_j)$ in (12) as the initial state of the next step $\sigma_{j+1}$. To examine whether the states have reached the equilibrium or not, we can just look at the plot of the time series of potentials or $\psi$-values. Also it is suggested that the same results can be obtained by taking $r = 1, 2, \ldots, K$ sequentially instead of choosing $r$ at random. Further practical discussion will be made in Sect. 5.
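The acceptance rules 1 and 2 above can be sketched as a single sweep over the coordinates, visited sequentially as suggested at the end of this section. The function `metropolis_sweep`, the rule that trial points leaving $[a, b]$ are rejected, and the small target used in the demonstration are assumptions made for illustration; they are not the program of Appendix 2.

```python
import math
import random

def metropolis_sweep(x, potential, delta, a, b, rng):
    """Visit each coordinate once; return the number of accepted trial states."""
    accepted = 0
    u_old = potential(x)
    for r in range(len(x)):
        old = x[r]
        trial = old + delta * (1.0 - 2.0 * rng.random())
        if trial < a or trial > b:
            continue  # assumption: trial points outside [a, b] are rejected
        x[r] = trial
        u_new = potential(x)
        # Rule 1: accept if the potential does not increase.
        # Rule 2: otherwise accept with probability exp{U(x) - U(x')}.
        if u_new <= u_old or rng.random() <= math.exp(u_old - u_new):
            u_old = u_new
            accepted += 1
        else:
            x[r] = old  # keep the previous state
    return accepted

# Hypothetical target: U(x) = -sigma * sum(x) on [0, 1]^K with sigma = 2.
sigma, K = 2.0, 4
potential = lambda x: -sigma * sum(x)
rng = random.Random(1)
x = [0.5] * K
samples = []
for sweep in range(5000):
    metropolis_sweep(x, potential, 0.5, 0.0, 1.0, rng)
    if sweep >= 500:  # discard the first sweeps as not yet in equilibrium
        samples.extend(x)
mean = sum(samples) / len(samples)
exact_mean = math.exp(sigma) / (math.exp(sigma) - 1.0) - 1.0 / sigma
print(mean, exact_mean)
```

Note that the normalizing constant never appears: only differences of the potential are needed. Recomputing the full potential for every trial point keeps the sketch short; a practical program would update it incrementally.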


4. Numerical Experiments

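4.1. Example 1

As an example the integral

$$Z = \int_0^1 \int_0^1 \cdots \int_0^1 e^{x_1} e^{x_2} \cdots e^{x_K}\, dx_1\, dx_2 \cdots dx_K, \tag{19}$$

with $K = 1000$ was calculated. The expected values are therefore $Z_K(\sigma) = (e^\sigma - 1)^K / \sigma^K$ and $\log Z_K(\sigma) = K \log\{(e^\sigma - 1)/\sigma\}$, especially $Z = (e - 1)^K = 1.24279 \times 10^{235}$, $Z_K(0) = 1$ and $\log Z_K(1) = 541.3248$. Further $\psi(\sigma) = \partial \log Z_K(\sigma)/\partial\sigma$, especially $\psi(0+) = K/2 = 500$ and $\psi(1) = K/(e - 1) = 581.9767$.

The potential function for simulating the samples is

$$U_\sigma(x) = -\log f(\sigma x) = -\sigma \sum_{k=1}^{K} x_k. \tag{20}$$

Further,

$$\frac{\partial}{\partial\sigma} \log f(\sigma x) = -\frac{\partial}{\partial\sigma} U_\sigma(x) = \sum_{k=1}^{K} x_k. \tag{21}$$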

We now suppose that the values of $\log Z_K(\sigma)$ and $\psi(\sigma)$ are unknown but, of course, that the value of $\log Z_K(0+) = 0$, the form of the potential $U_\sigma(x)$ and its derivative $\partial U_\sigma(x)/\partial\sigma$ for each $\sigma$ are known. The equidistant nodal points $\{\sigma_j;\ j = 1, 2, \ldots, J\}$ are taken from the unit interval $[0, 1]$.

Since the statistic in (21) and the potential function (20) are very smooth and rather flat with respect to $\sigma$, the trial interval $[x_r(t) - \delta, x_r(t) + \delta]$ (see Sect. 3) is taken to be the whole space $[0, 1]$ in the present case. For each $\sigma_j$ the first $10 \times K$ steps, which may not be in equilibrium, are thrown away.

After some computer simulations with $T = K \times 10^4$ steps in (8) for $K = 1000$, we obtained the respective estimates $\hat\psi(\sigma_j)$ at $\sigma_j = (j - 1)/10$, $j = 1, 2, \ldots, 11$, together with their standard errors $\hat s_j$. See Fig. 1a for the shape of the true $\psi$ function, which looks linearly increasing but is not exactly so. The variability of the estimated $\hat\psi$-functions over 20 experiments is shown in Fig. 1b, and the estimate of $\mathrm{Var}\{\eta_\sigma(t)\}^{1/2}$ in (15) is plotted in Fig. 1c. Each of the graphs seems homogeneous throughout $[0, 1]$, but notice that the scales of the y-axes are quite different. It is seen that the variability of the errors of the $\hat\psi$-function is very small compared with the $\psi$-values themselves, and further that it is quite symmetric about zero. These are the reasons for the success in obtaining an accurate estimate in spite of the very rough partition $\{\sigma_j\}_{j=1,2,\ldots,11}$ of $[0, 1]$. The estimated $\log Z$ and their error estimates (13) using (14) are listed in Table 1. It seems that the difference between the estimate and the true value of the integral is distributed according to the normal distribution with the given error estimate.

Fig. 1. a Plot of the theoretical $\psi(\sigma)$ versus $\sigma$ of Example 1. b Variability of $\hat\psi_i(\sigma_j) - \psi(\sigma_j)$ versus $\sigma_j$ for 20 experiments in $i$ of Example 1. Sign "+" is the estimate for each $\sigma_j$ and each experiment. c Estimates of $\mathrm{Var}\{\eta_\sigma\}^{1/2}$ in (15) versus $\sigma_j$ for the respective 20 experiments in Example 1. Sign "+" is the estimate for each $\sigma_j$ and each experiment

Further, in Table 2, an estimate by the crude Monte Carlo method in (2) and its logarithm are provided for comparison. Here, to avoid overflow, $f(x) = e^{K/2} \exp\{\sum_{k=1}^{K} (x_k - 1/2)\}$ is used for the function in (2). Nevertheless, considerable underestimates took place, owing to the very large variation and skewness of the function. In particular, the value of the average (2) for the present example is almost dominated by the extremes of the function $f(x)$, so that the number of steps needed to approach the right order of the true value seems to be far too large. The random numbers used for the crude Monte Carlo method are more than 5 times those used for the method suggested in this paper. From these, the significant improvement in accuracy by the present method is seen clearly. From our experience, the accuracy does not seem to depend on the dimension of the integral, although the c.p.u. time grows in proportion to the dimension.

Table 1. Present Monte Carlo estimates for Example 1 with $\log Z_{1000} = 541.3248$. The step size is $T = K \times II = 10^3 \times 10^4$, the number of nodal points is $J = 11$, and $2 \times J$ times as many uniform random numbers as the step size $T$ are used. Standard errors (13) using (14) are attached to the respective estimated integral values

| No. | log Z(1) | s.e. | No. | log Z(1) | s.e. |
|---|---|---|---|---|---|
| 1 | 541.3636 | 0.02329 | 11 | 541.3411 | 0.02329 |
| 2 | 541.3393 | 0.02323 | 12 | 541.3232 | 0.02332 |
| 3 | 541.3299 | 0.02318 | 13 | 541.3272 | 0.02324 |
| 4 | 541.3783 | 0.02331 | 14 | 541.3508 | 0.02329 |
| 5 | 541.3408 | 0.02325 | 15 | 541.3467 | 0.02331 |
| 6 | 541.3430 | 0.02322 | 16 | 541.3698 | 0.02327 |
| 7 | 541.3027 | 0.02328 | 17 | 541.4006 | 0.02330 |
| 8 | 541.3445 | 0.02328 | 18 | 541.3463 | 0.02323 |
| 9 | 541.3728 | 0.02329 | 19 | 541.2827 | 0.02323 |
| 10 | 541.3756 | 0.02326 | 20 | 541.3457 | 0.02338 |

Table 2. Crude Monte Carlo estimates for Example 1 with $Z_{1000} = 0.124279 \times 10^{236}$ or $\log Z_{1000} = 541.3248$. The number of steps in (2) is $N = 10^6$. $K = 1000$ times as many random numbers as $N = 10^6$ are used

| No. | $\hat Z_{1000}$ | $\log \hat Z$ | No. | $\hat Z_{1000}$ | $\log \hat Z$ |
|---|---|---|---|---|---|
| 1 | $0.3384991 \times 10^{232}$ | 532.5976 | 6 | $0.1432387 \times 10^{231}$ | 529.9539 |
| 2 | $0.8431270 \times 10^{230}$ | 529.4239 | 7 | $0.5987711 \times 10^{230}$ | 529.0817 |
| 3 | $0.3299905 \times 10^{231}$ | 530.7885 | 8 | $0.1544526 \times 10^{231}$ | 530.0293 |
| 4 | $0.4164859 \times 10^{231}$ | 531.0213 | 9 | $0.1184123 \times 10^{231}$ | 529.7636 |
| 5 | $0.9635451 \times 10^{230}$ | 529.5574 | 10 | $0.6898787 \times 10^{230}$ | 529.2233 |
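The whole procedure for this example can be sketched end to end at a much smaller scale. The function `estimate_log_z`, the dimension $K = 10$, the sweep counts and the seed are assumptions chosen so that the sketch runs in a moment; it is not the program of Appendix 2. As in the text, the trial point is drawn uniformly from the whole interval $[0, 1]$, and the final state at one node is reused as the initial state at the next.

```python
import math
import random

def estimate_log_z(K, n_nodes=11, sweeps=2000, burn_in=200, seed=0):
    """Estimate log Z_K for f(x) = exp(sum x_k) on [0, 1]^K via (8), (10), (12)."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(K)]
    h = 1.0 / (n_nodes - 1)
    psi_hat = []
    for j in range(n_nodes):
        sigma = j * h
        total = sum(x)      # the statistic (21): sum of the coordinates
        acc, count = 0.0, 0
        for sweep in range(burn_in + sweeps):
            for r in range(K):
                trial = rng.random()               # trial point uniform on [0, 1]
                d_u = -sigma * (trial - x[r])      # change of the potential (20)
                if d_u <= 0.0 or rng.random() <= math.exp(-d_u):
                    total += trial - x[r]
                    x[r] = trial
                if sweep >= burn_in:               # time average (8) after burn-in
                    acc += total
                    count += 1
        psi_hat.append(acc / count)
    # Trapezoidal rule (12); log Z_K(0+) = 0 for this integrand.
    return h * (sum(psi_hat) - 0.5 * (psi_hat[0] + psi_hat[-1]))

K = 10
estimate = estimate_log_z(K)
exact = K * math.log(math.e - 1.0)
print(estimate, exact)
```

Even with this rough partition of $[0, 1]$ the estimate should land close to the exact value, because $\psi$ is nearly linear in $\sigma$ for this integrand.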

4.2. Example 2

The next example is to see how a high dimensional integration on the infinite domain can be reasonably calculated. Consider an integral

$$Z = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty} f(x)\, dx \tag{22}$$

for $x = (x_1, \ldots, x_K)$, where $f(x) = \exp\{-\tfrac{1}{2}\, x B x'\}$ and $B$ is a Toeplitz matrix such that

$$B = \begin{pmatrix} 2 & -1 & 0 & \cdots & 0 \\ -1 & 2 & -1 & \cdots & 0 \\ 0 & -1 & 2 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 2 \end{pmatrix}.$$

Therefore $Z = (2\pi)^{K/2} \det(B)^{-1/2} = (2\pi)^{K/2} (K+1)^{-1/2}$. One possible way to calculate (22) by our method may be to recast the integral by a transformation of variables from the infinite range into some finite range, but here we directly approximate the integral by taking the range $[a, b]$ in (1) sufficiently large. Let us take $a = -20.0$, $b = 20.0$ and $K = 1000$; then we can expect in the present case that the truncated integral (1) approximates (22) well. The analytically expected values to check the accuracy are

$$\log Z_K(0) = K \log(b - a) = 1000 \log 40.0,$$

$$\log Z_K(1) = \log Z = (K/2) \log 2\pi - (1/2) \log(K + 1) = 915.484,$$

and $\psi(1) = -K = -1000$.

The potential function for simulating the sample is

$$U_\sigma(x) = -\log f(\sigma x) = \frac{\sigma^2}{2}\, x B x', \tag{23}$$

and then

$$\frac{\partial}{\partial\sigma} \log f(\sigma x) = -\frac{\partial}{\partial\sigma} U_\sigma(x) = -\sigma\, x B x'. \tag{24}$$
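The reference value 915.484 can be checked with a few lines, assuming that $B$ has 2 on the diagonal and $-1$ on the two adjacent diagonals. The helper name `tridiagonal_det` is hypothetical; it uses the standard three-term recurrence for the determinant of such a matrix.

```python
import math

def tridiagonal_det(K):
    """Determinant of the K x K matrix with 2 on the diagonal and -1 beside it.

    Uses the recurrence d_n = 2 * d_{n-1} - d_{n-2} with d_0 = 1 and d_1 = 2.
    """
    prev, cur = 1, 2
    for _ in range(2, K + 1):
        prev, cur = cur, 2 * cur - prev
    return cur

K = 1000
det_b = tridiagonal_det(K)  # equals K + 1
log_z = 0.5 * K * math.log(2.0 * math.pi) - 0.5 * math.log(det_b)
print(det_b, log_z)
```

The recurrence gives $\det(B) = K + 1$, which is the value used in the closed form of $\log Z$ above.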

Following the suggestion of Wood described in the last paragraph of Sect. 3, I made some test experiments and chose the maximum single step displacement parameter $\delta = 2.0$ for the random walk of the trial points on the state space $[-20, 20]$, whose end points are identified so that it is regarded as a torus. Looking at the time series of potentials or $\psi$-values for each $\sigma_j$, $j = 1, 2, \ldots, J$, the first $K \times 10^3$ steps, which may not be in equilibrium, were thrown away. Since the variation of the function $\psi(\sigma)$ with respect to $\sigma$ seems large compared with that of the previous example, I took $J = 81$ in order to get a sufficiently accurate estimate of $\log Z_K(1)$ by the trapezoidal rule (12): see Fig. 2a for the shape of the $\psi$ function obtained by averaging the estimates $\hat\psi(\sigma_j)$ of 10 experiments for each $\sigma_j$, Fig. 2b for the variability of $\hat\psi$ obtained from the 10 experiments, and Fig. 2c for the standard errors of the time series $\eta_\sigma(t) = (\partial/\partial\sigma) \log f(\sigma x(t))$ in $t$ for the respective $\sigma = \sigma_j$. It should be noted here that $\mathrm{Var}(\eta_\sigma(t))^{1/2}$ is very similar in shape to the absolute value of $\hat\psi(\sigma)$, although its scale is much smaller. The magnitude of the variability in Fig. 2b is also related to the other two figures, but not quite in the beginning part. This is due to the different mixing rate, or convergence rate of the correlation $\hat\rho_\sigma(\tau)$ in (16), among the different $\sigma = \sigma_j$ in my Metropolis procedure: see Eq. (14) for the reason. Note also that the variability in Fig. 2b seems symmetric, which is good news for the estimate $\hat\psi(\sigma)$ from the viewpoint of unbiased estimation theory. Table 3 lists the estimated values of $\log Z_K(1)$ and also their estimated errors (13) using (14). From this it is seen that the performance is reasonably good, although we see a slight bias from the true value which, we suspect, may be due to an insufficient number of partitions, especially in the beginning part of the interval.

Fig. 2. a Plot of the sample average $\bar\psi(\sigma_j) = \sum_{i=1}^{10} \hat\psi_i(\sigma_j)/10$ versus $\sigma_j$ for 10 experiments of Example 2. b Variability of $\hat\psi_i(\sigma_j) - \bar\psi(\sigma_j)$ for 10 experiments of Example 2. Sign "+" is the estimate for each $\sigma_j$ and each experiment. c Estimates of $\mathrm{Var}\{\eta_\sigma\}^{1/2}$ in (15) versus $\sigma_j$ for the respective 10 experiments in Example 2. Sign "+" is the estimate for each $\sigma_j$ and each experiment

Table 3. Estimates of the logarithm of the integral (22) of Example 2 and their standard errors with $T = K \times II = 1000 \times 5000$ and $J = 81$. The true value is $\log Z = 915.484$

| No. | log Z(1) | s.e. | No. | log Z(1) | s.e. |
|---|---|---|---|---|---|
| 1 | 915.862 | 0.517 | 6 | 914.684 | 0.524 |
| 2 | 915.470 | 0.525 | 7 | 918.168 | 0.521 |
| 3 | 916.208 | 0.520 | 8 | 916.457 | 0.524 |
| 4 | 916.592 | 0.521 | 9 | 916.547 | 0.523 |
| 5 | 917.620 | 0.519 | 10 | 916.699 | 0.520 |

4.3. Example 3

For the last example, consider a random field $x = \{x_{ij} \in S\}$ on a $32 \times 32$ lattice $(i, j)$, where $S$ is a one dimensional torus identified with $[-5, 5]$, and $x_{ij}$ is conditionally dependent on the values $x_{km}$, $(k, m) \in N_{ij}$, within the second nearest neighbourhood $N_{ij} = \{(i, j \pm 1), (i \pm 1, j), (i \pm 1, j \pm 1)\}$ on the lattice. The potential function here is arbitrarily defined in such a way that

31

O-X{OE+ll~xij-~

U~(xz0)=- ~

i,j = 2

~

~Xkmll2}-x

(25)

(k, m ) e N i j

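with an adjusting parameter $\tau$, where the metric $\|\cdot\|$ is the natural distance on the torus $S$. The values on the boundary of the lattice are fixed in such a way that

$$x_{ij} = \begin{cases} 1 & \text{for } i = 1 \text{ and } 1 \le j \le 16, \text{ or } 1 \le i \le 16 \text{ and } j = 1, \\ 2 & \text{for } i = 1 \text{ and } 17 \le j \le 32, \text{ or } 1 \le i \le 16 \text{ and } j = 32, \\ -1 & \text{for } i = 32 \text{ and } 1 \le j \le 16, \text{ or } 17 \le i \le 32 \text{ and } j = 1, \\ 0 & \text{for } i = 32 \text{ and } 17 \le j \le 32, \text{ or } 17 \le i \le 32 \text{ and } j = 32. \end{cases} \tag{26}$$

Then we calculate the normalizing constant of the Gibbs distribution

$$Z = \int_{-5}^{5} \int_{-5}^{5} \cdots \int_{-5}^{5} \exp\{-U_1(x; \tau)\}\, dx. \tag{27}$$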

We do not know the analytic value of the integral, but let us see the estimated value of $\log Z$ for each $\tau = 0.1, 0.2, \ldots, 0.8$. Thus, for each $\tau$, the $\hat\psi$-value is calculated for 101 equidistant points $\{\sigma_k\}$ between 0 and 1, and for a further 21 equidistant points between 0 and 0.05 (thus 116 distinct points altogether in $[0, 1]$). The estimated functions $\hat\psi_\tau(\sigma_k)$ for $\tau = 0.1, 0.2, \ldots, 0.8$ are plotted in Fig. 3a. As in the previous example, the shape of the standard error of the time series $\eta_\sigma(t)$ in Fig. 3b for each $\tau$ is very similar to that of the corresponding $|\hat\psi(\sigma)|$. It is seen from the definition that $\psi(0) = 0$ always holds for any $\tau$, and we suspect that the sharp trough next to $\sigma = 0$ in Fig. 3a might relate to the sharp rate of increase of the potential function in (25) around $\sigma = 0$, especially when $\tau$ is small. This is the reason why we have taken the further nodal points in the interval $[0, 0.05]$ to maintain a reasonable accuracy of the numerical integration. Integrating these functions by the trapezoidal rule, we have Table 4.

Fig. 3. a Plots of $\hat\psi_\tau(\sigma)$ versus $\sigma_j$, $\tau = 0.1, 0.2, \ldots, 0.8$, of Example 3. The horizontal dotted lines are the level of zero for the respective $\tau$. b Estimates of $\mathrm{Var}\{\eta_\sigma\}^{1/2}$ versus $\sigma_j$ for the respective $\tau$

Table 4. Summary of the estimation of the logarithm of the integral (27) of Example 3

| $\tau$ | $\log Z(0+)$ | $\int_0^1 \hat\psi(\sigma)\, d\sigma$ | $\log Z(1)$ |
|---|---|---|---|
| 0.10 | 1024000.00 | -44895.08 | 979104.92 |
| 0.20 | 128000.00 | -7893.36 | 120106.64 |
| 0.30 | 37925.93 | -4870.52 | 33055.41 |
| 0.40 | 16000.00 | -3858.16 | 12141.84 |
| 0.50 | 8192.00 | -3376.75 | 4815.25 |
| 0.60 | 4740.74 | -2756.10 | 1984.64 |
| 0.70 | 2985.42 | -1927.37 | 1058.06 |
| 0.80 | 2000.00 | -1324.99 | 675.01 |

5. Discussions

In principle, the essential assumption on the class of integrand functions for the applicability of the present method is continuous differentiability of the function, which ensures the relations (6) and (11): see Appendix 1. However, from the preceding three examples, we have learnt that there is a negative correlation between the smoothness of the $\psi$-function with respect to $\sigma$ and the number of nodal points $\{\sigma_k\}$ needed to maintain a reasonably accurate estimate of the integral. Indeed, the error variance (13) shows the accuracy of the estimate of $\log Z_K(1)$. Suppose the value $\hat\psi(\sigma_j)$ is large; then $s_j^2 = \mathrm{Var}\{\hat\psi(\sigma_j)\}$ is also expected to be proportionally large under a moderate sample size $T$ in (8): see Figs. 2a and c and also Figs. 3a and b for instance. In such a case the interval length $\sigma_j - \sigma_{j-1}$ between the nodal points in such particular parts should be small enough to maintain the required accuracy as well as to avoid bias. Further, it is fortunate for the estimate $\hat\psi(\sigma)$ in (8), from the viewpoint of unbiased estimation theory, that the variability of the estimate (8) seems symmetric: see Figs. 1b and 2b.

Metropolis' simulation method includes very delicate parameters, namely the maximum single step displacement $\delta$ and the number of steps needed to reach the equilibrium. We usually begin with a large $\delta$ and decrease it, so that the ratio of rejections of the trial configuration decreases. We continue this until Wood's suggestion is met, that the rejections should amount roughly to a half of the time-steps: that is, ID3 = ID1 + ID2 very roughly holds in the output of the program in Appendix 2. To determine the step at which the pattern has reached the equilibrium (that is, IS in the program), we examine graphs of the moving average of the time series of the total potential values or of the $\psi$-values. If the time series seems to have become stationary, we may judge that the patterns are in equilibrium. To reach equilibrium as early as possible, the step size $\delta$ had better be as large as possible so far as the above ratio of rejections is satisfied. This also makes the mixing rate of the Markov process in (18) faster. The mixing rate is partly measured by the auto-correlations of the time series of potentials or $\psi$-values: thus $T_0$ in (14) relates to the mixing rate.


Acknowledgements. I have benefited greatly from useful discussions with Masaharu Tanemura, who has been working with me on the estimation of the interaction potential in a spatial point pattern. Koichi Katsura generously helped me in preparing the Figures and Tables of the paper. Comments of the anonymous referee were helpful for the revision of the present paper.

Appendix 1. Assumptions for the Eq. (6)

We assume that

1. for almost every $x \in [a, b]^K$, $f(x) > 0$ holds;
2. for almost every $x \in [a, b]^K$, $\partial f(\sigma x)/\partial\sigma$ exists for all $\sigma \in [0, 1]$; and
3. for every $\sigma \in [0, 1]$, $|\partial f/\partial\sigma| < A(x)$ holds, where $A(x)$ is integrable over $[a, b]^K$.

Since, from (3) and (4),

$$\int_{[a,b]^K} g_\sigma(x)\, dx = 1, \tag{28}$$

and because of the above assumptions 2 and 3, using Fubini's theorem for interchanging the integral and differential signs, we have

$$\int_{[a,b]^K} \frac{\partial g_\sigma(x)}{\partial\sigma}\, dx = \frac{\partial}{\partial\sigma} \int_{[a,b]^K} g_\sigma(x)\, dx = 0 \tag{29}$$

for every $\sigma$ in $[0, 1]$, and so, adding the above assumption 1,

$$E_\sigma\!\left[\frac{\partial}{\partial\sigma} \log g_\sigma(\xi)\right] = \int_{[a,b]^K} \frac{1}{g_\sigma(x)} \frac{\partial g_\sigma(x)}{\partial\sigma}\, g_\sigma(x)\, dx = 0, \tag{30}$$

which is nothing but Eq. (6).

Now, since both $\sigma$ and $x$ are defined on the compact sets $[0, 1]$ and $[a, b]^K$ respectively, the above assumptions 2 and 3 are implied, for example, by assuming continuous differentiability of the function $f(x)$ on $[a, b]^K$. This, of course, implies $f(0) < \infty$ and $f'(0) < \infty$. The former is necessary for the relation (11).

Appendix 2. Fortran Program

The Fortran program given here is for Example 1. However, it is just a test program for the readers. For example, the dimension here is $K = 100$ instead of $K = 1000$: the calculations in the present paper were carried out for the latter case and are given in Table 1. Also, a very simple subroutine for the generation of quasi-random numbers is given here. However, to avoid biases of the present statistics $\hat\psi(\sigma_j)$ in Monte Carlo experiments of such a very large sample size, it is crucial to choose a well tested random number generator. Throughout this work, I have used the physical random number generator (200 kilo-bytes per second) installed in the Institute of Statistical Mathematics. The program here can be easily rewritten for a computer having a parallel processor, which will improve the c.p.u. time significantly.

      PROGRAM MAIN
C
C     Monte-Carlo Integration: estimation of log(Znn)
C     Exponential function exp{X1+...+Xnn} is integrated as an example.
C
C     Inputs:
C       IX,IY,IZ...Seeds for random number generator.
C       NN...Dimension (=multiplicity) of the integration.
C       II...Repeat number of simulation such that K=NN*II.
C       IS...Repeat number for steps before the equilibrium.
C       IM...Step interval for displaying psi-value estimates.
C       JDT...Number of equidistant subintervals in [0,1] for
C             the trapezoidal rule for integrating psi-function.
C     Outputs:
C       AVF...Time average of the psi-function at each SG_J,
C             where J=1,2,...,JDT+1.
C       ID1,ID2,ID3...Counting the numbers of rejections and acceptances.
C       SS...Partial sums in the trapezoidal rule up to each SG_J.
C
      IMPLICIT REAL*8 (A-H,O-Z)
      DIMENSION X(2000)

DATA IX, IY, IZ/IS74,2Zb,~7/

      DATA ID1,ID2,ID3/0,0,0/
      DATA SS/0.0/
      NN=100
      II=200
      IS=100
      IM=100
      JDT=20
      DO 100 N=1,NN
  100 X(N)=RANDOM(IX,IY,IZ)
      DO 50 J=1,JDT+1
      DT=1.D0/JDT
      SG=(J-1)*DT
      FN=PSI(NN,1,X(1),SG,X,FN)
      K=0
      POT=1.D30
      SF=0.0D0
      SF2=0.0D0
      WRITE(6,3)
    3 FORMAT(/1H ,3X,'II',4X,'Psi-values',4X,'Differences',
     &       5X,'s.e.(Psi)',5X,'ID1',7X,'ID2',7X,'ID3')
      DO 10 I=1,II+IS
      IT=I-IS
      DO 20 N=1,NN
      XTEST=RANDOM(IX,IY,IZ)
      TESTPT=POTEN(NN,N,XTEST,SG,X,POT)
      IF(TESTPT.GT.POT) GO TO 30
      ID1=ID1+1
      POT=TESTPT
      IF(I.LT.IS) GO TO 20
      FN=PSI(NN,N,XTEST,SG,X,FN)
      X(N)=XTEST
      K=K+1
      SF=SF+FN
      SF2=SF2+FN**2
      GO TO 20
   30 CONTINUE
      PROBAB=DEXP(POT-TESTPT)
      RN=RANDOM(IX,IY,IZ)
      IF(RN.GT.PROBAB) GO TO 40
      ID2=ID2+1
      POT=TESTPT
      IF(I.LT.IS) GO TO 20
      FN=PSI(NN,N,XTEST,SG,X,FN)
      X(N)=XTEST
      K=K+1
      SF=SF+FN
      SF2=SF2+FN**2
      GO TO 20
   40 CONTINUE
      ID3=ID3+1
      IF(I.LT.IS) GO TO 20
      K=K+1
      SF=SF+FN
      SF2=SF2+FN**2
   20 CONTINUE
      IF(MOD(IT,IM).NE.0) GO TO 10
      IF(K.EQ.0) GO TO 10
      AVF=SF/K
      AVF2=SF2/K
      SDK=DSQRT(AVF2-AVF**2)
      CALL DIFFER(NN,J,JDT+1,SG,DT,AVF,SS,D1,D2)
      WRITE(6,1) IT,AVF,D1,SDK,ID1,ID2,ID3

FO~MAT(IH olT,X,DI3.E,X,2DI2.4,2X~

      ID1=0
      ID2=0
      ID3=0
   10 CONTINUE
C     Trapezoidal rule over SG: half weight at the two end nodes.
      IF(J.EQ.1) SS=SS+AVF*DT/2
      IF(J.GT.1 .AND. J.LT.JDT+1) SS=SS+AVF*DT
      IF(J.EQ.JDT+1) SS=SS+AVF*DT/2
      CALL DIFFER(NN,J,JDT+1,SG,DT,AVF,SS,D1,D2)
      WRITE(6,2) J,SG,SS,D2
    2 FORMAT(1H ,' J=',I3,X,' SG=',F5.3,X,' SS=',F15.7,' D2=',D15.4)
   50 CONTINUE
      STOP
      END
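The main program above can be paraphrased in a few lines. The following Python sketch is an illustration only and not a port of the listing: it uses Python's built-in generator in place of RANDOM, it updates the coordinates during the burn-in sweeps as well, and the function name `log_z_estimate` and its parameter names are invented here. At each of the JDT+1 values of the scale parameter it runs single-site Metropolis updates for the density proportional to exp(sg*sum(x)), averages psi = sum(x) after the burn-in, and combines the averages by the trapezoidal rule.

```python
import math
import random


def log_z_estimate(nn=100, sweeps=200, burn_in=100, jdt=20, seed=1):
    """Estimate log of the integral of exp(x_1+...+x_nn) over [0,1]^nn."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(nn)]
    dt = 1.0 / jdt
    total = 0.0
    for j in range(jdt + 1):
        sg = j * dt
        psi = sum(x)  # psi(x) is the derivative of sg*sum(x) w.r.t. sg
        acc, count = 0.0, 0
        for sweep in range(burn_in + sweeps):
            for n in range(nn):
                x_new = rng.random()
                # log of the Metropolis ratio for density exp(sg*sum(x))
                log_ratio = sg * (x_new - x[n])
                if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
                    psi += x_new - x[n]
                    x[n] = x_new
                if sweep >= burn_in:
                    # rejected moves count the current state again
                    acc += psi
                    count += 1
        avf = acc / count
        # trapezoidal rule: half weight at sg=0 and sg=1
        weight = 0.5 if j in (0, jdt) else 1.0
        total += weight * avf * dt
    return total


if __name__ == "__main__":
    print(log_z_estimate(), 100 * math.log(math.e - 1.0))
```

For NN=100 the exact value is 100*log(e-1), about 54.13, so the estimate can be checked directly against it.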

      DOUBLE PRECISION FUNCTION RANDOM(IX,IY,IZ)
C     Wichmann and Hill: Appl. Statist. (JRSSC), (31) 188-190 (1982).
      IMPLICIT REAL*8 (A-H,O-Z)
      IX=171*MOD(IX,177)-2*(IX/177)
      IY=172*MOD(IY,176)-35*(IY/176)
      IZ=170*MOD(IZ,178)-63*(IZ/178)
      IF (IX.LT.0) IX=IX+30269
      IF (IY.LT.0) IY=IY+30307
      IF (IZ.LT.0) IZ=IZ+30323
      RANDOM=MOD(FLOAT(IX)/30269.0+FLOAT(IY)/30307.0+
     &           FLOAT(IZ)/30323.0,1.0)
      RETURN
      END
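For readers who want to experiment with the generator outside Fortran, the three-component recurrence of RANDOM can be written as follows. This is a sketch: the function name `wichmann_hill` and the seeds (1, 2, 3) are arbitrary choices made here, not the seeds of the listing, and Python's double-precision division will not reproduce the single-precision FLOAT arithmetic digit for digit.

```python
def wichmann_hill(ix, iy, iz):
    """One step of the three-component generator; returns (u, ix, iy, iz)."""
    ix = 171 * (ix % 177) - 2 * (ix // 177)
    iy = 172 * (iy % 176) - 35 * (iy // 176)
    iz = 170 * (iz % 178) - 63 * (iz // 178)
    if ix < 0:
        ix += 30269
    if iy < 0:
        iy += 30307
    if iz < 0:
        iz += 30323
    # fractional part of the sum of the three scaled components
    u = (ix / 30269.0 + iy / 30307.0 + iz / 30323.0) % 1.0
    return u, ix, iy, iz


state = (1, 2, 3)  # illustrative seeds only
draws = []
for _ in range(5):
    u, *state = wichmann_hill(*state)
    draws.append(u)
```

The state is passed in and returned explicitly, mirroring the way the Fortran function updates IX, IY and IZ in place.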


      DOUBLE PRECISION FUNCTION POTEN(NN,N,XTEST,SG,X,POT)
      IMPLICIT REAL*8(A-H,O-Z)
      DIMENSION X(2000)
      IF(N.NE.1) GO TO 20
C     N=1: recompute the potential -SG*(X(1)+...+X(NN)) in full.
      POTEN=0.0
      DO 10 K=1,NN
      POTEN=POTEN-SG*X(K)
   10 CONTINUE
      GO TO 30
   20 CONTINUE
C     N>1: replace the contribution of X(N) by that of XTEST.
      POTEN=POT
      POTEN=POTEN-(-SG*X(N))
      POTEN=POTEN+(-SG*XTEST)
   30 CONTINUE
      RETURN
      END
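The N>1 branch of POTEN avoids an O(NN) sum by correcting the previous potential for the one coordinate that changes. A small Python check of that bookkeeping, with hypothetical helper names `potential` and `potential_after_move` and an arbitrary value of the scale parameter, might look like this:

```python
import random


def potential(x, sg):
    """Full potential U(x) = -sg * sum(x)."""
    return -sg * sum(x)


def potential_after_move(pot, x_old, x_new, sg):
    """Potential after one coordinate changes from x_old to x_new."""
    return pot - (-sg * x_old) + (-sg * x_new)


rng = random.Random(0)
sg = 0.35  # arbitrary illustrative scale parameter
x = [rng.random() for _ in range(100)]
pot = potential(x, sg)
for n in range(len(x)):
    x_new = rng.random()
    pot = potential_after_move(pot, x[n], x_new, sg)
    x[n] = x_new

# difference between the running value and a full recomputation
drift = abs(pot - potential(x, sg))
```

After a full sweep the running value agrees with the recomputed potential up to rounding error, which is why recomputing only at N=1 is enough to keep the two in step.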

      DOUBLE PRECISION FUNCTION PSI(NN,N,XTEST,SG,X,FN)
      IMPLICIT REAL*8(A-H,O-Z)
      DIMENSION X(2000)
      IF(N.NE.1) GO TO 20
      PSI=0.0
      DO 10 K=1,NN
      PSI=PSI+(X(K))
   10 CONTINUE
      GO TO 30
   20 CONTINUE
      PSI=FN
      PSI=PSI-(X(N))
      PSI=PSI+(XTEST)
   30 CONTINUE
      RETURN
      END

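      SUBROUTINE DIFFER(NN,J,JDT1,SG,DT,F1,F2,D1,D2)
      IMPLICIT REAL*8(A-H,O-Z)
C     FT1: exact expectation of psi at SG for this example.
      IF(J.EQ.1) FT1=NN/2
      IF(J.NE.1) FT1=NN*EXP(SG)/(EXP(SG)-1)-NN/SG
      D1=F1-FT1
C     FT2: exact log-integral NN*LOG((EXP(S)-1)/S), taken at
C     S=SG+DT/2 (at S=SG for the last node). The two right-hand
C     sides below are cut off in the printed listing; this form
C     is assumed because it reproduces the printed D2 values.
      IF(J.NE.1) FT2=NN*LOG((EXP(SG+DT/2)-1)/(SG+DT/2))
      IF(J.EQ.JDT1) FT2=NN*LOG((EXP(SG)-1)/SG)
      D2=F2-FT2
      RETURN
      END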
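DIFFER compares the simulation with closed-form values that exist for this test integrand. Since the integral of exp(sg*x) over [0,1] is (exp(sg)-1)/sg, the log-integral in NN dimensions and its derivative with respect to sg can be evaluated directly. The Python functions below (names `psi_exact` and `log_z_exact` chosen here for illustration) sketch those reference values.

```python
import math


def psi_exact(sg, nn):
    """Exact mean of sum(x) under density exp(sg*sum(x)) on [0,1]^nn."""
    if sg == 0.0:
        return nn / 2.0
    return nn * (math.exp(sg) / (math.exp(sg) - 1.0) - 1.0 / sg)


def log_z_exact(sg, nn):
    """Exact log of the integral of exp(sg*sum(x)) over [0,1]^nn."""
    if sg == 0.0:
        return 0.0
    return nn * math.log((math.exp(sg) - 1.0) / sg)


if __name__ == "__main__":
    print(psi_exact(0.5, 100), log_z_exact(1.0, 100))
```

Because psi_exact is the derivative of log_z_exact with respect to sg, integrating the simulated psi-averages over [0,1] should approach log_z_exact(1.0, nn).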

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.529478E+02   0.2948E+01  0.1314E+01   10000       0       0
    100  0.503037E+02   0.3037E+00  0.2808E+01   10000       0       0
    200  0.498055E+02  -0.1945E+00  0.3085E+01   10000       0       0
 J=  1  SG=0.000  SS=      1.2451381  D2=  0.1245E+01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.455334E+02  -0.4883E+01  0.2682E+01    5734    4102     164
    100  0.499405E+02  -0.4761E+00  0.2779E+01    4944    4865     191
    200  0.502595E+02  -0.1572E+00  0.2765E+01    4891    4927     182
 J=  2  SG=0.050  SS=      3.7581126  D2= -0.1532E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.506892E+02  -0.1440E+00  0.8742E+00    4931    4864     175
    100  0.508187E+02  -0.1454E-01  0.2954E+01    4918    4816     185
    200  0.506765E+02  -0.1567E+00  0.2785E+01    4925    4905     170
 J=  3  SG=0.100  SS=      6.2919356  D2= -0.2316E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.477783E+02  -0.3471E+01  0.5673E+00    5175    4486     338
    100  0.513690E+02   0.1195E+00  0.2986E+01    4840    4828     332
    200  0.510647E+02  -0.1848E+00  0.2945E+01    4840    4654     306
 J=  4  SG=0.150  SS=      8.8451705  D2= -0.3240E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.554143E+02   0.3749E+01  0.1593E+01    4713    4956     328
    100  0.515734E+02  -0.9213E-01  0.2816E+01    4858    4823     318
    200  0.515618E+02  -0.1038E+00  0.2711E+01    4880    4791     349
 J=  5  SG=0.200  SS=     11.4232593  D2= -0.3759E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.523624E+02   0.2812E+00  0.1096E+01    4795    4707     468
    100  0.524041E+02   0.3228E+00  0.2838E+01    4702    4804     494
    200  0.523286E+02   0.2474E+00  0.2755E+01    4724    4776     500
 J=  6  SG=0.250  SS=     14.0396878  D2= -0.2522E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.532400E+02   0.7437E+00  0.1279E+01    4422    5009     569
    100  0.527219E+02   0.2257E+00  0.2602E+01    4735    4740     525
    200  0.527096E+02   0.2134E+00  0.2787E+01    4754    4761     505
 J=  7  SG=0.300  SS=     16.6751693  D2= -0.1455E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.523163E+02  -0.5945E+00  0.1219E+01    4813    4431     656
    100  0.529133E+02   0.2573E-02  0.2832E+01    4865    4658     677
    200  0.528371E+02  -0.7364E-01  0.2606E+01    4671    4622     707
 J=  8  SG=0.350  SS=     19.3170237  D2= -0.1823E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.489396E+02  -0.4385E+01  0.1952E+01    5349    4039     812
    100  0.532312E+02  -0.9324E-01  0.2883E+01    4591    4643     766
    200  0.535440E+02   0.2195E+00  0.2823E+01    4660    4595     745
 J=  9  SG=0.400  SS=     21.9942243  D2= -0.7250E-02

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.553653E+02   0.1628E+01  0.1172E+01    4675    4432     893
    100  0.535057E+02  -0.2317E+00  0.3101E+01    4800    4587     813
    200  0.537209E+02  -0.1654E-01  0.3125E+01    4582    4814     904
 J= 10  SG=0.450  SS=     24.6802675  D2= -0.8075E-02

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.517229E+02  -0.2426E+01  0.8160E+00    4638    4465     867
    100  0.538597E+02  -0.2897E+00  0.3112E+01    4545    4582     873
    200  0.541822E+02   0.3275E-01  0.2819E+01    4544    4541     915
 J= 11  SG=0.500  SS=     27.3893752  D2= -0.6436E-02

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.532739E+02  -0.1287E+01  0.1162E+01    4508    4473     916
    100  0.547561E+02   0.2087E+00  0.3014E+01    4451    4497    1052
    200  0.543971E+02  -0.1633E+00  0.3035E+01    4504    4512     994
 J= 12  SG=0.550  SS=     30.1092307  D2= -0.1463E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.557831E+02   0.8128E+00  0.6446E+00    4442    4530    1028
    100  0.544720E+02  -0.4983E+00  0.2336E+01    4529    4537     954
    200  0.546324E+02  -0.3378E+00  0.2764E+01    4517    4547     936
 J= 13  SG=0.600  SS=     32.8408521  D2= -0.3149E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.574539E+02   0.2075E+01  0.8029E+00    4274    4486    1240
    100  0.549764E+02  -0.4025E+00  0.3515E+01    4389    4454    1167
    200  0.551577E+02  -0.2212E+00  0.3168E+01    4382    4472    1166
 J= 14  SG=0.650  SS=     35.5987360  D2= -0.4255E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.568727E+02   0.1086E+01  0.9127E+00    4288    4505    1207
    100  0.557695E+02  -0.1670E-01  0.3097E+01    4406    4422    1172
    200  0.556100E+02  -0.1763E+00  0.3070E+01    4488    4409    1123
 J= 15  SG=0.700  SS=     38.3792341  D2= -0.5136E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.549843E+02  -0.1208E+01  0.1327E+01    4311    4328    1381
    100  0.565611E+02   0.3689E+00  0.2833E+01    4289    4423    1289
    200  0.560001E+02  -0.1921E+00  0.2724E+01    4592    4424    1184
 J= 16  SG=0.750  SS=     41.1792407  D2= -0.6096E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.535163E+02  -0.3080E+01  0.1280E+01    4500    4127    1373
    100  0.572751E+02   0.6785E+00  0.2580E+01    4286    4301    1401
    200  0.573158E+02   0.7190E+00  0.2806E+01    4256    4278    1484
 J= 17  SG=0.800  SS=     44.0450215  D2= -0.2500E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.611592E+02   0.4160E+01  0.1730E+01    4028    4462    1510
    100  0.574586E+02   0.4591E+00  0.2751E+01    4328    4207    1465
    200  0.571389E+02   0.1395E+00  0.2876E+01    4509    4305    1386
 J= 18  SG=0.850  SS=     46.9019687  D2= -0.1803E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.570305E+02  -0.3702E+00  0.1737E+01    4162    4354    1484
    100  0.571977E+02  -0.2030E+00  0.2780E+01    4232    4248    1522
    200  0.575121E+02   0.1114E+00  0.2859E+01    4224    4263    1513
 J= 19  SG=0.900  SS=     49.7775721  D2= -0.1245E-01

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.583530E+02   0.5529E+00  0.1117E+01    4268    4115    1617
    100  0.581681E+02   0.3680E+00  0.2551E+01    4160    4228    1612
    200  0.579740E+02   0.1740E+00  0.2712E+01    4152    4212    1636
 J= 20  SG=0.950  SS=     52.6762733  D2= -0.3751E-02

     II    Psi-values  Differences   s.e.(Psi)     ID1     ID2     ID3
      0  0.567282E+02  -0.1470E+01  0.1167E+01    4316    4062    1822
    100  0.580950E+02  -0.1027E+00  0.2749E+01    4164    4158    1878
    200  0.583214E+02   0.1237E+00  0.2868E+01    4128    4151    1721
 J= 21  SG=1.000  SS=     54.1343075  D2=  0.1822E-02

Fortran STOP
time: 623.938.
real time: 655.335.


References

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087-1092 (1953)

Ogata, Y.: A Monte Carlo method for the objective Bayesian procedure. Research Memorandum No. 347, The Institute of Statistical Mathematics, Tokyo 1988

Ogata, Y., Tanemura, M.: Approximation of likelihood function in estimating the interaction potentials from spatial point patterns. Research Memorandum No. 216, The Institute of Statistical Mathematics, Tokyo 1981

Ogata, Y., Tanemura, M.: Likelihood analysis of spatial point patterns. Research Memorandum No. 241, The Institute of Statistical Mathematics, Tokyo 1984a

Ogata, Y., Tanemura, M.: Likelihood analysis of spatial point patterns. J.R. Statist. Soc. B46, 496-518 (1984b)

Ogata, Y., Tanemura, M.: Likelihood estimation of soft-core interaction potentials for Gibbsian point patterns. Ann. Inst. Statist. Math. (1988)

Hammersley, J.M., Handscomb, D.C.: Monte Carlo Methods. London: Methuen & Co Ltd (1964)

Ripley, B.D.: Simulating spatial patterns: dependent samples from a multivariate density. Appl. Statist. 28, 109-112 (1979)

Wood, W.W.: Monte Carlo studies of simple liquid models. In: Temperley, H.N.V., Rowlinson, J.S., Rushbrooke, G.S. (eds.) Physics of simple liquids, Chap. 5, pp. 115-230, Amsterdam: North-Holland (1968)

Received March 28, 1988/October 7, 1988