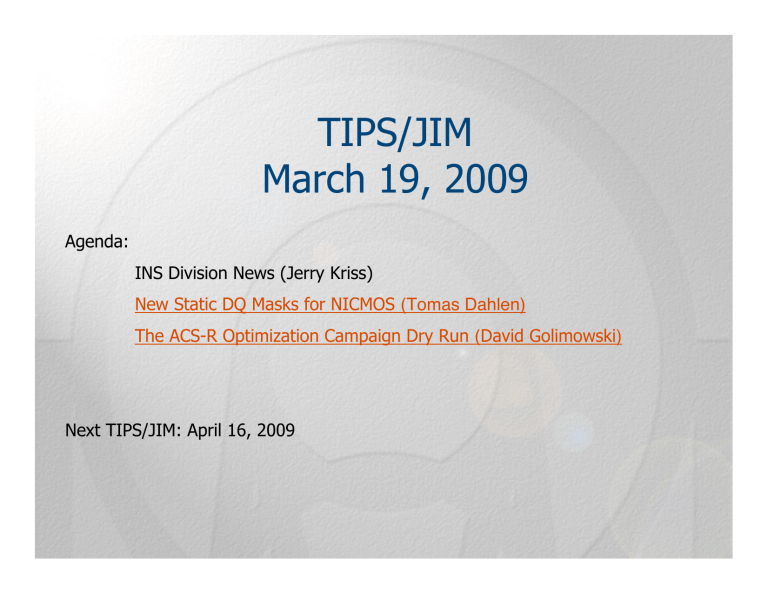

TIPS/JIM March 19, 2009


Agenda:

• INS Division News (Jerry Kriss)

• New Static DQ Masks for NICMOS (Tomas Dahlen)

• The ACS-R Optimization Campaign Dry Run (David Golimowski)

Next TIPS/JIM: April 16, 2009

Instruments Division News

3/19/2009

• HST news:
  o Only one landing and one launch to go: SM4 is still on track for May 12, 2009.
  o The ACS team has now completed their dry runs of the optimization process for the repaired ACS WFC chip plus ASIC. Dave Golimowski will tell you more about that this morning.
  o The instrument teams are putting the finishing touches on their revised Phase II proposals for SMOV activities, with plans to complete these by tomorrow.

• JWST news:
  o The JWST Planning and Proposal System held a very successful design review here at STScI on March 5. Congratulations to Tony Krueger’s team for an excellent job, and to the members of the WIT team who supported these activities.
  o ISIM had a successful Critical Design Review last week. Congratulations to our team here supporting that activity! Margaret Meixner will give you more details on the event this morning.

• Nearly all office moves within the division have been completed. The next step is recarpeting offices on the rear sides of the 3rd and 4th floor center section. If you have any remaining logistical problems, please contact Robin Auer.

• The next INS lunch is next Thursday, March 26, in the new Boardroom, from 12:00-1:30. A volunteer (or a few) would be welcome to coordinate this!

• The next TIPS meeting will be Thursday, April 16, 2009.


New static DQ masks for NICMOS

Tomas Dahlen & Elizabeth Barker

TIPS 3/19/2009

TIPS, Mar 19, 2009 - Tomas Dahlen 1

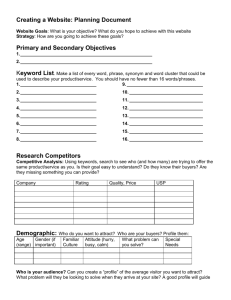

The NICMOS Data Quality (DQ) extension of calibrated images (*_cal.fits or *_ima.fits) contains information about which pixels may be problematic and should/may be excluded when combining dithered images.

| DQ | Meaning |
|---|---|
| 0 | No known problems |
| 1 | Reed-Solomon decoding error in telemetry |
| 2 | Poor or uncertain Linearity correction |
| 4 | Poor or uncertain Dark correction |
| 8 | Poor or uncertain Flat Field correction |
| 16 | Pixel affected by "grot" on the detector |
| 32 | Defective (hot or cold) pixel |
| 64 | Saturated pixel |
| 128 | Missing data in telemetry |
| 256 | Bad pixel determined by calibration |
| 512 | Pixel contains Cosmic Ray |
| 1024 | Pixel contains source (see Section 3.4) |
| 2048 | Pixel has signal in 0th read |
| 4096 | CR detected by Multidrizzle |
| 8192 | User flag |
| 16384 | Curvature in detector response |

• Most flag values are dynamic, i.e., are set during observation/calibration.

• Static flag values are DQ=16 (“grot” affected pixels) and DQ=32 (“bad” pixels).

• Each NICMOS camera has its own static mask given in the header keyword MASKFILE.
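Since every DQ value listed above is a power of two, several conditions can be recorded for one pixel by combining the flags bitwise. The following is a minimal sketch of how such a combined value could be decoded; the function names `decode_dq` and `has_static_flag` are hypothetical helpers for illustration, not part of any NICMOS software.

```python
# Flag values and meanings copied from the DQ list above.
DQ_FLAGS = {
    1: "Reed-Solomon decoding error in telemetry",
    2: "Poor or uncertain Linearity correction",
    4: "Poor or uncertain Dark correction",
    8: "Poor or uncertain Flat Field correction",
    16: 'Pixel affected by "grot" on the detector',
    32: "Defective (hot or cold) pixel",
    64: "Saturated pixel",
    128: "Missing data in telemetry",
    256: "Bad pixel determined by calibration",
    512: "Pixel contains Cosmic Ray",
    1024: "Pixel contains source",
    2048: "Pixel has signal in 0th read",
    4096: "CR detected by Multidrizzle",
    8192: "User flag",
    16384: "Curvature in detector response",
}

# The two static flags named in the slides: grot (16) and bad pixels (32).
STATIC_FLAGS = 16 | 32


def decode_dq(value):
    """Return the meanings of all flags set in a single DQ value."""
    if value == 0:
        return ["No known problems"]
    return [meaning for bit, meaning in DQ_FLAGS.items() if value & bit]


def has_static_flag(value):
    """True if the pixel carries a static (mask-file) flag."""
    return bool(value & STATIC_FLAGS)
```

For example, `decode_dq(48)` lists both the grot and the defective-pixel meanings, because 48 = 16 + 32.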


New static DQ masks for NICMOS

• The current “post-NCS” static masks were created in 2002.

• We now have a large set of calibration images obtained during NICMOS monitoring programs 2002-2008.

• We have used this data to create new static masks for the three NICMOS cameras based on better statistics.

• The temporal coverage also allows us to investigate any changes in the masks with time or detector temperature.


DQ=32 “Bad” pixels

• We use long dark exposures (>1000s) to identify “Bad” pixels as pixels with a dark current deviating from what is expected.

• Hot pixels are defined as pixels with excessive charge compared to surrounding pixels.

• Cold pixels have extremely low(/zero) dark current (i.e., “dead” pixels).

• To quantify the number of bad pixels we use the recipe in Sosey (2002, NICMOS ISR 2002-001, “Updating the NICMOS Static Bad Pixel Masks”):

1. A median dark image is made using available monitoring dark data after excluding CR-affected pixels.

2. A smoothed image is made and subtracted from the median image.

3. The resulting image is rescaled in units of its rms.

4. Bad pixels are defined as pixels outside 5σ.
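The four steps above can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the code used for the masks: the smoothing kernel (a 5 × 5 median box), the use of a plain standard deviation as the rms, and the convention that CR-affected pixels arrive as NaN are all choices made here, and `box_median` and `find_bad_pixels` are hypothetical names.

```python
import numpy as np


def box_median(image, size=5):
    """Median-smooth an image with a size x size box (reflected edges)."""
    pad = size // 2
    padded = np.pad(image, pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))


def find_bad_pixels(darks, nsigma=5.0, size=5):
    """Flag hot and cold pixels in a stack of dark exposures.

    darks: array of shape (n_exposures, ny, nx); CR-affected pixels are
    assumed to have been set to NaN beforehand.
    """
    # Step 1: median dark image, ignoring CR-affected (NaN) samples.
    median_dark = np.nanmedian(darks, axis=0)
    # Step 2: subtract a smoothed version of the median image.
    residual = median_dark - box_median(median_dark, size)
    # Step 3: rescale in units of the rms of the residual image.
    scaled = residual / np.std(residual)
    # Step 4: pixels outside +/- nsigma are flagged as bad.
    return np.abs(scaled) > nsigma
```

Because the test is two-sided, the same mask picks up both hot pixels (positive residual) and cold or dead pixels (negative residual).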


Sosey (2002)


DQ=32 “Bad” pixels

Since we have monitored the darks continuously from 2002 to 2008, we can look for changes with time/temperature.

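| Camera | Old DQ=32 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | All new |
|---|---|---|---|---|---|---|---|---|---|
| NIC 1 | 193 (0.29%) | 46 | 49 | 70 | 69 | 70 | 66 | 70 | 88 (+0.13%) |
| NIC 2 | 656 (1.0%) | 17 | 21 | 28 | 24 | 24 | 29 | 31 | 40 (+0.06%) |
| NIC 3 | 446 (0.68%) | 16 | 21 | 28 | 30 | 29 | 34 | 36 | 42 (+0.06%) |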

Old DQ=32: number of DQ=32 pixels in the existing mask.

For each year, the number of additional hot pixels is tabulated.

All new: total number of distinct pixels flagged as hot during at least one year.

Plot legend: black dots show the number of DQ=32 pixels in the existing mask; red dots show the number of DQ=32 pixels after adding the new bad pixels.
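The percentages quoted with the pixel counts are consistent with a detector of 256 × 256 = 65,536 pixels. The short check below reproduces them from the tabulated counts; the detector size is entered here as an assumption rather than taken from the slides, and `percent_of_detector` is a hypothetical helper.

```python
# Assumed NICMOS detector format (not stated on the slide).
N_PIXELS = 256 * 256

# Counts copied from the "Old DQ=32" and "All new" columns.
old_bad = {"NIC 1": 193, "NIC 2": 656, "NIC 3": 446}
all_new = {"NIC 1": 88, "NIC 2": 40, "NIC 3": 42}


def percent_of_detector(count, n_pixels=N_PIXELS):
    """Express a pixel count as a percentage of the full detector."""
    return 100.0 * count / n_pixels


for camera in old_bad:
    print(
        f"{camera}: old {percent_of_detector(old_bad[camera]):.2f}%, "
        f"new +{percent_of_detector(all_new[camera]):.2f}%"
    )
```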

DQ=32 “Bad” pixels

Plots show pixels that are not flagged in existing DQ mask, but pass the criteria for being bad in this investigation.

The number of pixels that “suddenly” turn bad and can be “saved” by using multiple DQ masks is ~5 in each camera. (In addition, a few bad pixels drop below the selection threshold with time.)

We find that maintaining multiple DQ masks to “save” at most a few pixels per camera is not worthwhile, so only a single mask is created for each camera.

DQ=16 “grot” pixels

• The grot consists of flecks of anti-reflective paint on the detectors (that were scraped off the optical baffles between the dewars due to the expansion of the solid nitrogen in Cycle 7).

• Grot leads to areas with reduced sensitivity.

• Size of grot ranges from 25 µm to over 100 µm and since NICMOS pixels are 40 µm on a side, grot can affect regions of several pixels, as well as a fraction of a pixel.

The largest example of a grot region is the "battleship" feature in NIC 1 which affects approximately 35 pixels.

Figure panels: flat-field image and DQ mask.

DQ=16 “grot” pixels

• The grot affects the incoming light onto the detectors.

• Flats are ideal for estimating the effects of grot because of their high, “uniform” counts over the whole detector.

• To quantify the grot we use the recipe in NICMOS ISR 2003-003 (Schultz et al.):

1. We use a well sampled non-inverted flat-field (F160W filter).

2. A smoothed flat image is subtracted from this flat.

3. Grot pixels are defined as pixels deviating more than 4σ in the subtracted image (excluding bad DQ=32 pixels).

DQ=16 “grot” pixels

| | NIC1 | NIC2 | NIC3 |
|---|---|---|---|
| Existing | 180 | 243 | 249 |
| New | 170 | 123 | 113 |

Table: The number of grot pixels. The number found here is significantly lower than in the existing mask for NIC2 and NIC3.

Q: What are the characteristics of the pixels not selected using the new selection?

To investigate this we look at the relative DQE response of the flagged pixels: the pixel value of a ratio image created by dividing the flat image by a smoothed flat image. A “normal” pixel has response = 1.
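The ratio image described above can be sketched as follows. The smoothing kernel (a 7 × 7 median box) is an assumption made for this illustration, since the slides do not specify it, and `box_median` and `relative_response` are hypothetical names.

```python
import numpy as np


def box_median(image, size=7):
    """Median-smooth an image with a size x size box (reflected edges)."""
    pad = size // 2
    padded = np.pad(image, pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))


def relative_response(flat, size=7):
    """Ratio of a flat field to its smoothed version.

    A pixel that behaves like its neighbours has a response near 1;
    a pixel shadowed by grot has a response well below 1.
    """
    return flat / box_median(flat, size)
```

A median is used for the smoothing so that a single low pixel does not drag down the local reference level of its neighbours.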


DQ=16 “grot” pixels

Relative DQE response

The new selection suggests that grot pixels have a response ≲ 0.8.

The pixels that are flagged in the existing mask and NOT flagged in the new selection have a fairly normal response in the range 0.8-1.2.

Figure panels: Existing; New; Existing - New.


Furthermore, the rms of the previously flagged pixels looks normal.

Figure (rms vs. response, previously flagged pixels): black dots show the rms of non-flagged normal pixels; green dots show the rms of previously flagged pixels.

The rms values look similar, so the green-dot pixels should be OK. We should therefore be able to “de-flag” these.

DQ=16 “grot” pixels

| | NIC1 | NIC2 | NIC3 |
|---|---|---|---|
| Existing | 180 | 243 | 249 |
| New | 170 | 123 | 113 |
| 2002 | 172 | 119 | 113 |
| 2008 | 174 | 139 | 112 |

Q: Does the number of grot pixels change with time?

A: No. There are somewhat more flagged pixels in NIC2 in 2008, but these have a fairly normal response of 0.7-0.9.

We will not include a time dependence in the grot mask (i.e., we will not create multiple masks).

FINAL SELECTION

As a final selection, we include all “4σ pixels” from above as grot pixels, except those that have a response >0.8 and a normal signal-to-noise.
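The final selection rule can be written as a simple boolean combination. In this sketch the test for a "normal" signal-to-noise is not implemented, because the slides do not give its criterion; it is passed in as a precomputed boolean array, and `final_grot_mask` is a hypothetical name.

```python
import numpy as np


def final_grot_mask(is_4sigma, response, snr_is_normal, response_limit=0.8):
    """Keep 4-sigma candidates unless they look like normal pixels.

    is_4sigma:      boolean array, True where the pixel deviates > 4 sigma.
    response:       relative response (flat / smoothed flat).
    snr_is_normal:  boolean array, True where the noise looks normal
                    (criterion assumed to be evaluated elsewhere).
    """
    # A candidate is de-flagged only if BOTH conditions hold.
    deflag = (response > response_limit) & snr_is_normal
    return is_4sigma & ~deflag
```

A candidate with a response of 0.9 but abnormal noise therefore stays flagged, while one with a response of 0.9 and normal noise is dropped from the mask.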

The new MASKFILEs for the post-NCS era will be delivered by the end of March 2009.

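New static DQ masks for NICMOS

Summary:

| Flag | Mask | NIC 1 | NIC 2 | NIC 3 |
|---|---|---|---|---|
| Grot, DQ=16 | Existing | 180 | 243 | 249 |
| Grot, DQ=16 | New | 163 | 119 | 96 |
| Bad, DQ=32 | Existing | 193 | 656 | 446 |
| Bad, DQ=32 | New | 281 | 696 | 448 |

Figure: DQ=16 grot pixels in the existing and new masks for NIC 1, NIC 2, and NIC 3.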


ACS-R Optimization Campaign Dry Run: Status Report

David Golimowski

TIPS/JIM

19 March 2009

ACS-R Flight Hardware

During SM4 (EVA-3) four elements of the ACS will be replaced or modified:

CCD Electronics Box Replacement (CEB-R) is the heart of the ACS-R hardware: it will control and read out the ACS WFC CCDs.

Schematic Diagram of CEB-R

• CEB-R features a Teledyne SIDECAR* ASIC** that permits optimization of WFC performance via adjustment of CCD clocks, biases, and pixel transmission timing

• Built-in oscilloscope mode (O-mode) allows sensing of the analog signal from each output amplifier

* System for Image Digitization, Enhancement, Control, and Retrieval

** Application Specific Integrated Circuit

Sample O-scope image (trace labels: Reset Gate, DSI output B, Raw video B, Raw video A)

ACS-R Optimization Campaign

Background:

• ACS-R Optimization Campaign (OC) in SMOV begins ~10 days after ACS-R installation and AT/FT

• 8 iterations over 24 days designed to optimize CCD read noise, dark current, CTE, full-well depth, linearity, cross-talk, and data transmission timing

• Bias, dark, flat, EPER, and subarray images taken at different gain, CDS modes (DSI and Clamp & Sample), and readout speeds

• Teledyne and GSFC will analyze O-mode data; STScI will analyze image data

Optimization Campaign Timeline

• Visits A and G (general performance tests) are executed in all iterations

• Visits B, C, D, E, and F optimize specific performance characteristics (settling times, clock coupling, voltages) and are not executed in all iterations

• Visits A, B, D, E, and F contain image data

• Visits B, C, and G contain O-mode data

• OC may be truncated at SMS boundary (currently between Iters 5 & 6)

ACS-R OC Dry Run

• Complicated technical and logistical issues demand a Dry Run rehearsal of the OC in the February/March time frame ⇒ Completed yesterday!

• GSFC, Teledyne, and STScI participated

• GSFC configured EM-3 unit (CEB-R and LVPS-R) and Build-5 WFC in ESTIF

• Only the first 4 iterations of the OC were simulated

• All iterations were SMS driven

Dry Run dates and durations:

| Iteration | Dates | SMS duration |
|---|---|---|
| 1 | Feb 11-13 | 31 hr |
| 2 | Feb 23, Mar 2-3 | 15 hr |
| 3 | Mar 5-6, 9-10 | 27 hr |
| 4 | Mar 12-13, 16-18 | 18 hr |

Dry Run Personnel

ACS-R PI: Ed Cheng (Conceptual Analytics)

GSFC: Olivia Lupie, Steve Arslanian, Kevin Boyce, Rick Burley, Darryl Dye, Dennis Garland, Mike Kelly, Kathleen Mil, Barbara Scott, Beverly Serrano, Colleen Townsley, Augustyn Waczynski, Erin Wilson

Teledyne: Markus Loose, Raphael Ricardo

STScI: David Golimowski, Linda Smith, Marco Sirianni (ESTEC), Carl Biagetti, George Chapman, Marco Chiaberge, Tyler Desjardins, Norman Grogin, Tracy Ellis, Pey-Lian Lim, Ray Lucas, Aparna Maybhate, Max Mutchler, Anatoly Suchkov (JHU), Mike Swam, Tom Wheeler

Dry Run Results (1)

Iteration 1: a bumpy start

• All performance and optimization tests executed to assess baseline

• Bias & clock voltages set for WFC#4 (Flight build)

• Problems found in several areas:
  1) ESTIF: Lamp too bright (saturated data); “un-dark” biases and darks
  2) SMS: No 1-min delay between changes of gain or CDS
  3) OPUS: Crashes and incorrect keywords exposed outdated EUDL and CCDTAB reference files
  4) FT: Mismatched gain/offset parameters; subarray readout problem
  Items 1-3 fixed for Iteration 2; FT issues now resolved

• Despite limited data, STScI correctly assessed read noise and limited full-well depth expected for non-optimal initial voltage configuration.

Dry Run Results (2)

Iteration 2:

• General performance tests (A & G) and one optimization test (C)

• Bias & clock voltages set for WFC#5 (flight spare)

• Full-speed read noise 4.0-4.5 e⁻ (DSI); 4.5-5.0 e⁻ (C&S)
  Variable striation in bias images attributed to 1/f noise from MOSFET

• Lamp now too faint; full-well exposure not obtained
  Full-well depth raised to > 60K e⁻

• Bias offset for half-speed DSI frames too low; ADC saturated at low end

• High frequency vertical striping in half-speed, C&S A-amp bias frames

• Lack of hot pixels & cosmic rays precludes CTE and cross-talk tests

Iteration 2: Bias Frames (figure panels: full speed, Dual-Slope Integrator; full speed, Clamp & Sample)

Iteration 2: Bias Frames (figure panels: half speed, Dual-Slope Integrator; half speed, Clamp & Sample)

Dry Run Results (3)

Iteration 3:

• General performance tests (A & G) and 2 optimization tests (D and E)

• Bias & clock voltages set for WFC#5 (flight spare)

• Lamp brightness well matched to on-orbit cal lamp
  Full-well depth measured at nominal ~80K e⁻

• Visit D (Bias Voltage Optimization Test):
  Read noise lower, amp gains more consistent with $\Delta V_{OD} = \Delta V_{DD} = +1$ V

• Visit E (Clock Voltage Optimization Test):
  CTE too good for analysis software; need to improve algorithm

• Half-speed read noise 3.5-4.5 e⁻ (DSI); 6.5-7.0 e⁻ (C&S)
  DSI: ~0.5 e⁻ better than full-speed
  C&S: significantly worse than full-speed

Iteration 3: Photon Transfer Test

• Full-well depth measured at nominal ~80K e⁻

• Gain = 2.3 e⁻/DN

• Excellent linearity
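For readers unfamiliar with photon transfer, the gain follows from the shot-noise relation: for a signal of mean level S (in DN), the variance in DN² grows as S / gain, so the slope of variance against mean is 1/gain. The sketch below illustrates that textbook relation with pairs of flats; it is not the Dry Run analysis software, and `photon_transfer_point` and `fit_gain` are hypothetical names.

```python
import numpy as np


def photon_transfer_point(flat_a, flat_b, bias_level=0.0):
    """Mean signal and per-image variance (both in DN) from a flat pair.

    Differencing two flats at the same exposure level removes fixed
    pattern structure; the variance of the difference is twice the
    variance of a single image.
    """
    mean_signal = 0.5 * (flat_a.mean() + flat_b.mean()) - bias_level
    variance = np.var(flat_a - flat_b) / 2.0
    return mean_signal, variance


def fit_gain(means, variances):
    """Gain in e-/DN from a straight-line fit of variance against mean."""
    slope, _intercept = np.polyfit(means, variances, 1)
    return 1.0 / slope
```

The intercept of the same fit is related to the read noise, but it is much less well constrained than the slope unless low-signal points are weighted appropriately, so it is not returned here.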

Dry Run Results (4)

Iteration 4:

• General performance tests (A & G) and 2 optimization tests (C and F)

• “Up-linked” new default $V_{OD}$ and $V_{DD}$ for Iteration 4

• Visit F (Science Data Transmission Optimization Test):
  Find optimal size and delays of bit transfers from CEB-R to MEB

Summary and Imminent Tasks

• Dry Run was extremely useful for testing analysis software, training analysts, verifying communication and data flow, and exposing bugs and features. STScI is well prepared to tackle “The Real Thing.”

• Need to verify and summarize analysis of all iterations for Dry Run debrief

• Need to revise some analysis software in which flaws were newly exposed

• Rerun all data through OPUS to ensure proper keyword population

• Insert 1 extra day of analysis for each iteration into SMOV timeline
  SMSes take longer than expected to execute; data will come to us less promptly and frequently during OC; need more thinking time.

• Ensure that successful implementation of FT is captured in EVA-3 command plan
  Review of revised CP occurred yesterday; all proposed changes found to be in order and accepted.