UP-STAT 2016 ABSTRACTS SESSION 1A

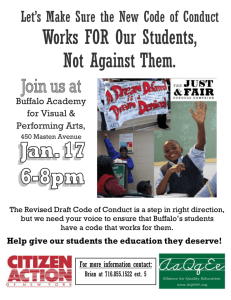
