

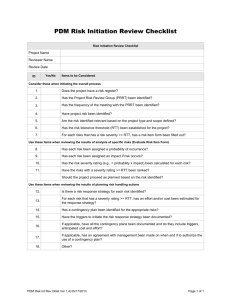

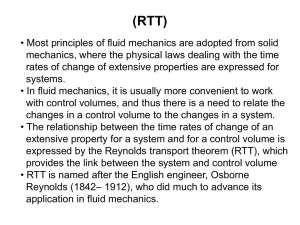

Team 7: SUPER BORBAMAN

18-749: Fault-Tolerant Distributed Systems
Electrical & Computer Engineering

Team Members
- Mike Seto (mseto@)
- Wee Ming (wmc@)
- Ian Kalinowski (igk@)
- Jeremy Ng (jwng@)

http://www.ece.cmu.edu/~ece749/teams/team7/ or just for ‘borbaman’

Baseline Application 1

SYNOPSIS:
- Fault-tolerant, real-time multiplayer game
- Inspired by Hudsonsoft’s Bomberman
- Players interact with other players, and their actions affect the shared environment
- Players plant timed bombs which can destroy walls, players and other bombs
- Last player standing is the winner!

PLATFORM:
- Middleware: Orbacus (CORBA/C++) on Linux
- Graphics: NCURSES
- Backend: MySQL

Baseline Application 2

COMPONENTS:
- Front end: one client per player
- Middle-tier game servers
- Back end: database

STRUCTURE:
- A client participates in a game with other clients
- 4 clients per game (more challenging and has real-time elements)
- A client may only belong to one game
- A server may support multiple games
- Game does not start until 4 players have joined

Baseline Architecture

[Diagram: clients, game server(s), and database]
- New game (4 clients each): clients call joinGame() on the game server (server object), which calls db_add_player() on the database and creates a game object
- Active game (4 clients each): clients call update() on the game object(s), which call db_get_gameState() and db_set_gameState() on the database
- Database: persistent state of current games (bombs, players, etc.)

Fail-Over Measurements: Fault-Free RTT vs. Number of Invocations

[Chart: RTT (us) vs. number of invocations, fault-free; series: Database RTT, Client RTT]

Fault-Tolerance Goals

ORIGINAL FAULT-TOLERANT GOALS:
1) Preserve current game state under server failure
   - Coordinates of players, and player state
   - Bomb locations, timers
   - State of map
   - Score
2) Switch from failed server to another server within 1 second
3) Players who “drop” may rejoin game within 2 seconds

FT-Baseline Architecture

Stateless Servers
- Two servers run on two machines with a single shared database
- Passive replication:
  - No distinction between “primary” and “backup” servers
  - No checkpointing
- Each server replica can receive and process client requests
- But…clients only talk to one replica at any one time
- Naming Service and Database system are single points of failure

FT-Baseline Architecture

Guaranteeing Determinism
- State is committed to the reliable database at every client invocation
- State is read from the DB before processing any request, and committed back to the DB after processing
- Table locking per replica in the database ensures atomic access per game and guarantees determinism between our replicas
- Transactions with non-increasing sequence numbers are discarded

Transaction processing
- Database transactions are guaranteed atomic
- Consistent state is achieved by having the servers read state from the database before beginning any transaction

FT-Baseline Architecture

[Diagram: fail-over from Server-1 to Server-2 for an active game (4 clients each)]
1. Client calls update() on Server-1
2. COMM failure (Server-1 is down)
3. Client retrieves the server list from the Naming Service
4. retry()
5. Client calls update() on Server-2
Both servers call db_get_gameState() and db_set_gameState() on the shared database.

Mechanisms for Fail-Over

Failing-Over
1. Client detects failure by catching a COMM_FAILURE/TRANSIENT exception
2. Client queries the Naming Service for the list of servers
3. Client connects to the first available server, in the order listed in the Naming Service
4. If this list is null, the client waits until a new server registers with the Naming Service

Fail-Over Measurements: 16 Faults

[Chart: RTT (us) vs. number of invocations, with 16 faults; series: Client RTT]

Fail-Over Measurements: Breakdown with 16 Faults

[Chart]

Fail-Over Measurements

Problem: too high!
- Average Fault-Free RTT: 14.7 ms
- Average Failure-Induced RTT: 78.8 ms
- Maximum Failure-Induced RTT: 1045.8 ms

Solution:
- Have servers pre-resolved by the client, and have clients pre-establish connections with working servers

RT-FT-Baseline Architecture

What we tried:
- Clients create a low-priority Update thread which contacts the Naming Service at a regular interval, caches references of working servers, and attempts to pre-establish connections
- This thread also performs maintenance on existing connections and repopulates the cache with newly launched servers

What we expected:

[Diagram]

RT-FT Optimization: Part 1

Before and after multi-threaded optimization

[Charts: "No Faults - Non Threaded" and "No Faults - Threaded"; invocation time (us) vs. invocation # (spaced every 50 ms)]

What went wrong?

Bounded “Real-Time” Fail-Over Measurements

Jitter:
- Maximum Jitter BEFORE: 36 ms
- Maximum Jitter AFTER: 176 ms
- We have “improved” the jitter in our system by -389%!

RTT:
- Average RTT BEFORE: 13 ms
- Average RTT AFTER: 21 ms
- We have “improved” the average RTT by -59%!

Why??
- High overhead from the Update thread
- Queried the Naming Service every 200 us!
  - Oops….

RT-FT Optimization: Part 2

[Chart: "RTT with No Faults - 500ms Multi-threaded Optimization"; RTT (us) vs. number of invocations]

Increased the update period from 200 us to 500 ms

RT-FT Optimization: Part 2

[Chart: "RTT with 16 Faults - 1 min apart - 500 ms - Buggy Multi-threaded Optimization"; RTT (us) vs. number of invocations; 13 spikes above 200 ms]

With faults…but why the high periodic jitter?

RT-FT Optimization: Part 2

[Chart: "RTT with 16 Faults - 1 min apart - 500 ms - Fixed Multi-threaded Optimization"; RTT (us) vs. number of invocations; 3 spikes above 200 ms]

Bug discovered and fixed by analyzing the results

RT-FT Fail-Over Measurements

Average RTT:
- 41 ms

Jitter:
- Average Faulty Jitter: 81 ms
- Maximum Jitter: 480 ms

Failover time:
- Previous max: 210 ms
- Current max: 230 ms

However, these numbers are not realistically useful because
- Cluster variability influences jitter considerably
- Measurements are environment dependent

% of Outliers before = 0.1286%
% of Outliers after = 0.0296%

RT-FT-Performance Strategy

LOAD BALANCING:
- Load balancing is performed on the client side
- Client randomly selects the initial server to connect to
- Upon failure, client randomly connects to another live server

MOTIVATION:
- Take advantage of multiple servers
- Explore the effects of spreading a single game across multiple servers

Performance Measurements

[Charts: "RTT - 16 clients (4 Games) - Without Load Balancing" and "RTT - 16 clients (4 Games) - With Load Balancing"; RTT (us) vs. number of invocations]

Load Balancing Worsens RTT Performance

Performance Measurements

Load balancing decreased performance
- This is counter-intuitive
- One single-threaded server should be slower than multiple single-threaded servers
  - Load balancing should have improved RTT, since multiple servers could service separate games simultaneously

Server code was not modified in implementing load balancing
- Problem has to be with concurrent accesses to the database
- This pointed us to a bug in the database table locking code
  - Transactions and table locks were out of order, causing table locks to be released prematurely

Performance Measurements

[Chart: "Load-Balancing with DB lock bug fixed"; average RTT (µs)]

Performance Measurements

Corrected Load Balancing
- Load balancing resulted in improved performance
  - Non-balanced average RTT: 454 ms
  - Balanced average RTT: 255 ms

Insights from Measurements

FT-Baseline
- Can’t assume failover time is consistent or bounded

RT-FT-Optimization (update thread)
- Reducing jitter resulted in increased average RTT
- Scheduling the update thread too frequently results in increased jitter and overhead

Load Balancing
- Load balancing can easily be done incorrectly
  - Spreading games across multiple machines does not necessarily improve performance
  - It can be difficult to select the right server to fail over to
  - A single shared resource can be the bottleneck

Open Issues

Let’s discuss some issues…
- Newer cluster software would be nice!
  - Newer MySQL with finer-grained locking, stored procedures, bug fixes, etc.
  - Newer gcc needed for certain libraries
- Clients can’t rejoin the game if the client crashes
- Database server is a huge scalability bottleneck

If only we had more time…
- GUI using real graphics instead of ASCII art
- Login screen and lobby for clients to interact before game starts
- Rankings for top ‘Borbamen’ based on some scoring system
- Multiple maps, power-ups (e.g. drop more bombs, move faster, etc.)

Conclusions

What we’ve learned…
- Stateless servers make server recovery relatively simple
  - But this moves the performance bottleneck to the database
- Testing to see that your system works is not good enough; looking at performance measurements can also point out implementation bugs
- Really hard to test performance on shared servers…
- Testing can be fun when you have a fun project

Accomplishments
- Failover is really fast for non-loaded servers
- We created a “real-time” application
- BORBAMAN = CORBA+BOMBERMAN is really fun!

Conclusions

For the next time around…
- Pick a different middleware that will let us run clients on machines outside of the games cluster
- Run our own SQL server that doesn’t crash during stress testing O:-)
- Language interoperability (e.g. Java clients with C++ servers) could be cool
  - Orbacus supposedly supports this
- Store some state on the servers to reduce the load on the database

And the winner goes to …..

Finale!

No Questions Please.
GG EZ$$$ kthxbye

Appendix

[Charts: "RTT - No Faults - 500 ms Multi-threaded Optimization" and "RTT - No Faults - 200 us Multi-threaded Optimization"]

Varying Server Load Affects RTT

RT-FT Fail-Over Measurements

Breakdown of RTT (with 16 Faults)
- Normal Time: 37864.08211 (47%)
- Retry(): 28490.5 (36%)
- Misc.: 13181.85539 (17%)
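
The four steps listed under "Mechanisms for Fail-Over" can be illustrated with a small, self-contained sketch. This is not the team's Orbacus/C++ client code: it is written in Python for brevity, and every name in it (CommFailure, FakeServer, FakeNamingService, Client, max_attempts) is hypothetical. One simplifying assumption differs from the slides: when no server is reachable, the sketch gives up after a bounded number of attempts instead of waiting for a new server to register.

```python
class CommFailure(Exception):
    """Hypothetical stand-in for the CORBA COMM_FAILURE/TRANSIENT exceptions."""


class FakeServer:
    """Hypothetical in-process replacement for a remote game server."""

    def __init__(self, name, alive=True):
        self.name = name
        self.alive = alive

    def connect(self):
        # A dead server refuses connections.
        if not self.alive:
            raise CommFailure(self.name)

    def update(self, move):
        # A dead server fails every invocation.
        if not self.alive:
            raise CommFailure(self.name)
        return f"{self.name} applied {move}"


class FakeNamingService:
    """Hypothetical registry that returns servers in registration order."""

    def __init__(self, servers):
        self._servers = list(servers)

    def list_servers(self):
        return list(self._servers)


class Client:
    def __init__(self, naming):
        self.naming = naming
        self.server = None

    def _first_available(self):
        # Steps 2 and 3: query the naming service, then connect to the
        # first server in the listed order that accepts a connection.
        for candidate in self.naming.list_servers():
            try:
                candidate.connect()
                return candidate
            except CommFailure:
                continue
        raise CommFailure("no server available")

    def invoke(self, move, max_attempts=3):
        for _ in range(max_attempts):
            try:
                if self.server is None:
                    self.server = self._first_available()
                return self.server.update(move)
            except CommFailure:
                # Step 1: the failure is detected by catching the exception.
                # Drop the reference so the next attempt re-resolves a server.
                self.server = None
        # Assumption: give up here rather than wait for a new registration.
        raise CommFailure("no server available")
```

A client built this way keeps using one server until an invocation fails, which matches the "clients only talk to one replica at any one time" behaviour described for the FT-Baseline architecture. Because the servers are stateless and read game state from the database on every request, the retry can go to any live replica.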